Time Series Prediction: Multi-Head Attention + Broad Learning System

I am Srlua小谢, and this is where I share my knowledge and experience.

Column: 传知代码论文复现 (paper code reproduction)

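Contents

Overview

Main Contributions

Multi-Attn Overall Architecture

Nonlinear Dynamic Feature Reactivation Based on BLS Random Mapping

Multi-Level Semantic Information Extraction with Multi-Head Attention

Core Code Reproduction

Code Optimization Methods

Experimental Results

Usage

Environment Setup

File Structure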

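Overview

In 2024, Liyun Su, Lang Xiong, and Jialing Yang published the paper "Multi-Attn BLS: Multi-head attention mechanism with broad learning system for chaotic time series prediction" in Applied Soft Computing (CiteScore 14.3, impact factor 8.7). Targeting the high complexity and nonlinearity of chaotic time series data, the paper proposes a new paradigm that combines the broad learning system (BLS) with multi-head self-attention. Previously, these two highly nonlinear mapping algorithms were mainly combined by stacking multi-head self-attention layers to extract features and then using a broad learning model for classification or prediction. This paper instead integrates the multi-head attention module directly into the broad learning system, which yields an end-to-end prediction model.

The complete version of the project, including the source code, data, and pretrained models for the detailed reproduction described here, can be obtained at this address: (link)

Deep neural networks use residual connections to preserve information, but they require long training times. The broad learning model instead uses a cascade structure to reuse information and keep the original information intact. It is a single, simple, specialized network that does not need retraining, and it combines the fast solving ability of most machine learning models with the fitting ability of most deep learning models. For a deeper understanding of the broad learning model, see the original paper (link provided). The paper also points out that multi-head attention can fully extract key features of different dimensions and levels and use them effectively. Citing earlier studies, the authors argue that models with attention mechanisms ensure the validity of information by capturing part of the semantic information, and can thereby capture rich information at different levels.

The authors therefore use the broad learning system (BLS) to expand the dimensionality of chaotic time series data and introduce multi-head attention to extract semantic information at different levels, including linear and nonlinear correlations, chaotic mechanisms, and noise. They also use residual connections to preserve information integrity.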

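Main Contributions

1. A new BLS paradigm named "Multi-Attn BLS" is proposed for dynamic modeling of chaotic time series data. Through cascading and attention mechanisms, the model enriches the fixed features as much as possible and effectively extracts semantic information from chaotic time series systems.

2. Multi-Attn BLS uses multi-head attention with positional encoding to learn complex chaotic time series patterns, and it maximizes the extraction of semantic information by capturing spatiotemporal relationships.

3. Multi-Attn BLS achieves excellent prediction results on three benchmarks, and it also shows strong interpretability on chaotic time series.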

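Multi-Attn Overall Architecture

Multi-Attn BLS consists of three main parts: 1) chaotic time series data preprocessing; 2) nonlinear dynamic feature reactivation based on BLS random mapping; 3) multi-level semantic information extraction with multi-head attention.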

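First, following phase space reconstruction theory, Liyun Su, Lang Xiong, and Jialing Yang use the C-C method to determine the embedding dimension and delay time, which recovers the chaotic system and turns the chaotic time series into a predictable pattern. Next, the feature layer and enhancement layer of BLS randomly map and enhance the reconstructed data into a high-dimensional system, producing mixed features that contain the different patterns of the chaotic time series. Finally, multi-head attention and residual connections extract the spatiotemporal relationships retained in the system, including linear correlation, nonlinear determinism, and noise.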

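Chaotic time series data preprocessing: recovering the chaotic system based on phase space reconstruction theory

A chaotic time series is a univariate or multivariate time series generated by a dynamical system. Phase space reconstruction maps the chaotic time series into a high-dimensional space in order to reconstruct a set of representations of the original dynamical system. According to Takens' embedding theorem, the phase space must be reconstructed to recover the original chaotic attractor and to give the time series of the chaotic system a fixed dimension.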
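
The reconstruction step can be illustrated with a minimal delay-embedding sketch. It assumes the embedding dimension `m` and the delay `tau` are already known (for example, chosen beforehand by the C-C method, which is not implemented here). The function names `delay_embed` and `make_supervised` are hypothetical helpers introduced for illustration; they are not part of the paper or of the reproduced code.

```python
import numpy as np


def delay_embed(series, m, tau):
    """Return the delay-embedding matrix of a 1-D series.

    Row i is [x[i], x[i + tau], ..., x[i + (m - 1) * tau]].
    m (embedding dimension) and tau (delay) are assumed to be given.
    """
    x = np.asarray(series, dtype=float)
    n_vectors = len(x) - (m - 1) * tau
    if n_vectors <= 0:
        raise ValueError("series too short for the requested m and tau")
    # Column j holds the series shifted by j * tau samples.
    return np.column_stack([x[j * tau: j * tau + n_vectors] for j in range(m)])


def make_supervised(series, m, tau, horizon=1):
    """Pair each delay vector with the value `horizon` steps after its last entry."""
    x = np.asarray(series, dtype=float)
    X = delay_embed(x, m, tau)
    last_index = np.arange(X.shape[0]) + (m - 1) * tau
    keep = last_index + horizon < len(x)
    return X[keep], x[last_index[keep] + horizon]


# Illustrative values only; real m and tau depend on the data.
X, y = make_supervised(np.arange(10), m=3, tau=2, horizon=1)
```

Each row of `X` is one reconstructed phase-space point, and `y` holds the value to predict, so the pair can serve as `train_x` and the regression target for the later stages.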

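Nonlinear Dynamic Feature Reactivation Based on BLS Random Mapping

The overall BLS architecture is shown in the figure above. Here we only use its mapping ability, namely the feature node layer and the enhancement node layer (the mapping feature nodes and enhancement feature nodes in the figure). These two layers are built by generate_mappingFeaturelayer and generate_enhancelayer in the core code reproduction section.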
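
As a hedged sketch, the two layers can be written in the notation commonly used for BLS. The symbols below ($X$ for the input, $W_{e_i}$, $\beta_{e_i}$, $W_{h_j}$, $\beta_{h_j}$ for random weights and biases, $\phi$ and $\xi$ for the activations, $n$ and $m$ for the group counts) are assumptions taken from the usual BLS formulation, not copied from the paper:

```latex
\begin{aligned}
Z_i &= \phi\left(X W_{e_i} + \beta_{e_i}\right), \quad i = 1, \dots, n \\
Z^n &= \left[Z_1, \dots, Z_n\right] \\
H_j &= \xi\left(Z^n W_{h_j} + \beta_{h_j}\right), \quad j = 1, \dots, m \\
A &= \left[Z^n \mid H^m\right], \quad H^m = \left[H_1, \dots, H_m\right]
\end{aligned}
```

In the reproduced code, `N2` plays the role of $n$ (feature windows of `N1` nodes each), a single group of `N3` enhancement nodes is generated with `tansig` as $\xi$, the bias is appended as a constant 0.1 column, and the concatenation of feature and enhancement nodes is what the attention module receives.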

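Multi-Level Semantic Information Extraction with Multi-Head Attention

In this part, the authors use stacked multi-head self-attention to process the high-dimensional nodes produced by the BLS mapping. We therefore focus on the principle of multi-head self-attention.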

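Multi-head self-attention is derived as follows. First, the multi-head self-attention operation is defined, in its standard form, as:

$$\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \ldots, \mathrm{head}_h)\, W^O$$

where each head is computed as:

$$\mathrm{head}_i = \mathrm{Attention}(Q W_i^Q, K W_i^K, V W_i^V) = \mathrm{softmax}\left(\frac{Q W_i^Q \left(K W_i^K\right)^\top}{\sqrt{d_k}}\right) V W_i^V$$

Here $Q$, $K$, and $V$ are the query, key, and value matrices (all equal to the BLS node matrix in self-attention), $W_i^Q$, $W_i^K$, $W_i^V$, and $W^O$ are learned projections, $h$ is the number of heads, and $d_k$ is the per-head key dimension.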

After a sufficient number of multi-head attention modules, the authors use a fully connected layer to map the result to the output space.
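
To make the data flow concrete, here is a minimal NumPy sketch of one multi-head self-attention module with a residual connection, followed by a fully connected output layer. It is an illustration under stated assumptions, not the authors' implementation: the function `multi_head_self_attention`, the random weights, and the sizes `N`, `C`, `H` are all hypothetical, and no training is performed.

```python
import numpy as np


def softmax(scores, axis=-1):
    # Subtract the row maximum for numerical stability.
    shifted = scores - scores.max(axis=axis, keepdims=True)
    weights = np.exp(shifted)
    return weights / weights.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (N, C) sequence of N nodes with C features; all weights are (C, C)."""
    n, c = x.shape
    if c % num_heads != 0:
        raise ValueError("C must be divisible by num_heads")
    d_k = c // num_heads

    def split_heads(t):
        # (N, C) -> (num_heads, N, d_k)
        return t.reshape(n, num_heads, d_k).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_k)  # (heads, N, N)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                               # (heads, N, d_k)
    concat = heads.transpose(1, 0, 2).reshape(n, c)   # (N, C)
    out = concat @ w_o
    return x + out, weights                           # residual connection


rng = np.random.default_rng(0)
N, C, H = 5, 8, 4  # illustrative sizes, not taken from the paper
x = rng.standard_normal((N, C))
w_q, w_k, w_v, w_o = (rng.standard_normal((C, C)) * 0.1 for _ in range(4))
y, attn = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads=H)

# Final fully connected layer mapping the flattened features to one prediction.
w_fc = rng.standard_normal((N * C, 1)) * 0.1
prediction = y.reshape(1, -1) @ w_fc
```

Because of the residual connection, the output keeps the input shape, so several such modules can be stacked before the final linear layer.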

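Core Code Reproduction

This article focuses on how Multi-Attn BLS fuses multi-head self-attention with the BLS model; reproducing the time series preprocessing is not the focus here. Below is the Multi-Attn BLS code reproduced by the author of this post. The model is built with the PyTorch framework: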

<span style="background-color:#f8f8f8"><span style="color:#333333"># BLS mapping layers
import numpy as np
from sklearn import preprocessing
from numpy import random
from scipy import linalg as LA

def show_accuracy(predictLabel, Label):
    # Fraction of rows whose arg-max class matches (a classification-style metric).
    count = 0
    label_1 = Label.argmax(axis=1)
    predlabel = predictLabel.argmax(axis=1)
    for j in range(Label.shape[0]):
        if label_1[j] == predlabel[j]:
            count += 1
    return round(count / len(Label), 5)

def tansig(x):
    return (2 / (1 + np.exp(-2 * x))) - 1


def sigmoid(data):
    return 1.0 / (1 + np.exp(-data))


def linear(data):
    return data


def tanh(data):
    return (np.exp(data) - np.exp(-data)) / (np.exp(data) + np.exp(-data))


def relu(data):
    return np.maximum(data, 0)


def pinv(A, reg):
    # Ridge-regularized pseudo-inverse: (reg * I + A^T A)^-1 A^T.
    # np.linalg.inv on plain arrays replaces the original np.mat(...).I.
    return np.linalg.inv(reg * np.eye(A.shape[1]) + A.T.dot(A)).dot(A.T)


def shrinkage(a, b):
    # Soft-thresholding operator
    z = np.maximum(a - b, 0) - np.maximum(-a - b, 0)
    return z

def sparse_bls(A, b):
    # A: nodes of each window after mapping; b: input data with the bias column appended.
    # Iterative soft-thresholding solve for a sparse weight matrix wk.
    lam = 0.001
    itrs = 50
    AA = A.T.dot(A)
    m = A.shape[1]
    n = b.shape[1]
    x1 = np.zeros([m, n])
    wk = x1
    ok = x1
    uk = x1
    # np.linalg.inv on plain arrays replaces the original np.mat(...).I.
    L1 = np.linalg.inv(AA + np.eye(m))
    L2 = (L1.dot(A.T)).dot(b)
    for i in range(itrs):
        ck = L2 + np.dot(L1, (ok - uk))
        ok = shrinkage(ck + uk, lam)
        uk = uk + ck - ok
        wk = ok
    return wk

def generate_mappingFeaturelayer(train_x, FeatureOfInputDataWithBias, N1, N2, OutputOfFeatureMappingLayer, u=0):
    Beta1OfEachWindow = list()
    distOfMaxAndMin = []
    minOfEachWindow = []
    for i in range(N2):
        random.seed(i + u)
        weightOfEachWindow = 2 * random.randn(train_x.shape[1] + 1, N1) - 1
        FeatureOfEachWindow = np.dot(FeatureOfInputDataWithBias, weightOfEachWindow)
        scaler1 = preprocessing.MinMaxScaler(feature_range=(-1, 1)).fit(FeatureOfEachWindow)
        FeatureOfEachWindowAfterPreprocess = scaler1.transform(FeatureOfEachWindow)
        betaOfEachWindow = sparse_bls(FeatureOfEachWindowAfterPreprocess, FeatureOfInputDataWithBias).T
        # betaOfEachWindow = graph_autoencoder(FeatureOfInputDataWithBias, FeatureOfEachWindowAfterPreprocess).T
        Beta1OfEachWindow.append(betaOfEachWindow)
        outputOfEachWindow = np.dot(FeatureOfInputDataWithBias, betaOfEachWindow)
        distOfMaxAndMin.append(np.max(outputOfEachWindow, axis=0) - np.min(outputOfEachWindow, axis=0))
        # Note: despite its name, minOfEachWindow stores the column mean.
        minOfEachWindow.append(np.mean(outputOfEachWindow, axis=0))
        outputOfEachWindow = (outputOfEachWindow - minOfEachWindow[i]) / distOfMaxAndMin[i]
        OutputOfFeatureMappingLayer[:, N1 * i:N1 * (i + 1)] = outputOfEachWindow
    return OutputOfFeatureMappingLayer, Beta1OfEachWindow, distOfMaxAndMin, minOfEachWindow

def generate_enhancelayer(OutputOfFeatureMappingLayer, N1, N2, N3, s):
    InputOfEnhanceLayerWithBias = np.hstack(
        [OutputOfFeatureMappingLayer, 0.1 * np.ones((OutputOfFeatureMappingLayer.shape[0], 1))])
    if N1 * N2 >= N3:
        random.seed(67797325)
        weightOfEnhanceLayer = LA.orth(2 * random.randn(N2 * N1 + 1, N3) - 1)
    else:
        random.seed(67797325)
        weightOfEnhanceLayer = LA.orth(2 * random.randn(N2 * N1 + 1, N3).T - 1).T

    tempOfOutputOfEnhanceLayer = np.dot(InputOfEnhanceLayerWithBias, weightOfEnhanceLayer)
    parameterOfShrink = s / np.max(tempOfOutputOfEnhanceLayer)
    OutputOfEnhanceLayer = tansig(tempOfOutputOfEnhanceLayer * parameterOfShrink)
    return OutputOfEnhanceLayer, parameterOfShrink, weightOfEnhanceLayer

def BLS_Genfeatures(train_x, test_x, N1, N2, N3, s):
    u = 0
    # Training branch: generate feature nodes and enhancement nodes.
    train_x = preprocessing.scale(train_x, axis=1)
    FeatureOfInputDataWithBias = np.hstack([train_x, 0.1 * np.ones((train_x.shape[0], 1))])
    OutputOfFeatureMappingLayer = np.zeros([train_x.shape[0], N2 * N1])

    OutputOfFeatureMappingLayer, Beta1OfEachWindow, distOfMaxAndMin, minOfEachWindow = \
        generate_mappingFeaturelayer(train_x, FeatureOfInputDataWithBias, N1, N2, OutputOfFeatureMappingLayer, u)

    OutputOfEnhanceLayer, parameterOfShrink, weightOfEnhanceLayer = \
        generate_enhancelayer(OutputOfFeatureMappingLayer, N1, N2, N3, s)

    InputOfOutputLayerTrain = np.hstack([OutputOfFeatureMappingLayer, OutputOfEnhanceLayer])

    # Test branch: reuse the weights and scaling statistics obtained on the training data.
    test_x = preprocessing.scale(test_x, axis=1)
    FeatureOfInputDataWithBiasTest = np.hstack([test_x, 0.1 * np.ones((test_x.shape[0], 1))])
    OutputOfFeatureMappingLayerTest = np.zeros([test_x.shape[0], N2 * N1])
    for i in range(N2):
        outputOfEachWindowTest = np.dot(FeatureOfInputDataWithBiasTest, Beta1OfEachWindow[i])
        OutputOfFeatureMappingLayerTest[:, N1 * i:N1 * (i + 1)] = \
            (outputOfEachWindowTest - minOfEachWindow[i]) / distOfMaxAndMin[i]

    InputOfEnhanceLayerWithBiasTest = np.hstack(
        [OutputOfFeatureMappingLayerTest, 0.1 * np.ones((OutputOfFeatureMappingLayerTest.shape[0], 1))])
    tempOfOutputOfEnhanceLayerTest = np.dot(InputOfEnhanceLayerWithBiasTest, weightOfEnhanceLayer)

    OutputOfEnhanceLayerTest = tansig(tempOfOutputOfEnhanceLayerTest * parameterOfShrink)

    InputOfOutputLayerTest = np.hstack([OutputOfFeatureMappingLayerTest, OutputOfEnhanceLayerTest])

    return InputOfOutputLayerTrain, InputOfOutputLayerTest</span></span>
<span style="background-color:#f8f8f8"><span style="color:#333333"># Multi-head attention layer

import torch
import math
import ssl
import numpy as np
import torch.nn.functional as F
import torch.nn as nn

# Caution: this disables HTTPS certificate verification for the whole process.
ssl._create_default_https_context = ssl._create_unverified_context

def pos_encoding(OutputOfFeatureMappingLayer, B, N, C):
    OutputOfFeatureMappingLayer = torch.tensor(OutputOfFeatureMappingLayer, dtype=torch.float).reshape(B, N, C)

    # Maximum sequence length and feature dimension of the positional encoding
    max_sequence_length = OutputOfFeatureMappingLayer.size(1)
    feature_dim = OutputOfFeatureMappingLayer.size(2)
    # Build the sinusoidal positional-encoding matrix
    position_encodings = torch.zeros(max_sequence_length, feature_dim)
    position = torch.arange(0, max_sequence_length, dtype=torch.float).unsqueeze(1)
    div_term = torch.exp(torch.arange(0, feature_dim, 2).float() * (-math.log(10000.0) / feature_dim))
    position_encodings[:, 0::2] = torch.sin(position * div_term)
    # Slice div_term so that an odd feature_dim does not cause a shape mismatch
    position_encodings[:, 1::2] = torch.cos(position * div_term[:feature_dim // 2])
    # Expand the encoding to the shape of the input tensor
    position_encodings = position_encodings.unsqueeze(0).expand_as(OutputOfFeatureMappingLayer)
    OutputOfFeatureMappingLayer = OutputOfFeatureMappingLayer + position_encodings
    return OutputOfFeatureMappingLayer

class MultiHeadSelfAttentionWithResidual(torch.nn.Module):
    def __init__(self, input_dim, output_dim, num_heads=4):
        super(MultiHeadSelfAttentionWithResidual, self).__init__()
        self.input_dim = input_dim
        self.output_dim = output_dim
        self.num_heads = num_heads

        # Output dimension of each attention head (output_dim should be divisible by num_heads)
        self.head_dim = output_dim // num_heads

        # Query, key and value projections
        self.query_weights = torch.nn.Linear(input_dim, output_dim)
        self.key_weights = torch.nn.Linear(input_dim, output_dim)
        self.value_weights = torch.nn.Linear(input_dim, output_dim)

        # Output projection
        self.output_weights = torch.nn.Linear(output_dim, output_dim)

    def forward(self, inputs):
        # inputs: (batch_size, seq_len, input_dim)
        batch_size, seq_len, _ = inputs.size()

        # Compute the query, key and value vectors
        queries = self.query_weights(inputs)  # (batch_size, seq_len, output_dim)
        keys = self.key_weights(inputs)  # (batch_size, seq_len, output_dim)
        values = self.value_weights(inputs)  # (batch_size, seq_len, output_dim)

        # Split the vectors into heads: (batch_size, num_heads, seq_len, head_dim)
        queries = queries.view(batch_size, seq_len, self.num_heads, self.head_dim).transpose(1, 2)
        keys = keys.view(batch_size, seq_len, self.num_heads, self.head_dim).transpose(1, 2)
        values = values.view(batch_size, seq_len, self.num_heads, self.head_dim).transpose(1, 2)

        # Attention scores: (batch_size, num_heads, seq_len, seq_len)
        scores = torch.matmul(queries, keys.transpose(-2, -1))

        # Scale the scores by sqrt(head_dim)
        scores = scores / math.sqrt(self.head_dim)

        # Attention weights: (batch_size, num_heads, seq_len, seq_len)
        attention_weights = F.softmax(scores, dim=-1)

        # Weighted sum of the value vectors: (batch_size, num_heads, seq_len, head_dim)
        attention_output = torch.matmul(attention_weights, values)

        # Concatenate the heads: (batch_size, seq_len, output_dim)
        attention_output = attention_output.transpose(1, 2).contiguous().view(batch_size, seq_len, self.output_dim)

        # Output projection: (batch_size, seq_len, output_dim)
        output = self.output_weights(attention_output)

        # Residual connection (requires output_dim == input_dim)
        output = output + inputs

        return output

class FeedForwardLayerWithResidual(torch.nn.Module):
    def __init__(self, input_dim, hidden_dim):
        super(FeedForwardLayerWithResidual, self).__init__()
        # input_dim is the size of the flattened input, i.e. seq_len * feature_dim
        self.input_dim = input_dim
        self.hidden_dim = hidden_dim
        self.output_dim = input_dim

        # First linear layer
        self.linear1 = torch.nn.Linear(input_dim, hidden_dim)

        # Activation function
        self.relu = torch.nn.ReLU()

        # Second linear layer
        self.linear2 = torch.nn.Linear(hidden_dim, self.output_dim)

    def forward(self, inputs):
        # inputs: (batch_size, seq_len, input_dim)
        batch_size, seq_len, input_dim = inputs.size()

        # Flatten each sample to (batch_size, seq_len * input_dim)
        inputs = inputs.reshape(batch_size, input_dim * seq_len)

        # First linear layer
        hidden = self.linear1(inputs)

        # Activation function
        hidden = self.relu(hidden)

        # Second linear layer
        output = self.linear2(hidden)

        # The output stays flattened; reshaping it back to the input shape is left disabled
        # output = output.view(batch_size, seq_len, self.output_dim)

        # Residual connection with the flattened input
        output = output + inputs

        return output

class MultiAttn_layer(torch.nn.Module):
    def __init__(self, input_dim, output_dim, in_features, hidden_features, layer_num, num_heads=4):
        super(MultiAttn_layer, self).__init__()
        self.input_dim = input_dim
        self.output_dim = output_dim
        self.num_heads = num_heads
        self.norm = nn.LayerNorm(in_features, eps=1e-06, elementwise_affine=True)
        self.FC = nn.Linear(in_features=in_features, out_features=2)
        self.layer_num = layer_num

        # Default the hidden width to 4 * output_dim when none is given
        if hidden_features is None:
            self.hidden_dim = 4 * self.output_dim
        else:
            self.hidden_dim = hidden_features

        # Multi-head self-attention layer
        self.self_attn = MultiHeadSelfAttentionWithResidual(input_dim, output_dim, num_heads)

        # Feed-forward layer; in_features is the flattened size seq_len * output_dim
        self.feed_forward = FeedForwardLayerWithResidual(in_features, self.hidden_dim)

    def forward(self, inputs):
        # inputs: (batch_size, seq_len, input_dim)
        # Apply the same self-attention layer layer_num times (weights are shared)
        attn_output = self.self_attn(inputs)
        for i in range(self.layer_num - 1):
            attn_output = self.self_attn(attn_output)

        # Then the feed-forward layer, layer normalization and the final linear layer
        output = self.feed_forward(attn_output)
        output = self.norm(output)
        output = self.FC(output)
        return output</span></span>

Code optimization
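
When a hand-written attention layer is swapped for a library block, a tiny reference implementation is handy for checking shapes and attention weights. The following is a minimal, dependency-free sketch of single-head scaled dot-product attention with a residual connection. The function names (`softmax`, `scaled_dot_product_attention`, `add_residual`) are illustrative assumptions and not part of the original project code, and the sketch leaves out the learned query/key/value projections and the multi-head split.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries, keys, values):
    """Single-head attention on plain nested lists.

    queries: seq_len_q x d, keys: seq_len_k x d, values: seq_len_k x d_v.
    Returns (output, weights); output has shape seq_len_q x d_v.
    """
    scale = math.sqrt(len(keys[0]))
    weights = []
    for q in queries:
        # Dot product of the query with every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights.append(softmax(scores))
    d_v = len(values[0])
    # Each output row is the attention-weighted sum of the value rows
    output = [
        [sum(w[j] * values[j][c] for j in range(len(values))) for c in range(d_v)]
        for w in weights
    ]
    return output, weights


def add_residual(output, inputs):
    """Element-wise residual connection; both arguments must share a shape."""
    return [[o + x for o, x in zip(o_row, x_row)] for o_row, x_row in zip(output, inputs)]


# Toy self-attention: three time steps with two features each
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = scaled_dot_product_attention(x, x, x)
y = add_residual(out, x)
```

Each row of `w` sums to 1, and `y` keeps the shape of `x`, which is the same constraint the residual connection imposes on the layers above.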

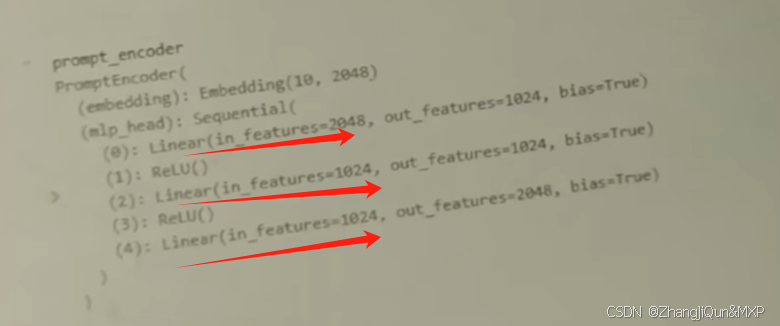

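In the section above, we hand-built a multi-head attention layer and reproduced the feed-forward layer from the paper. In practice, modules from widely used Transformer models can be reused to assemble the model more quickly, and these well-tested architectures tend to be more sound and less error-prone. Here I use part of the Vision Transformer (ViT) architecture to improve the multi-head attention layer. The code is as follows: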

<span style="background-color:#f8f8f8"><span style="color:#333333"># Improved multi-head attention layer
import math
import ssl

import torch
import torch.nn as nn
from timm.models.vision_transformer import vit_base_patch8_224

# Disables HTTPS certificate verification so the pretrained weights can be downloaded
ssl._create_default_https_context = ssl._create_unverified_context

def pos_encoding(OutputOfFeatureMappingLayer, B, N, C):
    # Convert the feature-mapping output to a float tensor of shape (B, N, C)
    OutputOfFeatureMappingLayer = torch.as_tensor(OutputOfFeatureMappingLayer, dtype=torch.float).reshape(B, N, C)

    # Sequence length and feature dimension used by the position encoding
    max_sequence_length = OutputOfFeatureMappingLayer.size(1)
    feature_dim = OutputOfFeatureMappingLayer.size(2)

    # Build the sinusoidal position-encoding matrix
    position_encodings = torch.zeros(max_sequence_length, feature_dim)
    position = torch.arange(0, max_sequence_length, dtype=torch.float).unsqueeze(1)
    div_term = torch.exp(torch.arange(0, feature_dim, 2).float() * (-math.log(10000.0) / feature_dim))
    position_encodings[:, 0::2] = torch.sin(position * div_term)
    # Slicing div_term keeps the shapes aligned when feature_dim is odd
    position_encodings[:, 1::2] = torch.cos(position * div_term[: feature_dim // 2])

    # Broadcast the encoding over the batch dimension and add it to the input
    position_encodings = position_encodings.unsqueeze(0).expand_as(OutputOfFeatureMappingLayer)
    OutputOfFeatureMappingLayer = OutputOfFeatureMappingLayer + position_encodings
    return OutputOfFeatureMappingLayer

class MultiAttn_layer(torch.nn.Module):
    def __init__(self, input_dim, output_dim, in_features, hidden_features, layer_num, num_heads=4):
        super(MultiAttn_layer, self).__init__()
        self.input_dim = input_dim
        self.output_dim = output_dim
        # Stored for reference; the ViT block keeps its own head count
        self.num_head = num_heads
        self.norm = nn.LayerNorm(input_dim, eps=1e-06, elementwise_affine=True)
        self.fc = nn.Linear(in_features=in_features, out_features=2, bias=True)
        self.infeatures = in_features
        self.layer_num = layer_num

        # Take the first Transformer block of a ViT and resize its layers to input_dim.
        # The replaced layers are freshly initialized, so their pretrained weights are not kept.
        model = vit_base_patch8_224(pretrained=True)
        model.blocks[0].norm1 = nn.LayerNorm(input_dim, eps=1e-06, elementwise_affine=True)
        model.blocks[0].attn.qkv = nn.Linear(in_features=input_dim, out_features=input_dim*3, bias=True)
        model.blocks[0].attn.proj = nn.Linear(in_features=input_dim, out_features=input_dim, bias=True)
        # Extra projection attached to the block itself, kept from the original code
        model.blocks[0].proj = nn.Linear(in_features=input_dim, out_features=input_dim, bias=True)
        model.blocks[0].norm2 = nn.LayerNorm(input_dim, eps=1e-06, elementwise_affine=True)
        model.blocks[0].mlp.fc1 = nn.Linear(in_features=input_dim, out_features=input_dim*3, bias=True)
        model.blocks[0].mlp.fc2 = nn.Linear(in_features=input_dim*3, out_features=input_dim, bias=True)
        self.blocks = model.blocks[0]

    def forward(self, inputs):
        # Apply the same ViT block layer_num times (weights are shared)
        output = self.blocks(inputs)
        for i in range(self.layer_num - 1):
            output = self.blocks(output)
        output = self.norm(output)
        # Flatten to (batch_size, in_features) before the final linear layer
        output = output.view(output.shape[0], self.infeatures)
        output = self.fc(output)
        return output

</span></span>Experimental Results

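The original authors ran comparison experiments with their proposed model on two datasets. As the figure below shows, Multi-Attn BLS achieves the best value on all four metrics, outperforming ridge regression, the conventional BLS model, and LSTM.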

Usage

I have bundled the reproduced code behind a single main.py file, which you can invoke from the terminal with the appropriate command.

Environment Setup

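My local environment is macOS on an M2 chip with Python 3.9; try to avoid very old Python versions. This reproduction does not rely on complex or obscure libraries, so the main task is setting up PyTorch. There are plenty of guides for that, and any of them will do.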
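Before running anything, a quick sanity check of the environment can save time. The following is a minimal sketch using only the standard library; the function name `check_environment` and the choice of Python 3.9 as the minimum version are assumptions made for illustration (3.9 is simply the version reported above), not requirements stated by the project.

```python
import importlib.util
import sys


def check_environment(min_python=(3, 9)):
    """Report whether the interpreter and PyTorch look usable for this project.

    min_python is an assumed threshold, taken from the version mentioned above.
    """
    return {
        "python_version": ".".join(str(part) for part in sys.version_info[:3]),
        "python_ok": sys.version_info[:2] >= tuple(min_python),
        # find_spec only looks the package up; it does not import torch
        "torch_installed": importlib.util.find_spec("torch") is not None,
    }


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```

If `torch_installed` comes back `False`, install PyTorch following any of the standard guides before calling main.py.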

File Structure

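The file structure of the code is shown in the figure above. Data_Processing.py contains the data preprocessing functions, which you can skip if you use your own dataset; MultiAttn_layer.py is the reproduced multi-head attention layer; VIT_layer.py is an improved multi-head attention layer built from ViT modules; BLSlayer.py is the BLS mapping layer, which produces the feature layer and the enhancement layer. main.py ties these modules together for end-to-end classification.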

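X.npy and y.npy are the test data I used, holding the samples and the labels respectively. To use your own data, save your samples and labels in the same .npy format in this folder. Their data types and formats are as follows: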

Each row of X is one sample, and 545 is the input dimension of each sample; y is integer-encoded and is a one-dimensional vector.
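To make the layout described above concrete, here is a minimal sketch that writes placeholder X.npy and y.npy files and checks their shapes. The helper names `make_dummy_dataset` and `check_dataset`, the random data, the float32/int64 dtypes, and the two-class labels are all assumptions made for illustration; only the 2-D samples array with 545 columns and the 1-D integer label vector come from the description.

```python
import os
import tempfile

import numpy as np


def make_dummy_dataset(folder, n_samples=32, n_features=545, n_classes=2, seed=0):
    """Write placeholder X.npy / y.npy files (random data, for layout only)."""
    rng = np.random.default_rng(seed)
    # One row per sample, n_features columns (545 in the author's data)
    X = rng.standard_normal((n_samples, n_features)).astype(np.float32)
    # Integer-encoded labels as a 1-D vector; n_classes is an assumed value
    y = rng.integers(0, n_classes, size=n_samples).astype(np.int64)
    x_path = os.path.join(folder, "X.npy")
    y_path = os.path.join(folder, "y.npy")
    np.save(x_path, X)
    np.save(y_path, y)
    return x_path, y_path


def check_dataset(x_path, y_path):
    """Load both files and verify they match the described layout."""
    X = np.load(x_path)
    y = np.load(y_path)
    assert X.ndim == 2, "X must be 2-D: (n_samples, n_features)"
    assert y.ndim == 1, "y must be a 1-D label vector"
    assert X.shape[0] == y.shape[0], "X and y must have the same number of samples"
    assert np.issubdtype(y.dtype, np.integer), "labels must be integer-encoded"
    return X.shape, y.shape


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as folder:
        paths = make_dummy_dataset(folder)
        print(check_dataset(*paths))
```

Running `check_dataset` on your own files before training is a cheap way to catch a transposed array or one-hot labels early.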

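Running from the Terminal

After changing into this folder in the terminal, you can start model training by calling main.py with the following command: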

python3 -c "from main import MultiAttn_BLS; MultiAttn_BLS('./X.npy','./y.npy')"

The input parameters of the MultiAttn_BLS function are as follows:

:param datapath: str, path to the data
:param labelpath: str, path to the labels
:param N1: int, number of feature-node groups in the feature layer, default 12
:param N2: int, number of nodes per group in the feature layer, default 12
:param num_heads: int, number of heads in the multi-head self-attention, default 4
(so that the multi-head self-attention fits the node dimension produced by BLS, N1 should be an odd multiple of num_heads)
:param layer_num: int, number of multi-head self-attention layers, default 3
:param batch_size: int, batch size used to train the multi-head self-attention layers, default 16
:param s: float, scaling factor, usually set to 0.8
:param num_epochs: int, number of training epochs for the multi-head self-attention layers, default 50
:param test_size: float, fraction of the dataset held out as the test set, default 0.3
:return: the trained model

I ran a classification task on a local dataset. The training loss falls steadily as the epochs increase, and the test accuracy reaches 77.52%, which is a decent result. You can modify the model's output layer to suit different learning tasks.
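Two of the parameters above lend themselves to a quick check before training: the note that N1 should be an odd multiple of num_heads, and the test_size split. The sketch below uses only the standard library. The helper names `check_head_config` and `split_indices`, the seed, and the shuffle-then-slice splitting strategy are assumptions made for illustration; how main.py actually validates its arguments or splits the data is not shown in this post.

```python
import random


def check_head_config(N1, num_heads):
    """Return True if N1 is an odd multiple of num_heads (the rule stated above)."""
    if num_heads <= 0 or N1 % num_heads != 0:
        return False
    return (N1 // num_heads) % 2 == 1


def split_indices(n_samples, test_size=0.3, seed=0):
    """Shuffle sample indices and split them into (train, test) lists.

    This is an assumed splitting strategy, not necessarily the one in main.py.
    """
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    n_test = int(round(n_samples * test_size))
    return indices[n_test:], indices[:n_test]


if __name__ == "__main__":
    # The documented defaults: N1=12, num_heads=4, so 12 = 3 * 4
    print(check_head_config(12, 4))
    train_idx, test_idx = split_indices(10, test_size=0.3)
    print(len(train_idx), len(test_idx))
```

With the documented defaults (N1=12, num_heads=4) the check passes, while a combination such as N1=16, num_heads=4 would be rejected because 16 is an even multiple of 4.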


I hope this helps. Good luck!

