AI - 谈谈RAG中的查询分析(2)

AI - 谈谈RAG中的查询分析(2)

大家好,RAG中的查询分析是比较有趣的一个点,内容丰富,并不是一句话能聊的清楚的。今天接着上一篇,继续探讨RAG中的查询分析,并在功能层面和代码层面持续改进。

功能层面

如果用户问了一个不着边际的问题,也就是和工具无关的问题,那么无须调用工具,直接生成答案。否则,就调用工具,检索本地知识库,生成答案。

代码方面

-

考虑到在对答聊天中,对话状态是如此重要,所以我们可以直接使用LangChain内置的

MessagesState,而不用自己定义State类。class State(TypedDict):question: strquery: Searchcontext: List[Document]answer: str -

上一篇的

Search工具主要用于结构化输出,工具本身没有实质性内容,所以本篇会将retrieve作为一个工具,既可以绑定到LLM,也可以通过LangGraph内置的组件 ToolNode,,形成一个Graph节点,在收到LLM的应答之后,开始执行从本地知识库语义搜索的动作,最终生成一个ToolMessage。

实例代码

备注:对于本文中的代码片段,主体来源于LangChain官网,有兴趣的读者可以去官网查看。

import os

from langchain_openai import ChatOpenAI

from langchain_openai import OpenAIEmbeddings

from langchain_core.vectorstores import InMemoryVectorStore

import bs4

from langchain import hub

from langchain_community.document_loaders import WebBaseLoader

from langchain_core.documents import Document

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langgraph.graph import START, StateGraph, MessagesState

from typing_extensions import List, TypedDict

from langchain_core.tools import tool

from langchain_core.messages import SystemMessage

from langgraph.graph import END

from langgraph.prebuilt import ToolNode, tools_condition# Setup environment variables for authentication

os.environ["OPENAI_API_KEY"] = 'your_openai_api_key'# Initialize OpenAI embeddings using a specified model

embeddings = OpenAIEmbeddings(model="text-embedding-3-large")# Create an in-memory vector store to store the embeddings

vector_store = InMemoryVectorStore(embeddings)# Initialize the language model from OpenAI

llm = ChatOpenAI(model="gpt-4o-mini")# Setup the document loader for a given web URL, specifying elements to parse

loader = WebBaseLoader(web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),bs_kwargs=dict(parse_only=bs4.SoupStrainer(class_=("post-content", "post-title", "post-header"))),

)

# Load the documents from the web page

docs = loader.load()# Initialize a text splitter to chunk the document text

text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

all_splits = text_splitter.split_documents(docs)# Index the chunks in the vector store

_ = vector_store.add_documents(documents=all_splits)# Define a retrieval tool to get relevant documents for a query

@tool(response_format="content_and_artifact")

def retrieve(query: str):"""Retrieve information related to a query."""retrieved_docs = vector_store.similarity_search(query, k=2)serialized = "\n\n".join((f"Source: {doc.metadata}\n" f"Content: {doc.page_content}")for doc in retrieved_docs)return serialized, retrieved_docs# Step 1: Function to generate a tool call or respond based on the state

def query_or_respond(state: MessagesState):"""Generate tool call for retrieval or respond."""llm_with_tools = llm.bind_tools([retrieve]) # Bind the retrieve tool to LLMresponse = llm_with_tools.invoke(state["messages"]) # Invoke the LLM with current messagesreturn {"messages": [response]} # Return the response messages# Step 2: Execute the retrieval tool

tools = ToolNode([retrieve])# Step 3: Function to generate a response using retrieved content

def generate(state: MessagesState):"""Generate answer."""# Get the most recent tool messagesrecent_tool_messages = []for message in reversed(state["messages"]):if message.type == "tool":recent_tool_messages.append(message)else:breaktool_messages = recent_tool_messages[::-1] # Reverse to get the original order# Create a system message with the retrieved contextdocs_content = "\n\n".join(doc.content for doc in tool_messages)system_message_content = ("You are an assistant for question-answering tasks. ""Use the following pieces of retrieved context to answer ""the question. If you don't know the answer, say that you ""don't know. Use three sentences maximum and keep the ""answer concise.""\n\n"f"{docs_content}")# Filter human and system messages for the promptconversation_messages = [messagefor message in state["messages"]if message.type in ("human", "system")or (message.type == "ai" and not message.tool_calls)]prompt = [SystemMessage(system_message_content)] + conversation_messages# Invoke the LLM with the promptresponse = llm.invoke(prompt)return {"messages": [response]}# Build the state graph for managing message state transitions

graph_builder = StateGraph(MessagesState)

graph_builder.add_node(query_or_respond) # Add query_or_respond node to the graph

graph_builder.add_node(tools) # Add tools node to the graph

graph_builder.add_node(generate) # Add generate node to the graph# Set the entry point for the state graph

graph_builder.set_entry_point("query_or_respond")

# Define conditional edges based on tool invocation

graph_builder.add_conditional_edges("query_or_respond",tools_condition,{END: END, "tools": "tools"},

)

graph_builder.add_edge("tools", "generate") # Define transition from tools to generate

graph_builder.add_edge("generate", END) # Define transition from generate to END# Compile the graph

graph = graph_builder.compile()# Interact with the compiled graph using an initial input message

input_message = "Hello"

for step in graph.stream({"messages": [{"role": "user", "content": input_message}]},stream_mode="values",

):step["messages"][-1].pretty_print() # Print the final message# Another interaction with the graph with a different input message

input_message = "What is Task Decomposition?"

for step in graph.stream({"messages": [{"role": "user", "content": input_message}]},stream_mode="values",

):step["messages"][-1].pretty_print() # Print the final message

代码详解

导入必要的库

import os

from langchain_openai import ChatOpenAI, OpenAIEmbeddings

from langchain_core.vectorstores import InMemoryVectorStore

import bs4

from langchain import hub

from langchain_community.document_loaders import WebBaseLoader

from langchain_core.documents import Document

from langchain_text_splitters import RecursiveCharacterTextSplitter

from langgraph.graph import START, StateGraph, MessagesState

from typing_extensions import List, TypedDict

from langchain_core.tools import tool

from langchain_core.messages import SystemMessage

from langgraph.graph import END

from langgraph.prebuilt import ToolNode, tools_condition

我们首先导入了需要的库,这些库提供了处理语言和存储向量的工具。

设置环境变量

os.environ["OPENAI_API_KEY"] = 'your_openai_api_key'

设置一些环境变量,用于API的身份验证和项目配置。

初始化嵌入模型和向量存储

embeddings = OpenAIEmbeddings(model="text-embedding-3-large")

vector_store = InMemoryVectorStore(embeddings)

我们使用OpenAI的嵌入模型来创建文本嵌入,并在内存中初始化一个向量存储,用于后续的向量操作。

llm = ChatOpenAI(model="gpt-4o-mini")

初始化GPT-4小版本的语言模型,用于后续的AI对话生成。

加载和分割文档

loader = WebBaseLoader(web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),bs_kwargs=dict(parse_only=bs4.SoupStrainer(class_=("post-content", "post-title", "post-header"))),

)

docs = loader.load()text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

all_splits = text_splitter.split_documents(docs)

加载指定网页的内容,并对页面内容进行解析和分割。分割后的文本块将用于嵌入和向量存储。

向量存储文档

_ = vector_store.add_documents(documents=all_splits)

将分割后的文档片段添加到向量存储中,以供后续检索操作。

定义检索工具

@tool(response_format="content_and_artifact")

def retrieve(query: str):"""Retrieve information related to a query."""retrieved_docs = vector_store.similarity_search(query, k=2)serialized = "\n\n".join((f"Source: {doc.metadata}\n" f"Content: {doc.page_content}")for doc in retrieved_docs)return serialized, retrieved_docs

定义一个检索工具函数retrieve,该函数可以根据查询在向量存储中进行相似性搜索,并返回检索到的文档内容。

定义步骤:生成工具调用或直接回复

def query_or_respond(state: MessagesState):"""Generate tool call for retrieval or respond."""llm_with_tools = llm.bind_tools([retrieve])response = llm_with_tools.invoke(state["messages"])return {"messages": [response]}

该函数根据当前的消息状态生成调用检索工具的请求或直接生成回复。

定义步骤:执行检索工具

tools = ToolNode([retrieve])

定义一个执行检索工具的步骤。

定义步骤:生成回答

def generate(state: MessagesState):"""Generate answer."""recent_tool_messages = []for message in reversed(state["messages"]):if message.type == "tool":recent_tool_messages.append(message)else:breaktool_messages = recent_tool_messages[::-1]docs_content = "\n\n".join(doc.content for doc in tool_messages)system_message_content = ("You are an assistant for question-answering tasks. ""Use the following pieces of retrieved context to answer ""the question. If you don't know the answer, say that you ""don't know. Use three sentences maximum and keep the ""answer concise.""\n\n"f"{docs_content}")conversation_messages = [messagefor message in state["messages"]if message.type in ("human", "system")or (message.type == "ai" and not message.tool_calls)]prompt = [SystemMessage(system_message_content)] + conversation_messagesresponse = llm.invoke(prompt)return {"messages": [response]}

该函数生成最后的回答。它会首先收集最近的工具消息,并结合这些消息内容生成系统消息,然后与现有对话消息一起作为提示,最终调用LLM生成回复。

构建状态图

graph_builder = StateGraph(MessagesState)

graph_builder.add_node(query_or_respond)

graph_builder.add_node(tools)

graph_builder.add_node(generate)graph_builder.set_entry_point("query_or_respond")

graph_builder.add_conditional_edges("query_or_respond",tools_condition,{END: END, "tools": "tools"},

)

graph_builder.add_edge("tools", "generate")

graph_builder.add_edge("generate", END)graph = graph_builder.compile()

使用状态图构建器创建一个消息状态图,并添加节点和条件边,确定消息的流转逻辑。

与状态图进行交互

input_message = "Hello"

for step in graph.stream({"messages": [{"role": "user", "content": input_message}]},stream_mode="values",

):step["messages"][-1].pretty_print()input_message = "What is Task Decomposition?"

for step in graph.stream({"messages": [{"role": "user", "content": input_message}]},stream_mode="values",

):step["messages"][-1].pretty_print()

我们通过给定的输入消息与状态图进行互动,流式处理消息,并最终打印出生成的回复。

LLM消息抓取

以上整个过程中,我们都是在调用LangChain API与LLM在进行交互,至于底层发送的请求细节,一无所知。在某些场景下面,我们还是需要去探究一下这些具体的细节,这样可以有一个全面的了解。下面我们看一下具体的发送内容,以上代码涉及到三个LLM交互。

交互1

提问

{"messages": [[{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","HumanMessage"],"kwargs": {"content": "Hello","type": "human","id": "da95e909-50bb-4204-8aad-4181dcccbffb"}}]]

}

回答

{"generations": [[{"text": "Hello! How can I assist you today?","generation_info": {"finish_reason": "stop","logprobs": null},"type": "ChatGeneration","message": {"lc": 1,"type": "constructor","id": ["langchain","schema","messages","AIMessage"],"kwargs": {"content": "Hello! How can I assist you today?","additional_kwargs": {"refusal": null},"response_metadata": {"token_usage": {"completion_tokens": 10,"prompt_tokens": 44,"total_tokens": 54,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_3de1288069","finish_reason": "stop","logprobs": null},"type": "ai","id": "run-611efcc9-1fe5-47e4-83fc-f42623556d93-0","usage_metadata": {"input_tokens": 44,"output_tokens": 10,"total_tokens": 54,"input_token_details": {"audio": 0,"cache_read": 0},"output_token_details": {"audio": 0,"reasoning": 0}},"tool_calls": [],"invalid_tool_calls": []}}}]],"llm_output": {"token_usage": {"completion_tokens": 10,"prompt_tokens": 44,"total_tokens": 54,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_3de1288069"},"run": null,"type": "LLMResult"

}

交互2

提问

{"messages": [[{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","HumanMessage"],"kwargs": {"content": "What is Task Decomposition?","type": "human","id": "6a790b36-fafd-4ff3-b293-9bb3ac9f4157"}}]]

}

回答

{"generations": [[{"text": "","generation_info": {"finish_reason": "tool_calls","logprobs": null},"type": "ChatGeneration","message": {"lc": 1,"type": "constructor","id": ["langchain","schema","messages","AIMessage"],"kwargs": {"content": "","additional_kwargs": {"tool_calls": [{"id": "call_RClqnmrtp2sbwIbb2jHm0VeQ","function": {"arguments": "{\"query\":\"Task Decomposition\"}","name": "retrieve"},"type": "function"}],"refusal": null},"response_metadata": {"token_usage": {"completion_tokens": 15,"prompt_tokens": 49,"total_tokens": 64,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_0705bf87c0","finish_reason": "tool_calls","logprobs": null},"type": "ai","id": "run-056b1c5a-cd5c-40cf-940c-bbf98512615d-0","tool_calls": [{"name": "retrieve","args": {"query": "Task Decomposition"},"id": "call_RClqnmrtp2sbwIbb2jHm0VeQ","type": "tool_call"}],"usage_metadata": {"input_tokens": 49,"output_tokens": 15,"total_tokens": 64,"input_token_details": {"audio": 0,"cache_read": 0},"output_token_details": {"audio": 0,"reasoning": 0}},"invalid_tool_calls": []}}}]],"llm_output": {"token_usage": {"completion_tokens": 15,"prompt_tokens": 49,"total_tokens": 64,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_0705bf87c0"},"run": null,"type": "LLMResult"

}

交互3

提问

{"messages": [[{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","SystemMessage"],"kwargs": {"content": "You are an assistant for question-answering tasks. Use the following pieces of retrieved context to answer the question. If you don't know the answer, say that you don't know. Use three sentences maximum and keep the answer concise.\n\nSource: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}\nContent: Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.\n\nSource: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}\nContent: Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like \"Steps for XYZ.\\n1.\", \"What are the subgoals for achieving XYZ?\", (2) by using task-specific instructions; e.g. \"Write a story outline.\" for writing a novel, or (3) with human inputs.","type": "system"}},{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","HumanMessage"],"kwargs": {"content": "What is Task Decomposition?","type": "human","id": "6a790b36-fafd-4ff3-b293-9bb3ac9f4157"}}]]

}

回答

{"generations": [[{"text": "Task Decomposition is the process of breaking down a complicated task into smaller, more manageable steps. It often involves techniques like Chain of Thought (CoT), where the model is prompted to think step-by-step, enhancing performance on complex tasks. This approach helps to clarify the model's thinking process and makes it easier to tackle difficult problems.","generation_info": {"finish_reason": "stop","logprobs": null},"type": "ChatGeneration","message": {"lc": 1,"type": "constructor","id": ["langchain","schema","messages","AIMessage"],"kwargs": {"content": "Task Decomposition is the process of breaking down a complicated task into smaller, more manageable steps. It often involves techniques like Chain of Thought (CoT), where the model is prompted to think step-by-step, enhancing performance on complex tasks. This approach helps to clarify the model's thinking process and makes it easier to tackle difficult problems.","additional_kwargs": {"refusal": null},"response_metadata": {"token_usage": {"completion_tokens": 67,"prompt_tokens": 384,"total_tokens": 451,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_0705bf87c0","finish_reason": "stop","logprobs": null},"type": "ai","id": "run-b3565b23-18d5-439d-a87b-f836ee281d91-0","usage_metadata": {"input_tokens": 384,"output_tokens": 67,"total_tokens": 451,"input_token_details": {"audio": 0,"cache_read": 0},"output_token_details": {"audio": 0,"reasoning": 0}},"tool_calls": [],"invalid_tool_calls": []}}}]],"llm_output": {"token_usage": {"completion_tokens": 67,"prompt_tokens": 384,"total_tokens": 451,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_0705bf87c0"},"run": null,"type": "LLMResult"

}

总结

本文通过OpenAI语言模型和自定义检索工具,构建了一个智能问答系统。首先,从网络上加载和分割文档内容,并将其存储到向量数据库中。然后,定义一个检索工具,可以根据查询请求从数据库中寻找相关文档。使用状态图管理对话流程,根据不同条件,系统会决定是否调用检索工具或者直接生成回复。最终,通过与状态图交互,实现智能应答。这样一个系统大大增强了自动化问答的能力,通过结合嵌入模型和语言模型,能够处理更为复杂和多样化的用户查询。

相关文章:

AI - 谈谈RAG中的查询分析(2)

AI - 谈谈RAG中的查询分析(2) 大家好,RAG中的查询分析是比较有趣的一个点,内容丰富,并不是一句话能聊的清楚的。今天接着上一篇,继续探讨RAG中的查询分析,并在功能层面和代码层面持续改进。 功…...

Java基础面试题,46道Java基础八股文(4.8万字,30+手绘图)

Java是一种广泛使用的编程语言,由Sun Microsystems(现为Oracle Corporation的一部分)在1995年首次发布。它是一种面向对象的语言,这意味着它支持通过类和对象的概念来构造程序。 Java设计有一个核心理念:“编写一次&am…...

taro小程序马甲包插件

插件名 maloulab/taro-plugins-socksuppet-ci maloulab/taro-plugins-socksuppet-ci安装 yarn add maloulab/taro-plugins-socksuppet-ci or npm i maloulab/taro-plugins-socksuppet-ci插件描述 taro官方是提供了小程序集成插件的tarojs/plugin-mini-ci ,且支持…...

【分组去重】.NET开源 ORM 框架 SqlSugar 系列

💥 .NET开源 ORM 框架 SqlSugar 系列 🎉🎉🎉 【开篇】.NET开源 ORM 框架 SqlSugar 系列【入门必看】.NET开源 ORM 框架 SqlSugar 系列【实体配置】.NET开源 ORM 框架 SqlSugar 系列【Db First】.NET开源 ORM 框架 SqlSugar 系列…...

2020年

C D A C B B A B C B A 42...

基于Matlab卡尔曼滤波的GPS/INS集成导航系统研究与实现

随着智能交通和无人驾驶技术的迅猛发展,精确可靠的导航系统已成为提升车辆定位精度与安全性的重要技术。全球定位系统(GPS)和惯性导航系统(INS)在导航应用中各具优势:GPS提供全球定位信息,而INS…...

《只狼》运行时提示“mfc140u.dll文件缺失”是什么原因?“找不到mfc140u.dll文件”要怎么解决?教你几招轻松搞定

《只狼》运行时提示“mfc140u.dll文件缺失”的科普与解决方案 作为一名软件开发从业者,在游戏开发和维护过程中,我们经常会遇到各种运行时错误和系统报错。今天,我们就来探讨一下《只狼》这款游戏在运行时提示“mfc140u.dll文件缺失”的原因…...

C语言:指针与数组

一、. 数组名的理解 int arr[5] { 0,1,2,3,4 }; int* p &arr[0]; 在之前我们知道要取一个数组的首元素地址就可以使用&arr[0],但其实数组名本身就是地址,而且是数组首元素的地址。在下图中我们就通过测试看出,结果确实如此。 可是…...

win11无法检测到其他显示器-NVIDIA

https://www.nvidia.cn/software/nvidia-app/ https://cn.download.nvidia.cn/nvapp/client/11.0.1.163/NVIDIA_app_v11.0.1.163.exe 下载安装后,检测驱动、更新驱动。...

SQLite:DDL(数据定义语言)的基本用法

SQLite:DDL(数据定义语言)的基本用法 1 主要内容说明2 相关内容说明2.1 创建表格(create table)2.1.1 SQLite常见的数据类型2.1.1.1 integer(整型)2.1.1.2 text(文本型)2…...

AI工具集:一站式1000+人工智能工具导航站

在当今数字化时代,人工智能(AI)技术的飞速发展催生了众多实用的AI工具,但面对如此多的选择,想要找到适合自己的高质量AI工具却并非易事。网络搜索往往充斥着推广内容,真正有价值的信息被淹没其中。为了解决…...

视觉处理基础2

目录 1.池化层 1.1 概述 1.2 池化层计算 1.3 步长Stride 1.4 边缘填充Padding 1.5 多通道池化计算 1.6 池化层的作用 2. 卷积拓展 2.1 二维卷积 2.1.1 单通道版本 2.1.2 多通道版本 2.2 三维卷积 2.3 反卷积 2.4 空洞卷积(膨胀卷积) 2.5 …...

代码随想录第十四天|二叉树part02--226.翻转二叉树、101.对称二叉树、104.二叉树的最大深度、111.二叉树的最小深度

资料引用: 226.翻转二叉树(226.翻转二叉树) 101.对称二叉树(101.对称二叉树) 104.二叉树的最大深度(104.二叉树的最大深度) 111.二叉树的最小深度(111.二叉树的最小深度)…...

vue基础之7:天气案例、监视属性、深度监视、监视属性(简写)

欢迎来到“雪碧聊技术”CSDN博客! 在这里,您将踏入一个专注于Java开发技术的知识殿堂。无论您是Java编程的初学者,还是具有一定经验的开发者,相信我的博客都能为您提供宝贵的学习资源和实用技巧。作为您的技术向导,我将…...

JS实现高效导航——A*寻路算法+导航图简化法

一、如何实现两点间路径导航 导航实现的通用步骤,一般是: 1、网格划分 将地图划分为网格,即例如地图是一张图片,其像素为1000*1000,那我们将此图片划分为各个10*10的网格,从而提高寻路算法的计算量。 2、标…...

Spring Authorization Server登出说明与实践

本章内容概览 Spring Security提供的/logout登出接口做了什么与如何自定义。Spring Authorization Server提供的/connect/logout登出接口做了什么与如何自定义。Spring Authorization Server提供的/oauth2/revoke撤销token接口做了什么与如何自定义。 前言 既然系统中有登录功…...

浏览器报错 | 代理服务器可能有问题,或地址不正确

1 问题描述 Windows连网情况下,浏览器访问地址显示“你尚未连接,代理服务器可能有问题,或地址不正确。”出现如下画面: 2 解决方法 途径1 控制面板-->网络与internet-->internet选项-->Internet属性-->连接-->…...

泷羽sec:shell编程(9)不同脚本的互相调用和重定向操作

声明: 学习视频来自B站up主 泷羽sec 有兴趣的师傅可以关注一下,如涉及侵权马上删除文章,笔记只是方便各位师傅的学习和探讨,文章所提到的网站以及内容,只做学习交流,其他均与本人以及泷羽sec团队无关&#…...

Milvus×OPPO:如何构建更懂你的大模型助手

01. 背景 AI业务快速增长下传统关系型数据库无法满足需求。 2024年恰逢OPPO品牌20周年,OPPO也宣布正式进入AI手机的时代。超千万用户开始通过例如通话摘要、新小布助手、小布照相馆等搭载在OPPO手机上的应用体验AI能力。 与传统的应用不同的是,在AI驱动的…...

单片机几大时钟源

在单片机中,MSI、HSI和HSE通常指的是用于内部晶振配置的不同功能模块: MSI (Master Oscillator System Interface):这是最低级的一种时钟源管理单元,它控制着最基本的系统时钟(SYSCLK),一般由外…...

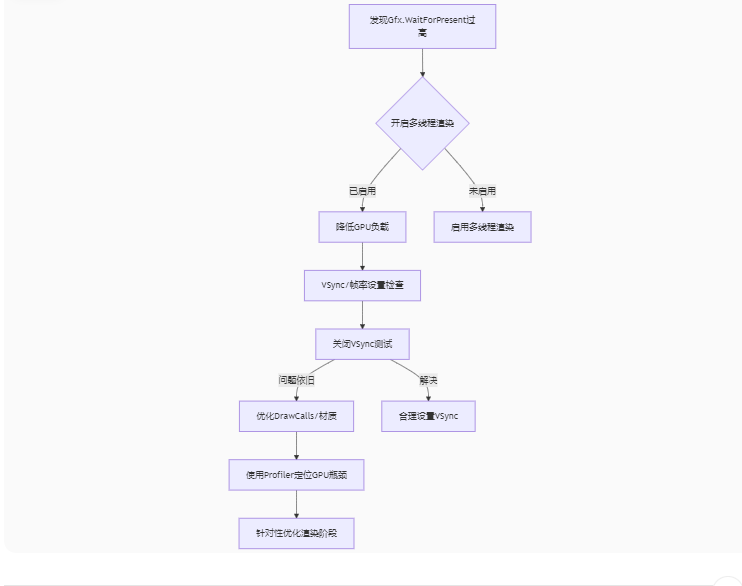

Unity3D中Gfx.WaitForPresent优化方案

前言 在Unity中,Gfx.WaitForPresent占用CPU过高通常表示主线程在等待GPU完成渲染(即CPU被阻塞),这表明存在GPU瓶颈或垂直同步/帧率设置问题。以下是系统的优化方案: 对惹,这里有一个游戏开发交流小组&…...

前端倒计时误差!

提示:记录工作中遇到的需求及解决办法 文章目录 前言一、误差从何而来?二、五大解决方案1. 动态校准法(基础版)2. Web Worker 计时3. 服务器时间同步4. Performance API 高精度计时5. 页面可见性API优化三、生产环境最佳实践四、终极解决方案架构前言 前几天听说公司某个项…...

HTML 列表、表格、表单

1 列表标签 作用:布局内容排列整齐的区域 列表分类:无序列表、有序列表、定义列表。 例如: 1.1 无序列表 标签:ul 嵌套 li,ul是无序列表,li是列表条目。 注意事项: ul 标签里面只能包裹 li…...

2021-03-15 iview一些问题

1.iview 在使用tree组件时,发现没有set类的方法,只有get,那么要改变tree值,只能遍历treeData,递归修改treeData的checked,发现无法更改,原因在于check模式下,子元素的勾选状态跟父节…...

C# SqlSugar:依赖注入与仓储模式实践

C# SqlSugar:依赖注入与仓储模式实践 在 C# 的应用开发中,数据库操作是必不可少的环节。为了让数据访问层更加简洁、高效且易于维护,许多开发者会选择成熟的 ORM(对象关系映射)框架,SqlSugar 就是其中备受…...

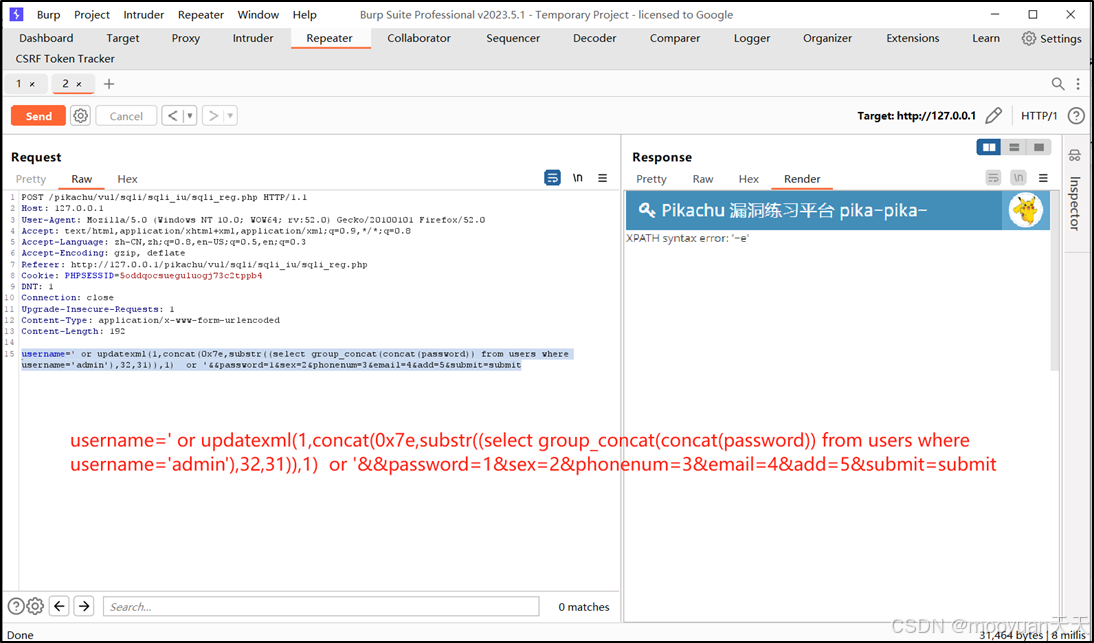

pikachu靶场通关笔记22-1 SQL注入05-1-insert注入(报错法)

目录 一、SQL注入 二、insert注入 三、报错型注入 四、updatexml函数 五、源码审计 六、insert渗透实战 1、渗透准备 2、获取数据库名database 3、获取表名table 4、获取列名column 5、获取字段 本系列为通过《pikachu靶场通关笔记》的SQL注入关卡(共10关࿰…...

Spring数据访问模块设计

前面我们已经完成了IoC和web模块的设计,聪明的码友立马就知道了,该到数据访问模块了,要不就这俩玩个6啊,查库势在必行,至此,它来了。 一、核心设计理念 1、痛点在哪 应用离不开数据(数据库、No…...

智能分布式爬虫的数据处理流水线优化:基于深度强化学习的数据质量控制

在数字化浪潮席卷全球的今天,数据已成为企业和研究机构的核心资产。智能分布式爬虫作为高效的数据采集工具,在大规模数据获取中发挥着关键作用。然而,传统的数据处理流水线在面对复杂多变的网络环境和海量异构数据时,常出现数据质…...

AI书签管理工具开发全记录(十九):嵌入资源处理

1.前言 📝 在上一篇文章中,我们完成了书签的导入导出功能。本篇文章我们研究如何处理嵌入资源,方便后续将资源打包到一个可执行文件中。 2.embed介绍 🎯 Go 1.16 引入了革命性的 embed 包,彻底改变了静态资源管理的…...

力扣-35.搜索插入位置

题目描述 给定一个排序数组和一个目标值,在数组中找到目标值,并返回其索引。如果目标值不存在于数组中,返回它将会被按顺序插入的位置。 请必须使用时间复杂度为 O(log n) 的算法。 class Solution {public int searchInsert(int[] nums, …...