AI - 浅聊一下基于LangChain的AI Agent

AI - 浅聊一下基于LangChain的AI Agent

大家好,今天我们来聊聊一个很有意思的主题: AI Agent ,就是目前非常流行的所谓的AI智能体。AI的发展日新月异,都2024年末了,如果此时小伙伴们对这个非常火的概念还不清楚的话,似乎有点落伍于时代了,希望今天通过这个短文来普及一下。

什么是AI Agent?

简单来说,AI Agent 就是一个能够自主进行任务的人工智能系统。它可以理解输入、处理数据并做出决策。比如自动客服机器人就在很多情况下充当AI Agent。AI Agent通过接收用户的指令和信息,利用内置的AI模型或算法进行处理,并提供相应的输出或采取行动。

AI Agent与RAG

RAG(Retrieval-Augmented Generation) 是一种结合检索和生成模型的方法,用来回答复杂问题或者生成内容。它的主要思路是先从一个大型数据库中检索出相关的信息,再用生成模型(比如GPT-3)来生成最终的回答。

Agent与RAG的比较

-

处理方式:

- AI Agent:更多是独立执行任务,可能会包括决策过程。它处理的任务可以是广泛的,比如对话、数据处理、业务操作等。

- RAG:主要用于回答问题或者生成内容,通过检索相关信息然后生成回答。

-

优势:

- AI Agent:更通用,可以用于多种任务。适用于自动化操作、实时交互等。

- RAG:结合了检索和生成的优势,能够回答非常复杂的问题,并且回答质量通常比较高。

-

实现难度:

- AI Agent:需要实现框架、决策逻辑、模型集成等,复杂度较高。

- RAG:实现相对简单一些,主要是将检索和生成模型有效结合。

Agent与RAG的关系

理解AI Agent与RAG(Retrieval-Augmented Generation)的关系以及它们如何一起工作是一个很重要的课题。AI Agent 可以看作是一个更高层次的系统,它能够自主进行任务并做出决策。而 RAG 是一个技术方法,主要用于增强生成模型的能力,使其可以利用从大数据集或知识库中检索到的信息来生成更准确和相关的回答。简而言之,AI Agent可以构建在RAG之上,利用RAG的方法来提升问答和生成任务的效果。

那么为什么要在RAG上构建AI Agent?

-

提高优质回答的能力:RAG方法结合了检索和生成的优点,能够从庞大的知识库中检索出相关的信息,再通过生成模型来生成准确的回答。

-

保持上下文和连续性:RAG方法可以通过检索到的相关文档来保持对话的上下文和连续性,这对于长对话和复杂问题尤为重要。

-

增强多任务处理:AI Agent可以利用RAG来处理多个任务,比如询问问题、提供建议或者执行操作等。

AI Agent的优点和缺点

优点

- 自动化:AI Agent可以自动处理很多重复性任务,比如客服、数据分析等,减少人力成本。

- 24/7服务:与人类不同,AI Agent可以全天候工作,不受时间限制。

- 速度快:处理信息和响应速度非常快,能够即时提供服务。

- 数据驱动:能够处理和分析大量数据,从中提取有价值的见解。

缺点

-

依赖数据和模型:AI Agent的性能高度依赖于训练数据和模型的质量。如果数据有偏见,结果可能也会有偏见。

-

缺乏创造性:AI Agent在处理突发情况和非结构化问题时,往往不如人类灵活。

-

隐私问题:处理敏感数据时,存在隐私和安全风险。

用LangChain实现一个基于RAG的AI Agent

通过以下步骤,我们可以基于LangChain和OpenAI实现一个最小化的基于RAG的AI Agent。代码的主要目的是创建一个基于LangChain的AI Agent,该AI Agent能够检索和生成回答,并保持对话的上下文。以下是逐步解析每个部分的内容:

导入所需模块

首先,我们需要导入各种库和模块:

import os

from langchain_openai import ChatOpenAI # 导入OpenAI聊天模型类

from langchain_openai import OpenAIEmbeddings # 导入OpenAI嵌入模型类

from langchain_core.vectorstores import InMemoryVectorStore # 导入内存向量存储类

import bs4 # 导入BeautifulSoup库用于网页解析

from langchain_core.tools import tool # 导入工具装饰器

from langchain_community.document_loaders import WebBaseLoader # 导入Web页面加载器

from langchain_text_splitters import RecursiveCharacterTextSplitter # 导入递归字符文本切分器

from langgraph.checkpoint.memory import MemorySaver # 导入内存保存器

from langgraph.prebuilt import create_react_agent # 导入create_react_agent函数

设置API密钥

设置OpenAI的API密钥,这是为了能够使用OpenAI提供的模型和服务:

os.environ["OPENAI_API_KEY"] = 'your-api-key'

初始化嵌入模型和向量存储

初始化OpenAI嵌入模型和内存向量存储,用于后续的文档处理和搜索:

embeddings = OpenAIEmbeddings(model="text-embedding-3-large")

vector_store = InMemoryVectorStore(embeddings)

初始化聊天模型

初始化一个OpenAI的聊天模型,这里使用的是gpt-4o-mini:

llm = ChatOpenAI(model="gpt-4o-mini")

加载并切分文档内容

使用WebBaseLoader从指定的网址加载文档内容,并使用BeautifulSoup仅解析指定的部分(如文章内容、标题和头部),然后使用文本切分器来将文档切分成小块:

loader = WebBaseLoader(web_paths=("https://lilianweng.github.io/posts/2023-06-23-agent/",),bs_kwargs=dict(parse_only=bs4.SoupStrainer(class_=("post-content", "post-title", "post-header"))),

)

docs = loader.load() # 加载文档内容text_splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)

all_splits = text_splitter.split_documents(docs) # 切分文档# 将切分后的文档添加到向量存储

_ = vector_store.add_documents(documents=all_splits)

定义检索工具函数

定义一个用@tool装饰器修饰的检索工具函数,用于根据用户的查询检索相关内容:

@tool(response_format="content_and_artifact")

def retrieve(query: str):"""Retrieve information related to a query."""retrieved_docs = vector_store.similarity_search(query, k=2) # 从向量存储中检索最相似的2个文档serialized = "\n\n".join((f"Source: {doc.metadata}\n" f"Content: {doc.page_content}")for doc in retrieved_docs)return serialized, retrieved_docs # 返回序列化内容和检索到的文档

初始化内存保存器和创建反应代理

使用MemorySaver来保存对话状态,并使用create_react_agent函数创建AI Agent:

memory = MemorySaver() # 初始化内存保存器

agent_executor = create_react_agent(llm, [retrieve], checkpointer=memory) # 创建AI Agent

指定对话线程ID

指定一个对话的线程ID,用于管理多轮对话:

config = {"configurable": {"thread_id": "def234"}}

示例对话

定义一个输入消息,然后运行AI Agent来处理这个消息,并打印最后的响应:

input_message = ("What is the standard method for Task Decomposition?\n\n""Once you get the answer, look up common extensions of that method."

)for event in agent_executor.stream({"messages": [{"role": "user", "content": input_message}]},stream_mode="values",config=config,

):event["messages"][-1].pretty_print() # 打印最后一条消息内容

通过这种方式,可以实现一个基于LangChain的最小化AI Agent,它能够智能地响应用户查询,并在对话中保持上下文的一致性。

代码输出

================================ Human Message =================================What is the standard method for Task Decomposition?Once you get the answer, look up common extensions of that method.

================================== Ai Message ==================================

Tool Calls:retrieve (call_HmlIDGfLMemXCh88ut0Fqzxz)Call ID: call_HmlIDGfLMemXCh88ut0FqzxzArgs:query: standard method for Task Decomposition

================================= Tool Message =================================

Name: retrieveSource: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}

Content: Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.

Task decomposition can be done (1) by LLM with simple prompting like "Steps for XYZ.\n1.", "What are the subgoals for achieving XYZ?", (2) by using task-specific instructions; e.g. "Write a story outline." for writing a novel, or (3) with human inputs.Source: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}

Content: Fig. 1. Overview of a LLM-powered autonomous agent system.

Component One: Planning#

A complicated task usually involves many steps. An agent needs to know what they are and plan ahead.

Task Decomposition#

Chain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.

================================== Ai Message ==================================

Tool Calls:retrieve (call_gk1TfyTqR131FuctblLrjkz4)Call ID: call_gk1TfyTqR131FuctblLrjkz4Args:query: common extensions of Task Decomposition methods

================================= Tool Message =================================

Name: retrieveSource: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}

Content: Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.

Task decomposition can be done (1) by LLM with simple prompting like "Steps for XYZ.\n1.", "What are the subgoals for achieving XYZ?", (2) by using task-specific instructions; e.g. "Write a story outline." for writing a novel, or (3) with human inputs.Source: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}

Content: Fig. 1. Overview of a LLM-powered autonomous agent system.

Component One: Planning#

A complicated task usually involves many steps. An agent needs to know what they are and plan ahead.

Task Decomposition#

Chain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.

================================== Ai Message ==================================The standard method for Task Decomposition involves a technique known as the Chain of Thought (CoT), which enhances model performance on complex tasks by instructing it to "think step by step." This allows the model to break down large tasks into smaller, more manageable steps. Task decomposition can be achieved through various means, such as:1. **Simple Prompting**: Using prompts like "Steps for XYZ." or "What are the subgoals for achieving XYZ?"

2. **Task-Specific Instructions**: Providing specific instructions tailored to the task, e.g., "Write a story outline." for writing a novel.

3. **Human Inputs**: Involving human guidance in the decomposition process.### Common Extensions of Task Decomposition Methods

One notable extension of the standard method is the **Tree of Thoughts** (Yao et al. 2023). This method builds upon CoT by exploring multiple reasoning possibilities at each step. It decomposes the problem into various thought steps and generates multiple thoughts for each step, forming a tree structure. The search process can utilize either breadth-first search (BFS) or depth-first search (DFS), with each state being evaluated by a classifier or majority vote.Thus, while the Chain of Thought is the foundational method, the Tree of Thoughts represents an advanced extension that allows for a more nuanced exploration of tasks.

重点解释

让我们详细解释一下create_react_agent和agent_executor在上面代码中的作用和实现。

create_react_agent

create_react_agent 是一个预构建的方法,用于创建一个反应式(reactive)的AI Agent。这个Agent能够根据特定的查询和工具来生成响应。在这个方法中,我们会整合一个语言模型(LLM)和一组工具,并添加一个检查点(Checkpointer)来维护对话的状态。

以下是这个方法的详细解释:

from langgraph.prebuilt import create_react_agent# 使用已定义的工具和语言模型创建一个反应式的AI Agent

agent_executor = create_react_agent(llm, [retrieve], checkpointer=memory)

参数解释:

- llm: 这个参数传递了一个大语言模型,这里是

ChatOpenAI,它是用于生成自然语言回答的核心组件。 - tools: 一个工具列表,这里传递了

retrieve工具。这个工具是用来进行文档检索的,可以基于用户的查询,从存储的文档中找到相关内容。 - checkpointer: 传递了一个

MemorySaver实例,用于保存和加载对话的状态。这个组件确保了即使在对话中断后,也可以恢复之前的对话状态。

agent_executor

agent_executor 是通过 create_react_agent 创建的AI Agent执行器,它能够接收用户的输入,利用预定义的工具和语言模型生成对应的回答,并管理对话状态。

以下是这个执行器的详细解释和使用示例:

# Specify an ID for the thread

config = {"configurable": {"thread_id": "def234"}}input_message = ("What is the standard method for Task Decomposition?\n\n""Once you get the answer, look up common extensions of that method."

)for event in agent_executor.stream({"messages": [{"role": "user", "content": input_message}]},stream_mode="values",config=config,

):event["messages"][-1].pretty_print()

流程解释:

-

配置线程ID:

config = {"configurable": {"thread_id": "def234"}}:这里指定了一个线程ID,用于管理特定对话线程的状态。

-

定义输入消息:

input_message定义了用户的输入,包括两个部分:请求标准的任务分解方法,然后查询这个方法的常见扩展。

-

执行对话流:

for event in agent_executor.stream(...):启动AI Agent的对话流。messages: 包含了用户输入信息的字典列表,每个字典表示一条消息。stream_mode="values": 配置流式输出模式。config: 传递配置选项,这里主要传递了线程ID。

-

处理和输出每个事件:

event["messages"][-1].pretty_print():对于每个事件,输出对话流末尾的消息内容(即AI生成的回复)。

代码总结

通过创建一个反应式的AI Agent (agent_executor),并结合了文档检索工具 (retrieve) 和大语言模型 (llm), 使得整个AI系统能够:

- 接收用户的查询。

- 通过工具从存储的文档中检索相关信息。

- 使用语言模型生成自然语言回答。

- 管理对话状态,使得多轮对话能够连贯进行。

这种设计实现了检索增强生成(RAG)架构的功能,既利用了检索模块带来的丰富上下文信息,又结合了生成模型的灵活应答能力,为用户提供更精准和个性化的服务。

LLM消息抓取

交互1

请求

{"messages": [[{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","HumanMessage"],"kwargs": {"content": "What is the standard method for Task Decomposition?\n\nOnce you get the answer, look up common extensions of that method.","type": "human","id": "bb39ed14-f51d-48cc-ac4c-e4eeefffaf55"}}]]

}

回复

{"generations": [[{"text": "","generation_info": {"finish_reason": "tool_calls","logprobs": null},"type": "ChatGeneration","message": {"lc": 1,"type": "constructor","id": ["langchain","schema","messages","AIMessage"],"kwargs": {"content": "","additional_kwargs": {"tool_calls": [{"id": "call_HmlIDGfLMemXCh88ut0Fqzxz","function": {"arguments": "{\"query\":\"standard method for Task Decomposition\"}","name": "retrieve"},"type": "function"}],"refusal": null},"response_metadata": {"token_usage": {"completion_tokens": 18,"prompt_tokens": 67,"total_tokens": 85,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_bba3c8e70b","finish_reason": "tool_calls","logprobs": null},"type": "ai","id": "run-1992178b-d402-49b0-9c2b-6087ab5c2535-0","tool_calls": [{"name": "retrieve","args": {"query": "standard method for Task Decomposition"},"id": "call_HmlIDGfLMemXCh88ut0Fqzxz","type": "tool_call"}],"usage_metadata": {"input_tokens": 67,"output_tokens": 18,"total_tokens": 85,"input_token_details": {"audio": 0,"cache_read": 0},"output_token_details": {"audio": 0,"reasoning": 0}},"invalid_tool_calls": []}}}]],"llm_output": {"token_usage": {"completion_tokens": 18,"prompt_tokens": 67,"total_tokens": 85,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_bba3c8e70b"},"run": null,"type": "LLMResult"

}

交互2

请求

{"messages": [[{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","HumanMessage"],"kwargs": {"content": "What is the standard method for Task Decomposition?\n\nOnce you get the answer, look up common extensions of that method.","type": "human","id": "bb39ed14-f51d-48cc-ac4c-e4eeefffaf55"}},{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","AIMessage"],"kwargs": {"content": "","additional_kwargs": {"tool_calls": [{"id": "call_HmlIDGfLMemXCh88ut0Fqzxz","function": {"arguments": "{\"query\":\"standard method for Task Decomposition\"}","name": "retrieve"},"type": "function"}],"refusal": null},"response_metadata": {"token_usage": {"completion_tokens": 18,"prompt_tokens": 67,"total_tokens": 85,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_bba3c8e70b","finish_reason": "tool_calls","logprobs": null},"type": "ai","id": "run-1992178b-d402-49b0-9c2b-6087ab5c2535-0","tool_calls": [{"name": "retrieve","args": {"query": "standard method for Task Decomposition"},"id": "call_HmlIDGfLMemXCh88ut0Fqzxz","type": "tool_call"}],"usage_metadata": {"input_tokens": 67,"output_tokens": 18,"total_tokens": 85,"input_token_details": {"audio": 0,"cache_read": 0},"output_token_details": {"audio": 0,"reasoning": 0}},"invalid_tool_calls": []}},{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","ToolMessage"],"kwargs": {"content": "Source: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}\nContent: Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like \"Steps for XYZ.\\n1.\", \"What are the subgoals for achieving XYZ?\", (2) by using task-specific instructions; e.g. \"Write a story outline.\" for writing a novel, or (3) with human inputs.\n\nSource: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}\nContent: Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.","type": "tool","name": "retrieve","id": "290c729c-60c5-4624-a41f-514c0b67208d","tool_call_id": "call_HmlIDGfLMemXCh88ut0Fqzxz","artifact": [{"lc": 1,"type": "constructor","id": ["langchain","schema","document","Document"],"kwargs": {"id": "e42c541d-e1c7-4023-84a2-bded42cef315","metadata": {"source": "https://lilianweng.github.io/posts/2023-06-23-agent/"},"page_content": "Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like \"Steps for XYZ.\\n1.\", \"What are the subgoals for achieving XYZ?\", (2) by using task-specific instructions; e.g. \"Write a story outline.\" for writing a novel, or (3) with human inputs.","type": "Document"}},{"lc": 1,"type": "constructor","id": ["langchain","schema","document","Document"],"kwargs": {"id": "2511dbdc-2c14-46d7-831f-53182e28d607","metadata": {"source": "https://lilianweng.github.io/posts/2023-06-23-agent/"},"page_content": "Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.","type": "Document"}}],"status": "success"}}]]

}

回复

{"generations": [[{"text": "","generation_info": {"finish_reason": "tool_calls","logprobs": null},"type": "ChatGeneration","message": {"lc": 1,"type": "constructor","id": ["langchain","schema","messages","AIMessage"],"kwargs": {"content": "","additional_kwargs": {"tool_calls": [{"id": "call_gk1TfyTqR131FuctblLrjkz4","function": {"arguments": "{\"query\":\"common extensions of Task Decomposition methods\"}","name": "retrieve"},"type": "function"}],"refusal": null},"response_metadata": {"token_usage": {"completion_tokens": 19,"prompt_tokens": 414,"total_tokens": 433,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_bba3c8e70b","finish_reason": "tool_calls","logprobs": null},"type": "ai","id": "run-28ca8d6e-c417-4784-a97f-0664b909dcaa-0","tool_calls": [{"name": "retrieve","args": {"query": "common extensions of Task Decomposition methods"},"id": "call_gk1TfyTqR131FuctblLrjkz4","type": "tool_call"}],"usage_metadata": {"input_tokens": 414,"output_tokens": 19,"total_tokens": 433,"input_token_details": {"audio": 0,"cache_read": 0},"output_token_details": {"audio": 0,"reasoning": 0}},"invalid_tool_calls": []}}}]],"llm_output": {"token_usage": {"completion_tokens": 19,"prompt_tokens": 414,"total_tokens": 433,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_bba3c8e70b"},"run": null,"type": "LLMResult"

}

交互3

请求

{"messages": [[{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","HumanMessage"],"kwargs": {"content": "What is the standard method for Task Decomposition?\n\nOnce you get the answer, look up common extensions of that method.","type": "human","id": "bb39ed14-f51d-48cc-ac4c-e4eeefffaf55"}},{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","AIMessage"],"kwargs": {"content": "","additional_kwargs": {"tool_calls": [{"id": "call_HmlIDGfLMemXCh88ut0Fqzxz","function": {"arguments": "{\"query\":\"standard method for Task Decomposition\"}","name": "retrieve"},"type": "function"}],"refusal": null},"response_metadata": {"token_usage": {"completion_tokens": 18,"prompt_tokens": 67,"total_tokens": 85,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_bba3c8e70b","finish_reason": "tool_calls","logprobs": null},"type": "ai","id": "run-1992178b-d402-49b0-9c2b-6087ab5c2535-0","tool_calls": [{"name": "retrieve","args": {"query": "standard method for Task Decomposition"},"id": "call_HmlIDGfLMemXCh88ut0Fqzxz","type": "tool_call"}],"usage_metadata": {"input_tokens": 67,"output_tokens": 18,"total_tokens": 85,"input_token_details": {"audio": 0,"cache_read": 0},"output_token_details": {"audio": 0,"reasoning": 0}},"invalid_tool_calls": []}},{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","ToolMessage"],"kwargs": {"content": "Source: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}\nContent: Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like \"Steps for XYZ.\\n1.\", \"What are the subgoals for achieving XYZ?\", (2) by using task-specific instructions; e.g. \"Write a story outline.\" for writing a novel, or (3) with human inputs.\n\nSource: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}\nContent: Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.","type": "tool","name": "retrieve","id": "290c729c-60c5-4624-a41f-514c0b67208d","tool_call_id": "call_HmlIDGfLMemXCh88ut0Fqzxz","artifact": [{"lc": 1,"type": "constructor","id": ["langchain","schema","document","Document"],"kwargs": {"id": "e42c541d-e1c7-4023-84a2-bded42cef315","metadata": {"source": "https://lilianweng.github.io/posts/2023-06-23-agent/"},"page_content": "Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like \"Steps for XYZ.\\n1.\", \"What are the subgoals for achieving XYZ?\", (2) by using task-specific instructions; e.g. \"Write a story outline.\" for writing a novel, or (3) with human inputs.","type": "Document"}},{"lc": 1,"type": "constructor","id": ["langchain","schema","document","Document"],"kwargs": {"id": "2511dbdc-2c14-46d7-831f-53182e28d607","metadata": {"source": "https://lilianweng.github.io/posts/2023-06-23-agent/"},"page_content": "Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.","type": "Document"}}],"status": "success"}},{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","AIMessage"],"kwargs": {"content": "","additional_kwargs": {"tool_calls": [{"id": "call_gk1TfyTqR131FuctblLrjkz4","function": {"arguments": "{\"query\":\"common extensions of Task Decomposition methods\"}","name": "retrieve"},"type": "function"}],"refusal": null},"response_metadata": {"token_usage": {"completion_tokens": 19,"prompt_tokens": 414,"total_tokens": 433,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_bba3c8e70b","finish_reason": "tool_calls","logprobs": null},"type": "ai","id": "run-28ca8d6e-c417-4784-a97f-0664b909dcaa-0","tool_calls": [{"name": "retrieve","args": {"query": "common extensions of Task Decomposition methods"},"id": "call_gk1TfyTqR131FuctblLrjkz4","type": "tool_call"}],"usage_metadata": {"input_tokens": 414,"output_tokens": 19,"total_tokens": 433,"input_token_details": {"audio": 0,"cache_read": 0},"output_token_details": {"audio": 0,"reasoning": 0}},"invalid_tool_calls": []}},{"lc": 1,"type": "constructor","id": ["langchain","schema","messages","ToolMessage"],"kwargs": {"content": "Source: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}\nContent: Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like \"Steps for XYZ.\\n1.\", \"What are the subgoals for achieving XYZ?\", (2) by using task-specific instructions; e.g. \"Write a story outline.\" for writing a novel, or (3) with human inputs.\n\nSource: {'source': 'https://lilianweng.github.io/posts/2023-06-23-agent/'}\nContent: Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.","type": "tool","name": "retrieve","id": "c7583058-518c-437a-90a0-07c20c11703a","tool_call_id": "call_gk1TfyTqR131FuctblLrjkz4","artifact": [{"lc": 1,"type": "constructor","id": ["langchain","schema","document","Document"],"kwargs": {"id": "e42c541d-e1c7-4023-84a2-bded42cef315","metadata": {"source": "https://lilianweng.github.io/posts/2023-06-23-agent/"},"page_content": "Tree of Thoughts (Yao et al. 2023) extends CoT by exploring multiple reasoning possibilities at each step. It first decomposes the problem into multiple thought steps and generates multiple thoughts per step, creating a tree structure. The search process can be BFS (breadth-first search) or DFS (depth-first search) with each state evaluated by a classifier (via a prompt) or majority vote.\nTask decomposition can be done (1) by LLM with simple prompting like \"Steps for XYZ.\\n1.\", \"What are the subgoals for achieving XYZ?\", (2) by using task-specific instructions; e.g. \"Write a story outline.\" for writing a novel, or (3) with human inputs.","type": "Document"}},{"lc": 1,"type": "constructor","id": ["langchain","schema","document","Document"],"kwargs": {"id": "2511dbdc-2c14-46d7-831f-53182e28d607","metadata": {"source": "https://lilianweng.github.io/posts/2023-06-23-agent/"},"page_content": "Fig. 1. Overview of a LLM-powered autonomous agent system.\nComponent One: Planning#\nA complicated task usually involves many steps. An agent needs to know what they are and plan ahead.\nTask Decomposition#\nChain of thought (CoT; Wei et al. 2022) has become a standard prompting technique for enhancing model performance on complex tasks. The model is instructed to “think step by step” to utilize more test-time computation to decompose hard tasks into smaller and simpler steps. CoT transforms big tasks into multiple manageable tasks and shed lights into an interpretation of the model’s thinking process.","type": "Document"}}],"status": "success"}}]]

}

回复

{"generations": [[{"text": "The standard method for Task Decomposition involves a technique known as the Chain of Thought (CoT), which enhances model performance on complex tasks by instructing it to \"think step by step.\" This allows the model to break down large tasks into smaller, more manageable steps. Task decomposition can be achieved through various means, such as:\n\n1. **Simple Prompting**: Using prompts like \"Steps for XYZ.\" or \"What are the subgoals for achieving XYZ?\"\n2. **Task-Specific Instructions**: Providing specific instructions tailored to the task, e.g., \"Write a story outline.\" for writing a novel.\n3. **Human Inputs**: Involving human guidance in the decomposition process.\n\n### Common Extensions of Task Decomposition Methods\nOne notable extension of the standard method is the **Tree of Thoughts** (Yao et al. 2023). This method builds upon CoT by exploring multiple reasoning possibilities at each step. It decomposes the problem into various thought steps and generates multiple thoughts for each step, forming a tree structure. The search process can utilize either breadth-first search (BFS) or depth-first search (DFS), with each state being evaluated by a classifier or majority vote.\n\nThus, while the Chain of Thought is the foundational method, the Tree of Thoughts represents an advanced extension that allows for a more nuanced exploration of tasks.","generation_info": {"finish_reason": "stop","logprobs": null},"type": "ChatGeneration","message": {"lc": 1,"type": "constructor","id": ["langchain","schema","messages","AIMessage"],"kwargs": {"content": "The standard method for Task Decomposition involves a technique known as the Chain of Thought (CoT), which enhances model performance on complex tasks by instructing it to \"think step by step.\" This allows the model to break down large tasks into smaller, more manageable steps. Task decomposition can be achieved through various means, such as:\n\n1. **Simple Prompting**: Using prompts like \"Steps for XYZ.\" or \"What are the subgoals for achieving XYZ?\"\n2. **Task-Specific Instructions**: Providing specific instructions tailored to the task, e.g., \"Write a story outline.\" for writing a novel.\n3. **Human Inputs**: Involving human guidance in the decomposition process.\n\n### Common Extensions of Task Decomposition Methods\nOne notable extension of the standard method is the **Tree of Thoughts** (Yao et al. 2023). This method builds upon CoT by exploring multiple reasoning possibilities at each step. It decomposes the problem into various thought steps and generates multiple thoughts for each step, forming a tree structure. The search process can utilize either breadth-first search (BFS) or depth-first search (DFS), with each state being evaluated by a classifier or majority vote.\n\nThus, while the Chain of Thought is the foundational method, the Tree of Thoughts represents an advanced extension that allows for a more nuanced exploration of tasks.","additional_kwargs": {"refusal": null},"response_metadata": {"token_usage": {"completion_tokens": 274,"prompt_tokens": 762,"total_tokens": 1036,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_bba3c8e70b","finish_reason": "stop","logprobs": null},"type": "ai","id": "run-4f607e3c-ad2c-41ca-8259-6a6ce3a07c7f-0","usage_metadata": {"input_tokens": 762,"output_tokens": 274,"total_tokens": 1036,"input_token_details": {"audio": 0,"cache_read": 0},"output_token_details": {"audio": 0,"reasoning": 0}},"tool_calls": [],"invalid_tool_calls": []}}}]],"llm_output": {"token_usage": {"completion_tokens": 274,"prompt_tokens": 762,"total_tokens": 1036,"completion_tokens_details": {"accepted_prediction_tokens": 0,"audio_tokens": 0,"reasoning_tokens": 0,"rejected_prediction_tokens": 0},"prompt_tokens_details": {"audio_tokens": 0,"cached_tokens": 0}},"model_name": "gpt-4o-mini-2024-07-18","system_fingerprint": "fp_bba3c8e70b"},"run": null,"type": "LLMResult"

}

小结

AI Agent可以被设计为独立完成任务的系统,而RAG是一种用来增强生成模型回答能力的方法。在实际应用中,可以将AI Agent构建在RAG方法之上,从而利用RAG的优点,提升对复杂问题的回答质量和上下文连贯性。通过LangChain,我们可以轻松实现一个最小化的基于RAG的AI Agent,并利用它进行智能问答。希望这篇技术博客对大家有所帮助,如果有更多问题或者讨论,欢迎随时交流!

相关文章:

AI - 浅聊一下基于LangChain的AI Agent

AI - 浅聊一下基于LangChain的AI Agent 大家好,今天我们来聊聊一个很有意思的主题: AI Agent ,就是目前非常流行的所谓的AI智能体。AI的发展日新月异,都2024年末了,如果此时小伙伴们对这个非常火的概念还不清楚的话&a…...

《【Linux】深入理解进程管理与 fork 系统调用的实现原理》

一、引言 在 Linux 操作系统中,进程管理是核心功能之一。进程是操作系统进行资源分配和调度的基本单位。理解进程管理的原理以及 fork 系统调用的实现对于深入掌握 Linux 系统的运行机制至关重要。本文将深入探讨 Linux 中的进程管理以及 fork 系统调用的实现原理&a…...

docker-compose部署skywalking 8.1.0

一、下载镜像 #注意 skywalking-oap-server和skywalking java agent版本强关联,版本需要保持一致性 docker pull elasticsearch:7.9.0 docker pull apache/skywalking-oap-server:8.1.0-es7 docker pull apache/skywalking-ui:8.1.0二、部署文件docker-compose.yam…...

AI 总结的的 AI 学习路线

一、入门阶段:数学基础与编程语言 数学基础 线性代数 当年白纸黑字推演, 都是泪啊,草稿本都用了一卷。 学习向量、矩阵的基本概念,包括向量的加法、减法、点积和叉积,矩阵的乘法、转置等运算。例如,在计算…...

详解)

离散傅里叶级数(DFS)详解

1. 引言 离散傅里叶级数(Discrete Fourier Series, DFS)是信号处理领域中一项基础且重要的数学工具,用于分析和处理周期性的离散信号。它通过将离散时间信号表示为一组正弦和余弦的和,从而使得信号在频域上得到更清晰的描述。与连…...

Java 类加载机制详解

🧑 博主简介:CSDN博客专家,历代文学网(PC端可以访问:https://literature.sinhy.com/#/literature?__c1000,移动端可微信小程序搜索“历代文学”)总架构师,15年工作经验,…...

1.1 Beginner Level学习之“编写简单的发布服务器和订阅服务器”(第十一节)

学习大纲: 1. 编写发布服务器节点 在 ROS 中,节点是连接到 ROS 网络的可执行文件。我创建了一个名为 talker 的发布者节点,它会向一个主题 chatter 不断发送消息。 首先,进入你的工作包 beginner_tutorials(假设你已…...

AIQuora:开启论文写作新篇章

在这个信息爆炸的时代,学术写作已成为研究者不可或缺的技能。然而,面对繁重的写作任务,许多学者和学生常常感到力不从心。AIQuora,一个专业的文理工科论文智能写作助手,以其免费开题报告生成功能,为学术写作…...

:字符串处理函数)

【C语言】库函数常见的陷阱与缺陷(1):字符串处理函数

目录 一、 strcpy 函数 1.1. 功能与常见用法 1.2. 陷阱与缺陷 1.3. 安全替代 1.4. 代码示例 二、strcat 函数 2.1. 功能与常见用法 2.2. 陷阱与缺陷 2.3. 安全替代 2.4. 代码示例 三、strcmp 函数 3.1. 功能与常见用法 3.2. 陷阱与缺陷 3.3. 安全替代 3.4. 代…...

Mysql索引原理及优化——岁月云实战笔记

根据Mysql索引原理及优化这个博主的视频学习,对现在的岁月云项目中mysql进行优化,我要向这个博主致敬,之前应用居多,理论所知甚少,于是将学习到东西,应用下来,看看是否有好的改观。 1 索引原理…...

AGCRN论文解读

一、创新点 传统GCN只能基于静态预定义图建模全局共享模式,而AGCRN通过两种GCN的增强模块(NAPL、DAGG)实现了更精细的节点特性学习和图结构生成。 1 节点自适应参数学习模块(NAPL) 传统GCN通过共享参数(权重…...

Python机器学习笔记(五、决策树集成)

集成(ensemble)是合并多个机器学习模型来构建更强大模型的方法。这里主要学习两种集成模型:一是随机森林(random forest);二是梯度提升决策树(gradient boosted decision tree)。 1…...

Kafka单机及集群部署及基础命令

目录 一、 Kafka介绍1、kafka定义2、传统消息队列应用场景3、kafka特点和优势4、kafka角色介绍5、分区和副本的优势6、kafka 写入消息的流程 二、Kafka单机部署1、基础环境2、iptables -L -n配置3、下载并解压kafka部署包至/usr/local/目录4、修改server.properties5、修改/etc…...

如何使用 Python 实现链表的反转?

在Python中实现链表的反转可以通过几种不同的方法。这里,我将向你展示如何使用迭代和递归两种方式来反转链表。 1. 迭代方法 迭代方法是通过遍历链表,逐个节点地改变其指向来实现反转的。 class ListNode: def __init__(self, val0, nextNone): …...

react跳转传参的方法

传参 首先下载命令行 npm react-router-dom 然后引入此代码 前面跳转的是页面 后面传的是你需要传的参数接参 引入此方法 useLocation():这是 react-router-dom 提供的一个钩子,用于获取当前路由的位置对象location.state:这是从其他页面传…...

Scala:正则表达式

object test03 {//正则表达式def main(args: Array[String]): Unit {//定义一个正则表达式//1.[ab]:表示匹配一个字符,或者是a,或者是b//2.[a-z]:表示从a到z的26个字母中的任意一个//3.[A-Z]:表示从A到Z的26个字母中的任意一个//4.[0-9]:表示从0到9的10…...

【数电】常见时序逻辑电路设计和分析

本文目的:一是对真题常考题型总结,二是对常见时序电路设计方法进行归纳,给后面看这个文档的人留有一点有价值的东西。 1.不同模计数器设计 2.序列信号产生和检测电路 2.1序列信号产生电路 2.1.1设计思路 主要设计思路有三种 1)…...

Spring IOCAOP

Spring介绍 个人博客原地址 Spring是一个IOC(DI)和AOP框架 Sprng的优良特性 非侵入式:基于Spring开发的应用中的对象可以不依赖于Spring的API 依赖注入:DI是控制反转(IOC)最经典的实现 面向切面编程&am…...

Scala中的隐式转换

package qiqiobject qqqqq {//给参数设置一个默认值:如果用户不传入,就使用这个值def sayName(implicit name:String"小花"):Unit{println(s"我叫:$name")}//需求:能够自己设置函数的参数默认值,而不是在代码…...

GESP 2024年12月认证 真题 及答案

CCF GESP第八次认证将于2024年12月7日上午9:30正式开考,1-4级认证时间为上午9:30-11:30,5-8级认证时间为下午13:30-16:30。认证语言包括:C、 Python和图形化编程三种语言,其中C和Python编程为1-8级,图形化编程为1-4级。…...

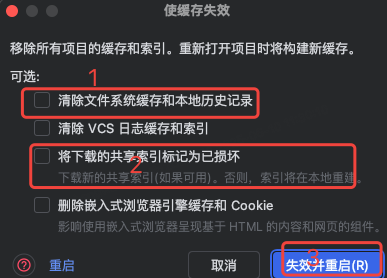

idea大量爆红问题解决

问题描述 在学习和工作中,idea是程序员不可缺少的一个工具,但是突然在有些时候就会出现大量爆红的问题,发现无法跳转,无论是关机重启或者是替换root都无法解决 就是如上所展示的问题,但是程序依然可以启动。 问题解决…...

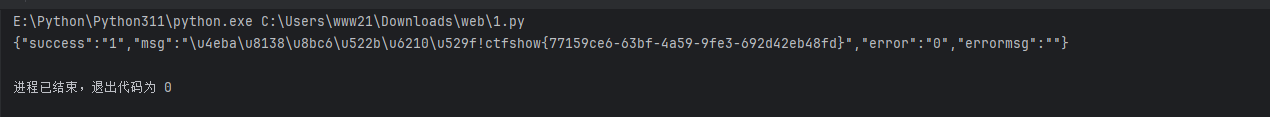

CTF show Web 红包题第六弹

提示 1.不是SQL注入 2.需要找关键源码 思路 进入页面发现是一个登录框,很难让人不联想到SQL注入,但提示都说了不是SQL注入,所以就不往这方面想了 先查看一下网页源码,发现一段JavaScript代码,有一个关键类ctfs…...

服务器硬防的应用场景都有哪些?

服务器硬防是指一种通过硬件设备层面的安全措施来防御服务器系统受到网络攻击的方式,避免服务器受到各种恶意攻击和网络威胁,那么,服务器硬防通常都会应用在哪些场景当中呢? 硬防服务器中一般会配备入侵检测系统和预防系统&#x…...

数据库分批入库

今天在工作中,遇到一个问题,就是分批查询的时候,由于批次过大导致出现了一些问题,一下是问题描述和解决方案: 示例: // 假设已有数据列表 dataList 和 PreparedStatement pstmt int batchSize 1000; // …...

《C++ 模板》

目录 函数模板 类模板 非类型模板参数 模板特化 函数模板特化 类模板的特化 模板,就像一个模具,里面可以将不同类型的材料做成一个形状,其分为函数模板和类模板。 函数模板 函数模板可以简化函数重载的代码。格式:templa…...

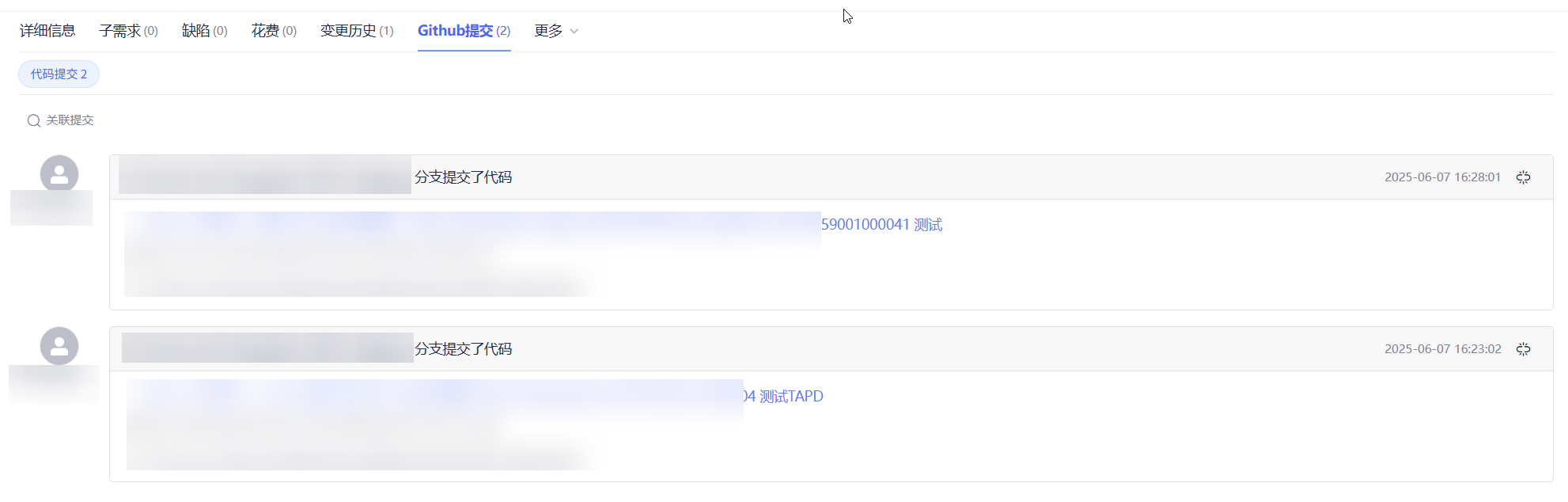

基于 TAPD 进行项目管理

起因 自己写了个小工具,仓库用的Github。之前在用markdown进行需求管理,现在随着功能的增加,感觉有点难以管理了,所以用TAPD这个工具进行需求、Bug管理。 操作流程 注册 TAPD,需要提供一个企业名新建一个项目&#…...

Python 训练营打卡 Day 47

注意力热力图可视化 在day 46代码的基础上,对比不同卷积层热力图可视化的结果 import torch import torch.nn as nn import torch.optim as optim from torchvision import datasets, transforms from torch.utils.data import DataLoader import matplotlib.pypl…...

uni-app学习笔记三十五--扩展组件的安装和使用

由于内置组件不能满足日常开发需要,uniapp官方也提供了众多的扩展组件供我们使用。由于不是内置组件,需要安装才能使用。 一、安装扩展插件 安装方法: 1.访问uniapp官方文档组件部分:组件使用的入门教程 | uni-app官网 点击左侧…...

C# winform教程(二)----checkbox

一、作用 提供一个用户选择或者不选的状态,这是一个可以多选的控件。 二、属性 其实功能大差不差,除了特殊的几个外,与button基本相同,所有说几个独有的 checkbox属性 名称内容含义appearance控件外观可以变成按钮形状checkali…...

C++--string的模拟实现

一,引言 string的模拟实现是只对string对象中给的主要功能经行模拟实现,其目的是加强对string的底层了解,以便于在以后的学习或者工作中更加熟练的使用string。本文中的代码仅供参考并不唯一。 二,默认成员函数 string主要有三个成员变量,…...