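AI Learning Notes - Getting Started with the minimind Project

To really learn AI, you have to run the whole pipeline from start to finish. I recently came across the minimind project, so I'm recording what I learn here. Read these notes together with the project's README.

1. GitHub link

https://github.com/jingyaogong/minimind?tab=readme-ov-file

2. Hardware: NVIDIA RTX 4070 Ti

3. Software environment:

1. Use a conda environment

conda create --name minimind python=3.9

2. python==3.9

3. How to install torch:

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu121

Official site: https://pytorch.org/

4. Install the NVIDIA GPU driver

5. Install CUDA 12.1

6. Download the four dataset files, as described in the README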
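After installing, a quick way to confirm that the torch build and the GPU driver work together is a small check script. This is a minimal sketch of my own, not part of minimind; it only uses `torch.__version__`, `torch.version.cuda` and `torch.cuda.is_available()`, and it reports `None` values instead of crashing if torch is not installed.

```python
import importlib.util
import sys


def check_env():
    """Report the Python version and, if torch is installed, its CUDA status."""
    info = {
        "python": sys.version.split()[0],
        "torch": None,
        "cuda_build": None,       # CUDA version torch was built against, e.g. "12.1"
        "cuda_available": False,  # True only if a usable GPU and driver are found
    }
    if importlib.util.find_spec("torch") is None:
        return info  # torch is not installed in this environment
    import torch

    info["torch"] = torch.__version__
    info["cuda_build"] = torch.version.cuda  # None for CPU-only builds
    info["cuda_available"] = torch.cuda.is_available()
    return info


if __name__ == "__main__":
    for key, value in check_env().items():
        print(f"{key}: {value}")
```

With the cu121 wheel and a working driver, `cuda_build` should read `12.1` and `cuda_available` should be `True`.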

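4. Why train a vocabulary (tokenizer)?

To find the high-frequency words. More precisely, training the tokenizer scans the corpus for character sequences that occur often and gives each of them its own token id, so common words become a single token while rare text is still split into smaller pieces.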
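A minimal sketch of the idea behind training a byte-pair-encoding (BPE) style vocabulary: start from single characters, repeatedly count adjacent pairs, and merge the most frequent pair into a new token. This is a toy, character-level illustration, not minimind's tokenizer training code; the corpus and the number of merges are made up.

```python
from collections import Counter


def learn_merges(corpus, num_merges):
    """Learn BPE-style merges from a list of strings (toy, character-level version)."""
    seqs = [list(text) for text in corpus]  # each text starts as single characters
    merges = []
    for _ in range(num_merges):
        # Count every pair of adjacent tokens across the whole corpus.
        pairs = Counter()
        for seq in seqs:
            pairs.update(zip(seq, seq[1:]))
        if not pairs:
            break
        (a, b), count = pairs.most_common(1)[0]
        if count < 2:
            break  # no pair repeats, nothing worth merging
        merges.append((a, b))
        # Replace every occurrence of the chosen pair with the merged token.
        new_seqs = []
        for seq in seqs:
            merged, i = [], 0
            while i < len(seq):
                if i + 1 < len(seq) and seq[i] == a and seq[i + 1] == b:
                    merged.append(a + b)
                    i += 2
                else:
                    merged.append(seq[i])
                    i += 1
            new_seqs.append(merged)
        seqs = new_seqs
    return merges


corpus = ["你知道光速是多少", "光速是多少", "你知道吗"]
print(learn_merges(corpus, 3))
```

Real BPE tokenizers usually work on bytes and train on far larger corpora, but the principle is the same: sequences that appear often end up as single tokens.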

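5. Split (tokenize) the pretraining text corpus according to the vocabulary, turning every piece of text into a sequence of token ids.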
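Once the vocabulary is fixed, splitting text means replaying the learned merges in order and then looking each piece up in the vocabulary. The sketch below hard-codes a tiny hypothetical merge list and vocabulary purely for illustration; in minimind this step is done by the trained tokenizer loaded with `AutoTokenizer`, whose real ids differ.

```python
def apply_merges(text, merges):
    """Split text into tokens by replaying BPE merges in the order they were learned."""
    tokens = list(text)
    for a, b in merges:
        merged, i = [], 0
        while i < len(tokens):
            if i + 1 < len(tokens) and tokens[i] == a and tokens[i + 1] == b:
                merged.append(a + b)
                i += 2
            else:
                merged.append(tokens[i])
                i += 1
        tokens = merged
    return tokens


def encode(text, merges, vocab, unk_id=0):
    """Map each token to its id; tokens missing from the vocabulary become unk_id."""
    return [vocab.get(tok, unk_id) for tok in apply_merges(text, merges)]


# Hypothetical merges and vocabulary, for illustration only.
merges = [("你", "知"), ("你知", "道"), ("光", "速")]
vocab = {"你知道": 1, "光速": 2, "是": 3, "多": 4, "少": 5, "吗": 6}
print(apply_merges("你知道光速是多少吗", merges))
print(encode("你知道光速是多少吗", merges, vocab))
```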

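6. After tokenization, the sentences also need to be split into masked samples for the training stage, where the model learns to predict the next token from the tokens before it. For example:

你知道光速是多少吗? ("Do you know what the speed of light is?")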

- 你 * * * * * * * * *

- 你知 * * * * * * * *

- 你知道 * * * * * * *

- 你知道光 * * * * * *

- 你知道光速 * * * * *

- 你知道光速是 * * * *

- 你知道光速是多 * * *

- 你知道光速是多少 * *
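In practice the prefixes above are not stored as separate samples. The usual approach (and, as far as I can tell, what minimind's dataset code does when it returns X and Y) is to shift one token sequence by one position: the input is `ids[:-1]`, the target is `ids[1:]`, and a causal attention mask stops each position from seeing later tokens. A minimal pure-Python sketch showing both views of the same sentence, with characters standing in for token ids:

```python
def next_token_pairs(tokens):
    """Inputs and targets for next-token prediction: target[i] is the token after input[i]."""
    return tokens[:-1], tokens[1:]


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


tokens = list("你知道光速是多少吗?")
x, y = next_token_pairs(tokens)
mask = causal_mask(len(x))
for i in range(len(x)):
    visible = "".join(t for t, ok in zip(x, mask[i]) if ok)
    print(f"{visible} -> {y[i]}")
```

Each printed row is one prefix/next-token pair like the lines in the list above, so a sequence of n tokens yields n - 1 predictions in a single forward pass.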

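7. Add the start-of-sequence and end-of-sequence tokens to the corpus (data_process.py defines them as <s> and </s>).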
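A sketch of what adding the special tokens typically looks like when one pretraining sample is built: wrap the token ids with bos/eos, truncate or pad to a fixed length, and keep a `loss_mask` that is 1 for real tokens and 0 for padding (the training scripts below multiply the per-token loss by `loss_mask`). The ids used here (bos=1, eos=2, pad=0) and the helper name `build_sample` are assumptions for illustration.

```python
def build_sample(ids, max_length, bos_id=1, eos_id=2, pad_id=0):
    """Wrap ids with bos/eos, truncate/pad to max_length, and return X, Y, loss_mask.

    Hypothetical helper; the special-token ids are assumed, not read from a tokenizer.
    """
    seq = [bos_id] + ids + [eos_id]
    seq = seq[:max_length]  # truncation can cut off eos for long texts
    real_len = len(seq)
    seq = seq + [pad_id] * (max_length - real_len)
    mask = [1] * real_len + [0] * (max_length - real_len)
    # Shift by one: position i predicts seq[i + 1].
    X, Y = seq[:-1], seq[1:]
    loss_mask = mask[1:]  # aligned with Y, so padded targets do not count
    return X, Y, loss_mask


X, Y, loss_mask = build_sample([10, 11, 12], max_length=8)
print(X)          # [1, 10, 11, 12, 2, 0, 0]
print(Y)          # [10, 11, 12, 2, 0, 0, 0]
print(loss_mask)  # [1, 1, 1, 1, 0, 0, 0]
```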

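8. Start training

Run the Python scripts:

python data_process.py: I'm still working out exactly what this step does. My guess was that it extracts the data I need from the very large raw corpus according to some rules, and the code listed below bears this out: for pretraining it drops texts longer than 512 characters, and for SFT it drops question/answer pairs that are too short, too long, or less than 50% Chinese, then writes what remains to CSV files.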

python 1-pretrain.py: this step starts the training. It takes far too long: after 2 hours it had not finished even one epoch, so I stopped before training was complete.
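How long an epoch will take can be read from the `epoch_Time` field that the training scripts log. The expression `spend_time / (step + 1) * iter_per_epoch // 60 - spend_time // 60` extrapolates the average time per step to the whole epoch and subtracts the minutes already spent, so it is roughly the number of minutes left. Pulled out into a function (the function name and the example numbers are hypothetical):

```python
def remaining_epoch_minutes(spend_time, step, iter_per_epoch):
    """Same arithmetic as the epoch_Time field in the training logs.

    spend_time: seconds elapsed since the epoch started
    step: 0-based index of the current step
    iter_per_epoch: number of steps in one epoch (len(train_loader))
    """
    estimated_epoch_seconds = spend_time / (step + 1) * iter_per_epoch
    return estimated_epoch_seconds // 60 - spend_time // 60


# Hypothetical numbers: the first 100 steps took 50 seconds, the epoch has 30000 steps.
print(remaining_epoch_minutes(spend_time=50.0, step=99, iter_per_epoch=30000))
```

Checking this value in the first few log lines gives an early estimate of whether a full epoch is feasible on the available hardware.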

9. There are quite a few code files. To keep the learning going, I still want to go through the code line by line.
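One function shared by the training scripts below is `get_lr`: linear warmup for `warmup_iters` steps, then cosine decay from `learning_rate` down to `learning_rate / 10` over the whole run. A standalone version, with the values passed in as parameters instead of read from `args` (a small refactor for illustration; the formula is the same):

```python
import math


def get_lr(it, total_iters, learning_rate, warmup_iters=0):
    """Linear warmup, then cosine decay to learning_rate / 10."""
    min_lr = learning_rate / 10
    if it < warmup_iters:
        return learning_rate * it / warmup_iters
    if it > total_iters:
        return min_lr
    decay_ratio = (it - warmup_iters) / (total_iters - warmup_iters)
    coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio))  # goes from 1 down to 0
    return min_lr + coeff * (learning_rate - min_lr)


total = 1000
for it in (0, 250, 500, 750, 1000):
    print(it, f"{get_lr(it, total, 2e-4):.2e}")
```

With the pretraining defaults (`learning_rate=2e-4`, `warmup_iters=0`) the rate starts at 2e-4, is about 1.1e-4 halfway through the run, and ends at 2e-5.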

1-pretrain.py

import os
import platform
import argparse
import time
import math
import warnings

import pandas as pd
import torch
import torch.distributed as dist
from torch import optim
from torch.nn.parallel import DistributedDataParallel
from torch.optim.lr_scheduler import CosineAnnealingLR
from torch.utils.data import DataLoader, DistributedSampler
from contextlib import nullcontext

from transformers import AutoTokenizer

from model.model import Transformer
from model.LMConfig import LMConfig
from model.dataset import PretrainDataset

warnings.filterwarnings('ignore')


def Logger(content):
    if not ddp or dist.get_rank() == 0:
        print(content)


# Dynamic learning rate: linear warmup, then cosine decay
def get_lr(it, all):
    warmup_iters = args.warmup_iters
    lr_decay_iters = all
    min_lr = args.learning_rate / 10

    if it < warmup_iters:
        return args.learning_rate * it / warmup_iters
    if it > lr_decay_iters:
        return min_lr
    decay_ratio = (it - warmup_iters) / (lr_decay_iters - warmup_iters)
    assert 0 <= decay_ratio <= 1
    coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio))
    return min_lr + coeff * (args.learning_rate - min_lr)


def train_epoch(epoch, wandb):
    start_time = time.time()
    for step, (X, Y, loss_mask) in enumerate(train_loader):
        X = X.to(args.device)
        Y = Y.to(args.device)
        loss_mask = loss_mask.to(args.device)

        lr = get_lr(epoch * iter_per_epoch + step, args.epochs * iter_per_epoch)
        for param_group in optimizer.param_groups:
            param_group['lr'] = lr

        with ctx:
            out = model(X, Y)
            loss = out.last_loss / args.accumulation_steps
            loss_mask = loss_mask.view(-1)
            loss = torch.sum(loss * loss_mask) / loss_mask.sum()

        scaler.scale(loss).backward()

        if (step + 1) % args.accumulation_steps == 0:
            scaler.unscale_(optimizer)
            torch.nn.utils.clip_grad_norm_(model.parameters(), args.grad_clip)

            scaler.step(optimizer)
            scaler.update()

            optimizer.zero_grad(set_to_none=True)

        if step % args.log_interval == 0:
            spend_time = time.time() - start_time
            Logger(
                'Epoch:[{}/{}]({}/{}) loss:{:.3f} lr:{:.7f} epoch_Time:{}min:'.format(
                    epoch,
                    args.epochs,
                    step,
                    iter_per_epoch,
                    loss.item() * args.accumulation_steps,
                    optimizer.param_groups[-1]['lr'],
                    spend_time / (step + 1) * iter_per_epoch // 60 - spend_time // 60))

            if (wandb is not None) and (not ddp or dist.get_rank() == 0):
                wandb.log({"loss": loss.item() * args.accumulation_steps,
                           "lr": optimizer.param_groups[-1]['lr'],
                           "epoch_Time": spend_time / (step + 1) * iter_per_epoch // 60 - spend_time // 60})

        if (step + 1) % args.save_interval == 0 and (not ddp or dist.get_rank() == 0):
            model.eval()
            moe_path = '_moe' if lm_config.use_moe else ''
            ckp = f'{args.save_dir}/pretrain_{lm_config.dim}{moe_path}.pth'

            if isinstance(model, torch.nn.parallel.DistributedDataParallel):
                state_dict = model.module.state_dict()
            else:
                state_dict = model.state_dict()

            torch.save(state_dict, ckp)
            model.train()


def init_model():
    def count_parameters(model):
        return sum(p.numel() for p in model.parameters() if p.requires_grad)

    tokenizer = AutoTokenizer.from_pretrained('./model/minimind_tokenizer')

    model = Transformer(lm_config).to(args.device)
    # moe_path = '_moe' if lm_config.use_moe else ''

    Logger(f'Total LLM parameters: {count_parameters(model) / 1e6:.3f} million')
    return model, tokenizer


def init_distributed_mode():
    if not ddp: return
    global ddp_local_rank, DEVICE

    dist.init_process_group(backend="nccl")
    ddp_rank = int(os.environ["RANK"])
    ddp_local_rank = int(os.environ["LOCAL_RANK"])
    ddp_world_size = int(os.environ["WORLD_SIZE"])
    DEVICE = f"cuda:{ddp_local_rank}"
    torch.cuda.set_device(DEVICE)


# torchrun --nproc_per_node 2 1-pretrain.py
if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="MiniMind Pretraining")
    # out_dir: output directory for model checkpoints and logs
    parser.add_argument("--out_dir", type=str, default="out", help="Output directory")
    # epochs: number of training epochs
    parser.add_argument("--epochs", type=int, default=20, help="Number of epochs")
    # batch_size: number of samples per batch
    parser.add_argument("--batch_size", type=int, default=64, help="Batch size")
    # learning_rate: peak learning rate
    parser.add_argument("--learning_rate", type=float, default=2e-4, help="Learning rate")
    # device: training device; cuda:0 if a GPU is available, otherwise cpu
    parser.add_argument("--device", type=str, default="cuda:0" if torch.cuda.is_available() else "cpu", help="Device to use")
    # dtype: data type (e.g. bfloat16)
    parser.add_argument("--dtype", type=str, default="bfloat16", help="Data type")
    # use_wandb: whether to track the experiment with Weights & Biases
    parser.add_argument("--use_wandb", action="store_true", help="Use Weights & Biases")
    # wandb_project: Weights & Biases project name
    parser.add_argument("--wandb_project", type=str, default="MiniMind-Pretrain", help="Weights & Biases project name")
    # num_workers: number of DataLoader worker processes
    parser.add_argument("--num_workers", type=int, default=1, help="Number of workers for data loading")
    # data_path: path to the training data
    parser.add_argument("--data_path", type=str, default="./dataset/pretrain_data.csv", help="Path to training data")
    # ddp: whether to use distributed training (DistributedDataParallel)
    parser.add_argument("--ddp", action="store_true", help="Use DistributedDataParallel")
    # accumulation_steps: number of gradient accumulation steps
    parser.add_argument("--accumulation_steps", type=int, default=8, help="Gradient accumulation steps")
    # grad_clip: gradient clipping threshold (maximum gradient norm)
    parser.add_argument("--grad_clip", type=float, default=1.0, help="Gradient clipping threshold")
    # warmup_iters: number of warmup iterations
    parser.add_argument("--warmup_iters", type=int, default=0, help="Number of warmup iterations")
    # log_interval: logging interval, in steps
    parser.add_argument("--log_interval", type=int, default=100, help="Logging interval")
    # save_interval: checkpoint saving interval, in steps
    parser.add_argument("--save_interval", type=int, default=1000, help="Model saving interval")
    # local_rank: rank of this process within its node in distributed training
    parser.add_argument('--local_rank', type=int, default=-1, help='local rank for distributed training')

    args = parser.parse_args()

    # Initialize the config and get the maximum sequence length max_seq_len
    lm_config = LMConfig()
    max_seq_len = lm_config.max_seq_len
    args.save_dir = os.path.join(args.out_dir)
    os.makedirs(args.save_dir, exist_ok=True)
    os.makedirs(args.out_dir, exist_ok=True)
    tokens_per_iter = args.batch_size * max_seq_len
    # Fix the random seed to 1337 so experiments are reproducible
    torch.manual_seed(1337)
    # device_type is "cuda" or "cpu", depending on the available hardware
    device_type = "cuda" if "cuda" in args.device else "cpu"

    args.wandb_run_name = f"MiniMind-Pretrain-Epoch-{args.epochs}-BatchSize-{args.batch_size}-LearningRate-{args.learning_rate}"

    # On GPU, enable mixed-precision training (torch.cuda.amp.autocast())
    ctx = nullcontext() if device_type == "cpu" else torch.cuda.amp.autocast()

    ddp = int(os.environ.get("RANK", -1)) != -1  # is this a ddp run?
    ddp_local_rank, DEVICE = 0, "cuda:0"

    if ddp:
        init_distributed_mode()
        args.device = torch.device(DEVICE)

    # If Weights & Biases is enabled (use_wandb), start a new run to track training
    if args.use_wandb and (not ddp or ddp_local_rank == 0):
        import wandb

        wandb.init(project=args.wandb_project, name=args.wandb_run_name)
    else:
        wandb = None

    model, tokenizer = init_model()
    df = pd.read_csv(args.data_path)
    df = df.sample(frac=1.0)
    train_ds = PretrainDataset(df, tokenizer, max_length=max_seq_len)
    train_sampler = DistributedSampler(train_ds) if ddp else None
    train_loader = DataLoader(
        train_ds,
        batch_size=args.batch_size,
        pin_memory=True,
        drop_last=False,
        shuffle=False,
        num_workers=args.num_workers,
        sampler=train_sampler
    )

    # For mixed-precision training, initialize the GradScaler
    scaler = torch.cuda.amp.GradScaler(enabled=(args.dtype in ['float16', 'bfloat16']))
    # Train with the Adam optimizer
    optimizer = optim.Adam(model.parameters(), lr=args.learning_rate)

    # Compile the model if the conditions hold (disabled by the leading `if False`, so it never runs)
    if False and platform.system() != 'Windows' and float(torch.__version__.split('.')[0]) >= 2:
        Logger("compiling the model... (takes a ~minute)")
        unoptimized_model = model
        model = torch.compile(model)

    # With DDP enabled, wrap the model in DistributedDataParallel for distributed training
    if ddp:
        model._ddp_params_and_buffers_to_ignore = {"pos_cis"}
        model = DistributedDataParallel(model, device_ids=[ddp_local_rank])

    # Number of iterations per epoch, then start training
    iter_per_epoch = len(train_loader)
    for epoch in range(args.epochs):
        train_epoch(epoch, wandb)

2-eval.py

import random
import time

import numpy as np
import torch
import warnings
from transformers import AutoTokenizer, AutoModelForCausalLM
from model.model import Transformer
from model.LMConfig import LMConfig

warnings.filterwarnings('ignore')


def count_parameters(model):
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


def init_model(lm_config):
    tokenizer = AutoTokenizer.from_pretrained('./model/minimind_tokenizer')
    model_from = 1  # 1: load from local weights, 2: load with transformers

    if model_from == 1:
        # Load the model from a local checkpoint.
        # moe_path is added to the checkpoint filename only when lm_config.use_moe is True,
        # i.e. when the model uses its optional Mixture-of-Experts (MoE) configuration.
        moe_path = '_moe' if lm_config.use_moe else ''
        ckp = f'./out/full_sft_{lm_config.dim}{moe_path}.pth'

        model = Transformer(lm_config)
        state_dict = torch.load(ckp, map_location=device)

        # Handle an unwanted prefix.
        unwanted_prefix = '_orig_mod.'
        # state_dict holds all the weights: k is each weight's name, v is the weight tensor.
        # This loop strips an extra prefix (such as _orig_mod., which appears when a model
        # wrapped by torch.compile is saved) so that parameter names match the current
        # model and loading does not fail:
        # {'_orig_mod.layer1.weight': ..., '_orig_mod.layer1.bias': ..., 'layer2.weight': ...}
        #   => {'layer1.weight': ..., 'layer1.bias': ..., 'layer2.weight': ...}
        for k, v in list(state_dict.items()):
            if k.startswith(unwanted_prefix):
                state_dict[k[len(unwanted_prefix):]] = state_dict.pop(k)

        # This loop removes entries whose names contain 'mask':
        # {'layer2.bias': ..., 'mask_embedding': ...}  =>  {'layer2.bias': ...}
        for k, v in list(state_dict.items()):
            if 'mask' in k:
                del state_dict[k]

        # Load the weights into the model
        model.load_state_dict(state_dict, strict=False)
    else:
        # Load a model in Hugging Face transformers format (here from the local ./minimind-v1-small directory)
        model = AutoModelForCausalLM.from_pretrained('./minimind-v1-small', trust_remote_code=True)
    model = model.to(device)

    print(f'Model parameters: {count_parameters(model) / 1e6} million = {count_parameters(model) / 1e9} B (Billion)')
    return model, tokenizer


def setup_seed(seed):
    random.seed(seed)  # Python's random seed
    np.random.seed(seed)  # NumPy's random seed
    torch.manual_seed(seed)  # PyTorch's random seed
    torch.cuda.manual_seed(seed)  # seed for the current GPU (if any)
    torch.cuda.manual_seed_all(seed)  # seed for all GPUs (if any)
    torch.backends.cudnn.deterministic = True  # make cuDNN choose deterministic convolution algorithms
    torch.backends.cudnn.benchmark = False  # disable cuDNN auto-tuning to avoid nondeterminism


if __name__ == "__main__":
    # -----------------------------------------------------------------------------
    out_dir = 'out'
    start = ""
    temperature = 0.7
    # top_k = 16: number of candidate tokens considered at each generation step (Top-K sampling);
    # each step samples from the 16 most likely tokens.
    top_k = 16
    # device = 'cpu'
    device = 'cuda:0' if torch.cuda.is_available() else 'cpu'
    dtype = 'bfloat16'
    max_seq_len = 1 * 1024
    lm_config = LMConfig()
    lm_config.max_seq_len = max_seq_len
    # Whether to carry the chat history into the conversation. If False, every question starts from an empty conversation.
    contain_history_chat = False
    # -----------------------------------------------------------------------------

    model, tokenizer = init_model(lm_config)

    # Put the model in evaluation mode: training-only behavior such as Dropout is disabled.
    model = model.eval()
    # Push to Hugging Face
    # model.push_to_hub("minimind")
    # tokenizer.push_to_hub("minimind")

    # answer_way = int(input('Enter 0 for automatic testing, 1 to type your own questions: '))
    answer_way = 0
    stream = True

    prompt_datas = [
        '你叫什么名字',  # "What is your name"
        '你是谁',  # "Who are you"
        '中国有哪些比较好的大学?',  # "Which good universities are there in China?"
    ]

    messages_origin = []
    messages = messages_origin

    i = 0
    while i < len(prompt_datas):
        # Draw a fresh random seed for each question so the answers differ between runs.
        random_seed = random.randint(0, 2 ** 32 - 1)
        # setup_seed applies that seed to every random number generator, so the same seed reproduces the same answer.
        setup_seed(random_seed)
        if not contain_history_chat:
            messages = messages_origin.copy()

        if answer_way == 1:
            prompt = input('[Q]: ')
        else:
            prompt = prompt_datas[i]
            print(f'[Q]: {prompt}')
            i += 1

        prompt = '请问,' + prompt  # prefix "请问," ("May I ask,")
        messages.append({"role": "user", "content": prompt})

        # [-(max_seq_len - 1):] keeps the input within the model's maximum sequence length max_seq_len
        # and leaves room for at least 1 generated token.
        new_prompt = tokenizer.apply_chat_template(
            messages,
            tokenize=False,
            add_generation_prompt=True
        )[-(max_seq_len - 1):]
        print("new_prompt", new_prompt)
        # new_prompt = <s>user请问,你叫什么名字</s><s>assistant

        # Convert new_prompt to token ids and build the model input tensor x
        x = tokenizer(new_prompt).data['input_ids']
        print("x1", x)  # [1, 320, 275, 201, 4600, 270, 608, 5515, 1541, 1167, 1129, 2, 201, 1, 1078, 538, 501, 201]
        x = (torch.tensor(x, dtype=torch.long, device=device)[None, ...])  # equivalent to x.unsqueeze(0)
        print("x2", x)  # [[1, 320, 275, 201, 4600, 270, 608, 5515, 1541, 1167, 1129, 2, 201, 1, 1078, 538, 501, 201]]

        answer = new_prompt
        print("answer ========================", answer)

        # torch.no_grad() disables gradient computation, which speeds up inference and saves memory.
        with torch.no_grad():
            # model.generate() produces the answer. Its arguments are the input x, the maximum number of
            # new tokens max_new_tokens, the temperature, top_k for Top-K sampling, and stream for streaming output.
            # The y values it yields are token ids; tokenizer.decode() turns them into readable text.
            res_y = model.generate(x, tokenizer.eos_token_id, max_new_tokens=max_seq_len, temperature=temperature,
                                   top_k=top_k, stream=stream)
            print("res_y", res_y)
            try:
                y = next(res_y)
            except StopIteration:
                print("No answer")
                continue

            history_idx = 0
            while y != None:
                answer = tokenizer.decode(y[0].tolist())
                print("Next word:", answer)
                # If the decoded text ends with '�' (usually an incomplete multi-byte character),
                # keep fetching outputs until the text is valid.
                if answer and answer[-1] == '�':
                    try:
                        y = next(res_y)
                    except:
                        break
                    continue

                if not len(answer):
                    try:
                        y = next(res_y)
                    except:
                        break
                    continue

                try:
                    y = next(res_y)
                    # print("Next token:", y)  # tensor([[4064, 1589, 1886, 2933, 270]], device='cuda:0')
                except:
                    break
                history_idx = len(answer)
                if not stream:
                    break

        # With contain_history_chat = True, the assistant's answer is appended to messages as context for the next turn.
        if contain_history_chat:
            assistant_answer = answer.replace(new_prompt, "")
            messages.append({"role": "assistant", "content": assistant_answer})
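2-eval.py passes `temperature=0.7` and `top_k=16` to `model.generate`. The `generate` method belongs to minimind's own model code, which is not shown here, so the following is only a generic sketch of what temperature plus top-k sampling usually means: divide the logits by the temperature, keep the k largest, apply a softmax, and sample one token id. Pure Python, no torch; the logits are made up.

```python
import math
import random


def sample_top_k(logits, temperature=0.7, top_k=16, rng=random):
    """Sample one token id from logits using temperature scaling and top-k filtering."""
    scaled = [l / temperature for l in logits]
    # Keep only the ids of the top_k highest-scoring tokens.
    top = sorted(range(len(scaled)), key=lambda i: scaled[i], reverse=True)[:top_k]
    # Softmax weights over the kept logits (subtracting the max for numerical stability).
    m = max(scaled[i] for i in top)
    weights = [math.exp(scaled[i] - m) for i in top]
    return rng.choices(top, weights=weights, k=1)[0]


logits = [2.0, 1.0, 0.5, -1.0, 3.0]
rng = random.Random(0)
print([sample_top_k(logits, temperature=0.7, top_k=2, rng=rng) for _ in range(10)])
```

A lower temperature sharpens the distribution toward the top token and `top_k=1` reduces to greedy decoding. This is also why 2-eval.py reseeds before each question: different seeds give different samples.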

3-full_sft.py
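Unlike 1-pretrain.py, 3-full_sft.py computes the loss itself: `F.cross_entropy(..., ignore_index=0, reduction='none')` yields one loss value per position (targets equal to 0, the padding id, contribute 0), and `loss_mask` then decides which positions count, presumably only the answer tokens during SFT. A framework-free sketch of that arithmetic; the logits, targets and mask are made-up numbers.

```python
import math


def cross_entropy_per_token(logits, targets, ignore_index=0):
    """Per-position cross-entropy, like F.cross_entropy(..., reduction='none').

    logits: one list of vocabulary scores per position
    targets: one target id per position; ignore_index positions get loss 0.0
    """
    losses = []
    for scores, t in zip(logits, targets):
        if t == ignore_index:
            losses.append(0.0)
            continue
        m = max(scores)
        log_sum_exp = m + math.log(sum(math.exp(s - m) for s in scores))
        losses.append(log_sum_exp - scores[t])  # equals -log(softmax(scores)[t])
    return losses


def masked_mean(losses, loss_mask):
    """sum(loss * mask) / mask.sum(), as in the training loop."""
    return sum(l * m for l, m in zip(losses, loss_mask)) / sum(loss_mask)


# Made-up example: a vocabulary of 3 ids, 4 positions, the last target is padding (0).
logits = [[0.0, 2.0, 0.0], [1.0, 1.0, 1.0], [0.0, 0.0, 3.0], [5.0, 0.0, 0.0]]
targets = [1, 2, 2, 0]
loss_mask = [0, 1, 1, 0]  # e.g. the first position still belongs to the prompt
losses = cross_entropy_per_token(logits, targets)
print([round(l, 4) for l in losses])
print(round(masked_mean(losses, loss_mask), 4))
```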

import os
import platform
import argparse
import time
import math
import warnings

import pandas as pd
import torch
import torch.nn.functional as F
import torch.distributed as dist
from contextlib import nullcontext

from torch import optim
from torch.nn.parallel import DistributedDataParallel
from torch.utils.data import DataLoader, DistributedSampler
from transformers import AutoTokenizer, AutoModelForCausalLM
from model.model import Transformer
from model.LMConfig import LMConfig
from model.dataset import SFTDataset

warnings.filterwarnings('ignore')


def Logger(content):
    if not ddp or dist.get_rank() == 0:
        print(content)


def get_lr(it, all):
    warmup_iters = args.warmup_iters
    lr_decay_iters = all
    min_lr = args.learning_rate / 10

    if it < warmup_iters:
        return args.learning_rate * it / warmup_iters
    if it > lr_decay_iters:
        return min_lr
    decay_ratio = (it - warmup_iters) / (lr_decay_iters - warmup_iters)
    assert 0 <= decay_ratio <= 1
    coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio))
    return min_lr + coeff * (args.learning_rate - min_lr)


def train_epoch(epoch, wandb):
    start_time = time.time()
    for step, (X, Y, loss_mask) in enumerate(train_loader):
        X = X.to(args.device)
        Y = Y.to(args.device)
        loss_mask = loss_mask.to(args.device)
        lr = get_lr(epoch * iter_per_epoch + step, args.epochs * iter_per_epoch)
        for param_group in optimizer.param_groups:
            param_group['lr'] = lr

        with ctx:
            logits = model(X, Y).logits
            # Per-token loss; targets equal to 0 (padding) are ignored
            loss = F.cross_entropy(logits.view(-1, logits.size(-1)), Y.view(-1), ignore_index=0, reduction='none')
            loss_mask = loss_mask.view(-1)
            # Average only over positions where loss_mask is 1
            loss = torch.sum(loss * loss_mask) / loss_mask.sum()

        scaler.scale(loss).backward()

        if (step + 1) % args.accumulation_steps == 0:
            scaler.unscale_(optimizer)
            torch.nn.utils.clip_grad_norm_(model.parameters(), args.grad_clip)

            scaler.step(optimizer)
            scaler.update()

            optimizer.zero_grad(set_to_none=True)

        if step % args.log_interval == 0:
            spend_time = time.time() - start_time
            Logger(
                'Epoch:[{}/{}]({}/{}) loss:{:.3f} lr:{:.7f} epoch_Time:{}min:'.format(
                    epoch,
                    args.epochs,
                    step,
                    iter_per_epoch,
                    loss.item(),
                    optimizer.param_groups[-1]['lr'],
                    spend_time / (step + 1) * iter_per_epoch // 60 - spend_time // 60))

            if (wandb is not None) and (not ddp or dist.get_rank() == 0):
                wandb.log({"loss": loss.item(),
                           "lr": optimizer.param_groups[-1]['lr'],
                           "epoch_Time": spend_time / (step + 1) * iter_per_epoch // 60 - spend_time // 60})

        if (step + 1) % args.save_interval == 0 and (not ddp or dist.get_rank() == 0):
            model.eval()
            moe_path = '_moe' if lm_config.use_moe else ''
            ckp = f'{args.save_dir}/full_sft_{lm_config.dim}{moe_path}.pth'

            if isinstance(model, torch.nn.parallel.DistributedDataParallel):
                state_dict = model.module.state_dict()
            else:
                state_dict = model.state_dict()

            torch.save(state_dict, ckp)
            model.train()


def init_model():
    tokenizer = AutoTokenizer.from_pretrained('./model/minimind_tokenizer')
    model_from = 1  # 1: load from local weights, 2: load with transformers

    def count_parameters(model):
        return sum(p.numel() for p in model.parameters() if p.requires_grad)

    if model_from == 1:
        # Start from the pretrained checkpoint written by 1-pretrain.py
        model = Transformer(lm_config)
        moe_path = '_moe' if lm_config.use_moe else ''
        ckp = f'./out/pretrain_{lm_config.dim}{moe_path}.pth'
        state_dict = torch.load(ckp, map_location=args.device)
        unwanted_prefix = '_orig_mod.'
        for k, v in list(state_dict.items()):
            if k.startswith(unwanted_prefix):
                state_dict[k[len(unwanted_prefix):]] = state_dict.pop(k)
        model.load_state_dict(state_dict, strict=False)
    else:
        model = AutoModelForCausalLM.from_pretrained('./minimind-v1-small', trust_remote_code=True)

    Logger(f'Total LLM parameters: {count_parameters(model) / 1e6:.3f} million')
    model = model.to(args.device)

    return model, tokenizer


def init_distributed_mode():
    if not ddp: return
    global ddp_local_rank, DEVICE

    dist.init_process_group(backend="nccl")
    ddp_rank = int(os.environ["RANK"])
    ddp_local_rank = int(os.environ["LOCAL_RANK"])
    ddp_world_size = int(os.environ["WORLD_SIZE"])
    DEVICE = f"cuda:{ddp_local_rank}"
    torch.cuda.set_device(DEVICE)


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="MiniMind Full SFT")
    parser.add_argument("--out_dir", type=str, default="out", help="Output directory")
    parser.add_argument("--epochs", type=int, default=19, help="Number of epochs")
    parser.add_argument("--batch_size", type=int, default=32, help="Batch size")
    parser.add_argument("--learning_rate", type=float, default=1e-4, help="Learning rate")
    parser.add_argument("--device", type=str, default="cuda:0" if torch.cuda.is_available() else "cpu", help="Device to use")
    parser.add_argument("--dtype", type=str, default="bfloat16", help="Data type")
    parser.add_argument("--use_wandb", action="store_true", help="Use Weights & Biases")
    parser.add_argument("--wandb_project", type=str, default="MiniMind-Full-SFT", help="Weights & Biases project name")
    parser.add_argument("--num_workers", type=int, default=1, help="Number of workers for data loading")
    parser.add_argument("--ddp", action="store_true", help="Use DistributedDataParallel")
    parser.add_argument("--accumulation_steps", type=int, default=1, help="Gradient accumulation steps")
    parser.add_argument("--grad_clip", type=float, default=1.0, help="Gradient clipping threshold")
    parser.add_argument("--warmup_iters", type=int, default=0, help="Number of warmup iterations")
    parser.add_argument("--log_interval", type=int, default=100, help="Logging interval")
    parser.add_argument("--save_interval", type=int, default=1000, help="Model saving interval")
    parser.add_argument('--local_rank', type=int, default=-1, help='local rank for distributed training')

    args = parser.parse_args()

    lm_config = LMConfig()
    max_seq_len = lm_config.max_seq_len
    args.save_dir = os.path.join(args.out_dir)
    os.makedirs(args.save_dir, exist_ok=True)
    os.makedirs(args.out_dir, exist_ok=True)
    tokens_per_iter = args.batch_size * max_seq_len
    torch.manual_seed(1337)
    device_type = "cuda" if "cuda" in args.device else "cpu"

    args.wandb_run_name = f"MiniMind-Full-SFT-Epoch-{args.epochs}-BatchSize-{args.batch_size}-LearningRate-{args.learning_rate}"

    ctx = nullcontext() if device_type == "cpu" else torch.cuda.amp.autocast()
    ddp = int(os.environ.get("RANK", -1)) != -1  # is this a ddp run?
    ddp_local_rank, DEVICE = 0, "cuda:0"
    if ddp:
        init_distributed_mode()
        args.device = torch.device(DEVICE)

    if args.use_wandb and (not ddp or ddp_local_rank == 0):
        import wandb

        wandb.init(project=args.wandb_project, name=args.wandb_run_name)
    else:
        wandb = None

    model, tokenizer = init_model()

    df = pd.read_csv('./dataset/sft_data_single.csv')
    df = df.sample(frac=1.0)
    train_ds = SFTDataset(df, tokenizer, max_length=max_seq_len)
    train_sampler = DistributedSampler(train_ds) if ddp else None
    train_loader = DataLoader(
        train_ds,
        batch_size=args.batch_size,
        pin_memory=True,
        drop_last=False,
        shuffle=False,
        num_workers=args.num_workers,
        sampler=train_sampler
    )

    scaler = torch.cuda.amp.GradScaler(enabled=(args.dtype in ['float16', 'bfloat16']))
    optimizer = optim.Adam(model.parameters(), lr=args.learning_rate)

    if False and not lm_config.use_moe and platform.system() != 'Windows' and float(torch.__version__.split('.')[0]) >= 2:
        Logger("compiling the model... (takes a ~minute)")
        unoptimized_model = model
        model = torch.compile(model)

    if ddp:
        model._ddp_params_and_buffers_to_ignore = {"pos_cis"}
        model = DistributedDataParallel(model, device_ids=[ddp_local_rank])

    iter_per_epoch = len(train_loader)
    for epoch in range(args.epochs):
        train_epoch(epoch, wandb)

4-lora_sft.py
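4-lora_sft.py loads the model, then uses peft's `LoraConfig(r=8, target_modules=...)` to add small trainable adapters to the linear layers whose names contain `wq` or `wk`. The core idea of LoRA: keep the weight W frozen and learn a low-rank update, so the layer computes W x + (alpha / r) * B (A x), where A is r x d_in and B is d_out x r. B is commonly initialized to zeros, so training starts from exactly the original model. A tiny pure-Python sketch of that forward pass (sizes and values are arbitrary; this is not peft's implementation):

```python
def matvec(M, v):
    """Multiply matrix M (a list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(W, A, B, x, alpha, r):
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A and B would be trained."""
    base = matvec(W, x)                 # frozen pretrained path, length d_out
    low_rank = matvec(B, matvec(A, x))  # A: r x d_in, B: d_out x r
    scale = alpha / r
    return [b + scale * l for b, l in zip(base, low_rank)]


def lora_param_count(d_in, d_out, r):
    """Trainable parameters of one LoRA adapter (A plus B)."""
    return r * (d_in + d_out)


d_in, d_out, r = 4, 3, 2
W = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]]
A = [[0.1, 0.2, 0.3, 0.4], [0.5, 0.6, 0.7, 0.8]]
B_zero = [[0.0] * r for _ in range(d_out)]
x = [1.0, 2.0, 3.0, 4.0]
print(lora_forward(W, A, B_zero, x, alpha=8, r=r))  # same as W x while B is all zeros

# For a hypothetical 512 x 512 layer: full update vs. rank-8 LoRA update.
print(512 * 512, lora_param_count(512, 512, 8))
```

Because only A and B require gradients, the script passes `filter(lambda p: p.requires_grad, model.parameters())` to the optimizer.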

import os
import platform
import argparse
import time
import math
import warnings
import torch
import pandas as pd
import torch.nn.functional as F
from contextlib import nullcontext

from torch import optim
from transformers import AutoTokenizer
from transformers import AutoModelForCausalLM
from peft import get_peft_model, LoraConfig, TaskType
from torch.utils.data import DataLoader
from model.LMConfig import LMConfig
from model.dataset import SFTDataset
from model.model import Transformer

warnings.filterwarnings('ignore')


def Logger(content):
    print(content)


def get_lr(it, all):
    warmup_iters = args.warmup_iters
    lr_decay_iters = all
    min_lr = args.learning_rate / 10

    if it < warmup_iters:
        return args.learning_rate * it / warmup_iters
    if it > lr_decay_iters:
        return min_lr
    decay_ratio = (it - warmup_iters) / (lr_decay_iters - warmup_iters)
    assert 0 <= decay_ratio <= 1
    coeff = 0.5 * (1.0 + math.cos(math.pi * decay_ratio))
    return min_lr + coeff * (args.learning_rate - min_lr)


def train_epoch(epoch, wandb):
    start_time = time.time()
    for step, (X, Y, loss_mask) in enumerate(train_loader):
        X = X.to(args.device)
        Y = Y.to(args.device)
        loss_mask = loss_mask.to(args.device)
        lr = get_lr(epoch * iter_per_epoch + step, args.epochs * iter_per_epoch)
        for param_group in optimizer.param_groups:
            param_group['lr'] = lr

        with ctx:
            logits = model(X, Y).logits
            loss = F.cross_entropy(logits.view(-1, logits.size(-1)), Y.view(-1), ignore_index=0, reduction='none')
            loss_mask = loss_mask.view(-1)
            loss = torch.sum(loss * loss_mask) / loss_mask.sum()
            # Scale down for gradient accumulation (the logged value is scaled back up)
            loss = loss / args.accumulation_steps

        scaler.scale(loss).backward()

        if (step + 1) % args.accumulation_steps == 0:
            scaler.unscale_(optimizer)
            torch.nn.utils.clip_grad_norm_(model.parameters(), args.grad_clip)

            scaler.step(optimizer)
            scaler.update()

            optimizer.zero_grad(set_to_none=True)

        if step % args.log_interval == 0:
            spend_time = time.time() - start_time
            Logger(
                'Epoch:[{}/{}]({}/{}) loss:{:.3f} lr:{:.7f} epoch_Time:{}min:'.format(
                    epoch,
                    args.epochs,
                    step,
                    iter_per_epoch,
                    loss.item() * args.accumulation_steps,
                    optimizer.param_groups[-1]['lr'],
                    spend_time / (step + 1) * iter_per_epoch // 60 - spend_time // 60))

            if wandb is not None:
                wandb.log({"loss": loss.item() * args.accumulation_steps,
                           "lr": optimizer.param_groups[-1]['lr'],
                           "epoch_Time": spend_time / (step + 1) * iter_per_epoch // 60 - spend_time // 60})

        if (step + 1) % args.save_interval == 0:
            # For a peft model, save_pretrained writes the adapter weights
            model.save_pretrained(args.save_dir)


def find_linear_with_keys(model, keys=["wq", "wk"]):
    # Collect the names of nn.Linear layers whose names contain one of the keys
    # (by default wq and wk, the attention query/key projections)
    cls = torch.nn.Linear
    linear_names = []
    for name, module in model.named_modules():
        if isinstance(module, cls):
            for key in keys:
                if key in name:
                    linear_names.append(name)
                    break
    return linear_names


def init_model():
    model_name_or_path = "./minimind-v1-small"
    tokenizer_name_or_path = "./minimind-v1-small"
    tokenizer = AutoTokenizer.from_pretrained(tokenizer_name_or_path, trust_remote_code=True, use_fast=False)
    model = AutoModelForCausalLM.from_pretrained(model_name_or_path, trust_remote_code=True).to(args.device)

    # Attach rank-8 LoRA adapters to the selected layers; the base weights are frozen
    target_modules = find_linear_with_keys(model)
    peft_config = LoraConfig(
        r=8,
        target_modules=target_modules
    )
    model = get_peft_model(model, peft_config)
    model.print_trainable_parameters()
    model = model.to(args.device)
    return model, tokenizer


if __name__ == "__main__":
    parser = argparse.ArgumentParser(description="MiniMind LoRA Fine-tuning")
    parser.add_argument("--out_dir", type=str, default="out", help="Output directory")
    parser.add_argument("--epochs", type=int, default=20, help="Number of epochs")
    parser.add_argument("--batch_size", type=int, default=32, help="Batch size")
    parser.add_argument("--learning_rate", type=float, default=1e-4, help="Learning rate")
    parser.add_argument("--device", type=str, default="cuda:0" if torch.cuda.is_available() else "cpu",
                        help="Device to use")
    parser.add_argument("--dtype", type=str, default="bfloat16", help="Data type")
    parser.add_argument("--use_wandb", action="store_true", help="Use Weights & Biases")
    parser.add_argument("--wandb_project", type=str, default="MiniMind-LoRA", help="Weights & Biases project name")
    parser.add_argument("--num_workers", type=int, default=1, help="Number of workers for data loading")
    parser.add_argument("--accumulation_steps", type=int, default=1, help="Gradient accumulation steps")
    parser.add_argument("--grad_clip", type=float, default=1.0, help="Gradient clipping threshold")
    parser.add_argument("--warmup_iters", type=int, default=1000, help="Number of warmup iterations")
    parser.add_argument("--log_interval", type=int, default=100, help="Logging interval")
    parser.add_argument("--save_interval", type=int, default=1000, help="Model saving interval")

    args = parser.parse_args()

    lm_config = LMConfig()
    max_seq_len = lm_config.max_seq_len
    args.save_dir = os.path.join(args.out_dir)
    os.makedirs(args.save_dir, exist_ok=True)
    os.makedirs(args.out_dir, exist_ok=True)
    tokens_per_iter = args.batch_size * max_seq_len
    torch.manual_seed(1337)
    device_type = "cuda" if "cuda" in args.device else "cpu"

    args.wandb_run_name = f"MiniMind-LoRA-Epoch-{args.epochs}-BatchSize-{args.batch_size}-LearningRate-{args.learning_rate}"

    ctx = nullcontext() if device_type == "cpu" else torch.cuda.amp.autocast()

    if args.use_wandb:
        import wandb

        wandb.init(project=args.wandb_project, name=args.wandb_run_name)
    else:
        wandb = None

    model, tokenizer = init_model()

    df = pd.read_csv('./dataset/sft_data_single.csv')
    df = df.sample(frac=1.0)
    train_ds = SFTDataset(df, tokenizer, max_length=max_seq_len)
    train_loader = DataLoader(
        train_ds,
        batch_size=args.batch_size,
        pin_memory=True,
        drop_last=False,
        shuffle=False,
        num_workers=args.num_workers,
    )

    scaler = torch.cuda.amp.GradScaler(enabled=(args.dtype in ['float16', 'bfloat16']))
    # Only parameters that still require gradients (the LoRA adapters) go to the optimizer
    optimizer = optim.Adam(
        filter(lambda p: p.requires_grad, model.parameters()),
        lr=args.learning_rate
    )

    if False and platform.system() != 'Windows' and float(torch.__version__.split('.')[0]) >= 2:
        Logger("compiling the model... (takes a ~minute)")
        unoptimized_model = model
        model = torch.compile(model)

    iter_per_epoch = len(train_loader)
    for epoch in range(args.epochs):
        train_epoch(epoch, wandb)

data_process.py
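The SFT part of data_process.py keeps a question/answer pair only if both are non-empty, the question has at least 10 and the answer at least 5 characters, neither exceeds 512 characters, and more than half of the characters in each are Chinese (the CJK range \u4e00 to \u9fff). The same rules pulled into one standalone predicate; the function name `keep_pair` is hypothetical, while `chinese_ratio` matches the helper in the listing.

```python
import re


def chinese_ratio(text):
    """Share of characters in the CJK Unified Ideographs range U+4E00 to U+9FFF."""
    chinese_chars = re.findall(r'[\u4e00-\u9fff]', text)
    return len(chinese_chars) / len(text) if text else 0


def keep_pair(q, a):
    """Hypothetical helper combining the single-turn SFT filters from sft_process."""
    if not q or not a:
        return False
    if len(q) < 10 or len(a) < 5:
        return False
    if len(q) > 512 or len(a) > 512:
        return False
    return chinese_ratio(q) > 0.5 and chinese_ratio(a) > 0.5


print(keep_pair("中国有哪些比较好的大学?", "清华大学和北京大学。"))
print(keep_pair("你是谁", "我是一个语言模型。"))
```

Note that a short question such as 你是谁 fails the 10-character minimum, so pairs like that never reach the SFT training set.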

import csv
import itertools
import re
import json
import jsonlines
import psutil
import ujson
import numpy as np
import pandas as pd
from transformers import AutoTokenizer
from datasets import load_dataset

bos_token = "<s>"
eos_token = "</s>"


def pretrain_process(chunk_size=50000):
    chunk_idx = 0

    with jsonlines.open('./dataset/mobvoi_seq_monkey_general_open_corpus.jsonl') as reader:
        with open('./dataset/pretrain_data.csv', 'w', newline='', encoding='utf-8') as csvfile:
            writer = csv.writer(csvfile)
            writer.writerow(['text'])

            while True:
                chunk = list(itertools.islice(reader, chunk_size))
                if not chunk:
                    break

                for idx, obj in enumerate(chunk):
                    try:
                        content = obj.get('text', '')
                        # Skip texts longer than 512 characters
                        if len(content) > 512:
                            continue
                        writer.writerow([content])
                    except UnicodeDecodeError as e:
                        print(f"Skipping invalid line {chunk_idx * chunk_size + idx + 1}: {e}")
                        continue
                chunk_idx += 1
                print('chunk:', ((chunk_idx - 1) * chunk_size, chunk_idx * chunk_size), 'process end')


def sft_process(contain_history=False):
    file_name = 'sft_data.csv'
    if not contain_history:
        file_name = 'sft_data_single.csv'

    def chinese_ratio(text):
        # Match all Chinese characters
        chinese_chars = re.findall(r'[\u4e00-\u9fff]', text)
        # Share of Chinese characters in the text
        return len(chinese_chars) / len(text) if text else 0

    def process_and_write_data(data):
        q_lst, a_lst, history_lst = [], [], []
        for per in data:
            history, q, a = per['history'], per['q'], per['a']

            if (contain_history and not history) or not q or not a:
                continue
            if len(q) < 10 or len(a) < 5:
                continue
            if len(q) > 512 or len(a) > 512:
                continue
            # Keep the pair only if Chinese characters make up more than 50% of both q and a
            if not (chinese_ratio(q) > 0.5 and chinese_ratio(a) > 0.5):
                continue

            q_lst.append(q)
            a_lst.append(a)
            if contain_history:
                history_lst.append(history)
            else:
                history_lst.append([])

        # Build a DataFrame and append it to the CSV file
        df = pd.DataFrame({'history': history_lst, 'q': q_lst, 'a': a_lst})
        # # 1. Default:
        # df.to_csv(f'./dataset/{file_name}', mode='a', header=False, index=False, lineterminator='\r\n', encoding='utf-8')
        # 2. If you hit `_csv.Error: need to escape, but no escapechar set`, add the escapechar='\\' argument:
        df.to_csv(f'./dataset/{file_name}', mode='a', header=False, index=False, lineterminator='\r\n',
                  escapechar='\\', encoding='utf-8')

    chunk_size = 1000  # number of records processed per batch
    data = []

    with open(f'./dataset/{file_name}', 'w', encoding='utf-8') as f:
        f.write('history,q,a\n')

    sft_datasets = ['./dataset/sft_data_zh.jsonl']
    if not contain_history:
        sft_datasets = ['./dataset/sft_data_zh.jsonl']

    chunk_num = 0
    for path in sft_datasets:
        with jsonlines.open(path) as reader:
            for idx, obj in enumerate(reader):
                try:
                    data.append({
                        'history': obj.get('history', ''),
                        'q': obj.get('input', '') + obj.get('q', ''),
                        'a': obj.get('output', '') + obj.get('a', '')
                    })

                    if len(data) >= chunk_size:
                        chunk_num += 1
                        process_and_write_data(data)
                        data = []
                        if chunk_num % 100 == 0:
                            print(f'chunk:{chunk_num} process end')
                except jsonlines.InvalidLineError as e:
                    print(f"Skipping invalid JSON line {idx + 1}: {e}")
                    continue

    if data:
        process_and_write_data(data)
        data = []


def rl_process():
    ################
    # Dataset
    ################

    dataset_paths = [
        './dataset/dpo/dpo_zh_demo.json',
        './dataset/dpo/dpo_train_data.json',
        './dataset/dpo/huozi_rlhf_data.json',
    ]

    train_dataset = load_dataset('json', data_files=dataset_paths)

    merged_data = []
    for split in train_dataset.keys():
        merged_data.extend(train_dataset[split])

    with open('./dataset/dpo/train_data.json', 'w', encoding='utf-8') as f:
        json.dump(merged_data, f, ensure_ascii=False, indent=4)


if __name__ == "__main__":
    tokenizer = AutoTokenizer.from_pretrained('./model/minimind_tokenizer', use_fast=False)
    print('tokenizer vocab size:', len(tokenizer))
    print('tokenizer:', tokenizer)

    ################
    # 1: pretrain
    # 2: sft
    # 3: RL
    ################
    process_type = 2

    if process_type == 1:
        pretrain_process()
    if process_type == 2:
        sft_process(contain_history=False)
    if process_type == 3:
        rl_process()

Learning resources

Notes from other learners:

https://github.com/jingyaogong/minimind/issues/26

An explanation by an expert on Bilibili:

https://www.bilibili.com/video/BV1Sh1vYBEzY?spm_id_from=333.788.player.player_end_recommend_autoplay&vd_source=73f0f43dc639135d4ea9acffa3ad6ae0



错误日志 [ 8754.700227] usb 2-3: new full-speed USB device number 3 using xhci_hcd [ 8754.867389] usb 2-3: New USB device found, idVendor0e0f, idProduct0002, bcdDevice 1.00 [ 8754.867421] usb 2-3: New USB device strings: Mfr1, Product2, SerialNumber0 [ 87…...

kubeadm安装K8s高可用集群之集群初始化及master/node节点加入calico网络插件安装

系列文章目录 1.kubeadm安装K8s高可用集群之基础环境配置 2.kubeadm安装K8s集群之高可用组件keepalivednginx及kubeadm部署 3.kubeadm安装K8s高可用集群之集群初始化及master/node节点加入集群calico网络插件安装 kubeadm安装K8s高可用集群之集群初始化及master/node节点加入ca…...

游戏何如防抓包

游戏抓包是指在游戏中,通过抓包工具捕获和分析游戏客户端与服务器之间传输的封包数据的过程。抓包工具可实现拦截、篡改、重发、丢弃游戏的上下行数据包,市面上常见的抓包工具有WPE、Fiddler和Charles Proxy等。 抓包工具有两种实现方式,一类…...

【LeetCode】每日一题 2024_12_19 找到稳定山的下标(模拟)

前言 每天和你一起刷 LeetCode 每日一题~ 最近力扣的每日一题出的比较烂,难度过山车,导致近期的更新都三天打鱼,两天断更了 . . . LeetCode 启动! 题目:找到稳定山的下标 代码与解题思路 先读题:最重要…...

运维 mysql、redis 、RocketMQ性能排查

MySQL查看数据库连接数 1. SHOW STATUS命令-查询当前的连接数 MySQL 提供了一个 SHOW STATUS 命令,可以用来查看服务器的状态信息,包括当前的连接数。 SHOW STATUS LIKE Threads_connected;这个命令会返回当前连接到服务器的线程数,即当前…...

[SAP ABAP] 将内表数据转换为HTML格式

从sflight数据库表中检索航班信息,并将这些信息转换成HTML格式,然后下载或显示在前端 开发步骤 ① 自定义一个数据类型 ty_sflight 来存储航班信息 ② 声明内表和工作区变量,用于存储表头、字段、HTML内容和航班详细信息以及创建字段目录lt…...

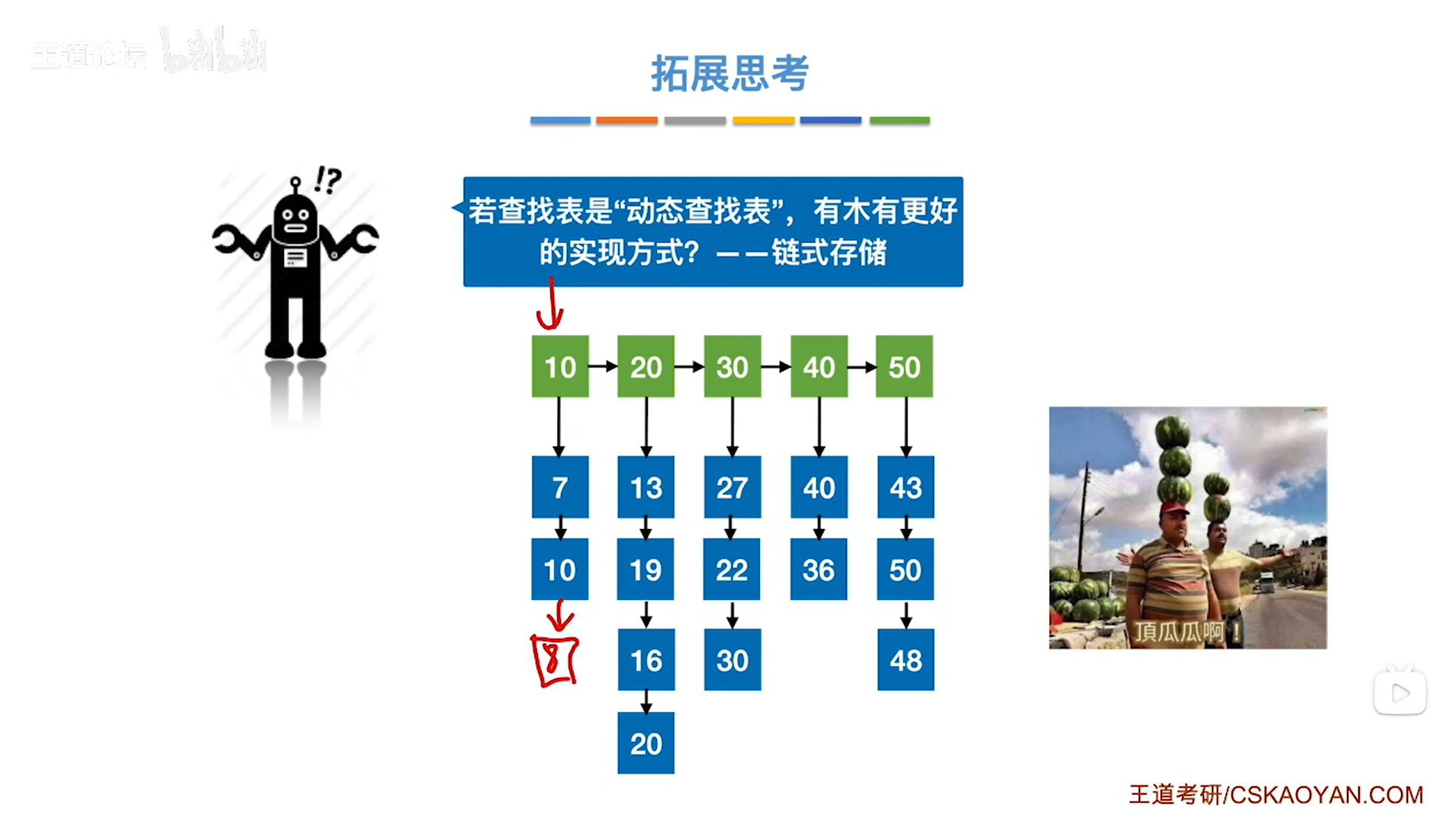

7.4.分块查找

一.分块查找的算法思想: 1.实例: 以上述图片的顺序表为例, 该顺序表的数据元素从整体来看是乱序的,但如果把这些数据元素分成一块一块的小区间, 第一个区间[0,1]索引上的数据元素都是小于等于10的, 第二…...

《Playwright:微软的自动化测试工具详解》

Playwright 简介:声明内容来自网络,将内容拼接整理出来的文档 Playwright 是微软开发的自动化测试工具,支持 Chrome、Firefox、Safari 等主流浏览器,提供多语言 API(Python、JavaScript、Java、.NET)。它的特点包括&a…...

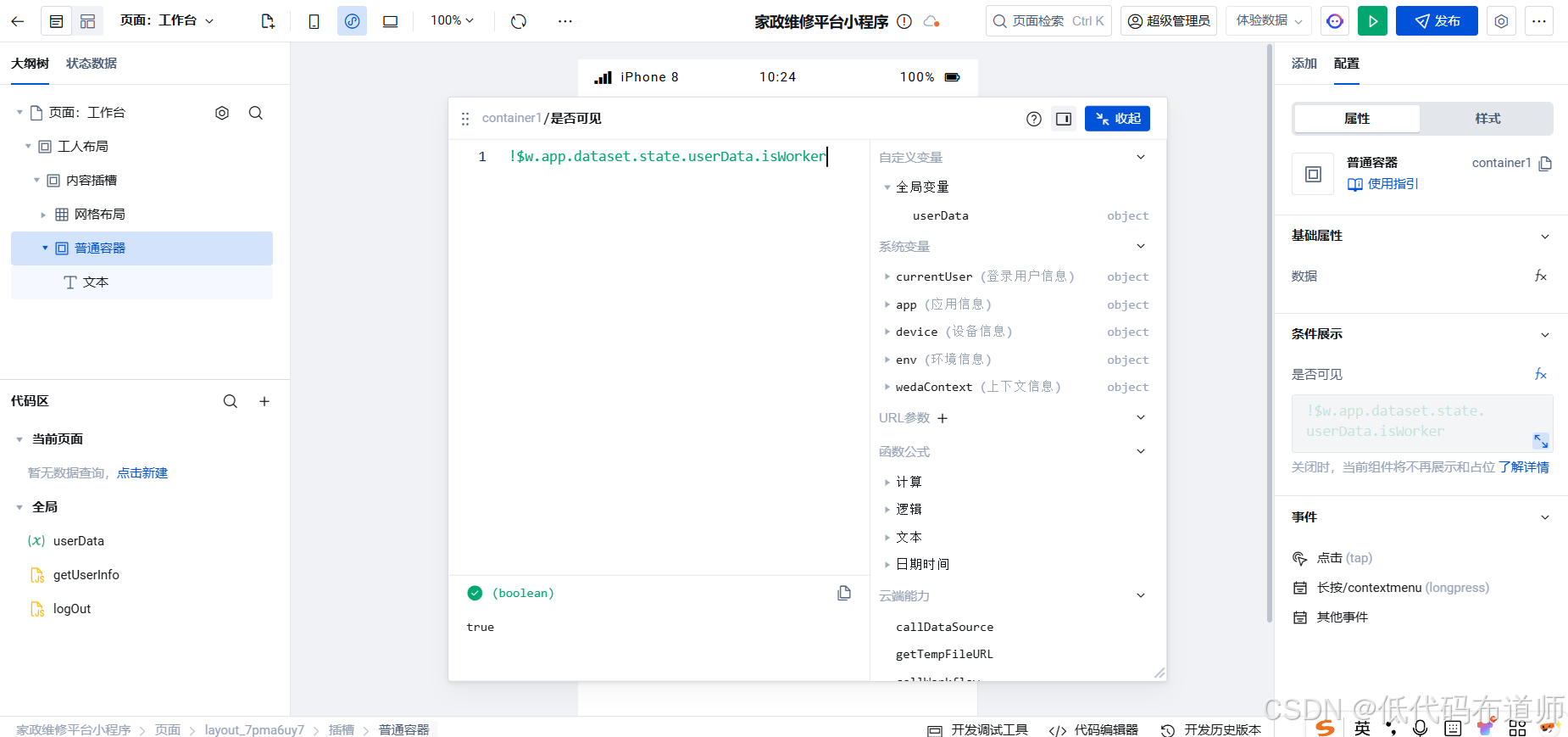

家政维修平台实战20:权限设计

目录 1 获取工人信息2 搭建工人入口3 权限判断总结 目前我们已经搭建好了基础的用户体系,主要是分成几个表,用户表我们是记录用户的基础信息,包括手机、昵称、头像。而工人和员工各有各的表。那么就有一个问题,不同的角色…...

Web 架构之 CDN 加速原理与落地实践

文章目录 一、思维导图二、正文内容(一)CDN 基础概念1. 定义2. 组成部分 (二)CDN 加速原理1. 请求路由2. 内容缓存3. 内容更新 (三)CDN 落地实践1. 选择 CDN 服务商2. 配置 CDN3. 集成到 Web 架构 …...

OPENCV形态学基础之二腐蚀

一.腐蚀的原理 (图1) 数学表达式:dst(x,y) erode(src(x,y)) min(x,y)src(xx,yy) 腐蚀也是图像形态学的基本功能之一,腐蚀跟膨胀属于反向操作,膨胀是把图像图像变大,而腐蚀就是把图像变小。腐蚀后的图像变小变暗淡。 腐蚀…...

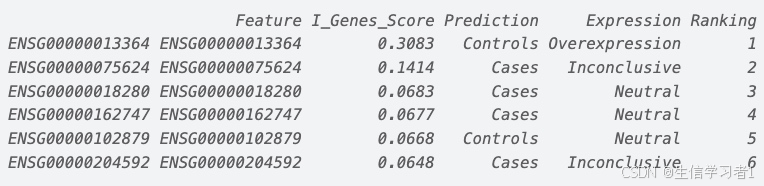

【数据分析】R版IntelliGenes用于生物标志物发现的可解释机器学习

禁止商业或二改转载,仅供自学使用,侵权必究,如需截取部分内容请后台联系作者! 文章目录 介绍流程步骤1. 输入数据2. 特征选择3. 模型训练4. I-Genes 评分计算5. 输出结果 IntelliGenesR 安装包1. 特征选择2. 模型训练和评估3. I-Genes 评分计…...

多光源(Multiple Lights))

C++.OpenGL (14/64)多光源(Multiple Lights)

多光源(Multiple Lights) 多光源渲染技术概览 #mermaid-svg-3L5e5gGn76TNh7Lq {font-family:"trebuchet ms",verdana,arial,sans-serif;font-size:16px;fill:#333;}#mermaid-svg-3L5e5gGn76TNh7Lq .error-icon{fill:#552222;}#mermaid-svg-3L5e5gGn76TNh7Lq .erro…...

提供了哪些便利?)

现有的 Redis 分布式锁库(如 Redisson)提供了哪些便利?

现有的 Redis 分布式锁库(如 Redisson)相比于开发者自己基于 Redis 命令(如 SETNX, EXPIRE, DEL)手动实现分布式锁,提供了巨大的便利性和健壮性。主要体现在以下几个方面: 原子性保证 (Atomicity)ÿ…...

数据库——redis

一、Redis 介绍 1. 概述 Redis(Remote Dictionary Server)是一个开源的、高性能的内存键值数据库系统,具有以下核心特点: 内存存储架构:数据主要存储在内存中,提供微秒级的读写响应 多数据结构支持&…...

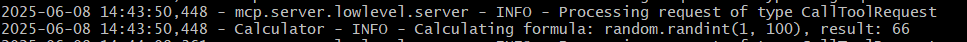

小智AI+MCP

什么是小智AI和MCP 如果还不清楚的先看往期文章 手搓小智AI聊天机器人 MCP 深度解析:AI 的USB接口 如何使用小智MCP 1.刷支持mcp的小智固件 2.下载官方MCP的示例代码 Github:https://github.com/78/mcp-calculator 安这个步骤执行 其中MCP_ENDPOI…...