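Go Language Fundamentals: Interview Questions

1. How do you convert between strings and integers?

1) Use functions from the strconv package, such as FormatInt and ParseInt.

2) For base-10 integers, strconv.Atoi and strconv.Itoa are convenient:

Atoi(s) is equivalent to ParseInt(s, 10, 0), with the result converted to int.

Itoa(i) is equivalent to FormatInt(int64(i), 10).

3) To turn an integer into a string, fmt.Sprintf also works.

4) Note: a plain type conversion does not convert between strings and integers. int("10") does not compile, and string(10) compiles but yields the character with code point 10 (a newline), not "10".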

package main

import (
    "fmt"
    "strconv"
)

func main() {
    // FormatInt returns the base-10 string representation of 100.
    fmt.Println(strconv.FormatInt(100, 10)) // Output: 100

    // FormatInt returns the base-2 string representation of 100.
    fmt.Println(strconv.FormatInt(100, 2)) // Output: 1100100

    // ParseInt converts a string to an int64.
    num, _ := strconv.ParseInt("100", 10, 64)
    fmt.Printf("ParseInt result as base-10 int64: %d \n", num) // ParseInt result as base-10 int64: 100

    // Itoa converts an int to its base-10 string, like FormatInt(int64(i), 10).
    fmt.Println(strconv.Itoa(20)) // Output: 20

    // Atoi converts a base-10 string to an int, like ParseInt(s, 10, 0).
    num2, _ := strconv.Atoi("20")
    fmt.Println("Atoi result as base-10 int:", num2) // Output: Atoi result as base-10 int: 20

    // fmt.Sprintf formats an integer as a string.
    str := fmt.Sprintf("%d", 200)
    fmt.Println(str) // Output: 200
}

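2. How do you get the current time and format it in Go?

Use the time package. Go layouts are written in terms of the reference time "2006-01-02 15:04:05" rather than placeholders such as YYYY-MM-DD:

time.Now().Format("2006-01-02 15:04:05")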

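3. How do you delete an element from a slice?

1) Use append() to join the elements before and after the index

s = append(s[:index], s[index+1:]...) // index is the position of the element to delete

2) Use copy() to shift the elements forward, then shrink the slice

// Shift the elements after index forward by one position

copy(slice[index:], slice[index+1:])

// Then reduce the length by one

slice = slice[:len(slice)-1]

Note: the copy must happen before the length is reduced, otherwise the last element is lost. Slices have no built-in delete method.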

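package main

import "fmt"

func main() {
    // Two ways to delete an element from a slice.

    // Method 1: append
    s1 := []int{2, 3, 5, 7, 11, 13}
    fmt.Printf("original length: %d capacity: %d\n", len(s1), cap(s1)) // Output: original length: 6 capacity: 6
    fmt.Println("original contents:", s1)                              // Output: original contents: [2 3 5 7 11 13]

    // Delete the second element, the number 3.
    s1 = append(s1[:1], s1[2:]...) // s1[2:]... expands the third through the last element
    fmt.Printf("length after delete: %d capacity: %d\n", len(s1), cap(s1)) // Output: length after delete: 5 capacity: 6
    // s1 = s1[:cap(s1)] // re-extending to the full capacity would print [2 5 7 11 13 13]
    fmt.Println("contents after delete:", s1) // Output: contents after delete: [2 5 7 11 13]
    // Note: append returns a slice value with a new length. Here the capacity is
    // sufficient, so the backing array is reused; when append has to grow the
    // slice, the capacity and the pointer to the backing array change as well.

    // Method 2: copy
    s := []int{2, 3, 5, 7, 11, 13}
    copy(s[1:], s[2:]) // move everything after the second element forward by one
    fmt.Println("contents after copy:", s) // Output: contents after copy: [2 5 7 11 13 13]
    // Note: without reducing the length, the result is [2 5 7 11 13 13].
    // copy only copies elements (as many as the shorter of source and destination
    // holds); it does not change the length or capacity. The backing array still
    // has 6 elements and the last one is still 13, so the length of s must be
    // reduced by one.
    s = s[:len(s)-1]
    fmt.Println("contents after shrinking:", s) // Output: contents after shrinking: [2 5 7 11 13]
}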
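Both methods above modify the backing array shared with the original slice. The sketch below contrasts an in-place delete with a copying delete; deleteInPlace and deleteCopy are hypothetical helper names, and the generic syntax assumes Go 1.18 or later. (From Go 1.21 the standard slices package also offers slices.Delete.)

```go
package main

import "fmt"

// deleteInPlace removes s[i] by shifting the tail left. It reuses the
// backing array, so the caller's original slice contents change too.
func deleteInPlace[T any](s []T, i int) []T {
	if i < 0 || i >= len(s) {
		return s
	}
	copy(s[i:], s[i+1:])
	var zero T
	s[len(s)-1] = zero // clear the stale last slot
	return s[:len(s)-1]
}

// deleteCopy returns a new slice without s[i] and leaves s untouched.
func deleteCopy[T any](s []T, i int) []T {
	if i < 0 || i >= len(s) {
		return append([]T(nil), s...)
	}
	out := make([]T, 0, len(s)-1)
	out = append(out, s[:i]...)
	return append(out, s[i+1:]...)
}

func main() {
	a := []int{2, 3, 5, 7}
	b := deleteCopy(a, 1)
	fmt.Println(a, b) // [2 3 5 7] [2 5 7]

	c := deleteInPlace(a, 1)
	fmt.Println(a, c) // [2 5 7 0] [2 5 7]
}
```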

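4. Which types can be used as map keys?

The key type must be comparable, that is, it must support == and !=. Slices, maps, and functions are not comparable, and neither are arrays or structs that contain them.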
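As a short illustration, structs and arrays made only of comparable fields can serve as keys. The type point is invented for this sketch.

```go
package main

import "fmt"

// point is comparable because all of its fields are comparable.
type point struct{ x, y int }

func main() {
	visits := map[point]int{}
	visits[point{1, 2}]++
	visits[point{1, 2}]++
	fmt.Println(visits[point{1, 2}]) // 2

	// Arrays of comparable elements work as keys too.
	grid := map[[2]int]string{{0, 0}: "origin"}
	fmt.Println(grid[[2]int{0, 0}]) // origin

	// map[[]int]string{} would not compile: invalid map key type []int.
}
```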

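5. Does a map need a lock if it is only read?

Concurrent read-only access to a map is safe and needs no lock. Concurrent reads and writes are not safe: the runtime detects them and aborts the program with a fatal error.

There are two common ways to make map access safe for concurrent use:

- Protect the map with a mutex or a read-write lock

- Use sync.Map from the standard library

Using sync.Map (with a plain map for comparison):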

package main

import (
    "fmt"
    "sync"
)

func main() {
    var m map[string]int
    // Panics with: assignment to entry in nil map
    // Reason: the map has not been initialized.
    // m["test"] = 5
    fmt.Println(m["name"]) // Output: 0 (reading from a nil map is allowed)

    // Initialize a map with make.
    clients := make(map[string]string, 0)
    clients["John"] = "Doe"
    fmt.Println(clients) // Output: map[John:Doe]

    var sm sync.Map
    // Store into sync.Map; its zero value is ready to use without initialization.
    sm.Store("name", "John") // index syntax such as sm["yy"] = "66" is not supported
    sm.Store("surname", "Doe")
    sm.Store("nickname", "li")

    // Load from sync.Map.
    fmt.Println(sm.Load("name")) // Output: John true

    // Delete from sync.Map.
    sm.Delete("name")

    // Load again.
    fmt.Println(sm.Load("name")) // Output: <nil> false

    // Iterate over sync.Map.
    sm.Range(func(key any, value any) bool {
        fmt.Printf("Key=%s value=%s \n", key, value)
        return true
    })
    // Output (iteration order is not guaranteed):
    // Key=nickname value=li
    // Key=surname value=Doe
}

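6. If a goroutine starts another goroutine and the inner one panics, is the outer one affected?

Yes. An unrecovered panic in any goroutine terminates the whole program, so the outer goroutine goes down with it. A recover in the outer goroutine has no effect on the inner panic, because recover only catches panics raised in the goroutine whose deferred function calls it.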
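A minimal sketch of the usual remedy: place the recover inside the inner goroutine itself. The helper safeGo is hypothetical, not a standard library function.

```go
package main

import "fmt"

// safeGo (hypothetical helper) runs fn in a new goroutine and recovers any
// panic inside that same goroutine. The recovered value (nil if fn did not
// panic) is delivered on the returned channel.
func safeGo(fn func()) <-chan any {
	result := make(chan any, 1)
	go func() {
		defer func() { result <- recover() }()
		fn()
	}()
	return result
}

func main() {
	outerDone := make(chan struct{})
	go func() { // outer goroutine
		defer close(outerDone)
		inner := safeGo(func() { panic("inner failure") })
		fmt.Println("recovered in inner goroutine:", <-inner)
	}()
	<-outerDone
	fmt.Println("program still running")
}
```

Moving the deferred recover to the outer goroutine instead would not help: the inner panic would still crash the process.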

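7. With several goroutines running, how do you know when all of them have finished?

1) Use sync.WaitGroup

2) Synchronize with a channel

3) Combine context with sync.WaitGroup to handle timeouts or cancellation

- Using sync.WaitGroup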

package main

import (
    "fmt"
    "sync"
    "time"
)

func worker(id int, wg *sync.WaitGroup) {
    defer wg.Done()
    fmt.Printf("Worker %d starting \n", id)
    time.Sleep(2 * time.Second)
    fmt.Printf("Worker %d done \n", id)
}

// Waiting for several goroutines to finish.
func main() {
    var wg sync.WaitGroup
    // Start 5 goroutines.
    for i := 1; i <= 5; i++ {
        wg.Add(1)
        go worker(i, &wg)
    }
    // Wait blocks until every worker has called Done; no extra sleep is needed.
    wg.Wait()
    fmt.Println("All workers done")
    // Sample output (the order of the worker lines varies between runs):
    // Worker 1 starting
    // Worker 5 starting
    // Worker 2 starting
    // Worker 3 starting
    // Worker 4 starting
    // Worker 2 done
    // Worker 4 done
    // Worker 5 done
    // Worker 3 done
    // Worker 1 done
    // All workers done
}

- Synchronizing with a channel

package main

import (
    "fmt"
    "time"
)

// channelDone carries one completion signal per worker.
var channelDone = make(chan bool)

func worker(id int) {
    fmt.Printf("worker %d starting \n", id)
    time.Sleep(time.Second)
    fmt.Printf("worker %d done \n", id)
    channelDone <- true // signal completion once the task has finished
}

func main() {
    // Start 3 goroutines.
    go worker(1)
    go worker(2)
    go worker(3)
    // Receive one completion signal per worker.
    for i := 0; i < 3; i++ {
        <-channelDone
    }
    fmt.Println("All workers done")
    // Sample output (the order of the worker lines varies between runs):
    // worker 2 starting
    // worker 1 starting
    // worker 3 starting
    // worker 3 done
    // worker 2 done
    // worker 1 done
    // All workers done
}

- Using context with sync.WaitGroup

package main

import (
    "context"
    "fmt"
    "sync"
    "time"
)

func worker(ctx context.Context, id int, wg *sync.WaitGroup) {
    defer wg.Done()
    fmt.Printf("Worker %d starting \n", id)
    time.Sleep(2 * time.Second)
    select {
    case <-ctx.Done():
        fmt.Printf("worker %d cancelled \n", id)
    default:
        fmt.Printf("Worker %d done \n", id)
    }
}

// Waiting for several goroutines to finish, with a timeout.
func main() {
    var wg sync.WaitGroup
    // Create a context that times out after 2 seconds.
    ctx, cancel := context.WithTimeout(context.Background(), 2*time.Second)
    // Release the context's resources when main returns.
    defer cancel()
    // Start 5 goroutines.
    for i := 1; i <= 5; i++ {
        wg.Add(1)
        go worker(ctx, i, &wg)
    }
    // Wait for all workers to finish.
    wg.Wait()
    fmt.Println("All workers done")
    // Sample output. The sleep and the timeout are both 2 seconds, so which
    // workers report "done" and which report "cancelled" varies between runs:
    // Worker 5 starting
    // Worker 2 starting
    // Worker 1 starting
    // Worker 3 starting
    // Worker 4 starting
    // Worker 4 done
    // Worker 1 done
    // worker 5 cancelled
    // worker 3 cancelled
    // Worker 2 done
    // All workers done
}

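8. How can a goroutine run a task once every second?

1) Use time.Timer for a one-shot timer

2) Use time.Ticker for a periodic timer

package main

import (
    "fmt"
    "time"
)

func main() {
    // 1) time.Timer is a one-shot timer: it runs a task once after a delay.
    // Create a timer that fires after 1 second.
    timer := time.NewTimer(1 * time.Second)
    // Block until the timer fires.
    <-timer.C
    fmt.Println("timer task executed")
    // The timer has already fired here; Stop is only needed to cancel a pending timer.

    // 2) time.Ticker is a periodic timer: it fires repeatedly at the given interval.
    ticker := time.NewTicker(1 * time.Second)
    defer ticker.Stop() // stop the ticker once it is no longer needed

    // done signals that the goroutine has finished; the task runs 5 times.
    done := make(chan struct{})
    go func() {
        defer close(done)
        for i := 0; i < 5; i++ {
            t := <-ticker.C
            fmt.Println("ticker task executed at:", t)
        }
    }()
    // Wait for the goroutine instead of sleeping for a fixed time.
    <-done
}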

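9. Database and table sharding

- Table sharding:

Why: the database hits a performance bottleneck, or the data volume is so large that index tuning no longer helps.

How: spread the data across multiple tables, usually by horizontal partitioning. Common strategies:

a) take the id modulo the number of tables;

b) hash sharding: hash one of the table's columns and pick the table from the hash;

c) time-based sharding: split the data into tables by month or year.

Note: vertical splitting, such as dividing a user table into a main table and an extension table, is best done during initial design; doing it later is costly.

- Database sharding:

Why: a single database receives too much traffic.

How: together with a microservice architecture, assign business tables to separate databases following domain-driven design (DDD), so that each database serves one purpose. This improves system stability and reduces coupling.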
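The three table-routing strategies can be sketched as follows. The function names, the table naming scheme, and the choice of FNV-1a as the hash are all assumptions made for illustration.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// tableByID picks a table by taking the numeric id modulo the table count.
func tableByID(base string, id, tables uint64) string {
	return fmt.Sprintf("%s_%d", base, id%tables)
}

// tableByHash hashes a string key (FNV-1a here) and picks a table from the hash.
func tableByHash(base, key string, tables uint32) string {
	h := fnv.New32a()
	h.Write([]byte(key)) // Write on a hash.Hash never returns an error
	return fmt.Sprintf("%s_%d", base, h.Sum32()%tables)
}

// tableByMonth picks a table from a year and a month.
func tableByMonth(base string, year, month int) string {
	return fmt.Sprintf("%s_%04d%02d", base, year, month)
}

func main() {
	fmt.Println(tableByID("orders", 10, 4))       // orders_2
	fmt.Println(tableByHash("users", "alice", 4)) // one of users_0..users_3, stable per key
	fmt.Println(tableByMonth("logs", 2024, 3))    // logs_202403
}
```

Modulo and hash routing spread rows evenly but make it costly to change the table count later; time-based routing makes archiving easy but concentrates writes on the newest table.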

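10. When does a channel cause a deadlock?

1) On an unbuffered channel, a goroutine only sends and nothing ever receives, or only receives and nothing ever sends

2) On a buffered channel, more values are sent than the buffer can hold and nothing receives

3) Every case of a select is blocked and there is no default branch

4) Sending to (or receiving from) a channel that was never initialized, that is, a nil channel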

package main

import (
    "fmt"
    "time"
)

func main() {
    // Create a channel with a buffer of 2.
    ch := make(chan int, 2)
    // Send 3 values, one more than the buffer holds: the third send deadlocks.
    ch <- 3
    ch <- 4
    ch <- 5
    time.Sleep(time.Second * 1)
    fmt.Println("main end")
    // Output:
    // fatal error: all goroutines are asleep - deadlock!
    // goroutine 1 [chan send]:
    // main.main()
    // E:/GoProject/src/mianshi/channel/main.go:22 +0x58
    // exit status 2
}
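One way to avoid blocking on a full buffer is a select with a default branch. The sketch below uses a hypothetical helper named trySend.

```go
package main

import "fmt"

// trySend (hypothetical helper) attempts a send without blocking and
// reports whether the value was accepted.
func trySend(ch chan<- int, v int) bool {
	select {
	case ch <- v:
		return true
	default: // the buffer is full and no receiver is waiting
		return false
	}
}

func main() {
	ch := make(chan int, 2)
	fmt.Println(trySend(ch, 3), trySend(ch, 4), trySend(ch, 5)) // true true false
	fmt.Println(<-ch, <-ch)                                     // 3 4
}
```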

11. When does closing a channel panic?

1) The channel is nil

2) The channel has already been closed
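Both cases can be observed with recover, and sync.Once is one common way to make a close idempotent. The names closePanics and onceCloser are invented for this sketch.

```go
package main

import (
	"fmt"
	"sync"
)

// closePanics (hypothetical helper) reports whether close(ch) panics.
func closePanics(ch chan int) (panicked bool) {
	defer func() {
		if recover() != nil {
			panicked = true
		}
	}()
	close(ch)
	return false
}

// onceCloser closes its channel at most once, however often Close is called.
type onceCloser struct {
	ch   chan int
	once sync.Once
}

func (c *onceCloser) Close() { c.once.Do(func() { close(c.ch) }) }

func main() {
	var nilCh chan int
	fmt.Println(closePanics(nilCh)) // true: close of nil channel

	ch := make(chan int)
	fmt.Println(closePanics(ch), closePanics(ch)) // false true: the second close panics

	oc := &onceCloser{ch: make(chan int)}
	oc.Close()
	oc.Close() // safe: the second call is a no-op
	_, ok := <-oc.ch
	fmt.Println(ok) // false: the channel is closed
}
```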

12. How channels are implemented (data structures)

type hchan struct {
    qcount   uint           // total data in the queue
    dataqsiz uint           // size of the circular queue
    buf      unsafe.Pointer // points to an array of dataqsiz elements
    elemsize uint16
    closed   uint32
    elemtype *_type // element type
    sendx    uint   // send index
    recvx    uint   // receive index
    recvq    waitq  // list of recv waiters
    sendq    waitq  // list of send waiters

    // lock protects all fields in hchan, as well as several
    // fields in sudogs blocked on this channel.
    //
    // Do not change another G's status while holding this lock
    // (in particular, do not ready a G), as this can deadlock
    // with stack shrinking.
    lock mutex
}

type waitq struct {
    first *sudog
    last  *sudog
}

Sending to a channel (flow adapted from the CSDN blog post "go进阶(2) -深入理解Channel实现原理"):

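1) Lock the whole channel structure.

2) If recvq is not empty (which means the buffer is empty or there is no buffer), dequeue a waiting G (goroutine) from recvq, copy the value directly to it, wake that G, and finish the send.

3) If recvq is empty, check whether the buffer has room. If it does, copy the value from the current goroutine into the buffer and finish the send.

4) If the buffer is full (or there is no buffer), the value is saved in a structure attached to the current goroutine (a sudog), the goroutine is enqueued on sendq, and it is parked until a receiving goroutine wakes it.

5) Release the lock once the send completes.

Receiving from a channel:

1) Acquire the channel lock.

2) If sendq is not empty (senders are waiting):

a) With no buffer, dequeue a G (goroutine) from sendq, copy its value directly, wake that goroutine, release the lock, and finish the receive;

b) With a buffer (which must be full at this point), read the value at the head of the buffer, then dequeue a G from sendq and copy its value to the tail of the buffer, wake it, and release the lock;

3) If sendq is empty (no senders are waiting):

a) If the buffer holds data, read from the buffer and release the lock.

b) If there is no buffer or the buffer is empty, enqueue the current goroutine on recvq and park it until a sending goroutine wakes it. The lock is released as the goroutine parks.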

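13. Locking mechanisms in Go

Locks are an essential tool in concurrent programming for keeping data consistent and preventing race conditions. Go offers several mechanisms: the mutex (sync.Mutex), the read-write lock (sync.RWMutex), and channels.

1) Mutex (sync.Mutex)

Ensures that only one goroutine at a time can access a resource.

2) Read-write lock (sync.RWMutex)

A read-write lock lets many goroutines read a resource at the same time but gives a writer exclusive access. This improves concurrency for read-heavy workloads.

3) Channels

Channels are not locks in the traditional sense, but they provide a more Go-style way to coordinate goroutines by passing data between them. They can be used to implement synchronization and mutual exclusion without explicit locks.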

// Example 1: sync.Mutex
package main

import (
    "fmt"
    "sync"
)

var (
    counter int
    mu      sync.Mutex
)

func increment(wg *sync.WaitGroup) {
    defer wg.Done()
    mu.Lock()
    counter++
    mu.Unlock()
}

func main() {
    var wg sync.WaitGroup
    for i := 0; i < 1000; i++ {
        wg.Add(1)
        go increment(&wg)
    }
    wg.Wait()
    fmt.Println("Final Counter:", counter)
}

// Example 2: sync.RWMutex
package main

import (
    "fmt"
    "sync"
)

var (
    data int
    rwMu sync.RWMutex
)

func readData(wg *sync.WaitGroup) {
    defer wg.Done()
    rwMu.RLock()
    fmt.Println("Read:", data)
    rwMu.RUnlock()
}

func writeData(wg *sync.WaitGroup, value int) {
    defer wg.Done()
    rwMu.Lock()
    data = value
    rwMu.Unlock()
}

func main() {
    var wg sync.WaitGroup
    // Start several reader goroutines.
    for i := 0; i < 10; i++ {
        wg.Add(1)
        go readData(&wg)
    }
    // Start one writer goroutine.
    wg.Add(1)
    go writeData(&wg, 42)
    wg.Wait()
}

// Example 3: channels
package main

import (
    "fmt"
    "sync"
)

func worker(id int, jobs <-chan int, results chan<- int, wg *sync.WaitGroup) {
    defer wg.Done()
    for j := range jobs {
        fmt.Printf("Worker %d started job %d\n", id, j)
        results <- j * 2
    }
}

func main() {
    const numJobs = 5
    jobs := make(chan int, numJobs)
    results := make(chan int, numJobs)
    var wg sync.WaitGroup
    // Start 3 worker goroutines.
    for w := 1; w <= 3; w++ {
        wg.Add(1)
        go worker(w, jobs, results, &wg)
    }
    // Send 5 jobs to the jobs channel.
    for j := 1; j <= numJobs; j++ {
        jobs <- j
    }
    close(jobs)
    // Close results once every worker has finished.
    go func() {
        wg.Wait()
        close(results)
    }()
    // Print all results.
    for result := range results {
        fmt.Println("Result:", result)
    }
    // Sample output (the order varies between runs):
    // Worker 1 started job 1
    // Worker 1 started job 4
    // Worker 1 started job 5
    // Worker 2 started job 2
    // Result: 2
    // Result: 8
    // Worker 3 started job 3
    // Result: 10
    // Result: 4
    // Result: 6
}

14. How the mutex is implemented

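A mutex (sync.Mutex) synchronizes concurrent access to a shared resource: it ensures that only one goroutine at a time can access the protected data.

// From src/sync/mutex.go
type Mutex struct {
    state int32  // lock state plus several flag bits
    sema  uint32 // semaphore used to block and wake waiting goroutines
}

const (
    mutexLocked           = 1 << iota // the mutex is locked
    mutexWoken                        // a waiting goroutine has been woken
    mutexStarving                     // the mutex is in starvation mode
    mutexWaiterShift      = iota      // state >> mutexWaiterShift is the number of blocked waiters
    starvationThresholdNs = 1e6
)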

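15. Memory escape in Go

1) What is memory escape?

When a variable defined inside a function is still referenced after the function returns, the compiler allocates it on the heap instead of the stack. This is called memory escape.

Background on memory management:

Stack allocation and release are handled automatically by the compiler; they are fast, but stack space is limited;

Heap allocation and release involve the runtime; they are slower, but the space is large, and the memory is reclaimed by the GC.

2) Causes of memory escape

- The variable outlives its scope:

A variable declared inside a function that is still referenced after the function returns escapes. It is allocated on the heap so that it remains valid after the return.

- Referencing outer variables:

If a function references variables from an enclosing scope and the compiler cannot determine how long they live, they may be allocated on the heap.

- Closures:

In Go, a closure (function value) can capture outer variables, and the captured variables may need to live as long as the closure does, beyond the function that declared them. This causes an escape.

- Returning the address of a local variable:

When a function returns a pointer to a local variable, the variable escapes from the function.

- Large objects:

Stack space is limited, so an object too large for the stack may be allocated on the heap.

3) Detecting and avoiding memory escape

- Use escape analysis:

The Go compiler has escape analysis built in. Pass the -m flag through -gcflags, for example go build -gcflags="-m", to print the results at compile time, including which variables escape to the heap and why.

- Narrow variable scope:

Keep each variable's scope as small as possible so that it can be released as soon as it is no longer needed. This lowers the chance of an escape.

- Avoid global variables:

Global variables live until the program exits, and anything they reference has to stay on the heap. Avoid overusing them.

- Use closures carefully:

Avoid capturing outer variables in closures when it is not necessary. If a closure is needed, consider passing the required values as arguments instead of capturing them.

- Prefer value types:

In some cases, storing data as values rather than through pointers or interfaces reduces escapes. Values are usually allocated on the stack, and their lifetime is bounded by their scope.

- Avoid creating objects inside loops:

Creating objects inside a loop can cause many allocations and escapes. Preallocate the object outside the loop and reuse it inside.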

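// 1) A local variable escapes through the return value, for example a returned pointer, slice, or map
func createSlice() []int {
    var data []int
    for i := 0; i < 1000; i++ {
        data = append(data, i)
    }
    return data // data escapes to the heap
}

// 2) A closure captures an outer variable
func counter() func() int {
    count := 0
    return func() int {
        count++
        return count
    } // count escapes to the heap
}

// 3) Returning the address of a local variable
func escape() *int {
    x := 10
    return &x // x escapes to the heap
}

// 4) Starting a goroutine with the go keyword (requires import "fmt")
func main() {
    data := make([]int, 1000)
    go func() {
        fmt.Println(data[0]) // data escapes to the heap
    }()
}

// 5) Sending a pointer, or a value containing pointers, to a channel
func f1() {
    i := 2
    ch := make(chan *int, 2)
    ch <- &i // i escapes to the heap
    <-ch
}

// 6) Storing a value in an interface
// The compiler often cannot determine the concrete type and size behind an
// interface, so the value stored in it may be allocated on the heap.
type animal interface {
    run()
}

func f2() {
    var a animal
    _ = a // keeps the snippet compiling; a real example would assign a concrete value
}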

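16. How do you guarantee unique primary keys under high concurrency?

At the database level:

Primary key unique constraints, database transactions, and locks

At the application level:

UUIDs, the snowflake algorithm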
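A simplified sketch of a snowflake-style generator follows. The bit layout (timestamp, 10-bit node, 12-bit sequence), the custom epoch, and every name in the code are assumptions for illustration; production implementations differ in details such as how they react to clock rollback.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

const (
	nodeBits     = 10
	sequenceBits = 12
	maxSequence  = 1<<sequenceBits - 1
	epochMillis  = int64(1704067200000) // assumed custom epoch: 2024-01-01T00:00:00Z
)

// generator produces IDs laid out as timestamp | node | sequence.
type generator struct {
	mu       sync.Mutex
	node     int64
	lastMs   int64
	sequence int64
}

func newGenerator(node int64) (*generator, error) {
	if node < 0 || node >= 1<<nodeBits {
		return nil, fmt.Errorf("node %d out of range", node)
	}
	return &generator{node: node}, nil
}

// Next returns a new ID. IDs from one generator are strictly increasing.
func (g *generator) Next() int64 {
	g.mu.Lock()
	defer g.mu.Unlock()

	now := time.Now().UnixMilli()
	if now < g.lastMs {
		now = g.lastMs // clock went backwards: keep using the last timestamp
	}
	if now == g.lastMs {
		g.sequence = (g.sequence + 1) & maxSequence
		if g.sequence == 0 {
			now++ // sequence exhausted: move on to the next millisecond
		}
	} else {
		g.sequence = 0
	}
	g.lastMs = now
	return (now-epochMillis)<<(nodeBits+sequenceBits) | g.node<<sequenceBits | g.sequence
}

func main() {
	g, err := newGenerator(1)
	if err != nil {
		panic(err)
	}
	a, b := g.Next(), g.Next()
	fmt.Println(a < b) // true
}
```

Uniqueness across machines depends on every generator being given a distinct node number.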

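17. How the GMP scheduling model works

Go's scheduler is known as the GMP model. It schedules goroutines (Go's lightweight threads) onto the available operating system threads.

Components of the GMP model:

The model has three main components: G (goroutine), M (machine), and P (processor).

1. Goroutine (G)

- A goroutine is a basic Go concept, similar to a thread but much lighter. Goroutines are scheduled and managed by the Go runtime rather than by the operating system.

- Goroutines are cheap to start and cheap to switch between, because each has its own stack that grows and shrinks on demand.

2. Machine (M)

- An M represents a real operating system thread. Each M is scheduled by the operating system and has a fixed-size stack used for running C code.

- An M executes goroutine code. The runtime reuses Ms where it can to reduce the cost of creating and destroying threads.

3. Processor (P)

- A P is a runtime resource that can be seen as the context needed to run goroutines. The number of Ps is the upper bound on how many goroutines can execute Go code in parallel.

- Each P has a local run queue that holds goroutines waiting to run.

- The number of Ps usually equals the number of logical CPUs, so that all cores are used.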
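The number of Ps can be inspected through the runtime package, as this small sketch shows. The helper name schedulerInfo is made up; the printed numbers depend on the machine.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// schedulerInfo (hypothetical helper) returns the logical CPU count and the
// current GOMAXPROCS value, which is the number of Ps.
func schedulerInfo() (cpus, procs int) {
	// Passing 0 to GOMAXPROCS only queries the value without changing it.
	return runtime.NumCPU(), runtime.GOMAXPROCS(0)
}

func main() {
	cpus, procs := schedulerInfo()
	fmt.Println("logical CPUs:", cpus, "Ps:", procs)

	var wg sync.WaitGroup
	for i := 0; i < 100; i++ {
		wg.Add(1)
		go func() { defer wg.Done() }()
	}
	fmt.Println("goroutines right now:", runtime.NumGoroutine())
	wg.Wait()
}
```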

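Scheduling flow:

- A newly created goroutine is placed in the local queue of some P, where it waits to run.

- When an M finishes running a goroutine, it takes the next runnable goroutine from the local queue of the P it is bound to.

- If that local queue is empty, the M tries to take goroutines from the global queue or to "steal" them from another P's queue. This balances the load and keeps threads busy.

- If there are not enough active Ms to serve all Ps, the scheduler creates new Ms and binds them to Ps to make full use of the cores.

In one sentence: the runtime prepares G, M, and P; an M binds to a P, takes a G from the local or global queue, switches to that G's stack to run its function, calls goexit to clean up, returns to the M, and repeats.

Work stealing:

When the local queue of an M's P is empty, the M steals runnable goroutines from another P's queue instead of sitting idle, as described in the scheduling flow above.

Hand off:

Also called P detachment. When an M blocks because its G makes a system call, the thread releases the P it is bound to, and the P is handed to another idle M. This also improves thread utilization, since a blocked thread does not hold on to a P it cannot use.

Preemptive scheduling:

The runtime can interrupt a goroutine that has been running for too long so that others get a turn. Since Go 1.14 this preemption is asynchronous and signal-based.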

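18. Which concurrency models are commonly used in Go?

A concurrency model describes how the threads in a system cooperate to complete concurrent work. There are two: the shared-memory model and the CSP model.

Correspondingly, threads communicate in two ways: through shared memory or through message passing.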

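19. How sync.Cond is implemented

Cond is a synchronization mechanism that lets goroutines block until a particular condition holds and be woken when it does.

Data structures:

type Cond struct {
    noCopy  noCopy
    L       Locker
    notify  notifyList
    checker copyChecker
}

type notifyList struct {
    wait   uint32
    notify uint32
    lock   uintptr // key field of the mutex
    head   unsafe.Pointer
    tail   unsafe.Pointer
}

package main

import (
    "fmt"
    "sync"
    "time"
)

func main() {
    // Define a mutex.
    var mu sync.Mutex
    // Create a Cond that blocks goroutines and wakes them when a condition holds.
    c := sync.NewCond(&mu)

    // Goroutine 1: wait on the condition variable.
    go func() {
        // The lock must be held before calling Wait, because Wait unlocks it
        // first; calling Wait without holding the lock fails at run time.
        c.L.Lock()
        defer c.L.Unlock()
        c.Wait() // block until woken
        fmt.Println("Goroutine 1: condition met, continuing")
    }()

    // Goroutine 2: wait on the condition variable.
    go func() {
        c.L.Lock()
        defer c.L.Unlock()
        c.Wait()
        fmt.Println("Goroutine 2: condition met, continuing")
    }()

    time.Sleep(time.Second * 2)
    // Wake one goroutine; holding the lock is optional.
    // c.Signal()
    // Wake all waiting goroutines; holding the lock is optional.
    c.Broadcast()
    time.Sleep(time.Second * 2)
}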
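The example above relies on sleeps so that both goroutines are already waiting when Broadcast runs. A more robust pattern, sketched below, guards Wait with an explicit condition checked in a loop, so a wake-up that happens before Wait is not lost. The gate type and its methods are invented for this sketch.

```go
package main

import (
	"fmt"
	"sync"
)

// gate blocks callers of wait until open has been called.
type gate struct {
	mu     sync.Mutex
	cond   *sync.Cond
	opened bool
}

func newGate() *gate {
	g := &gate{}
	g.cond = sync.NewCond(&g.mu)
	return g
}

func (g *gate) wait() {
	g.mu.Lock()
	defer g.mu.Unlock()
	for !g.opened { // re-check the condition after every wake-up
		g.cond.Wait()
	}
}

func (g *gate) open() {
	g.mu.Lock()
	g.opened = true
	g.mu.Unlock()
	g.cond.Broadcast()
}

func main() {
	g := newGate()
	var wg sync.WaitGroup
	for i := 1; i <= 3; i++ {
		wg.Add(1)
		go func(id int) {
			defer wg.Done()
			g.wait()
			fmt.Println("goroutine", id, "passed the gate")
		}(i)
	}
	g.open()
	wg.Wait()
}
```

Because the state lives in opened, a goroutine that calls wait after open returns immediately instead of blocking forever.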

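20. Using maps and how they work internally

1) Initializing a map

a) Allocate with make

clients := make(map[string]string)    // no initial capacity hint
clients = make(map[string]string, 10) // initial capacity hint of 10
// Note: new alone only yields a pointer to a nil map; make is still required,
// because make initializes the internal hash table.

b) Declare and populate with a literal

clients := map[string]string{
    "test": "test",
    "yy":   "yy",
}

2) Reading from a map

// Form 1
name := clients["name"]

// Form 2
name, ok := clients["name"]
if ok {
    fmt.Println(name) // prints the stored value
} else {
    fmt.Println("Key not found")
}

3) Writing to a map

// Writing to an uninitialized map panics: assignment to entry in nil map
clients["name"] = "test"

4) Deleting from a map

// If the map is uninitialized or the key is absent, delete simply returns without error
delete(clients, "name")

5) Iterating over a map

// Two iterations over the same map may visit the keys in different orders
for k, v := range clients {
    fmt.Printf("Key=%s value=%s \n", k, v)
}

6) Underlying data structures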

// src/runtime/map.go

// A header for a Go map.
type hmap struct {
    // Note: the format of the hmap is also encoded in cmd/compile/internal/reflectdata/reflect.go.
    // Make sure this stays in sync with the compiler's definition.
    count     int // # live cells == size of map. Must be first (used by len() builtin)
    flags     uint8
    B         uint8  // log_2 of # of buckets (can hold up to loadFactor * 2^B items)
    noverflow uint16 // approximate number of overflow buckets; see incrnoverflow for details
    hash0     uint32 // hash seed

    buckets    unsafe.Pointer // array of 2^B Buckets. may be nil if count==0.
    oldbuckets unsafe.Pointer // previous bucket array of half the size, non-nil only when growing
    nevacuate  uintptr        // progress counter for evacuation (buckets less than this have been evacuated)

    extra *mapextra // optional fields
}

// mapextra holds fields that are not present on all maps.
type mapextra struct {
    // If both key and elem do not contain pointers and are inline, then we mark bucket
    // type as containing no pointers. This avoids scanning such maps.
    // However, bmap.overflow is a pointer. In order to keep overflow buckets
    // alive, we store pointers to all overflow buckets in hmap.extra.overflow and hmap.extra.oldoverflow.
    // overflow and oldoverflow are only used if key and elem do not contain pointers.
    // overflow contains overflow buckets for hmap.buckets.
    // oldoverflow contains overflow buckets for hmap.oldbuckets.
    // The indirection allows to store a pointer to the slice in hiter.
    overflow    *[]*bmap
    oldoverflow *[]*bmap

    // nextOverflow holds a pointer to a free overflow bucket.
    nextOverflow *bmap
}

// A bucket for a Go map.
type bmap struct {
    // tophash generally contains the top byte of the hash value
    // for each key in this bucket. If tophash[0] < minTopHash,
    // tophash[0] is a bucket evacuation state instead.
    tophash [bucketCnt]uint8
    // Followed by bucketCnt keys and then bucketCnt elems.
    // NOTE: packing all the keys together and then all the elems together makes the
    // code a bit more complicated than alternating key/elem/key/elem/... but it allows
    // us to eliminate padding which would be needed for, e.g., map[int64]int8.
    // Followed by an overflow pointer.
}

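7) How maps resolve hash collisions

- Separate chaining: entries whose hashes map to the same bucket are linked in a list. It is simple and needs no memory to be allocated in advance.

- Open addressing: when the bucket for a hash is full, a probing strategy looks for the next free slot. It needs no extra pointers to link elements, keeps memory contiguous, and makes good use of the CPU cache.

The hmap and bmap layout shown above combines the two: each bucket stores up to bucketCnt (8) key/value pairs in contiguous slots, and a full bucket links to overflow buckets.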

8) Reading from a map

func mapaccess1(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {
    if raceenabled && h != nil {
        callerpc := getcallerpc()
        pc := abi.FuncPCABIInternal(mapaccess1)
        racereadpc(unsafe.Pointer(h), callerpc, pc)
        raceReadObjectPC(t.Key, key, callerpc, pc)
    }
    if msanenabled && h != nil {
        msanread(key, t.Key.Size_)
    }
    if asanenabled && h != nil {
        asanread(key, t.Key.Size_)
    }
    // The map is nil or holds no entries: return the zero value.
    if h == nil || h.count == 0 {
        if err := mapKeyError(t, key); err != nil {
            panic(err) // see issue 23734
        }
        return unsafe.Pointer(&zeroVal[0])
    }
    // Another goroutine is writing to the map: abort.
    if h.flags&hashWriting != 0 {
        fatal("concurrent map read and map write")
    }
    hash := t.Hasher(key, uintptr(h.hash0))
    // m = 2^B - 1, so hash % 2^B is equivalent to hash & (2^B - 1).
    m := bucketMask(h.B)
    // Locate the bucket for the key: (hash&m)*uintptr(t.BucketSize) is the
    // bucket index times the bucket size, i.e. the byte offset of the bucket.
    b := (*bmap)(add(h.buckets, (hash&m)*uintptr(t.BucketSize)))
    // A non-nil oldbuckets means the map is in the middle of growing.
    if c := h.oldbuckets; c != nil {
        // Unless this is a same-size grow, the old array has half as many
        // buckets, so shift the mask right by one bit.
        if !h.sameSizeGrow() {
            // There used to be half as many buckets; mask down one more power of two.
            m >>= 1
        }
        oldb := (*bmap)(add(c, (hash&m)*uintptr(t.BucketSize)))
        // If the old bucket has not been evacuated yet, read from it.
        if !evacuated(oldb) {
            b = oldb
        }
    }
    top := tophash(hash)
bucketloop:
    // Outer loop: the bucket and its overflow chain.
    // Inner loop: the 8 (bucketCnt) slots of each bucket.
    for ; b != nil; b = b.overflow(t) {
        for i := uintptr(0); i < bucketCnt; i++ {
            if b.tophash[i] != top {
                if b.tophash[i] == emptyRest {
                    break bucketloop
                }
                continue
            }
            k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.KeySize))
            if t.IndirectKey() {
                k = *((*unsafe.Pointer)(k))
            }
            if t.Key.Equal(key, k) {
                e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.KeySize)+i*uintptr(t.ValueSize))
                if t.IndirectElem() {
                    e = *((*unsafe.Pointer)(e))
                }
                return e
            }
        }
    }
    return unsafe.Pointer(&zeroVal[0])
}

9) Writing to a map

// src/runtime/map.go
func mapassign(t *maptype, h *hmap, key unsafe.Pointer) unsafe.Pointer {
    if h == nil {
        panic(plainError("assignment to entry in nil map"))
    }
    if raceenabled {
        callerpc := getcallerpc()
        pc := abi.FuncPCABIInternal(mapassign)
        racewritepc(unsafe.Pointer(h), callerpc, pc)
        raceReadObjectPC(t.Key, key, callerpc, pc)
    }
    if msanenabled {
        msanread(key, t.Key.Size_)
    }
    if asanenabled {
        asanread(key, t.Key.Size_)
    }
    if h.flags&hashWriting != 0 {
        fatal("concurrent map writes")
    }
    hash := t.Hasher(key, uintptr(h.hash0))

    // Set hashWriting after calling t.hasher, since t.hasher may panic,
    // in which case we have not actually done a write.
    h.flags ^= hashWriting

    if h.buckets == nil {
        h.buckets = newobject(t.Bucket) // newarray(t.Bucket, 1)
    }

again:
    bucket := hash & bucketMask(h.B)
    if h.growing() {
        growWork(t, h, bucket)
    }
    b := (*bmap)(add(h.buckets, bucket*uintptr(t.BucketSize)))
    top := tophash(hash)

    var inserti *uint8
    var insertk unsafe.Pointer
    var elem unsafe.Pointer
bucketloop:
    for {
        for i := uintptr(0); i < bucketCnt; i++ {
            if b.tophash[i] != top {
                if isEmpty(b.tophash[i]) && inserti == nil {
                    inserti = &b.tophash[i]
                    insertk = add(unsafe.Pointer(b), dataOffset+i*uintptr(t.KeySize))
                    elem = add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.KeySize)+i*uintptr(t.ValueSize))
                }
                if b.tophash[i] == emptyRest {
                    break bucketloop
                }
                continue
            }
            k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.KeySize))
            if t.IndirectKey() {
                k = *((*unsafe.Pointer)(k))
            }
            if !t.Key.Equal(key, k) {
                continue
            }
            // already have a mapping for key. Update it.
            if t.NeedKeyUpdate() {
                typedmemmove(t.Key, k, key)
            }
            elem = add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.KeySize)+i*uintptr(t.ValueSize))
            goto done
        }
        ovf := b.overflow(t)
        if ovf == nil {
            break
        }
        b = ovf
    }

    // Did not find mapping for key. Allocate new cell & add entry.

    // If we hit the max load factor or we have too many overflow buckets,
    // and we're not already in the middle of growing, start growing.
    if !h.growing() && (overLoadFactor(h.count+1, h.B) || tooManyOverflowBuckets(h.noverflow, h.B)) {
        hashGrow(t, h)
        goto again // Growing the table invalidates everything, so try again
    }

    if inserti == nil {
        // The current bucket and all the overflow buckets connected to it are full, allocate a new one.
        newb := h.newoverflow(t, b)
        inserti = &newb.tophash[0]
        insertk = add(unsafe.Pointer(newb), dataOffset)
        elem = add(insertk, bucketCnt*uintptr(t.KeySize))
    }

    // store new key/elem at insert position
    if t.IndirectKey() {
        kmem := newobject(t.Key)
        *(*unsafe.Pointer)(insertk) = kmem
        insertk = kmem
    }
    if t.IndirectElem() {
        vmem := newobject(t.Elem)
        *(*unsafe.Pointer)(elem) = vmem
    }
    typedmemmove(t.Key, insertk, key)
    *inserti = top
    h.count++

done:
    if h.flags&hashWriting == 0 {
        fatal("concurrent map writes")
    }
    h.flags &^= hashWriting
    if t.IndirectElem() {
        elem = *((*unsafe.Pointer)(elem))
    }
    return elem
}

Note: there are two kinds of growth. Incremental growth doubles the bucket array when the number of key/value pairs divided by the number of buckets exceeds 6.5 (overLoadFactor). Same-size growth keeps the bucket count and is triggered when there are too many overflow buckets (tooManyOverflowBuckets), roughly as many as there are regular buckets.

Maps grow incrementally: each write operation migrates part of the old data into the new buckets.
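The load-factor condition can be checked with a little arithmetic. The sketch below is modeled on the overLoadFactor call in the mapassign listing above; the constants (8 slots per bucket, a load factor of 6.5 written as 13/2) are assumptions based on that version of the runtime, and this is a standalone re-implementation rather than the runtime's own code.

```go
package main

import "fmt"

const (
	bucketCnt     = 8  // slots per bucket
	loadFactorNum = 13 // load factor 6.5 expressed as 13/2
	loadFactorDen = 2
)

// overLoadFactor reports whether count entries are too many for 2^B buckets:
// count must exceed one bucket's worth and also exceed 6.5 * 2^B.
func overLoadFactor(count int, B uint8) bool {
	return count > bucketCnt && uint64(count) > loadFactorNum*((uint64(1)<<B)/loadFactorDen)
}

func main() {
	// With B = 3 there are 8 buckets, so the threshold is 6.5 * 8 = 52 entries.
	fmt.Println(overLoadFactor(52, 3)) // false
	fmt.Println(overLoadFactor(53, 3)) // true

	// A single bucket (B = 0) holds up to 8 entries before growth starts.
	fmt.Println(overLoadFactor(8, 0), overLoadFactor(9, 0)) // false true
}
```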

10) Deleting from a map

// src/runtime/map.go
func mapdelete(t *maptype, h *hmap, key unsafe.Pointer) {
    if raceenabled && h != nil {
        callerpc := getcallerpc()
        pc := abi.FuncPCABIInternal(mapdelete)
        racewritepc(unsafe.Pointer(h), callerpc, pc)
        raceReadObjectPC(t.Key, key, callerpc, pc)
    }
    if msanenabled && h != nil {
        msanread(key, t.Key.Size_)
    }
    if asanenabled && h != nil {
        asanread(key, t.Key.Size_)
    }
    if h == nil || h.count == 0 {
        if err := mapKeyError(t, key); err != nil {
            panic(err) // see issue 23734
        }
        return
    }
    if h.flags&hashWriting != 0 {
        fatal("concurrent map writes")
    }

    hash := t.Hasher(key, uintptr(h.hash0))

    // Set hashWriting after calling t.hasher, since t.hasher may panic,
    // in which case we have not actually done a write (delete).
    h.flags ^= hashWriting

    bucket := hash & bucketMask(h.B)
    if h.growing() {
        growWork(t, h, bucket)
    }
    b := (*bmap)(add(h.buckets, bucket*uintptr(t.BucketSize)))
    bOrig := b
    top := tophash(hash)
search:
    for ; b != nil; b = b.overflow(t) {
        for i := uintptr(0); i < bucketCnt; i++ {
            if b.tophash[i] != top {
                if b.tophash[i] == emptyRest {
                    break search
                }
                continue
            }
            k := add(unsafe.Pointer(b), dataOffset+i*uintptr(t.KeySize))
            k2 := k
            if t.IndirectKey() {
                k2 = *((*unsafe.Pointer)(k2))
            }
            if !t.Key.Equal(key, k2) {
                continue
            }
            // Only clear key if there are pointers in it.
            if t.IndirectKey() {
                *(*unsafe.Pointer)(k) = nil
            } else if t.Key.PtrBytes != 0 {
                memclrHasPointers(k, t.Key.Size_)
            }
            e := add(unsafe.Pointer(b), dataOffset+bucketCnt*uintptr(t.KeySize)+i*uintptr(t.ValueSize))
            if t.IndirectElem() {
                *(*unsafe.Pointer)(e) = nil
            } else if t.Elem.PtrBytes != 0 {
                memclrHasPointers(e, t.Elem.Size_)
            } else {
                memclrNoHeapPointers(e, t.Elem.Size_)
            }
            b.tophash[i] = emptyOne
            // If the bucket now ends in a bunch of emptyOne states,
            // change those to emptyRest states.
            // It would be nice to make this a separate function, but
            // for loops are not currently inlineable.
            if i == bucketCnt-1 {
                if b.overflow(t) != nil && b.overflow(t).tophash[0] != emptyRest {
                    goto notLast
                }
            } else {
                if b.tophash[i+1] != emptyRest {
                    goto notLast
                }
            }
            for {
                b.tophash[i] = emptyRest
                if i == 0 {
                    if b == bOrig {
                        break // beginning of initial bucket, we're done.
                    }
                    // Find previous bucket, continue at its last entry.
                    c := b
                    for b = bOrig; b.overflow(t) != c; b = b.overflow(t) {
                    }
                    i = bucketCnt - 1
                } else {
                    i--
                }
                if b.tophash[i] != emptyOne {
                    break
                }
            }
        notLast:
            h.count--
            // Reset the hash seed to make it more difficult for attackers to
            // repeatedly trigger hash collisions. See issue 25237.
            if h.count == 0 {
                h.hash0 = uint32(rand())
            }
            break search
        }
    }

    if h.flags&hashWriting == 0 {
        fatal("concurrent map writes")
    }
    h.flags &^= hashWriting
}

11) Iterating over a map

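Why two iterations may visit the keys in different orders:

a) the starting bucket and the starting slot offset within each bucket are chosen at random

b) whether the map is in the middle of growing also affects the iteration order

12) Map growth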
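When a stable order is needed, a common approach is to collect the keys, sort them, and iterate over the sorted keys. The helper sortedKeys is a made-up name for this sketch.

```go
package main

import (
	"fmt"
	"sort"
)

// sortedKeys (hypothetical helper) returns the keys of m in ascending order.
func sortedKeys(m map[string]string) []string {
	keys := make([]string, 0, len(m))
	for k := range m {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	return keys
}

func main() {
	clients := map[string]string{"yy": "66", "name": "John", "test": "test"}
	for _, k := range sortedKeys(clients) {
		fmt.Printf("Key=%s value=%s\n", k, clients[k])
	}
	// Output:
	// Key=name value=John
	// Key=test value=test
	// Key=yy value=66
}
```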

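21. Properties of hashing

A hash function compresses input of any length into output of a fixed length. Its properties:

1) Repeatability: the same input always produces the same output

2) Dispersion: outputs are spread evenly, and a small change in the input changes the output substantially

3) One-wayness: the input cannot practically be recovered from the output

4) Collisions: different inputs can produce the same output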
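The first two properties and the fixed output length can be observed with SHA-256 from the standard library. The helper digest is invented for this sketch; SHA-256 is just one example of a hash function.

```go
package main

import (
	"crypto/sha256"
	"encoding/hex"
	"fmt"
)

// digest (hypothetical helper) returns the SHA-256 hash of s as a hex string.
func digest(s string) string {
	sum := sha256.Sum256([]byte(s))
	return hex.EncodeToString(sum[:])
}

func main() {
	// Repeatability: the same input always gives the same output.
	fmt.Println(digest("go") == digest("go")) // true

	// Fixed length: 32 bytes, i.e. 64 hex characters, whatever the input size.
	fmt.Println(len(digest("")), len(digest("a much longer input string"))) // 64 64

	// Dispersion: a one-character change gives a different digest.
	fmt.Println(digest("go") == digest("Go")) // false
}
```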

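22. Drawbacks of coroutines

1) A coroutine model that runs on a single thread cannot use multiple cores. Go's goroutines are multiplexed across threads by the GMP scheduler described above, so this limitation applies to coroutines in general rather than to Go.

2) Each goroutine's stack is bounded: it starts small and grows on demand, but only up to a maximum size

3) Deadlocks and resource contention are still possible

4) Concurrent execution makes debugging harder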

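23. How slices work internally

1) Initializing a slice

// Initializing a slice
// 1. With make: make([]int, len) or make([]int, len, cap)
c := make([]int, 2, 4)

// 2. With a literal
d := []int{1, 2, 3}

Note: for make([]int, len, cap), memory has been allocated for the range [len, cap), but logically no elements exist there. Indexing into that range panics (panic: runtime error: index out of range [2] with length 2).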

2) Underlying data structure

type slice struct {
    array unsafe.Pointer // start address of the backing array
    len   int            // length
    cap   int            // capacity
}

// src/runtime/slice.go
// Creating a slice
func makeslice(et *_type, len, cap int) unsafe.Pointer {
    mem, overflow := math.MulUintptr(et.Size_, uintptr(cap))
    if overflow || mem > maxAlloc || len < 0 || len > cap {
        // NOTE: Produce a 'len out of range' error instead of a
        // 'cap out of range' error when someone does make([]T, bignumber).
        // 'cap out of range' is true too, but since the cap is only being
        // supplied implicitly, saying len is clearer.
        // See golang.org/issue/4085.
        mem, overflow := math.MulUintptr(et.Size_, uintptr(len))
        if overflow || mem > maxAlloc || len < 0 {
            panicmakeslicelen()
        }
        panicmakeslicecap()
    }

    return mallocgc(mem, et, true)
}

3) Slice growth

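package main

import "fmt"

func main() {
    s := []int{1, 2, 3, 4}
    fmt.Printf("original contents: %v length: %d capacity: %d address of first element: %p \n", s, len(s), cap(s), &s[0])
    // Appending beyond the capacity makes the slice grow.
    s = append(s, 5)
    fmt.Printf("after growth contents: %v length: %d capacity: %d address of first element: %p \n", s, len(s), cap(s), &s[0])
    // Sample output (the addresses differ between runs):
    // original contents: [1 2 3 4] length: 4 capacity: 4 address of first element: 0xc000016160
    // after growth contents: [1 2 3 4 5] length: 5 capacity: 8 address of first element: 0xc000010380
}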

// src/runtime/slice.go
// Growing a slice
func growslice(oldPtr unsafe.Pointer, newLen, oldCap, num int, et *_type) slice {
    oldLen := newLen - num
    if raceenabled {
        callerpc := getcallerpc()
        racereadrangepc(oldPtr, uintptr(oldLen*int(et.Size_)), callerpc, abi.FuncPCABIInternal(growslice))
    }
    if msanenabled {
        msanread(oldPtr, uintptr(oldLen*int(et.Size_)))
    }
    if asanenabled {
        asanread(oldPtr, uintptr(oldLen*int(et.Size_)))
    }

    if newLen < 0 {
        panic(errorString("growslice: len out of range"))
    }

    if et.Size_ == 0 {
        // append should not create a slice with nil pointer but non-zero len.
        // We assume that append doesn't need to preserve oldPtr in this case.
        return slice{unsafe.Pointer(&zerobase), newLen, newLen}
    }

    newcap := nextslicecap(newLen, oldCap)

    var overflow bool
    var lenmem, newlenmem, capmem uintptr
    // Specialize for common values of et.Size.
    // For 1 we don't need any division/multiplication.
    // For goarch.PtrSize, compiler will optimize division/multiplication into a shift by a constant.
    // For powers of 2, use a variable shift.
    noscan := et.PtrBytes == 0
    switch {
    case et.Size_ == 1:
        lenmem = uintptr(oldLen)
        newlenmem = uintptr(newLen)
        capmem = roundupsize(uintptr(newcap), noscan)
        overflow = uintptr(newcap) > maxAlloc
        newcap = int(capmem)
    case et.Size_ == goarch.PtrSize:
        lenmem = uintptr(oldLen) * goarch.PtrSize
        newlenmem = uintptr(newLen) * goarch.PtrSize
        capmem = roundupsize(uintptr(newcap)*goarch.PtrSize, noscan)
        overflow = uintptr(newcap) > maxAlloc/goarch.PtrSize
        newcap = int(capmem / goarch.PtrSize)
    case isPowerOfTwo(et.Size_):
        var shift uintptr
        if goarch.PtrSize == 8 {
            // Mask shift for better code generation.
            shift = uintptr(sys.TrailingZeros64(uint64(et.Size_))) & 63
        } else {
            shift = uintptr(sys.TrailingZeros32(uint32(et.Size_))) & 31
        }
        lenmem = uintptr(oldLen) << shift
        newlenmem = uintptr(newLen) << shift
        capmem = roundupsize(uintptr(newcap)<<shift, noscan)
        overflow = uintptr(newcap) > (maxAlloc >> shift)
        newcap = int(capmem >> shift)
        capmem = uintptr(newcap) << shift
    default:
        lenmem = uintptr(oldLen) * et.Size_
        newlenmem = uintptr(newLen) * et.Size_
        capmem, overflow = math.MulUintptr(et.Size_, uintptr(newcap))
        capmem = roundupsize(capmem, noscan)
        newcap = int(capmem / et.Size_)
        capmem = uintptr(newcap) * et.Size_
    }

    // The check of overflow in addition to capmem > maxAlloc is needed
    // to prevent an overflow which can be used to trigger a segfault
    // on 32bit architectures with this example program:
    //
    // type T [1<<27 + 1]int64
    //
    // var d T
    // var s []T
    //
    // func main() {
    //   s = append(s, d, d, d, d)
    //   print(len(s), "\n")
    // }
    if overflow || capmem > maxAlloc {
        panic(errorString("growslice: len out of range"))
    }

    var p unsafe.Pointer
    if et.PtrBytes == 0 {
        p = mallocgc(capmem, nil, false)
        // The append() that calls growslice is going to overwrite from oldLen to newLen.
        // Only clear the part that will not be overwritten.
        // The reflect_growslice() that calls growslice will manually clear
        // the region not cleared here.
        memclrNoHeapPointers(add(p, newlenmem), capmem-newlenmem)
    } else {
        // Note: can't use rawmem (which avoids zeroing of memory), because then GC can scan uninitialized memory.
        p = mallocgc(capmem, et, true)
        if lenmem > 0 && writeBarrier.enabled {
            // Only shade the pointers in oldPtr since we know the destination slice p
            // only contains nil pointers because it has been cleared during alloc.
            //
            // It's safe to pass a type to this function as an optimization because
            // from and to only ever refer to memory representing whole values of
            // type et. See the comment on bulkBarrierPreWrite.
            bulkBarrierPreWriteSrcOnly(uintptr(p), uintptr(oldPtr), lenmem-et.Size_+et.PtrBytes, et)
        }
    }
    memmove(p, oldPtr, lenmem)

    return slice{p, newLen, newcap}
}

// nextslicecap computes the next appropriate slice length.
func nextslicecap(newLen, oldCap int) int {
    newcap := oldCap
    doublecap := newcap + newcap
    if newLen > doublecap {
        return newLen
    }

    const threshold = 256
    if oldCap < threshold {
        return doublecap
    }
    for {
        // Transition from growing 2x for small slices
        // to growing 1.25x for large slices. This formula
        // gives a smooth-ish transition between the two.
        newcap += (newcap + 3*threshold) >> 2

        // We need to check `newcap >= newLen` and whether `newcap` overflowed.
        // newLen is guaranteed to be larger than zero, hence
        // when newcap overflows then `uint(newcap) > uint(newLen)`.
        // This allows to check for both with the same comparison.
        if uint(newcap) >= uint(newLen) {
            break
        }
    }

    // Set newcap to the requested cap when
    // the newcap calculation overflowed.
    if newcap <= 0 {
        return newLen
    }
    return newcap
}

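24. How context is implemented

context is mainly used in asynchronous code to coordinate concurrent work and to control the lifetime of goroutines. In addition, a context can carry a small amount of data.

1) Data structure

// src/context/context.go
type Context interface {
    // Deadline returns the time at which ctx expires.
    Deadline() (deadline time.Time, ok bool)
    // Done returns a channel that is closed when ctx is finished.
    Done() <-chan struct{}
    // Err returns the error of ctx.
    Err() error
    // Value returns the value stored in ctx for key.
    Value(key any) any
}

2) emptyCtx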

type emptyCtx struct{}

func (emptyCtx) Deadline() (deadline time.Time, ok bool) {
    return
}

func (emptyCtx) Done() <-chan struct{} {
    return nil
}

func (emptyCtx) Err() error {
    return nil
}

func (emptyCtx) Value(key any) any {
    return nil
}

type backgroundCtx struct{ emptyCtx }

func (backgroundCtx) String() string {
    return "context.Background"
}

type todoCtx struct{ emptyCtx }

func (todoCtx) String() string {
    return "context.TODO"
}

3) cancelCtx

type cancelCtx struct {
    Context

    mu       sync.Mutex            // protects following fields
    done     atomic.Value          // of chan struct{}, created lazily, closed by first cancel call
    children map[canceler]struct{} // set to nil by the first cancel call
    err      error                 // set to non-nil by the first cancel call
    cause    error                 // set to non-nil by the first cancel call
}

type canceler interface {
    cancel(removeFromParent bool, err, cause error)
    Done() <-chan struct{}
}

func withCancel(parent Context) *cancelCtx {
    if parent == nil {
        panic("cannot create context from nil parent")
    }
    c := &cancelCtx{}
    c.propagateCancel(parent, c)
    return c
}

func (c *cancelCtx) propagateCancel(parent Context, child canceler) {
    c.Context = parent

    done := parent.Done()
    if done == nil {
        return // parent is never canceled
    }

    select {
    case <-done:
        // parent is already canceled
        child.cancel(false, parent.Err(), Cause(parent))
        return
    default:
    }

    if p, ok := parentCancelCtx(parent); ok {
        // parent is a *cancelCtx, or derives from one.
        p.mu.Lock()
        if p.err != nil {
            // parent has already been canceled
            child.cancel(false, p.err, p.cause)
        } else {
            if p.children == nil {
                p.children = make(map[canceler]struct{})
            }
            p.children[child] = struct{}{}
        }
        p.mu.Unlock()
        return
    }

    if a, ok := parent.(afterFuncer); ok {
        // parent implements an AfterFunc method.
        c.mu.Lock()
        stop := a.AfterFunc(func() {
            child.cancel(false, parent.Err(), Cause(parent))
        })
        c.Context = stopCtx{
            Context: parent,
            stop:    stop,
        }
        c.mu.Unlock()
        return
    }

    goroutines.Add(1)
    go func() {
        select {
        case <-parent.Done():
            child.cancel(false, parent.Err(), Cause(parent))
        case <-child.Done():
        }
    }()
}

4) timerCtx

type timerCtx struct {
	cancelCtx
	timer *time.Timer // Under cancelCtx.mu.

	deadline time.Time
}

func (c *timerCtx) cancel(removeFromParent bool, err, cause error) {
	c.cancelCtx.cancel(false, err, cause)
	if removeFromParent {
		// Remove this timerCtx from its parent cancelCtx's children.
		removeChild(c.cancelCtx.Context, c)
	}
	c.mu.Lock()
	if c.timer != nil {
		c.timer.Stop()
		c.timer = nil
	}
	c.mu.Unlock()
}

func WithDeadlineCause(parent Context, d time.Time, cause error) (Context, CancelFunc) {
	if parent == nil {
		panic("cannot create context from nil parent")
	}
	if cur, ok := parent.Deadline(); ok && cur.Before(d) {
		// The current deadline is already sooner than the new one.
		return WithCancel(parent)
	}
	c := &timerCtx{
		deadline: d,
	}
	c.cancelCtx.propagateCancel(parent, c)
	dur := time.Until(d)
	if dur <= 0 {
		c.cancel(true, DeadlineExceeded, cause) // deadline has already passed
		return c, func() { c.cancel(false, Canceled, nil) }
	}
	c.mu.Lock()
	defer c.mu.Unlock()
	if c.err == nil {
		c.timer = time.AfterFunc(dur, func() {
			c.cancel(true, DeadlineExceeded, cause)
		})
	}
	return c, func() { c.cancel(true, Canceled, nil) }
}

5. valueCtx

type valueCtx struct {
	// Parent ctx.
	Context
	// The key-value pair stored in this ctx. Note that each valueCtx holds exactly one pair.
	key, val any
}

func (c *valueCtx) Value(key any) any {
	if c.key == key {
		return c.val
	}
	return value(c.Context, key)
}

func value(c Context, key any) any {
	for {
		switch ctx := c.(type) {
		case *valueCtx:
			if key == ctx.key {
				return ctx.val
			}
			c = ctx.Context
		case *cancelCtx:
			if key == &cancelCtxKey {
				return c
			}
			c = ctx.Context
		case withoutCancelCtx:
			if key == &cancelCtxKey {
				// This implements Cause(ctx) == nil
				// when ctx is created using WithoutCancel.
				return nil
			}
			c = ctx.c
		case *timerCtx:
			if key == &cancelCtxKey {
				return &ctx.cancelCtx
			}
			c = ctx.Context
		case backgroundCtx, todoCtx:
			return nil
		default:
			return c.Value(key)
		}
	}
}
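
The lookup loop above walks from the newest context toward the root and stops at the first matching key. A minimal sketch of that behavior using only the public context API follows; the key type ctxKey and the helpers lookup and buildChain are hypothetical names introduced for illustration.

```go
package main

import (
	"context"
	"fmt"
)

// ctxKey is a hypothetical private key type; a dedicated type avoids
// collisions with keys defined by other packages.
type ctxKey string

// lookup returns the string stored under key, or "" if no node in the chain has it.
func lookup(ctx context.Context, key ctxKey) string {
	v, _ := ctx.Value(key).(string)
	return v
}

// buildChain stacks three value contexts on top of context.Background.
// Each WithValue call adds one node that holds exactly one key-value pair.
func buildChain() context.Context {
	ctx := context.WithValue(context.Background(), ctxKey("user"), "alice")
	ctx = context.WithValue(ctx, ctxKey("trace"), "t-1")
	// Same key again: the newer node is reached first and shadows the older one.
	ctx = context.WithValue(ctx, ctxKey("user"), "bob")
	return ctx
}

func main() {
	ctx := buildChain()
	fmt.Println(lookup(ctx, "user"))          // bob
	fmt.Println(lookup(ctx, "trace"))         // t-1
	fmt.Println(lookup(ctx, "missing") == "") // true
}
```

Because every lookup is a linear walk over the chain, a context is better suited to a handful of request-scoped values than to use as a general-purpose map.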

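The cancelCtx and timerCtx code shown earlier has three effects that can be observed from outside the package: canceling a parent cancels its children, a deadline in the past expires immediately, and a child never outlives an earlier parent deadline. The sketch below checks each one through the public API; the function names are hypothetical.

```go
package main

import (
	"context"
	"errors"
	"fmt"
	"time"
)

// childCanceledWithParent cancels the parent and reports whether the child,
// which propagateCancel registered in the parent's children set, ended with Canceled.
func childCanceledWithParent() bool {
	parent, cancelParent := context.WithCancel(context.Background())
	child, cancelChild := context.WithCancel(parent)
	defer cancelChild()

	cancelParent()
	<-child.Done()
	return errors.Is(child.Err(), context.Canceled)
}

// pastDeadlineExpiresAtOnce uses a deadline that has already passed,
// which takes the dur <= 0 branch and cancels without starting a timer.
func pastDeadlineExpiresAtOnce() bool {
	ctx, cancel := context.WithDeadline(context.Background(), time.Now().Add(-time.Second))
	defer cancel()
	<-ctx.Done()
	return errors.Is(ctx.Err(), context.DeadlineExceeded)
}

// childKeepsEarlierParentDeadline asks for a later deadline than the parent has;
// the child then simply inherits the parent's deadline.
func childKeepsEarlierParentDeadline() bool {
	parent, cancelParent := context.WithTimeout(context.Background(), time.Minute)
	defer cancelParent()
	child, cancelChild := context.WithTimeout(parent, time.Hour)
	defer cancelChild()

	pd, _ := parent.Deadline()
	cd, _ := child.Deadline()
	return pd.Equal(cd)
}

func main() {
	fmt.Println(childCanceledWithParent())
	fmt.Println(pastDeadlineExpiresAtOnce())
	fmt.Println(childKeepsEarlierParentDeadline())
}
```
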
XXV. How HTTP Is Implemented

1. How to use http

package main

import "net/http"

func main() {
	// Register the route and its handler function.
	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		w.Write([]byte("Hello World!"))
	})
	// Start listening on port 8080.
	http.ListenAndServe(":8080", nil)
}
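
Passing nil as the handler makes the server fall back to http.DefaultServeMux, which is where http.HandleFunc registers its routes. The sketch below shows that link without opening a port, by sending an in-memory request straight to http.DefaultServeMux. The path /hello and the helper names are assumptions made for this example.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"sync"
)

var registerOnce sync.Once

// registerHello registers the handler on http.DefaultServeMux exactly once;
// registering the same pattern a second time would panic.
func registerHello() {
	registerOnce.Do(func() {
		http.HandleFunc("/hello", func(w http.ResponseWriter, r *http.Request) {
			w.Write([]byte("Hello World!"))
		})
	})
}

// callDefault sends an in-memory GET request to http.DefaultServeMux
// and returns the recorded status code and body.
func callDefault(path string) (int, string) {
	registerHello()
	rec := httptest.NewRecorder()
	http.DefaultServeMux.ServeHTTP(rec, httptest.NewRequest("GET", path, nil))
	return rec.Code, rec.Body.String()
}

func main() {
	code, body := callDefault("/hello")
	fmt.Println(code, body) // 200 Hello World!
}
```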

2. Server-side data structures

// src/net/http/server.go
type Server struct {
	// Server address.
	Addr string
	// Route handler.
	Handler Handler // handler to invoke, http.DefaultServeMux if nil

	DisableGeneralOptionsHandler bool
	TLSConfig                    *tls.Config
	ReadTimeout                  time.Duration
	ReadHeaderTimeout            time.Duration
	WriteTimeout                 time.Duration
	IdleTimeout                  time.Duration
	MaxHeaderBytes               int
	TLSNextProto                 map[string]func(*Server, *tls.Conn, Handler)
	ConnState                    func(net.Conn, ConnState)
	ErrorLog                     *log.Logger
	BaseContext                  func(net.Listener) context.Context
	ConnContext                  func(ctx context.Context, c net.Conn) context.Context

	inShutdown        atomic.Bool // true when server is in shutdown
	disableKeepAlives atomic.Bool
	nextProtoOnce     sync.Once // guards setupHTTP2_* init
	nextProtoErr      error     // result of http2.ConfigureServer if used

	mu            sync.Mutex
	listeners     map[*net.Listener]struct{}
	activeConn    map[*conn]struct{}
	onShutdown    []func()
	listenerGroup sync.WaitGroup
}

type Handler interface {
	ServeHTTP(ResponseWriter, *Request)
}

// ServeMux is a concrete implementation of Handler. It maintains the mapping from
// patterns to handlers: a routing tree (tree) plus an index in the version shown here,
// while the older map-based implementation is kept as mux121.
type ServeMux struct {
	mu       sync.RWMutex
	tree     routingNode
	index    routingIndex
	patterns []*pattern  // TODO(jba): remove if possible
	mux121   serveMux121 // used only when GODEBUG=httpmuxgo121=1
}

Handler is the most important field of Server: it provides the ability to register handlers and to map a request path to the concrete handler function.
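
Since Handler is a one-method interface, anything with a ServeHTTP method can be plugged into a server, and *http.ServeMux is itself such a value. A minimal sketch, assuming a hypothetical greeter type and illustrative paths /greet and /ping:

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// greeter is a hypothetical type; having a ServeHTTP method
// is all it takes to satisfy http.Handler.
type greeter struct{ name string }

func (g greeter) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintf(w, "hello, %s", g.name)
}

// newRouter registers a struct handler and a plain function on a private ServeMux.
// HandleFunc wraps the function in http.HandlerFunc, which adapts it to Handler.
func newRouter() *http.ServeMux {
	mux := http.NewServeMux()
	mux.Handle("/greet", greeter{name: "gopher"})
	mux.HandleFunc("/ping", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprint(w, "pong")
	})
	return mux
}

// respond runs one in-memory GET request through h and returns the body.
func respond(h http.Handler, path string) string {
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, httptest.NewRequest("GET", path, nil))
	return rec.Body.String()
}

func main() {
	mux := newRouter()
	fmt.Println(respond(mux, "/greet")) // hello, gopher
	fmt.Println(respond(mux, "/ping"))  // pong
}
```

The same mux could be assigned to the Handler field of a Server instead of relying on the default one.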

3. Registering a handler

func HandleFunc(pattern string, handler func(ResponseWriter, *Request)) {
	if use121 {
		DefaultServeMux.mux121.handleFunc(pattern, handler)
	} else {
		DefaultServeMux.register(pattern, HandlerFunc(handler))
	}
}

func (mux *ServeMux) register(pattern string, handler Handler) {
	if err := mux.registerErr(pattern, handler); err != nil {
		panic(err)
	}
}

func (mux *ServeMux) registerErr(patstr string, handler Handler) error {
	if patstr == "" {
		return errors.New("http: invalid pattern")
	}
	if handler == nil {
		return errors.New("http: nil handler")
	}
	if f, ok := handler.(HandlerFunc); ok && f == nil {
		return errors.New("http: nil handler")
	}

	pat, err := parsePattern(patstr)
	if err != nil {
		return fmt.Errorf("parsing %q: %w", patstr, err)
	}

	// Get the caller's location, for better conflict error messages.
	// Skip register and whatever calls it.
	_, file, line, ok := runtime.Caller(3)
	if !ok {
		pat.loc = "unknown location"
	} else {
		pat.loc = fmt.Sprintf("%s:%d", file, line)
	}

	mux.mu.Lock()
	defer mux.mu.Unlock()
	// Check for conflict.
	if err := mux.index.possiblyConflictingPatterns(pat, func(pat2 *pattern) error {
		if pat.conflictsWith(pat2) {
			d := describeConflict(pat, pat2)
			return fmt.Errorf("pattern %q (registered at %s) conflicts with pattern %q (registered at %s):\n%s",
				pat, pat.loc, pat2, pat2.loc, d)
		}
		return nil
	}); err != nil {
		return err
	}
	mux.tree.addPattern(pat, handler)
	mux.index.addPattern(pat)
	mux.patterns = append(mux.patterns, pat)
	return nil
}

4. Starting the server

// src/net/http/server.go
func ListenAndServe(addr string, handler Handler) error {
	server := &Server{Addr: addr, Handler: handler}
	return server.ListenAndServe()
}

func (srv *Server) ListenAndServe() error {
	if srv.shuttingDown() {
		return ErrServerClosed
	}
	addr := srv.Addr
	if addr == "" {
		addr = ":http"
	}
	ln, err := net.Listen("tcp", addr)
	if err != nil {
		return err
	}
	return srv.Serve(ln)
}

func (srv *Server) Serve(l net.Listener) error {
	if fn := testHookServerServe; fn != nil {
		fn(srv, l) // call hook with unwrapped listener
	}

	origListener := l
	l = &onceCloseListener{Listener: l}
	defer l.Close()

	if err := srv.setupHTTP2_Serve(); err != nil {
		return err
	}

	if !srv.trackListener(&l, true) {
		return ErrServerClosed
	}
	defer srv.trackListener(&l, false)

	baseCtx := context.Background()
	if srv.BaseContext != nil {
		baseCtx = srv.BaseContext(origListener)
		if baseCtx == nil {
			panic("BaseContext returned a nil context")
		}
	}

	var tempDelay time.Duration // how long to sleep on accept failure

	ctx := context.WithValue(baseCtx, ServerContextKey, srv)
	for {
		rw, err := l.Accept()
		if err != nil {
			if srv.shuttingDown() {
				return ErrServerClosed
			}
			if ne, ok := err.(net.Error); ok && ne.Temporary() {
				if tempDelay == 0 {
					tempDelay = 5 * time.Millisecond
				} else {
					tempDelay *= 2
				}
				if max := 1 * time.Second; tempDelay > max {
					tempDelay = max
				}
				srv.logf("http: Accept error: %v; retrying in %v", err, tempDelay)
				time.Sleep(tempDelay)
				continue
			}
			return err
		}
		connCtx := ctx
		if cc := srv.ConnContext; cc != nil {
			connCtx = cc(connCtx, rw)
			if connCtx == nil {
				panic("ConnContext returned nil")
			}
		}
		tempDelay = 0
		c := srv.newConn(rw)
		c.setState(c.rwc, StateNew, runHooks) // before Serve can return
		go c.serve(connCtx)
	}
}

func (c *conn) serve(ctx context.Context) {
	if ra := c.rwc.RemoteAddr(); ra != nil {
		c.remoteAddr = ra.String()
	}
	ctx = context.WithValue(ctx, LocalAddrContextKey, c.rwc.LocalAddr())
	var inFlightResponse *response
	defer func() {
		if err := recover(); err != nil && err != ErrAbortHandler {
			const size = 64 << 10
			buf := make([]byte, size)
			buf = buf[:runtime.Stack(buf, false)]
			c.server.logf("http: panic serving %v: %v\n%s", c.remoteAddr, err, buf)
		}
		if inFlightResponse != nil {
			inFlightResponse.cancelCtx()
		}
		if !c.hijacked() {
			if inFlightResponse != nil {
				inFlightResponse.conn.r.abortPendingRead()
				inFlightResponse.reqBody.Close()
			}
			c.close()
			c.setState(c.rwc, StateClosed, runHooks)
		}
	}()

	if tlsConn, ok := c.rwc.(*tls.Conn); ok {
		tlsTO := c.server.tlsHandshakeTimeout()
		if tlsTO > 0 {
			dl := time.Now().Add(tlsTO)
			c.rwc.SetReadDeadline(dl)
			c.rwc.SetWriteDeadline(dl)
		}
		if err := tlsConn.HandshakeContext(ctx); err != nil {
			// If the handshake failed due to the client not speaking
			// TLS, assume they're speaking plaintext HTTP and write a
			// 400 response on the TLS conn's underlying net.Conn.
			if re, ok := err.(tls.RecordHeaderError); ok && re.Conn != nil && tlsRecordHeaderLooksLikeHTTP(re.RecordHeader) {
				io.WriteString(re.Conn, "HTTP/1.0 400 Bad Request\r\n\r\nClient sent an HTTP request to an HTTPS server.\n")
				re.Conn.Close()
				return
			}
			c.server.logf("http: TLS handshake error from %s: %v", c.rwc.RemoteAddr(), err)
			return
		}
		// Restore Conn-level deadlines.
		if tlsTO > 0 {
			c.rwc.SetReadDeadline(time.Time{})
			c.rwc.SetWriteDeadline(time.Time{})
		}
		c.tlsState = new(tls.ConnectionState)
		*c.tlsState = tlsConn.ConnectionState()
		if proto := c.tlsState.NegotiatedProtocol; validNextProto(proto) {
			if fn := c.server.TLSNextProto[proto]; fn != nil {
				h := initALPNRequest{ctx, tlsConn, serverHandler{c.server}}
				// Mark freshly created HTTP/2 as active and prevent any server state hooks
				// from being run on these connections. This prevents closeIdleConns from
				// closing such connections. See issue https://golang.org/issue/39776.
				c.setState(c.rwc, StateActive, skipHooks)
				fn(c.server, tlsConn, h)
			}
			return
		}
	}

	// HTTP/1.x from here on.

	ctx, cancelCtx := context.WithCancel(ctx)
	c.cancelCtx = cancelCtx
	defer cancelCtx()

	c.r = &connReader{conn: c}
	c.bufr = newBufioReader(c.r)
	c.bufw = newBufioWriterSize(checkConnErrorWriter{c}, 4<<10)

	for {
		w, err := c.readRequest(ctx)
		if c.r.remain != c.server.initialReadLimitSize() {
			// If we read any bytes off the wire, we're active.
			c.setState(c.rwc, StateActive, runHooks)
		}
		if err != nil {
			const errorHeaders = "\r\nContent-Type: text/plain; charset=utf-8\r\nConnection: close\r\n\r\n"

			switch {
			case err == errTooLarge:
				// Their HTTP client may or may not be
				// able to read this if we're
				// responding to them and hanging up
				// while they're still writing their
				// request. Undefined behavior.
				const publicErr = "431 Request Header Fields Too Large"
				fmt.Fprintf(c.rwc, "HTTP/1.1 "+publicErr+errorHeaders+publicErr)
				c.closeWriteAndWait()
				return

			case isUnsupportedTEError(err):
				// Respond as per RFC 7230 Section 3.3.1 which says,
				// A server that receives a request message with a
				// transfer coding it does not understand SHOULD
				// respond with 501 (Unimplemented).
				code := StatusNotImplemented

				// We purposefully aren't echoing back the transfer-encoding's value,
				// so as to mitigate the risk of cross side scripting by an attacker.
				fmt.Fprintf(c.rwc, "HTTP/1.1 %d %s%sUnsupported transfer encoding", code, StatusText(code), errorHeaders)
				return

			case isCommonNetReadError(err):
				return // don't reply

			default:
				if v, ok := err.(statusError); ok {
					fmt.Fprintf(c.rwc, "HTTP/1.1 %d %s: %s%s%d %s: %s", v.code, StatusText(v.code), v.text, errorHeaders, v.code, StatusText(v.code), v.text)
					return
				}
				const publicErr = "400 Bad Request"
				fmt.Fprintf(c.rwc, "HTTP/1.1 "+publicErr+errorHeaders+publicErr)
				return
			}
		}

		// Expect 100 Continue support
		req := w.req
		if req.expectsContinue() {
			if req.ProtoAtLeast(1, 1) && req.ContentLength != 0 {
				// Wrap the Body reader with one that replies on the connection
				req.Body = &expectContinueReader{readCloser: req.Body, resp: w}
				w.canWriteContinue.Store(true)
			}
		} else if req.Header.get("Expect") != "" {
			w.sendExpectationFailed()
			return
		}

		c.curReq.Store(w)

		if requestBodyRemains(req.Body) {
			registerOnHitEOF(req.Body, w.conn.r.startBackgroundRead)
		} else {
			w.conn.r.startBackgroundRead()
		}

		// HTTP cannot have multiple simultaneous active requests.[*]
		// Until the server replies to this request, it can't read another,
		// so we might as well run the handler in this goroutine.
		// [*] Not strictly true: HTTP pipelining. We could let them all process
		// in parallel even if their responses need to be serialized.
		// But we're not going to implement HTTP pipelining because it
		// was never deployed in the wild and the answer is HTTP/2.
		inFlightResponse = w
		serverHandler{c.server}.ServeHTTP(w, w.req)
		inFlightResponse = nil
		w.cancelCtx()
		if c.hijacked() {
			return
		}
		w.finishRequest()
		c.rwc.SetWriteDeadline(time.Time{})
		if !w.shouldReuseConnection() {
			if w.requestBodyLimitHit || w.closedRequestBodyEarly() {
				c.closeWriteAndWait()
			}
			return
		}
		c.setState(c.rwc, StateIdle, runHooks)
		c.curReq.Store(nil)

		if !w.conn.server.doKeepAlives() {
			// We're in shutdown mode. We might've replied
			// to the user without "Connection: close" and
			// they might think they can send another
			// request, but such is life with HTTP/1.1.
			return
		}

		if d := c.server.idleTimeout(); d != 0 {
			c.rwc.SetReadDeadline(time.Now().Add(d))
		} else {
			c.rwc.SetReadDeadline(time.Time{})
		}

		// Wait for the connection to become readable again before trying to
		// read the next request. This prevents a ReadHeaderTimeout or
		// ReadTimeout from starting until the first bytes of the next request
		// have been received.
		if _, err := c.bufr.Peek(4); err != nil {
			return
		}

		c.rwc.SetReadDeadline(time.Time{})
	}
}

func (mux *ServeMux) findHandler(r *Request) (h Handler, patStr string, _ *pattern, matches []string) {
	var n *routingNode
	host := r.URL.Host
	escapedPath := r.URL.EscapedPath()
	path := escapedPath
	// CONNECT requests are not canonicalized.
	if r.Method == "CONNECT" {
		// If r.URL.Path is /tree and its handler is not registered,
		// the /tree -> /tree/ redirect applies to CONNECT requests
		// but the path canonicalization does not.
		_, _, u := mux.matchOrRedirect(host, r.Method, path, r.URL)
		if u != nil {
			return RedirectHandler(u.String(), StatusMovedPermanently), u.Path, nil, nil
		}
		// Redo the match, this time with r.Host instead of r.URL.Host.
		// Pass a nil URL to skip the trailing-slash redirect logic.
		n, matches, _ = mux.matchOrRedirect(r.Host, r.Method, path, nil)
	} else {
		// All other requests have any port stripped and path cleaned
		// before passing to mux.handler.
		host = stripHostPort(r.Host)
		path = cleanPath(path)

		// If the given path is /tree and its handler is not registered,
		// redirect for /tree/.
		var u *url.URL
		n, matches, u = mux.matchOrRedirect(host, r.Method, path, r.URL)
		if u != nil {
			return RedirectHandler(u.String(), StatusMovedPermanently), u.Path, nil, nil
		}
		if path != escapedPath {
			// Redirect to cleaned path.
			patStr := ""
			if n != nil {
				patStr = n.pattern.String()
			}
			u := &url.URL{Path: path, RawQuery: r.URL.RawQuery}
			return RedirectHandler(u.String(), StatusMovedPermanently), patStr, nil, nil
		}
	}
	if n == nil {
		// We didn't find a match with the request method. To distinguish between
		// Not Found and Method Not Allowed, see if there is another pattern that
		// matches except for the method.
		allowedMethods := mux.matchingMethods(host, path)
		if len(allowedMethods) > 0 {
			return HandlerFunc(func(w ResponseWriter, r *Request) {
				w.Header().Set("Allow", strings.Join(allowedMethods, ", "))
				Error(w, StatusText(StatusMethodNotAllowed), StatusMethodNotAllowed)
			}), "", nil, nil
		}
		return NotFoundHandler(), "", nil, nil
	}
	return n.handler, n.pattern.String(), n.pattern, matches
}

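Going back to the accept loop in Serve: on a temporary Accept error it sleeps for 5ms, doubles the delay on each consecutive failure, caps it at 1s, and resets it to zero after a successful Accept. The sketch below reproduces only that arithmetic; nextDelay and delaysMillis are hypothetical helpers, not part of net/http.

```go
package main

import (
	"fmt"
	"time"
)

// nextDelay mirrors the retry arithmetic in Serve: start at 5ms,
// double after each consecutive temporary error, cap at 1s.
func nextDelay(prev time.Duration) time.Duration {
	if prev == 0 {
		return 5 * time.Millisecond
	}
	next := prev * 2
	if max := 1 * time.Second; next > max {
		next = max
	}
	return next
}

// delaysMillis returns the first n delays, in milliseconds,
// for a run of n consecutive temporary errors.
func delaysMillis(n int) []int64 {
	out := make([]int64, 0, n)
	var d time.Duration
	for i := 0; i < n; i++ {
		d = nextDelay(d)
		out = append(out, d.Milliseconds())
	}
	return out
}

func main() {
	fmt.Println(delaysMillis(10)) // [5 10 20 40 80 160 320 640 1000 1000]
}
```
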
XXVI. How Should Slow MySQL Queries Be Optimized?

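- Check whether the query uses an index; if it does not, rewrite the SQL so that it can.

- Check whether the index being used is the best one available.

- Check whether every selected column is really needed, or whether the query selects too many columns and returns redundant data.

- Check whether the table holds too much data and whether it should be split across databases or tables (sharding).

- Check the hardware configuration of the machine hosting the database instance: is it too low, and can resources be increased appropriately?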

相关文章:

GO语言基础面试题

一、字符串和整型怎么相互转换 1、使用 strconv 包中的函数 FormatInt 、ParseInt 等进行转换 2、转换10进制的整形时,可以使用 strconv.Atoi、strconv.Itoa: Atoi是ParseInt(s, 10, 0) 的简写 Itoa是FormatInt(i, 10) 的简写 3、整形转为字符型时&#…...

要查询 `user` 表中 `we_chat_subscribe` 和 `we_chat_union_id` 列不为空的用户数量

文章目录 1、we_chat_subscribe2、we_chat_union_id 1、we_chat_subscribe 要查询 user 表中 we_chat_subscribe 列不为空的用户数量,你可以使用以下 SQL 查询语句: SELECT COUNT(*) FROM user WHERE we_chat_subscribe IS NOT NULL;解释: …...

小程序基础 —— 10 如何调试小程序代码

如何调试小程序代码 在进行项目开发的时候,不可避免需要进行调试,那么如何调试小程序呢? 打开微信开发者工具后,有一个模拟器,通过模拟器能够实时预览自己写的页面,如下: 在上部工具栏中有一个…...

Vue项目如何设置多个静态文件;如何自定义静态文件目录

Vite实现方案 安装插件 npm i vite-plugin-static-copy在vite.config.ts引入 import { viteStaticCopy } from vite-plugin-static-copy配置 plugins: [viteStaticCopy({targets: [{src: "要设置的静态文件目录的相对路径 相对于vite.config.ts的", dest: ./, // …...

CentOS Stream 9 安装 JDK

安装前检查 java --version注:此时说明已安装过JDK,否则为未安装。如若已安装过JDK可以跳过安装步骤直接使用,或者先卸载已安装的JDK版本重新安装。 安装JDK 官网下载地址:https://www.oracle.com/java/technologies/downloads…...

前端(htmlcss)

前端页面 Web页面 PC端程序页面 移动端APP页面 ... HTML页面 HTML超文本标记页面 超文本:文本,声音,图片,视频,表格,链接 标记:由许多标签组成 HTML页面运行到浏览器上面 vscode便捷插件使用 vs…...

py打包工具

pyinstaller 安装 大佬文档参考 pip install pyinstallerpyinstaller 参数 -i 给应用程序添加图标 -F 只生成一个exe格式的文件 -D 创建一个目录,包含exe文件,但会依赖很多文件(默认选项) -c 有黑窗口 -w 去掉黑窗口pyinstalle…...

39-最长子字符串的长度(二))

华为OD E卷(100分)39-最长子字符串的长度(二)

前言 工作了十几年,从普通的研发工程师一路成长为研发经理、研发总监。临近40岁,本想辞职后换一个相对稳定的工作环境一直干到老, 没想到离职后三个多月了还没找到工作,愁肠百结。为了让自己有点事情做,也算提高一下自己的编程能力…...

Selenium+Java(21):Jenkins发送邮件报错Not sent to the following valid addresses解决方案

问题现象 小月妹妹近期在做RobotFrameWork自动化测试,并且使用Jenkins发送测试邮件的时候,发现报错Not sent to the following valid addresses,明明各个配置项看起来都没有问题,但是一到邮件发送环节,就是发送不出去,而且还不提示太多有用的信息,急的妹妹脸都红了,于…...

JSON结构快捷转XML结构API集成指南

JSON结构快捷转XML结构API集成指南 引言 在当今的软件开发世界中,数据交换格式的选择对于系统的互操作性和效率至关重要。JSON(JavaScript Object Notation)和XML(eXtensible Markup Language)是两种广泛使用的数据表…...

【视觉惯性SLAM:四、相机成像模型】

相机成像模型介绍 相机成像模型是计算机视觉和图像处理中的核心内容,它描述了真实三维世界如何通过相机映射到二维图像平面。相机成像模型通常包括针孔相机的基本成像原理、数学模型,以及在实际应用中如何处理相机的各种畸变现象。 一、针孔相机成像原…...

网络编程:TCP和UDP通信基础

TCP 简易服务器: #include<myhead.h>int main(int argc, const char *argv[]) {int oldfd socket(AF_INET,SOCK_STREAM,0);if(oldfd -1){perror("socket");return -1;}//绑定要绑定的结构体struct sockaddr_in server {.sin_family AF_INET,.…...

声波配网原理及使用python简单的示例

将自定义的信息内容(如Wi-Fi配置、数字数据)转换为音波是一种音频调制与解调技术,广泛应用于声波配网、数据传输和近场通信中。这项技术的实现涉及将数字信息编码为音频信号,并通过解码还原信息。 实现方法 1. 数字数据编码 将原…...

深度学习任务中的 `ulimit` 设置优化指南

深度学习任务中的 ulimit 设置优化指南 1. 什么是 ulimit?2. 深度学习任务中的关键 ulimit 设置2.1 max locked memory(-l)2.2 open files(-n)2.3 core file size(-c)2.4 stack size(…...

【学生管理系统】权限管理

目录 6.4 权限管理(菜单管理) 6.4.1 查询所有(含孩子) 6.4.2 添加权限 6.4.3 核心3:查询登录用户的权限,并绘制菜单 6.4 权限管理(菜单管理) 6.4.1 查询所有(含孩子…...

Java编程题_面向对象和常用API01_B级

Java编程题_面向对象和常用API01_B级 第1题 面向对象、异常、集合、IO 题干: 请编写程序,完成键盘录入学生信息,并计算总分将学生信息与总分一同写入文本文件 需求:键盘录入3个学生信息(姓名,语文成绩,数学成绩) 求出每个学生的总分 ,并…...

JUC并发工具---线程协作

信号量能被FixedThreadPool代替吗 Semaphore信号量 控制需要限制访问量的资源,没有获取到信号量的线程会被阻塞 import java.util.concurrent.ExecutorService; import java.util.concurrent.Executors; import java.util.concurrent.Semaphore;public class Sem…...

Excel for Finance 08 `XNPV`和`XIRR` 函数

Excel 的 XNPV 函数用于计算基于特定日期的净现值(Net Present Value, NPV)。与标准的 NPV 函数相比,XNPV 更灵活,可以考虑不规则的现金流间隔,而不仅限于等间隔的期数。 语法: XNPV(rate, values, dates)…...

嵌入式入门Day35

网络编程 Day2 套接字socket基于TCP通信的流程服务器端客户端TCP通信API 基于UDP通信的流程服务器端客户端 作业 套接字socket socket套接字本质是一个特殊的文件,在原始的Linux中,它和管道,消息队列,共享内存,信号等…...

AE/PR/达芬奇模板:自动光标打字机文字标题移动效果动画模板预设

适用于AE/PR/达芬奇的 Typewriter Pro 该模板包括专业的打字机文本动画,并包含很酷的功能,以及帮助文档和分步画外音视频教程。 主要特点 轻松的持续时间控制您可以通过在持续时间控件中输入 start 和 end duration(开始和结束持续时间&…...

未来机器人的大脑:如何用神经网络模拟器实现更智能的决策?

编辑:陈萍萍的公主一点人工一点智能 未来机器人的大脑:如何用神经网络模拟器实现更智能的决策?RWM通过双自回归机制有效解决了复合误差、部分可观测性和随机动力学等关键挑战,在不依赖领域特定归纳偏见的条件下实现了卓越的预测准…...

eNSP-Cloud(实现本地电脑与eNSP内设备之间通信)

说明: 想象一下,你正在用eNSP搭建一个虚拟的网络世界,里面有虚拟的路由器、交换机、电脑(PC)等等。这些设备都在你的电脑里面“运行”,它们之间可以互相通信,就像一个封闭的小王国。 但是&#…...

Android Wi-Fi 连接失败日志分析

1. Android wifi 关键日志总结 (1) Wi-Fi 断开 (CTRL-EVENT-DISCONNECTED reason3) 日志相关部分: 06-05 10:48:40.987 943 943 I wpa_supplicant: wlan0: CTRL-EVENT-DISCONNECTED bssid44:9b:c1:57:a8:90 reason3 locally_generated1解析: CTR…...

React 第五十五节 Router 中 useAsyncError的使用详解

前言 useAsyncError 是 React Router v6.4 引入的一个钩子,用于处理异步操作(如数据加载)中的错误。下面我将详细解释其用途并提供代码示例。 一、useAsyncError 用途 处理异步错误:捕获在 loader 或 action 中发生的异步错误替…...

)

云计算——弹性云计算器(ECS)

弹性云服务器:ECS 概述 云计算重构了ICT系统,云计算平台厂商推出使得厂家能够主要关注应用管理而非平台管理的云平台,包含如下主要概念。 ECS(Elastic Cloud Server):即弹性云服务器,是云计算…...

C++:std::is_convertible

C++标志库中提供is_convertible,可以测试一种类型是否可以转换为另一只类型: template <class From, class To> struct is_convertible; 使用举例: #include <iostream> #include <string>using namespace std;struct A { }; struct B : A { };int main…...

2025 后端自学UNIAPP【项目实战:旅游项目】6、我的收藏页面

代码框架视图 1、先添加一个获取收藏景点的列表请求 【在文件my_api.js文件中添加】 // 引入公共的请求封装 import http from ./my_http.js// 登录接口(适配服务端返回 Token) export const login async (code, avatar) > {const res await http…...

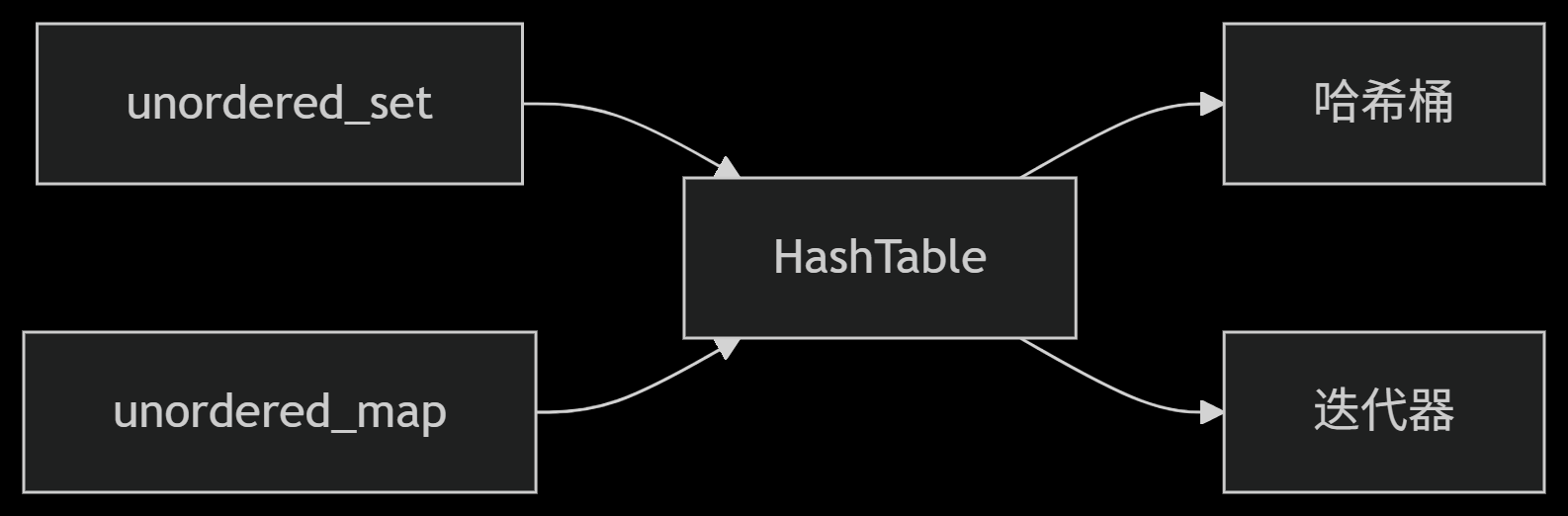

从零实现STL哈希容器:unordered_map/unordered_set封装详解

本篇文章是对C学习的STL哈希容器自主实现部分的学习分享 希望也能为你带来些帮助~ 那咱们废话不多说,直接开始吧! 一、源码结构分析 1. SGISTL30实现剖析 // hash_set核心结构 template <class Value, class HashFcn, ...> class hash_set {ty…...

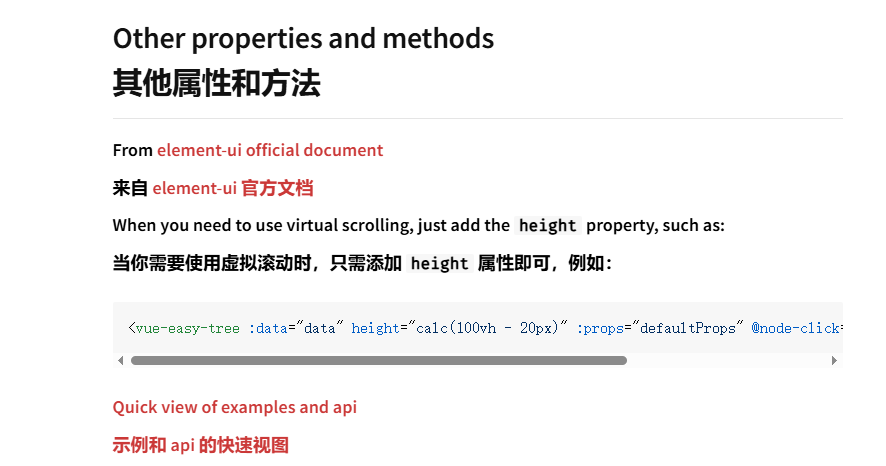

tree 树组件大数据卡顿问题优化

问题背景 项目中有用到树组件用来做文件目录,但是由于这个树组件的节点越来越多,导致页面在滚动这个树组件的时候浏览器就很容易卡死。这种问题基本上都是因为dom节点太多,导致的浏览器卡顿,这里很明显就需要用到虚拟列表的技术&…...

:邮件营销与用户参与度的关键指标优化指南)

精益数据分析(97/126):邮件营销与用户参与度的关键指标优化指南

精益数据分析(97/126):邮件营销与用户参与度的关键指标优化指南 在数字化营销时代,邮件列表效度、用户参与度和网站性能等指标往往决定着创业公司的增长成败。今天,我们将深入解析邮件打开率、网站可用性、页面参与时…...