HAMi + prometheus-k8s + grafana实现vgpu虚拟化监控

最近长沙跑了半个多月,跟甲方客户对了下项目指标,许久没更新

回来后继续研究如何实现 grafana实现HAMi vgpu虚拟化监控,毕竟合同里写了需要体现gpu资源限制和算力共享以及体现算力卡资源共享监控

先说下为啥要用HAMi吧, 一个重要原因是公司有人引见了这个工具的作者, 很多问题我都可以直接向作者提问

HAMi,是一个国产的GPU与国产加速卡(支持的GPU与国产加速卡型号与具体特性请查看此项目官网:https://github.com/Project-HAMi/HAMi/)虚拟化开源项目,实现以kubernetes为基础的容器场景下GPU或加速卡虚拟化。HAMi原名“k8s-vGPU-scheduler”,

最初由我司开源,现已在国内与国际上愈加流行,是管理Kubernetes中异构设备的中间件。它可以管理不同类型的异构设备(如GPU、NPU等),在Pod之间共享异构设备,根据设备的拓扑信息和调度策略做出更好的调度决策。为了阐述的简明性,本文只提供一种可行的办法,最终实现使用prometheus抓取监控指标并作为数据源、使用grafana来展示监控信息的目的。

本文假定已经部署好Kubernetes集群、HAMi。以下涉及到的相关组件都是在kubernetes集群内安装的,相关组件或软件版本信息如下:

| 组件或软件名称 | 版本 | 备注 |

|---|---|---|

| kubernetes集群 | v1.23.1 | AMD64构架服务器环境下 |

| HAMi | 根据向开源作者提问,当前HAMi版本发行机制还不够成熟,暂以安装HAMi的scheduler.kubeScheduler.imageTag 参数值为其版本,此值要跟kubernetes版本看齐 | 项目地址:https://github.com/Project-HAMi/HAMi/ |

| kube-prometheus stack | prom/prometheus:v2.27.1 | 关于监控的安装参见实现prometheus+grafana的监控部署_prometheus grafana监控部署-CSDN博客 |

| dcgm-exporter | nvcr.io/nvidia/k8s/dcgm-exporter:3.3.9-3.6.1-ubuntu22.04 |

HAMi 的默认安装方式是通过helm,添加Helm仓库:

helm repo add hami-charts https://project-hami.github.io/HAMi/

检查Kubernetes版本并安装HAMi(服务器版本为1.23.1):

helm install hami hami-charts/hami --set scheduler.kubeScheduler.imageTag=v1.23.1 -n kube-system验证hami安装成功

kubectl get pods -n kube-system

确认hami-device-plugin和hami-scheduler都处于Running状态表示安装成功。

把helm安装转为hami-install.yaml

helm template hami hami-charts/hami --set scheduler.kubeScheduler.imageTag=v1.23.1 -n kube-system > hami-install.yaml该格式部署

---

# Source: hami/templates/device-plugin/monitorserviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: hami-device-pluginnamespace: "kube-system"labels:app.kubernetes.io/component: "hami-device-plugin"helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

---

# Source: hami/templates/scheduler/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: hami-schedulernamespace: "kube-system"labels:app.kubernetes.io/component: "hami-scheduler"helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

---

# Source: hami/templates/device-plugin/configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:name: hami-device-pluginlabels:app.kubernetes.io/component: hami-device-pluginhelm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

data:config.json: |{"nodeconfig": [{"name": "m5-cloudinfra-online02","devicememoryscaling": 1.8,"devicesplitcount": 10,"migstrategy":"none","filterdevices": {"uuid": [],"index": []}}]}

---

# Source: hami/templates/scheduler/configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:name: hami-schedulerlabels:app.kubernetes.io/component: hami-schedulerhelm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

data:config.json: |{"kind": "Policy","apiVersion": "v1","extenders": [{"urlPrefix": "https://127.0.0.1:443","filterVerb": "filter","bindVerb": "bind","enableHttps": true,"weight": 1,"nodeCacheCapable": true,"httpTimeout": 30000000000,"tlsConfig": {"insecure": true},"managedResources": [{"name": "nvidia.com/gpu","ignoredByScheduler": true},{"name": "nvidia.com/gpumem","ignoredByScheduler": true},{"name": "nvidia.com/gpucores","ignoredByScheduler": true},{"name": "nvidia.com/gpumem-percentage","ignoredByScheduler": true},{"name": "nvidia.com/priority","ignoredByScheduler": true},{"name": "cambricon.com/vmlu","ignoredByScheduler": true},{"name": "hygon.com/dcunum","ignoredByScheduler": true},{"name": "hygon.com/dcumem","ignoredByScheduler": true },{"name": "hygon.com/dcucores","ignoredByScheduler": true},{"name": "iluvatar.ai/vgpu","ignoredByScheduler": true}],"ignoreable": false}]}

---

# Source: hami/templates/scheduler/configmapnew.yaml

apiVersion: v1

kind: ConfigMap

metadata:name: hami-scheduler-newversionlabels:app.kubernetes.io/component: hami-schedulerhelm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

data:config.yaml: |apiVersion: kubescheduler.config.k8s.io/v1kind: KubeSchedulerConfigurationleaderElection:leaderElect: falseprofiles:- schedulerName: hami-schedulerextenders:- urlPrefix: "https://127.0.0.1:443"filterVerb: filterbindVerb: bindnodeCacheCapable: trueweight: 1httpTimeout: 30senableHTTPS: truetlsConfig:insecure: truemanagedResources:- name: nvidia.com/gpuignoredByScheduler: true- name: nvidia.com/gpumemignoredByScheduler: true- name: nvidia.com/gpucoresignoredByScheduler: true- name: nvidia.com/gpumem-percentageignoredByScheduler: true- name: nvidia.com/priorityignoredByScheduler: true- name: cambricon.com/vmluignoredByScheduler: true- name: hygon.com/dcunumignoredByScheduler: true- name: hygon.com/dcumemignoredByScheduler: true- name: hygon.com/dcucoresignoredByScheduler: true- name: iluvatar.ai/vgpuignoredByScheduler: true

---

# Source: hami/templates/scheduler/device-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:name: hami-scheduler-devicelabels:app.kubernetes.io/component: hami-schedulerhelm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

data:device-config.yaml: |-nvidia:resourceCountName: nvidia.com/gpuresourceMemoryName: nvidia.com/gpumemresourceMemoryPercentageName: nvidia.com/gpumem-percentageresourceCoreName: nvidia.com/gpucoresresourcePriorityName: nvidia.com/priorityoverwriteEnv: falsedefaultMemory: 0defaultCores: 0defaultGPUNum: 1deviceSplitCount: 10deviceMemoryScaling: 1deviceCoreScaling: 1cambricon:resourceCountName: cambricon.com/vmluresourceMemoryName: cambricon.com/mlu.smlu.vmemoryresourceCoreName: cambricon.com/mlu.smlu.vcorehygon:resourceCountName: hygon.com/dcunumresourceMemoryName: hygon.com/dcumemresourceCoreName: hygon.com/dcucoresmetax:resourceCountName: "metax-tech.com/gpu"mthreads:resourceCountName: "mthreads.com/vgpu"resourceMemoryName: "mthreads.com/sgpu-memory"resourceCoreName: "mthreads.com/sgpu-core"iluvatar: resourceCountName: iluvatar.ai/vgpuresourceMemoryName: iluvatar.ai/vcuda-memoryresourceCoreName: iluvatar.ai/vcuda-corevnpus:- chipName: 910BcommonWord: Ascend910AresourceName: huawei.com/Ascend910AresourceMemoryName: huawei.com/Ascend910A-memorymemoryAllocatable: 32768memoryCapacity: 32768aiCore: 30templates:- name: vir02memory: 2184aiCore: 2- name: vir04memory: 4369aiCore: 4- name: vir08memory: 8738aiCore: 8- name: vir16memory: 17476aiCore: 16- chipName: 910B3commonWord: Ascend910BresourceName: huawei.com/Ascend910BresourceMemoryName: huawei.com/Ascend910B-memorymemoryAllocatable: 65536memoryCapacity: 65536aiCore: 20aiCPU: 7templates:- name: vir05_1c_16gmemory: 16384aiCore: 5aiCPU: 1- name: vir10_3c_32gmemory: 32768aiCore: 10aiCPU: 3- chipName: 310P3commonWord: Ascend310PresourceName: huawei.com/Ascend310PresourceMemoryName: huawei.com/Ascend310P-memorymemoryAllocatable: 21527memoryCapacity: 24576aiCore: 8aiCPU: 7templates:- name: vir01memory: 3072aiCore: 1aiCPU: 1- name: vir02memory: 6144aiCore: 2aiCPU: 2- name: vir04memory: 12288aiCore: 4aiCPU: 4

---

# Source: hami/templates/device-plugin/monitorrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:name: hami-device-plugin-monitor

rules:- apiGroups:- ""resources:- podsverbs:- get- create- watch- list- update- patch- apiGroups:- ""resources:- nodesverbs:- get- update- list- patch

---

# Source: hami/templates/device-plugin/monitorrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:name: hami-device-pluginlabels:app.kubernetes.io/component: "hami-device-plugin"helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

roleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRole#name: cluster-adminname: hami-device-plugin-monitor

subjects:- kind: ServiceAccountname: hami-device-pluginnamespace: "kube-system"

---

# Source: hami/templates/scheduler/rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:name: hami-schedulerlabels:app.kubernetes.io/component: "hami-scheduler"helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

roleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRolename: cluster-admin

subjects:- kind: ServiceAccountname: hami-schedulernamespace: "kube-system"

---

# Source: hami/templates/device-plugin/monitorservice.yaml

apiVersion: v1

kind: Service

metadata:name: hami-device-plugin-monitorlabels:app.kubernetes.io/component: hami-device-pluginhelm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

spec:externalTrafficPolicy: Localselector:app.kubernetes.io/component: hami-device-plugintype: NodePortports:- name: monitorportport: 31992targetPort: 9394nodePort: 31992

---

# Source: hami/templates/scheduler/service.yaml

apiVersion: v1

kind: Service

metadata:name: hami-schedulerlabels:app.kubernetes.io/component: hami-schedulerhelm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

spec:type: NodePortports:- name: httpport: 443targetPort: 443nodePort: 31998protocol: TCP- name: monitorport: 31993targetPort: 9395nodePort: 31993protocol: TCPselector:app.kubernetes.io/component: hami-schedulerapp.kubernetes.io/name: hamiapp.kubernetes.io/instance: hami

---

# Source: hami/templates/device-plugin/daemonsetnvidia.yaml

apiVersion: apps/v1

kind: DaemonSet

metadata:name: hami-device-pluginlabels:app.kubernetes.io/component: hami-device-pluginhelm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

spec:selector:matchLabels:app.kubernetes.io/component: hami-device-pluginapp.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamitemplate:metadata:labels:app.kubernetes.io/component: hami-device-pluginhami.io/webhook: ignoreapp.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamispec:imagePullSecrets: []serviceAccountName: hami-device-pluginpriorityClassName: system-node-criticalhostPID: truehostNetwork: truecontainers:- name: device-pluginimage: projecthami/hami:latestimagePullPolicy: "IfNotPresent"lifecycle:postStart:exec:command: ["/bin/sh","-c", "cp -f /k8s-vgpu/lib/nvidia/* /usr/local/vgpu/"]command:- nvidia-device-plugin- --config-file=/device-config.yaml- --mig-strategy=none- --disable-core-limit=false- -v=falseenv:- name: NODE_NAMEvalueFrom:fieldRef:fieldPath: spec.nodeName- name: NVIDIA_MIG_MONITOR_DEVICESvalue: all- name: HOOK_PATHvalue: /usr/localsecurityContext:allowPrivilegeEscalation: falsecapabilities:drop: ["ALL"]add: ["SYS_ADMIN"]volumeMounts:- name: device-pluginmountPath: /var/lib/kubelet/device-plugins- name: libmountPath: /usr/local/vgpu- name: usrbinmountPath: /usrbin- name: deviceconfigmountPath: /config- name: hosttmpmountPath: /tmp- name: device-configmountPath: /device-config.yamlsubPath: device-config.yaml- name: vgpu-monitorimage: projecthami/hami:latestimagePullPolicy: "IfNotPresent"command: ["vGPUmonitor"]securityContext:allowPrivilegeEscalation: falsecapabilities:drop: ["ALL"]add: ["SYS_ADMIN"]env:- name: NVIDIA_VISIBLE_DEVICESvalue: "all"- name: NVIDIA_MIG_MONITOR_DEVICESvalue: all- name: HOOK_PATHvalue: /usr/local/vgpu volumeMounts:- name: ctrsmountPath: /usr/local/vgpu/containers- name: dockersmountPath: /run/docker- name: containerdsmountPath: /run/containerd- name: sysinfomountPath: /sysinfo- name: hostvarmountPath: /hostvarvolumes:- name: ctrshostPath:path: /usr/local/vgpu/containers- name: hosttmphostPath:path: /tmp- name: dockershostPath:path: /run/docker- name: containerdshostPath:path: /run/containerd- name: device-pluginhostPath:path: /var/lib/kubelet/device-plugins- name: libhostPath:path: /usr/local/vgpu- name: usrbinhostPath:path: /usr/bin- name: sysinfohostPath:path: /sys- name: hostvarhostPath:path: /var- name: deviceconfigconfigMap:name: hami-device-plugin- name: device-configconfigMap:name: hami-scheduler-devicenodeSelector: gpu: "on"

---

# Source: hami/templates/scheduler/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:name: hami-schedulerlabels:app.kubernetes.io/component: hami-schedulerhelm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helm

spec:replicas: 1selector:matchLabels:app.kubernetes.io/component: hami-schedulerapp.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamitemplate:metadata:labels:app.kubernetes.io/component: hami-schedulerapp.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamihami.io/webhook: ignorespec:imagePullSecrets: []serviceAccountName: hami-schedulerpriorityClassName: system-node-criticalcontainers:- name: kube-schedulerimage: registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.31.0imagePullPolicy: "IfNotPresent"command:- kube-scheduler- --config=/config/config.yaml- -v=4- --leader-elect=true- --leader-elect-resource-name=hami-scheduler- --leader-elect-resource-namespace=kube-systemvolumeMounts:- name: scheduler-configmountPath: /config- name: vgpu-scheduler-extenderimage: projecthami/hami:latestimagePullPolicy: "IfNotPresent"env:command:- scheduler- --http_bind=0.0.0.0:443- --cert_file=/tls/tls.crt- --key_file=/tls/tls.key- --scheduler-name=hami-scheduler- --metrics-bind-address=:9395- --node-scheduler-policy=binpack- --gpu-scheduler-policy=spread- --device-config-file=/device-config.yaml- --debug- -v=4ports:- name: httpcontainerPort: 443protocol: TCPvolumeMounts:- name: tls-configmountPath: /tls- name: device-configmountPath: /device-config.yamlsubPath: device-config.yamlvolumes:- name: tls-configsecret:secretName: hami-scheduler-tls- name: scheduler-configconfigMap:name: hami-scheduler-newversion- name: device-configconfigMap:name: hami-scheduler-device

---

# Source: hami/templates/scheduler/webhook.yaml

apiVersion: admissionregistration.k8s.io/v1

kind: MutatingWebhookConfiguration

metadata:name: hami-webhook

webhooks:- admissionReviewVersions:- v1beta1clientConfig:service:name: hami-schedulernamespace: kube-systempath: /webhookport: 443failurePolicy: IgnorematchPolicy: Equivalentname: vgpu.hami.ionamespaceSelector:matchExpressions:- key: hami.io/webhookoperator: NotInvalues:- ignoreobjectSelector:matchExpressions:- key: hami.io/webhookoperator: NotInvalues:- ignorereinvocationPolicy: Neverrules:- apiGroups:- ""apiVersions:- v1operations:- CREATEresources:- podsscope: '*'sideEffects: NonetimeoutSeconds: 10

---

# Source: hami/templates/scheduler/job-patch/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: hami-admissionannotations:"helm.sh/hook": pre-install,pre-upgrade,post-install,post-upgrade"helm.sh/hook-delete-policy": before-hook-creation,hook-succeededlabels:helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhook

---

# Source: hami/templates/scheduler/job-patch/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:name: hami-admissionannotations:"helm.sh/hook": pre-install,pre-upgrade,post-install,post-upgrade"helm.sh/hook-delete-policy": before-hook-creation,hook-succeededlabels:helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhook

rules:- apiGroups:- admissionregistration.k8s.ioresources:#- validatingwebhookconfigurations- mutatingwebhookconfigurationsverbs:- get- update

---

# Source: hami/templates/scheduler/job-patch/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:name: hami-admissionannotations:"helm.sh/hook": pre-install,pre-upgrade,post-install,post-upgrade"helm.sh/hook-delete-policy": before-hook-creation,hook-succeededlabels:helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhook

roleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRolename: hami-admission

subjects:- kind: ServiceAccountname: hami-admissionnamespace: "kube-system"

---

# Source: hami/templates/scheduler/job-patch/role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:name: hami-admissionannotations:"helm.sh/hook": pre-install,pre-upgrade,post-install,post-upgrade"helm.sh/hook-delete-policy": before-hook-creation,hook-succeededlabels:helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhook

rules:- apiGroups:- ""resources:- secretsverbs:- get- create

---

# Source: hami/templates/scheduler/job-patch/rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:name: hami-admissionannotations:"helm.sh/hook": pre-install,pre-upgrade,post-install,post-upgrade"helm.sh/hook-delete-policy": before-hook-creation,hook-succeededlabels:helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhook

roleRef:apiGroup: rbac.authorization.k8s.iokind: Rolename: hami-admission

subjects:- kind: ServiceAccountname: hami-admissionnamespace: "kube-system"

---

# Source: hami/templates/scheduler/job-patch/job-createSecret.yaml

apiVersion: batch/v1

kind: Job

metadata:name: hami-admission-createannotations:"helm.sh/hook": pre-install,pre-upgrade"helm.sh/hook-delete-policy": before-hook-creation,hook-succeededlabels:helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhook

spec:template:metadata:name: hami-admission-createlabels:helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhookhami.io/webhook: ignorespec:imagePullSecrets: []containers:- name: createimage: liangjw/kube-webhook-certgen:v1.1.1imagePullPolicy: IfNotPresentargs:- create- --cert-name=tls.crt- --key-name=tls.key- --host=hami-scheduler.kube-system.svc,127.0.0.1- --namespace=kube-system- --secret-name=hami-scheduler-tlsrestartPolicy: OnFailureserviceAccountName: hami-admissionsecurityContext:runAsNonRoot: truerunAsUser: 2000

---

# Source: hami/templates/scheduler/job-patch/job-patchWebhook.yaml

apiVersion: batch/v1

kind: Job

metadata:name: hami-admission-patchannotations:"helm.sh/hook": post-install,post-upgrade"helm.sh/hook-delete-policy": before-hook-creation,hook-succeededlabels:helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhook

spec:template:metadata:name: hami-admission-patchlabels:helm.sh/chart: hami-2.4.0app.kubernetes.io/name: hamiapp.kubernetes.io/instance: hamiapp.kubernetes.io/version: "2.4.0"app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhookhami.io/webhook: ignorespec:imagePullSecrets: []containers:- name: patchimage: liangjw/kube-webhook-certgen:v1.1.1imagePullPolicy: IfNotPresentargs:- patch- --webhook-name=hami-webhook- --namespace=kube-system- --patch-validating=false- --secret-name=hami-scheduler-tlsrestartPolicy: OnFailureserviceAccountName: hami-admissionsecurityContext:runAsNonRoot: truerunAsUser: 2000

部署dcgm-exporter

apiVersion: apps/v1

kind: DaemonSet

metadata:name: "dcgm-exporter"labels:app.kubernetes.io/name: "dcgm-exporter"app.kubernetes.io/version: "3.6.1"

spec:updateStrategy:type: RollingUpdateselector:matchLabels:app.kubernetes.io/name: "dcgm-exporter"app.kubernetes.io/version: "3.6.1"template:metadata:labels:app.kubernetes.io/name: "dcgm-exporter"app.kubernetes.io/version: "3.6.1"name: "dcgm-exporter"spec:containers:- image: "nvcr.io/nvidia/k8s/dcgm-exporter:3.3.9-3.6.1-ubuntu22.04"env:- name: "DCGM_EXPORTER_LISTEN"value: ":9400"- name: "DCGM_EXPORTER_KUBERNETES"value: "true"name: "dcgm-exporter"ports:- name: "metrics"containerPort: 9400securityContext:runAsNonRoot: falserunAsUser: 0capabilities:add: ["SYS_ADMIN"]volumeMounts:- name: "pod-gpu-resources"readOnly: truemountPath: "/var/lib/kubelet/pod-resources"volumes:- name: "pod-gpu-resources"hostPath:path: "/var/lib/kubelet/pod-resources"---kind: Service

apiVersion: v1

metadata:name: "dcgm-exporter"labels:app.kubernetes.io/name: "dcgm-exporter"app.kubernetes.io/version: "3.6.1"

spec:selector:app.kubernetes.io/name: "dcgm-exporter"app.kubernetes.io/version: "3.6.1"ports:- name: "metrics"port: 9400dcgm-exporter安装成功

参考这个hami-vgpu dashboard 下载panel 的json文件

hami-vgpu-dashboard | Grafana Labs 导入后grafana中将创建一个名为“hami-vgpu-dashboard”的dashboard,但此页面中有一些Panel如vGPUCorePercentage还没有数据

ServiceMonitor 是 Prometheus Operator 中的一个自定义资源,主要用于监控 Kubernetes 中的服务。它的作用包括:

1. 自动化发现

ServiceMonitor 允许 Prometheus 自动发现和监控 Kubernetes 中的服务。通过定义 ServiceMonitor,您可以告诉 Prometheus 监控特定服务的端点。

2. 配置抓取参数

您可以在 ServiceMonitor 中设置抓取的相关参数,例如:

- 抓取间隔:定义 Prometheus 多频繁抓取数据(如每 30 秒)。

- 超时:定义抓取请求的超时时间。

- 标签选择器:指定要监控的服务的标签,确保 Prometheus 仅抓取相关服务的数据。

dcgm-exporter需要配置两个service monitor

hami-device-plugin-svc-monitor.yaml

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:name: hami-device-plugin-svc-monitornamespace: kube-system

spec:selector:matchLabels:app.kubernetes.io/component: hami-device-pluginnamespaceSelector:matchNames:- kube-systemendpoints:- path: /metricsport: monitorportinterval: "15s"honorLabels: falserelabelings:- sourceLabels: [__meta_kubernetes_endpoints_name]regex: hami-.*replacement: $1action: keep- sourceLabels: [__meta_kubernetes_pod_node_name]regex: (.*)targetLabel: node_namereplacement: ${1}action: replace- sourceLabels: [__meta_kubernetes_pod_host_ip]regex: (.*)targetLabel: ipreplacement: $1action: replacehami-scheduler-svc-monitor.yaml

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:name: hami-scheduler-svc-monitornamespace: kube-system

spec:selector:matchLabels:app.kubernetes.io/component: hami-schedulernamespaceSelector:matchNames:- kube-systemendpoints:- path: /metricsport: monitorinterval: "15s"honorLabels: falserelabelings:- sourceLabels: [__meta_kubernetes_endpoints_name]regex: hami-.*replacement: $1action: keep- sourceLabels: [__meta_kubernetes_pod_node_name]regex: (.*)targetLabel: node_namereplacement: ${1}action: replace- sourceLabels: [__meta_kubernetes_pod_host_ip]regex: (.*)targetLabel: ipreplacement: $1action: replace确认创建的ServiceMonitor

启动gpu pod一个测试下

apiVersion: v1

kind: Pod

metadata:name: gpu-pod-1

spec:restartPolicy: Nevercontainers:- name: cuda-containerimage: nvcr.io/nvidia/k8s/cuda-sample:vectoradd-cuda11.2.1command: ["sleep", "infinity"]resources:limits:nvidia.com/gpu: 1nvidia.com/gpumem: 1000nvidia.com/gpucores: 10如果看到pod一直pending 状态

检查下节点如果出现下面gpu为0的情况

需要

docker:1:下载NVIDIA-DOCKER2安装包并安装2:修改/etc/docker/daemon.json文件内容加上{"default-runtime": "nvidia","runtimes": {"nvidia": {"path": "/usr/bin/nvidia-container-runtime","runtimeArgs": []}},}k8s:1:下载k8s-device-plugin 镜像2:编写nvidia-device-plugin.yml创建驱动pod使用这个yml进行创建

apiVersion: apps/v1

kind: DaemonSet

metadata:name: nvidia-device-plugin-daemonsetnamespace: kube-system

spec:selector:matchLabels:name: nvidia-device-plugin-dsupdateStrategy:type: RollingUpdatetemplate:metadata:labels:name: nvidia-device-plugin-dsspec:tolerations:- key: nvidia.com/gpuoperator: Existseffect: NoSchedulepriorityClassName: "system-node-critical"containers:- image: nvidia/k8s-device-plugin:1.11name: nvidia-device-plugin-ctrenv:- name: FAIL_ON_INIT_ERRORvalue: "false"securityContext:allowPrivilegeEscalation: falsecapabilities:drop: ["ALL"]volumeMounts:- name: device-pluginmountPath: /var/lib/kubelet/device-pluginsvolumes:- name: device-pluginhostPath:path: /var/lib/kubelet/device-pluginsgpu pod启动后进入查看下, gpu内存和限制的大小相同设置成功

访问下{scheduler node ip}:31993/metrics

日志最后有两行

vGPUPodsDeviceAllocated{containeridx="0",deviceusedcore="40",deviceuuid="GPU-7666e9de-679b-a768-51c6-260b81cd00ec",nodename="192.168.110.126",podname="gpu-pod-1",podnamespace="default",zone="vGPU"} 1.048576e+10

vGPUPodsDeviceAllocated{containeridx="0",deviceusedcore="40",deviceuuid="GPU-7666e9de-679b-a768-51c6-260b81cd00ec",nodename="192.168.110.126",podname="gpu-pod-2",podnamespace="default",zone="vGPU"} 1.048576e+10

可以看到相同deviceuuid的gpu被不同pod共享使用

exec进入hami-device-plugin daemonset里面执行nvidia-smi -L 可以看到机器上所有显卡的信息

root@node126:/# nvidia-smi -L

GPU 0: NVIDIA GeForce RTX 4090 (UUID: GPU-7666e9de-679b-a768-51c6-260b81cd00ec)

GPU 1: NVIDIA GeForce RTX 4090 (UUID: GPU-9f32af29-1a72-6e47-af2c-72b1130a176b)

root@node126:/#

之前创建的两个serviceMonitor会去请求

app.kubernetes.io/component: hami-scheduler 和app.kubernetes.io/component: hami-device-plugin 的/metrics 接口获取数据

当gpu-pod跑起来以后查看hami-vgpu-metrics-dashboard

相关文章:

HAMi + prometheus-k8s + grafana实现vgpu虚拟化监控

最近长沙跑了半个多月,跟甲方客户对了下项目指标,许久没更新 回来后继续研究如何实现 grafana实现HAMi vgpu虚拟化监控,毕竟合同里写了需要体现gpu资源限制和算力共享以及体现算力卡资源共享监控 先说下为啥要用HAMi吧, 一个重要原…...

Java基于SSM框架的在线视频教育系统小程序【附源码、文档】

博主介绍:✌IT徐师兄、7年大厂程序员经历。全网粉丝15W、csdn博客专家、掘金/华为云//InfoQ等平台优质作者、专注于Java技术领域和毕业项目实战✌ 🍅文末获取源码联系🍅 👇🏻 精彩专栏推荐订阅👇dz…...

mysql本地安装和pycharm链接数据库操作

MySQL本地安装和相关操作 Python相关:基础、函数、数据类型、面向、模块。 前端开发:HTML、CSS、JavaScript、jQuery。【静态页面】 Java前端; Python前端; Go前端 -> 【动态页面】直观: 静态,写死了…...

Unity编程与游戏开发-编程与游戏开发的关系

游戏开发是一个复杂的多领域合作过程,涵盖了从创意构思到最终实现的多个方面。在这个过程中,技术、设计与美术三大核心要素相互交织,缺一不可。在游戏开发的过程中,Unity作为一款强大的跨平台游戏引擎,凭借其高效的开发工具和庞大的社区支持,成为了很多游戏开发者的首选工…...

)

2025年第三届“华数杯”国际赛A题解题思路与代码(Python版)

游泳竞技策略优化模型代码详解 第一题:速度优化模型 在这一部分,我们将详细解析如何通过数学建模来优化游泳运动员在不同距离比赛中的速度分配策略。 1. 模型概述 我们的模型主要包含三个核心文件: speed_optimization.py: 速度优化的核…...

针对服务器磁盘爆满,MySql数据库始终无法启动,怎么解决

(点击即可进入聊天助手) 很多站长在运营网站的过程当中都会遇到一个问题,就是网站突然无法打开,数据一直无法启动 无论是强制重启还是,删除网站内的所有应用,数据库一直无法启动 这个时候,就需要常见的运维手段了,需要对服务器后台各个资源,进行逐一排查…...

[Android]service命令的使用

在前面的讨论中,我们说到,如果在客户端懒得使用aidl文件生成的接口类进行binder,可以使用IBinder的transcat方法 Parcel dataParcel = Parcel.obtain(); Parcel resultParcel = Parcel.obtain();dataParcel.writeInterfaceToken(DESCRIPTOR);//发起请求 aProxyBinder.trans…...

【芯片封测学习专栏 -- Substrate | RDL Interposer | Si Interposer | 嵌入式硅桥(EMIB)详细介绍】

请阅读【嵌入式开发学习必备专栏 Cache | MMU | AMBA BUS | CoreSight | Trace32 | CoreLink | ARM GCC | CSH】 文章目录 OverviewSubstrate(衬底或基板)Substrate 定义Substrate 特点与作用Substrate 实例 RDL Interposer(重布线层中介层&a…...

spring cloud注册nacos并从nacos上拉取配置文件,spring cloud不会自动读取bootstrap.yml文件

目录 踩坑问题记录前言版本说明spring cloudb不会自动读取bootstrap.yml文件问题解决spring cloud注册nacos并从nacos上拉取配置文件后话 踩坑问题记录 1、spring cloudb不会自动读取bootstrap.yml文件 2、spring cloud注册nacos并从nacos上拉取配置文件 前言 使用cloud Ali…...

【深度学习地学应用|滑坡制图、变化检测、多目标域适应、感知学习、深度学习】跨域大尺度遥感影像滑坡制图方法:基于原型引导的领域感知渐进表示学习(一)

【深度学习地学应用|滑坡制图、变化检测、多目标域适应、感知学习、深度学习】跨域大尺度遥感影像滑坡制图方法:基于原型引导的领域感知渐进表示学习(一) 【深度学习地学应用|滑坡制图、变化检测、多目标域适应、感知学习、深度学习】跨域大…...

Spring Boot 支持哪些日志框架

Spring Boot 支持多种日志框架,主要包括以下几种: SLF4J (Simple Logging Facade for Java) Logback(默认)Log4j 2Java Util Logging (JUL) 其中,Spring Boot 默认使用 SLF4J 和 Logback 作为日志框架。如果你需要使…...

【翻译】2025年华数杯国际赛数学建模题目+翻译pdf自取

保存至本地网盘 链接:https://pan.quark.cn/s/f82a1fa7ed87 提取码:6UUw 2025年“华数杯”国际大学生数学建模竞赛比赛时间于2025年1月11日(周六)06:00开始,至1月15日(周三)09:00结束ÿ…...

qt 窗口(window/widget)绘制/渲染顺序 QPainter QPaintDevice Qpainter渲染 失效 无效 原因

qt窗体布局 窗体渲染过程 qt中窗体渲染逻辑顺序为 本窗体->子窗体/控件 递归,也就是说先渲染父窗体再渲染子窗体。其中子窗体按加入时的先后顺序进行渲染。通过下方的函数调用堆栈可以看出窗体都是在widget组件源码的widgetprivate::drawwidget中进行渲染的&am…...

TIOBE编程语言排行靠前的编程语言的吉祥物

Python的吉祥物:小蟒蛇 Python语言的吉祥物是一只名叫"Pythonidae"(或简称"Py")的小蟒蛇。这个吉祥物由Tobias Kohn设计于2005年,它的形象借鉴了真实的蟒蛇,但加入了一些可爱和友善的特点。小蟒蛇…...

【前端动效】HTML + CSS 实现打字机效果

目录 1. 效果展示 2. 思路分析 2.1 难点 2.2 实现思路 3. 代码实现 3.1 html部分 3.2 css部分 3.3 完整代码 4. 总结 1. 效果展示 如图所示,这次带来的是一个有趣的“擦除”效果,也可以叫做打字机效果,其中一段文本从左到右逐渐从…...

大疆上云API连接遥控器和无人机

文章目录 1、部署大疆上云API关于如何连接我们自己部署的上云API2、开启无人机和遥控器并连接自己部署的上云API如果遥控器和无人机没有对频的情况下即只有遥控器没有无人机的情况下如果遥控器和无人机已经对频好了的情况下 4、订阅无人机或遥控器的主题信息4.1、订阅无人机实时…...

JS逆向-atob和btoa分析

声明:本文只作学习研究,禁止用于非法用途,否则后果自负,如有侵权,请告知删除,谢谢! 故事是这样的,有位读者朋友需要模拟登录一个网站: aHR0cDovL3d3dy56bGRzai5jb20v 我…...

primitive 编写着色器材质

import { nextTick, onMounted, ref } from vue import * as Cesium from cesium import gsap from gsaponMounted(() > { ... })// 1、创建矩形几何体,Cesium.RectangleGeometry:几何体,Rectangle:矩形 let rectGeometry new…...

计算机视觉算法实战——车道线检测

✨个人主页欢迎您的访问 ✨期待您的三连 ✨ ✨个人主页欢迎您的访问 ✨期待您的三连 ✨ ✨个人主页欢迎您的访问 ✨期待您的三连✨ 车道线检测是计算机视觉领域的一个重要研究方向,尤其在自动驾驶和高级驾驶辅助…...

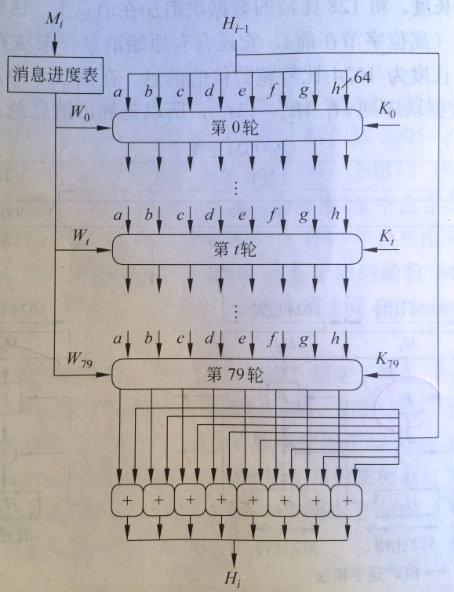

网络安全-安全散列函数,信息摘要SHA-1,MD5原理

安全散列函数 单向散列函数或者安全散列函数之所以重要,不仅在于消息认证(消息摘要。数据指纹)。还有数字签名(加强版的消息认证)和验证数据的完整性。常见的单向散列函数有MD5和SHA 散列函数的要求 散列函数的目的是文件、消息或者其它数据…...

在鸿蒙HarmonyOS 5中实现抖音风格的点赞功能

下面我将详细介绍如何使用HarmonyOS SDK在HarmonyOS 5中实现类似抖音的点赞功能,包括动画效果、数据同步和交互优化。 1. 基础点赞功能实现 1.1 创建数据模型 // VideoModel.ets export class VideoModel {id: string "";title: string ""…...

java调用dll出现unsatisfiedLinkError以及JNA和JNI的区别

UnsatisfiedLinkError 在对接硬件设备中,我们会遇到使用 java 调用 dll文件 的情况,此时大概率出现UnsatisfiedLinkError链接错误,原因可能有如下几种 类名错误包名错误方法名参数错误使用 JNI 协议调用,结果 dll 未实现 JNI 协…...

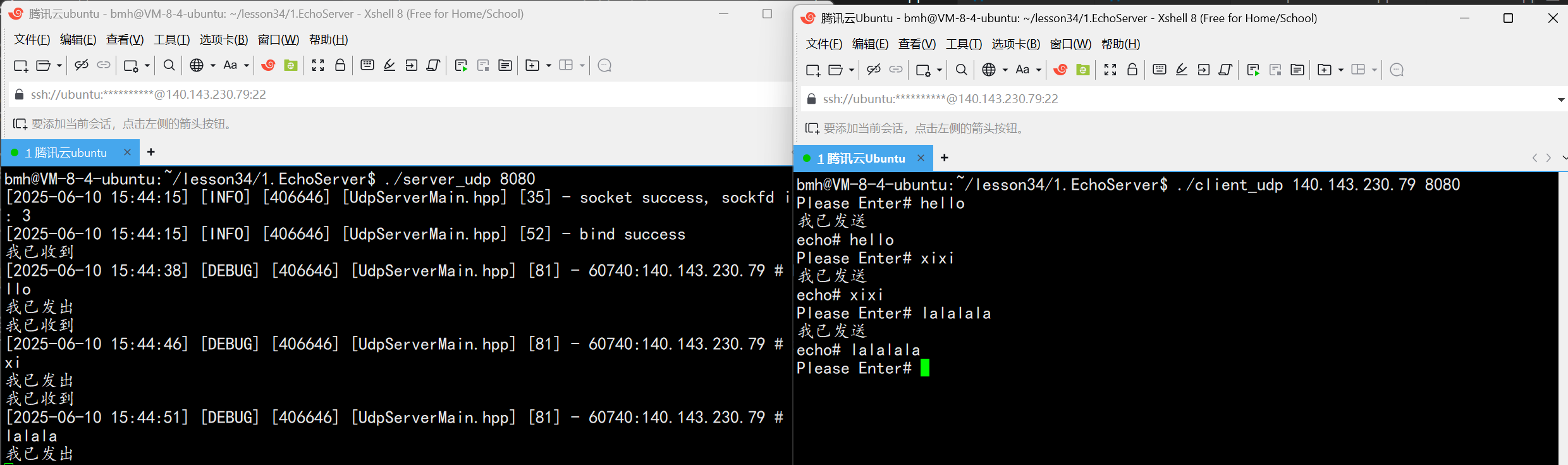

UDP(Echoserver)

网络命令 Ping 命令 检测网络是否连通 使用方法: ping -c 次数 网址ping -c 3 www.baidu.comnetstat 命令 netstat 是一个用来查看网络状态的重要工具. 语法:netstat [选项] 功能:查看网络状态 常用选项: n 拒绝显示别名&#…...

五年级数学知识边界总结思考-下册

目录 一、背景二、过程1.观察物体小学五年级下册“观察物体”知识点详解:由来、作用与意义**一、知识点核心内容****二、知识点的由来:从生活实践到数学抽象****三、知识的作用:解决实际问题的工具****四、学习的意义:培养核心素养…...

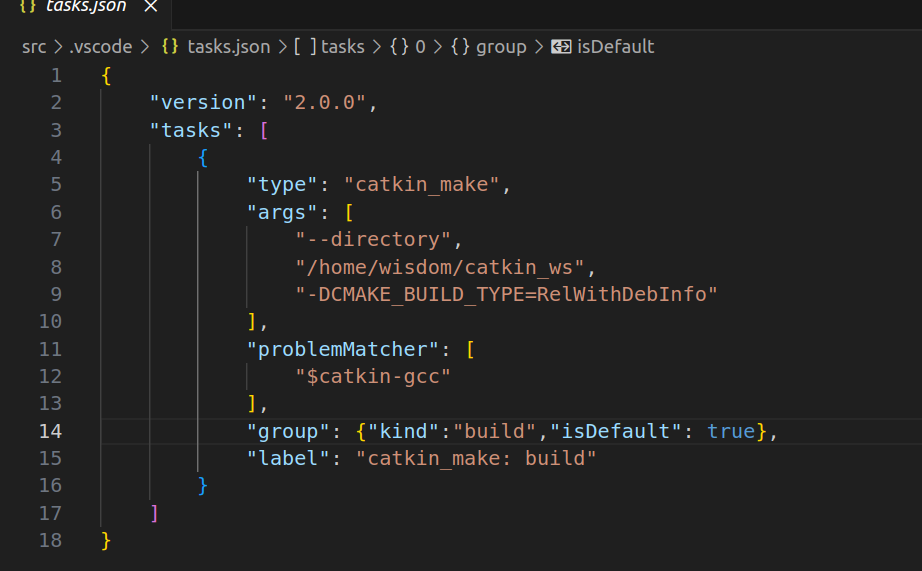

1.3 VSCode安装与环境配置

进入网址Visual Studio Code - Code Editing. Redefined下载.deb文件,然后打开终端,进入下载文件夹,键入命令 sudo dpkg -i code_1.100.3-1748872405_amd64.deb 在终端键入命令code即启动vscode 需要安装插件列表 1.Chinese简化 2.ros …...

反射获取方法和属性

Java反射获取方法 在Java中,反射(Reflection)是一种强大的机制,允许程序在运行时访问和操作类的内部属性和方法。通过反射,可以动态地创建对象、调用方法、改变属性值,这在很多Java框架中如Spring和Hiberna…...

【决胜公务员考试】求职OMG——见面课测验1

2025最新版!!!6.8截至答题,大家注意呀! 博主码字不易点个关注吧,祝期末顺利~~ 1.单选题(2分) 下列说法错误的是:( B ) A.选调生属于公务员系统 B.公务员属于事业编 C.选调生有基层锻炼的要求 D…...

uniapp中使用aixos 报错

问题: 在uniapp中使用aixos,运行后报如下错误: AxiosError: There is no suitable adapter to dispatch the request since : - adapter xhr is not supported by the environment - adapter http is not available in the build 解决方案&…...

多模态大语言模型arxiv论文略读(108)

CROME: Cross-Modal Adapters for Efficient Multimodal LLM ➡️ 论文标题:CROME: Cross-Modal Adapters for Efficient Multimodal LLM ➡️ 论文作者:Sayna Ebrahimi, Sercan O. Arik, Tejas Nama, Tomas Pfister ➡️ 研究机构: Google Cloud AI Re…...

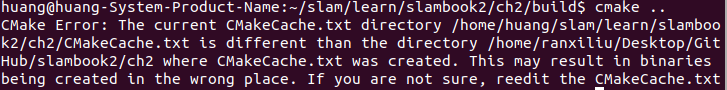

视觉slam十四讲实践部分记录——ch2、ch3

ch2 一、使用g++编译.cpp为可执行文件并运行(P30) g++ helloSLAM.cpp ./a.out运行 二、使用cmake编译 mkdir build cd build cmake .. makeCMakeCache.txt 文件仍然指向旧的目录。这表明在源代码目录中可能还存在旧的 CMakeCache.txt 文件,或者在构建过程中仍然引用了旧的路…...