Deploying Xinference with Docker

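Contents

- Introduction

- Installing with Docker

- Access URL

- Official documentation

- Adding the Xinference container in Dify

- Built-in large language models

- Embedding models

- Image models

- Audio models

- Reranking models

- Video models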

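Introduction

Xorbits Inference (Xinference) is an open-source platform that simplifies running and integrating a wide range of AI models. With Xinference, you can run inference with any open-source LLM, embedding model, or multimodal model, in the cloud or on your own machine, and build powerful AI applications on top of it.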

Installing with Docker

Pull the Xinference image:

docker pull xprobe/xinference

Run the container. Change the host paths to match your own machine:

docker run -d --name xinference --gpus all -v E:/docker/xinference/models:/root/models -v E:/docker/xinference/.xinference:/root/.xinference -v E:/docker/xinference/.cache/huggingface:/root/.cache/huggingface -e XINFERENCE_HOME=/root/models -p 9997:9997 xprobe/xinference:latest xinference-local -H 0.0.0.0

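The options in this command are:

- -d: runs the container in the background.

- --name xinference: names the container xinference.

- --gpus all: gives the container access to every GPU on the host, which compute-heavy work such as model inference generally needs.

- -v E:/docker/xinference/models:/root/models, -v E:/docker/xinference/.xinference:/root/.xinference, -v E:/docker/xinference/.cache/huggingface:/root/.cache/huggingface: mount host directories at specific paths inside the container so that data persists and can be shared with the host. The first mount, for example, maps E:/docker/xinference/models on the host to /root/models in the container.

- -e XINFERENCE_HOME=/root/models: sets the XINFERENCE_HOME environment variable, which tells Xinference where to keep its files such as downloaded models and logs. Here it points to the mounted /root/models directory.

- -p 9997:9997: maps port 9997 on the host to port 9997 in the container, so the service can be reached through the host's port.

- xprobe/xinference:latest: the image and tag to use, here the latest version of the xprobe/xinference image.

- xinference-local -H 0.0.0.0: the command executed when the container starts. It launches a local Xinference server that listens on all network interfaces.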
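The docker run command repeats the same host directory in three mounts, so it is easy to change one path and forget the others. As a minimal sketch, the Python helper below (build_docker_run is a hypothetical name, not part of Xinference or Docker) assembles the same arguments from a single base directory and prints the command for review instead of running it.

```python
import shlex


def build_docker_run(host_base: str, host_port: int = 9997) -> list[str]:
    """Assemble the article's docker run arguments for one host base directory."""
    # (sub-directory under host_base, mount point inside the container),
    # copied from the three -v options in the command.
    mounts = [
        ("models", "/root/models"),
        (".xinference", "/root/.xinference"),
        (".cache/huggingface", "/root/.cache/huggingface"),
    ]
    args = ["docker", "run", "-d", "--name", "xinference", "--gpus", "all"]
    for sub_dir, container_dir in mounts:
        args += ["-v", f"{host_base.rstrip('/')}/{sub_dir}:{container_dir}"]
    args += ["-e", "XINFERENCE_HOME=/root/models"]
    # Only the host side of the port mapping varies; the container listens on 9997.
    args += ["-p", f"{host_port}:9997"]
    args += ["xprobe/xinference:latest", "xinference-local", "-H", "0.0.0.0"]
    return args


# Print the command rather than executing it, so it can be checked first.
print(shlex.join(build_docker_run("E:/docker/xinference")))
```

With the base directory E:/docker/xinference the printed line is the same command as in the article. Passing a different directory changes all three mounts together.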

Access URL

http://127.0.0.1:9997/
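Besides opening the address in a browser, you can check from a script whether the server is up. The sketch below uses only the Python standard library and assumes that the server exposes its OpenAI-compatible model listing at /v1/models and answers in the OpenAI list format ({"data": [{"id": ...}]}). The helper names are hypothetical, and the response shape may differ between Xinference versions.

```python
import json
from urllib.request import urlopen


def models_endpoint(base_url: str) -> str:
    """Return the model-listing URL for a given server address."""
    return base_url.rstrip("/") + "/v1/models"


def extract_model_ids(payload: dict) -> list[str]:
    """Pull model ids out of an OpenAI-style {"data": [{"id": ...}]} payload."""
    # Assumption: the payload follows the OpenAI list format; a missing "data" key yields [].
    return [item["id"] for item in payload.get("data", []) if "id" in item]


def list_running_models(base_url: str, timeout: float = 3.0) -> list[str]:
    """Ask the server which models it is currently serving."""
    with urlopen(models_endpoint(base_url), timeout=timeout) as response:
        return extract_model_ids(json.load(response))


try:
    print("Running models:", list_running_models("http://127.0.0.1:9997/"))
except (OSError, ValueError) as error:
    # Connection errors and timeouts are OSError subclasses; ValueError covers non-JSON replies.
    print("Xinference is not reachable:", error)
```

An empty list simply means the server is running but no model has been launched yet.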

Official documentation

https://inference.readthedocs.io/zh-cn/latest/index.html

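Adding the Xinference container in Dify

If Dify itself runs in Docker, configure the Xinference server URL with the address through which the Dify containers can reach the host: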

http://host.docker.internal:9997
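The host name differs from the browser address because, inside the Dify container, 127.0.0.1 refers to that container itself and not to the machine where port 9997 is published. The sketch below illustrates the rewrite with a hypothetical helper, to_container_url, which is not part of Dify or Xinference.

```python
from urllib.parse import urlsplit, urlunsplit

# Host names that point back at "this machine" and therefore break inside a container.
LOOPBACK_HOSTS = {"127.0.0.1", "localhost"}


def to_container_url(url: str) -> str:
    """Rewrite a loopback URL so that another container can reach the host."""
    parts = urlsplit(url)
    if parts.hostname not in LOOPBACK_HOSTS:
        return url  # already a routable address, leave it untouched
    netloc = "host.docker.internal"
    if parts.port is not None:
        netloc += f":{parts.port}"
    return urlunsplit(parts._replace(netloc=netloc)).rstrip("/")


print(to_container_url("http://127.0.0.1:9997/"))  # → http://host.docker.internal:9997
```

Docker Desktop resolves host.docker.internal to the host automatically. On a plain Linux Docker engine the name typically has to be added to the container explicitly, for example with --add-host=host.docker.internal:host-gateway.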

Built-in large language models

| MODEL NAME | ABILITIES | CONTEXT_LENGTH | DESCRIPTION |
|---|---|---|---|
| aquila2 | generate | 2048 | Aquila2 series models are the base language models |
| aquila2-chat | chat | 2048 | Aquila2-chat series models are the chat models |
| aquila2-chat-16k | chat | 16384 | AquilaChat2-16k series models are the long-text chat models |
| baichuan-2 | generate | 4096 | Baichuan2 is an open-source Transformer based LLM that is trained on both Chinese and English data. |
| baichuan-2-chat | chat | 4096 | Baichuan2-chat is a fine-tuned version of the Baichuan LLM, specializing in chatting. |
| c4ai-command-r-v01 | chat | 131072 | C4AI Command-R(+) is a research release of a 35 and 104 billion parameter highly performant generative model. |
| code-llama | generate | 100000 | Code-Llama is an open-source LLM trained by fine-tuning LLaMA2 for generating and discussing code. |
| code-llama-instruct | chat | 100000 | Code-Llama-Instruct is an instruct-tuned version of the Code-Llama LLM. |
| code-llama-python | generate | 100000 | Code-Llama-Python is a fine-tuned version of the Code-Llama LLM, specializing in Python. |
| codegeex4 | chat | 131072 | The open-source version of the latest CodeGeeX4 model series |
| codeqwen1.5 | generate | 65536 | CodeQwen1.5 is the Code-Specific version of Qwen1.5. It is a transformer-based decoder-only language model pretrained on a large amount of code data. |
| codeqwen1.5-chat | chat | 65536 | CodeQwen1.5 is the Code-Specific version of Qwen1.5. It is a transformer-based decoder-only language model pretrained on a large amount of code data. |
| codeshell | generate | 8194 | CodeShell is a multi-language code LLM developed by the Knowledge Computing Lab of Peking University. |
| codeshell-chat | chat | 8194 | CodeShell is a multi-language code LLM developed by the Knowledge Computing Lab of Peking University. |
| codestral-v0.1 | generate | 32768 | Codestral-22B-v0.1 is trained on a diverse dataset of 80+ programming languages, including the most popular ones, such as Python, Java, C, C++, JavaScript, and Bash |
| cogagent | chat, vision | 4096 | The CogAgent-9B-20241220 model is based on GLM-4V-9B, a bilingual open-source VLM base model. Through data collection and optimization, multi-stage training, and strategy improvements, CogAgent-9B-20241220 achieves significant advancements in GUI perception, inference prediction accuracy, action space completeness, and task generalizability. |
| cogvlm2 | chat, vision | 8192 | CogVLM2 has achieved good results on many leaderboards compared with the previous generation of open-source CogVLM models. Its performance is competitive with some closed-source models. |
| cogvlm2-video-llama3-chat | chat, vision | 8192 | CogVLM2-Video achieves state-of-the-art performance on multiple video question answering tasks. |
| csg-wukong-chat-v0.1 | chat | 32768 | csg-wukong-1B is a 1 billion-parameter small language model (SLM) pretrained on 1T tokens. |
| deepseek | generate | 4096 | DeepSeek LLM, trained from scratch on a vast dataset of 2 trillion tokens in both English and Chinese. |
| deepseek-chat | chat | 4096 | DeepSeek LLM is an advanced language model comprising 67 billion parameters. It has been trained from scratch on a vast dataset of 2 trillion tokens in both English and Chinese. |
| deepseek-coder | generate | 16384 | Deepseek Coder is composed of a series of code language models, each trained from scratch on 2T tokens, with a composition of 87% code and 13% natural language in both English and Chinese. |
| deepseek-coder-instruct | chat | 16384 | deepseek-coder-instruct is a model initialized from deepseek-coder-base and fine-tuned on 2B tokens of instruction data. |
| deepseek-r1 | chat | 163840 | DeepSeek-R1 incorporates cold-start data before RL and achieves performance comparable to OpenAI-o1 across math, code, and reasoning tasks. |
| deepseek-r1-distill-llama | chat | 131072 | deepseek-r1-distill-llama is distilled from DeepSeek-R1 based on Llama |
| deepseek-r1-distill-qwen | chat | 131072 | deepseek-r1-distill-qwen is distilled from DeepSeek-R1 based on Qwen |
| deepseek-v2 | generate | 128000 | DeepSeek-V2, a strong Mixture-of-Experts (MoE) language model characterized by economical training and efficient inference. |
| deepseek-v2-chat | chat | 128000 | DeepSeek-V2, a strong Mixture-of-Experts (MoE) language model characterized by economical training and efficient inference. |
| deepseek-v2-chat-0628 | chat | 128000 | DeepSeek-V2-Chat-0628 is an improved version of DeepSeek-V2-Chat. |
| deepseek-v2.5 | chat | 128000 | DeepSeek-V2.5 is an upgraded version that combines DeepSeek-V2-Chat and DeepSeek-Coder-V2-Instruct. The new model integrates the general and coding abilities of the two previous versions. |
| deepseek-v3 | chat | 163840 | DeepSeek-V3, a strong Mixture-of-Experts (MoE) language model with 671B total parameters with 37B activated for each token. |
| deepseek-vl-chat | chat, vision | 4096 | DeepSeek-VL possesses general multimodal understanding capabilities, capable of processing logical diagrams, web pages, formula recognition, scientific literature, natural images, and embodied intelligence in complex scenarios. |
| gemma-2-it | chat | 8192 | Gemma is a family of lightweight, state-of-the-art open models from Google, built from the same research and technology used to create the Gemini models. |
| gemma-it | chat | 8192 | Gemma is a family of lightweight, state-of-the-art open models from Google, built from the same research and technology used to create the Gemini models. |
| glm-4v | chat, vision | 8192 | GLM4 is the open source version of the latest generation of pre-trained models in the GLM-4 series launched by Zhipu AI. |
| glm-edge-chat | chat | 8192 | The GLM-Edge series targets real-world on-device scenarios and consists of two sizes each of large-language dialogue models and multimodal comprehension models (GLM-Edge-1.5B-Chat, GLM-Edge-4B-Chat, GLM-Edge-V-2B, GLM-Edge-V-5B). The 1.5B / 2B models are mainly for platforms such as mobile phones and cars, and the 4B / 5B models are mainly for platforms such as PCs. |
| glm-edge-v | chat, vision | 8192 | The GLM-Edge series targets real-world on-device scenarios and consists of two sizes each of large-language dialogue models and multimodal comprehension models (GLM-Edge-1.5B-Chat, GLM-Edge-4B-Chat, GLM-Edge-V-2B, GLM-Edge-V-5B). The 1.5B / 2B models are mainly for platforms such as mobile phones and cars, and the 4B / 5B models are mainly for platforms such as PCs. |
| glm4-chat | chat, tools | 131072 | GLM4 is the open source version of the latest generation of pre-trained models in the GLM-4 series launched by Zhipu AI. |
| glm4-chat-1m | chat, tools | 1048576 | GLM4 is the open source version of the latest generation of pre-trained models in the GLM-4 series launched by Zhipu AI. |
| gorilla-openfunctions-v2 | chat | 4096 | OpenFunctions is designed to extend the Large Language Model (LLM) Chat Completion feature to formulate executable API calls given natural language instructions and API context. |
| gpt-2 | generate | 1024 | GPT-2 is a Transformer-based LLM that is trained on WebText, a 40 GB dataset of Reddit posts with 3+ upvotes. |
| internlm2-chat | chat | 32768 | The second generation of the InternLM model, InternLM2. |
| internlm2.5-chat | chat | 32768 | InternLM2.5 series of the InternLM model. |
| internlm2.5-chat-1m | chat | 262144 | InternLM2.5 series of the InternLM model supports 1M long-context |
| internlm3-instruct | chat, tools | 32768 | InternLM3 has open-sourced an 8-billion parameter instruction model, InternLM3-8B-Instruct, designed for general-purpose usage and advanced reasoning. |
| internvl-chat | chat, vision | 32768 | InternVL 1.5 is an open-source multimodal large language model (MLLM) to bridge the capability gap between open-source and proprietary commercial models in multimodal understanding. |
| internvl2 | chat, vision | 32768 | InternVL 2 is an open-source multimodal large language model (MLLM) to bridge the capability gap between open-source and proprietary commercial models in multimodal understanding. |
| llama-2 | generate | 4096 | Llama-2 is the second generation of Llama, open-source and trained on a larger amount of data. |
| llama-2-chat | chat | 4096 | Llama-2-Chat is a fine-tuned version of the Llama-2 LLM, specializing in chatting. |
| llama-3 | generate | 8192 | Llama 3 is an auto-regressive language model that uses an optimized transformer architecture |
| llama-3-instruct | chat | 8192 | The Llama 3 instruction tuned models are optimized for dialogue use cases and outperform many of the available open source chat models on common industry benchmarks. |
| llama-3.1 | generate | 131072 | Llama 3.1 is an auto-regressive language model that uses an optimized transformer architecture |
| llama-3.1-instruct | chat, tools | 131072 | The Llama 3.1 instruction tuned models are optimized for dialogue use cases and outperform many of the available open source chat models on common industry benchmarks. |
| llama-3.2-vision | generate, vision | 131072 | The Llama 3.2-Vision instruction-tuned models are optimized for visual recognition, image reasoning, captioning, and answering general questions about an image… |
| llama-3.2-vision-instruct | chat, vision | 131072 | Llama 3.2-Vision instruction-tuned models are optimized for visual recognition, image reasoning, captioning, and answering general questions about an image… |
| llama-3.3-instruct | chat, tools | 131072 | The Llama 3.3 instruction tuned models are optimized for dialogue use cases and outperform many of the available open source chat models on common industry benchmarks. |
| marco-o1 | chat, tools | 32768 | Marco-o1: Towards Open Reasoning Models for Open-Ended Solutions |
| minicpm-2b-dpo-bf16 | chat | 4096 | MiniCPM is an end-side LLM developed by ModelBest Inc. and TsinghuaNLP, with only 2.4B parameters excluding embeddings. |
| minicpm-2b-dpo-fp16 | chat | 4096 | MiniCPM is an end-side LLM developed by ModelBest Inc. and TsinghuaNLP, with only 2.4B parameters excluding embeddings. |
| minicpm-2b-dpo-fp32 | chat | 4096 | MiniCPM is an end-side LLM developed by ModelBest Inc. and TsinghuaNLP, with only 2.4B parameters excluding embeddings. |
| minicpm-2b-sft-bf16 | chat | 4096 | MiniCPM is an end-side LLM developed by ModelBest Inc. and TsinghuaNLP, with only 2.4B parameters excluding embeddings. |
| minicpm-2b-sft-fp32 | chat | 4096 | MiniCPM is an end-side LLM developed by ModelBest Inc. and TsinghuaNLP, with only 2.4B parameters excluding embeddings. |
| minicpm-llama3-v-2_5 | chat, vision | 8192 | MiniCPM-Llama3-V 2.5 is the latest model in the MiniCPM-V series. The model is built on SigLip-400M and Llama3-8B-Instruct with a total of 8B parameters. |
| minicpm-v-2.6 | chat, vision | 32768 | MiniCPM-V 2.6 is the latest model in the MiniCPM-V series. The model is built on SigLip-400M and Qwen2-7B with a total of 8B parameters. |
| minicpm3-4b | chat | 32768 | MiniCPM3-4B is the 3rd generation of MiniCPM series. The overall performance of MiniCPM3-4B surpasses Phi-3.5-mini-Instruct and GPT-3.5-Turbo-0125, being comparable with many recent 7B~9B models. |
| mistral-instruct-v0.1 | chat | 8192 | Mistral-7B-Instruct is a fine-tuned version of the Mistral-7B LLM on public datasets, specializing in chatting. |
| mistral-instruct-v0.2 | chat | 8192 | The Mistral-7B-Instruct-v0.2 Large Language Model (LLM) is an improved instruct fine-tuned version of Mistral-7B-Instruct-v0.1. |
| mistral-instruct-v0.3 | chat | 32768 | The Mistral-7B-Instruct-v0.2 Large Language Model (LLM) is an improved instruct fine-tuned version of Mistral-7B-Instruct-v0.1. |
| mistral-large-instruct | chat | 131072 | Mistral-Large-Instruct-2407 is an advanced dense Large Language Model (LLM) of 123B parameters with state-of-the-art reasoning, knowledge and coding capabilities. |
| mistral-nemo-instruct | chat | 1024000 | The Mistral-Nemo-Instruct-2407 Large Language Model (LLM) is an instruct fine-tuned version of the Mistral-Nemo-Base-2407 |
| mistral-v0.1 | generate | 8192 | Mistral-7B is an unmoderated Transformer based LLM claiming to outperform Llama2 on all benchmarks. |
| mixtral-8x22b-instruct-v0.1 | chat | 65536 | The Mixtral-8x22B-Instruct-v0.1 Large Language Model (LLM) is an instruct fine-tuned version of the Mixtral-8x22B-v0.1, specializing in chatting. |
| mixtral-instruct-v0.1 | chat | 32768 | Mixtral-8x7B-Instruct is a fine-tuned version of the Mixtral-8x7B LLM, specializing in chatting. |
| mixtral-v0.1 | generate | 32768 | The Mixtral-8x7B Large Language Model (LLM) is a pretrained generative Sparse Mixture of Experts. |
| omnilmm | chat, vision | 2048 | OmniLMM is a family of open-source large multimodal models (LMMs) adept at vision & language modeling. |
| openhermes-2.5 | chat | 8192 | Openhermes 2.5 is a fine-tuned version of Mistral-7B-v0.1 on primarily GPT-4 generated data. |
| opt | generate | 2048 | Opt is an open-source, decoder-only, Transformer based LLM that was designed to replicate GPT-3. |
| orion-chat | chat | 4096 | Orion-14B series models are open-source multilingual large language models trained from scratch by OrionStarAI. |
| orion-chat-rag | chat | 4096 | Orion-14B series models are open-source multilingual large language models trained from scratch by OrionStarAI. |
| phi-2 | generate | 2048 | Phi-2 is a 2.7B Transformer based LLM used for research on model safety, trained with data similar to Phi-1.5 but augmented with synthetic texts and curated websites. |
| phi-3-mini-128k-instruct | chat | 128000 | The Phi-3-Mini-128K-Instruct is a 3.8 billion-parameter, lightweight, state-of-the-art open model trained using the Phi-3 datasets. |
| phi-3-mini-4k-instruct | chat | 4096 | The Phi-3-Mini-4k-Instruct is a 3.8 billion-parameter, lightweight, state-of-the-art open model trained using the Phi-3 datasets. |
| platypus2-70b-instruct | generate | 4096 | Platypus-70B-instruct is a merge of garage-bAInd/Platypus2-70B and upstage/Llama-2-70b-instruct-v2. |
| qvq-72b-preview | chat, vision | 32768 | QVQ-72B-Preview is an experimental research model developed by the Qwen team, focusing on enhancing visual reasoning capabilities. |
| qwen-chat | chat | 32768 | Qwen-chat is a fine-tuned version of the Qwen LLM trained with alignment techniques, specializing in chatting. |
| qwen-vl-chat | chat, vision | 4096 | Qwen-VL-Chat supports more flexible interaction, such as multiple image inputs, multi-round question answering, and creative capabilities. |
| qwen1.5-chat | chat, tools | 32768 | Qwen1.5 is the beta version of Qwen2, a transformer-based decoder-only language model pretrained on a large amount of data. |
| qwen1.5-moe-chat | chat, tools | 32768 | Qwen1.5-MoE is a transformer-based MoE decoder-only language model pretrained on a large amount of data. |
| qwen2-audio | generate, audio | 32768 | Qwen2-Audio: A large-scale audio-language model which is capable of accepting various audio signal inputs and performing audio analysis or direct textual responses with regard to speech instructions. |
| qwen2-audio-instruct | chat, audio | 32768 | Qwen2-Audio: A large-scale audio-language model which is capable of accepting various audio signal inputs and performing audio analysis or direct textual responses with regard to speech instructions. |
| qwen2-instruct | chat, tools | 32768 | Qwen2 is the new series of Qwen large language models. |
| qwen2-moe-instruct | chat, tools | 32768 | Qwen2 is the new series of Qwen large language models. |
| qwen2-vl-instruct | chat, vision | 32768 | Qwen2-VL: To See the World More Clearly. Qwen2-VL is the latest version of the vision language models in the Qwen model families. |
| qwen2.5 | generate | 32768 | Qwen2.5 is the latest series of Qwen large language models. For Qwen2.5, we release a number of base language models and instruction-tuned language models ranging from 0.5 to 72 billion parameters. |
| qwen2.5-coder | generate | 32768 | Qwen2.5-Coder is the latest series of Code-Specific Qwen large language models (formerly known as CodeQwen). |
| qwen2.5-coder-instruct | chat, tools | 32768 | Qwen2.5-Coder is the latest series of Code-Specific Qwen large language models (formerly known as CodeQwen). |
| qwen2.5-instruct | chat, tools | 32768 | Qwen2.5 is the latest series of Qwen large language models. For Qwen2.5, we release a number of base language models and instruction-tuned language models ranging from 0.5 to 72 billion parameters. |
| qwen2.5-vl-instruct | chat, vision | 128000 | Qwen2.5-VL is the latest version of the vision language models in the Qwen model families. |
| qwq-32b-preview | chat | 32768 | QwQ-32B-Preview is an experimental research model developed by the Qwen Team, focused on advancing AI reasoning capabilities. |
| seallm_v2 | generate | 8192 | We introduce SeaLLM-7B-v2, the state-of-the-art multilingual LLM for Southeast Asian (SEA) languages |
| seallm_v2.5 | generate | 8192 | We introduce SeaLLM-7B-v2.5, the state-of-the-art multilingual LLM for Southeast Asian (SEA) languages |
| skywork | generate | 4096 | Skywork is a series of large models developed by the Kunlun Group · Skywork team. |
| skywork-math | generate | 4096 | Skywork is a series of large models developed by the Kunlun Group · Skywork team. |
| starling-lm | chat | 4096 | We introduce Starling-7B, an open large language model (LLM) trained by Reinforcement Learning from AI Feedback (RLAIF). The model harnesses the power of our new GPT-4 labeled ranking dataset |
| telechat | chat | 8192 | The TeleChat is a large language model developed and trained by China Telecom Artificial Intelligence Technology Co., LTD. The 7B model base is trained with 1.5 trillion Tokens and 3 trillion Tokens and Chinese high-quality corpus. |
| tiny-llama | generate | 2048 | The TinyLlama project aims to pretrain a 1.1B Llama model on 3 trillion tokens. |
| wizardcoder-python-v1.0 | chat | 100000 | |
| wizardmath-v1.0 | chat | 2048 | WizardMath is an open-source LLM trained by fine-tuning Llama2 with Evol-Instruct, specializing in math. |
| xverse | generate | 2048 | XVERSE is a multilingual large language model, independently developed by Shenzhen Yuanxiang Technology. |
| xverse-chat | chat | 2048 | XVERSE-Chat is the aligned version of model XVERSE. |
| yi | generate | 4096 | The Yi series models are large language models trained from scratch by developers at 01.AI. |
| yi-1.5 | generate | 4096 | Yi-1.5 is an upgraded version of Yi. It is continuously pre-trained on Yi with a high-quality corpus of 500B tokens and fine-tuned on 3M diverse fine-tuning samples. |
| yi-1.5-chat | chat | 4096 | Yi-1.5 is an upgraded version of Yi. It is continuously pre-trained on Yi with a high-quality corpus of 500B tokens and fine-tuned on 3M diverse fine-tuning samples. |
| yi-1.5-chat-16k | chat | 16384 | Yi-1.5 is an upgraded version of Yi. It is continuously pre-trained on Yi with a high-quality corpus of 500B tokens and fine-tuned on 3M diverse fine-tuning samples. |
| yi-200k | generate | 262144 | The Yi series models are large language models trained from scratch by developers at 01.AI. |
| yi-chat | chat | 4096 | The Yi series models are large language models trained from scratch by developers at 01.AI. |
| yi-coder | generate | 131072 | Yi-Coder is a series of open-source code language models that delivers state-of-the-art coding performance with fewer than 10 billion parameters. Excelling in long-context understanding with a maximum context length of 128K tokens. Supporting 52 major programming languages, including popular ones such as Java, Python, JavaScript, and C++. |
| yi-coder-chat | chat | 131072 | Yi-Coder is a series of open-source code language models that delivers state-of-the-art coding performance with fewer than 10 billion parameters. Excelling in long-context understanding with a maximum context length of 128K tokens. Supporting 52 major programming languages, including popular ones such as Java, Python, JavaScript, and C++. |
| yi-vl-chat | chat, vision | 4096 | Yi Vision Language (Yi-VL) model is the open-source, multimodal version of the Yi Large Language Model (LLM) series, enabling content comprehension, recognition, and multi-round conversations about images. |

Embedding models

- bce-embedding-base_v1

- bge-base-en

- bge-base-en-v1.5

- bge-base-zh

- bge-base-zh-v1.5

- bge-large-en

- bge-large-en-v1.5

- bge-large-zh

- bge-large-zh-noinstruct

- bge-large-zh-v1.5

- bge-m3

- bge-small-en-v1.5

- bge-small-zh

- bge-small-zh-v1.5

- e5-large-v2

- gte-base

- gte-large

- gte-Qwen2

- jina-clip-v2

- jina-embeddings-v2-base-en

- jina-embeddings-v2-base-zh

- jina-embeddings-v2-small-en

- jina-embeddings-v3

- m3e-base

- m3e-large

- m3e-small

- multilingual-e5-large

- text2vec-base-chinese

- text2vec-base-chinese-paraphrase

- text2vec-base-chinese-sentence

- text2vec-base-multilingual

- text2vec-large-chinese

Image models

- FLUX.1-dev

- FLUX.1-schnell

- GOT-OCR2_0

- HunyuanDiT-v1.2

- HunyuanDiT-v1.2-Distilled

- kolors

- sd-turbo

- sd3-medium

- sd3.5-large

- sd3.5-large-turbo

- sd3.5-medium

- sdxl-turbo

- stable-diffusion-2-inpainting

- stable-diffusion-inpainting

- stable-diffusion-v1.5

- stable-diffusion-xl-base-1.0

- stable-diffusion-xl-inpainting

Audio models

The audio models built into Xinference are:

- Belle-distilwhisper-large-v2-zh

- Belle-whisper-large-v2-zh

- Belle-whisper-large-v3-zh

- ChatTTS

- CosyVoice-300M

- CosyVoice-300M-Instruct

- CosyVoice-300M-SFT

- CosyVoice2-0.5B

- F5-TTS

- F5-TTS-MLX

- FishSpeech-1.5

- Kokoro-82M

- MeloTTS-Chinese

- MeloTTS-English

- MeloTTS-English-v2

- MeloTTS-English-v3

- MeloTTS-French

- MeloTTS-Japanese

- MeloTTS-Korean

- MeloTTS-Spanish

- SenseVoiceSmall

- whisper-base

- whisper-base-mlx

- whisper-base.en

- whisper-base.en-mlx

- whisper-large-v3

- whisper-large-v3-mlx

- whisper-large-v3-turbo

- whisper-large-v3-turbo-mlx

- whisper-medium

- whisper-medium-mlx

- whisper-medium.en

- whisper-medium.en-mlx

- whisper-small

- whisper-small-mlx

- whisper-small.en

- whisper-small.en-mlx

- whisper-tiny

- whisper-tiny-mlx

- whisper-tiny.en

- whisper-tiny.en-mlx

Reranking models

The reranking models built into Xinference are:

- bce-reranker-base_v1

- bge-reranker-base

- bge-reranker-large

- bge-reranker-v2-gemma

- bge-reranker-v2-m3

- bge-reranker-v2-minicpm-layerwise

- jina-reranker-v2

- minicpm-reranker

Video models

The video models built into Xinference are:

- CogVideoX-2b

- CogVideoX-5b

- HunyuanVideo

相关文章:

xinference docker 部署方式

文章目录 简绍docker 安装方式访问地址对应官网在 dify 中 添加 xinference 容器内置大语言模型嵌入模型图像模型音频模型重排序模型视频模型 简绍 Xorbits Inference (Xinference) 是一个开源平台,用于简化各种 AI 模型的运行和集成。借助 Xinference,…...

基于Kubernetes部署MySQL主从集群

以下是一个基于Kubernetes部署MySQL主从集群的详细YAML示例,包含StatefulSet、Service、ConfigMap和Secret等关键配置。MySQL主从集群需要至少1个主节点和多个从节点,这里使用 StatefulSet 初始化脚本 实现主从自动配置。 1. 创建 Namespace (可选) ap…...

【Azure 架构师学习笔记】- Azure Databricks (17) --Delta Live Table和Delta Table

本文属于【Azure 架构师学习笔记】系列。 本文属于【Azure Databricks】系列。 接上文 【Azure 架构师学习笔记】- Azure Databricks (16) – Delta Lake 和 ADLS整合 前言 前面介绍了Delta Table,但是Databricks又推出了“Delta Live Tables(DLTs&…...

Mybatis Generator 使用手册

第一章 什么是Mybatis Generator? MyBatis Generator Core – Introduction to MyBatis Generator MyBatis生成器(MBG)是MyBatis框架的代码生成工具。它支持为所有版本的MyBatis生成代码,通过解析数据库表(或多个表&…...

快乐数 力扣202

一、题目 编写一个算法来判断一个数 n 是不是快乐数。 「快乐数」 定义为: 对于一个正整数,每一次将该数替换为它每个位置上的数字的平方和。然后重复这个过程直到这个数变为 1,也可能是 无限循环 但始终变不到 1。如果这个过程 结果为 1&…...

SPA单页面应用优化SEO

1.SSR服务端渲染 将组件或页面通过服务器生成html,再返回给浏览器,如nuxt.js或vue-server-renderer const Vue require(vue); const server require(express)(); const renderer require(vue-server-renderer).createRenderer();const vueApp new …...

城市霓虹灯夜景拍照后期Lr调色教程,手机滤镜PS+Lightroom预设下载!

调色教程 在城市霓虹灯夜景拍摄中,由于现场光线复杂等因素,照片可能无法完全呈现出当时的视觉感受。通过 Lr 调色,可以弥补拍摄时的不足。例如,运用基本调整面板中的曝光、对比度、阴影等工具,可以处理出画面的整体明暗…...

通领科技冲刺北交所

高质量增长奔赴产业新征程 日前,通领科技已正式启动在北交所的 IPO 进程,期望借助资本市场的力量,加速技术升级,推动全球化战略布局。这一举措不仅展现了中国汽车零部件企业的强大实力,也预示着行业转型升级的新突破。…...

隐私保护在 Facebook 用户身份验证中的应用

在这个数字化的时代,个人隐私保护成为了公众关注的焦点。社交媒体巨头 Facebook 作为全球最大的社交平台之一,拥有数十亿用户,其在用户身份验证过程中对隐私保护的重视程度直接影响着用户的安全感和信任度。本文将探讨 Facebook 在用户身份验…...

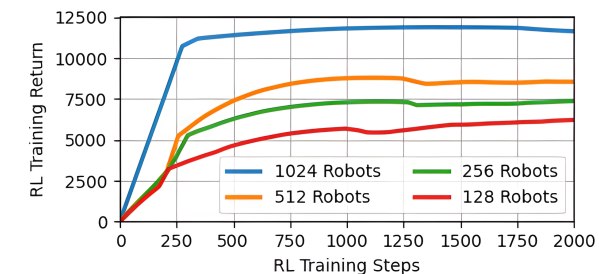

深度学习/强化学习调参技巧

深度调优策略 1. 学习率调整 技巧:学习率是最重要的超参数之一。过大可能导致训练不稳定,过小则收敛速度慢。可以使用学习率衰减(Learning Rate Decay)或自适应学习率方法(如Adam、RMSprop)来动态调整学习…...

python面试常见题目

1、python 有几种数据类型 数字:整形 (int),浮点型 (float)布尔 ( bool):false true字符串 (string)列表 (list)元组 (tuple)字典 &…...

echarts折线图设置背景颜色:X轴和Y轴组成部分背景色

echarts折线图设置背景颜色 关键代码 splitArea: {show: true,areaStyle: {color: [#F2F2F2],},},完整代码位置显示 yAxis: {type: value,boundaryGap: [0, 100%],max: 1,interval: 1,// 于设置y轴的字体axisLabel: {show: false, //这里的show用于设置是否显示y轴下的字体 默…...

文本处理Bert面试内容整理-BERT的应用场景有哪些?

BERT(Bidirectional Encoder Representations from Transformers)在多个自然语言处理(NLP)任务中表现出了强大的能力。由于其能够捕捉双向上下文信息和强大的迁移学习能力,BERT广泛应用于各种NLP场景。以下是BERT的一些典型应用场景: 1. 文本分类 文本分类任务旨在将文本…...

【愚公系列】《Python网络爬虫从入门到精通》045-Charles的SSL证书的安装

标题详情作者简介愚公搬代码头衔华为云特约编辑,华为云云享专家,华为开发者专家,华为产品云测专家,CSDN博客专家,CSDN商业化专家,阿里云专家博主,阿里云签约作者,腾讯云优秀博主&…...

manus对比ChatGPT-Deep reaserch进行研究类学术相关数据分析!谁更胜一筹?

没有账号,只能挑选一个案例 一夜之间被这个用全英文介绍全华班出品的新爆款国产AI产品的小胖刷频。白天还没有切换语言的选项,晚上就加上了。简单看了看团队够成,使用很长实践的Monica创始人也在其中。逐渐可以理解,重心放在海外产…...

20250307确认荣品PRO-RK3566开发板在Android13下的以太网络共享功能

20250307确认荣品PRO-RK3566开发板在Android13下的以太网络共享功能 2025/3/7 13:56 缘起:我司地面站需要实现“太网络共享功能”功能。电脑PC要像连接WIFI热点一样连接在Android设备/平板电脑上来实现上网功能/数据传输。 Android设备/平板电脑通过4G/WIFI来上网。…...

Unity Job系统详解原理和基础应用处理大量物体位置

概述 该脚本使用 Unity Job System 和 Burst Compiler 高效管理大量剑对象的位移计算与坐标更新。通过双缓冲技术实现无锁并行计算,适用于需要高性能批量处理Transform的场景。 核心类 SwordManager 成员变量 变量名类型说明swordPrefabGameObject剑对象预制体_d…...

高效编程指南:PyCharm与DeepSeek的完美结合

DeepSeek接入Pycharm 前几天DeepSeek的充值窗口又悄悄的开放了,这也就意味着我们又可以丝滑的使用DeepSeek的API进行各种辅助性工作了。本文我们来聊聊如何在代码编辑器中使用DeepSeek自动生成代码。 注:本文适用于所有的JetBrains开发工具,…...

Facebook 的隐私保护数据存储方案研究

Facebook 的隐私保护数据存储方案研究 在这个信息爆炸的时代,数据隐私保护已成为公众关注的热点。Facebook,作为全球最大的社交媒体平台之一,承载着海量用户数据,其隐私保护措施和数据存储方案对于维护用户隐私至关重要。本文将深…...

c#面试题整理

1.如何保持数据库的完整性,一致性 最好的方法:数据库约束(check,unique,主键,外键,默认,非空) 其次是:用触发器 最后:才是自己些业务逻辑,这个效率低 2.事…...

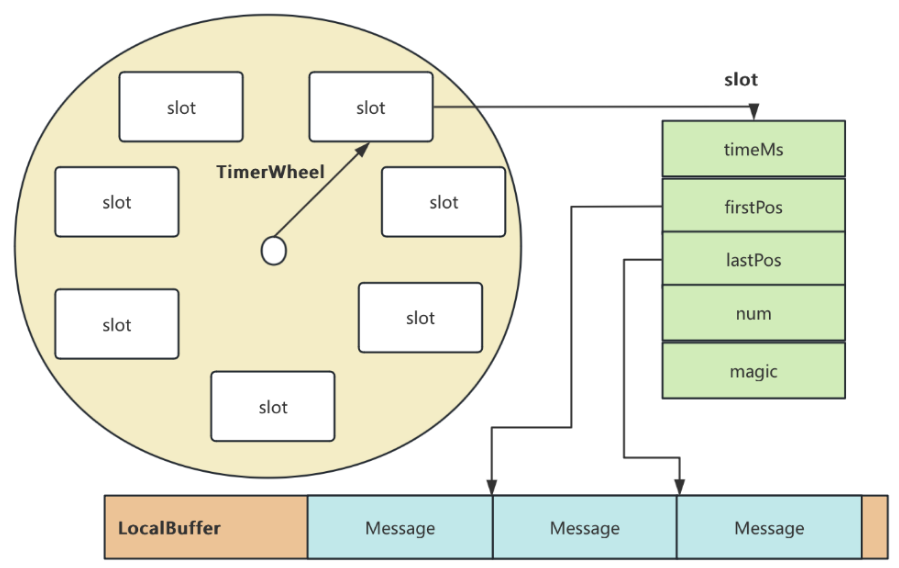

RocketMQ延迟消息机制

两种延迟消息 RocketMQ中提供了两种延迟消息机制 指定固定的延迟级别 通过在Message中设定一个MessageDelayLevel参数,对应18个预设的延迟级别指定时间点的延迟级别 通过在Message中设定一个DeliverTimeMS指定一个Long类型表示的具体时间点。到了时间点后…...

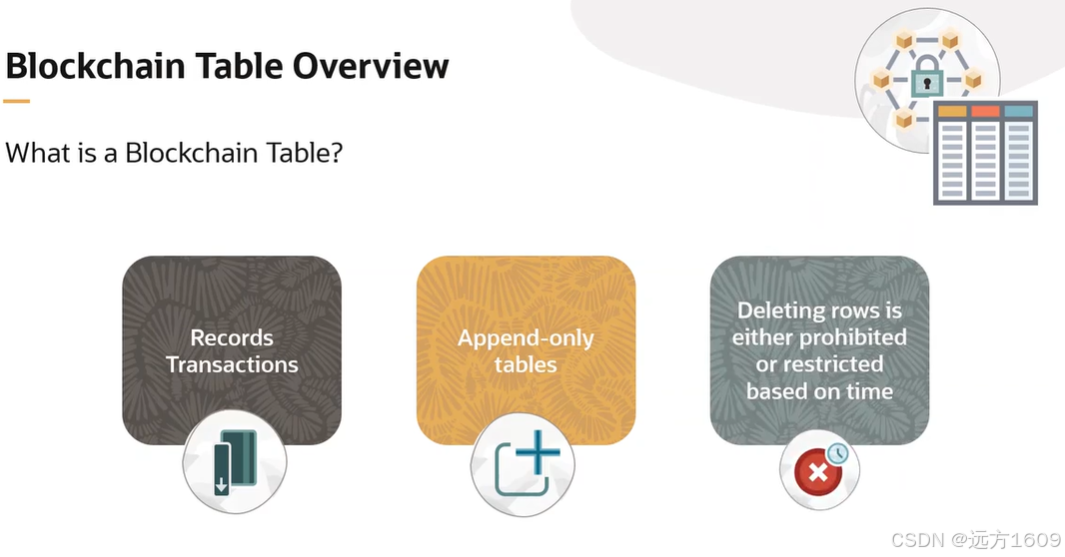

23-Oracle 23 ai 区块链表(Blockchain Table)

小伙伴有没有在金融强合规的领域中遇见,必须要保持数据不可变,管理员都无法修改和留痕的要求。比如医疗的电子病历中,影像检查检验结果不可篡改行的,药品追溯过程中数据只可插入无法删除的特性需求;登录日志、修改日志…...

LLM基础1_语言模型如何处理文本

基于GitHub项目:https://github.com/datawhalechina/llms-from-scratch-cn 工具介绍 tiktoken:OpenAI开发的专业"分词器" torch:Facebook开发的强力计算引擎,相当于超级计算器 理解词嵌入:给词语画"…...

Reasoning over Uncertain Text by Generative Large Language Models

https://ojs.aaai.org/index.php/AAAI/article/view/34674/36829https://ojs.aaai.org/index.php/AAAI/article/view/34674/36829 1. 概述 文本中的不确定性在许多语境中传达,从日常对话到特定领域的文档(例如医学文档)(Heritage 2013;Landmark、Gulbrandsen 和 Svenevei…...

【VLNs篇】07:NavRL—在动态环境中学习安全飞行

项目内容论文标题NavRL: 在动态环境中学习安全飞行 (NavRL: Learning Safe Flight in Dynamic Environments)核心问题解决无人机在包含静态和动态障碍物的复杂环境中进行安全、高效自主导航的挑战,克服传统方法和现有强化学习方法的局限性。核心算法基于近端策略优化…...

C#中的CLR属性、依赖属性与附加属性

CLR属性的主要特征 封装性: 隐藏字段的实现细节 提供对字段的受控访问 访问控制: 可单独设置get/set访问器的可见性 可创建只读或只写属性 计算属性: 可以在getter中执行计算逻辑 不需要直接对应一个字段 验证逻辑: 可以…...

【C++特殊工具与技术】优化内存分配(一):C++中的内存分配

目录 一、C 内存的基本概念 1.1 内存的物理与逻辑结构 1.2 C 程序的内存区域划分 二、栈内存分配 2.1 栈内存的特点 2.2 栈内存分配示例 三、堆内存分配 3.1 new和delete操作符 4.2 内存泄漏与悬空指针问题 4.3 new和delete的重载 四、智能指针…...

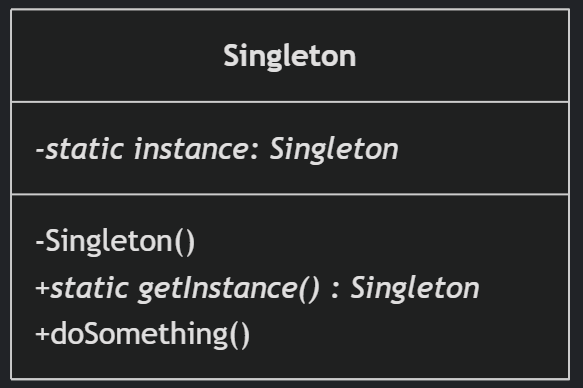

(一)单例模式

一、前言 单例模式属于六大创建型模式,即在软件设计过程中,主要关注创建对象的结果,并不关心创建对象的过程及细节。创建型设计模式将类对象的实例化过程进行抽象化接口设计,从而隐藏了类对象的实例是如何被创建的,封装了软件系统使用的具体对象类型。 六大创建型模式包括…...

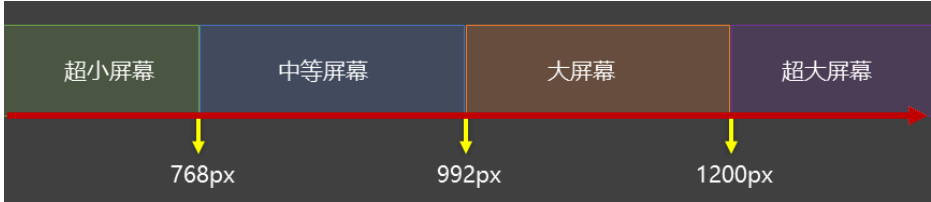

CSS3相关知识点

CSS3相关知识点 CSS3私有前缀私有前缀私有前缀存在的意义常见浏览器的私有前缀 CSS3基本语法CSS3 新增长度单位CSS3 新增颜色设置方式CSS3 新增选择器CSS3 新增盒模型相关属性box-sizing 怪异盒模型resize调整盒子大小box-shadow 盒子阴影opacity 不透明度 CSS3 新增背景属性ba…...

Java后端检查空条件查询

通过抛出运行异常:throw new RuntimeException("请输入查询条件!");BranchWarehouseServiceImpl.java // 查询试剂交易(入库/出库)记录Overridepublic List<BranchWarehouseTransactions> queryForReagent(Branch…...