YOLOv5源码解读1.7-网络架构common.py

往期回顾:YOLOv5源码解读1.0-目录_汉卿HanQ的博客-CSDN博客

学习了yolo.py网络模型后,今天学习common.py,common.py存放这YOLOv5网络搭建的通用模块,如果修改某一块,就要修改这个文件中对应的模块

目录

1.导入python包

2.加载自定义模块

3.填充padautopad

4.Conv

5.深度可分离卷积DWConv

6.注意力层TransformerLayer

7.注意力模块TransformerBlock

8.瓶颈层Bottleneck

9.CSP瓶颈层BottleneckCSP

10.简化的CSP瓶颈层C3

11.自注意力模块的C3TR

12.SPP的C3SPP

13.GhostBottleneck

14.空间金字塔池化模块SPP

15.快速版SPPF

16.Focus

17.幻象卷积GhostConv

18.幻象模块的瓶颈层

19.收缩模块Contract

20.扩张模块Expand

21.拼接模块Concat

22.后端推理DetectMultiBackend

23.模型扩展模块AutoShape

24.推理模块Detections

25.二级分类模块Classify

26.整体代码

1.导入python包

# ----------------------------------1.导入python包----------------------------------import json # 用于json和Python数据之间的相互转换

import math # 数学函数模块

import platform # 获取操作系统的信息

import warnings # 警告程序员关于语言或库功能的变化的方法

from copy import copy # 数据拷贝模块 分浅拷贝和深拷贝

from pathlib import Path # Path将str转换为Path对象 使字符串路径易于操作的模块import cv2 # 调用OpenCV的cv库

import numpy as np # numpy数组操作模块

import pandas as pd # panda数组操作模块

import requests # Python的HTTP客户端库

import torch # pytorch深度学习框架

import torch.nn as nn # 专门为神经网络设计的模块化接口

from PIL import Image # 图像基础操作模块

from torch.cuda import amp # 混合精度训练模块2.加载自定义模块

# ----------------------------------2.加载自定义模块----------------------------------

from utils.datasets import exif_transpose, letterbox # 加载数据集的函数

from utils.general import (LOGGER, check_requirements, check_suffix, colorstr, increment_path, make_divisible,non_max_suppression, scale_coords, xywh2xyxy, xyxy2xywh) # 定义了一些常用的工具函数

from utils.plots import Annotator, colors, save_one_box # 定义了Annotator类,可以在图像上绘制矩形框和标注信息

from utils.torch_utils import time_sync # 定义了一些与PyTorch有关的工具函数3.填充padautopad

# ----------------------------------3.填充padautopad----------------------------------

"""很具输入的卷积核计算需要padding多少才能把tensor补成原理的形状为same卷积或same池化自动扩充k 卷积核的kernel_sizep 计算的需要pad值

"""

def autopad(k, p=None): # kernel, padding# Pad to 'same'if p is None: # 如果k是int 进行 k//2 否则 x//2p = k // 2 if isinstance(k, int) else [x // 2 for x in k] # auto-padreturn p

4.Conv

# ----------------------------------4.Conv----------------------------------

"""Conv是标准卷积层函数 是整个网络中最核心的模块,由卷积层+BN+激活函数组成实现了将输入特征经过卷积层 激活函数 归一化层(指定是否使用) 得到输出层c1 输入的channelc2 输出的channelk 卷积核的kernel_sezes 卷积的stridep 卷积的paddingact 激活函数类型

"""

class Conv(nn.Module):# Standard convolutiondef __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__()self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False) # 卷积层self.bn = nn.BatchNorm2d(c2) # 归一化层self.act = nn.SiLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity()) # 激活函数def forward(self, x): # 前向计算 网络执行顺序按照forward决定return self.act(self.bn(self.conv(x))) # conv->bn->act激活def forward_fuse(self, x): # 前向融合计算return self.act(self.conv(x)) #卷积->激活

5.深度可分离卷积DWConv

# ----------------------------------5.深度可分离卷积DWConv----------------------------------

"""将通道数按输入输出的最大公约数进行切分,在不同的通道图层上进行特征学习深度分离卷积层分组数量=输入通道数量 每个通道作为一个小组分布进行卷积,结果连结作为输出

"""

class DWConv(Conv):# Depth-wise convolution classdef __init__(self, c1, c2, k=1, s=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__(c1, c2, k, s, g=math.gcd(c1, c2), act=act)

6.注意力层TransformerLayer

# ----------------------------------6.注意力层TransformerLayer----------------------------------

"""单个Encoder部分 但移除了两个Norm部分

"""

class TransformerLayer(nn.Module):# Transformer layer https://arxiv.org/abs/2010.11929 (LayerNorm layers removed for better performance)def __init__(self, c, num_heads):super().__init__()# 初始化 query key valueself.q = nn.Linear(c, c, bias=False)self.k = nn.Linear(c, c, bias=False)self.v = nn.Linear(c, c, bias=False)# 输出0 attn_output 即通过self-attention之后,从每一个词语位置输出的attention和输入的query形状意义# 1 attn_output_weights 即同attention weights 每个单词和另一个单词之间产生一个weightself.ma = nn.MultiheadAttention(embed_dim=c, num_heads=num_heads)self.fc1 = nn.Linear(c, c, bias=False)self.fc2 = nn.Linear(c, c, bias=False)def forward(self, x):x = self.ma(self.q(x), self.k(x), self.v(x))[0] + x # 多注意力机制+残差x = self.fc2(self.fc1(x)) + x # 前馈神经网络+残差return x

7.注意力模块TransformerBlock

# ----------------------------------7.注意力模块TransformerBlock----------------------------------

class TransformerBlock(nn.Module):# Vision Transformer https://arxiv.org/abs/2010.11929def __init__(self, c1, c2, num_heads, num_layers):super().__init__()self.conv = Noneif c1 != c2:self.conv = Conv(c1, c2)self.linear = nn.Linear(c2, c2) # learnable position embeddingself.tr = nn.Sequential(*(TransformerLayer(c2, num_heads) for _ in range(num_layers)))self.c2 = c2def forward(self, x):if self.conv is not None:x = self.conv(x)b, _, w, h = x.shapep = x.flatten(2).permute(2, 0, 1)return self.tr(p + self.linear(p)).permute(1, 2, 0).reshape(b, self.c2, w, h)8.瓶颈层Bottleneck

# ----------------------------------8.瓶颈层Bottleneck----------------------------------

"""标准瓶颈层 由1*1 3*3卷积核和残差快组成主要作用可以更有效提取特征,即减少了参数量 又优化了计算 保持了原有的精度先经过1*1降维 然后3*3卷积 最后通过残差链接在一起c1 第一个卷积的输入channelc2 第二个卷积的输出channelshortcut bool是否又shortcut连接g 从输入通道到输出通道的阻塞链接为1e e*c2就是 第一个输出channel=第二个输入channel

"""

class Bottleneck(nn.Module):# Standard bottleneckdef __init__(self, c1, c2, shortcut=True, g=1, e=0.5): # ch_in, ch_out, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, 1, 1) # 1*1卷积层self.cv2 = Conv(c_, c2, 3, 1, g=g) # 3*3卷积层self.add = shortcut and c1 == c2 # 如果shortcut=True 将输入输出相加后再数码处def forward(self, x):return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))

9.CSP瓶颈层BottleneckCSP

# ----------------------------------9.CSP瓶颈层BottleneckCSP----------------------------------

"""由几个Bottleneck堆叠+CSP结构组成CSP结构主要思想是再输入block之前,将输入分为两个部分,一部分通过block计算,另一部分通过一个怠倦极shortcut进行concat可以加强CNN的学习能力,减少内存消耗,减少计算瓶颈c1 整个BottleneckCSP的输入channelc2 整个BottleneckCSP的输出channeln 有几个Bottleneckg 从输入通道到输出通道的阻塞链接e 中间层卷积个数/channel数torch.cat 再11维度(channel)进行合并c_ BottlenctCSP结构的中间层的通道数 由e决定

"""

class BottleneckCSP(nn.Module):# CSP Bottleneck https://github.com/WongKinYiu/CrossStagePartialNetworksdef __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channels# 4个1*1卷积层self.cv1 = Conv(c1, c_, 1, 1)self.cv2 = nn.Conv2d(c1, c_, 1, 1, bias=False)self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)self.cv4 = Conv(2 * c_, c2, 1, 1)self.bn = nn.BatchNorm2d(2 * c_) # BN层self.act = nn.SiLU() # 激活函数# m 堆叠n次Bottleneck操作 *可以把list拆开成一个个独立元素self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)))def forward(self, x):# y1做BottleneckCSP上分支操作 先做一次cv1 再做cv3 即 x->conv->n*Bottleneck->conv->y1y1 = self.cv3(self.m(self.cv1(x)))# y2做BottleneckCSP下分支操作y2 = self.cv2(x)# y1与y2拼接 接着进入BN层归一化 然后act激活 最后返回cv4return self.cv4(self.act(self.bn(torch.cat((y1, y2), dim=1))))10.简化的CSP瓶颈层C3

# ----------------------------------10.简化的CSP瓶颈层C3----------------------------------

class C3(nn.Module):# CSP Bottleneck with 3 convolutionsdef __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channels# 三个 1*1卷积核self.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c1, c_, 1, 1)self.cv3 = Conv(2 * c_, c2, 1) # act=FReLU(c2)self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)))# self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])def forward(self, x):# 将第一个卷积层与第二个卷积层的结果拼接到一起return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))11.自注意力模块的C3TR

# ----------------------------------11.自注意力模块的C3TR----------------------------------

"""继承C3模块,将n个Bottleneck更换为1个TransformerBlock

"""

class C3TR(C3):# C3 module with TransformerBlock()def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)c_ = int(c2 * e)self.m = TransformerBlock(c_, c_, 4, n)12.SPP的C3SPP

# ----------------------------------12.SPP的C3SPP----------------------------------

"""继承C3模块,将n个Bottleneck更换为1个SPP

"""

class C3SPP(C3):# C3 module with SPP()def __init__(self, c1, c2, k=(5, 9, 13), n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)c_ = int(c2 * e)self.m = SPP(c_, c_, k)13.GhostBottleneck

# ----------------------------------13.GhostBottleneck----------------------------------

"""继承C3模块,将n个Bottleneck更换为ChostBottleneck

"""

class C3Ghost(C3):# C3 module with GhostBottleneck()def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)c_ = int(c2 * e) # hidden channelsself.m = nn.Sequential(*(GhostBottleneck(c_, c_) for _ in range(n)))14.空间金字塔池化模块SPP

# ----------------------------------14.空间金字塔池化模块SPP----------------------------------

"""空间金字塔池化,用于融合多尺度特征c1 SPP输入channelc2 SPP输出channelk 保存着三个maxpoll的卷积核大小 5 9 13

"""

class SPP(nn.Module):# Spatial Pyramid Pooling (SPP) layer https://arxiv.org/abs/1406.4729def __init__(self, c1, c2, k=(5, 9, 13)):super().__init__()c_ = c1 // 2 # hidden channelsself.cv1 = Conv(c1, c_, 1, 1) # 1*1卷积核self.cv2 = Conv(c_ * (len(k) + 1), c2, 1, 1) # +1因为由len+1个输入# m先进行最大池化操作,然后通过nn.ModuleLost进行构造一个模块,再构造时对每一个k都要进行最大池化self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])def forward(self, x):x = self.cv1(x) # cv1操作with warnings.catch_warnings():warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warning# 最每一个m进行最大池化,和没有做池化的每一个输入进行叠加,然后拼接 最后做cv2操作return self.cv2(torch.cat([x] + [m(x) for m in self.m], 1))

15.快速版SPPF

# ----------------------------------15.快速版SPPF----------------------------------

"""SPPF是快速版的SPP

"""

class SPPF(nn.Module):# Spatial Pyramid Pooling - Fast (SPPF) layer for YOLOv5 by Glenn Jocherdef __init__(self, c1, c2, k=5): # equivalent to SPP(k=(5, 9, 13))super().__init__()c_ = c1 // 2 # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c_ * 4, c2, 1, 1)self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)def forward(self, x):x = self.cv1(x)with warnings.catch_warnings():warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warningy1 = self.m(x)y2 = self.m(y1)return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))16.Focus

# ----------------------------------16.Focus----------------------------------

"""Focus模块在模型的一开始,把wh整合到c空间在图片进入到Backbone前,对图片进行切片操作(在一张图片中每隔一个像素就拿一个值 类似临近下采样)生成四张图,四张图互补且不丢失信息通过上述操作,wh就集中到了channel通道空间,输入通道却扩充4倍 即RGB*4=12最后将得到的新图片进行卷积操作,最终得到了没有丢失信息的二倍下采样特征图c1 slice后的channelc2 Focus最终输出的channelk 最后卷积核的kernel_sizes 最后卷积核的stridep 最后卷积的paddingg 输入通道到输出通道的阻塞连接act 激活函数

"""

class Focus(nn.Module):# Focus wh information into c-spacedef __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__()self.conv = Conv(c1 * 4, c2, k, s, p, g, act)# self.contract = Contract(gain=2)def forward(self, x): # x(b,c,w,h) -> y(b,4c,w/2,h/2)return self.conv(torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1))# return self.conv(self.contract(x))17.幻象卷积GhostConv

# ----------------------------------17.幻象卷积GhostConv----------------------------------

"""轻量化网络卷积模块 其不能增加mAP 但可以大大减少模型计算量c1 输入的channel值c2 输出的channel值k 卷积的kernel_sizes 卷积的stride....

"""

class GhostConv(nn.Module):# Ghost Convolution https://github.com/huawei-noah/ghostnetdef __init__(self, c1, c2, k=1, s=1, g=1, act=True): # ch_in, ch_out, kernel, stride, groupssuper().__init__()c_ = c2 // 2 # hidden channelsself.cv1 = Conv(c1, c_, k, s, None, g, act) # 少量卷积 一般是一半的计算量self.cv2 = Conv(c_, c_, 5, 1, None, c_, act) # cheap operaions 使用3*3 5*5卷积核 并且逐个特征图卷积def forward(self, x):y = self.cv1(x)return torch.cat([y, self.cv2(y)], 1)18.幻象模块的瓶颈层

# ----------------------------------18.幻象模块的瓶颈层----------------------------------

"""一个可复用模块,类似ResNEt的基本残差,由两个堆叠的Ghost模块组成第一个Ghost模块由于扩展层,增加了通道数。第二个Ghost模块减少通道数与shortcut路径品牌,然后使用shortcat连接俩个模块的输入和输出第二个Ghost模块不适用Relu其他层在其他每一层都使用了批归一化BN和Relu

"""

class GhostBottleneck(nn.Module):# Ghost Bottleneck https://github.com/huawei-noah/ghostnetdef __init__(self, c1, c2, k=3, s=1): # ch_in, ch_out, kernel, stridesuper().__init__()c_ = c2 // 2self.conv = nn.Sequential(GhostConv(c1, c_, 1, 1), # pwDWConv(c_, c_, k, s, act=False) if s == 2 else nn.Identity(), # dwGhostConv(c_, c2, 1, 1, act=False)) # pw-linear# 先将给一个DWconv 然后进行shotcut操作self.shortcut = nn.Sequential(DWConv(c1, c1, k, s, act=False),Conv(c1, c2, 1, 1, act=False)) if s == 2 else nn.Identity()def forward(self, x):return self.conv(x) + self.shortcut(x)

19.收缩模块Contract

# ----------------------------------19.收缩模块Contract----------------------------------

"""收缩模块,调整张量大小,将特征图中的w h 收缩到通道c中

"""

class Contract(nn.Module):# Contract width-height into channels, i.e. x(1,64,80,80) to x(1,256,40,40)def __init__(self, gain=2):super().__init__()self.gain = gaindef forward(self, x):b, c, h, w = x.size() # assert (h / s == 0) and (W / s == 0), 'Indivisible gain's = self.gainx = x.view(b, c, h // s, s, w // s, s) # x(1,64,40,2,40,2)x = x.permute(0, 3, 5, 1, 2, 4).contiguous() # x(1,2,2,64,40,40)return x.view(b, c * s * s, h // s, w // s) # x(1,256,40,40)20.扩张模块Expand

# ----------------------------------20.扩张模块Expand----------------------------------

"""Contract逆操作,扩张模块,将特征图像素变大改变输入特征的shape 将channel的数据扩展到w h

"""

class Expand(nn.Module):# Expand channels into width-height, i.e. x(1,64,80,80) to x(1,16,160,160)def __init__(self, gain=2):super().__init__()self.gain = gaindef forward(self, x):b, c, h, w = x.size() # assert C / s ** 2 == 0, 'Indivisible gain's = self.gainx = x.view(b, s, s, c // s ** 2, h, w) # x(1,2,2,16,80,80)x = x.permute(0, 3, 4, 1, 5, 2).contiguous() # x(1,16,80,2,80,2)return x.view(b, c // s ** 2, h * s, w * s) # x(1,16,160,160)21.拼接模块Concat

# ----------------------------------21.拼接模块Concat----------------------------------

"""将两个tensor进行拼接 demesion是维度意思

"""

class Concat(nn.Module):# Concatenate a list of tensors along dimensiondef __init__(self, dimension=1):super().__init__()self.d = dimensiondef forward(self, x):return torch.cat(x, self.d)

22.后端推理DetectMultiBackend

# ----------------------------------22.后端推理DetectMultiBackend----------------------------------

"""根据不同后端选择相应的模型类型 然后进行推理

"""

class DetectMultiBackend(nn.Module):# YOLOv5 MultiBackend class for python inference on various backendsdef __init__(self, weights='yolov5s.pt', device=None, dnn=True):# Usage:# PyTorch: weights = *.pt# TorchScript: *.torchscript.pt# CoreML: *.mlmodel# TensorFlow: *_saved_model# TensorFlow: *.pb# TensorFlow Lite: *.tflite# ONNX Runtime: *.onnx# OpenCV DNN: *.onnx with dnn=Truesuper().__init__()w = str(weights[0] if isinstance(weights, list) else weights)suffix, suffixes = Path(w).suffix.lower(), ['.pt', '.onnx', '.tflite', '.pb', '', '.mlmodel']check_suffix(w, suffixes) # check weights have acceptable suffixpt, onnx, tflite, pb, saved_model, coreml = (suffix == x for x in suffixes) # backend booleansjit = pt and 'torchscript' in w.lower()stride, names = 64, [f'class{i}' for i in range(1000)] # assign defaultsif jit: # TorchScriptLOGGER.info(f'Loading {w} for TorchScript inference...')extra_files = {'config.txt': ''} # model metadatamodel = torch.jit.load(w, _extra_files=extra_files)if extra_files['config.txt']:d = json.loads(extra_files['config.txt']) # extra_files dictstride, names = int(d['stride']), d['names']elif pt: # PyTorchfrom models.experimental import attempt_load # scoped to avoid circular importmodel = torch.jit.load(w) if 'torchscript' in w else attempt_load(weights, map_location=device)stride = int(model.stride.max()) # model stridenames = model.module.names if hasattr(model, 'module') else model.names # get class nameselif coreml: # CoreML *.mlmodelimport coremltools as ctmodel = ct.models.MLModel(w)elif dnn: # ONNX OpenCV DNNLOGGER.info(f'Loading {w} for ONNX OpenCV DNN inference...')check_requirements(('opencv-python>=4.5.4',))net = cv2.dnn.readNetFromONNX(w)elif onnx: # ONNX RuntimeLOGGER.info(f'Loading {w} for ONNX Runtime inference...')check_requirements(('onnx', 'onnxruntime-gpu' if torch.has_cuda else 'onnxruntime'))import onnxruntimesession = onnxruntime.InferenceSession(w, None)else: # TensorFlow model (TFLite, pb, saved_model)import tensorflow as tfif pb: # https://www.tensorflow.org/guide/migrate#a_graphpb_or_graphpbtxtdef wrap_frozen_graph(gd, inputs, outputs):x = tf.compat.v1.wrap_function(lambda: tf.compat.v1.import_graph_def(gd, name=""), []) # wrappedreturn x.prune(tf.nest.map_structure(x.graph.as_graph_element, inputs),tf.nest.map_structure(x.graph.as_graph_element, outputs))LOGGER.info(f'Loading {w} for TensorFlow *.pb inference...')graph_def = tf.Graph().as_graph_def()graph_def.ParseFromString(open(w, 'rb').read())frozen_func = wrap_frozen_graph(gd=graph_def, inputs="x:0", outputs="Identity:0")elif saved_model:LOGGER.info(f'Loading {w} for TensorFlow saved_model inference...')model = tf.keras.models.load_model(w)elif tflite: # https://www.tensorflow.org/lite/guide/python#install_tensorflow_lite_for_pythonif 'edgetpu' in w.lower():LOGGER.info(f'Loading {w} for TensorFlow Edge TPU inference...')import tflite_runtime.interpreter as tflidelegate = {'Linux': 'libedgetpu.so.1', # install https://coral.ai/software/#edgetpu-runtime'Darwin': 'libedgetpu.1.dylib','Windows': 'edgetpu.dll'}[platform.system()]interpreter = tfli.Interpreter(model_path=w, experimental_delegates=[tfli.load_delegate(delegate)])else:LOGGER.info(f'Loading {w} for TensorFlow Lite inference...')interpreter = tf.lite.Interpreter(model_path=w) # load TFLite modelinterpreter.allocate_tensors() # allocateinput_details = interpreter.get_input_details() # inputsoutput_details = interpreter.get_output_details() # outputsself.__dict__.update(locals()) # assign all variables to selfdef forward(self, im, augment=False, visualize=False, val=False):# YOLOv5 MultiBackend inferenceb, ch, h, w = im.shape # batch, channel, height, widthif self.pt: # PyTorchy = self.model(im) if self.jit else self.model(im, augment=augment, visualize=visualize)return y if val else y[0]elif self.coreml: # CoreML *.mlmodelim = im.permute(0, 2, 3, 1).cpu().numpy() # torch BCHW to numpy BHWC shape(1,320,192,3)im = Image.fromarray((im[0] * 255).astype('uint8'))# im = im.resize((192, 320), Image.ANTIALIAS)y = self.model.predict({'image': im}) # coordinates are xywh normalizedbox = xywh2xyxy(y['coordinates'] * [[w, h, w, h]]) # xyxy pixelsconf, cls = y['confidence'].max(1), y['confidence'].argmax(1).astype(np.float)y = np.concatenate((box, conf.reshape(-1, 1), cls.reshape(-1, 1)), 1)elif self.onnx: # ONNXim = im.cpu().numpy() # torch to numpyif self.dnn: # ONNX OpenCV DNNself.net.setInput(im)y = self.net.forward()else: # ONNX Runtimey = self.session.run([self.session.get_outputs()[0].name], {self.session.get_inputs()[0].name: im})[0]else: # TensorFlow model (TFLite, pb, saved_model)im = im.permute(0, 2, 3, 1).cpu().numpy() # torch BCHW to numpy BHWC shape(1,320,192,3)if self.pb:y = self.frozen_func(x=self.tf.constant(im)).numpy()elif self.saved_model:y = self.model(im, training=False).numpy()elif self.tflite:input, output = self.input_details[0], self.output_details[0]int8 = input['dtype'] == np.uint8 # is TFLite quantized uint8 modelif int8:scale, zero_point = input['quantization']im = (im / scale + zero_point).astype(np.uint8) # de-scaleself.interpreter.set_tensor(input['index'], im)self.interpreter.invoke()y = self.interpreter.get_tensor(output['index'])if int8:scale, zero_point = output['quantization']y = (y.astype(np.float32) - zero_point) * scale # re-scaley[..., 0] *= w # xy[..., 1] *= h # yy[..., 2] *= w # wy[..., 3] *= h # hy = torch.tensor(y)return (y, []) if val else y23.模型扩展模块AutoShape

# ----------------------------------23.模型扩展模块AutoShape----------------------------------

"""给模型封装成包含预处理,推理和NMS在train中不会被调用,当模型训练结束后,会通过这个模块对图片进行重塑,来方便模型预测

"""

class AutoShape(nn.Module):# YOLOv5 input-robust model wrapper for passing cv2/np/PIL/torch inputs. Includes preprocessing, inference and NMSconf = 0.25 # NMS confidence thresholdiou = 0.45 # NMS IoU thresholdclasses = None # (optional list) filter by class, i.e. = [0, 15, 16] for COCO persons, cats and dogsmulti_label = False # NMS multiple labels per boxmax_det = 1000 # maximum number of detections per imagedef __init__(self, model):super().__init__()self.model = model.eval()def autoshape(self):LOGGER.info('AutoShape already enabled, skipping... ') # model already converted to model.autoshape()return selfdef _apply(self, fn):# Apply to(), cpu(), cuda(), half() to model tensors that are not parameters or registered buffersself = super()._apply(fn)m = self.model.model[-1] # Detect()m.stride = fn(m.stride)m.grid = list(map(fn, m.grid))if isinstance(m.anchor_grid, list):m.anchor_grid = list(map(fn, m.anchor_grid))return self@torch.no_grad()def forward(self, imgs, size=640, augment=False, profile=False):# Inference from various sources. For height=640, width=1280, RGB images example inputs are:# file: imgs = 'data/images/zidane.jpg' # str or PosixPath# URI: = 'https://ultralytics.com/images/zidane.jpg'# OpenCV: = cv2.imread('image.jpg')[:,:,::-1] # HWC BGR to RGB x(640,1280,3)# PIL: = Image.open('image.jpg') or ImageGrab.grab() # HWC x(640,1280,3)# numpy: = np.zeros((640,1280,3)) # HWC# torch: = torch.zeros(16,3,320,640) # BCHW (scaled to size=640, 0-1 values)# multiple: = [Image.open('image1.jpg'), Image.open('image2.jpg'), ...] # list of imagest = [time_sync()]p = next(self.model.parameters()) # for device and typeif isinstance(imgs, torch.Tensor): # torchwith amp.autocast(enabled=p.device.type != 'cpu'):return self.model(imgs.to(p.device).type_as(p), augment, profile) # inference# Pre-processn, imgs = (len(imgs), imgs) if isinstance(imgs, list) else (1, [imgs]) # number of images, list of imagesshape0, shape1, files = [], [], [] # image and inference shapes, filenamesfor i, im in enumerate(imgs):f = f'image{i}' # filenameif isinstance(im, (str, Path)): # filename or uriim, f = Image.open(requests.get(im, stream=True).raw if str(im).startswith('http') else im), imim = np.asarray(exif_transpose(im))elif isinstance(im, Image.Image): # PIL Imageim, f = np.asarray(exif_transpose(im)), getattr(im, 'filename', f) or ffiles.append(Path(f).with_suffix('.jpg').name)if im.shape[0] < 5: # image in CHWim = im.transpose((1, 2, 0)) # reverse dataloader .transpose(2, 0, 1)im = im[..., :3] if im.ndim == 3 else np.tile(im[..., None], 3) # enforce 3ch inputs = im.shape[:2] # HWCshape0.append(s) # image shapeg = (size / max(s)) # gainshape1.append([y * g for y in s])imgs[i] = im if im.data.contiguous else np.ascontiguousarray(im) # updateshape1 = [make_divisible(x, int(self.stride.max())) for x in np.stack(shape1, 0).max(0)] # inference shapex = [letterbox(im, new_shape=shape1, auto=False)[0] for im in imgs] # padx = np.stack(x, 0) if n > 1 else x[0][None] # stackx = np.ascontiguousarray(x.transpose((0, 3, 1, 2))) # BHWC to BCHWx = torch.from_numpy(x).to(p.device).type_as(p) / 255 # uint8 to fp16/32t.append(time_sync())with amp.autocast(enabled=p.device.type != 'cpu'):# Inferencey = self.model(x, augment, profile)[0] # forwardt.append(time_sync())# Post-processy = non_max_suppression(y, self.conf, iou_thres=self.iou, classes=self.classes,multi_label=self.multi_label, max_det=self.max_det) # NMSfor i in range(n):scale_coords(shape1, y[i][:, :4], shape0[i])t.append(time_sync())return Detections(imgs, y, files, t, self.names, x.shape)24.推理模块Detections

# ----------------------------------24.推理模块Detections----------------------------------

"""针对目标检测的封装类,对推理结果进行处理

"""

class Detections:# YOLOv5 detections class for inference resultsdef __init__(self, imgs, pred, files, times=None, names=None, shape=None):super().__init__()d = pred[0].device # devicegn = [torch.tensor([*(im.shape[i] for i in [1, 0, 1, 0]), 1, 1], device=d) for im in imgs] # normalizationsself.imgs = imgs # list of images as numpy arrays 原图self.pred = pred # list of tensors pred[0] = (xyxy, conf, cls) 预测值self.names = names # class names 类名self.files = files # image filenames 图像文件名self.xyxy = pred # xyxy pixels 左上角+右下角格式self.xywh = [xyxy2xywh(x) for x in pred] # xywh pixels 中心点+宽长格式self.xyxyn = [x / g for x, g in zip(self.xyxy, gn)] # xyxy normalized xyxy标准化self.xywhn = [x / g for x, g in zip(self.xywh, gn)] # xywh normalized xywh标准化self.n = len(self.pred) # number of images (batch size)self.t = tuple((times[i + 1] - times[i]) * 1000 / self.n for i in range(3)) # timestamps (ms)self.s = shape # inference BCHW shapedef display(self, pprint=False, show=False, save=False, crop=False, render=False, save_dir=Path('')):crops = []for i, (im, pred) in enumerate(zip(self.imgs, self.pred)):s = f'image {i + 1}/{len(self.pred)}: {im.shape[0]}x{im.shape[1]} ' # stringif pred.shape[0]:for c in pred[:, -1].unique():n = (pred[:, -1] == c).sum() # detections per classs += f"{n} {self.names[int(c)]}{'s' * (n > 1)}, " # add to stringif show or save or render or crop:annotator = Annotator(im, example=str(self.names))for *box, conf, cls in reversed(pred): # xyxy, confidence, classlabel = f'{self.names[int(cls)]} {conf:.2f}'if crop:file = save_dir / 'crops' / self.names[int(cls)] / self.files[i] if save else Nonecrops.append({'box': box, 'conf': conf, 'cls': cls, 'label': label,'im': save_one_box(box, im, file=file, save=save)})else: # all othersannotator.box_label(box, label, color=colors(cls))im = annotator.imelse:s += '(no detections)'im = Image.fromarray(im.astype(np.uint8)) if isinstance(im, np.ndarray) else im # from npif pprint:LOGGER.info(s.rstrip(', '))if show:im.show(self.files[i]) # showif save:f = self.files[i]im.save(save_dir / f) # saveif i == self.n - 1:LOGGER.info(f"Saved {self.n} image{'s' * (self.n > 1)} to {colorstr('bold', save_dir)}")if render:self.imgs[i] = np.asarray(im)if crop:if save:LOGGER.info(f'Saved results to {save_dir}\n')return cropsdef print(self):self.display(pprint=True) # print resultsLOGGER.info(f'Speed: %.1fms pre-process, %.1fms inference, %.1fms NMS per image at shape {tuple(self.s)}' %self.t)def show(self):self.display(show=True) # show resultsdef save(self, save_dir='runs/detect/exp'):save_dir = increment_path(save_dir, exist_ok=save_dir != 'runs/detect/exp', mkdir=True) # increment save_dirself.display(save=True, save_dir=save_dir) # save resultsdef crop(self, save=True, save_dir='runs/detect/exp'):save_dir = increment_path(save_dir, exist_ok=save_dir != 'runs/detect/exp', mkdir=True) if save else Nonereturn self.display(crop=True, save=save, save_dir=save_dir) # crop resultsdef render(self):self.display(render=True) # render resultsreturn self.imgsdef pandas(self):# return detections as pandas DataFrames, i.e. print(results.pandas().xyxy[0])new = copy(self) # return copyca = 'xmin', 'ymin', 'xmax', 'ymax', 'confidence', 'class', 'name' # xyxy columnscb = 'xcenter', 'ycenter', 'width', 'height', 'confidence', 'class', 'name' # xywh columnsfor k, c in zip(['xyxy', 'xyxyn', 'xywh', 'xywhn'], [ca, ca, cb, cb]):a = [[x[:5] + [int(x[5]), self.names[int(x[5])]] for x in x.tolist()] for x in getattr(self, k)] # updatesetattr(new, k, [pd.DataFrame(x, columns=c) for x in a])return newdef tolist(self):# return a list of Detections objects, i.e. 'for result in results.tolist():'x = [Detections([self.imgs[i]], [self.pred[i]], self.names, self.s) for i in range(self.n)]for d in x:for k in ['imgs', 'pred', 'xyxy', 'xyxyn', 'xywh', 'xywhn']:setattr(d, k, getattr(d, k)[0]) # pop out of listreturn xdef __len__(self):return self.n25.二级分类模块Classify

# ----------------------------------25.二级分类模块Classify----------------------------------

"""对模型进行二次识别或分类 如车牌识别

"""

class Classify(nn.Module):# Classification head, i.e. x(b,c1,20,20) to x(b,c2)def __init__(self, c1, c2, k=1, s=1, p=None, g=1): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__()self.aap = nn.AdaptiveAvgPool2d(1) # to x(b,c1,1,1)self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g) # to x(b,c2,1,1) 自适应平均池化操作self.flat = nn.Flatten() # 展平def forward(self, x):z = torch.cat([self.aap(y) for y in (x if isinstance(x, list) else [x])], 1) # cat if list 先自适应平均池化操作 然后拼接return self.flat(self.conv(z)) # flatten to x(b,c2) 对z进行展品操作26.整体代码

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license

"""

Common modules

"""

# ----------------------------------1.导入python包----------------------------------import json # 用于json和Python数据之间的相互转换

import math # 数学函数模块

import platform # 获取操作系统的信息

import warnings # 警告程序员关于语言或库功能的变化的方法

from copy import copy # 数据拷贝模块 分浅拷贝和深拷贝

from pathlib import Path # Path将str转换为Path对象 使字符串路径易于操作的模块import cv2 # 调用OpenCV的cv库

import numpy as np # numpy数组操作模块

import pandas as pd # panda数组操作模块

import requests # Python的HTTP客户端库

import torch # pytorch深度学习框架

import torch.nn as nn # 专门为神经网络设计的模块化接口

from PIL import Image # 图像基础操作模块

from torch.cuda import amp # 混合精度训练模块# ----------------------------------2.加载自定义模块----------------------------------

from utils.datasets import exif_transpose, letterbox # 加载数据集的函数

from utils.general import (LOGGER, check_requirements, check_suffix, colorstr, increment_path, make_divisible,non_max_suppression, scale_coords, xywh2xyxy, xyxy2xywh) # 定义了一些常用的工具函数

from utils.plots import Annotator, colors, save_one_box # 定义了Annotator类,可以在图像上绘制矩形框和标注信息

from utils.torch_utils import time_sync # 定义了一些与PyTorch有关的工具函数# ----------------------------------3.填充padautopad----------------------------------

"""很具输入的卷积核计算需要padding多少才能把tensor补成原理的形状为same卷积或same池化自动扩充k 卷积核的kernel_sizep 计算的需要pad值

"""

def autopad(k, p=None): # kernel, padding# Pad to 'same'if p is None: # 如果k是int 进行 k//2 否则 x//2p = k // 2 if isinstance(k, int) else [x // 2 for x in k] # auto-padreturn p# ----------------------------------4.Conv----------------------------------

"""Conv是标准卷积层函数 是整个网络中最核心的模块,由卷积层+BN+激活函数组成实现了将输入特征经过卷积层 激活函数 归一化层(指定是否使用) 得到输出层c1 输入的channelc2 输出的channelk 卷积核的kernel_sezes 卷积的stridep 卷积的paddingact 激活函数类型

"""

class Conv(nn.Module):# Standard convolutiondef __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__()self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False) # 卷积层self.bn = nn.BatchNorm2d(c2) # 归一化层self.act = nn.SiLU() if act is True else (act if isinstance(act, nn.Module) else nn.Identity()) # 激活函数def forward(self, x): # 前向计算 网络执行顺序按照forward决定return self.act(self.bn(self.conv(x))) # conv->bn->act激活def forward_fuse(self, x): # 前向融合计算return self.act(self.conv(x)) #卷积->激活# ----------------------------------5.深度可分离卷积DWConv----------------------------------

"""将通道数按输入输出的最大公约数进行切分,在不同的通道图层上进行特征学习深度分离卷积层分组数量=输入通道数量 每个通道作为一个小组分布进行卷积,结果连结作为输出

"""

class DWConv(Conv):# Depth-wise convolution classdef __init__(self, c1, c2, k=1, s=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__(c1, c2, k, s, g=math.gcd(c1, c2), act=act)# ----------------------------------6.注意力层TransformerLayer----------------------------------

"""单个Encoder部分 但移除了两个Norm部分

"""

class TransformerLayer(nn.Module):# Transformer layer https://arxiv.org/abs/2010.11929 (LayerNorm layers removed for better performance)def __init__(self, c, num_heads):super().__init__()# 初始化 query key valueself.q = nn.Linear(c, c, bias=False)self.k = nn.Linear(c, c, bias=False)self.v = nn.Linear(c, c, bias=False)# 输出0 attn_output 即通过self-attention之后,从每一个词语位置输出的attention和输入的query形状意义# 1 attn_output_weights 即同attention weights 每个单词和另一个单词之间产生一个weightself.ma = nn.MultiheadAttention(embed_dim=c, num_heads=num_heads)self.fc1 = nn.Linear(c, c, bias=False)self.fc2 = nn.Linear(c, c, bias=False)def forward(self, x):x = self.ma(self.q(x), self.k(x), self.v(x))[0] + x # 多注意力机制+残差x = self.fc2(self.fc1(x)) + x # 前馈神经网络+残差return x# ----------------------------------7.注意力模块TransformerBlock----------------------------------

class TransformerBlock(nn.Module):# Vision Transformer https://arxiv.org/abs/2010.11929def __init__(self, c1, c2, num_heads, num_layers):super().__init__()self.conv = Noneif c1 != c2:self.conv = Conv(c1, c2)self.linear = nn.Linear(c2, c2) # learnable position embeddingself.tr = nn.Sequential(*(TransformerLayer(c2, num_heads) for _ in range(num_layers)))self.c2 = c2def forward(self, x):if self.conv is not None:x = self.conv(x)b, _, w, h = x.shapep = x.flatten(2).permute(2, 0, 1)return self.tr(p + self.linear(p)).permute(1, 2, 0).reshape(b, self.c2, w, h)# ----------------------------------8.瓶颈层Bottleneck----------------------------------

"""标准瓶颈层 由1*1 3*3卷积核和残差快组成主要作用可以更有效提取特征,即减少了参数量 又优化了计算 保持了原有的精度先经过1*1降维 然后3*3卷积 最后通过残差链接在一起c1 第一个卷积的输入channelc2 第二个卷积的输出channelshortcut bool是否又shortcut连接g 从输入通道到输出通道的阻塞链接为1e e*c2就是 第一个输出channel=第二个输入channel

"""

class Bottleneck(nn.Module):# Standard bottleneckdef __init__(self, c1, c2, shortcut=True, g=1, e=0.5): # ch_in, ch_out, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, 1, 1) # 1*1卷积层self.cv2 = Conv(c_, c2, 3, 1, g=g) # 3*3卷积层self.add = shortcut and c1 == c2 # 如果shortcut=True 将输入输出相加后再数码处def forward(self, x):return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))# ----------------------------------9.CSP瓶颈层BottleneckCSP----------------------------------

"""由几个Bottleneck堆叠+CSP结构组成CSP结构主要思想是再输入block之前,将输入分为两个部分,一部分通过block计算,另一部分通过一个怠倦极shortcut进行concat可以加强CNN的学习能力,减少内存消耗,减少计算瓶颈c1 整个BottleneckCSP的输入channelc2 整个BottleneckCSP的输出channeln 有几个Bottleneckg 从输入通道到输出通道的阻塞链接e 中间层卷积个数/channel数torch.cat 再11维度(channel)进行合并c_ BottlenctCSP结构的中间层的通道数 由e决定

"""

class BottleneckCSP(nn.Module):# CSP Bottleneck https://github.com/WongKinYiu/CrossStagePartialNetworksdef __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channels# 4个1*1卷积层self.cv1 = Conv(c1, c_, 1, 1)self.cv2 = nn.Conv2d(c1, c_, 1, 1, bias=False)self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)self.cv4 = Conv(2 * c_, c2, 1, 1)self.bn = nn.BatchNorm2d(2 * c_) # BN层self.act = nn.SiLU() # 激活函数# m 堆叠n次Bottleneck操作 *可以把list拆开成一个个独立元素self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)))def forward(self, x):# y1做BottleneckCSP上分支操作 先做一次cv1 再做cv3 即 x->conv->n*Bottleneck->conv->y1y1 = self.cv3(self.m(self.cv1(x)))# y2做BottleneckCSP下分支操作y2 = self.cv2(x)# y1与y2拼接 接着进入BN层归一化 然后act激活 最后返回cv4return self.cv4(self.act(self.bn(torch.cat((y1, y2), dim=1))))# ----------------------------------10.简化的CSP瓶颈层C3----------------------------------

class C3(nn.Module):# CSP Bottleneck with 3 convolutionsdef __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channels# 三个 1*1卷积核self.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c1, c_, 1, 1)self.cv3 = Conv(2 * c_, c2, 1) # act=FReLU(c2)self.m = nn.Sequential(*(Bottleneck(c_, c_, shortcut, g, e=1.0) for _ in range(n)))# self.m = nn.Sequential(*[CrossConv(c_, c_, 3, 1, g, 1.0, shortcut) for _ in range(n)])def forward(self, x):# 将第一个卷积层与第二个卷积层的结果拼接到一起return self.cv3(torch.cat((self.m(self.cv1(x)), self.cv2(x)), dim=1))# ----------------------------------11.自注意力模块的C3TR----------------------------------

"""继承C3模块,将n个Bottleneck更换为1个TransformerBlock

"""

class C3TR(C3):# C3 module with TransformerBlock()def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)c_ = int(c2 * e)self.m = TransformerBlock(c_, c_, 4, n)# ----------------------------------12.SPP的C3SPP----------------------------------

"""继承C3模块,将n个Bottleneck更换为1个SPP

"""

class C3SPP(C3):# C3 module with SPP()def __init__(self, c1, c2, k=(5, 9, 13), n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)c_ = int(c2 * e)self.m = SPP(c_, c_, k)# ----------------------------------13.GhostBottleneck----------------------------------

"""继承C3模块,将n个Bottleneck更换为ChostBottleneck

"""

class C3Ghost(C3):# C3 module with GhostBottleneck()def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2, n, shortcut, g, e)c_ = int(c2 * e) # hidden channelsself.m = nn.Sequential(*(GhostBottleneck(c_, c_) for _ in range(n)))# ----------------------------------14.空间金字塔池化模块SPP----------------------------------

"""空间金字塔池化,用于融合多尺度特征c1 SPP输入channelc2 SPP输出channelk 保存着三个maxpoll的卷积核大小 5 9 13

"""

class SPP(nn.Module):# Spatial Pyramid Pooling (SPP) layer https://arxiv.org/abs/1406.4729def __init__(self, c1, c2, k=(5, 9, 13)):super().__init__()c_ = c1 // 2 # hidden channelsself.cv1 = Conv(c1, c_, 1, 1) # 1*1卷积核self.cv2 = Conv(c_ * (len(k) + 1), c2, 1, 1) # +1因为由len+1个输入# m先进行最大池化操作,然后通过nn.ModuleLost进行构造一个模块,再构造时对每一个k都要进行最大池化self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])def forward(self, x):x = self.cv1(x) # cv1操作with warnings.catch_warnings():warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warning# 最每一个m进行最大池化,和没有做池化的每一个输入进行叠加,然后拼接 最后做cv2操作return self.cv2(torch.cat([x] + [m(x) for m in self.m], 1))# ----------------------------------15.快速版SPPF----------------------------------

"""SPPF是快速版的SPP

"""

class SPPF(nn.Module):# Spatial Pyramid Pooling - Fast (SPPF) layer for YOLOv5 by Glenn Jocherdef __init__(self, c1, c2, k=5): # equivalent to SPP(k=(5, 9, 13))super().__init__()c_ = c1 // 2 # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c_ * 4, c2, 1, 1)self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)def forward(self, x):x = self.cv1(x)with warnings.catch_warnings():warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warningy1 = self.m(x)y2 = self.m(y1)return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))# ----------------------------------16.Focus----------------------------------

"""Focus模块在模型的一开始,把wh整合到c空间在图片进入到Backbone前,对图片进行切片操作(在一张图片中每隔一个像素就拿一个值 类似临近下采样)生成四张图,四张图互补且不丢失信息通过上述操作,wh就集中到了channel通道空间,输入通道却扩充4倍 即RGB*4=12最后将得到的新图片进行卷积操作,最终得到了没有丢失信息的二倍下采样特征图c1 slice后的channelc2 Focus最终输出的channelk 最后卷积核的kernel_sizes 最后卷积核的stridep 最后卷积的paddingg 输入通道到输出通道的阻塞连接act 激活函数

"""

class Focus(nn.Module):# Focus wh information into c-spacedef __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__()self.conv = Conv(c1 * 4, c2, k, s, p, g, act)# self.contract = Contract(gain=2)def forward(self, x): # x(b,c,w,h) -> y(b,4c,w/2,h/2)return self.conv(torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1))# return self.conv(self.contract(x))# ----------------------------------17.幻象卷积GhostConv----------------------------------

"""轻量化网络卷积模块 其不能增加mAP 但可以大大减少模型计算量c1 输入的channel值c2 输出的channel值k 卷积的kernel_sizes 卷积的stride....

"""

class GhostConv(nn.Module):# Ghost Convolution https://github.com/huawei-noah/ghostnetdef __init__(self, c1, c2, k=1, s=1, g=1, act=True): # ch_in, ch_out, kernel, stride, groupssuper().__init__()c_ = c2 // 2 # hidden channelsself.cv1 = Conv(c1, c_, k, s, None, g, act) # 少量卷积 一般是一半的计算量self.cv2 = Conv(c_, c_, 5, 1, None, c_, act) # cheap operaions 使用3*3 5*5卷积核 并且逐个特征图卷积def forward(self, x):y = self.cv1(x)return torch.cat([y, self.cv2(y)], 1)# ----------------------------------18.幻象模块的瓶颈层----------------------------------

"""一个可复用模块,类似ResNEt的基本残差,由两个堆叠的Ghost模块组成第一个Ghost模块由于扩展层,增加了通道数。第二个Ghost模块减少通道数与shortcut路径品牌,然后使用shortcat连接俩个模块的输入和输出第二个Ghost模块不适用Relu其他层在其他每一层都使用了批归一化BN和Relu

"""

class GhostBottleneck(nn.Module):# Ghost Bottleneck https://github.com/huawei-noah/ghostnetdef __init__(self, c1, c2, k=3, s=1): # ch_in, ch_out, kernel, stridesuper().__init__()c_ = c2 // 2self.conv = nn.Sequential(GhostConv(c1, c_, 1, 1), # pwDWConv(c_, c_, k, s, act=False) if s == 2 else nn.Identity(), # dwGhostConv(c_, c2, 1, 1, act=False)) # pw-linear# 先将给一个DWconv 然后进行shotcut操作self.shortcut = nn.Sequential(DWConv(c1, c1, k, s, act=False),Conv(c1, c2, 1, 1, act=False)) if s == 2 else nn.Identity()def forward(self, x):return self.conv(x) + self.shortcut(x)# ----------------------------------19.收缩模块Contract----------------------------------

"""收缩模块,调整张量大小,将特征图中的w h 收缩到通道c中

"""

class Contract(nn.Module):# Contract width-height into channels, i.e. x(1,64,80,80) to x(1,256,40,40)def __init__(self, gain=2):super().__init__()self.gain = gaindef forward(self, x):b, c, h, w = x.size() # assert (h / s == 0) and (W / s == 0), 'Indivisible gain's = self.gainx = x.view(b, c, h // s, s, w // s, s) # x(1,64,40,2,40,2)x = x.permute(0, 3, 5, 1, 2, 4).contiguous() # x(1,2,2,64,40,40)return x.view(b, c * s * s, h // s, w // s) # x(1,256,40,40)# ----------------------------------20.扩张模块Expand----------------------------------

"""Contract逆操作,扩张模块,将特征图像素变大改变输入特征的shape 将channel的数据扩展到w h

"""

class Expand(nn.Module):# Expand channels into width-height, i.e. x(1,64,80,80) to x(1,16,160,160)def __init__(self, gain=2):super().__init__()self.gain = gaindef forward(self, x):b, c, h, w = x.size() # assert C / s ** 2 == 0, 'Indivisible gain's = self.gainx = x.view(b, s, s, c // s ** 2, h, w) # x(1,2,2,16,80,80)x = x.permute(0, 3, 4, 1, 5, 2).contiguous() # x(1,16,80,2,80,2)return x.view(b, c // s ** 2, h * s, w * s) # x(1,16,160,160)# ----------------------------------21.拼接模块Concat----------------------------------

"""将两个tensor进行拼接 demesion是维度意思

"""

class Concat(nn.Module):# Concatenate a list of tensors along dimensiondef __init__(self, dimension=1):super().__init__()self.d = dimensiondef forward(self, x):return torch.cat(x, self.d)# ----------------------------------22.后端推理DetectMultiBackend----------------------------------

"""根据不同后端选择相应的模型类型 然后进行推理

"""

class DetectMultiBackend(nn.Module):# YOLOv5 MultiBackend class for python inference on various backendsdef __init__(self, weights='yolov5s.pt', device=None, dnn=True):# Usage:# PyTorch: weights = *.pt# TorchScript: *.torchscript.pt# CoreML: *.mlmodel# TensorFlow: *_saved_model# TensorFlow: *.pb# TensorFlow Lite: *.tflite# ONNX Runtime: *.onnx# OpenCV DNN: *.onnx with dnn=Truesuper().__init__()w = str(weights[0] if isinstance(weights, list) else weights)suffix, suffixes = Path(w).suffix.lower(), ['.pt', '.onnx', '.tflite', '.pb', '', '.mlmodel']check_suffix(w, suffixes) # check weights have acceptable suffixpt, onnx, tflite, pb, saved_model, coreml = (suffix == x for x in suffixes) # backend booleansjit = pt and 'torchscript' in w.lower()stride, names = 64, [f'class{i}' for i in range(1000)] # assign defaultsif jit: # TorchScriptLOGGER.info(f'Loading {w} for TorchScript inference...')extra_files = {'config.txt': ''} # model metadatamodel = torch.jit.load(w, _extra_files=extra_files)if extra_files['config.txt']:d = json.loads(extra_files['config.txt']) # extra_files dictstride, names = int(d['stride']), d['names']elif pt: # PyTorchfrom models.experimental import attempt_load # scoped to avoid circular importmodel = torch.jit.load(w) if 'torchscript' in w else attempt_load(weights, map_location=device)stride = int(model.stride.max()) # model stridenames = model.module.names if hasattr(model, 'module') else model.names # get class nameselif coreml: # CoreML *.mlmodelimport coremltools as ctmodel = ct.models.MLModel(w)elif dnn: # ONNX OpenCV DNNLOGGER.info(f'Loading {w} for ONNX OpenCV DNN inference...')check_requirements(('opencv-python>=4.5.4',))net = cv2.dnn.readNetFromONNX(w)elif onnx: # ONNX RuntimeLOGGER.info(f'Loading {w} for ONNX Runtime inference...')check_requirements(('onnx', 'onnxruntime-gpu' if torch.has_cuda else 'onnxruntime'))import onnxruntimesession = onnxruntime.InferenceSession(w, None)else: # TensorFlow model (TFLite, pb, saved_model)import tensorflow as tfif pb: # https://www.tensorflow.org/guide/migrate#a_graphpb_or_graphpbtxtdef wrap_frozen_graph(gd, inputs, outputs):x = tf.compat.v1.wrap_function(lambda: tf.compat.v1.import_graph_def(gd, name=""), []) # wrappedreturn x.prune(tf.nest.map_structure(x.graph.as_graph_element, inputs),tf.nest.map_structure(x.graph.as_graph_element, outputs))LOGGER.info(f'Loading {w} for TensorFlow *.pb inference...')graph_def = tf.Graph().as_graph_def()graph_def.ParseFromString(open(w, 'rb').read())frozen_func = wrap_frozen_graph(gd=graph_def, inputs="x:0", outputs="Identity:0")elif saved_model:LOGGER.info(f'Loading {w} for TensorFlow saved_model inference...')model = tf.keras.models.load_model(w)elif tflite: # https://www.tensorflow.org/lite/guide/python#install_tensorflow_lite_for_pythonif 'edgetpu' in w.lower():LOGGER.info(f'Loading {w} for TensorFlow Edge TPU inference...')import tflite_runtime.interpreter as tflidelegate = {'Linux': 'libedgetpu.so.1', # install https://coral.ai/software/#edgetpu-runtime'Darwin': 'libedgetpu.1.dylib','Windows': 'edgetpu.dll'}[platform.system()]interpreter = tfli.Interpreter(model_path=w, experimental_delegates=[tfli.load_delegate(delegate)])else:LOGGER.info(f'Loading {w} for TensorFlow Lite inference...')interpreter = tf.lite.Interpreter(model_path=w) # load TFLite modelinterpreter.allocate_tensors() # allocateinput_details = interpreter.get_input_details() # inputsoutput_details = interpreter.get_output_details() # outputsself.__dict__.update(locals()) # assign all variables to selfdef forward(self, im, augment=False, visualize=False, val=False):# YOLOv5 MultiBackend inferenceb, ch, h, w = im.shape # batch, channel, height, widthif self.pt: # PyTorchy = self.model(im) if self.jit else self.model(im, augment=augment, visualize=visualize)return y if val else y[0]elif self.coreml: # CoreML *.mlmodelim = im.permute(0, 2, 3, 1).cpu().numpy() # torch BCHW to numpy BHWC shape(1,320,192,3)im = Image.fromarray((im[0] * 255).astype('uint8'))# im = im.resize((192, 320), Image.ANTIALIAS)y = self.model.predict({'image': im}) # coordinates are xywh normalizedbox = xywh2xyxy(y['coordinates'] * [[w, h, w, h]]) # xyxy pixelsconf, cls = y['confidence'].max(1), y['confidence'].argmax(1).astype(np.float)y = np.concatenate((box, conf.reshape(-1, 1), cls.reshape(-1, 1)), 1)elif self.onnx: # ONNXim = im.cpu().numpy() # torch to numpyif self.dnn: # ONNX OpenCV DNNself.net.setInput(im)y = self.net.forward()else: # ONNX Runtimey = self.session.run([self.session.get_outputs()[0].name], {self.session.get_inputs()[0].name: im})[0]else: # TensorFlow model (TFLite, pb, saved_model)im = im.permute(0, 2, 3, 1).cpu().numpy() # torch BCHW to numpy BHWC shape(1,320,192,3)if self.pb:y = self.frozen_func(x=self.tf.constant(im)).numpy()elif self.saved_model:y = self.model(im, training=False).numpy()elif self.tflite:input, output = self.input_details[0], self.output_details[0]int8 = input['dtype'] == np.uint8 # is TFLite quantized uint8 modelif int8:scale, zero_point = input['quantization']im = (im / scale + zero_point).astype(np.uint8) # de-scaleself.interpreter.set_tensor(input['index'], im)self.interpreter.invoke()y = self.interpreter.get_tensor(output['index'])if int8:scale, zero_point = output['quantization']y = (y.astype(np.float32) - zero_point) * scale # re-scaley[..., 0] *= w # xy[..., 1] *= h # yy[..., 2] *= w # wy[..., 3] *= h # hy = torch.tensor(y)return (y, []) if val else y# ----------------------------------23.模型扩展模块AutoShape----------------------------------

"""给模型封装成包含预处理,推理和NMS在train中不会被调用,当模型训练结束后,会通过这个模块对图片进行重塑,来方便模型预测

"""

class AutoShape(nn.Module):# YOLOv5 input-robust model wrapper for passing cv2/np/PIL/torch inputs. Includes preprocessing, inference and NMSconf = 0.25 # NMS confidence thresholdiou = 0.45 # NMS IoU thresholdclasses = None # (optional list) filter by class, i.e. = [0, 15, 16] for COCO persons, cats and dogsmulti_label = False # NMS multiple labels per boxmax_det = 1000 # maximum number of detections per imagedef __init__(self, model):super().__init__()self.model = model.eval()def autoshape(self):LOGGER.info('AutoShape already enabled, skipping... ') # model already converted to model.autoshape()return selfdef _apply(self, fn):# Apply to(), cpu(), cuda(), half() to model tensors that are not parameters or registered buffersself = super()._apply(fn)m = self.model.model[-1] # Detect()m.stride = fn(m.stride)m.grid = list(map(fn, m.grid))if isinstance(m.anchor_grid, list):m.anchor_grid = list(map(fn, m.anchor_grid))return self@torch.no_grad()def forward(self, imgs, size=640, augment=False, profile=False):# Inference from various sources. For height=640, width=1280, RGB images example inputs are:# file: imgs = 'data/images/zidane.jpg' # str or PosixPath# URI: = 'https://ultralytics.com/images/zidane.jpg'# OpenCV: = cv2.imread('image.jpg')[:,:,::-1] # HWC BGR to RGB x(640,1280,3)# PIL: = Image.open('image.jpg') or ImageGrab.grab() # HWC x(640,1280,3)# numpy: = np.zeros((640,1280,3)) # HWC# torch: = torch.zeros(16,3,320,640) # BCHW (scaled to size=640, 0-1 values)# multiple: = [Image.open('image1.jpg'), Image.open('image2.jpg'), ...] # list of imagest = [time_sync()]p = next(self.model.parameters()) # for device and typeif isinstance(imgs, torch.Tensor): # torchwith amp.autocast(enabled=p.device.type != 'cpu'):return self.model(imgs.to(p.device).type_as(p), augment, profile) # inference# Pre-processn, imgs = (len(imgs), imgs) if isinstance(imgs, list) else (1, [imgs]) # number of images, list of imagesshape0, shape1, files = [], [], [] # image and inference shapes, filenamesfor i, im in enumerate(imgs):f = f'image{i}' # filenameif isinstance(im, (str, Path)): # filename or uriim, f = Image.open(requests.get(im, stream=True).raw if str(im).startswith('http') else im), imim = np.asarray(exif_transpose(im))elif isinstance(im, Image.Image): # PIL Imageim, f = np.asarray(exif_transpose(im)), getattr(im, 'filename', f) or ffiles.append(Path(f).with_suffix('.jpg').name)if im.shape[0] < 5: # image in CHWim = im.transpose((1, 2, 0)) # reverse dataloader .transpose(2, 0, 1)im = im[..., :3] if im.ndim == 3 else np.tile(im[..., None], 3) # enforce 3ch inputs = im.shape[:2] # HWCshape0.append(s) # image shapeg = (size / max(s)) # gainshape1.append([y * g for y in s])imgs[i] = im if im.data.contiguous else np.ascontiguousarray(im) # updateshape1 = [make_divisible(x, int(self.stride.max())) for x in np.stack(shape1, 0).max(0)] # inference shapex = [letterbox(im, new_shape=shape1, auto=False)[0] for im in imgs] # padx = np.stack(x, 0) if n > 1 else x[0][None] # stackx = np.ascontiguousarray(x.transpose((0, 3, 1, 2))) # BHWC to BCHWx = torch.from_numpy(x).to(p.device).type_as(p) / 255 # uint8 to fp16/32t.append(time_sync())with amp.autocast(enabled=p.device.type != 'cpu'):# Inferencey = self.model(x, augment, profile)[0] # forwardt.append(time_sync())# Post-processy = non_max_suppression(y, self.conf, iou_thres=self.iou, classes=self.classes,multi_label=self.multi_label, max_det=self.max_det) # NMSfor i in range(n):scale_coords(shape1, y[i][:, :4], shape0[i])t.append(time_sync())return Detections(imgs, y, files, t, self.names, x.shape)# ----------------------------------24.推理模块Detections----------------------------------

"""针对目标检测的封装类,对推理结果进行处理

"""

class Detections:# YOLOv5 detections class for inference resultsdef __init__(self, imgs, pred, files, times=None, names=None, shape=None):super().__init__()d = pred[0].device # devicegn = [torch.tensor([*(im.shape[i] for i in [1, 0, 1, 0]), 1, 1], device=d) for im in imgs] # normalizationsself.imgs = imgs # list of images as numpy arrays 原图self.pred = pred # list of tensors pred[0] = (xyxy, conf, cls) 预测值self.names = names # class names 类名self.files = files # image filenames 图像文件名self.xyxy = pred # xyxy pixels 左上角+右下角格式self.xywh = [xyxy2xywh(x) for x in pred] # xywh pixels 中心点+宽长格式self.xyxyn = [x / g for x, g in zip(self.xyxy, gn)] # xyxy normalized xyxy标准化self.xywhn = [x / g for x, g in zip(self.xywh, gn)] # xywh normalized xywh标准化self.n = len(self.pred) # number of images (batch size)self.t = tuple((times[i + 1] - times[i]) * 1000 / self.n for i in range(3)) # timestamps (ms)self.s = shape # inference BCHW shapedef display(self, pprint=False, show=False, save=False, crop=False, render=False, save_dir=Path('')):crops = []for i, (im, pred) in enumerate(zip(self.imgs, self.pred)):s = f'image {i + 1}/{len(self.pred)}: {im.shape[0]}x{im.shape[1]} ' # stringif pred.shape[0]:for c in pred[:, -1].unique():n = (pred[:, -1] == c).sum() # detections per classs += f"{n} {self.names[int(c)]}{'s' * (n > 1)}, " # add to stringif show or save or render or crop:annotator = Annotator(im, example=str(self.names))for *box, conf, cls in reversed(pred): # xyxy, confidence, classlabel = f'{self.names[int(cls)]} {conf:.2f}'if crop:file = save_dir / 'crops' / self.names[int(cls)] / self.files[i] if save else Nonecrops.append({'box': box, 'conf': conf, 'cls': cls, 'label': label,'im': save_one_box(box, im, file=file, save=save)})else: # all othersannotator.box_label(box, label, color=colors(cls))im = annotator.imelse:s += '(no detections)'im = Image.fromarray(im.astype(np.uint8)) if isinstance(im, np.ndarray) else im # from npif pprint:LOGGER.info(s.rstrip(', '))if show:im.show(self.files[i]) # showif save:f = self.files[i]im.save(save_dir / f) # saveif i == self.n - 1:LOGGER.info(f"Saved {self.n} image{'s' * (self.n > 1)} to {colorstr('bold', save_dir)}")if render:self.imgs[i] = np.asarray(im)if crop:if save:LOGGER.info(f'Saved results to {save_dir}\n')return cropsdef print(self):self.display(pprint=True) # print resultsLOGGER.info(f'Speed: %.1fms pre-process, %.1fms inference, %.1fms NMS per image at shape {tuple(self.s)}' %self.t)def show(self):self.display(show=True) # show resultsdef save(self, save_dir='runs/detect/exp'):save_dir = increment_path(save_dir, exist_ok=save_dir != 'runs/detect/exp', mkdir=True) # increment save_dirself.display(save=True, save_dir=save_dir) # save resultsdef crop(self, save=True, save_dir='runs/detect/exp'):save_dir = increment_path(save_dir, exist_ok=save_dir != 'runs/detect/exp', mkdir=True) if save else Nonereturn self.display(crop=True, save=save, save_dir=save_dir) # crop resultsdef render(self):self.display(render=True) # render resultsreturn self.imgsdef pandas(self):# return detections as pandas DataFrames, i.e. print(results.pandas().xyxy[0])new = copy(self) # return copyca = 'xmin', 'ymin', 'xmax', 'ymax', 'confidence', 'class', 'name' # xyxy columnscb = 'xcenter', 'ycenter', 'width', 'height', 'confidence', 'class', 'name' # xywh columnsfor k, c in zip(['xyxy', 'xyxyn', 'xywh', 'xywhn'], [ca, ca, cb, cb]):a = [[x[:5] + [int(x[5]), self.names[int(x[5])]] for x in x.tolist()] for x in getattr(self, k)] # updatesetattr(new, k, [pd.DataFrame(x, columns=c) for x in a])return newdef tolist(self):# return a list of Detections objects, i.e. 'for result in results.tolist():'x = [Detections([self.imgs[i]], [self.pred[i]], self.names, self.s) for i in range(self.n)]for d in x:for k in ['imgs', 'pred', 'xyxy', 'xyxyn', 'xywh', 'xywhn']:setattr(d, k, getattr(d, k)[0]) # pop out of listreturn xdef __len__(self):return self.n# ----------------------------------25.二级分类模块Classify----------------------------------

"""对模型进行二次识别或分类 如车牌识别

"""

class Classify(nn.Module):# Classification head, i.e. x(b,c1,20,20) to x(b,c2)def __init__(self, c1, c2, k=1, s=1, p=None, g=1): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__()self.aap = nn.AdaptiveAvgPool2d(1) # to x(b,c1,1,1)self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g) # to x(b,c2,1,1) 自适应平均池化操作self.flat = nn.Flatten() # 展平def forward(self, x):z = torch.cat([self.aap(y) for y in (x if isinstance(x, list) else [x])], 1) # cat if list 先自适应平均池化操作 然后拼接return self.flat(self.conv(z)) # flatten to x(b,c2) 对z进行展品操作

相关文章:

YOLOv5源码解读1.7-网络架构common.py

往期回顾:YOLOv5源码解读1.0-目录_汉卿HanQ的博客-CSDN博客 学习了yolo.py网络模型后,今天学习common.py,common.py存放这YOLOv5网络搭建的通用模块,如果修改某一块,就要修改这个文件中对应的模块 目录 1.导入python包 2.加载自…...

关于前端框架vue2升级为vue3的相关说明

一些框架需要升级 当前(202306) Vue 的最新稳定版本是 v3.3.4。Vue 框架升级为最新的3.0版本,涉及的相关依赖变更有: 前提条件:已安装 16.0 或更高版本的Node.js(摘) 必须的变更:核…...

gdb调试时查看汇编代码

在gdb中查看汇编代码,可以使用display命令或x命令。 以下是一个示例程序,我们以它为例来演示如何在gdb中查看汇编代码。 #include <stdio.h> int main() { int a 10; int b 20; int c a b; printf("c %d\n", c); return 0;…...

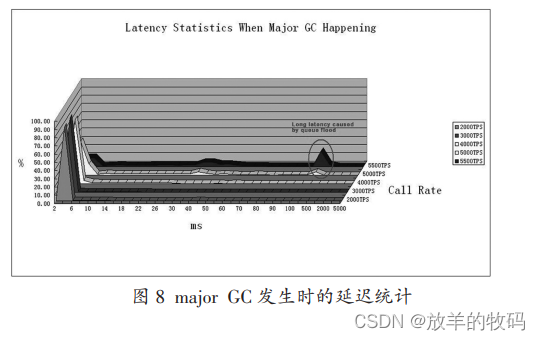

小研究 - JVM GC 对 IMS HSS 延迟分析(二)

用户归属服务器(IMS HSS)是下一代通信网(NGN)核心网络 IP 多媒体子系统(IMS)中的主要用户数据库。IMS HSS 中存储用户的配置文件,可执行用户的身份验证和授权,并提供对呼叫控制服务器…...

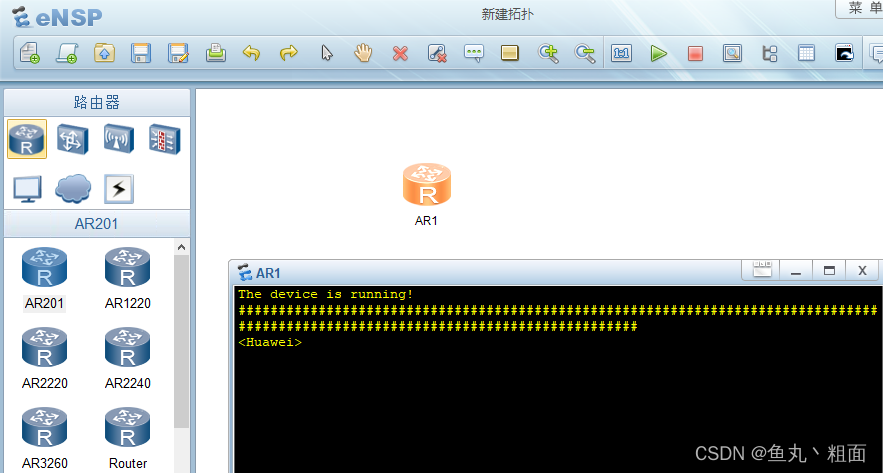

eNSP 路由器启动时一直显示 # 号的解决办法

文章目录 1 问题截图2 解决办法2.1 办法一:排除防火墙原因导致 3 验证是否成功 1 问题截图 路由器命令行一直显示 # 号,如下图 2 解决办法 2.1 办法一:排除防火墙原因导致 排查是否因为系统防火墙原因导致。放行与 eNSP 和 virtualbox 相…...

Kotlin~Facade

概念 又称门面模式,为复杂系统提供简单交互接口。 角色介绍 Facade:外观类,供客户端调用,将请求委派给响应的子系统。SubSystem:子系统,独立的子设备或子类 UML 代码实现 class Light(val name: Strin…...

服务配置文件/var/lib/systemd与/etc/systemd/

这两个目录都是用于存储 systemd 服务的配置文件。但它们的作用和用途略有不同。 /etc/systemd/system/: 这个目录存放的是系统管理员自己创建或修改的 systemd 服务配置文件。在这里的配置文件优先级更高,会覆盖默认的 systemd 配置文件。通常,我们可以…...

华为、阿里巴巴、字节跳动 100+ Python 面试问题总结(一)

系列文章目录 个人简介:机电专业在读研究生,CSDN内容合伙人,博主个人首页 Python面试专栏:《Python面试》此专栏面向准备面试的2024届毕业生。欢迎阅读,一起进步!🌟🌟🌟 …...

【牛客网】二叉搜索树与双向链表

二叉搜索树与双向链表 题目描述算法分析编程代码 链接: 二叉搜索树与双向链表 题目描述 算法分析 编程代码 /* struct TreeNode {int val;struct TreeNode *left;struct TreeNode *right;TreeNode(int x) :val(x), left(NULL), right(NULL) {} };*/ class Solution { public:…...

Oracle免费在线编程:Oracle APEX

前提: 注意:你要有个梯子才能更稳定的访问。 不需要安装Oracle,但是需要注册。(还算方便的) 注册&登录过程 进入Oracle APEX官网,我们选择免费的APEX工作区即可,点击“免费注册”。在注册…...

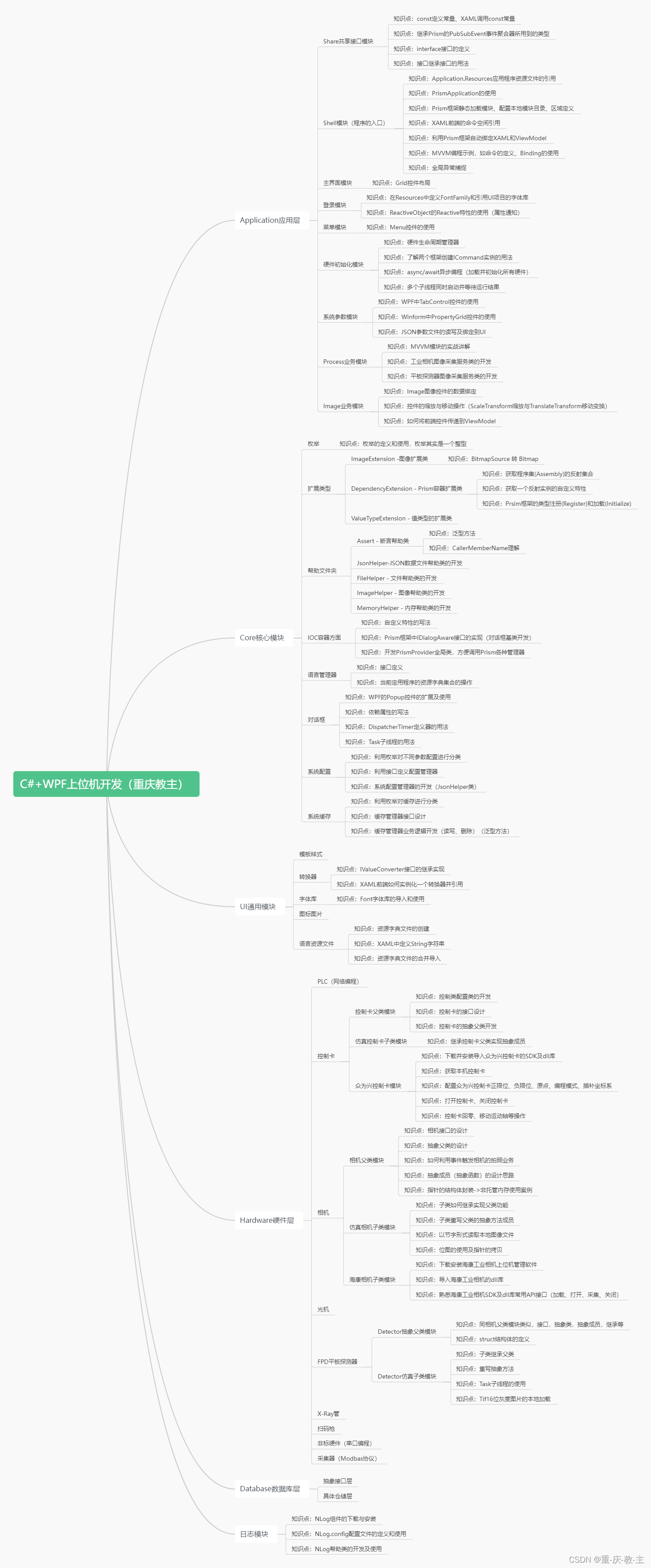

C#+WPF上位机开发(模块化+反应式)

在上位机开发领域中,C#与C两种语言是应用最多的两种开发语言,在C语言中,与之搭配的前端框架通常以QT最为常用,而C#语言中,与之搭配的前端框架是Winform和WPF两种框架。今天我们主要讨论一下C#和WPF这一对组合在上位机开…...

【LeetCode 算法】Card Flipping Game 翻转卡片游戏-阅读题

文章目录 Card Flipping Game 翻转卡片游戏问题描述:EN 分析代码 Tag Card Flipping Game 翻转卡片游戏 问题描述: 在桌子上有 N 张卡片,每张卡片的正面和背面都写着一个正数(正面与背面上的数有可能不一样)。 我们…...

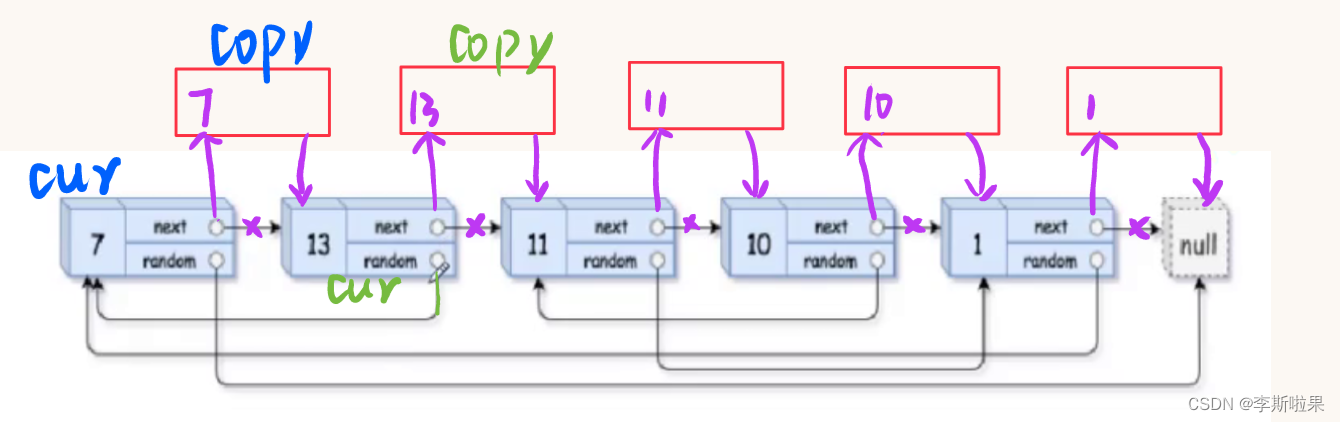

【leetcode】138.复制带随机指针的链表

方法一:暴力求解 1️⃣遍历原链表,复制节点尾插 2️⃣更新random,原链表中的random对应第几个节点则复制链表中的random就对应第几个 📖Note 不能通过节点中的val判断random的指向,因为链表中可能存在两个val相等的节点…...

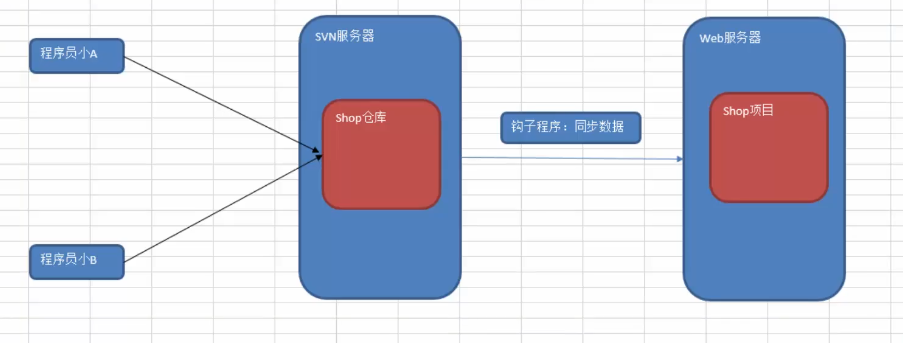

svn工具使用

svn 介绍 解决之道: SCM:软件配置管理 所谓的软件配置管理实际就是对软件源代码进行控制与管理 CVS:元老级产品 VSS:入门级产品 ClearCase:IBM 公司提供技术支持 SVN:主流产品 什么是SVNÿ…...

SpringBoot项目使用MyBatisX+Apifox IDEA 插件快速开发

今天跟大家介绍两个快速开发项目的插件。能大大提高开发效率。希望能帮助到大家。 1、MyBatisX 插件 MyBatis-Plus为我们提供了强大的mapper和service模板,能够大大的提高开发效率。但是在真正开发过程中,MyBatis-Plus并不能为我们解决所有问题…...

Redis数据结构

Redis 支持的数据结构的列表 1、String:字符串,是 Redis 最基本的数据类型,可以存储字符串、整数和浮点数。 2、Hash:哈希表,由多个键值对组成,可以储存多个字段和值。 3、List:列表,…...

解密Redis:应对面试中的缓存相关问题

文章目录 1. 缓存穿透问题及解决方案2. 缓存击穿问题及解决方案3. 缓存雪崩问题及解决方案4. Redis的数据持久化5. Redis的过期删除策略和数据淘汰策略6. Redis分布式锁和主从同步7. Redis集群方案8. Redis的数据一致性保障和高可用性方案 导语: 在面试过程中&#…...

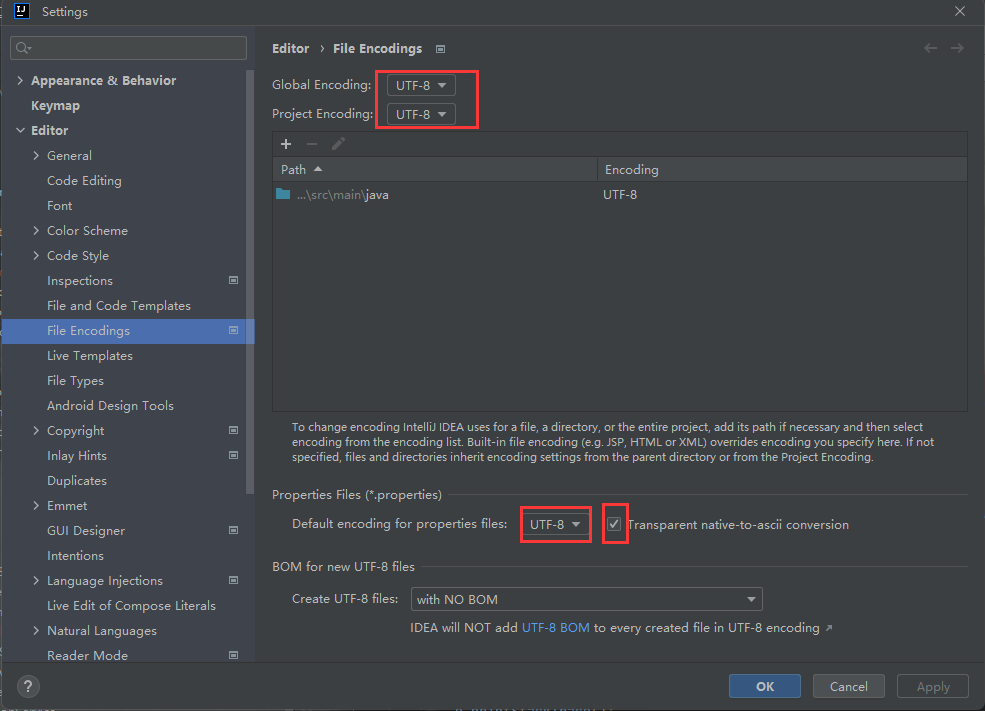

读取application-dev.properties的中文乱码【bug】

读取application-dev.properties的中文编码【bug】 2023-7-30 22:37:46 版权 禁止其他平台发布时删除以下此话 本文首次发布于CSDN平台 作者是CSDN日星月云 博客主页是https://blog.csdn.net/qq_51625007 禁止其他平台发布时删除以上此话 bug 读取application-dev.propert…...

Linux(centos7)如何实现配置iscsi存储多路径 及DM-Multipath的配置文件概述

安装多路径软件(系统默认安装) #第一:安装多路径软件yum -y install device-mapper device-mapper-multipath#第二:在CentOS7中启用多路径模块,mpathconf命令及相关模块加载(可以使用mpathconf -h查看用法&…...

DK7 vs JDK8 vs JDK11特性和功能的对比

JDK7 vs JDK8 vs JDK11特性和功能的对比 Java Development Kit (JDK) 是 Java 程序员所使用的开发工具包,它提供了编译、调试和运行 Java 程序所需的一切。JDK 在不同的版本中引入了许多新的特性和功能,下面我们来比较 JDK7、JDK8 和 JDK11 之间的一些重…...

KubeSphere 容器平台高可用:环境搭建与可视化操作指南

Linux_k8s篇 欢迎来到Linux的世界,看笔记好好学多敲多打,每个人都是大神! 题目:KubeSphere 容器平台高可用:环境搭建与可视化操作指南 版本号: 1.0,0 作者: 老王要学习 日期: 2025.06.05 适用环境: Ubuntu22 文档说…...

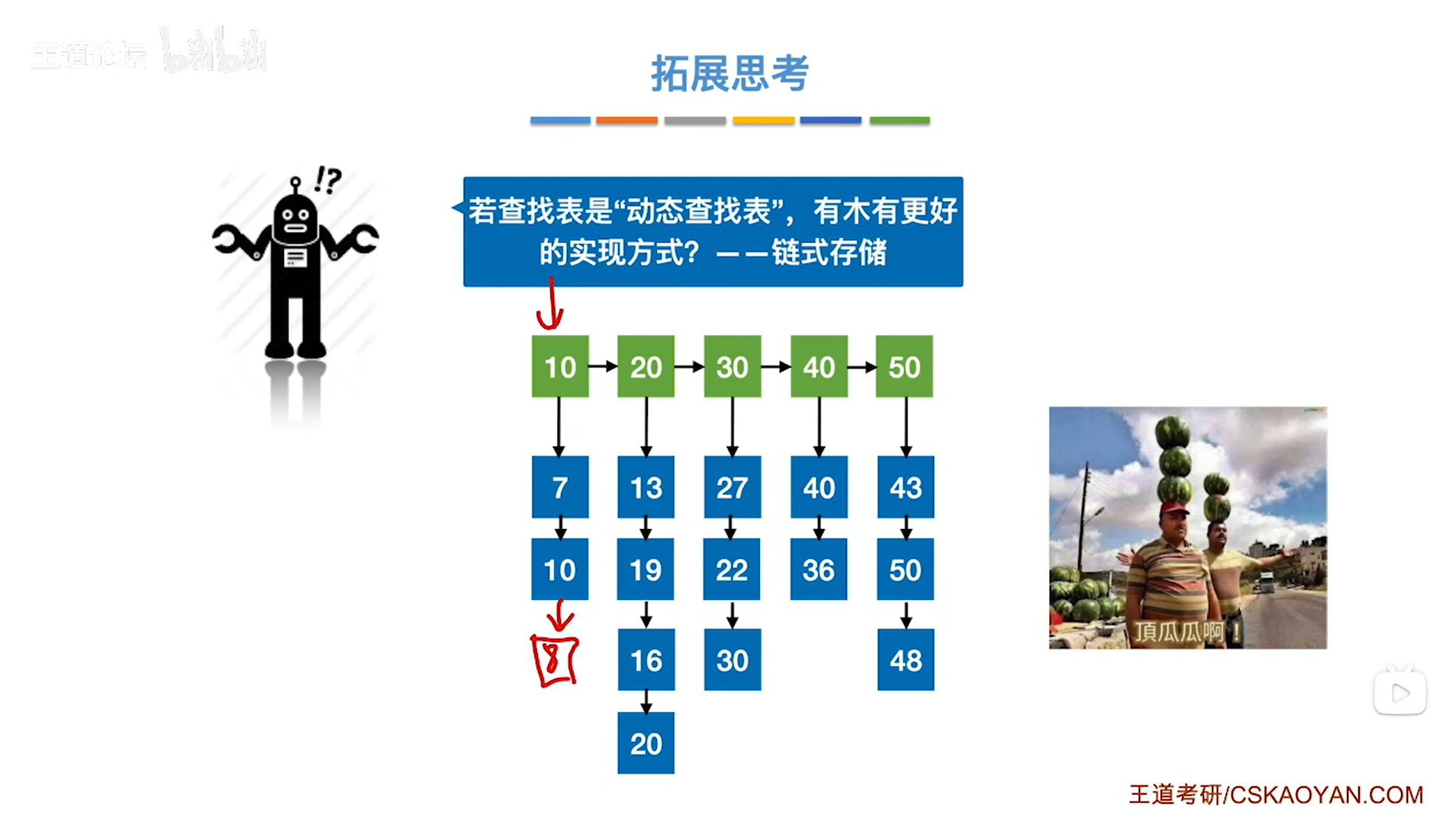

7.4.分块查找

一.分块查找的算法思想: 1.实例: 以上述图片的顺序表为例, 该顺序表的数据元素从整体来看是乱序的,但如果把这些数据元素分成一块一块的小区间, 第一个区间[0,1]索引上的数据元素都是小于等于10的, 第二…...

ubuntu搭建nfs服务centos挂载访问

在Ubuntu上设置NFS服务器 在Ubuntu上,你可以使用apt包管理器来安装NFS服务器。打开终端并运行: sudo apt update sudo apt install nfs-kernel-server创建共享目录 创建一个目录用于共享,例如/shared: sudo mkdir /shared sud…...

PHP和Node.js哪个更爽?

先说结论,rust完胜。 php:laravel,swoole,webman,最开始在苏宁的时候写了几年php,当时觉得php真的是世界上最好的语言,因为当初活在舒适圈里,不愿意跳出来,就好比当初活在…...

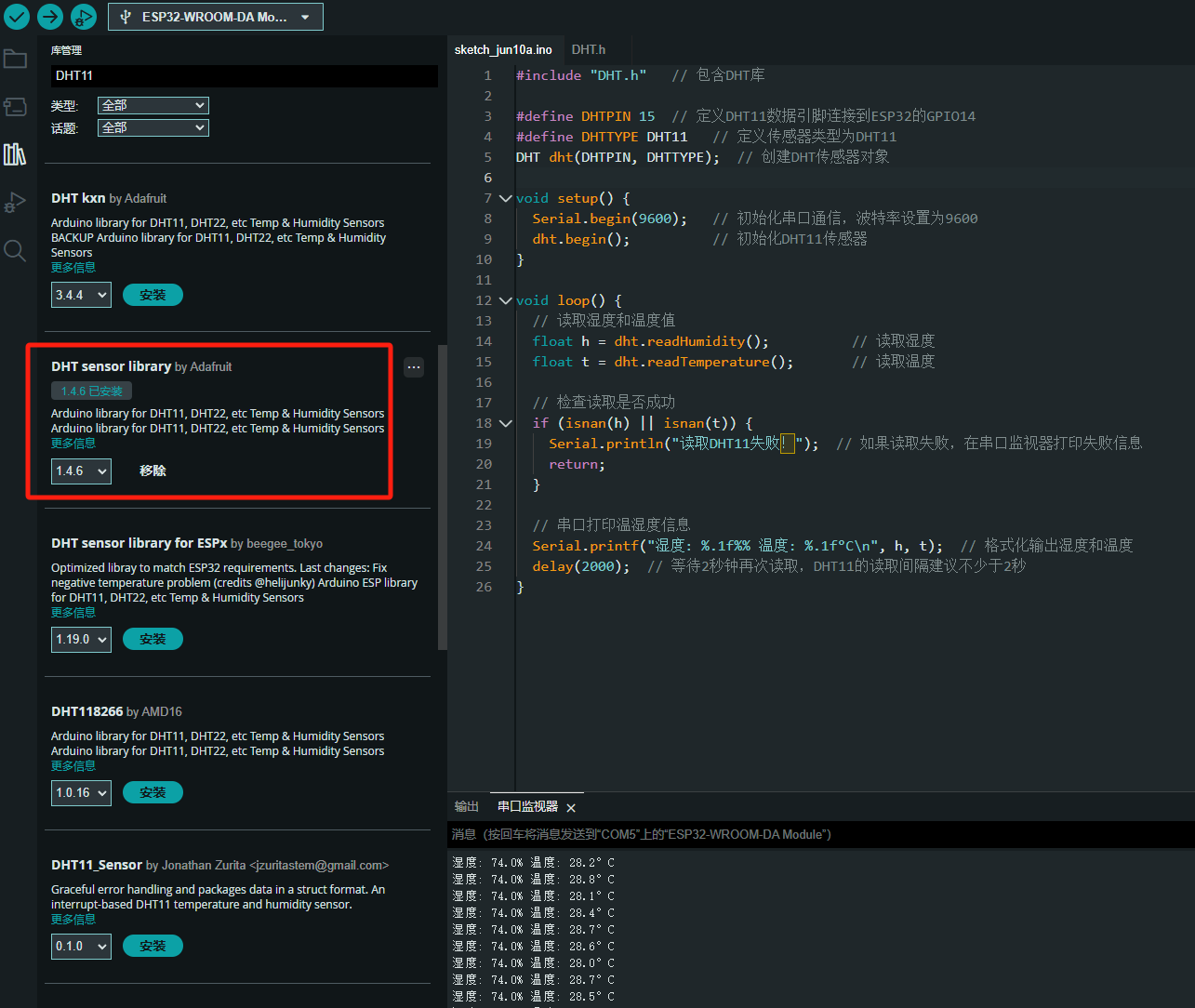

ESP32读取DHT11温湿度数据

芯片:ESP32 环境:Arduino 一、安装DHT11传感器库 红框的库,别安装错了 二、代码 注意,DATA口要连接在D15上 #include "DHT.h" // 包含DHT库#define DHTPIN 15 // 定义DHT11数据引脚连接到ESP32的GPIO15 #define D…...

测试markdown--肇兴

day1: 1、去程:7:04 --11:32高铁 高铁右转上售票大厅2楼,穿过候车厅下一楼,上大巴车 ¥10/人 **2、到达:**12点多到达寨子,买门票,美团/抖音:¥78人 3、中饭&a…...

Cinnamon修改面板小工具图标

Cinnamon开始菜单-CSDN博客 设置模块都是做好的,比GNOME简单得多! 在 applet.js 里增加 const Settings imports.ui.settings;this.settings new Settings.AppletSettings(this, HTYMenusonichy, instance_id); this.settings.bind(menu-icon, menu…...

DIY|Mac 搭建 ESP-IDF 开发环境及编译小智 AI

前一阵子在百度 AI 开发者大会上,看到基于小智 AI DIY 玩具的演示,感觉有点意思,想着自己也来试试。 如果只是想烧录现成的固件,乐鑫官方除了提供了 Windows 版本的 Flash 下载工具 之外,还提供了基于网页版的 ESP LA…...

拉力测试cuda pytorch 把 4070显卡拉满

import torch import timedef stress_test_gpu(matrix_size16384, duration300):"""对GPU进行压力测试,通过持续的矩阵乘法来最大化GPU利用率参数:matrix_size: 矩阵维度大小,增大可提高计算复杂度duration: 测试持续时间(秒&…...

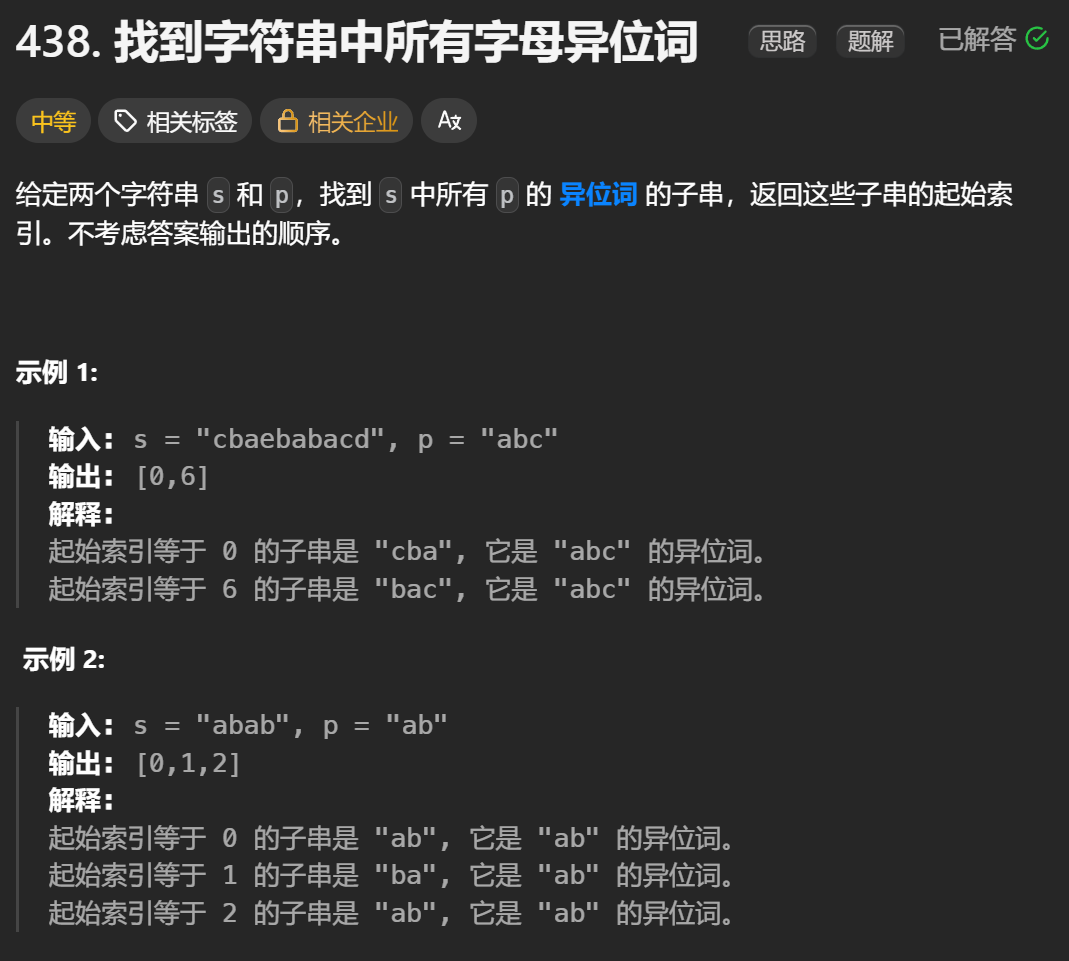

12.找到字符串中所有字母异位词

🧠 题目解析 题目描述: 给定两个字符串 s 和 p,找出 s 中所有 p 的字母异位词的起始索引。 返回的答案以数组形式表示。 字母异位词定义: 若两个字符串包含的字符种类和出现次数完全相同,顺序无所谓,则互为…...