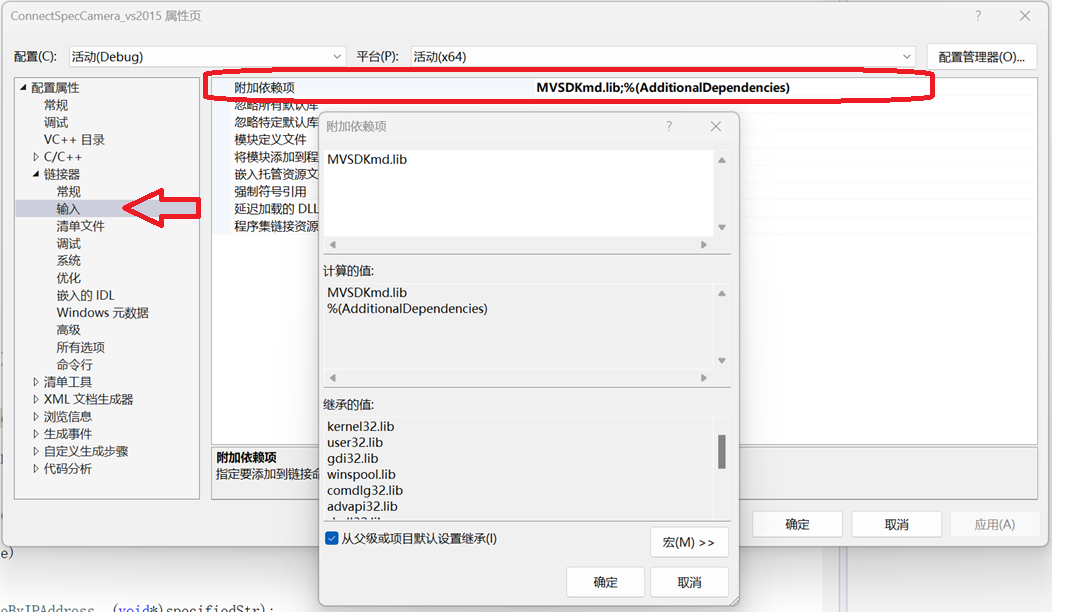

k8s v1.27.4 binary deployment notes

A record of the binary deployment process.

#!/bin/bash

# Upgrade the kernel
update_kernel() {
  rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
  yum -y install https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpm
  yum --disablerepo="*" --enablerepo="elrepo-kernel" list available
  yum --disablerepo='*' --enablerepo=elrepo-kernel -y install kernel-lt
  # List the GRUB menu entries, then boot the first one (the new kernel) by default
  awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg
  grub2-set-default 0
  reboot
}

# Install containerd
containerd_install() {
  wget -O /etc/yum.repos.d/docker-ce.repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
  yum install -y containerd.io
  mkdir -p /etc/containerd
  containerd config default | sudo tee /etc/containerd/config.toml
  # Pull the pause image from the Aliyun mirror, then print line 61 to check the change
  sed -i.bak 's@registry.k8s.io/pause:3.6@registry.aliyuncs.com/google_containers/pause:3.9@' /etc/containerd/config.toml
  sed -n 61p /etc/containerd/config.toml
  # Use the systemd cgroup driver, then print line 125 to check the change
  sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml
  sed -n 125p /etc/containerd/config.toml
  systemctl enable containerd
  systemctl start containerd
}

# Enable shell auto-completion for kubectl
kubectl_shell_completion() {
  yum install bash-completion -y
  source /usr/share/bash-completion/bash_completion
  source <(kubectl completion bash)
  echo 'source <(kubectl completion bash)' >>~/.bashrc
}

# IPVS

ipvs_setup() {

yum install ipvsadm ipset sysstat conntrack libseccomp -y

mkdir -p /etc/modules-load.d/

cat >> /etc/modules-load.d/ipvs.conf <<EOF

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

br_netfilter

ip_conntrack

overlay

EOF

systemctl restart systemd-modules-load.service

lsmod | grep -e ip_vs -e nf_conntrack

}

# Tune kernel parameters

sysctlconf() {

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

vm.overcommit_memory = 1

vm.panic_on_oom = 0

fs.inotify.max_user_watches = 89100

fs.file-max = 52706963

fs.nr_open = 52706963

net.netfilter.nf_conntrack_max = 2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

net.ipv6.conf.all.disable_ipv6 = 0

net.ipv6.conf.default.disable_ipv6 = 0

net.ipv6.conf.lo.disable_ipv6 = 0

net.ipv6.conf.all.forwarding = 1

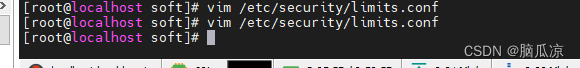

EOFsysctl -p /etc/sysctl.d/k8s.confcat <<EOF>>/etc/security/limits.conf

* soft nofile 655360

* hard nofile 655360

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF

}

# Install cfssl

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.2/cfssl-certinfo_1.6.2_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.2/cfssl_1.6.2_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.6.2/cfssljson_1.6.2_linux_amd64

mv cfssl_1.6.2_linux_amd64 cfssl

mv cfssl-certinfo_1.6.2_linux_amd64 cfssl-certinfo

mv cfssljson_1.6.2_linux_amd64 cfssljson

chmod +x ./cfssl*

cp cfssl* /bin/

# Configure the etcd certificates

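etcd-ca-config.json

{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "etcd": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}

# ca-config.json: several profiles can be defined, each with its own expiry, usages, and other parameters; one profile is selected later when a certificate is signed.

# signing: the certificate can be used to sign other certificates; the generated ca.pem has CA=TRUE.

# server auth: a client can use this CA to verify the certificate presented by a server.

# client auth: a server can use this CA to verify the certificate presented by a client.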

etcd-ca-csr.json

{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}

etcd-server-csr.json

{
  "CN": "etcd",
  "hosts": [
    "127.0.0.1",
    "172.16.0.40",
    "172.16.0.235",
    "172.16.0.157"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing"
    }
  ]
}

# Generate the etcd certificates

mkdir pem

cfssl gencert -initca etcd-ca-csr.json | cfssljson -bare ./pem/etcd-ca -

cfssl gencert -ca=./pem/etcd-ca.pem -ca-key=./pem/etcd-ca-key.pem -config=etcd-ca-config.json -profile=etcd etcd-server-csr.json | cfssljson -bare ./pem/etcd-server

# Install etcd

wget https://github.com/etcd-io/etcd/releases/download/v3.4.27/etcd-v3.4.27-linux-amd64.tar.gz

tar -zxvf etcd-v3.4.27-linux-amd64.tar.gz

mkdir -pv /data/etcd/{bin,log,conf,data,ssl}

cp etcd-v3.4.27-linux-amd64/etcd* /data/etcd/bin/

chmod +x /data/etcd/bin/*

# Copy the certificate files to /data/etcd/ssl

# Create the configuration file; change the host name and IPs to match each node

/data/etcd/conf/etcd.conf

#[Member]

ETCD_NAME=k8s-master2

ETCD_DATA_DIR="/data/etcd/data"

ETCD_LISTEN_PEER_URLS="https://172.16.0.235:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.16.0.235:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.16.0.235:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.16.0.235:2379"

ETCD_INITIAL_CLUSTER="k8s-master1=https://172.16.0.40:2380,k8s-master2=https://172.16.0.235:2380,k8s-master3=https://172.16.0.157:2380"

ETCD_INITIAL_CLUSTER_TOKEN="hfy-etcd-server-cluster-g1"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_ENABLE_V2="true"

#ETCD_HEARTBEAT_INTERVAL=200

#ETCD_ELECTION_TIMEOUT=2000

#ETCD_SNAPSHOT_COUNT=2000

#ETCD_AUTO_COMPACTION_RETENTION="1"

#ETCD_MAX_REQUEST_BYTES='33554432'

#ETCD_QUOTA_BACKEND_BYTES='8589934592'

# Configure the systemd service

/usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=/data/etcd/conf/etcd.conf

#https

ExecStart=/data/etcd/bin/etcd \

--cert-file=/data/etcd/ssl/etcd-server.pem \

--key-file=/data/etcd/ssl/etcd-server-key.pem \

--peer-cert-file=/data/etcd/ssl/etcd-server.pem \

--peer-key-file=/data/etcd/ssl/etcd-server-key.pem \

--trusted-ca-file=/data/etcd/ssl/etcd-ca.pem \

--peer-trusted-ca-file=/data/etcd/ssl/etcd-ca.pem \

--logger=zap

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

systemctl start etcd.service

systemctl enable etcd.service
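
Only ETCD_NAME and the listen/advertise URLs change from one member to the next, so the per-host etcd.conf can be rendered from the member list instead of being edited by hand. The following is a minimal sketch, assuming the three member names and IPs used in these notes; `render_etcd_conf`, `initial_cluster`, and `OUT_DIR` are hypothetical names, and the optional commented-out settings are left out.

```shell
#!/bin/bash
# Sketch: render one etcd.conf per member (names and IPs taken from the notes above).
# render_etcd_conf, initial_cluster and OUT_DIR are illustrative names, not part of etcd.

MEMBERS="k8s-master1=172.16.0.40 k8s-master2=172.16.0.235 k8s-master3=172.16.0.157"

# Build the ETCD_INITIAL_CLUSTER value shared by every member.
initial_cluster() {
  local out="" m
  for m in $MEMBERS; do
    out="${out:+$out,}${m%%=*}=https://${m#*=}:2380"
  done
  echo "$out"
}

# Print the etcd.conf for a single member: $1 = member name, $2 = member IP.
render_etcd_conf() {
  local name=$1 ip=$2
  cat <<EOF
#[Member]
ETCD_NAME=${name}
ETCD_DATA_DIR="/data/etcd/data"
ETCD_LISTEN_PEER_URLS="https://${ip}:2380"
ETCD_LISTEN_CLIENT_URLS="https://${ip}:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ip}:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://${ip}:2379"
ETCD_INITIAL_CLUSTER="$(initial_cluster)"
ETCD_INITIAL_CLUSTER_TOKEN="hfy-etcd-server-cluster-g1"
ETCD_INITIAL_CLUSTER_STATE="new"
ETCD_ENABLE_V2="true"
EOF
}

# Write <name>.conf for each member into a local directory.
OUT_DIR=${OUT_DIR:-./etcd-conf}
mkdir -p "$OUT_DIR"
for m in $MEMBERS; do
  render_etcd_conf "${m%%=*}" "${m#*=}" > "$OUT_DIR/${m%%=*}.conf"
done
```

Each rendered file would then be copied to /data/etcd/conf/etcd.conf on the matching host.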

==================================== Download the Kubernetes files

https://cdn.dl.k8s.io/release/v1.27.4/kubernetes-server-linux-amd64.tar.gz

# Create a user; the token file below uses this user's UID

useradd product

# Create the kube-apiserver directories

mkdir -pv /data/kubernetes/kube-apiserver/{bin,conf,logs}

# Create the directory that holds the certificates

mkdir -pv /data/kubernetes/sslpem

# Download the release tarball

https://cdn.dl.k8s.io/release/v1.27.4/kubernetes-server-linux-amd64.tar.gz

# Extract it and copy the kube-apiserver binary to /data/kubernetes/kube-apiserver/bin

cp kube-apiserver /data/kubernetes/kube-apiserver/bin

# Create the token file; all masters share this file

head -c 16 /dev/urandom | od -An -t x | tr -d ' '

2d1fb42eaba4ebfea22f024f868c82e3

cat > /data/kubernetes/kube-apiserver/conf/token.csv << EOF

2d1fb42eaba4ebfea22f024f868c82e3,kubelet-bootstrap,1000,"system:node-bootstrapper"

EOF
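
The two steps above (generate a token, paste it into token.csv) can be combined so the token never has to be copied by hand. This is a minimal sketch under assumptions: `TOKEN_FILE` is a hypothetical variable that defaults to a local path rather than the real location, and restricting the file mode is a suggestion of this sketch, not something the original notes do.

```shell
#!/bin/bash
# Sketch: generate the bootstrap token and write token.csv in one step.
# TOKEN_FILE defaults to a local path here; the notes use
# /data/kubernetes/kube-apiserver/conf/token.csv.
TOKEN_FILE=${TOKEN_FILE:-./token.csv}

# 16 random bytes rendered as 32 lowercase hex characters.
TOKEN=$(head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n')

# Columns: token, user name, UID, quoted group list.
printf '%s,kubelet-bootstrap,1000,"system:node-bootstrapper"\n' "$TOKEN" > "$TOKEN_FILE"

# The file holds a credential, so keep it readable by its owner only (assumption).
chmod 600 "$TOKEN_FILE"
```

The same token value also has to appear in the kubelet's bootstrap.kubeconfig, so `$TOKEN` is worth keeping in the shell session until that file is written.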

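# Column 1, 2d1fb42eaba4ebfea22f024f868c82e3, is the token.

# Column 2, kubelet-bootstrap, is the user name the token authenticates as.

# Column 3, 1000, is the user's UID.

# Column 4, "system:node-bootstrapper", is the group the user belongs to.

# Create the API server certificates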

mkdir -pv k8sssl/pem

cd k8sssl

cat > kube-apiserver-ca-csr.json << EOF
{
  "CN": "kubernetes",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cat > kube-apiserver-ca-config.json <<EOF
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": ["signing", "key encipherment", "server auth", "client auth"]
      }
    }
  }
}
EOF

cfssl gencert -initca kube-apiserver-ca-csr.json | cfssljson -bare ./pem/kube-apiserver-ca -

ls -l pem/

#-rw-r--r-- 1 root root 1001 Aug 17 09:56 kube-apiserver-ca.csr

#-rw------- 1 root root 1675 Aug 17 09:56 kube-apiserver-ca-key.pem

#-rw-r--r-- 1 root root 1310 Aug 17 09:56 kube-apiserver-ca.pem

# Change the IPs below to those of your masters

cat > kube-apiserver-server-csr.json <<EOF
{
  "CN": "kubernetes",
  "hosts": [
    "10.0.0.1",
    "127.0.0.1",
    "172.16.0.40",
    "172.16.0.235",
    "172.16.0.157",
    "www.hf.com",
    "kbsapisvr.hf.com.cn",
    "kubernetes",
    "kubernetes.default",
    "kubernetes.default.svc",
    "kubernetes.default.svc.cluster",
    "kubernetes.default.svc.cluster.local"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=./pem/kube-apiserver-ca.pem -ca-key=./pem/kube-apiserver-ca-key.pem -config=kube-apiserver-ca-config.json -profile=kubernetes kube-apiserver-server-csr.json | cfssljson -bare ./pem/kube-apiserver-server

ls -l pem/

# Generated files

#-rw-r--r-- 1 root root 1001 Aug 17 10:00 kube-apiserver-ca.csr

#-rw------- 1 root root 1679 Aug 17 10:00 kube-apiserver-ca-key.pem

#-rw-r--r-- 1 root root 1310 Aug 17 10:00 kube-apiserver-ca.pem

#-rw-r--r-- 1 root root 1305 Aug 17 10:07 kube-apiserver-server.csr

#-rw------- 1 root root 1679 Aug 17 10:07 kube-apiserver-server-key.pem

#-rw-r--r-- 1 root root 1671 Aug 17 10:07 kube-apiserver-server.pem

# Copy the certificates to the three masters

for ip in {172.16.0.40,172.16.0.235,172.16.0.157};do scp ./pem/*.pem $ip:/data/kubernetes/sslpem/;done

vim /data/kubernetes/kube-apiserver/conf/kube-apiserver.conf

KUBE_APISERVER_OPTS="--v=2 \

--audit-log-path=/data/kubernetes/kube-apiserver/logs/k8s-audit.log \

--etcd-servers=https://172.16.0.40:2379,https://172.16.0.235:2379,https://172.16.0.157:2379 \

--bind-address=172.16.0.40 \

--secure-port=6443 \

--advertise-address=172.16.0.40 \

--allow-privileged=true \

--service-cluster-ip-range=10.0.0.0/24 \

--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,ResourceQuota,NodeRestriction \

--authorization-mode=RBAC,Node \

--enable-bootstrap-token-auth=true \

--token-auth-file=/data/kubernetes/kube-apiserver/conf/token.csv \

--service-node-port-range=30000-32767 \

--kubelet-client-certificate=/data/kubernetes/sslpem/kube-apiserver-server.pem \

--kubelet-client-key=/data/kubernetes/sslpem/kube-apiserver-server-key.pem \

--tls-cert-file=/data/kubernetes/sslpem/kube-apiserver-server.pem \

--tls-private-key-file=/data/kubernetes/sslpem/kube-apiserver-server-key.pem \

--client-ca-file=/data/kubernetes/sslpem/kube-apiserver-ca.pem \

--service-account-key-file=/data/kubernetes/sslpem/kube-apiserver-ca-key.pem \

--service-account-signing-key-file=/data/kubernetes/sslpem/kube-apiserver-ca-key.pem \

--service-account-issuer=api \

--etcd-cafile=/data/etcd/ssl/etcd-ca.pem \

--etcd-certfile=/data/etcd/ssl/etcd-server.pem \

--etcd-keyfile=/data/etcd/ssl/etcd-server-key.pem \

--requestheader-client-ca-file=/data/kubernetes/sslpem/kube-apiserver-ca.pem \

--proxy-client-cert-file=/data/kubernetes/sslpem/kube-apiserver-server.pem \

--proxy-client-key-file=/data/kubernetes/sslpem/kube-apiserver-server-key.pem \

--requestheader-allowed-names=kubernetes \

--requestheader-extra-headers-prefix=X-Remote-Extra- \

--requestheader-group-headers=X-Remote-Group \

--requestheader-username-headers=X-Remote-User \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--http2-max-streams-per-connection=10000 \

--audit-log-maxsize=100 \

--default-not-ready-toleration-seconds=300 \

--default-unreachable-toleration-seconds=300 \

--max-mutating-requests-inflight=200 \

--max-requests-inflight=400 \

--min-request-timeout=1800 \

--kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname \

--request-timeout=1m0s \

--default-watch-cache-size=100 \

--delete-collection-workers=1 \

--enable-garbage-collector=true \

--etcd-compaction-interval=5m0s \

--etcd-count-metric-poll-period=1m0s \

--watch-cache=true \

--audit-log-batch-buffer-size=10000 \

--audit-log-batch-max-size=1 \

--service-account-issuer=https://kubernetes.default.svc.cluster.local \

--audit-log-truncate-max-batch-size=10485760 \

--audit-log-truncate-max-event-size=102400 \

--audit-webhook-batch-buffer-size=10000 \

--audit-webhook-batch-max-size=400 \

--audit-webhook-batch-max-wait=30s \

--audit-webhook-batch-throttle-burst=15 \

--audit-webhook-batch-throttle-enable=true \

--audit-webhook-batch-throttle-qps=10 \

--audit-webhook-truncate-max-batch-size=10485760 \

--audit-webhook-truncate-max-event-size=102400 \

--authentication-token-webhook-cache-ttl=2m0s \

--authorization-webhook-cache-authorized-ttl=5m0s \

--authorization-webhook-cache-unauthorized-ttl=30s \

--kubelet-timeout=5s"

vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/data/kubernetes/kube-apiserver/conf/kube-apiserver.conf

ExecStart=/data/kubernetes/kube-apiserver/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

systemctl start kube-apiserver.service

systemctl status kube-apiserver.service

# Deploy kube-controller-manager

mkdir -pv /data/kubernetes/kube-controller-manager/{bin,conf,logs}

for ip in {172.16.0.40,172.16.0.235,172.16.0.157};do scp ./kube-controller-manager $ip:/data/kubernetes/kube-controller-manager/bin/;done

Newer versions of the API server no longer open the insecure port, so each component must connect over the secure port with its own client certificate.

cat > kube-controller-manager-csr.json << EOF
{
  "CN": "system:kube-controller-manager",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

cfssl gencert -ca=./pem/kube-apiserver-ca.pem -ca-key=./pem/kube-apiserver-ca-key.pem -config=kube-apiserver-ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare ./pem/kube-controller-manager

ls -l pem

total 36

-rw-r--r-- 1 root root 1001 Aug 17 10:00 kube-apiserver-ca.csr

-rw------- 1 root root 1679 Aug 17 10:00 kube-apiserver-ca-key.pem

-rw-r--r-- 1 root root 1310 Aug 17 10:00 kube-apiserver-ca.pem

-rw-r--r-- 1 root root 1305 Aug 17 10:07 kube-apiserver-server.csr

-rw------- 1 root root 1679 Aug 17 10:07 kube-apiserver-server-key.pem

-rw-r--r-- 1 root root 1671 Aug 17 10:07 kube-apiserver-server.pem

-rw-r--r-- 1 root root 326 Aug 17 11:29 kube-controller-manager.csr

-rw------- 1 root root 227 Aug 17 11:29 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 981 Aug 17 11:29 kube-controller-manager.pem

# Generate the kube-controller-manager kubeconfig file

KUBE_CONFIG="/tmp/kube-controller-manager.kubeconfig"

KUBE_APISERVER="https://kbsapisvr.hf.com.cn:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=./pem/kube-apiserver-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-controller-manager \
  --client-certificate=./pem/kube-controller-manager.pem \
  --client-key=./pem/kube-controller-manager-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-controller-manager \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}
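
The kubeconfig is written to /tmp, while the --kubeconfig flag in kube-controller-manager.conf points at /data/kubernetes/kube-controller-manager/conf/kube-controller-manager.kubeconfig, so the file still has to reach each master. A minimal sketch of that copy step, assuming the three master IPs used throughout these notes; `distribute` and `DRY_RUN` are hypothetical names, and by default the sketch only prints the scp commands it would run.

```shell
#!/bin/bash
# Sketch: copy a generated kubeconfig to every master.
# MASTERS comes from the notes; DRY_RUN and distribute are illustrative names.
MASTERS="172.16.0.40 172.16.0.235 172.16.0.157"
DRY_RUN=${DRY_RUN:-1}   # 1 = only print the commands, 0 = actually run scp

# $1 = local file, $2 = destination path on each master.
distribute() {
  local src=$1 dest=$2 ip
  for ip in $MASTERS; do
    if [ "$DRY_RUN" = "1" ]; then
      echo "scp $src $ip:$dest"
    else
      scp "$src" "$ip:$dest"
    fi
  done
}

distribute /tmp/kube-controller-manager.kubeconfig \
  /data/kubernetes/kube-controller-manager/conf/kube-controller-manager.kubeconfig
```

Running it with `DRY_RUN=0` performs the copy; the same function fits the kube-scheduler and admin kubeconfig files with different arguments.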

# Generated file

cat /tmp/kube-controller-manager.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURtakNDQW9LZ0F3SUJBZ0lVTXVqUGs1aGJEV1o0aTNUQkdaSWNaeGRiaTN3d0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pURUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByCmRXSmxjbTVsZEdWek1CNFhEVEl6TURneE56QXhOVFl3TUZvWERUSTRNRGd4TlRBeE5UWXdNRm93WlRFTE1Ba0cKQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbGFXcHBibWN4RERBSwpCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByZFdKbGNtNWxkR1Z6Ck1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBMmNSL2dtQkk2K0lQZ3MzQzA2R3UKOFczTUdtTGx6aXp1MjAzb1p3cmxQdVdqclhsSVJCMUhBYXJtSDRMU2EvWXlzMlV2cWhaWGxnaVd1cUppdWZlRgo2enpENUw2L2pCaVl2RkpSbUgzSElOYSt5azYrVjNOdENVYmRZYkpKeXEvSGNITno3N0lVbk9sSDhZT0ZEaFd2CmN4R28yUElqeFd3d3FJb1psTnJML2JmTTcyRTNtcEZYNEVlRzdRelowcC94SmpSREZQSE0wKzRLVGkzRUxlOVcKVWxBYjQrc245Y0w3MjZMeVJXWGdrMVNJa0FjcHpEeGdOZUNGTmJlamNmZkVueUsrRG96T1F4bFpjZDZhVzZIKwpVdU5HRVczTHBYZTFHQmlYcUdDSkE5YXl6NkxlOWJFbmIxc0tvcjB4Z09sczc0aUJkRUo5czJibEx4WFNrOUZsCjdRSURBUUFCbzBJd1FEQU9CZ05WSFE4QkFmOEVCQU1DQVFZd0R3WURWUjBUQVFIL0JBVXdBd0VCL3pBZEJnTlYKSFE0RUZnUVVPZ3h2dXc3WmNSL1hXNzRET0k0NGJ2cjc1eTR3RFFZSktvWklodmNOQVFFTEJRQURnZ0VCQUxxSApYTEN6S3hiR2JFSW15c1VVZzVTVEFuWURwZjlWeDRaTjFJT1BHWkduYVMrVE5iMGcwU1FmdndiR2pGN1A4aFUwCjJoU1kvUG9oOVljRkRXS2tzVmFNWStHZjdzTElaT0xIZ0JyMFkyeGpLZEZmamtRbFZBKzFTTXV0dHFzYzRqNnkKS2pzWDN5M0dCT2pHWGg2bUVVOWZLRXMzSmluV1ZPMUhHNzV6T292WXVFc21pTkxPNS9Cc1lNQ0tRWU5nL212bQppMEY0b21MVjNFT1BJSDdOUjNaTXpuVnFWQVV5TDlVanlsdWJuQlhwY3o2S2E1SnMwaXpzZEI4NUpISW5xcmdpCk02NGRVNHB1L0lQS3Z5a0xrdUtxM3J6aXdjL2VsZTduK1B6bG5NRHRLUGdhaitkTE5PbVpiYytzRHlHQ1d6TjIKQTFGWEg5d2dYVW5XNnFQclZ0Yz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=
    server: https://kbsapisvr.hf.com.cn:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-controller-manager
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-controller-manager
  user:
    client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUNwekNDQVkrZ0F3SUJBZ0lVZDdQdzVsK2lnNEhOdjYrODU2OGd4RkpvSDhJd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1pURUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEREQUtCZ05WQkFvVEEyczRjekVQTUEwR0ExVUVDeE1HVTNsemRHVnRNUk13RVFZRFZRUURFd3ByCmRXSmxjbTVsZEdWek1CNFhEVEl6TURneE56QXpNalF3TUZvWERUTXpNRGd4TkRBek1qUXdNRm93QURCWk1CTUcKQnlxR1NNNDlBZ0VHQ0NxR1NNNDlBd0VIQTBJQUJIdEVsYXR3eGxnVW91eGtXbDJ2b2piU0lXV0F1ZkJpR2hQaApOMnpzNDBpMWxpYUZHamRDZ2RzVEt4cFpXeGkzdmcwcjFOeDdTUm5pR2kydW4rUmVkZnVqZnpCOU1BNEdBMVVkCkR3RUIvd1FFQXdJRm9EQWRCZ05WSFNVRUZqQVVCZ2dyQmdFRkJRY0RBUVlJS3dZQkJRVUhBd0l3REFZRFZSMFQKQVFIL0JBSXdBREFkQmdOVkhRNEVGZ1FVRzh1QUpDb0pTZVA1UjVucDlQVGlLY3VFK293d0h3WURWUjBqQkJndwpGb0FVT2d4dnV3N1pjUi9YVzc0RE9JNDRidnI3NXk0d0RRWUpLb1pJaHZjTkFRRUxCUUFEZ2dFQkFNS1doWUhGCkp3d2NSODM2YitVek9xZUhHN09ndDIySWF2M2xoZThsWU50NlR2Ri9CMmoxeVhmd1dmYkx5cG5ROVF2Wm9DSEoKYmQxb3RNVEVjOUZabHp5Z3I4VC9jVTVDTGw4TlNxblM0U3cyQytQZ0xrbGdPUktFUEpaQ3ZVYUFoZXZwd3RyMAo2bHpGbCtwaDMvQ0dBYkd2UU1zRHNsRmRscllLcEtIOXZCUGZlYkoxYVB3QWJRckJXVWpMM1F5cnZTUjloVjZSCmJDY09HQW4xT21PSlRuVW1aSjJNeFE5d3JBWXU2QVloYVZsTXZaeUFOazRoeVQ1N0dra1pOdXVNQTZTL2Z4alEKL1Y0Z3RhMk5HdHVPQ1EweDZOWlJ4L3lIV0lFRnIrWldtc3QrYnFZcEw5Z3owb0UxRTBxVWF2MEZnU2tQbE9iQgpkS2piaXl3YVkzN0llM0E9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K
    client-key-data: LS0tLS1CRUdJTiBFQyBQUklWQVRFIEtFWS0tLS0tCk1IY0NBUUVFSU5MQ05tY01qZlp4bDNHRXN6eENwRTNrT0M2QUJJNjJaWWhvaWx0UHhjZTlvQW9HQ0NxR1NNNDkKQXdFSG9VUURRZ0FFZTBTVnEzREdXQlNpN0dSYVhhK2lOdEloWllDNThHSWFFK0UzYk96alNMV1dKb1VhTjBLQgoyeE1yR2xsYkdMZStEU3ZVM0h0SkdlSWFMYTZmNUY1MSt3PT0KLS0tLS1FTkQgRUMgUFJJVkFURSBLRVktLS0tLQo=

vim /data/kubernetes/kube-controller-manager/conf/kube-controller-manager.conf

KUBE_CONTROLLER_MANAGER_OPTS="--v=2 \

--leader-elect=true \

--bind-address=127.0.0.1 \

--kubeconfig=/data/kubernetes/kube-controller-manager/conf/kube-controller-manager.kubeconfig \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--service-cluster-ip-range=10.0.0.0/24 \

--cluster-signing-cert-file=/data/kubernetes/sslpem/kube-apiserver-ca.pem \

--cluster-signing-key-file=/data/kubernetes/sslpem/kube-apiserver-ca-key.pem \

--root-ca-file=/data/kubernetes/sslpem/kube-apiserver-ca.pem \

--service-account-private-key-file=/data/kubernetes/sslpem/kube-apiserver-ca-key.pem \

--cluster-signing-duration=87600h0m0s \

--kube-api-burst=30 \

--kube-api-content-type=application/vnd.kubernetes.protobuf \

--kube-api-qps=20 \

--min-resync-period=12h0m0s \

--node-monitor-period=5s \

--route-reconciliation-period=10s \

--concurrent-service-syncs=1 \

--secure-port=10257 \

--authentication-token-webhook-cache-ttl=10s \

--authorization-webhook-cache-authorized-ttl=10s \

--authorization-webhook-cache-unauthorized-ttl=10s \

--attach-detach-reconcile-sync-period=1m0s \

--concurrent-deployment-syncs=5 \

--concurrent-endpoint-syncs=5 \

--concurrent-gc-syncs=20 \

--enable-garbage-collector=true \

--horizontal-pod-autoscaler-cpu-initialization-period=5m0s \

--horizontal-pod-autoscaler-downscale-stabilization=5m0s \

--horizontal-pod-autoscaler-initial-readiness-delay=30s \

--horizontal-pod-autoscaler-sync-period=15s \

--horizontal-pod-autoscaler-tolerance=0.1 \

--concurrent-namespace-syncs=10 \

--namespace-sync-period=5m0s \

--large-cluster-size-threshold=50 \

--node-eviction-rate=0.1 \

--node-monitor-grace-period=40s \

--node-startup-grace-period=1m0s \

--secondary-node-eviction-rate=0.01 \

--unhealthy-zone-threshold=0.55 \

--terminated-pod-gc-threshold=12500 \

--concurrent-replicaset-syncs=5 \

--concurrent-rc-syncs=5 \

--concurrent-resource-quota-syncs=5 \

--resource-quota-sync-period=5m0s \

--concurrent-serviceaccount-token-syncs=5 \

--concurrent-ttl-after-finished-syncs=5"

vim /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/data/kubernetes/kube-controller-manager/conf/kube-controller-manager.conf

ExecStart=/data/kubernetes/kube-controller-manager/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

systemctl daemon-reload

systemctl start kube-controller-manager.service

systemctl status kube-controller-manager.service

# Deploy kube-scheduler

mkdir -pv /data/kubernetes/kube-scheduler/{bin,conf,logs}

for ip in {172.16.0.40,172.16.0.235,172.16.0.157};do scp ./kube-scheduler $ip:/data/kubernetes/kube-scheduler/bin/;done

# Generate the kube-scheduler kubeconfig file

# Create the certificate signing request file

cat > kube-scheduler-csr.json << EOF
{
  "CN": "system:kube-scheduler",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

# Generate the certificate

cfssl gencert -ca=./pem/kube-apiserver-ca.pem -ca-key=./pem/kube-apiserver-ca-key.pem -config=kube-apiserver-ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare ./pem/kube-scheduler

ls -l pem

-rw-r--r-- 1 root root 1001 Aug 17 10:00 kube-apiserver-ca.csr

-rw------- 1 root root 1679 Aug 17 10:00 kube-apiserver-ca-key.pem

-rw-r--r-- 1 root root 1310 Aug 17 10:00 kube-apiserver-ca.pem

-rw-r--r-- 1 root root 1305 Aug 17 10:07 kube-apiserver-server.csr

-rw------- 1 root root 1679 Aug 17 10:07 kube-apiserver-server-key.pem

-rw-r--r-- 1 root root 1671 Aug 17 10:07 kube-apiserver-server.pem

-rw-r--r-- 1 root root 1029 Aug 17 13:35 kube-controller-manager.csr

-rw------- 1 root root 1679 Aug 17 13:35 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1424 Aug 17 13:35 kube-controller-manager.pem

-rw-r--r-- 1 root root 1029 Aug 17 13:36 kube-scheduler.csr

-rw------- 1 root root 1675 Aug 17 13:36 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1424 Aug 17 13:36 kube-scheduler.pem

# Generate the kubeconfig file

KUBE_CONFIG="/tmp/kube-scheduler.kubeconfig"

KUBE_APISERVER="https://kbsapisvr.hf.com.cn:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=./pem/kube-apiserver-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials kube-scheduler \
  --client-certificate=./pem/kube-scheduler.pem \
  --client-key=./pem/kube-scheduler-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=kube-scheduler \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

cat /tmp/kube-scheduler.kubeconfig

for ip in {172.16.0.40,172.16.0.235,172.16.0.157};do scp /tmp/kube-scheduler.kubeconfig $ip:/data/kubernetes/kube-scheduler/kube-scheduler.kubeconfig;done

# Configure the service file

vim /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/data/kubernetes/kube-scheduler/conf/kube-scheduler.conf

ExecStart=/data/kubernetes/kube-scheduler/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

[Install]

WantedBy=multi-user.target

# Configuration file

vim /data/kubernetes/kube-scheduler/conf/kube-scheduler.conf

KUBE_SCHEDULER_OPTS="--v=2 \

--leader-elect=true \

--leader-elect-lease-duration=15s \

--leader-elect-renew-deadline=10s \

--leader-elect-resource-lock=endpointsleases \

--leader-elect-retry-period=2s \

--kubeconfig=/data/kubernetes/kube-scheduler/kube-scheduler.kubeconfig \

--bind-address=127.0.0.1 \

--authentication-token-webhook-cache-ttl=10s \

--authorization-webhook-cache-authorized-ttl=10s \

--authorization-webhook-cache-unauthorized-ttl=10s"

# Deploy kubectl

# Create the certificate signing request file for kubectl

cat > admin-csr.json << EOF
{
  "CN": "admin",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "system:masters",
      "OU": "System"
    }
  ]
}
EOF

# Generate the certificate

cfssl gencert -ca=./pem/kube-apiserver-ca.pem -ca-key=./pem/kube-apiserver-ca-key.pem -config=kube-apiserver-ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare ./pem/admin

ls -l pem

-rw-r--r-- 1 root root 1009 Aug 17 14:01 admin.csr

-rw------- 1 root root 1675 Aug 17 14:01 admin-key.pem

-rw-r--r-- 1 root root 1399 Aug 17 14:01 admin.pem

-rw-r--r-- 1 root root 1001 Aug 17 10:00 kube-apiserver-ca.csr

-rw------- 1 root root 1679 Aug 17 10:00 kube-apiserver-ca-key.pem

-rw-r--r-- 1 root root 1310 Aug 17 10:00 kube-apiserver-ca.pem

-rw-r--r-- 1 root root 1305 Aug 17 10:07 kube-apiserver-server.csr

-rw------- 1 root root 1679 Aug 17 10:07 kube-apiserver-server-key.pem

-rw-r--r-- 1 root root 1671 Aug 17 10:07 kube-apiserver-server.pem

-rw-r--r-- 1 root root 1029 Aug 17 13:35 kube-controller-manager.csr

-rw------- 1 root root 1679 Aug 17 13:35 kube-controller-manager-key.pem

-rw-r--r-- 1 root root 1424 Aug 17 13:35 kube-controller-manager.pem

-rw-r--r-- 1 root root 1029 Aug 17 13:36 kube-scheduler.csr

-rw------- 1 root root 1675 Aug 17 13:36 kube-scheduler-key.pem

-rw-r--r-- 1 root root 1424 Aug 17 13:36 kube-scheduler.pem

# Generate the kubeconfig file for kubectl

KUBE_CONFIG="/tmp/config"

KUBE_APISERVER="https://kbsapisvr.hf.com.cn:6443"

kubectl config set-cluster kubernetes \
  --certificate-authority=./pem/kube-apiserver-ca.pem \
  --embed-certs=true \
  --server=${KUBE_APISERVER} \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-credentials cluster-admin \
  --client-certificate=./pem/admin.pem \
  --client-key=./pem/admin-key.pem \
  --embed-certs=true \
  --kubeconfig=${KUBE_CONFIG}

kubectl config set-context default \
  --cluster=kubernetes \
  --user=cluster-admin \
  --kubeconfig=${KUBE_CONFIG}

kubectl config use-context default --kubeconfig=${KUBE_CONFIG}

cat /tmp/config
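
The same four `kubectl config` calls are repeated for kube-controller-manager, kube-scheduler, and the admin user, differing only in the user name, the certificate pair, and the output path. One way to avoid the repetition is a small wrapper; the following is a sketch, not part of the original procedure, and `make_kubeconfig` and `CA_FILE` are hypothetical names (the CA path and server URL are the ones used in these notes).

```shell
#!/bin/bash
# Sketch: wrap the four `kubectl config` calls used above in one function.
# make_kubeconfig and CA_FILE are illustrative names; values come from the notes.
CA_FILE=${CA_FILE:-./pem/kube-apiserver-ca.pem}
KUBE_APISERVER=${KUBE_APISERVER:-https://kbsapisvr.hf.com.cn:6443}

# $1 = user name, $2 = client certificate, $3 = client key, $4 = output kubeconfig
make_kubeconfig() {
  local user=$1 cert=$2 key=$3 out=$4
  # Each step runs only if the previous one succeeded.
  kubectl config set-cluster kubernetes \
    --certificate-authority="$CA_FILE" \
    --embed-certs=true \
    --server="$KUBE_APISERVER" \
    --kubeconfig="$out" &&
  kubectl config set-credentials "$user" \
    --client-certificate="$cert" \
    --client-key="$key" \
    --embed-certs=true \
    --kubeconfig="$out" &&
  kubectl config set-context default \
    --cluster=kubernetes \
    --user="$user" \
    --kubeconfig="$out" &&
  kubectl config use-context default --kubeconfig="$out"
}

# Example (requires kubectl and the certificates generated above):
#   make_kubeconfig cluster-admin ./pem/admin.pem ./pem/admin-key.pem /tmp/config
```

Chaining the calls with `&&` means a failure in an early step (for example a missing certificate file) stops the function instead of leaving a half-written kubeconfig that looks complete.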

Run the following on a master:

mkdir /root/.kube

for ip in {172.16.0.40,172.16.0.235,172.16.0.157};do scp /tmp/config $ip:/root/.kube/;done

kubectl get cs

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy

etcd-2 Healthy

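etcd-1 Healthy

# Authorize the kubelet-bootstrap user to request certificates

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap

This command creates a ClusterRoleBinding object that binds the kubelet-bootstrap user to the system:node-bootstrapper ClusterRole.

In Kubernetes, a ClusterRoleBinding associates subjects such as users, service accounts, or groups with a ClusterRole, which grants them permission to access cluster resources and perform specific operations.

In this command, create clusterrolebinding creates the binding object, and kubelet-bootstrap is the name given to the ClusterRoleBinding; any suitable name can be used.

--clusterrole=system:node-bootstrapper specifies the ClusterRole to bind. system:node-bootstrapper carries the permissions the kubelet needs while it bootstraps.

--user=kubelet-bootstrap specifies the user the role is bound to, here the kubelet-bootstrap user.

Running the command gives the kubelet-bootstrap user the system:node-bootstrapper permissions, so that a starting node can request its certificate and access the resources it needs.

This is typically used to automate node bootstrapping and registration, so that nodes can join the Kubernetes cluster and run normally.

Deploy kubelet and kube-proxy

Deploy them on the worker nodes and on the master nodes as well.

mkdir -pv /data/kubernetes/kubelet/{bin,conf,logs}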

mkdir -pv /data/kubernetes/kube-proxy/{bin,conf,logs}

for ip in {172.16.0.40,172.16.0.235,172.16.0.157,172.16.0.124};do scp ./kubelet $ip:/data/kubernetes/kubelet/bin/;done

for ip in {172.16.0.40,172.16.0.235,172.16.0.157,172.16.0.124};do scp ./kube-proxy $ip:/data/kubernetes/kube-proxy/bin/;done

vim /data/kubernetes/kubelet/conf/kubelet.conf

KUBELET_OPTS="--v=2 \

--hostname-override=k8s-master1 \

--cgroup-driver=systemd \

--kubeconfig=/data/kubernetes/kubelet/conf/kubelet.kubeconfig \

--bootstrap-kubeconfig=/data/kubernetes/kubelet/conf/bootstrap.kubeconfig \

--config=/data/kubernetes/kubelet/conf/kubelet-config.yml \

--cert-dir=/data/kubernetes/sslpem \

--container-runtime-endpoint=unix:///run/containerd/containerd.sock \

--node-labels=node.kubernetes.io/node=''"

vim /data/kubernetes/kubelet/conf/bootstrap.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /data/kubernetes/sslpem/kube-apiserver-ca.pem
    server: https://kbsapisvr.hf.com.cn:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kubelet-bootstrap
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kubelet-bootstrap
  user:
    token: 2d1fb42eaba4ebfea22f024f868c82e3

vim /data/kubernetes/kubelet/conf/kubelet-config.yml

kind: KubeletConfiguration

apiVersion: kubelet.config.k8s.io/v1beta1

address: 0.0.0.0

port: 10250

readOnlyPort: 10255

healthzBindAddress: 0.0.0.0

healthzPort: 10248

cgroupDriver: systemd

cgroupsPerQOS: true

clusterDNS:

- 10.0.0.2

clusterDomain: cluster.local

configMapAndSecretChangeDetectionStrategy: Watch

containerLogMaxFiles: 5

containerLogMaxSize: 10Mi

contentType: application/vnd.kubernetes.protobuf

cpuCFSQuota: true

cpuCFSQuotaPeriod: 100ms

cpuManagerPolicy: none

cpuManagerReconcilePeriod: 10s

enableControllerAttachDetach: true

enableDebuggingHandlers: true

authentication:
  anonymous:
    enabled: false
  webhook:
    cacheTTL: 2m0s
    enabled: true
  x509:
    clientCAFile: /data/kubernetes/sslpem/kube-apiserver-ca.pem
authorization:
  mode: Webhook
  webhook:
    cacheAuthorizedTTL: 5m0s
    cacheUnauthorizedTTL: 30s
evictionHard:
  imagefs.available: 15%
  memory.available: 1024Mi
  nodefs.available: 10%
  nodefs.inodesFree: 5%
evictionMinimumReclaim:
  memory.available: 0Mi
  nodefs.available: 500Mi
  imagefs.available: 2Gi
enforceNodeAllocatable:
- pods
kubeReserved: # resources reserved for Kubernetes components
  cpu: 1000m
  memory: 1Gi
systemReserved: # resources reserved for the system
  cpu: 1000m
  memory: 1Gi

evictionPressureTransitionPeriod: 5m0s

failSwapOn: false

fileCheckFrequency: 20s

hairpinMode: promiscuous-bridge

httpCheckFrequency: 20s

imageGcHighThreshold: 85

imageGcLowThreshold: 80

iptablesMasqueradeBit: 14

kubeAPIBurst: 10

kubeAPIQPS: 5

makeIPTablesUtilChains: true

maxOpenFiles: 1000000

maxPods: 50

nodeStatusUpdateFrequency: 10s

oomScoreAdj: -999

registryBurst: 10

registryQps: 5

resolvConf: /etc/resolv.conf

runtimeRequestTimeout: 2m0s

serializeImagePulls: true

streamingConnectionIdleTimeout: 4h0m0s

syncFrequency: 1m0s

volumeStatsAggPeriod: 1m0s

vim /usr/lib/systemd/system/kubelet.service

[Unit]
Description=Kubernetes Kubelet
After=containerd.service

[Service]
EnvironmentFile=/data/kubernetes/kubelet/conf/kubelet.conf
ExecStart=/data/kubernetes/kubelet/bin/kubelet $KUBELET_OPTS
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

# Start the service

systemctl start kubelet

systemctl status kubelet

# List the certificate signing requests

kubectl get csr

NAME AGE SIGNERNAME REQUESTOR REQUESTEDDURATION CONDITION

node-csr-Ec_JnMU6QbbF90CcVC0LgFoKcjcL88i5NtS4VSN8bcQ 37s kubernetes.io/kube-apiserver-client-kubelet kubelet-bootstrap <none> Pending

#批准申请

kubectl certificate approve node-csr-Ec_JnMU6QbbF90CcVC0LgFoKcjcL88i5NtS4VSN8bcQ

certificatesigningrequest.certificates.k8s.io/node-csr-Ec_JnMU6QbbF90CcVC0LgFoKcjcL88i5NtS4VSN8bcQ approved
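
With several nodes joining, copying each node-csr name by hand gets tedious. The following sketch picks the Pending request names out of `kubectl get csr` output so they can be approved in one pass; `pending_csrs` is a hypothetical helper that only parses text from stdin, and it assumes the column layout shown above, where CONDITION is the last field.

```shell
#!/bin/bash
# Sketch: list the names of Pending CSRs from `kubectl get csr` output.
# pending_csrs is an illustrative helper; it only parses text read from stdin.
pending_csrs() {
  # Skip the header row; the CONDITION column is the last field on each line.
  awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Typical use on a master (requires a working kubectl and GNU xargs):
#   kubectl get csr | pending_csrs | xargs -r kubectl certificate approve
```

Approving everything that is Pending is only reasonable while you are the one bringing the nodes up; on a shared cluster each request should still be checked before approval.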

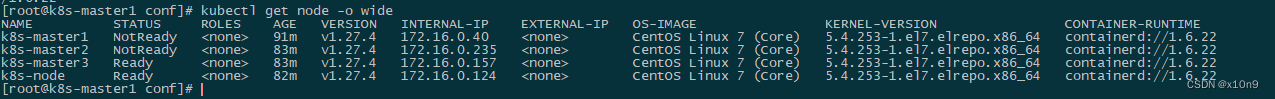

[root@k8s-master1 conf]# kubectl get node

NAME STATUS ROLES AGE VERSION

k8s-master1 NotReady <none> 4s v1.27.4

# /data/kubernetes/kubelet/conf/kubelet.kubeconfig is then generated automatically

# Configure kube-proxy

# Generate the kube-proxy certificate files

# Write the kube-proxy certificate signing request file

vim kube-proxy-ca-csr.json

{
  "CN": "system:kube-proxy",
  "hosts": [],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "BeiJing",
      "ST": "BeiJing",
      "O": "k8s",
      "OU": "System"
    }
  ]
}

# Generate the kube-proxy certificate

cfssl gencert -ca=./pem/kube-apiserver-ca.pem -ca-key=./pem/kube-apiserver-ca-key.pem -config=kube-apiserver-ca-config.json -profile=kubernetes kube-proxy-ca-csr.json | cfssljson -bare ./pem/kube-proxy

for ip in {172.16.0.40,172.16.0.235,172.16.0.157,172.16.0.124};do scp ./pem/kube-proxy*.pem $ip:/data/kubernetes/sslpem/;done

vim /data/kubernetes/kube-proxy/conf/kube-proxy.conf

KUBE_PROXY_OPTS="--v=2 --config=/data/kubernetes/kube-proxy/conf/kube-proxy-config.yml"vim /data/kubernetes/kube-proxy/conf/kube-proxy-config.yml

kind: KubeProxyConfiguration

apiVersion: kubeproxy.config.k8s.io/v1alpha1

bindAddress: 0.0.0.0

metricsBindAddress: 0.0.0.0:10249

healthzBindAddress: 0.0.0.0

healthzPort: 10256

clientConnection:
  kubeconfig: /data/kubernetes/kube-proxy/conf/kube-proxy.kubeconfig

# Change this to the hostname of the node being configured
hostnameOverride: k8s-master1

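# Note: clusterCIDR is meant to be the pod network CIDR; the Calico pool configured later in this record is 10.244.0.0/16
clusterCIDR: 10.0.0.0/24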

mode: ipvs

ipvs:
  scheduler: "rr"

iptables:
  masqueradeAll: true

cleanupIpvs: true

configSyncPeriod: 15m0s

conntrackMaxPerCore: 32768

conntrackMin: 131072

conntrackTcpTimeoutCloseWait: 1h0m0s

conntrackTcpTimeoutEstablished: 24h0m0s

iptablesMasqueradeBit: 14

iptablesSyncPeriod: 30s

ipvsSyncPeriod: 30s

kubeApiContentType: application/vnd.kubernetes.protobuf

oomScoreAdj: -999

kubeApiBurst: 10

kubeApiQps: 5

vim /data/kubernetes/kube-proxy/conf/kube-proxy.kubeconfig

apiVersion: v1
clusters:
- cluster:
    certificate-authority: /data/kubernetes/sslpem/kube-apiserver-ca.pem
    server: https://kbsapisvr.hf.com.cn:6443
  name: kubernetes
contexts:
- context:
    cluster: kubernetes
    user: kube-proxy
  name: default
current-context: default
kind: Config
preferences: {}
users:
- name: kube-proxy
  user:
    client-certificate: /data/kubernetes/sslpem/kube-proxy.pem
    client-key: /data/kubernetes/sslpem/kube-proxy-key.pem

vim /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=/data/kubernetes/kube-proxy/conf/kube-proxy.conf

ExecStart=/data/kubernetes/kube-proxy/bin/kube-proxy $KUBE_PROXY_OPTS

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

systemctl start kube-proxy
systemctl status kube-proxy

# Authorize the apiserver to access the kubelet (needed, for example, by kubectl logs)

cat > apiserver-to-kubelet-rbac.yaml << EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:kube-apiserver-to-kubelet
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/proxy
      - nodes/stats
      - nodes/log
      - nodes/spec
      - nodes/metrics
      - pods/log
    verbs:
      - "*"
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:kube-apiserver
  namespace: ""
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:kube-apiserver-to-kubelet
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: kubernetes
EOF

kubectl apply -f apiserver-to-kubelet-rbac.yaml

# Deploy the Calico network plugin

wget --no-check-certificate https://docs.tigera.io/archive/v3.25/manifests/calico.yaml

# Edit CALICO_IPV4POOL_CIDR in calico.yaml

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16"

kubectl apply -f calico.yaml

# Deploy CoreDNS

apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
  - apiGroups:
      - ""
    resources:
      - endpoints
      - services
      - pods
      - namespaces
    verbs:
      - list
      - watch
  - apiGroups:
      - discovery.k8s.io
    resources:
      - endpointslices
    verbs:
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
  - kind: ServiceAccount
    name: coredns
    namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            fallthrough in-addr.arpa ip6.arpa
            ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
    app.kubernetes.io/name: coredns
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
      app.kubernetes.io/name: coredns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
        app.kubernetes.io/name: coredns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            - labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
        - name: coredns
          image: coredns/coredns:1.9.4
          imagePullPolicy: IfNotPresent
          resources:
            limits:
              memory: 170Mi
            requests:
              cpu: 100m
              memory: 70Mi
          args: [ "-conf", "/etc/coredns/Corefile" ]
          volumeMounts:
            - name: config-volume
              mountPath: /etc/coredns
              readOnly: true
          ports:
            - containerPort: 53
              name: dns
              protocol: UDP
            - containerPort: 53
              name: dns-tcp
              protocol: TCP
            - containerPort: 9153
              name: metrics
              protocol: TCP
          securityContext:
            allowPrivilegeEscalation: false
            capabilities:
              add:
                - NET_BIND_SERVICE
              drop:
                - all
            readOnlyRootFilesystem: true
          livenessProbe:
            httpGet:
              path: /health
              port: 8080
              scheme: HTTP
            initialDelaySeconds: 60
            timeoutSeconds: 5
            successThreshold: 1
            failureThreshold: 5
          readinessProbe:
            httpGet:
              path: /ready
              port: 8181
              scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
              - key: Corefile
                path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
    app.kubernetes.io/name: coredns
spec:
  selector:
    k8s-app: kube-dns
    app.kubernetes.io/name: coredns
  clusterIP: 10.0.0.2
  ports:
    - name: dns
      port: 53
      protocol: UDP
    - name: dns-tcp
      port: 53
      protocol: TCP
    - name: metrics
      port: 9153
      protocol: TCP
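The record does not show how the CoreDNS manifest was applied or checked. The sketch below is one possible way to do it, assuming the manifest was saved as `coredns.yaml` (an assumed file name). The helper names `deploy_coredns` and `check_cluster_dns`, the `KUBECTL` override variable, the pod name `dns-test` and the `busybox:1.28` test image are likewise assumptions for illustration, not part of the original deployment.

```shell
#!/bin/bash
# KUBECTL is an assumed override hook; it defaults to kubectl on PATH.
KUBECTL="${KUBECTL:-kubectl}"

# Apply the CoreDNS manifest, then wait for the Deployment to finish rolling out.
# $1: path to the manifest (defaults to the assumed file name coredns.yaml).
deploy_coredns() {
    local manifest="${1:-coredns.yaml}"
    "$KUBECTL" apply -f "$manifest" &&
        "$KUBECTL" rollout status deployment/coredns -n kube-system --timeout=120s
}

# Resolve the built-in kubernetes Service from a throwaway pod.
# The pod is removed again once nslookup exits.
check_cluster_dns() {
    "$KUBECTL" run dns-test --rm -i --restart=Never --image=busybox:1.28 \
        -- nslookup kubernetes.default
}
```

Running `deploy_coredns coredns.yaml` followed by `check_cluster_dns` should show the lookup being answered by 10.0.0.2, the clusterIP set in the kube-dns Service, provided the kubelet's cluster DNS setting points at that address.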

Because of insufficient CPU, the calico pods did not come up on two of the nodes.
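To see which calico-node pods are stuck and why, something along these lines may help. It is a sketch under assumptions: the label `k8s-app=calico-node` is the one used by the upstream Calico manifest, and the function names and the `KUBECTL` override variable are hypothetical helpers introduced here.

```shell
#!/bin/bash
# KUBECTL is an assumed override hook; it defaults to kubectl on PATH.
KUBECTL="${KUBECTL:-kubectl}"

# Print one "<pod-name> <phase>" row per calico-node pod.
list_calico_pods() {
    "$KUBECTL" get pods -n kube-system -l k8s-app=calico-node --no-headers \
        -o custom-columns=NAME:.metadata.name,PHASE:.status.phase
}

# Keep only the pod names whose phase is not Running; reads rows on stdin.
filter_not_running() {
    awk '$2 != "Running" { print $1 }'
}

# Describe every calico-node pod that is not Running; the Events section
# at the end of each description shows scheduling failures such as
# insufficient CPU.
describe_stuck_calico_pods() {
    list_calico_pods | filter_not_running | while read -r pod; do
        "$KUBECTL" describe pod -n kube-system "$pod"
    done
}
```

If the events confirm a CPU shortage, the options are to give the affected nodes more CPU or to lower the CPU request of the calico-node container in calico.yaml.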

相关文章:

k8s v1.27.4二进制部署记录

记录二进制部署过程 #!/bin/bash#升级内核 update_kernel() {rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.orgyum -y install https://www.elrepo.org/elrepo-release-7.el7.elrepo.noarch.rpmyum --disablerepo"*" --enablerepo"elrepo-kernel&q…...

C# API 文档注释规范

C# API 文档注释规范 1. 命名空间注释(namespace)2. summary3. remarks and para4. param5. returns6. example and code7. exception8. typeparam 最近在开发工作中需要实现 API 帮助文档,如果根据所写的代码直接重写 API 帮助文档将会是意见非常大的工作量&#x…...

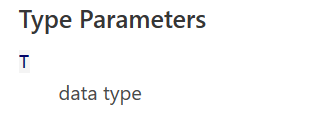

分类预测 | Matlab实现基于TSOA-CNN-GRU-Attention的数据分类预测

分类预测 | Matlab实现基于TSOA-CNN-GRU-Attention的数据分类预测 目录 分类预测 | Matlab实现基于TSOA-CNN-GRU-Attention的数据分类预测效果一览基本介绍研究内容程序设计参考资料 效果一览 基本介绍 Matlab实现分类预测 | Matlab实现基于TSOA-CNN-GRU-Attention的数据分类预…...

《深度剖析K8s》学习笔记

一、容器技术 1.从进程说起 a. 概述 进程:数据和状态的综合 容器技术的核心:约束和修改进程的动态表现,创造出边界(Cgroup:约束/namespace:进程视图) 启动容器例子: docker ru…...

神经网络基础-神经网络补充概念-49-adam优化算法

概念 Adam(Adaptive Moment Estimation)是一种优化算法,结合了动量梯度下降法和RMSProp的优点,用于在训练神经网络等深度学习模型时自适应地调整学习率。Adam算法在深度学习中广泛应用,通常能够加速收敛并提高模型性能…...

Java:正则表达式书写规则及相关案例:检验QQ号码,校验手机号码,邮箱格式,当前时间

正则表达式 目标:体验一下使用正则表达式来校验数据格式的合法性。需求:校验QQ号码是否正确,要求全部是数字,长度是(6-20)之间,不能以0开头 首先用自己编写的程序判断QQ号码是否正确 public static void main(String[] args) {Sy…...

图数据库_Neo4j_Centos7.9安装Neo4j社区版3.5.4_基于jdk1.8---Neo4j图数据库工作笔记0011

首先上传安装包,到opt/soft目录 然后看一下jdk安装的是什么版本的,因为在neo4j 4以后就必须要用jdk11 以上的版本,我这里还用着jdk1.8 所以 我这里用3.5.4的版本 关于下载地址: https://dist.neo4j.org/neo4j-community-3.5.4-unix.tar.gz 然后再去解压到/opt/module目录下 …...

使用Rust编写的一款使用遗传算法、神经网络、WASM技术的模拟生物进化的程序

模拟生物进化程序 Github地址:FishLife 期待各位的star✨✨✨ 本项目是一个模拟生物进化的程序,利用遗传算法、神经网络技术对鱼的眼睛和大脑进行模拟。该项目是使用 Rust 语言编写的,并编译为 WebAssembly (Wasm) 格式,使其可以…...

UE4/UE5 “无法双击打开.uproject 点击无反应“解决

一、方法一:运行UnrealVersionSelector.exe 1.找到Epic Game Lancher的安装目录, 在lancher->Engine->Binaries->Win64->UnrealVersionSelector.exe 2.把UnrealVersionSelector.exe 分别拷贝到UE4 不同版本引擎的 Engine->Binaries->…...

【前端】深入理解CSS定位

目录 一、前言二、定位组成1、定位模式1.1、静态定位static①、语法定义②、特点 1.2、相对定位relative①、语法定义②、特点③、代码示例 1.3、绝对定位absolute①、语法定义②、特点③、代码示例1)、没有祖先元素或者祖先元素没有定位2)、祖先元素有定…...

【问题】分布式事务的场景下如何保证读写分离的数据一致性

我的理解这个题目可以获得以下关键字:分布式处理、读写分离、数据一致性 那么就从”读写分离“做切入口吧,按我的理解其实就是在保证数据一致性的前提下两个(或以上)的数据库分别肩负不同的数据处理任务。太过久远的就不说了&…...

常见的Web安全漏洞有哪些,Web安全漏洞常用测试方法介绍

Web安全漏洞是指在Web应用程序中存在的可能被攻击者利用的漏洞,正确认识和了解这些漏洞对于Web应用程序的开发和测试至关重要。 一、常见的Web安全漏洞类型: 1、跨站脚本攻击(Cross-Site Scripting,XSS):攻击者通过向Web页面注入…...

随机微分方程

应用随机过程|第7章 随机微分方程 见知乎:https://zhuanlan.zhihu.com/p/348366892?utm_sourceqq&utm_mediumsocial&utm_oi1315073218793488384...

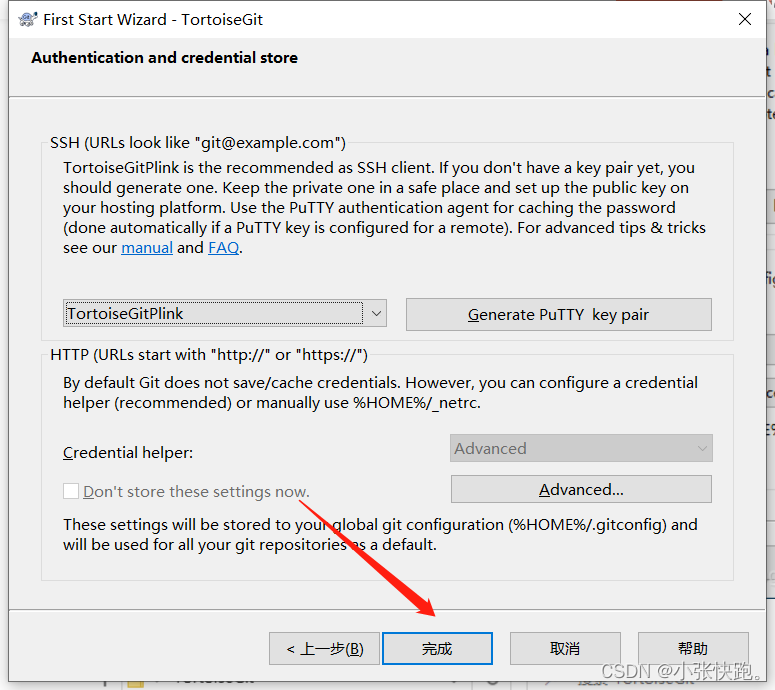

下载安装并使用小乌龟TortoiseGit

1、下载TortoiseGit安装包 官网:Download – TortoiseGit – Windows Shell Interface to Githttps://tortoisegit.org/download/ 2、小乌龟汉化包 在官网的下面就有官方提供的下载包 3、安装...

报错问题解决)

npm ERR!Cannot read properties of null(reading ‘pickAlgorithm’)报错问题解决

当在使用npm包管理器或执行npm命令时,有时候会遇到“npm ERR!Cannot read properties of null(reading ‘pickAlgorithm’)”这个错误提示,这是一个常见的npm错误。 这个错误提示通常说明在使用npm包管理器时,执行了某个npm命令,…...

web前端tips:js继承——组合继承

上篇文章给大家分享了 js继承中的借用构造函数继承 web前端tips:js继承——借用构造函数继承 在借用构造函数继承中,我提到了它的缺点 无法继承父类原型链上的方法和属性,只能继承父类构造函数中的属性和方法 父类的方法无法复用࿰…...

(7)(7.3) 自动任务中的相机控制

文章目录 前言 7.3.1 概述 7.3.2 自动任务类型 7.3.3 创建合成图像 前言 本文介绍 ArduPilot 的相机和云台命令,并说明如何在 Mission Planner 中使用这些命令来定义相机勘测任务。这些说明假定已经连接并配置了相机触发器和云台(camera trigger and gimbal hav…...

Python 爬虫小练

Python 爬虫小练 获取贝壳网数据 使用到的模块 标准库 Python3 标准库列表 os 模块:os 模块提供了许多与操作系统交互的函数,例如创建、移动和删除文件和目录,以及访问环境变量等。math 模块:math 模块提供了数学函数…...

vue3 事件处理 @click

在Vue 3中,事件处理可以通过click指令来实现。click指令用于监听元素的点击事件,并在触发时执行相应的处理函数。 下面是一个简单的示例,展示了如何在Vue 3中处理点击事件: <template><button click"handleClick&…...

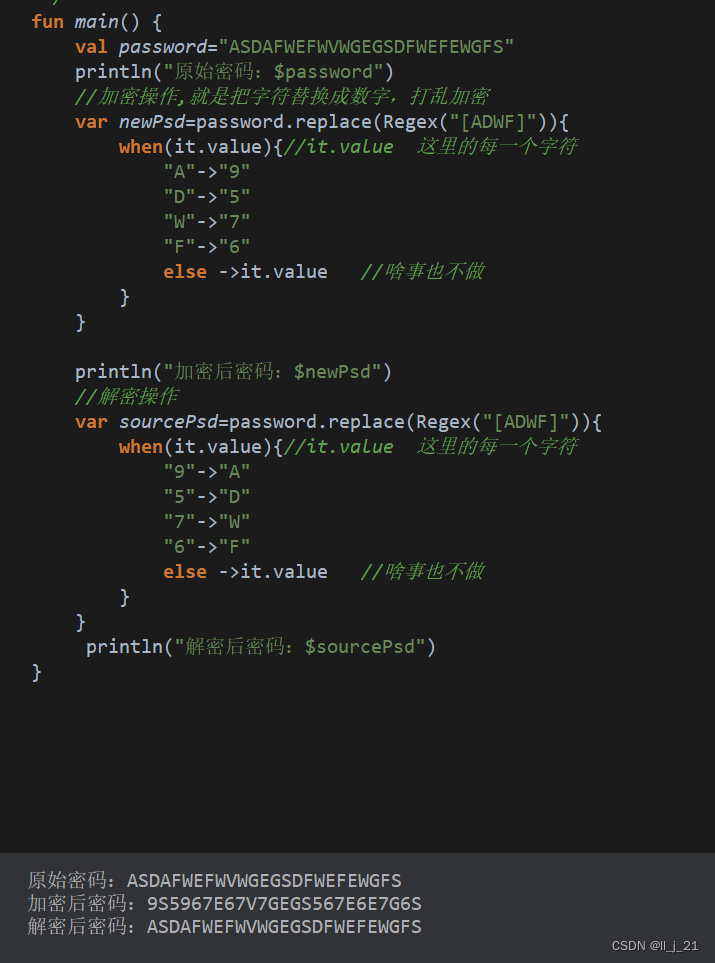

【第三阶段】kotlin语言使用replace完成加解密操作

fun main() {val password"ASDAFWEFWVWGEGSDFWEFEWGFS"println("原始密码:$password")//加密操作,就是把字符替换成数字,打乱加密var newPsdpassword.replace(Regex("[ADWF]")){when(it.value){//it.value 这里的每一个字…...

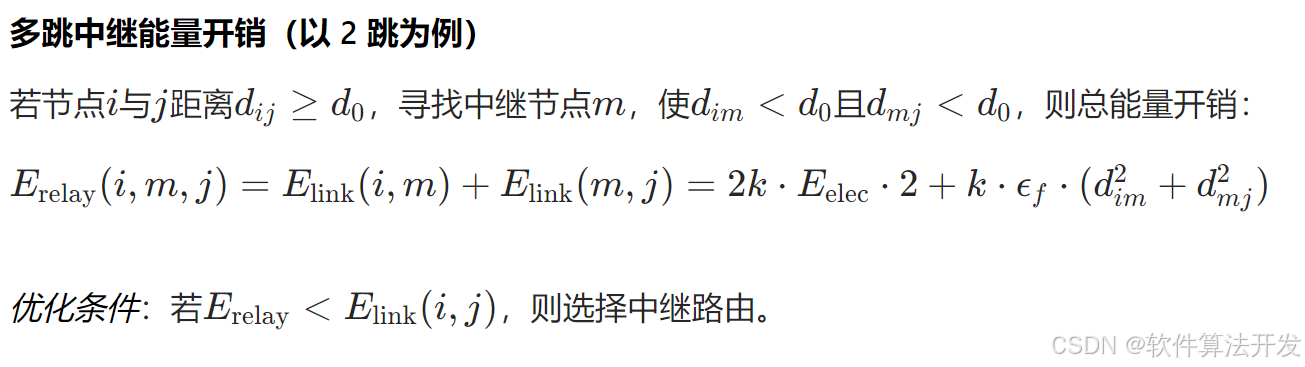

基于距离变化能量开销动态调整的WSN低功耗拓扑控制开销算法matlab仿真

目录 1.程序功能描述 2.测试软件版本以及运行结果展示 3.核心程序 4.算法仿真参数 5.算法理论概述 6.参考文献 7.完整程序 1.程序功能描述 通过动态调整节点通信的能量开销,平衡网络负载,延长WSN生命周期。具体通过建立基于距离的能量消耗模型&am…...

Java 8 Stream API 入门到实践详解

一、告别 for 循环! 传统痛点: Java 8 之前,集合操作离不开冗长的 for 循环和匿名类。例如,过滤列表中的偶数: List<Integer> list Arrays.asList(1, 2, 3, 4, 5); List<Integer> evens new ArrayList…...

Java如何权衡是使用无序的数组还是有序的数组

在 Java 中,选择有序数组还是无序数组取决于具体场景的性能需求与操作特点。以下是关键权衡因素及决策指南: ⚖️ 核心权衡维度 维度有序数组无序数组查询性能二分查找 O(log n) ✅线性扫描 O(n) ❌插入/删除需移位维护顺序 O(n) ❌直接操作尾部 O(1) ✅内存开销与无序数组相…...

无法与IP建立连接,未能下载VSCode服务器

如题,在远程连接服务器的时候突然遇到了这个提示。 查阅了一圈,发现是VSCode版本自动更新惹的祸!!! 在VSCode的帮助->关于这里发现前几天VSCode自动更新了,我的版本号变成了1.100.3 才导致了远程连接出…...

P3 QT项目----记事本(3.8)

3.8 记事本项目总结 项目源码 1.main.cpp #include "widget.h" #include <QApplication> int main(int argc, char *argv[]) {QApplication a(argc, argv);Widget w;w.show();return a.exec(); } 2.widget.cpp #include "widget.h" #include &q…...

多模态大语言模型arxiv论文略读(108)

CROME: Cross-Modal Adapters for Efficient Multimodal LLM ➡️ 论文标题:CROME: Cross-Modal Adapters for Efficient Multimodal LLM ➡️ 论文作者:Sayna Ebrahimi, Sercan O. Arik, Tejas Nama, Tomas Pfister ➡️ 研究机构: Google Cloud AI Re…...

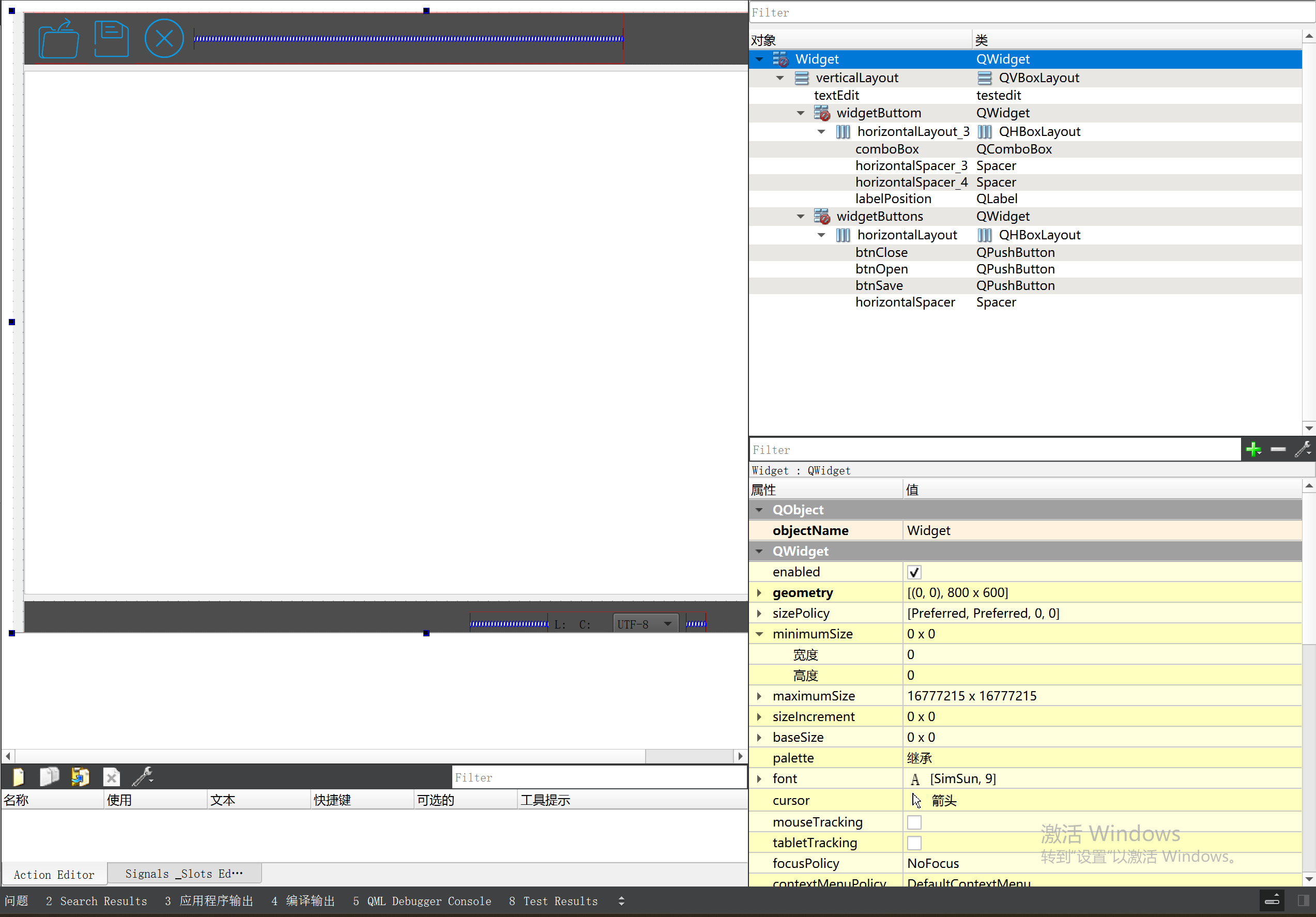

C/C++ 中附加包含目录、附加库目录与附加依赖项详解

在 C/C 编程的编译和链接过程中,附加包含目录、附加库目录和附加依赖项是三个至关重要的设置,它们相互配合,确保程序能够正确引用外部资源并顺利构建。虽然在学习过程中,这些概念容易让人混淆,但深入理解它们的作用和联…...

)

LLaMA-Factory 微调 Qwen2-VL 进行人脸情感识别(二)

在上一篇文章中,我们详细介绍了如何使用LLaMA-Factory框架对Qwen2-VL大模型进行微调,以实现人脸情感识别的功能。本篇文章将聚焦于微调完成后,如何调用这个模型进行人脸情感识别的具体代码实现,包括详细的步骤和注释。 模型调用步骤 环境准备:确保安装了必要的Python库。…...

Android Framework预装traceroute执行文件到system/bin下

文章目录 Android SDK中寻找traceroute代码内置traceroute到SDK中traceroute参数说明-I 参数(使用 ICMP Echo 请求)-T 参数(使用 TCP SYN 包) 相关文章 Android SDK中寻找traceroute代码 设备使用的是Android 11,在/s…...

2025-06-01-Hive 技术及应用介绍

Hive 技术及应用介绍 参考资料 Hive 技术原理Hive 架构及应用介绍Hive - 小海哥哥 de - 博客园https://cwiki.apache.org/confluence/display/Hive/Home(官方文档) Apache Hive 是基于 Hadoop 构建的数据仓库工具,它为海量结构化数据提供类 SQL 的查询能力…...