PyTorch DataLoader 报错 “DataLoader worker exited unexpectedly“ 的解决方案

注意:博主没有重写d2l的源代码文件,而是创建了一个新的python文件,并重写了该方法。

一、代码运行日志

C:\Users\Administrator\anaconda3\envs\limu\python.exe G:/PyCharmProjects/limu-d2l/ch03/softmax_regression.py

Traceback (most recent call last):File "<string>", line 1, in <module>

Traceback (most recent call last):File "<string>", line 1, in <module>File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 116, in spawn_mainFile "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 116, in spawn_mainexitcode = _main(fd, parent_sentinel)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 125, in _mainTraceback (most recent call last):

exitcode = _main(fd, parent_sentinel)File "<string>", line 1, in <module>File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 125, in _main

Traceback (most recent call last):File "<string>", line 1, in <module>

prepare(preparation_data)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 236, in prepareprepare(preparation_data)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 236, in prepare_fixup_main_from_path(data['init_main_from_path'])File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 287, in _fixup_main_from_path_fixup_main_from_path(data['init_main_from_path'])File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 287, in _fixup_main_from_pathmain_content = runpy.run_path(main_path,File "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 265, in run_pathmain_content = runpy.run_path(main_path,File "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 265, in run_pathreturn _run_module_code(code, init_globals, run_name,File "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 97, in _run_module_codereturn _run_module_code(code, init_globals, run_name,File "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 97, in _run_module_code_run_code(code, mod_globals, init_globals,File "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 87, in _run_code_run_code(code, mod_globals, init_globals,File "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 87, in _run_codeFile "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 116, in spawn_mainFile "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 116, in spawn_mainexec(code, run_globals)File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 97, in <module>

exec(code, run_globals)File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 97, in <module>train_ch03(net, train_iter, test_iter, cross_entropy, num_epochs, updater)File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 81, in train_ch03

exitcode = _main(fd, parent_sentinel)

train_ch03(net, train_iter, test_iter, cross_entropy, num_epochs, updater)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 125, in _mainFile "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 81, in train_ch03exitcode = _main(fd, parent_sentinel)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 125, in _maintrain_loss, train_acc = train_epoch_ch03(net, train_iter, loss, updater)train_loss, train_acc = train_epoch_ch03(net, train_iter, loss, updater)File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 64, in train_epoch_ch03File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 64, in train_epoch_ch03prepare(preparation_data)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 236, in prepareprepare(preparation_data)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 236, in preparefor X, y in train_iter:for X, y in train_iter:File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 441, in __iter__File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 441, in __iter___fixup_main_from_path(data['init_main_from_path'])_fixup_main_from_path(data['init_main_from_path'])File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 287, in _fixup_main_from_pathFile "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 287, in _fixup_main_from_pathmain_content = runpy.run_path(main_path,main_content = runpy.run_path(main_path,File "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 265, in run_pathFile "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 265, in run_pathreturn self._get_iterator()File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 388, in _get_iteratorreturn self._get_iterator()File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 388, in _get_iteratorreturn _run_module_code(code, init_globals, run_name,return _run_module_code(code, init_globals, run_name,File "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 97, in _run_module_codeFile "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 97, in _run_module_codereturn _MultiProcessingDataLoaderIter(self)File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 1042, in __init__return _MultiProcessingDataLoaderIter(self)File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 1042, in __init___run_code(code, mod_globals, init_globals,

_run_code(code, mod_globals, init_globals,File "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 87, in _run_codeFile "C:\Users\Administrator\anaconda3\envs\limu\lib\runpy.py", line 87, in _run_codeexec(code, run_globals)

exec(code, run_globals)File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 97, in <module>File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 97, in <module>train_ch03(net, train_iter, test_iter, cross_entropy, num_epochs, updater)File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 81, in train_ch03

train_ch03(net, train_iter, test_iter, cross_entropy, num_epochs, updater)File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 81, in train_ch03w.start()File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\process.py", line 121, in startw.start()File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\process.py", line 121, in starttrain_loss, train_acc = train_epoch_ch03(net, train_iter, loss, updater)train_loss, train_acc = train_epoch_ch03(net, train_iter, loss, updater)File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 64, in train_epoch_ch03File "G:\PyCharmProjects\limu-d2l\ch03\softmax_regression.py", line 64, in train_epoch_ch03self._popen = self._Popen(self)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\context.py", line 224, in _Popenfor X, y in train_iter:for X, y in train_iter:File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 441, in __iter__File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 441, in __iter__self._popen = self._Popen(self)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\context.py", line 224, in _Popenreturn _default_context.get_context().Process._Popen(process_obj)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\context.py", line 327, in _Popenreturn _default_context.get_context().Process._Popen(process_obj)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\context.py", line 327, in _Popenreturn self._get_iterator()return self._get_iterator()File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 388, in _get_iteratorFile "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 388, in _get_iteratorreturn Popen(process_obj)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\popen_spawn_win32.py", line 45, in __init__return Popen(process_obj) return _MultiProcessingDataLoaderIter(self)return _MultiProcessingDataLoaderIter(self)prep_data = spawn.get_preparation_data(process_obj._name)File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 1042, in __init__File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 1042, in __init__File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 154, in get_preparation_dataFile "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\popen_spawn_win32.py", line 45, in __init__prep_data = spawn.get_preparation_data(process_obj._name)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 154, in get_preparation_data

_check_not_importing_main()File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 134, in _check_not_importing_main_check_not_importing_main()File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 134, in _check_not_importing_mainraise RuntimeError('''

RuntimeError: An attempt has been made to start a new process before thecurrent process has finished its bootstrapping phase.This probably means that you are not using fork to start yourchild processes and you have forgotten to use the proper idiomin the main module:if __name__ == '__main__':freeze_support()...The "freeze_support()" line can be omitted if the programis not going to be frozen to produce an executable.w.start()File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\process.py", line 121, in startw.start()File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\process.py", line 121, in startraise RuntimeError('''

RuntimeError: An attempt has been made to start a new process before thecurrent process has finished its bootstrapping phase.This probably means that you are not using fork to start yourchild processes and you have forgotten to use the proper idiomin the main module:if __name__ == '__main__':freeze_support()...The "freeze_support()" line can be omitted if the programis not going to be frozen to produce an executable.self._popen = self._Popen(self)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\context.py", line 224, in _Popenself._popen = self._Popen(self)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\context.py", line 224, in _Popenreturn _default_context.get_context().Process._Popen(process_obj)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\context.py", line 327, in _Popen

return _default_context.get_context().Process._Popen(process_obj)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\context.py", line 327, in _Popenreturn Popen(process_obj)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\popen_spawn_win32.py", line 45, in __init__return Popen(process_obj)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\popen_spawn_win32.py", line 45, in __init__prep_data = spawn.get_preparation_data(process_obj._name)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 154, in get_preparation_dataprep_data = spawn.get_preparation_data(process_obj._name)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 154, in get_preparation_data_check_not_importing_main()File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 134, in _check_not_importing_main_check_not_importing_main()File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\spawn.py", line 134, in _check_not_importing_mainraise RuntimeError('''raise RuntimeError('''

RuntimeError: RuntimeErrorAn attempt has been made to start a new process before thecurrent process has finished its bootstrapping phase.This probably means that you are not using fork to start yourchild processes and you have forgotten to use the proper idiomin the main module:if __name__ == '__main__':freeze_support()...The "freeze_support()" line can be omitted if the programis not going to be frozen to produce an executable.: An attempt has been made to start a new process before thecurrent process has finished its bootstrapping phase.This probably means that you are not using fork to start yourchild processes and you have forgotten to use the proper idiomin the main module:if __name__ == '__main__':freeze_support()...The "freeze_support()" line can be omitted if the programis not going to be frozen to produce an executable.

Traceback (most recent call last):File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 1132, in _try_get_datadata = self._data_queue.get(timeout=timeout)File "C:\Users\Administrator\anaconda3\envs\limu\lib\multiprocessing\queues.py", line 108, in getraise Empty

_queue.EmptyThe above exception was the direct cause of the following exception:Traceback (most recent call last):File "G:/PyCharmProjects/limu-d2l/ch03/softmax_regression.py", line 97, in <module>train_ch03(net, train_iter, test_iter, cross_entropy, num_epochs, updater)File "G:/PyCharmProjects/limu-d2l/ch03/softmax_regression.py", line 81, in train_ch03train_loss, train_acc = train_epoch_ch03(net, train_iter, loss, updater)File "G:/PyCharmProjects/limu-d2l/ch03/softmax_regression.py", line 64, in train_epoch_ch03for X, y in train_iter:File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 633, in __next__data = self._next_data()File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 1328, in _next_dataidx, data = self._get_data()File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 1294, in _get_datasuccess, data = self._try_get_data()File "C:\Users\Administrator\anaconda3\envs\limu\lib\site-packages\torch\utils\data\dataloader.py", line 1145, in _try_get_dataraise RuntimeError('DataLoader worker (pid(s) {}) exited unexpectedly'.format(pids_str)) from e

RuntimeError: DataLoader worker (pid(s) 14032, 23312, 21048, 1952) exited unexpectedlyProcess finished with exit code 1

二、问题分析

这个错误是由于在使用多进程 DataLoader 时出现的问题,通常与 Windows 操作系统相关。在 Windows 上,使用多进程的 DataLoader 可能会导致一些问题,这与 Windows 的进程模型不太兼容。

三、解决方案(使用单进程 DataLoader)

在 Windows 上,将 DataLoader 的 num_workers 参数设置为 0,以使用单进程 DataLoader。这会禁用多进程加载数据,虽然可能会导致数据加载速度变慢,但通常可以解决与多进程 DataLoader 相关的问题。

d2l.load_data_fashion_mnist(batch_size)源代码

def get_dataloader_workers():"""Use 4 processes to read the data.Defined in :numref:`sec_utils`"""return 4def load_data_fashion_mnist(batch_size, resize=None):"""Download the Fashion-MNIST dataset and then load it into memory.Defined in :numref:`sec_utils`"""trans = [transforms.ToTensor()]if resize:trans.insert(0, transforms.Resize(resize))trans = transforms.Compose(trans)mnist_train = torchvision.datasets.FashionMNIST(root="../data", train=True, transform=trans, download=True)mnist_test = torchvision.datasets.FashionMNIST(root="../data", train=False, transform=trans, download=True)return (torch.utils.data.DataLoader(mnist_train, batch_size, shuffle=True,num_workers=get_dataloader_workers()),torch.utils.data.DataLoader(mnist_test, batch_size, shuffle=False,num_workers=get_dataloader_workers()))

在代码中使用修改后的load_data_fashion_mnist函数

def load_data_fashion_mnist(batch_size, resize=None, num_workers=4):"""下载Fashion-MNIST数据集,然后将其加载到内存中"""trans = [transforms.ToTensor()]if resize:trans.index(0, transforms.Resize(resize))trans = transforms.Compose(trans)mnist_train = torchvision.datasets.FashionMNIST(root='../data', train=True, transform=trans, download=True)mnist_test = torchvision.datasets.FashionMNIST(root='../data', train=False, transform=trans, download=True)return (data.DataLoader(mnist_train, batch_size, shuffle=True, num_workers=num_workers),data.DataLoader(mnist_test, batch_size, shuffle=False, num_workers=num_workers))

train_iter, test_iter = fashion_mnist.load_data_fashion_mnist(batch_size, num_workers=0)

四、为什么将 DataLoader 的 num_workers 参数设置为 0,是使用的单进程,而不是零进程呢?

在 PyTorch 的 DataLoader 中,num_workers 参数控制了用于加载数据的子进程数量。当 num_workers 被设置为 0 时,实际上是表示不使用任何子进程来加载数据,即单进程加载数据。

为什么不是零进程?这是因为 DataLoader 需要至少一个进程来加载数据,这个进程被称为主进程。主进程负责数据加载和分发给训练的进程。当 num_workers 设置为 0 时,只有主进程用于加载和处理数据,没有额外的子进程。这是一种单进程的数据加载方式。

如果将 num_workers 设置为 1,则会有一个额外的子进程来加载数据,总共会有两个进程:一个主进程和一个数据加载子进程。这种设置可以在某些情况下提高数据加载的效率,特别是当数据加载耗时较长时,子进程可以并行地加载数据,从而加速训练过程。

五、完整训练代码

import torch

from d2l import torch as d2l

import fashion_mnistbatch_size = 256

train_iter, test_iter = fashion_mnist.load_data_fashion_mnist(batch_size, num_workers=0)# 初始化模型参数

num_inputs = 784 # 每个输入图像的通道数为1, 高度和宽度均为28像素

num_outputs = 10W = torch.normal(0, 0.01, size=(num_inputs, num_outputs), requires_grad=True)

b = torch.zeros(num_outputs, requires_grad=True)# 定义softmax操作

def softmax(X):"""矩阵中的非常大或非常小的元素可能造成数值上溢或者下溢解决方案: P84 3.7.2 重新审视softmax的实现"""X_exp = torch.exp(X)partition = X_exp.sum(1, keepdim=True)return X_exp / partition# 定义模型

def net(X):return softmax(torch.matmul(X.reshape((-1, W.shape[0])), W) + b)# 定义损失函数

def cross_entropy(y_hat, y):return - torch.log(y_hat[range(len(y_hat)), y])# 分类精度

def accuracy(y_hat, y):"""计算预测正确的数量"""if len(y_hat.shape) > 1 and y_hat.shape[1] > 1:y_hat = y_hat.argmax(axis=1)cmp = y_hat.type(y.dtype) == yreturn float(cmp.type(y.dtype).sum())def evaluate_accuracy(net, data_iter):"""计算在制定数据集上模型的精度"""if isinstance(net, torch.nn.Module):net.eval()metric = d2l.Accumulator(2)with torch.no_grad():for X, y in data_iter:metric.add(accuracy(net(X), y), y.numel())return metric[0] / metric[1]# 训练

def train_epoch_ch03(net, train_iter, loss, updater):if isinstance(net, torch.nn.Module):net.train()# 训练损失总和, 训练准确度总和, 样本数metric = d2l.Accumulator(3)for X, y in train_iter:y_hat = net(X)l = loss(y_hat, y)if isinstance(updater, torch.optim.Optimizer):updater.zero_grad()l.mean().backward()updater.step()else:l.sum().backward()updater(X.shape[0])metric.add(float(l.sum()), accuracy(y_hat, y), y.numel())# 返回训练损失和训练精度return metric[0] / metric[2], metric[1] / metric[2]def train_ch03(net, train_iter, test_iter, loss, num_epochs, updater):for epoch in range(num_epochs):train_loss, train_acc = train_epoch_ch03(net, train_iter, loss, updater)test_acc = evaluate_accuracy(net, test_iter)print(f'epoch {epoch + 1}, train_loss {train_loss:f}, train_acc {train_acc:f}, test_acc {test_acc:f}')assert train_loss < 0.5, train_lossassert train_acc <= 1 and train_acc > 0.7, train_accassert test_acc <= 1 and test_acc > 0.7, test_acclr = 0.1def updater(batch_size):return d2l.sgd([W, b], lr, batch_size)num_epochs = 10

train_ch03(net, train_iter, test_iter, cross_entropy, num_epochs, updater)

相关文章:

PyTorch DataLoader 报错 “DataLoader worker exited unexpectedly“ 的解决方案

注意:博主没有重写d2l的源代码文件,而是创建了一个新的python文件,并重写了该方法。 一、代码运行日志 C:\Users\Administrator\anaconda3\envs\limu\python.exe G:/PyCharmProjects/limu-d2l/ch03/softmax_regression.py Traceback (most r…...

【AI绘画--七夕篇】:七夕特别教程,使用SDXL绘制你的心上人(Stable Diffusion)(封神榜—妲己)

目录 前言0、介绍0-0、结果展示0-1、Stable Diffusion0-2、sdxl介绍 一、云端部署Stable Diffusion1-1、云端平台的优势 二、平台介绍三、注册账号并且开始炼制3-1、购买算力并创建工作空间3-2、启动工作空间3-3、应用市场一键安装3-4、使用Stable-Diffusion作图 四、有女朋友的…...

hadoop2的集群数据将副本存储在hadoop3

在 Hadoop 集群中,HDFS 副本是分布式存储的,会存储在不同的节点上。因此,如果您的 HDFS 所在路径是在 Hadoop2 集群中,您可以在 Hadoop3 集群上添加新的节点,并向 Hadoop3 集群中添加这些新节点上的数据副本。 以下是…...

c# ??=

空合并运算符 ??,用于定义引用类型和可空类型的默认值。如果此运算符的左操作符不为Null,则此操作符返回左操作数,否则返回右操作数。 例如: //当a不为空时返回a,为null时返回b var c a ?? b;空合并赋值运算符??…...

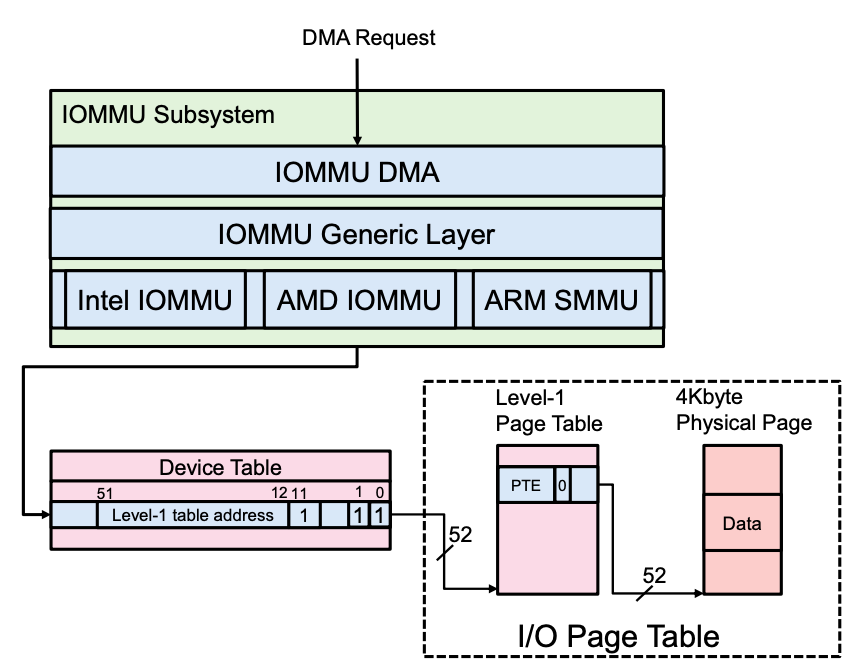

存储系统性能优化中IOMMU的作用是什么?

一、IOMMU原理 IOMMU(Input/Output Memory Management Unit)是一种用于管理计算机内存的技术,它允许将物理内存映射到虚拟地址空间。IOMMU通过使用专用的硬件来管理和优化内存访问,从而提高系统性能和稳定性。本文将详细介绍IOMMU的原理,并介绍一些应用案例和典型的问题解…...

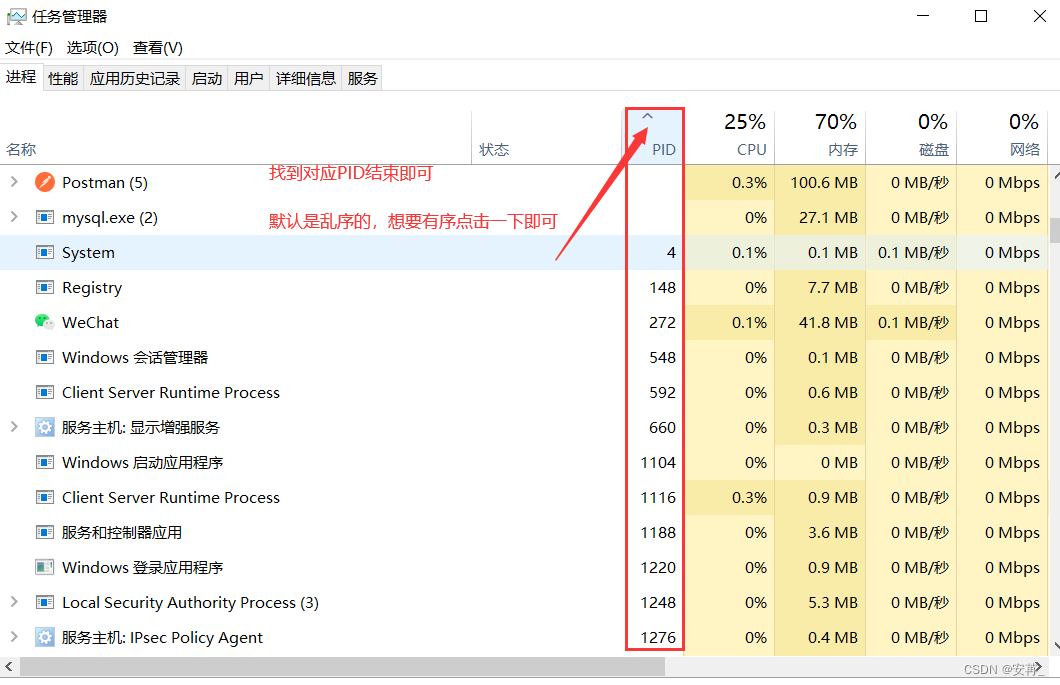

localhost:8080 is already in use

报错原因:本机的8080端口号已经被占用。因为机器的空闲端口号是随机分配的,而idea默认启动的端口号是8080,所以是存在这种情况。 对于这个问题,我们只需要重启idea或者修改项目的启动端口号即可。 更推荐第二种。对于修改项目启动端口号&…...

)

机器学习深度学习——NLP实战(自然语言推断——数据集)

👨🎓作者简介:一位即将上大四,正专攻机器学习的保研er 🌌上期文章:机器学习&&深度学习——NLP实战(情感分析模型——textCNN实现) 📚订阅专栏:机器…...

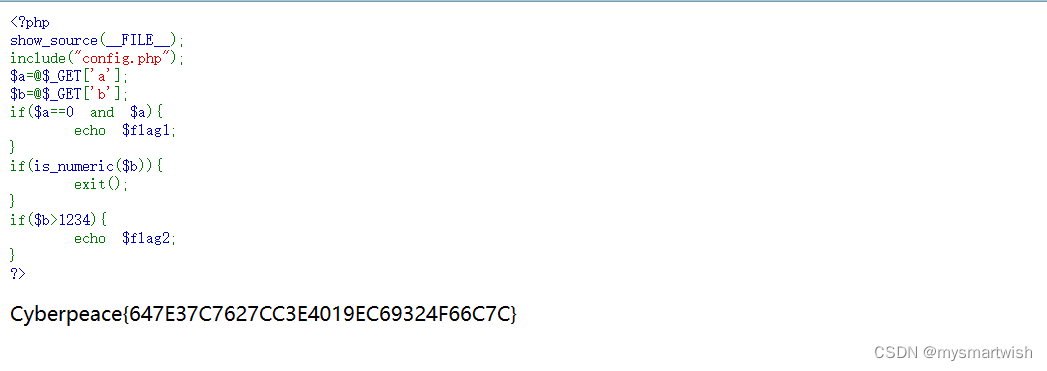

攻防世界-simple_php

原题 解题思路 flag被分成了两个部分:flag2,flag2。获得flag1需要满足变量a0且变量a≠0,这看起来不能实现,但实际上当变量a的值是字符时,与数字比较会发生强制类型转换,所以a为字符型数据即可,变…...

2023MyBatis 八股文——面试题

MyBatis简介 1. MyBatis是什么? MyBatis 是一款优秀的持久层框架,一个半 ORM(对象关系映射)框架,它支持定制化 SQL、存储过程以及高级映射。MyBatis 避免了几乎所有的 JDBC 代码和手动设置参数以及 获取结果集。MyBa…...

解决出海痛点:亚马逊云科技助力智能涂鸦,实现设备互联互通

今年6月,《财富》(中文版)发布“2023年值得关注的中国出海主力”盘点,在七个赛道中聚焦不断开拓新领域、影响力与日俱增的出海企业。涂鸦智能顺利入选,作为一家全球化公司,相比于产品直接到海外销售的传统出…...

国际刑警组织逮捕 14 名涉嫌盗窃 4000 万美元的网络罪犯

Bleeping Computer 网站披露,4 月份,国际刑警组织发动了一起为期四个月,横跨 25 个非洲国家的执法行动 “Africa Cyber Surge II”,共逮捕 14 名网络犯罪嫌疑人,摧毁 20000 多个从事勒索、网络钓鱼、BEC 和在线诈骗的犯…...

MySQL卸载-Linux版

MySQL卸载-Linux版 停止MySQL服务 systemctl stop mysqld 查询MySQL的安装文件 rpm -qa | grep -i mysql 卸载上述查询出来的所有的MySQL安装包 rpm -e mysql-community-client-plugins-8.0.26-1.el7.x86_64 --nodeps rpm -e mysql-community-server-8.0.26-1.el7.x86_64 -…...

快速学会创建uni-app项目并了解pages.json文件

(创作不易,感谢有你,你的支持,就是我前行的最大动力,如果看完对你有帮助,请留下您的足迹) 目录 前言 创建 uni-app 项目 通过 HBuilderX 创建 pages.json pages style globalStyle tabBar 前言…...

选云服务器还是物理服务器

选云服务器还是物理服务器 一、为什么需要云服务器或独立服务器取代共享主机 在最早之前,大多数的网站都是共享主机开始的,这里也包含了云虚拟机。这一类的站点还有其他站点都会共同托管在同一台服务器上。但是这种共享机只适用于小的网站,如…...

最新ChatGPT网站AI系统源码+详细图文搭建教程/支持GPT4.0/AI绘画/H5端/Prompt知识库/

一、前言 SparkAi系统是基于国外很火的ChatGPT进行开发的Ai智能问答系统。本期针对源码系统整体测试下来非常完美,可以说SparkAi是目前国内一款的ChatGPT对接OpenAI软件系统。 那么如何搭建部署AI创作ChatGPT?小编这里写一个详细图文教程吧!…...

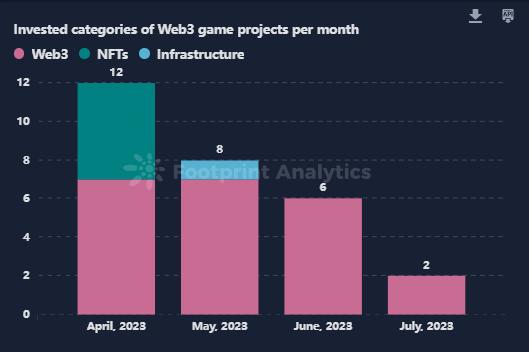

Web3 游戏七月洞察:迈向主流采用的临界点?

作者: lesleyfootprint.network 2023 年 7 月,Web3 游戏领域出现了小幅增长,但对于许多项目来说,用户采用仍然是一个持续的挑战。根据 Footprint Analytics 的数据,活跃的区块链游戏数量略有增加,达到 2,471 个。然而…...

Python爬虫——scrapy_多网页下载

在DangSpider类中设置一个基础url base_url http://category.dangdang.com/pg page 1在parse方法中 # 每一页的爬取逻辑都是一样的,所以只需要执行每一页的请求再次调用parse方法就可以了if self.page < 100:self.page 1url self.base_url str(self.page)…...

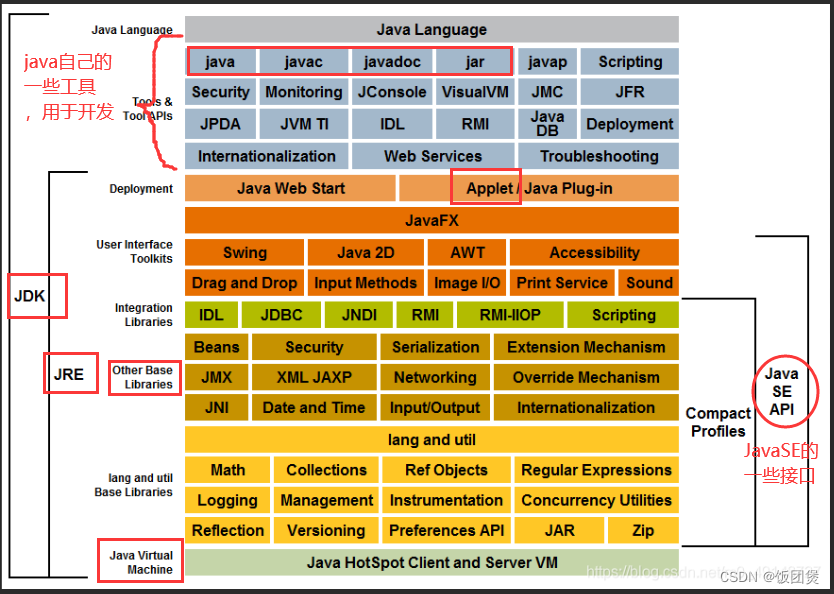

JDK JRE JVM 三者之间的详解

JDK : Java Development Kit JRE: Java Runtime Environment JVM : JAVA Virtual Machine JDK : Java Development Kit JDK : Java Development Kit【 Java开发者工具】,可以从上图可以看出,JDK包含JRE;java自己的一些开发工具中&#…...

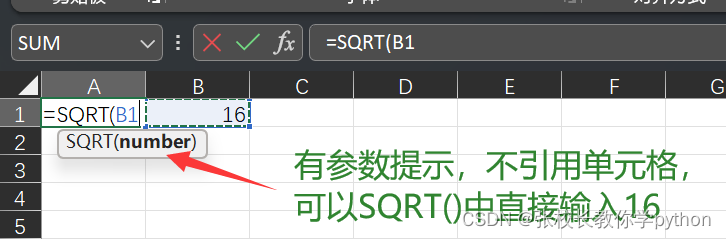

excel常见的数学函数篇2

一、数学函数 1、ABS(number):返回数字的绝对值 语法:ABS(数字);返回数字的绝对值;若引用单元格,把数字换为单元格地址即可 2、INT(number):向小取整 语法:INT(数字);若引用单元格…...

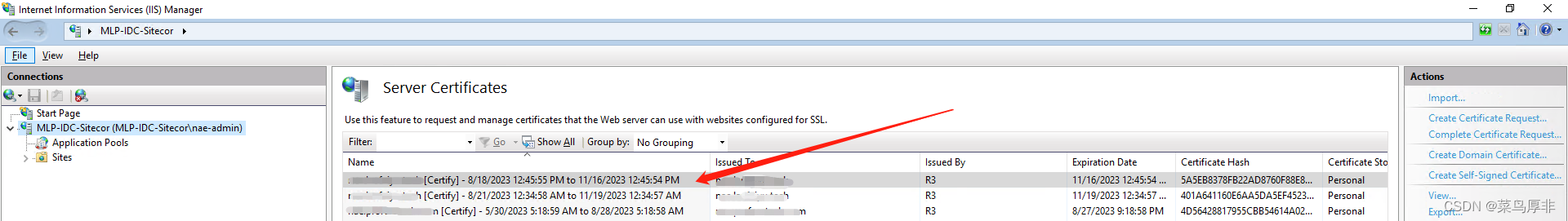

Certify The Web (IIS)

一、简介 Certify The Web 适用于 Windows的SSL 证书管理器用户界面,与所有 ACME v2 CA 兼容,为您的 IIS/Windows 服务器轻松地安装和自动更新来自 Letencrypt.org 和其他 ACME 证书授权机构的免费 SSL/TLS 证书,设置 https 从未如此简单。 …...

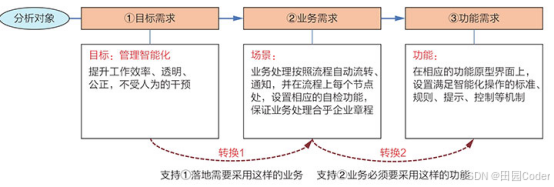

大话软工笔记—需求分析概述

需求分析,就是要对需求调研收集到的资料信息逐个地进行拆分、研究,从大量的不确定“需求”中确定出哪些需求最终要转换为确定的“功能需求”。 需求分析的作用非常重要,后续设计的依据主要来自于需求分析的成果,包括: 项目的目的…...

K8S认证|CKS题库+答案| 11. AppArmor

目录 11. AppArmor 免费获取并激活 CKA_v1.31_模拟系统 题目 开始操作: 1)、切换集群 2)、切换节点 3)、切换到 apparmor 的目录 4)、执行 apparmor 策略模块 5)、修改 pod 文件 6)、…...

Golang 面试经典题:map 的 key 可以是什么类型?哪些不可以?

Golang 面试经典题:map 的 key 可以是什么类型?哪些不可以? 在 Golang 的面试中,map 类型的使用是一个常见的考点,其中对 key 类型的合法性 是一道常被提及的基础却很容易被忽视的问题。本文将带你深入理解 Golang 中…...

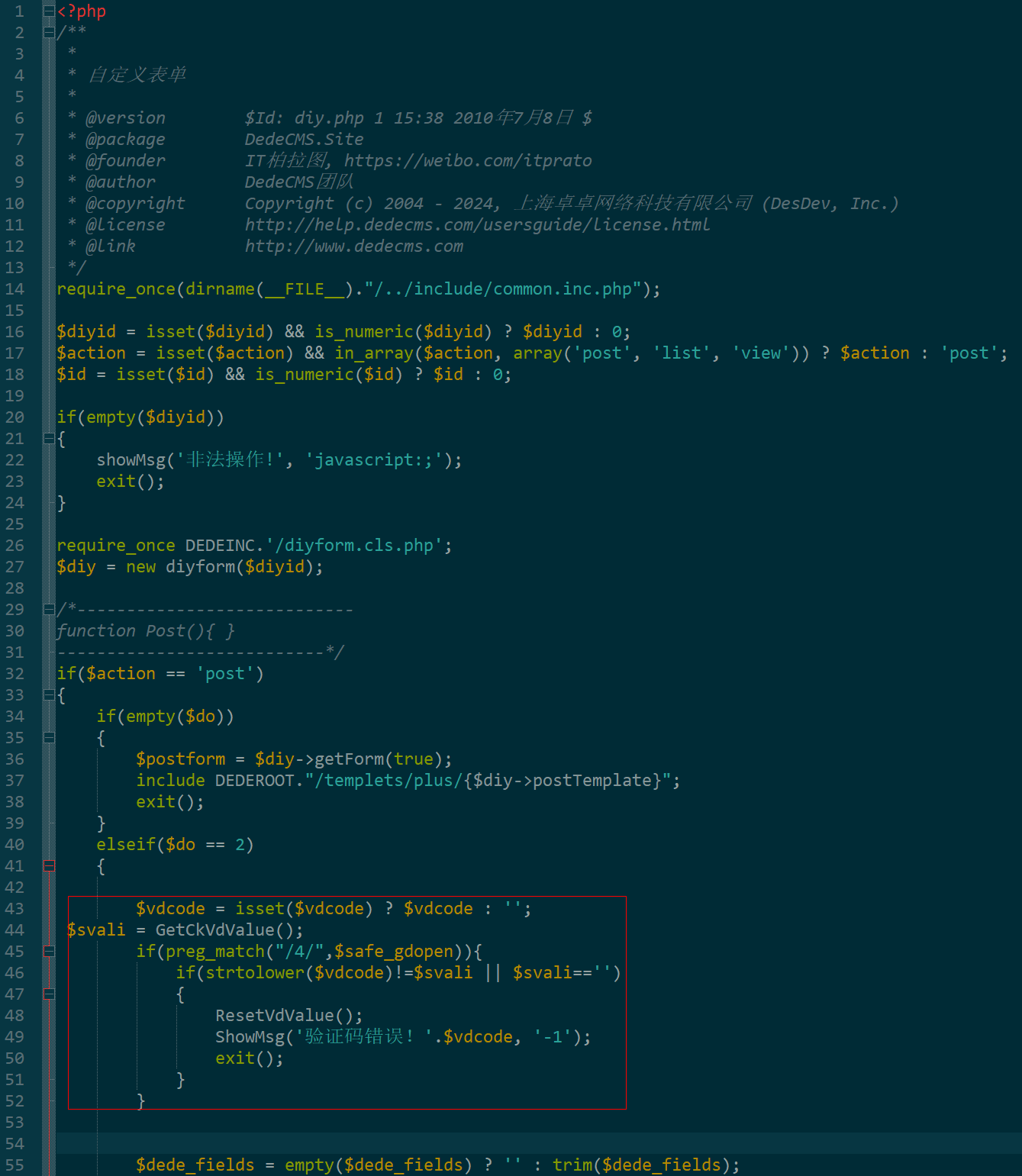

dedecms 织梦自定义表单留言增加ajax验证码功能

增加ajax功能模块,用户不点击提交按钮,只要输入框失去焦点,就会提前提示验证码是否正确。 一,模板上增加验证码 <input name"vdcode"id"vdcode" placeholder"请输入验证码" type"text&quo…...

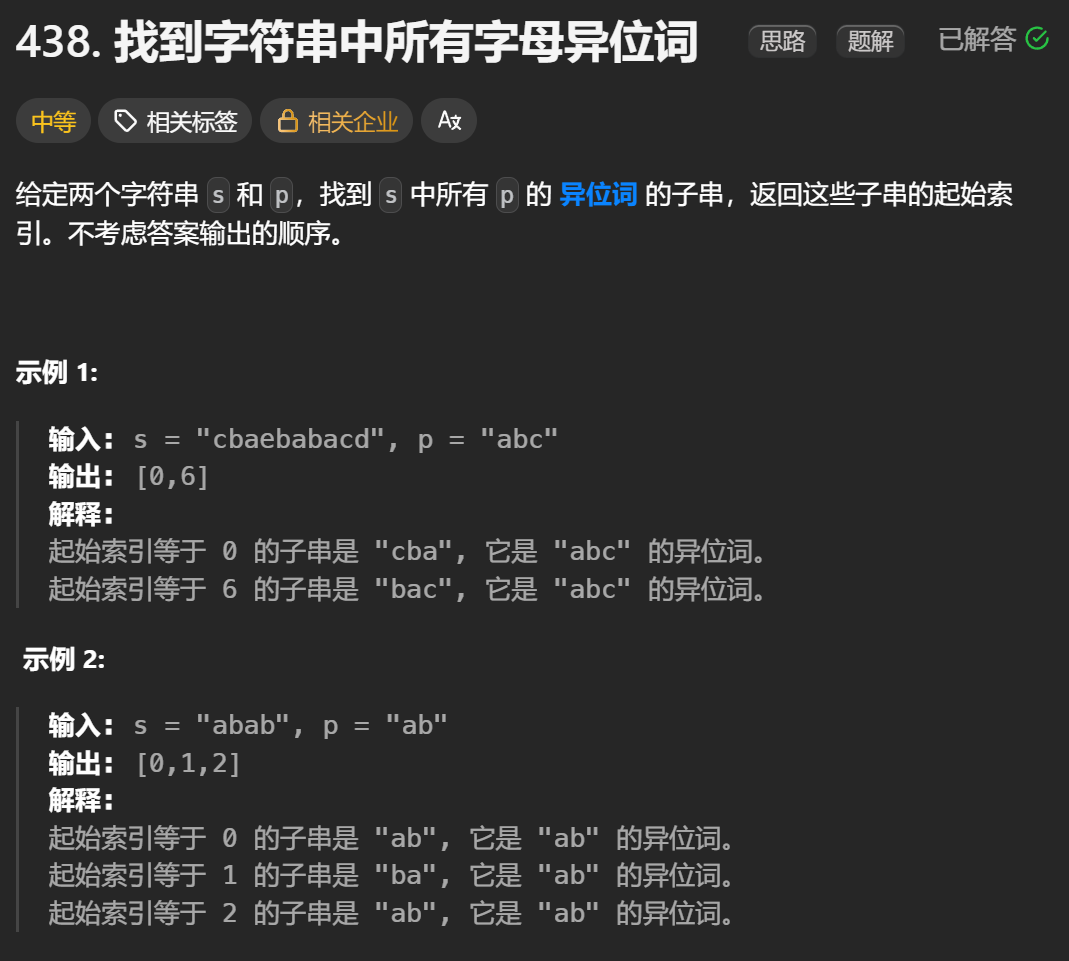

12.找到字符串中所有字母异位词

🧠 题目解析 题目描述: 给定两个字符串 s 和 p,找出 s 中所有 p 的字母异位词的起始索引。 返回的答案以数组形式表示。 字母异位词定义: 若两个字符串包含的字符种类和出现次数完全相同,顺序无所谓,则互为…...

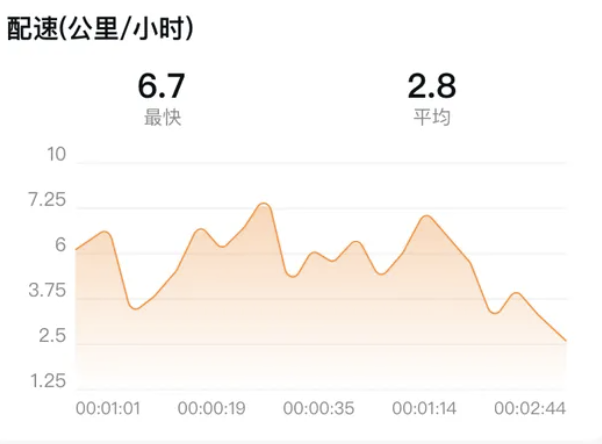

HarmonyOS运动开发:如何用mpchart绘制运动配速图表

##鸿蒙核心技术##运动开发##Sensor Service Kit(传感器服务)# 前言 在运动类应用中,运动数据的可视化是提升用户体验的重要环节。通过直观的图表展示运动过程中的关键数据,如配速、距离、卡路里消耗等,用户可以更清晰…...

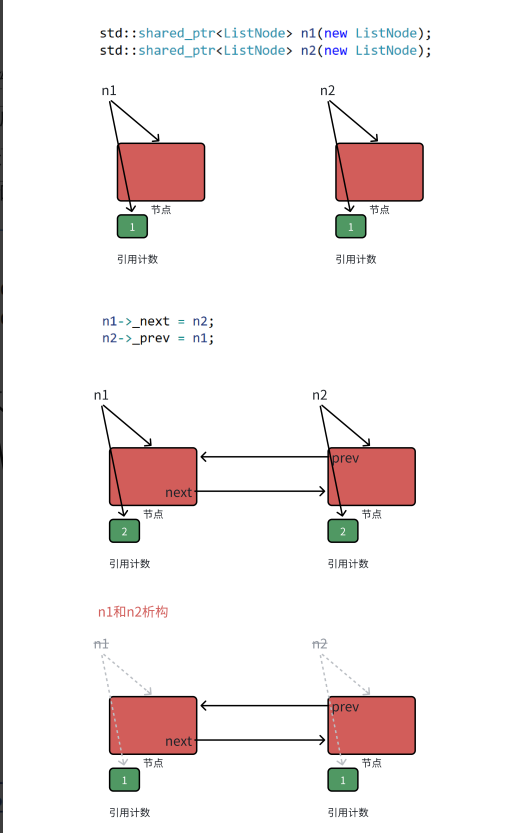

【C++进阶篇】智能指针

C内存管理终极指南:智能指针从入门到源码剖析 一. 智能指针1.1 auto_ptr1.2 unique_ptr1.3 shared_ptr1.4 make_shared 二. 原理三. shared_ptr循环引用问题三. 线程安全问题四. 内存泄漏4.1 什么是内存泄漏4.2 危害4.3 避免内存泄漏 五. 最后 一. 智能指针 智能指…...

PostgreSQL——环境搭建

一、Linux # 安装 PostgreSQL 15 仓库 sudo dnf install -y https://download.postgresql.org/pub/repos/yum/reporpms/EL-$(rpm -E %{rhel})-x86_64/pgdg-redhat-repo-latest.noarch.rpm# 安装之前先确认是否已经存在PostgreSQL rpm -qa | grep postgres# 如果存在࿰…...

Proxmox Mail Gateway安装指南:从零开始配置高效邮件过滤系统

💝💝💝欢迎莅临我的博客,很高兴能够在这里和您见面!希望您在这里可以感受到一份轻松愉快的氛围,不仅可以获得有趣的内容和知识,也可以畅所欲言、分享您的想法和见解。 推荐:「storms…...

Python 训练营打卡 Day 47

注意力热力图可视化 在day 46代码的基础上,对比不同卷积层热力图可视化的结果 import torch import torch.nn as nn import torch.optim as optim from torchvision import datasets, transforms from torch.utils.data import DataLoader import matplotlib.pypl…...