尚硅谷大数据项目《在线教育之离线数仓》笔记003

视频地址:尚硅谷大数据项目《在线教育之离线数仓》_哔哩哔哩_bilibili

目录

第8章 数仓开发之DIM层

P039

P040

P041

P042

P043

P044

P045

P046

P047

P048

第8章 数仓开发之DIM层

P039

第8章 数仓开发之DIM层

DIM层设计要点:

(1)DIM层的设计依据是维度建模理论,该层存储维度模型的维度表。

(2)DIM层的数据存储格式为orc列式存储+snappy压缩。

(3)DIM层表名的命名规范为dim_表名_全量表或者拉链表标识(full/zip)。

[2023-08-21 10:21:33] org.apache.hadoop.hive.ql.parse.SemanticException:Failed to get a spark session: org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create Spark client for Spark session 2ed82e1b-8afb-4ad0-9ed2-0f84191a4343

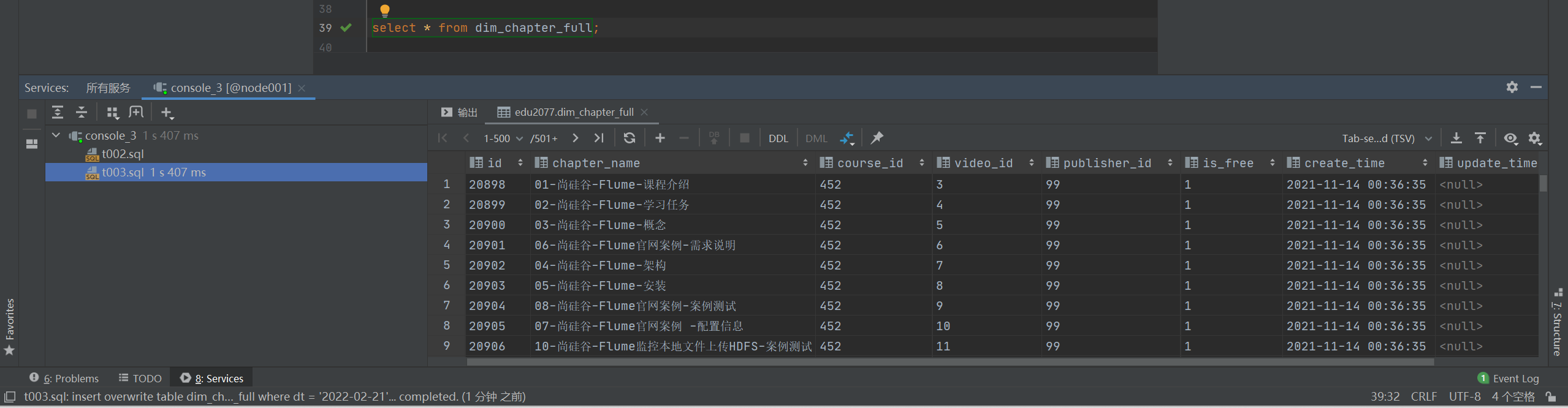

P040

show databases;use edu2077;show tables;--8.1 章节维度表(全量)

DROP TABLE IF EXISTS dim_chapter_full;

CREATE EXTERNAL TABLE dim_chapter_full

(`id` STRING COMMENT '章节ID',`chapter_name` STRING COMMENT '章节名称',`course_id` STRING COMMENT '课程ID',`video_id` STRING COMMENT '视频ID',`publisher_id` STRING COMMENT '发布者ID',`is_free` STRING COMMENT '是否免费',`create_time` STRING COMMENT '创建时间',`update_time` STRING COMMENT '更新时间'

) COMMENT '章节维度表'PARTITIONED BY (`dt` STRING)STORED AS ORCLOCATION '/warehouse/edu/dim/dim_chapter_full/'TBLPROPERTIES ('orc.compress' = 'snappy');--数据装载

--insert overwrite覆盖写,insert into会造成数据重复。

insert overwrite table dim_chapter_full partition (dt = '2022-02-21')

select id,chapter_name,course_id,video_id,publisher_id,is_free,create_time,update_time

from ods_chapter_info_full

where dt = '2022-02-21';select *

from dim_chapter_full;--8.2 课程维度表(全量)

DROP TABLE IF EXISTS dim_course_full;

CREATE EXTERNAL TABLE dim_course_full

(`id` STRING COMMENT '编号',`course_name` STRING COMMENT '课程名称',`subject_id` STRING COMMENT '学科id',`subject_name` STRING COMMENT '学科名称',`category_id` STRING COMMENT '分类id',`category_name` STRING COMMENT '分类名称',`teacher` STRING COMMENT '讲师名称',`publisher_id` STRING COMMENT '发布者id',`chapter_num` BIGINT COMMENT '章节数',`origin_price` decimal(16, 2) COMMENT '价格',`reduce_amount` decimal(16, 2) COMMENT '优惠金额',`actual_price` decimal(16, 2) COMMENT '实际价格',`create_time` STRING COMMENT '创建时间',`update_time` STRING COMMENT '更新时间',`chapters` ARRAY<STRUCT<chapter_id : STRING,chapter_name : STRING, video_id : STRING,is_free: STRING>> COMMENT '章节'

) COMMENT '课程维度表'PARTITIONED BY (`dt` STRING)STORED AS ORCLOCATION '/warehouse/edu/dim/dim_course_full/'TBLPROPERTIES ('orc.compress' = 'snappy');select *

from ods_base_source_full

where dt = '2022-02-21';select *

from ods_course_info_full

where dt = '2022-02-21';select *

from (select id,course_name,course_slogan,subject_id,teacher,publisher_id,chapter_num,origin_price,reduce_amount,actual_price,course_introduce,create_time,update_timefrom ods_course_info_fullwhere dt = '2022-02-21') ci;--ci是别名with ci as (select id,course_name,course_slogan,subject_id,teacher,publisher_id,chapter_num,origin_price,reduce_amount,actual_price,course_introduce,create_time,update_timefrom ods_course_info_fullwhere dt = '2022-02-21'

),bci as (select id, category_namefrom ods_base_category_info_fullwhere dt = '2022-02-21'),bs as (select id, subject_name, category_idfrom ods_base_subject_info_fullwhere dt = '2022-02-21'),chapter as (select course_id,--chapter_id : STRING,chapter_name : STRING, video_id : STRING,is_free : STRINGcollect_set(named_struct('chapter_id', id, 'chapter_name', chapter_name,'video_id', video_id, 'is_free', is_free)) csfrom ods_chapter_info_fullwhere dt = '2022-02-21'group by course_id)

insert overwrite table dim_course_full partition (dt = '2022-02-21')

select ci.id,course_name,subject_id,subject_name,category_id,category_name,teacher,publisher_id,chapter_num,origin_price,reduce_amount,actual_price,create_time,update_time,cs chapters

from cileft join bson ci.subject_id = bs.idleft join bcion bs.category_id = bci.idleft join chapteron ci.id = chapter.course_id;--desc function extended named_struct;select * from dim_course_full;P041

--8.3 视频维度表(全量)

show databases;--8.3 视频维度表(全量)

DROP TABLE IF EXISTS dim_video_full;

CREATE EXTERNAL TABLE dim_video_full

(`id` STRING COMMENT '编号',`video_name` STRING COMMENT '视频名称',`during_sec` BIGINT COMMENT '时长',`video_status` STRING COMMENT '状态 未上传,上传中,上传完',`video_size` BIGINT COMMENT '大小',`version_id` STRING COMMENT '版本号',`chapter_id` STRING COMMENT '章节id',`chapter_name` STRING COMMENT '章节名称',`is_free` STRING COMMENT '是否免费',`course_id` STRING COMMENT '课程id',`publisher_id` STRING COMMENT '发布者id',`create_time` STRING COMMENT '创建时间',`update_time` STRING COMMENT '更新时间'

) COMMENT '视频维度表'PARTITIONED BY (`dt` STRING)STORED AS ORCLOCATION '/warehouse/edu/dim/dim_video_zip/'TBLPROPERTIES ('orc.compress' = 'snappy');select *

from ods_video_info_full

where dt = '2022-02-21';insert overwrite table dim_video_full partition (dt = '2022-02-21')

select id,video_name,during_sec,video_status,video_size,version_id,chapter_id,chapter_name,is_free,course_id,publisher_id,create_time,update_time

from (select id,video_name,during_sec,video_status,video_size,video_url,video_source_id,version_id,chapter_id,course_id,publisher_id,create_time,update_time,deletedfrom ods_video_info_fullwhere dt = '2022-02-21'and deleted = '0') vileft join(select chapter_name,video_id,is_freefrom ods_chapter_info_fullwhere dt = '2022-02-21'

) cion vi.id = ci.video_id;select *

from dim_video_full;insert overwrite table dim_video_full partition (dt = '2022-02-21')

select vt.id,video_name,during_sec,video_status,video_size,version_id,chapter_id,chapter_name,is_free,course_id,publisher_id,create_time,update_time

from (select id,video_name,during_sec,video_status,video_size,version_id,chapter_id,course_id,publisher_id,create_time,update_timefrom ods_video_info_fullwhere dt = '2022-02-21'and deleted = '0') vtjoin(select id,chapter_name,is_freefrom ods_chapter_info_fullwhere dt = '2022-02-21') chton vt.chapter_id = cht.id;

org.apache.hadoop.hive.ql.parse.SemanticException:Failed to get a spark session: org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create Spark client for Spark session 2519dff0-c795-4852-a1b4-f40ad1750136

2023/8/23

14:50 t004.sql: insert overwrite table dim_vi… on vt.chapter_id = cht.id... failed.15:00 t004.sql: insert overwrite table dim_vi… on vt.chapter_id = cht.id... failed.

org.apache.hadoop.hive.ql.parse.SemanticException:Failed to get a spark session: org.apache.hadoop.hive.ql.metadata.HiveException: Failed to create Spark client due to invalid resource request: Required executor memory (2048 MB), offHeap memory (0) MB, overhead (384 MB), and PySpark memory (0 MB) is above the max threshold (2048 MB)

报错-hive on spark执行数据导入脚本报错_dyson不只是吹风机的博客-CSDN博客

[atguigu@node001 hadoop]$ myhadoop.sh start================ 启动 hadoop集群 ================---------------- 启动 hdfs ----------------

Starting namenodes on [node001]

Starting datanodes

Starting secondary namenodes [node003]--------------- 启动 yarn ---------------

Starting resourcemanager

Starting nodemanagers--------------- 启动 historyserver ---------------

[atguigu@node001 hadoop]$ cd /opt/module/hive/hive-3.1.2/

[atguigu@node001 hive-3.1.2]$ nohup bin/hive &

[1] 11485

[atguigu@node001 hive-3.1.2]$ nohup: 忽略输入并把输出追加到"nohup.out"[atguigu@node001 hive-3.1.2]$ nohup bin/hive --service hiveserver2 &

[2] 11626

[atguigu@node001 hive-3.1.2]$ nohup: 忽略输入并把输出追加到"nohup.out"[atguigu@node001 hive-3.1.2]$ jpsall

================ node001 ================

3872 QuorumPeerMain

4291 Kafka

11381 JobHistoryServer

10583 NameNode

11626 RunJar

10747 DataNode

13660 Jps

13533 YarnCoarseGrainedExecutorBackend

11485 RunJar

11167 NodeManager

================ node002 ================

7841 Jps

5586 ResourceManager

2946 Kafka

7683 ApplicationMaster

2552 QuorumPeerMain

5384 DataNode

5711 NodeManager

================ node003 ================

6944 YarnCoarseGrainedExecutorBackend

2256 QuorumPeerMain

5040 SecondaryNameNode

4929 DataNode

2643 Kafka

5158 NodeManager

7047 Jps

[atguigu@node001 hive-3.1.2]$ P042

8.4 试卷维度表(全量)

--8.4 试卷维度表(全量)

DROP TABLE IF EXISTS dim_paper_full;

CREATE EXTERNAL TABLE dim_paper_full

(`id` STRING COMMENT '编号',`paper_title` STRING COMMENT '试卷名称',`course_id` STRING COMMENT '课程id',`create_time` STRING COMMENT '创建时间',`update_time` STRING COMMENT '更新时间',`publisher_id` STRING COMMENT '发布者id',`questions` ARRAY<STRUCT<question_id: STRING, score: DECIMAL(16, 2)>> COMMENT '题目'

) COMMENT '试卷维度表'PARTITIONED BY (`dt` STRING)STORED AS ORCLOCATION '/warehouse/edu/dim/dim_paper_full/'TBLPROPERTIES ('orc.compress' = 'snappy');select *

from ods_test_paper_full;insert overwrite table dim_paper_full partition (dt = '2022-02-21')

select id,paper_title,course_id,create_time,update_time,publisher_id,qs

from (select id,paper_title,course_id,create_time,update_time,publisher_id,deletedfrom ods_test_paper_fullwhere dt = '2022-02-21'--and deleted = '0') tpleft join (select paper_id,--question_id: STRING, score: DECIMAL(16, 2)collect_set(named_struct('question_id', id, 'score', score)) qsfrom ods_test_paper_question_fullwhere dt = '2022-02-21'and deleted = '0'group by paper_id

) pqon tp.id = pq.paper_id;select * from dim_paper_full;P043

8.5 来源维度表(全量)

8.6 题目维度表(全量)

8.7 地区维度表(全量)

--8.5 来源维度表(全量)

DROP TABLE IF EXISTS dim_source_full;

CREATE EXTERNAL TABLE dim_source_full

(`id` STRING COMMENT '编号',`source_site` STRING COMMENT '来源'

) COMMENT '来源维度表'PARTITIONED BY (`dt` STRING)STORED AS ORCLOCATION '/warehouse/edu/dim/dim_source_full/'TBLPROPERTIES ('orc.compress' = 'snappy');insert overwrite table edu2077.dim_source_full partition (dt = '2022-02-21')

select id,source_site

from edu2077.ods_base_source_full obsf

where dt = '2022-02-21';select * from dim_source_full;--8.6 题目维度表(全量)

DROP TABLE IF EXISTS dim_question_full;

CREATE EXTERNAL TABLE dim_question_full

(`id` STRING COMMENT '编号',`chapter_id` STRING COMMENT '章节id',`course_id` STRING COMMENT '课程id',`question_type` BIGINT COMMENT '题目类型',`create_time` STRING COMMENT '创建时间',`update_time` STRING COMMENT '更新时间',`publisher_id` STRING COMMENT '发布者id'

) COMMENT '题目维度表'PARTITIONED BY (`dt` STRING)STORED AS ORCLOCATION '/warehouse/edu/dim/dim_question_full/'TBLPROPERTIES ('orc.compress' = 'snappy');insert overwrite table edu2077.dim_question_fullpartition (dt = '2022-02-21')

select id,chapter_id,course_id,question_type,create_time,update_time,publisher_id

from edu2077.ods_test_question_info_full

where deleted = '0'and dt = '2022-02-21';select * from dim_question_full;--8.7 地区维度表(全量)

DROP TABLE IF EXISTS dim_province_full;

CREATE EXTERNAL TABLE dim_province_full

(`id` STRING COMMENT '编号',`name` STRING COMMENT '省名称',`region_id` STRING COMMENT '地区id',`area_code` STRING COMMENT '行政区位码',`iso_code` STRING COMMENT '国际编码',`iso_3166_2` STRING COMMENT 'ISO3166编码'

) COMMENT '地区维度表'PARTITIONED BY (`dt` STRING)STORED AS ORCLOCATION '/warehouse/edu/dim/dim_province_full/'TBLPROPERTIES ('orc.compress' = 'snappy');insert overwrite table edu2077.dim_province_full partition (dt = '2022-02-21')

select id,name,region_id,area_code,iso_code,iso_3166_2

from edu2077.ods_base_province_full

where dt = '2022-02-21';select * from dim_province_full;P044

8.8 时间维度表(特殊)

--8.8 时间维度表(特殊)

DROP TABLE IF EXISTS dim_date;

CREATE EXTERNAL TABLE dim_date

(`date_id` STRING COMMENT '日期id',`week_id` STRING COMMENT '周id,一年中的第几周',`week_day` STRING COMMENT '周几',`day` STRING COMMENT '每月的第几天',`month` STRING COMMENT '一年中的第几月',`quarter` STRING COMMENT '一年中的第几季度',`year` STRING COMMENT '年份',`is_workday` STRING COMMENT '是否是工作日',`holiday_id` STRING COMMENT '节假日'

) COMMENT '时间维度表'STORED AS ORCLOCATION '/warehouse/edu/dim/dim_date/'TBLPROPERTIES ('orc.compress' = 'snappy');DROP TABLE IF EXISTS tmp_dim_date_info;

CREATE EXTERNAL TABLE tmp_dim_date_info

(`date_id` STRING COMMENT '日',`week_id` STRING COMMENT '周id',`week_day` STRING COMMENT '周几',`day` STRING COMMENT '每月的第几天',`month` STRING COMMENT '第几月',`quarter` STRING COMMENT '第几季度',`year` STRING COMMENT '年',`is_workday` STRING COMMENT '是否是工作日',`holiday_id` STRING COMMENT '节假日'

) COMMENT '时间维度表'ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t'LOCATION '/warehouse/edu/tmp/tmp_dim_date_info/';insert overwrite table dim_date

select *

from tmp_dim_date_info;insert overwrite table dim_date

select date_id,week_id,week_day,day,month,quarter,year,is_workday,holiday_id

from tmp_dim_date_info;select * from dim_date;P045

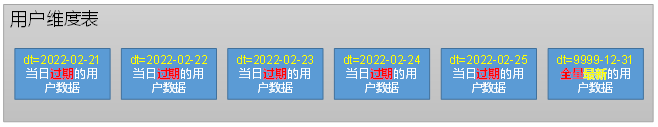

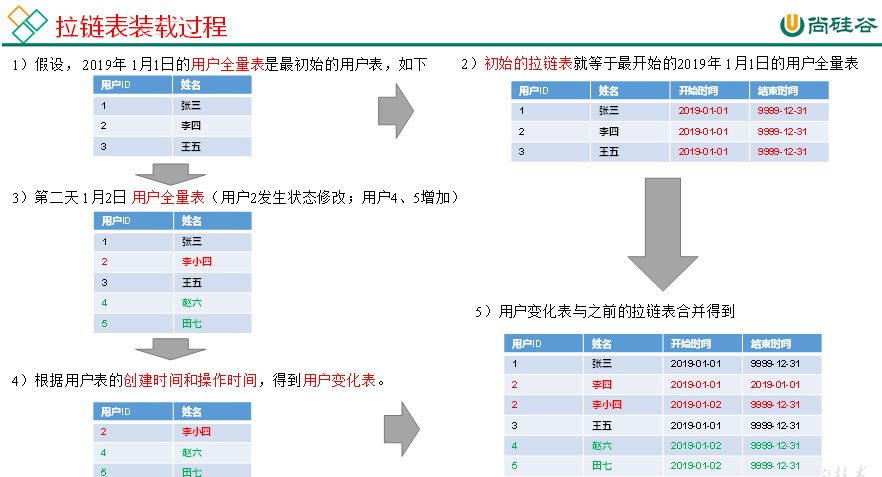

8.9 用户维度表(拉链表)

--8.9 用户维度表(拉链表)

DROP TABLE IF EXISTS dim_user_zip;

CREATE EXTERNAL TABLE dim_user_zip

(`id` STRING COMMENT '编号',`login_name` STRING COMMENT '用户名称',`nick_name` STRING COMMENT '用户昵称',`real_name` STRING COMMENT '用户姓名',`phone_num` STRING COMMENT '手机号',`email` STRING COMMENT '邮箱',`user_level` STRING COMMENT '用户级别',`birthday` STRING COMMENT '用户生日',`gender` STRING COMMENT '性别 M男,F女',`create_time` STRING COMMENT '创建时间',`operate_time` STRING COMMENT '修改时间',`status` STRING COMMENT '状态',`start_date` STRING COMMENT '开始日期',`end_date` STRING COMMENT '结束日期'

) COMMENT '用户表'PARTITIONED BY (`dt` STRING)STORED AS ORCLOCATION '/warehouse/edu/dim/dim_user_zip/'TBLPROPERTIES ('orc.compress' = 'snappy');P046

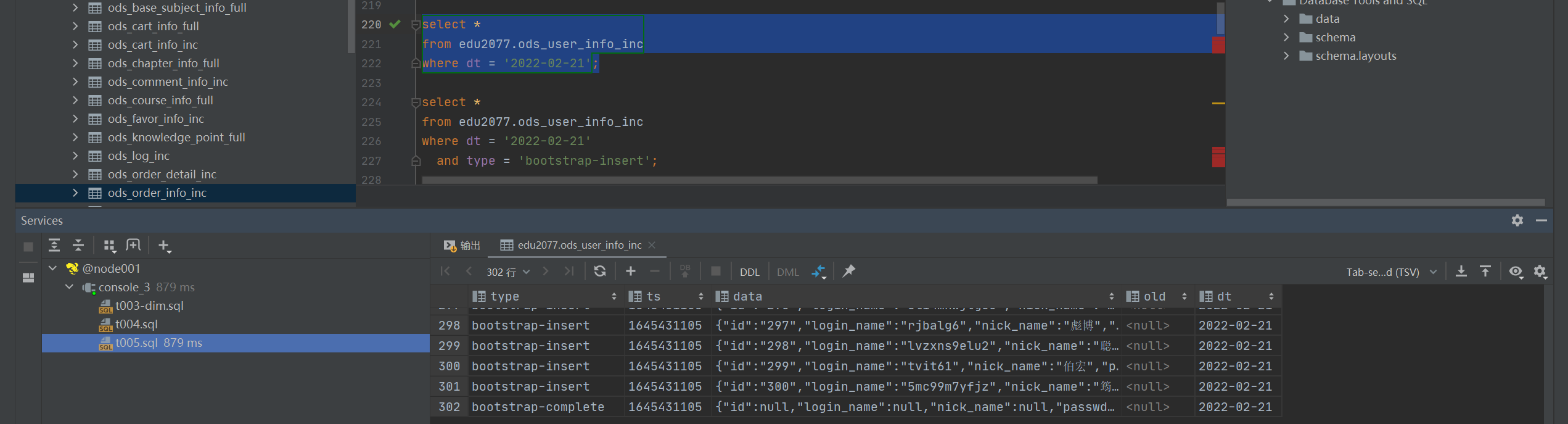

8.9 用户维度表(拉链表)

(3)首日装载

inc等增量表没有数据:尚硅谷在线教育系统项目,ods_user_info_inc这个增量表没有数据,课件上也没有相关操作,有空帮我看看?应该是执行hdfs_to_ods_db.sh这个数据装载脚本的时候,增量表数据没有被添加到hdfs里面。

启动Maxwell后,再执行hdfs_to_ods_db.sh脚本就行了。

{"id":"3","login_name":"tws1uxb5r","nick_name":"进林","passwd":null,"real_name":"贺进林","phone_num":"13443888468","email":"tws1uxb5r@aol.com","head_img":null,"user_level":"1","birthday":"1987-06-16","gender":null,"create_time":"2022-02-16 00:00:00","operate_time":null,"status":null}

{"id":"3","login_name":"tws1uxb5r","nick_name":"进林","passwd":null,"real_name":"贺进林","phone_num":"13443888468","email":"tws1uxb5r@aol.com","head_img":null,"user_level":"1","birthday":"1987-06-16","gender":null,"create_time":"2022-02-16 00:00:00","operate_time":null,"status":null

}--8.9 用户维度表(拉链表)

DROP TABLE IF EXISTS dim_user_zip;

CREATE EXTERNAL TABLE dim_user_zip

(`id` STRING COMMENT '编号',`login_name` STRING COMMENT '用户名称',`nick_name` STRING COMMENT '用户昵称',`real_name` STRING COMMENT '用户姓名',`phone_num` STRING COMMENT '手机号',`email` STRING COMMENT '邮箱',`user_level` STRING COMMENT '用户级别',`birthday` STRING COMMENT '用户生日',`gender` STRING COMMENT '性别 M男,F女',`create_time` STRING COMMENT '创建时间',`operate_time` STRING COMMENT '修改时间',`status` STRING COMMENT '状态',`start_date` STRING COMMENT '开始日期',`end_date` STRING COMMENT '结束日期'

) COMMENT '用户表'PARTITIONED BY (`dt` STRING)STORED AS ORCLOCATION '/warehouse/edu/dim/dim_user_zip/'TBLPROPERTIES ('orc.compress' = 'snappy');select * from edu2077.ods_user_info_inc

where dt = '2022-02-21';select * from edu2077.ods_user_info_inc

where dt = '2022-02-21'and type = 'bootstrap-insert';select data.id,data.login_name,data.nick_name,data.passwd,data.real_name,data.phone_num,data.email,data.head_img,data.user_level,data.birthday,data.gender,data.create_time,data.operate_time,data.status

from edu2077.ods_user_info_inc

where dt = '2022-02-21'and type = 'bootstrap-insert';insert overwrite table edu2077.dim_user_zip partition (dt = '9999-12-31')

select data.id,data.login_name,data.nick_name,md5(data.real_name),md5(if(data.phone_num regexp '^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',data.phone_num, null)),md5(if(data.email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\.[a-zA-Z0-9_-]+)+$', data.email, null)),data.user_level,data.birthday,data.gender,data.create_time,data.operate_time,data.status,'2022-02-21' start_date,'9999-12-31' end_date

from edu2077.ods_user_info_inc

where dt = '2022-02-21'and type = 'bootstrap-insert';select * from dim_user_zip;P047

8.9 用户维度表(拉链表)

(4)每日装载

--8.9 用户维度表(拉链表)

--(4)每日装载

select *

from edu2077.ods_user_info_inc

where dt = '2022-02-21';select *

from edu2077.ods_user_info_inc

where dt = '2022-02-22';select *

from dim_user_zip

where dt = '9999-12-31';select data.id,data.login_name,data.nick_name,md5(data.real_name),md5(if(data.phone_num regexp '^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',data.phone_num, null)),md5(if(data.email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\.[a-zA-Z0-9_-]+)+$', data.email, null)),data.user_level,data.birthday,data.gender,data.create_time,data.operate_time,data.status,'2022-02-21' start_date,'9999-12-31' end_date

from edu2077.ods_user_info_inc

where dt = '2022-02-22';select id,login_name,nick_name,real_name,phone_num,email,user_level,birthday,gender,create_time,operate_time,status,start_date

from dim_user_zip

where dt = '9999-12-31'

union

select data.id,data.login_name,data.nick_name,md5(data.real_name),md5(if(data.phone_num regexp '^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',data.phone_num, null)),md5(if(data.email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\.[a-zA-Z0-9_-]+)+$', data.email, null)),data.user_level,data.birthday,data.gender,data.create_time,data.operate_time,data.status,'2022-02-21' start_date,'9999-12-31' end_datefrom edu2077.ods_user_info_inc

where dt = '2022-02-22';set hive.exec.dynamic.partition.mode=nonstrict;--关闭严格模式

insert overwrite table edu2077.dim_user_zip partition (dt)

select id,login_name,nick_name,real_name,phone_num,email,user_level,birthday,gender,create_time,operate_time,status,start_date,if(rn = 1, '9999-12-31', date_sub('2022-02-22', 1)) end_date,if(rn = 1, '9999-12-31', date_sub('2022-02-22', 1)) dt

from (select id,login_name,nick_name,real_name,phone_num,email,user_level,birthday,gender,create_time,operate_time,status,start_date,end_date,row_number() over (partition by id order by start_date desc) rnfrom (select id,login_name,nick_name,real_name,phone_num,email,user_level,birthday,gender,create_time,operate_time,status,start_date,end_datefrom edu2077.dim_user_zipwhere dt = '9999-12-31'unionselect id,login_name,nick_name,real_name,phone_num,email,user_level,birthday,gender,create_time,operate_time,status,'2020-02-22' start_date,'9999-12-31' end_datefrom (select data.id,data.login_name,data.nick_name,md5(data.real_name) real_name,md5(if(data.phone_num regexp'^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',data.phone_num, null)) phone_num,md5(if(data.email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\.[a-zA-Z0-9_-]+)+$',data.email, null)) email,data.user_level,data.birthday,data.gender,data.create_time,data.operate_time,data.status,row_number() over (partition by data.id order by ts desc) rnfrom edu2077.ods_user_info_incwhere dt = '2022-02-22') t1where rn = 1) t2) t3;P048

8.10 数据装载脚本

8.10.1 首日装载脚本

8.10.2 每日装载脚本

#vim ods_to_dim_init.sh#!/bin/bash

if [ -n "$2" ] ;thendo_date=$2

elseecho "请传入日期参数"exit

fiAPP=edudim_chapter_full="

insert overwrite table ${APP}.dim_chapter_fullpartition (dt = '$do_date')

select id,chapter_name,course_id,video_id,publisher_id,is_free,create_time,update_time

from ${APP}.ods_chapter_info_full

where deleted = '0'and dt = '$do_date';"dim_course_full="

with a as(select id, category_namefrom ${APP}.ods_base_category_info_fullwhere deleted = '0'and dt = '$do_date'),b as(select id, subject_name, category_idfrom ${APP}.ods_base_subject_info_fullwhere deleted = '0'and dt = '$do_date'),c as(select id,course_name,subject_id,teacher,publisher_id,chapter_num,origin_price,reduce_amount,actual_price,create_time,update_timefrom ${APP}.ods_course_info_fullwhere deleted = '0'and dt = '$do_date'),d as(select course_id,collect_set(named_struct('chapter_id', id, 'chapter_name', chapter_name, 'video_id', video_id, 'is_free', is_free)) chaptersfrom ${APP}.ods_chapter_info_fullwhere deleted = '0'and dt = '$do_date'group by course_id)

insert overwrite table ${APP}.dim_course_full

partition(dt = '$do_date')

select c.id,course_name,subject_id,subject_name,category_id,category_name,teacher,publisher_id,chapter_num,origin_price,reduce_amount,actual_price,create_time,update_time,chapters

from cleft join bon c.subject_id = b.idleft join aon b.category_id = a.idleft join don c.id = d.course_id;"dim_video_full="

insert overwrite table ${APP}.dim_video_full partition (dt = '$do_date')

select vt.id,video_name,during_sec,video_status,video_size,version_id,chapter_id,chapter_name,is_free,course_id,publisher_id,create_time,update_time

from (select id,video_name,during_sec,video_status,video_size,version_id,chapter_id,course_id,publisher_id,create_time,update_timefrom ${APP}.ods_video_info_fullwhere dt = '$do_date' and deleted = '0') vtjoin(select id,chapter_name,is_freefrom ${APP}.ods_chapter_info_fullwhere dt = '$do_date') chton vt.chapter_id = cht.id;"dim_paper_full="

insert overwrite table ${APP}.dim_paper_full partition (dt = '$do_date')

select t1.id,paper_title,course_id,create_time,update_time,publisher_id,questions

from ${APP}.ods_test_paper_full t1left join(select paper_id,collect_set(named_struct('question_id', question_id, 'score', score)) questionsfrom ${APP}.ods_test_paper_question_fullwhere deleted = '0' and dt = '$do_date'group by paper_id) t2on t1.id = t2.paper_id

where t1.deleted = '0' and t1.dt = '$do_date';"dim_source_full="

insert overwrite table ${APP}.dim_source_full partition (dt = '$do_date')

select id,source_site

from ${APP}.ods_base_source_full obsf

where dt = '$do_date';"dim_question_full="

insert overwrite table ${APP}.dim_question_fullpartition (dt = '$do_date')

select id,chapter_id,course_id,question_type,create_time,update_time,publisher_id

from ${APP}.ods_test_question_info_full

where deleted = '0'and dt = '$do_date';"dim_province_full="

insert overwrite table ${APP}.dim_province_full partition (dt = '$do_date')

select id,name,region_id,area_code,iso_code,iso_3166_2

from ${APP}.ods_base_province_full

where dt = '$do_date';"dim_user_zip="

insert overwrite table ${APP}.dim_user_zippartition (dt = '9999-12-31')

select data.id,data.login_name,data.nick_name,md5(data.real_name),md5(if(data.phone_num regexp '^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',data.phone_num,null)),md5(if(data.email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\.[a-zA-Z0-9_-]+)+$',data.email,null)),data.user_level,data.birthday,data.gender,data.create_time,data.operate_time,data.status,'$do_date' start_date,'9999-12-31' end_date

from ${APP}.ods_user_info_inc

where dt = '$do_date'and type = 'bootstrap-insert';"case $1 indim_chapter_full|dim_course_full|dim_video_full|dim_paper_full|dim_source_full|dim_question_full|dim_province_full|dim_user_zip)eval "hive -e \"\$$1\"";;"all" )hive -e "${dim_chapter_full}${dim_course_full}${dim_video_full}${dim_paper_full}${dim_source_full}${dim_question_full}${dim_province_full}${dim_user_zip}";;

esac#vim ods_to_dim.sh#!/bin/bash

if [ -n "$2" ] ;thendo_date=$2

elsedo_date=`date -d "-1 day" +%F`

fiAPP=edudim_chapter_full="

insert overwrite table ${APP}.dim_chapter_fullpartition (dt = '$do_date')

select id,chapter_name,course_id,video_id,publisher_id,is_free,create_time,update_time

from ${APP}.ods_chapter_info_full

where deleted = '0'and dt = '$do_date';"dim_course_full="

with a as(select id, category_namefrom ${APP}.ods_base_category_info_fullwhere deleted = '0'and dt = '$do_date'),b as(select id, subject_name, category_idfrom ${APP}.ods_base_subject_info_fullwhere deleted = '0'and dt = '$do_date'),c as(select id,course_name,subject_id,teacher,publisher_id,chapter_num,origin_price,reduce_amount,actual_price,create_time,update_timefrom ${APP}.ods_course_info_fullwhere deleted = '0'and dt = '$do_date'),d as(select course_id,collect_set(named_struct('chapter_id', id, 'chapter_name', chapter_name, 'video_id', video_id, 'is_free', is_free)) chaptersfrom ${APP}.ods_chapter_info_fullwhere deleted = '0'and dt = '$do_date'group by course_id)

insert overwrite table ${APP}.dim_course_full

partition(dt = '$do_date')

select c.id,course_name,subject_id,subject_name,category_id,category_name,teacher,publisher_id,chapter_num,origin_price,reduce_amount,actual_price,create_time,update_time,chapters

from cleft join bon c.subject_id = b.idleft join aon b.category_id = a.idleft join don c.id = d.course_id;"dim_video_full="

insert overwrite table ${APP}.dim_video_full partition (dt = '$do_date')

select vt.id,video_name,during_sec,video_status,video_size,version_id,chapter_id,chapter_name,is_free,course_id,publisher_id,create_time,update_time

from (select id,video_name,during_sec,video_status,video_size,version_id,chapter_id,course_id,publisher_id,create_time,update_timefrom ${APP}.ods_video_info_fullwhere dt = '$do_date' and deleted = '0') vtjoin(select id,chapter_name,is_freefrom ${APP}.ods_chapter_info_fullwhere dt = '$do_date') chton vt.chapter_id = cht.id;"dim_paper_full="

insert overwrite table ${APP}.dim_paper_full partition (dt = '$do_date')

select t1.id,paper_title,course_id,create_time,update_time,publisher_id,questions

from ${APP}.ods_test_paper_full t1left join(select paper_id,collect_set(named_struct('question_id', question_id, 'score', score)) questionsfrom ${APP}.ods_test_paper_question_fullwhere deleted = '0' and dt = '$do_date'group by paper_id) t2on t1.id = t2.paper_id

where t1.deleted = '0' and t1.dt = '$do_date';"dim_source_full="

insert overwrite table ${APP}.dim_source_full partition (dt = '$do_date')

select id,source_site

from ${APP}.ods_base_source_full obsf

where dt = '$do_date';"dim_question_full="

insert overwrite table ${APP}.dim_question_fullpartition (dt = '$do_date')

select id,chapter_id,course_id,question_type,create_time,update_time,publisher_id

from ${APP}.ods_test_question_info_full

where deleted = '0'and dt = '$do_date';"dim_province_full="

insert overwrite table ${APP}.dim_province_full partition (dt = '$do_date')

select id,name,region_id,area_code,iso_code,iso_3166_2

from ${APP}.ods_base_province_full

where dt = '$do_date';"dim_user_zip="

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table ${APP}.dim_user_zip

partition(dt)

selectid,login_name,nick_name,real_name,phone_num,email,user_level,birthday,gender,create_time,operate_time,status,start_date,if(rn=1,'9999-12-31',date_sub('$do_date',1)) end_date,if(rn=1,'9999-12-31',date_sub('$do_date',1)) dt

from

(selectid,login_name,nick_name,real_name,phone_num,email,user_level,birthday,gender,create_time,operate_time,status,start_date,end_date,row_number() over (partition by id order by start_date desc) rnfrom(selectid,login_name,nick_name,real_name,phone_num,email,user_level,birthday,gender,create_time,operate_time,status,start_date,end_datefrom ${APP}.dim_user_zipwhere dt='9999-12-31'unionselectid,login_name,nick_name,real_name,phone_num,email,user_level,birthday,gender,create_time,operate_time,status,'2020-02-22' start_date,'9999-12-31' end_datefrom(selectdata.id,data.login_name,data.nick_name,md5(data.real_name) real_name,md5(if(data.phone_num regexp '^(13[0-9]|14[01456879]|15[0-35-9]|16[2567]|17[0-8]|18[0-9]|19[0-35-9])\\d{8}$',data.phone_num,null)) phone_num,md5(if(data.email regexp '^[a-zA-Z0-9_-]+@[a-zA-Z0-9_-]+(\\.[a-zA-Z0-9_-]+)+$',data.email,null)) email,data.user_level,data.birthday,data.gender,data.create_time,data.operate_time,data.status,row_number() over (partition by data.id order by ts desc) rnfrom ${APP}.ods_user_info_incwhere dt='$do_date')t1where rn=1)t2

)t3;"case $1 indim_chapter_full|dim_course_full|dim_video_full|dim_paper_full|dim_source_full|dim_question_full|dim_province_full|dim_user_zip)eval "hive -e \"\$$1\"";;"all" )hive -e "${dim_chapter_full}${dim_course_full}${dim_video_full}${dim_paper_full}${dim_source_full}${dim_question_full}${dim_province_full}${dim_user_zip}";;

esac相关文章:

尚硅谷大数据项目《在线教育之离线数仓》笔记003

视频地址:尚硅谷大数据项目《在线教育之离线数仓》_哔哩哔哩_bilibili 目录 第8章 数仓开发之DIM层 P039 P040 P041 P042 P043 P044 P045 P046 P047 P048 第8章 数仓开发之DIM层 P039 第8章 数仓开发之DIM层 DIM层设计要点: (1&a…...

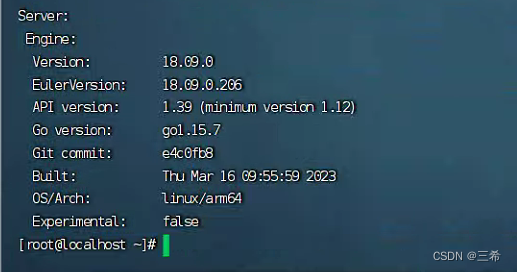

银河麒麟arm版服务器安装docker

安装 在线安装:输入下面命令,等待安装完成即可 #关闭防火墙 systemctl stop firewalld.service systemctl disable firewalld.serviceyum install -y docker# 修改docker拉取源为国内 rm -rf /etc/docker mkdir -p /etc/docker touch /etc/docker/da…...

三个视角解读ChatGPT在教学创新中的应用

第一,我们正处于一个学生使用ChatGPT等AI工具完成作业的时代,传统的教育方法需要适应变化。 教育工作者不应该因为学生利用了先进技术而惩罚他们,相反,应该专注于让学生去挑战超越AI能力范围的任务。这需要我们重新思考教育策略和…...

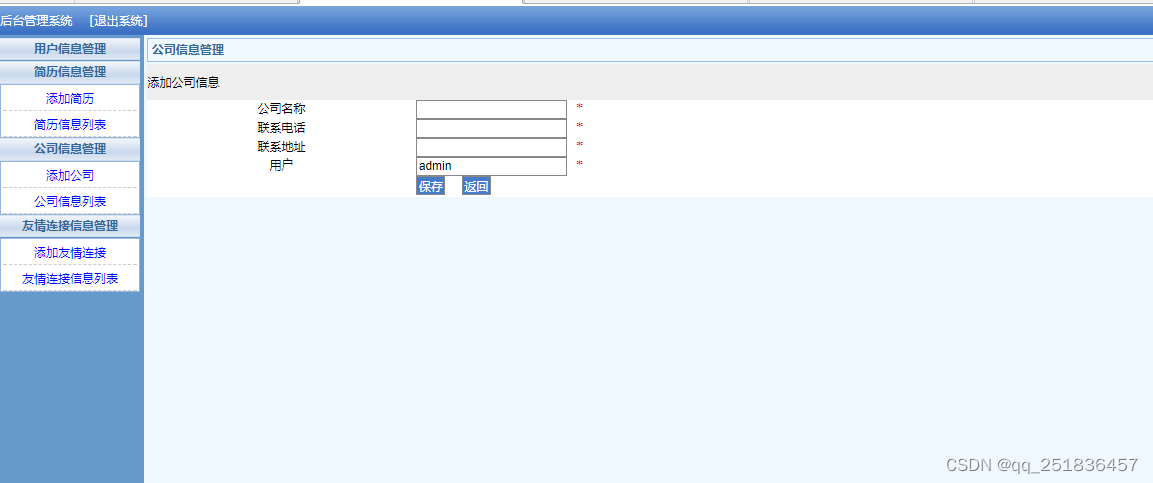

PHP求职招聘系统Dreamweaver开发mysql数据库web结构php编程计算机网页

一、源码特点 PHP 求职招聘系统是一套完善的web设计系统,对理解php编程开发语言有帮助,系统具有完整的源代码和数据库,系统主要采用B/S模式开发。 源码 https://download.csdn.net/download/qq_41221322/88240283 论文 https://down…...

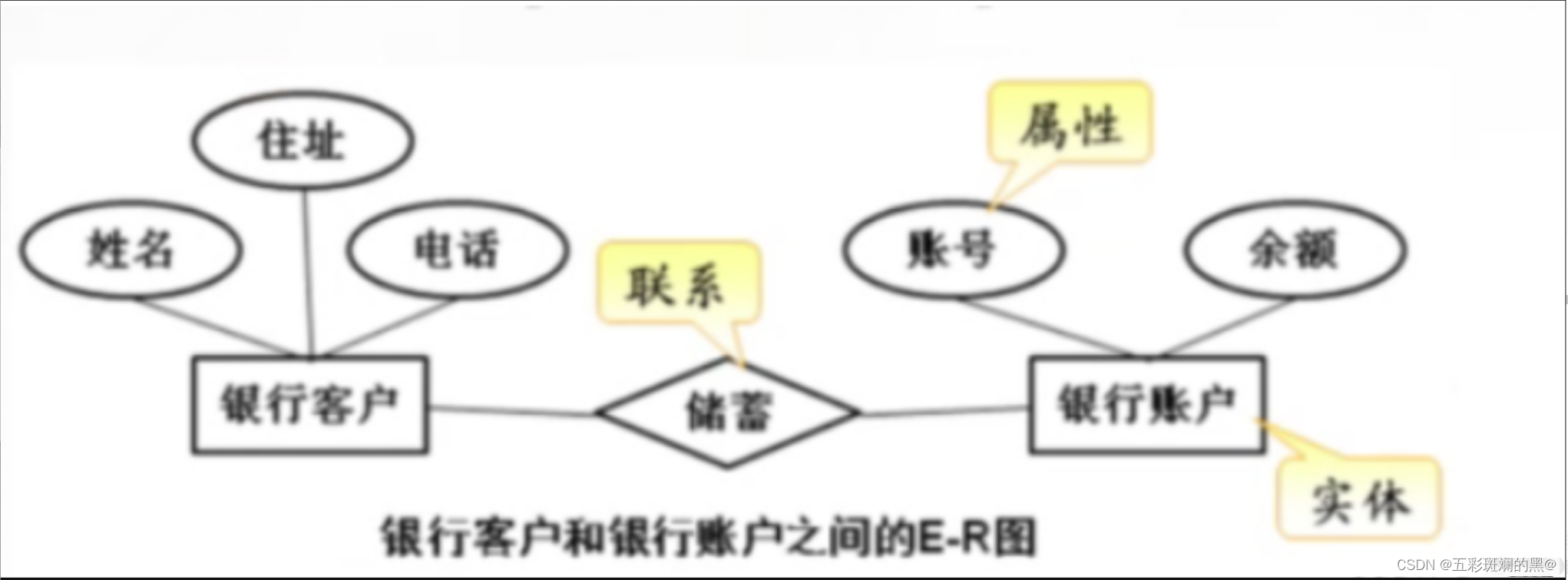

Mysql 基本概念

数据库的组成 数据 数据是描述事务的符号记录;包括数字、文字、图形、图像、声音、档案记录等 以“记录“形式按统一的格式进行存储 表 将不同的记录以行和列的方式组合成了表 用来存储具体数据 数据库 它就是所有不同属性表的集合 以一定的组织方式存储的…...

python开发--文件敏感信息识别

0x00 背景 文档中敏感信息识别。不限于word, pdf 等文件格式中的敏感信息及其中的图片敏感信息识别。 0x01 识别原理 以word文档为例 .docx文件有很多种结构,这些结构在python-docx中用3种不同的类型来表示:最高一层是Document对象表示文档࿰…...

【力扣】746. 使用最小花费爬楼梯 <动态规划>

【力扣】746. 使用最小花费爬楼梯 给你一个整数数组 cost ,其中 cost[i] 是从楼梯第 i 个台阶向上爬需要支付的费用。一旦你支付此费用,即可选择向上爬一个或者两个台阶。你可以选择从下标为 0 或下标为 1 的台阶开始爬楼梯。 请你计算并返回达到楼梯顶…...

)

sftp命令 添加端口(亲测)

要在sftp命令中指定端口,请使用以下语法: sftp -oPort<port_number> <username><host> 其中,<port_number>是你要连接的SFTP服务器的端口号,<username>是登录SFTP服务器所使用的用户名࿰…...

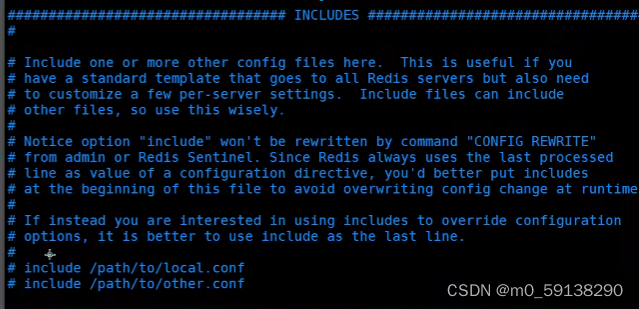

Redis.conf详解

Redis.conf详解 配置文件unit单位对大小写不敏感 包含 网络 bind 127.0.0.1 # 绑定的ip protected-mode yes # 保护模式 port 6379 # 端口设置通用 GENERAL daemonize yes # 以守护进程的方式运行 默认为no pidfile /var/run/redis_6379.pid #如果以后台的方式运行ÿ…...

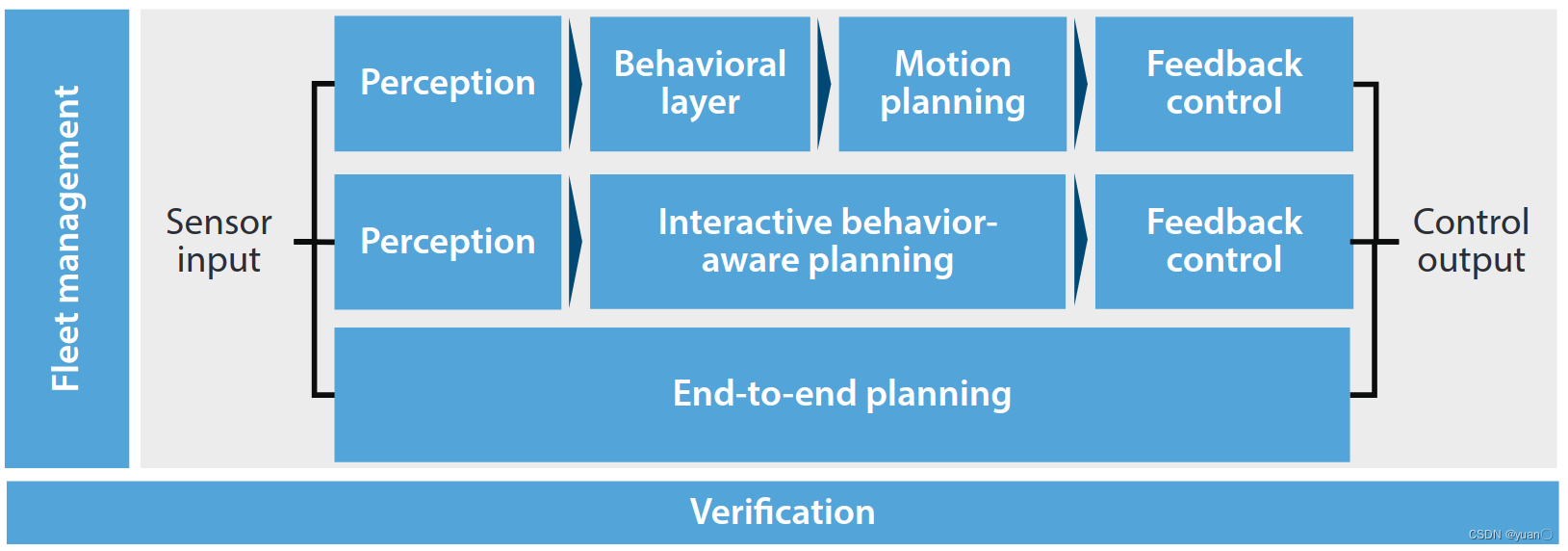

【论文笔记】Planning and Decision-Making for Autonomous Vehicles

文章目录 Summary1. INTRODUCTION2. MOTION PLANNING AND CONTROL2.1. Vehicle Dynamics and Control2.2. Parallel Autonomy2.3. Motion Planning for Autonomous Vehicles 3. INTEGRATED PERCEPTION AND PLANNING3.1. From Classical Perception to Current Challenges in Ne…...

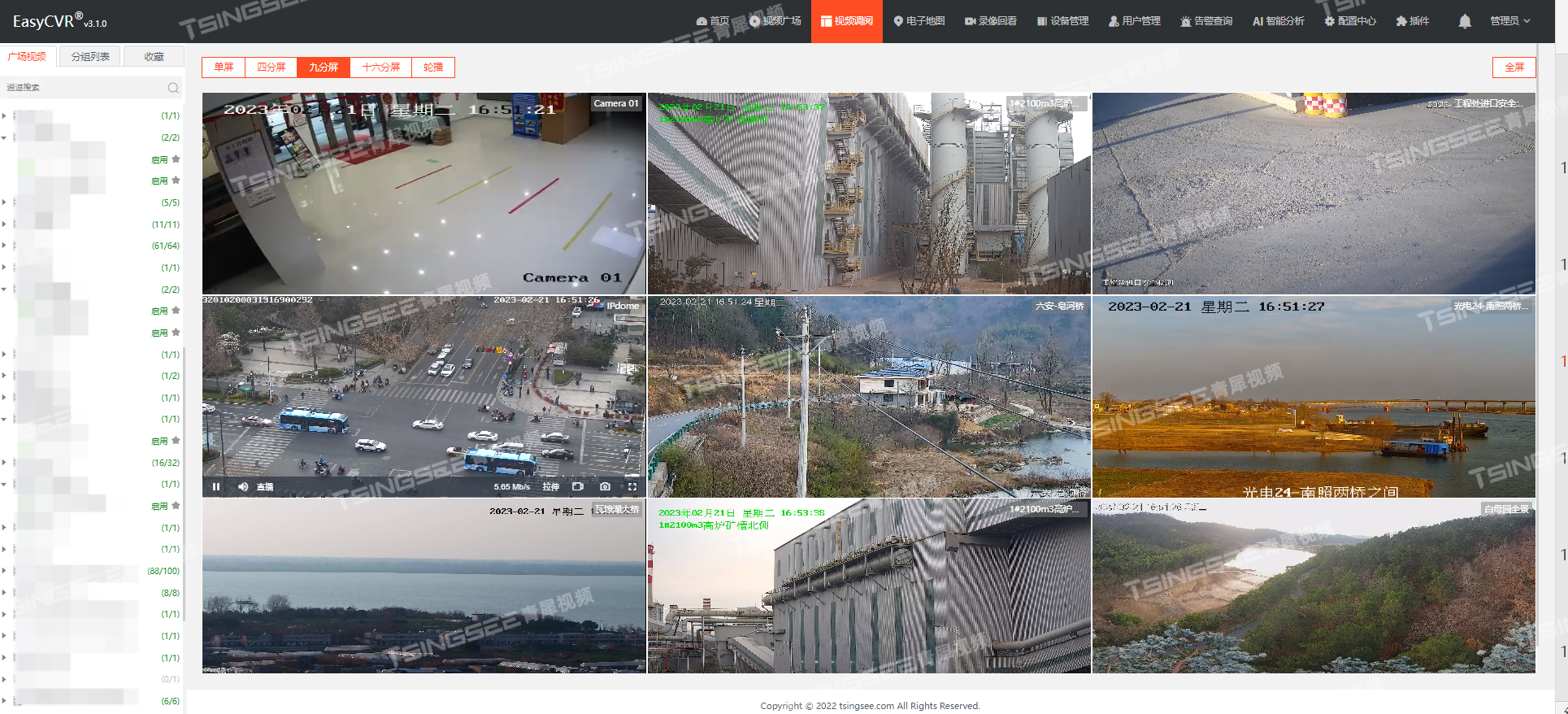

视频云存储/安防监控EasyCVR视频汇聚平台接入GB国标设备时,无法显示通道信息该如何解决?

安防视频监控/视频集中存储/云存储/磁盘阵列EasyCVR平台可拓展性强、视频能力灵活、部署轻快,可支持的主流标准协议有国标GB28181、RTSP/Onvif、RTMP等,以及支持厂家私有协议与SDK接入,包括海康Ehome、海大宇等设备的SDK等。平台既具备传统安…...

git中,add到暂存区,commit且push之后,暂存区域里还有内容吗

在Git中,使用git add命令将更改添加到暂存区后,提交这些更改并将其推送(push)到远程仓库后,暂存区中的内容会被清空。 具体的流程如下: 使用git add命令将更改添加到暂存区。这样,暂存区将保存…...

java中用SXSSFWorkbook把多个字段的list数据和单个实体dto导出到excel如何导出到多个sheet页详细实例?

要使用SXSSFWorkbook将多个字段的List数据和单个实体DTO导出到多个Sheet页,你可以按照以下步骤进行操作: 创建一个SXSSFWorkbook对象作为工作簿。针对每个字段的List数据,创建一个新的Sheet页,并将数据写入该Sheet页。创建一个新…...

ES基础操作

1.创建索引 在 Postman 中,向 ES 服务器发 PUT 请求 : http://127.0.0.1:9200/shopping 后台日志 重复发送 PUT 请求添加索引 : http://127.0.0.1:9200/shopping ,会返回错误信息 : 2.获取单个索引相关信息 在 Postman 中&#…...

PCIE超高速实时运动控制卡在六面外观视觉检测上的应用

市场应用背景 XPCIE1028超高速实时运动控制卡在六面外观检测高速视觉筛选中的应用,结合正运动技术提供的专用筛选机调试软件,可实现15000pcs/分钟的IO触发检测速度,只需简单参数设置,搭配图像采集硬件和视觉处理软件,…...

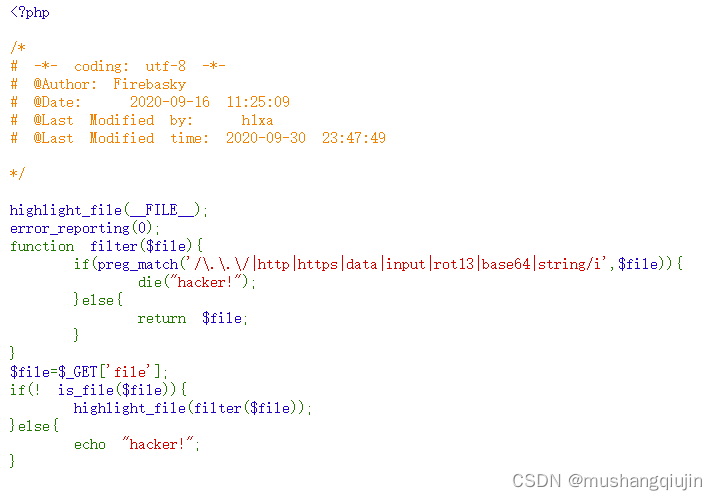

ctfshow web入门 php特性 web108-web112

1.web108 strrev() 反转字符串 <?php echo strrev("Hello world!"); // 输出 "!dlrow olleH" ?> ereg 存在空字符截断(只会匹配%00前面的字符),这个函数匹配到为true,没有匹配到为false,877为0x36d的十进制数值 payload: …...

数据可视化是什么?有什么工具?

一、什么是数据可视化? 数据可视化是一种通过图表、图形、地图和其他视觉元素将数据呈现给用户的方式。它是将复杂的数据转化为易于理解和解释的视觉形式的过程。数据可视化旨在帮助用户发现数据中的模式、趋势和关联,并从中获得洞察力。 数据可视化的…...

PC端版面设计之尾部设计

1、莫拉王子,底部就放了一个返回顶部 2 麻辣王子就放了一个认证--放了产地和得到的奖 3 阿芙:就是精油 4阿芙放的是品牌故事 5 这里可以做微博粉丝群体 6 基本返回底部是一个标配,点一下就可以反悔了 7 加一下旺旺店铺 8 BetyBoop的底部 9 底部 10 返回底…...

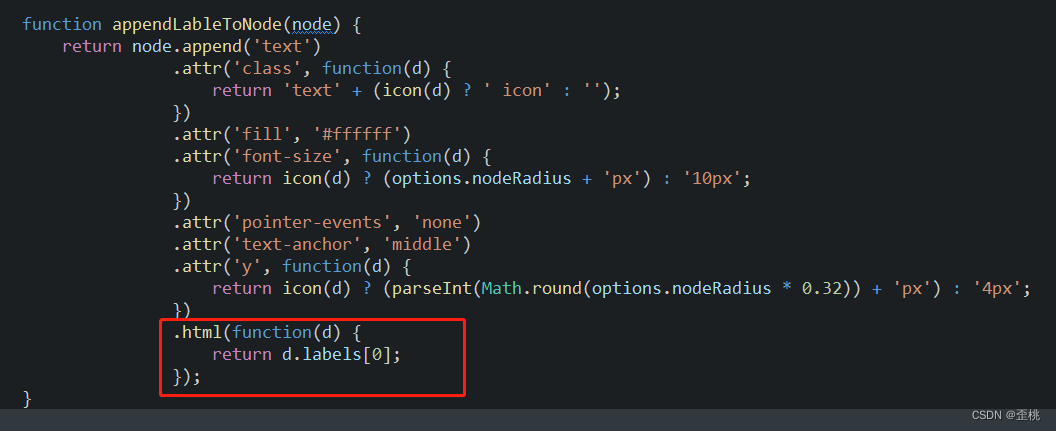

neo4jd3拓扑节点显示为节点标签(自定义节点显示)

需求描述:如下图所示,我的拓扑图中有需要不同类型的标签节点,我希望每个节点中显示的是节点的标签 在官方示例中,我们可以看到,节点里面是可以显示图标的,现在我们想将下面的图标换成我们自定义的内容 那…...

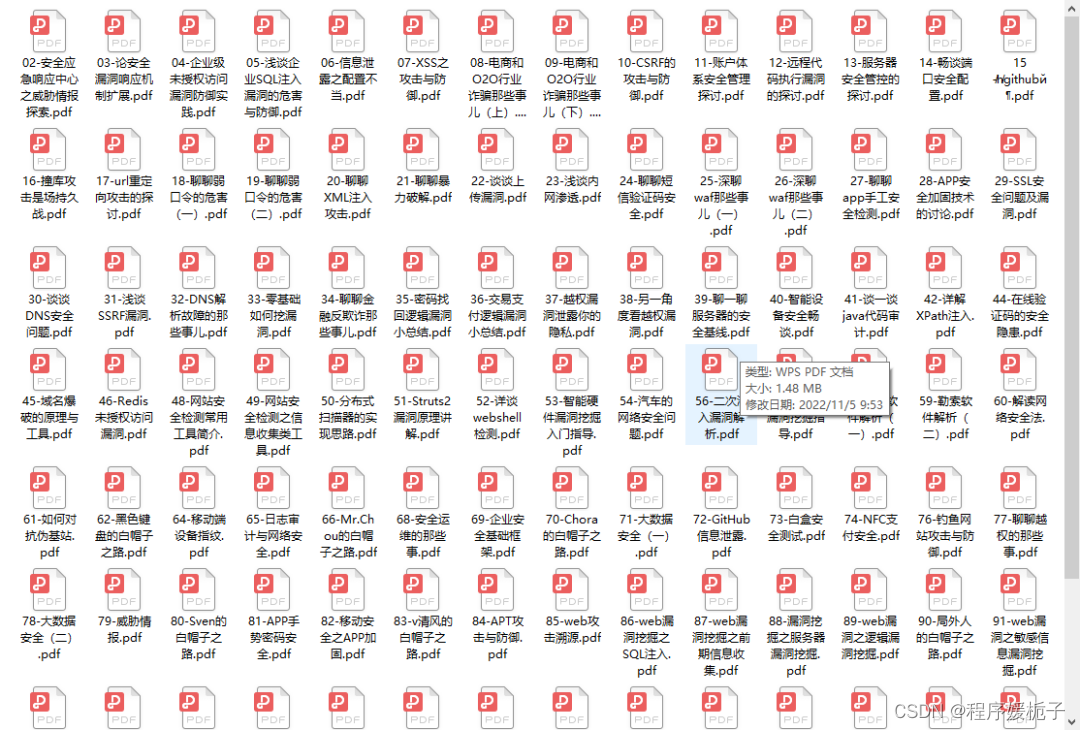

网络安全(黑客)了解学习路线

谈起黑客,可能各位都会想到:盗号,其实不尽然;黑客是一群喜爱研究技术的群体,在黑客圈中,一般分为三大圈:娱乐圈 技术圈 职业圈。 娱乐圈:主要是初中生和高中生较多,玩网恋…...

使用VSCode开发Django指南

使用VSCode开发Django指南 一、概述 Django 是一个高级 Python 框架,专为快速、安全和可扩展的 Web 开发而设计。Django 包含对 URL 路由、页面模板和数据处理的丰富支持。 本文将创建一个简单的 Django 应用,其中包含三个使用通用基本模板的页面。在此…...

Golang 面试经典题:map 的 key 可以是什么类型?哪些不可以?

Golang 面试经典题:map 的 key 可以是什么类型?哪些不可以? 在 Golang 的面试中,map 类型的使用是一个常见的考点,其中对 key 类型的合法性 是一道常被提及的基础却很容易被忽视的问题。本文将带你深入理解 Golang 中…...

python/java环境配置

环境变量放一起 python: 1.首先下载Python Python下载地址:Download Python | Python.org downloads ---windows -- 64 2.安装Python 下面两个,然后自定义,全选 可以把前4个选上 3.环境配置 1)搜高级系统设置 2…...

基础光照(Basic Lighting))

C++.OpenGL (10/64)基础光照(Basic Lighting)

基础光照(Basic Lighting) 冯氏光照模型(Phong Lighting Model) #mermaid-svg-GLdskXwWINxNGHso {font-family:"trebuchet ms",verdana,arial,sans-serif;font-size:16px;fill:#333;}#mermaid-svg-GLdskXwWINxNGHso .error-icon{fill:#552222;}#mermaid-svg-GLd…...

【python异步多线程】异步多线程爬虫代码示例

claude生成的python多线程、异步代码示例,模拟20个网页的爬取,每个网页假设要0.5-2秒完成。 代码 Python多线程爬虫教程 核心概念 多线程:允许程序同时执行多个任务,提高IO密集型任务(如网络请求)的效率…...

第 86 场周赛:矩阵中的幻方、钥匙和房间、将数组拆分成斐波那契序列、猜猜这个单词

Q1、[中等] 矩阵中的幻方 1、题目描述 3 x 3 的幻方是一个填充有 从 1 到 9 的不同数字的 3 x 3 矩阵,其中每行,每列以及两条对角线上的各数之和都相等。 给定一个由整数组成的row x col 的 grid,其中有多少个 3 3 的 “幻方” 子矩阵&am…...

Mobile ALOHA全身模仿学习

一、题目 Mobile ALOHA:通过低成本全身远程操作学习双手移动操作 传统模仿学习(Imitation Learning)缺点:聚焦与桌面操作,缺乏通用任务所需的移动性和灵活性 本论文优点:(1)在ALOHA…...

python报错No module named ‘tensorflow.keras‘

是由于不同版本的tensorflow下的keras所在的路径不同,结合所安装的tensorflow的目录结构修改from语句即可。 原语句: from tensorflow.keras.layers import Conv1D, MaxPooling1D, LSTM, Dense 修改后: from tensorflow.python.keras.lay…...

【Java学习笔记】BigInteger 和 BigDecimal 类

BigInteger 和 BigDecimal 类 二者共有的常见方法 方法功能add加subtract减multiply乘divide除 注意点:传参类型必须是类对象 一、BigInteger 1. 作用:适合保存比较大的整型数 2. 使用说明 创建BigInteger对象 传入字符串 3. 代码示例 import j…...

LangChain知识库管理后端接口:数据库操作详解—— 构建本地知识库系统的基础《二》

这段 Python 代码是一个完整的 知识库数据库操作模块,用于对本地知识库系统中的知识库进行增删改查(CRUD)操作。它基于 SQLAlchemy ORM 框架 和一个自定义的装饰器 with_session 实现数据库会话管理。 📘 一、整体功能概述 该模块…...