The pyi File Generation Mechanism in PyTorch

- Preface

- pyi files

- Generating pyi.in from py

- Generating pyi from pyi.in

- torch/CMakeLists.txt

- tools/pyi/gen_pyi.py

- gen_pyi

- native_functions

- rand.names & rand.names_out

- rand.generator_with_names & rand.generator_with_names_out

- rand

- rand.generator

- rand.out

- rand.generator_out

- add.Tensor && add.out

- add_.Tensor && add.out

- add.out

- function_signatures

- rand.names & rand.names_out

- rand.generator_with_names & rand.generator_with_names_out

- rand

- rand.generator

- rand.out

- rand.generator_out

- add.Tensor && add.out

- add_.Tensor && add.out

- add.out

- sig_groups

- rand.generator_with_names & rand.generator_with_names_out

- rand.generator & rand.generator_out

- rand.names & rand.names_out

- rand & rand.out

- add.Tensor & add.out

- add & add.Tensor & add.out

- unsorted_function_hints

- rand

- add

- function_hints

- rand

- add

- hinted_function_names

- all_symbols

- all_directive

- env

- gen_nn_functional

- datapipe.pyi

- Generated results

- Type checking with pyi

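Preface

When you look up the definition of a Python function in PyTorch, nine times out of ten you land in torch/_C/_VariableFunctions.pyi. Yet if you search PyTorch's GitHub repo for that file, you only find the similarly named torch/_C/_VariableFunctions.pyi.in; torch/_C/_VariableFunctions.pyi itself is nowhere to be found.

Opening torch/_C/_VariableFunctions.pyi shows: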

# @generated from torch/_C/_VariableFunctions.pyi.in

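The very first line says it: the file is generated dynamically at build time from torch/_C/_VariableFunctions.pyi.in.

This post explores how pyi files are generated in PyTorch. The process can be roughly divided into two steps:

- Generating pyi.in from py

- Generating pyi from pyi.in

Before that, let's look at what role pyi files play in Python.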

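pyi files

First, where does the name of the pyi file type come from? According to What does “i” represent in Python .pyi extension?:

The i in .pyi stands for ‘interface’. The .pyi extension was first mentioned in this GitHub issue thread where JukkaL says: I'd probably prefer an extension with just a single dot. It also needs to be something that is not in use (it should not be used by cython, etc.). .pys seems to be used in Windows (or was). Maybe .pyi, where i stands for an interface definition?

So the i in pyi stands for interface.

pyi implements "stub" file (definition from Martin Fowler). Stubs: provide canned answers to calls made during the test, usually not responding at all to anything outside what's programmed in for the test.

What a pyi file denotes is a stub; see the Wikipedia entry 樁 (計算機) (method stub):

A stub (Stub / Method Stub) is a piece of code that stands in for some other piece of functionality. A stub can simulate the behavior of existing code (for example a procedure on a remote machine) or act as a temporary substitute for code that has yet to be written. Stubbing is therefore very useful in porting, distributed computing, and general software development and testing.

As the article pyi文件是干嘛的?(一文读懂Python的存根文件和类型检查) (on Python stub files and type checking) puts it, a pyi file only supplies type hints to IDEs and type checkers; it is not required for the program to run.

The same holds in PyTorch: torch/_C/_VariableFunctions.pyi is used only for type hints. The binding between Python functions and C++ functions is actually specified by torch/csrc/autograd/generated/python_torch_functions_i.cpp, which is likewise generated automatically at build time; see the companion post PyTorch中的python_torch_functions_i.cpp檔案生成機制 (on how python_torch_functions_i.cpp is generated).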
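To make the idea of a stub concrete, here is a minimal sketch. The stub text below is hypothetical (an imaginary module, not taken from PyTorch); the helper only uses the standard `ast` module to show that a stub carries names and annotations while every body is just `...`.

```python
import ast

# Hypothetical stub text for an imaginary module; not taken from PyTorch.
STUB_SOURCE = '''
def area(width: float, height: float) -> float: ...
def describe(name: str, sides: int = ...) -> str: ...
'''


def list_stub_functions(stub_source):
    """Map each top-level function name to (argument names, body is `...`)."""
    functions = {}
    for node in ast.parse(stub_source).body:
        if isinstance(node, ast.FunctionDef):
            only_stmt = node.body[0]
            # A stub body is conventionally a single `...` expression.
            body_is_ellipsis = (
                len(node.body) == 1
                and isinstance(only_stmt, ast.Expr)
                and isinstance(only_stmt.value, ast.Constant)
                and only_stmt.value.value is Ellipsis
            )
            arg_names = [arg.arg for arg in node.args.args]
            functions[node.name] = (arg_names, body_is_ellipsis)
    return functions
```

Calling `list_stub_functions(STUB_SOURCE)` reports both functions with their argument names and confirms that neither has a real implementation, which is all a type checker or IDE needs.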

Generating pyi.in from py

The PyTorch source tree contains the following .pyi.in files:

torch/_C/__init__.pyi.in

torch/_C/_nn.pyi.in

torch/_C/return_types.pyi.in

torch/_C/_VariableFunctions.pyi.in

torch/nn/functional.pyi.in

torch/utils/data/datapipes/datapipe.pyi.in

According to the comment in torch/nn/functional.pyi.in:

# These stubs were generated by running stubgen (`stubgen --parse-only functional.py`), followed by manual cleaning.

functional.pyi.in was generated from functional.py with mypy's stubgen tool and then edited by hand.

Let's try running stubgen on torch/nn/functional.py ourselves. First copy functional.py to a convenient location, then run:

stubgen functional.py

If import-related errors like the following show up, just comment out the offending lines by hand:

Critical error during semantic analysis: functional.py:23: error: No parent module -- cannot perform relative import

functional.py:24: error: No parent module -- cannot perform relative import

For now, focus only on the following snippet:

def fractional_max_pool2d_with_indices(
    input: Tensor, kernel_size: BroadcastingList2[int],
    output_size: Optional[BroadcastingList2[int]] = None,
    output_ratio: Optional[BroadcastingList2[float]] = None,
    return_indices: bool = False,
    _random_samples: Optional[Tensor] = None
) -> Tuple[Tensor, Tensor]:
    # ...

fractional_max_pool2d = boolean_dispatch(
    arg_name="return_indices",
    arg_index=4,
    default=False,
    if_true=fractional_max_pool2d_with_indices,
    if_false=_fractional_max_pool2d,
    module_name=__name__,
    func_name="fractional_max_pool2d",
)

The corresponding content in the generated functional.pyi:

# ...

def fractional_max_pool2d_with_indices(input: Tensor, kernel_size: BroadcastingList2[int], output_size: Optional[BroadcastingList2[int]] = ..., output_ratio: Optional[BroadcastingList2[float]] = ..., return_indices: bool = ..., _random_samples: Optional[Tensor] = ...) -> Tuple[Tensor, Tensor]: ...

fractional_max_pool2d: Incomplete

# ...

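The signature of fractional_max_pool2d_with_indices is almost identical to the original, with default values replaced by `...`. fractional_max_pool2d, on the other hand, is the result of a call to boolean_dispatch, so its type cannot be inferred and it is annotated as Incomplete.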
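The difference between the two outcomes can be mimicked with a toy that walks the syntax tree only. This is a sketch of the observable behavior, not how mypy's stubgen is implemented, and the source text and names (`with_indices`, `pooled`) are made up for illustration: a `def` keeps its name, while a name bound to the result of a call has no statically visible type and gets `Incomplete`.

```python
import ast

# Hypothetical source text that imitates the boolean_dispatch pattern.
SOURCE = '''
def with_indices(x: int, flag: bool = False) -> tuple:
    return (x, x)

def boolean_dispatch(**kwargs):
    return kwargs["if_true"]

pooled = boolean_dispatch(if_true=with_indices)
'''


def toy_stub_lines(source):
    """Emit one stub-like line per top-level definition, without running the code."""
    lines = []
    for node in ast.parse(source).body:
        if isinstance(node, ast.FunctionDef):
            # A function definition is visible in the syntax tree.
            lines.append(f"def {node.name}(...): ...")
        elif isinstance(node, ast.Assign) and isinstance(node.value, ast.Call):
            # The value comes from a call; parsing alone cannot tell its type.
            for target in node.targets:
                if isinstance(target, ast.Name):
                    lines.append(f"{target.id}: Incomplete")
    return lines
```

This is why the hand-editing step mentioned in the functional.pyi.in comment is needed: someone has to supply the signatures that a purely syntactic pass cannot recover.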

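In principle a .pyi.in file is generated from a .py file, but none of the .pyi.in files under torch/_C has a corresponding .py file. One guess is that several .py files were merged into a single .pyi.in file; note, however, that the gen_pyi.py comment quoted later describes __init__.pyi.in as hand-written.

Generating pyi from pyi.in

Normally .pyi files are produced directly by stubgen. In PyTorch, stubgen output is first edited by hand into .pyi.in files, and a Python script then generates the .pyi files from those .pyi.in files.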
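The second step is essentially template filling. Below is a minimal sketch of that idea using the standard library's `string.Template` as a stand-in; the template text, the `render_stub` helper and the example hints are assumptions made for illustration, and the real script uses its own machinery rather than this code.

```python
from string import Template

# Hypothetical miniature of a .pyi.in file: fixed text plus ${...} placeholders.
PYI_IN = Template('''# @generated from example.pyi.in
from torch import Tensor

${function_hints}

__all__ = ${all_directive}
''')


def render_stub(function_hints, exported_names):
    """Fill the placeholders with generated hints and an __all__ list."""
    return PYI_IN.substitute(
        function_hints="\n".join(function_hints),
        all_directive=repr(sorted(exported_names)),
    )
```

For example, `render_stub(["def rand(size: int) -> Tensor: ..."], {"rand"})` returns the text of a complete stub in which the hand-written parts are untouched and only the placeholders have been replaced.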

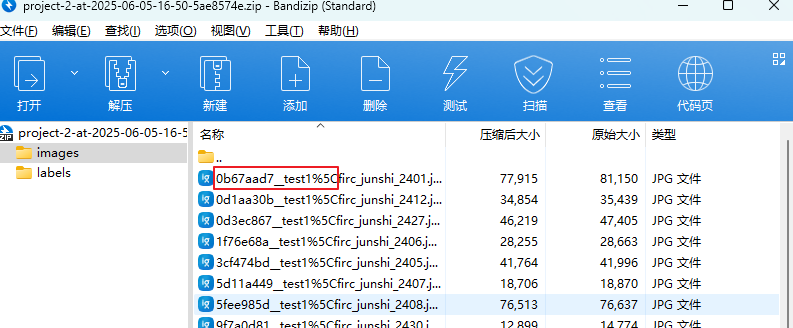

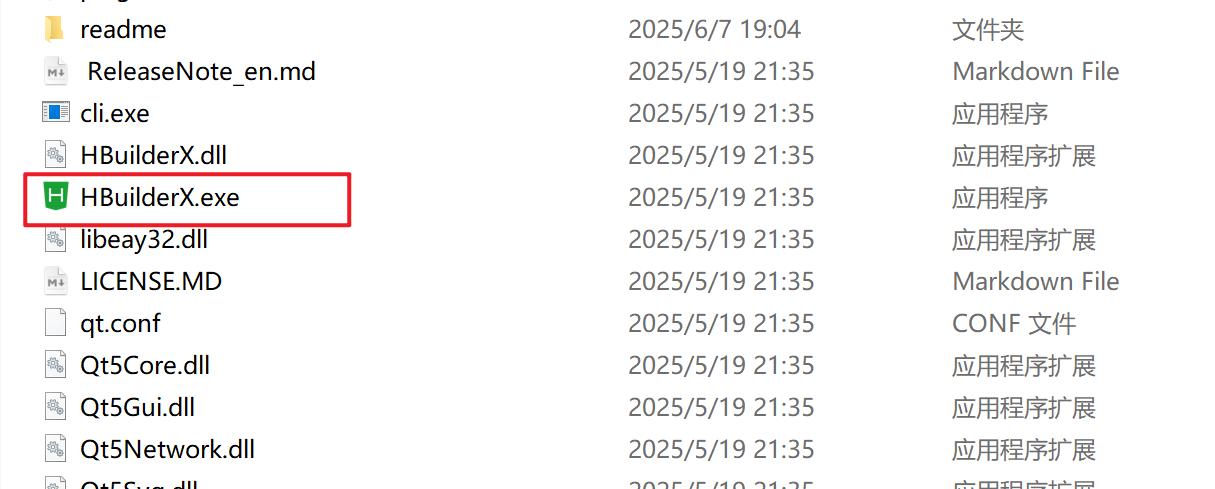

torch/CMakeLists.txt

torch/CMakeLists.txt

It adds a custom target named torch_python_stubs that depends on the pyi files below. (For add_custom_target, and the add_custom_command we will see next, see the post cmake的add_custom_command及add_custom_target.)

add_custom_target(torch_python_stubs DEPENDS
    "${TORCH_SRC_DIR}/_C/__init__.pyi"
    "${TORCH_SRC_DIR}/_C/_VariableFunctions.pyi"
    "${TORCH_SRC_DIR}/nn/functional.pyi"
    "${TORCH_SRC_DIR}/utils/data/datapipes/datapipe.pyi"
)

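Looking at the OUTPUT argument of the add_custom_command below, we can tell that this custom command generates the first three pyi files that torch_python_stubs depends on. For how the remaining datapipe.pyi is generated, see the datapipe.pyi section.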

file(GLOB_RECURSE torchgen_python "${PROJECT_SOURCE_DIR}/torchgen/*.py")

file(GLOB_RECURSE autograd_python "${TOOLS_PATH}/autograd/*.py")

file(GLOB_RECURSE pyi_python "${TOOLS_PATH}/pyi/*.py")

add_custom_command(
    OUTPUT
    "${TORCH_SRC_DIR}/_C/__init__.pyi"
    "${TORCH_SRC_DIR}/_C/_VariableFunctions.pyi"
    "${TORCH_SRC_DIR}/nn/functional.pyi"
    COMMAND
    "${PYTHON_EXECUTABLE}" -mtools.pyi.gen_pyi
      --native-functions-path "aten/src/ATen/native/native_functions.yaml"
      --tags-path "aten/src/ATen/native/tags.yaml"
      --deprecated-functions-path "tools/autograd/deprecated.yaml"
    DEPENDS
      "${TORCH_SRC_DIR}/_C/__init__.pyi.in"
      "${TORCH_SRC_DIR}/_C/_VariableFunctions.pyi.in"
      "${TORCH_SRC_DIR}/nn/functional.pyi.in"
      "${TORCH_ROOT}/aten/src/ATen/native/native_functions.yaml"
      "${TORCH_ROOT}/aten/src/ATen/native/tags.yaml"
      "${TORCH_ROOT}/tools/autograd/deprecated.yaml"
      ${pyi_python}
      ${autograd_python}
      ${torchgen_python}
    WORKING_DIRECTORY
    "${TORCH_ROOT}"
)

The entry point of this block is the COMMAND in add_custom_command, which invokes tools/pyi/gen_pyi.py through "${PYTHON_EXECUTABLE}" -mtools.pyi.gen_pyi. The inputs are the _C/__init__.pyi.in, _C/_VariableFunctions.pyi.in and nn/functional.pyi.in files listed in the DEPENDS block, and once the program finishes it has produced the three pyi files listed in the OUTPUT block.
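For readers who want to reproduce this step by hand, the COMMAND above can be written out as an argument list. This is a sketch: the helper name `build_gen_pyi_command` is made up, the flags and paths are copied from the CMake snippet, and the command only makes sense when run from the root of a PyTorch source checkout (the WORKING_DIRECTORY above).

```python
import sys


def build_gen_pyi_command(python_executable=sys.executable):
    """Return the argv list equivalent to the COMMAND in add_custom_command."""
    return [
        python_executable, "-m", "tools.pyi.gen_pyi",
        "--native-functions-path", "aten/src/ATen/native/native_functions.yaml",
        "--tags-path", "aten/src/ATen/native/tags.yaml",
        "--deprecated-functions-path", "tools/autograd/deprecated.yaml",
    ]
```

The list can be passed to `subprocess.run(cmd, cwd=<PyTorch source root>, check=True)`; the relative paths resolve against that working directory, which is why CMake sets WORKING_DIRECTORY to "${TORCH_ROOT}".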

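torch/_C/_nn.pyi and torch/_C/return_types.pyi are also generated by tools/pyi/gen_pyi.py, so why are they not listed in the DEPENDS of add_custom_target or the OUTPUT of add_custom_command? This remains an open question.

Next, a shared library named torch_python is added; building it produces build/lib/libtorch_python.so.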

add_library(torch_python SHARED ${TORCH_PYTHON_SRCS})

torch_python is then declared to depend on the torch_python_stubs custom target.

add_dependencies(torch_python torch_python_stubs)

On every system except macOS, a library named nnapi_backend is built, and torch_python is among its dependencies.

# Skip building this library under MacOS, since it is currently failing to build on Mac

# Github issue #61930

if(NOT ${CMAKE_SYSTEM_NAME} MATCHES "Darwin")
  # Add Android Nnapi delegate library
  add_library(nnapi_backend SHARED
          ${TORCH_SRC_DIR}/csrc/jit/backends/nnapi/nnapi_backend_lib.cpp
          ${TORCH_SRC_DIR}/csrc/jit/backends/nnapi/nnapi_backend_preprocess.cpp
          )
  # Pybind11 requires explicit linking of the torch_python library
  target_link_libraries(nnapi_backend PRIVATE torch torch_python pybind::pybind11)
endif()

To summarize, there is a chain of dependencies nnapi_backend -> torch_python -> torch_python_stubs -> torch/_C/__init__.pyi, torch/_C/_VariableFunctions.pyi, torch/nn/functional.pyi. Building torch_python, or anything that depends on it such as nnapi_backend, therefore causes tools/pyi/gen_pyi.py to be invoked to generate the .pyi files.
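The chain can be modelled as a small graph to see in which order things must be produced. This is only an illustration of the dependency idea, not of how CMake schedules work; the graph is simplified to the edges named in the summary (for instance, nnapi_backend's link against torch is left out), and `build_order` is a made-up helper.

```python
# Simplified dependency graph taken from the summary above.
DEPENDS_ON = {
    "nnapi_backend": ["torch_python"],
    "torch_python": ["torch_python_stubs"],
    "torch_python_stubs": [
        "torch/_C/__init__.pyi",
        "torch/_C/_VariableFunctions.pyi",
        "torch/nn/functional.pyi",
    ],
}


def build_order(target, graph):
    """Return the nodes needed for `target`, dependencies first (post-order DFS)."""
    order = []
    seen = set()

    def visit(node):
        if node in seen:
            return
        seen.add(node)
        for dependency in graph.get(node, []):
            visit(dependency)
        order.append(node)

    visit(target)
    return order
```

`build_order("torch_python", DEPENDS_ON)` already lists the three .pyi files before torch_python_stubs and torch_python, which shows that the stubs are needed as soon as torch_python is built, with or without nnapi_backend.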

tools/pyi/gen_pyi.py

CMakeLists.txt invokes tools/pyi/gen_pyi.py through "${PYTHON_EXECUTABLE}" -mtools.pyi.gen_pyi. Its job is to generate .pyi files from .pyi.in files.

def main() -> None:
    parser = argparse.ArgumentParser(description="Generate type stubs for PyTorch")
    parser.add_argument(
        "--native-functions-path",
        metavar="NATIVE",
        default="aten/src/ATen/native/native_functions.yaml",
        help="path to native_functions.yaml",
    )
    parser.add_argument(
        "--tags-path",
        metavar="TAGS",
        default="aten/src/ATen/native/tags.yaml",
        help="path to tags.yaml",
    )
    parser.add_argument(
        "--deprecated-functions-path",
        metavar="DEPRECATED",
        default="tools/autograd/deprecated.yaml",
        help="path to deprecated.yaml",
    )
    parser.add_argument(
        "--out", metavar="OUT", default=".", help="path to output directory"
    )
    args = parser.parse_args()
    fm = FileManager(install_dir=args.out, template_dir=".", dry_run=False)
    gen_pyi(
        args.native_functions_path, args.tags_path, args.deprecated_functions_path, fm
    )


if __name__ == "__main__":
    main()

The comment in gen_pyi.py reads:

- We start off with a hand-written __init__.pyi.in file. This file contains type definitions for everything we cannot automatically generate, including pure Python definitions directly in __init__.py (the latter case should be pretty rare).
- We go through automatically bound functions based on the type information recorded in native_functions.yaml and generate type hints for them (generate_type_hints)

native_functions.yaml records the type information of the automatically bound functions (presumably the bindings between Python and C++ functions). gen_pyi.py uses that information to generate type hints with the generate_type_hints function, which will appear shortly in the unsorted_function_hints section.

gen_pyi

tools/pyi/gen_pyi.py

This function generates _C/__init__.pyi, _C/_VariableFunctions.pyi, torch/_VF.pyi and torch/return_types.pyi from _C/__init__.pyi.in, _C/_VariableFunctions.pyi.in and torch/_C/return_types.pyi.in.

def gen_pyi(
    native_yaml_path: str,
    tags_yaml_path: str,
    deprecated_yaml_path: str,
    fm: FileManager,
) -> None:
    """gen_pyi()

    This function generates a pyi file for torch.
    """
    # ...

The first three parameters default to:

native_yaml_path: aten/src/ATen/native/native_functions.yaml
tags_yaml_path: aten/src/ATen/native/tags.yaml
deprecated_yaml_path: tools/autograd/deprecated.yaml

The two arguments passed to the fm constructor are:

install_dir: args.out, which is '.'
template_dir: '.'

native_functions

Parsing native_functions.yaml and tags.yaml yields the native_functions variable:

native_functions = parse_native_yaml(native_yaml_path, tags_yaml_path).native_functions
native_functions = list(filter(should_generate_py_binding, native_functions))

native_functions is a list of NativeFunction objects representing functions in the aten namespace. Its zeroth element is:

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='_cast_Byte', inplace=False, dunder_method=False, functional_overload=False), overload_name=''), arguments=Arguments(pre_self_positional=(), self_arg=SelfArgument(argument=Argument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None)), post_self_positional=(Argument(name='non_blocking', type=BaseType(name=<BaseTy.bool: 9>), default='False', annotation=None),), pre_tensor_options_kwarg_only=(), tensor_options=None, post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=9), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=False, has_composite_implicit_autograd_kernel=True, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags=set())

The elements for the rand function follow. aten::rand has six overload names: names, generator_with_names, the empty string, generator, out and generator_out. They can be cross-referenced with native_functions.yaml:

rand.names & rand.names_out

- func: rand.names(SymInt[] size, *, Dimname[]? names, ScalarType? dtype=None, Layout? layout=None, Device? device=None, bool? pin_memory=None) -> Tensor
  device_check: NoCheck
  device_guard: False
  dispatch:
    CompositeExplicitAutograd: rand
  autogen: rand.names_out
  tags: nondeterministic_seeded

The autogen field of the yaml entry contains rand.names_out. Comparing with the element in native_functions, the autogen member of the NativeFunction likewise holds an OperatorName whose overload_name is names_out.

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='names'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), size=None)), default=None, annotation=None),), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=False, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4254), autogen=[OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='names_out')], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False,has_composite_implicit_autograd_nested_tensor_kernel=False, 
has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'})

rand.generator_with_names & rand.generator_with_names_out

- func: rand.generator_with_names(SymInt[] size, *, Generator? generator, Dimname[]? names, ScalarType? dtype=None, Layout? layout=None, Device? device=None, bool? pin_memory=None) -> Tensor
  device_check: NoCheck
  device_guard: False
  tags: nondeterministic_seeded
  dispatch:
    CompositeExplicitAutograd: rand
  autogen: rand.generator_with_names_out

The autogen field of the yaml entry contains rand.generator_with_names_out. Comparing with the dump below, the autogen member of the NativeFunction likewise holds an OperatorName whose overload_name is generator_with_names_out.

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='generator_with_names'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, annotation=None), Argument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), size=None)), default=None, annotation=None)), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=False, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4262), autogen=[OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='generator_with_names_out')], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, 
cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'})

rand

- func: rand(SymInt[] size, *, ScalarType? dtype=None, Layout? layout=None, Device? device=None, bool? pin_memory=None) -> Tensor
  tags: nondeterministic_seeded
  dispatch:
    CompositeExplicitAutograd: rand

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name=''), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4270), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'})

rand.generator

- func: rand.generator(SymInt[] size, *, Generator? generator, ScalarType? dtype=None, Layout? layout=None, Device? device=None, bool? pin_memory=None) -> Tensor
  tags: nondeterministic_seeded
  dispatch:
    CompositeExplicitAutograd: rand

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='generator'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, annotation=None),), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4275), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'})

rand.out

- func: rand.out(SymInt[] size, *, Tensor(a!) out) -> Tensor(a!)
  tags: nondeterministic_seeded
  dispatch:
    CompositeExplicitAutograd: rand_out

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='out'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(), tensor_options=None, post_tensor_options_kwarg_only=(), out=(Argument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4280), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'})

rand.generator_out

- func: rand.generator_out(SymInt[] size, *, Generator? generator, Tensor(a!) out) -> Tensor(a!)
  tags: nondeterministic_seeded

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='generator_out'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, annotation=None),), tensor_options=None, post_tensor_options_kwarg_only=(), out=(Argument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4285), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=False, has_composite_implicit_autograd_kernel=True, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'})

Because rand.names and rand.generator_with_names each generate a corresponding out variant, the six rand-related entries in native_functions.yaml end up producing eight functions in the C++ aten namespace.
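The count of eight can be checked with a few lines. The list below is a hand-copied summary of the six yaml entries shown above (only the `func` name and the `autogen` field), and `expand_autogen` is a made-up helper, not part of torchgen.

```python
# Hand-copied summary of the six rand entries: operator name plus autogen list.
RAND_ENTRIES = [
    {"func": "rand.names", "autogen": ["rand.names_out"]},
    {"func": "rand.generator_with_names", "autogen": ["rand.generator_with_names_out"]},
    {"func": "rand", "autogen": []},
    {"func": "rand.generator", "autogen": []},
    {"func": "rand.out", "autogen": []},
    {"func": "rand.generator_out", "autogen": []},
]


def expand_autogen(entries):
    """List every operator name: each entry itself plus whatever it autogenerates."""
    names = []
    for entry in entries:
        names.append(entry["func"])
        names.extend(entry["autogen"])
    return names
```

`len(expand_autogen(RAND_ENTRIES))` is 8: six written entries plus the two autogenerated out variants.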

add.Tensor && add.out

The add function that takes self and other and returns the result.

- func: add.Tensor(Tensor self, Tensor other, *, Scalar alpha=1) -> Tensor
  device_check: NoCheck   # TensorIterator
  structured_delegate: add.out
  variants: function, method
  dispatch:
    SparseCPU, SparseCUDA: add_sparse
    SparseCsrCPU, SparseCsrCUDA: add_sparse_csr
    MkldnnCPU: mkldnn_add
    ZeroTensor: add_zerotensor
    NestedTensorCPU, NestedTensorCUDA: NestedTensor_add_Tensor
  tags: [canonical, pointwise]

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='add', inplace=False, dunder_method=False, functional_overload=False), overload_name='Tensor'), arguments=Arguments(pre_self_positional=(), self_arg=SelfArgument(argument=Argument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None)), post_self_positional=(Argument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='alpha', type=BaseType(name=<BaseTy.Scalar: 12>), default='1', annotation=None),), tensor_options=None, post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.function: 1>, <Variant.method: 2>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=497), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=OperatorName(name=BaseOperatorName(base='add', inplace=False, dunder_method=False, functional_overload=False), overload_name='out'), structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags={'pointwise', 'canonical'})

add_.Tensor && add.out

The inplace version, which modifies the self argument directly.

- func: add_.Tensor(Tensor(a!) self, Tensor other, *, Scalar alpha=1) -> Tensor(a!)
  device_check: NoCheck   # TensorIterator
  variants: method
  structured_delegate: add.out
  dispatch:
    SparseCPU, SparseCUDA: add_sparse_
    SparseCsrCPU, SparseCsrCUDA: add_sparse_csr_
    MkldnnCPU: mkldnn_add_
    NestedTensorCPU, NestedTensorCUDA: NestedTensor_add__Tensor
  tags: pointwise

According to the pytorch native README.md:

Tensor(a!) - members of a may be written to thus mutating the underlying data.

The notation Tensor(a!) self means that the self argument is both an input and an output.
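To relate the notation to the `Annotation(alias_set=('a',), is_write=True, ...)` values in the dumps, here is a toy parser. It is a sketch that covers only the two simple forms `Type(a)` and `Type(a!)`; the names `ParsedAnnotation` and `parse_type` are made up, and torchgen's real parser handles more cases than this.

```python
import re
from typing import NamedTuple, Tuple


class ParsedAnnotation(NamedTuple):
    alias_set: Tuple[str, ...]  # e.g. ('a',)
    is_write: bool              # True when the annotation ends with '!'


# Matches "Tensor(a)" or "Tensor(a!)"; anything else is treated as unannotated.
_ANNOTATED = re.compile(r"^(?P<type>\w+)\((?P<alias>[a-z]+)(?P<write>!?)\)$")


def parse_type(text):
    """Split 'Tensor(a!)' into ('Tensor', ParsedAnnotation(('a',), True))."""
    match = _ANNOTATED.match(text)
    if match is None:
        return text, None
    annotation = ParsedAnnotation(
        alias_set=(match.group("alias"),),
        is_write=match.group("write") == "!",
    )
    return match.group("type"), annotation
```

With this, `parse_type("Tensor(a!)")` yields a write annotation on alias set `a`, while a plain `Tensor` yields `None`, matching the `annotation=None` seen on the other argument in the dumps.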

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='add', inplace=True, dunder_method=False, functional_overload=False), overload_name='Tensor'), arguments=Arguments(pre_self_positional=(), self_arg=SelfArgument(argument=Argument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=()))), post_self_positional=(Argument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='alpha', type=BaseType(name=<BaseTy.Scalar: 12>), default='1', annotation=None),), tensor_options=None, post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.method: 2>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=509), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=OperatorName(name=BaseOperatorName(base='add', inplace=False, dunder_method=False, functional_overload=False), overload_name='out'), structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags={'pointwise'})
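In the dump above, add_.Tensor is stored as base='add', inplace=True, overload_name='Tensor', whereas _cast_Byte earlier kept its leading underscore in base. The following sketch reproduces that split for the names seen in this post. `ParsedName` and `split_operator_name` are made-up names, and the rule "a trailing underscore means inplace" is inferred from the dumps; the sketch deliberately ignores dunder methods such as `__and__`.

```python
from typing import NamedTuple


class ParsedName(NamedTuple):
    base: str
    inplace: bool
    overload_name: str


def split_operator_name(func_schema):
    """Split the name in front of '(' into base, inplace flag and overload name."""
    full_name = func_schema.split("(", 1)[0].strip()
    base, _, overload_name = full_name.partition(".")
    # Assumption drawn from the dumps: a trailing underscore marks an inplace op.
    inplace = base.endswith("_") and not base.startswith("__")
    if inplace:
        base = base[:-1]
    return ParsedName(base, inplace, overload_name)
```

For instance, `split_operator_name("rand(SymInt[] size) -> Tensor")` gives an empty overload name, which is the "empty string" overload in the list of six rand overloads.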

add.out

The version of add that takes an output argument out.

- func: add.out(Tensor self, Tensor other, *, Scalar alpha=1, Tensor(a!) out) -> Tensor(a!)
  device_check: NoCheck   # TensorIterator
  structured: True
  structured_inherits: TensorIteratorBase
  ufunc_inner_loop:
    Generic: add (AllAndComplex, BFloat16, Half, ComplexHalf)
    ScalarOnly: add (Bool)
  dispatch:
    SparseCPU: add_out_sparse_cpu
    SparseCUDA: add_out_sparse_cuda
    SparseCsrCPU: add_out_sparse_csr_cpu
    SparseCsrCUDA: add_out_sparse_csr_cuda
    MkldnnCPU: mkldnn_add_out
    MPS: add_out_mps
  tags: pointwise

NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='add', inplace=False, dunder_method=False, functional_overload=False), overload_name='out'), arguments=Arguments(pre_self_positional=(), self_arg=SelfArgument(argument=Argument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None)), post_self_positional=(Argument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='alpha', type=BaseType(name=<BaseTy.Scalar: 12>), default='1', annotation=None),), tensor_options=None, post_tensor_options_kwarg_only=(), out=(Argument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=520), autogen=[], ufunc_inner_loop={<UfuncKey.Generic: 7>: UfuncInnerLoop(name='add', supported_dtypes=<torchgen.utils.OrderedSet object at 0x7f600cff7910>, ufunc_key=<UfuncKey.Generic: 7>), <UfuncKey.ScalarOnly: 6>: UfuncInnerLoop(name='add', supported_dtypes=<torchgen.utils.OrderedSet object at 0x7f600cff7b80>, ufunc_key=<UfuncKey.ScalarOnly: 6>)}, structured=True, structured_delegate=None, structured_inherits='TensorIteratorBase', precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, 
tags={'pointwise'})

function_signatures

function_signatures = load_signatures(native_functions, deprecated_yaml_path, method=False, pyi=True)

function_signatures is a list of PythonSignatureNativeFunctionPair objects. Its zeroth element is:

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='_cast_Byte', input_args=(PythonArgument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None), PythonArgument(name='non_blocking', type=BaseType(name=<BaseTy.bool: 9>), default='False', default_init=None)), input_kwargs=(), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), tensor_options_args=(), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='_cast_Byte', inplace=False, dunder_method=False, functional_overload=False), overload_name=''), arguments=Arguments(pre_self_positional=(), self_arg=SelfArgument(argument=Argument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None)), post_self_positional=(Argument(name='non_blocking', type=BaseType(name=<BaseTy.bool: 9>), default='False', annotation=None),), pre_tensor_options_kwarg_only=(), tensor_options=None, post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=9), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=False, has_composite_implicit_autograd_kernel=True, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags=set()))

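The elements for the rand function are listed below. There are six of them, and they correspond one-to-one with the native_functions entries shown earlier: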

rand.names & rand.names_out

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(PythonArgument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), size=None)), default=None, default_init=None),), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),

overload_name='names'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), size=None)), default=None, annotation=None),), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=False, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4254),

autogen=[OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='names_out')],

ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}))

Note that the names overload generates a names_out function, as recorded in its autogen field.

rand.generator_with_names & rand.generator_with_names_out

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(PythonArgument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, default_init=None), PythonArgument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), size=None)), default=None, default_init=None)), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),

overload_name='generator_with_names'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, annotation=None), Argument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), size=None)), default=None, annotation=None)), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=False, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4262),

autogen=[OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='generator_with_names_out')],

ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}))

Note that the generator_with_names overload generates a generator_with_names_out function, as recorded in its autogen field.

rand

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),

overload_name=''), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4270),

autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}))

rand.generator

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(PythonArgument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, default_init=None),), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),

overload_name='generator'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, annotation=None),), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4275),

autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}))

rand.out

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(), output_args=PythonOutArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default='None', default_init=None, outputs=(PythonArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None),)), returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),

overload_name='out'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(), tensor_options=None, post_tensor_options_kwarg_only=(), out=(Argument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4280),

autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}))

rand.generator_out

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(PythonArgument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, default_init=None),), output_args=PythonOutArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default='None', default_init=None, outputs=(PythonArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None),)), returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),

overload_name='generator_out'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, annotation=None),), tensor_options=None, post_tensor_options_kwarg_only=(), out=(Argument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4285),

autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=False, has_composite_implicit_autograd_kernel=True, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}))

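The elements for the add function are listed below. There are three of them, and they likewise correspond one-to-one with the native_functions entries shown earlier.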

add.Tensor && add.out

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='add', input_args=(PythonArgument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None), PythonArgument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None)), input_kwargs=(PythonArgument(name='alpha', type=BaseType(name=<BaseTy.Scalar: 12>), default='1', default_init=None),), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), tensor_options_args=(), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='add', inplace=False, dunder_method=False, functional_overload=False), overload_name='Tensor'), arguments=Arguments(pre_self_positional=(), self_arg=SelfArgument(argument=Argument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None)), post_self_positional=(Argument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='alpha', type=BaseType(name=<BaseTy.Scalar: 12>), default='1', annotation=None),), tensor_options=None, post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.function: 1>, <Variant.method: 2>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=497), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=OperatorName(name=BaseOperatorName(base='add', inplace=False, dunder_method=False, functional_overload=False), overload_name='out'), structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, 
has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags={'pointwise', 'canonical'}))

add_.Tensor && add.out

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='add_', input_args=(PythonArgument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None), PythonArgument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None)), input_kwargs=(PythonArgument(name='alpha', type=BaseType(name=<BaseTy.Scalar: 12>), default='1', default_init=None),), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), tensor_options_args=(), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='add', inplace=True, dunder_method=False, functional_overload=False), overload_name='Tensor'), arguments=Arguments(pre_self_positional=(), self_arg=SelfArgument(argument=Argument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=()))), post_self_positional=(Argument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='alpha', type=BaseType(name=<BaseTy.Scalar: 12>), default='1', annotation=None),), tensor_options=None, post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.method: 2>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=509), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=OperatorName(name=BaseOperatorName(base='add', inplace=False, dunder_method=False, 
functional_overload=False), overload_name='out'), structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags={'pointwise'}))

add.out

PythonSignatureNativeFunctionPair(signature=PythonSignature(name='add', input_args=(PythonArgument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None), PythonArgument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None)), input_kwargs=(PythonArgument(name='alpha', type=BaseType(name=<BaseTy.Scalar: 12>), default='1', default_init=None),), output_args=PythonOutArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default='None', default_init=None, outputs=(PythonArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None),)), returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), tensor_options_args=(), method=False), function=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='add', inplace=False, dunder_method=False, functional_overload=False), overload_name='out'), arguments=Arguments(pre_self_positional=(), self_arg=SelfArgument(argument=Argument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None)), post_self_positional=(Argument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='alpha', type=BaseType(name=<BaseTy.Scalar: 12>), default='1', annotation=None),), tensor_options=None, post_tensor_options_kwarg_only=(), out=(Argument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, 
variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=520), autogen=[], ufunc_inner_loop={<UfuncKey.Generic: 7>: UfuncInnerLoop(name='add', supported_dtypes=<torchgen.utils.OrderedSet object at 0x7f600cff7910>, ufunc_key=<UfuncKey.Generic: 7>), <UfuncKey.ScalarOnly: 6>: UfuncInnerLoop(name='add', supported_dtypes=<torchgen.utils.OrderedSet object at 0x7f600cff7b80>, ufunc_key=<UfuncKey.ScalarOnly: 6>)}, structured=True, structured_delegate=None, structured_inherits='TensorIteratorBase', precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags={'pointwise'}))

sig_groups

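sig_groups is a list of PythonSignatureGroup objects. A PythonSignatureGroup pairs a PythonSignature with a NativeFunction.

Compared with PythonSignatureNativeFunctionPair, PythonSignatureGroup has an additional outplace field, and its NativeFunction field is named base rather than function.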

sig_groups = get_py_torch_functions(function_signatures)

The zeroth element of sig_groups is shown below:

PythonSignatureGroup(signature=PythonSignature(name='__and__', input_args=(PythonArgument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None), PythonArgument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None)), input_kwargs=(), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), tensor_options_args=(), method=False), base=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='and', inplace=False, dunder_method=True, functional_overload=False), overload_name='Tensor'), arguments=Arguments(pre_self_positional=(), self_arg=SelfArgument(argument=Argument(name='self', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None)), post_self_positional=(Argument(name='other', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=None),), pre_tensor_options_kwarg_only=(), tensor_options=None, post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.method: 2>, <Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=7635), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=False, has_composite_implicit_autograd_kernel=True, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags=set()), outplace=None)
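To make the base/outplace pairing concrete, here is a simplified sketch of how overloads might be grouped. It is an illustration under stated assumptions, not the actual get_py_torch_functions implementation: FakePair, FakeGroup and group_pairs are hypothetical, and the grouping key (the name plus the parameter names other than out) is an assumption chosen to reproduce the pairing seen in the dumps. The sketch also does not attempt to reproduce the ordering of the real sig_groups list.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


# Hypothetical, heavily simplified stand-in for a signature/function pair.
@dataclass(frozen=True)
class FakePair:
    name: str                 # Python-visible name, e.g. "rand"
    overload_name: str        # e.g. "generator_out"
    params: Tuple[str, ...]   # parameter names, excluding `out`
    is_out: bool              # True when the overload takes an `out` tensor


# Hypothetical stand-in for PythonSignatureGroup: a base plus optional out variant.
@dataclass(frozen=True)
class FakeGroup:
    base: FakePair
    outplace: Optional[FakePair]


def group_pairs(pairs: List[FakePair]) -> List[FakeGroup]:
    """Pair each non-out overload with the out overload sharing its key, if any."""
    Key = Tuple[str, Tuple[str, ...]]
    bases: Dict[Key, FakePair] = {}
    outplaces: Dict[Key, FakePair] = {}
    for p in pairs:
        key = (p.name, p.params)  # assumed key: the signature ignoring `out`
        if p.is_out:
            outplaces[key] = p
        else:
            bases[key] = p
    # One group per base overload; outplace stays None when no out variant matched.
    return [FakeGroup(base=b, outplace=outplaces.get(k)) for k, b in bases.items()]


rand_pairs = [
    FakePair("rand", "names", ("size", "names"), False),
    FakePair("rand", "generator_with_names", ("size", "generator", "names"), False),
    FakePair("rand", "", ("size",), False),
    FakePair("rand", "generator", ("size", "generator"), False),
    FakePair("rand", "out", ("size",), True),
    FakePair("rand", "generator_out", ("size", "generator"), True),
]

groups = group_pairs(rand_pairs)
summary = {
    g.base.overload_name: (g.outplace.overload_name if g.outplace else None)
    for g in groups
}
print(summary)
```

In this sketch the six rand entries collapse into four groups. The overloads whose out variants exist only through autogen end up with outplace set to None, which is consistent with the generator_with_names dump in this section.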

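The four elements for the rand function are listed below. The original eight functions (the six entries above plus the two autogenerated out variants) are arranged into pairs according to whether they have out, giving four pairs in total.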

rand.generator_with_names & rand.generator_with_names_out

PythonSignatureGroup(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(PythonArgument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, default_init=None), PythonArgument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), size=None)), default=None, default_init=None)), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), base=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='generator_with_names'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, annotation=None), Argument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), 
size=None)), default=None, annotation=None)), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=False, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4262), autogen=[OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False), overload_name='generator_with_names_out')], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}), outplace=None)

rand.generator & rand.generator_out

PythonSignatureGroup(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(PythonArgument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, default_init=None),), output_args=PythonOutArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default='None', default_init=None, outputs=(PythonArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None),)), returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), base=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),
overload_name='generator'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, annotation=None),), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4275), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}), outplace=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),
overload_name='generator_out'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='generator', type=OptionalType(elem=BaseType(name=<BaseTy.Generator: 1>)), default=None, annotation=None),), tensor_options=None, post_tensor_options_kwarg_only=(), out=(Argument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4285), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=False, has_composite_implicit_autograd_kernel=True, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=False, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}))
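The dump above shows `rand.generator` stored as `base` and `rand.generator_out` stored as `outplace`, while the preceding `rand.generator_with_names` group has `outplace=None`. The following is a minimal sketch of that pairing idea only. `Overload`, `Group`, and `pair_overloads` are hypothetical stand-ins invented for illustration; they are not torchgen's classes, and the matching rule (same base name and same non-out argument names) is an assumption rather than the actual grouping algorithm.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Overload:
    """Hypothetical, heavily simplified stand-in for a NativeFunction."""
    base: str                 # e.g. "rand"
    overload_name: str        # e.g. "generator_out"
    args: Tuple[str, ...]     # argument names, excluding any out argument
    has_out: bool = False     # True when the schema takes an `out` tensor


@dataclass
class Group:
    """Hypothetical stand-in for a PythonSignatureGroup."""
    base: Overload
    outplace: Optional[Overload] = None


def pair_overloads(overloads: List[Overload]) -> List[Group]:
    """Pair each functional overload with the out variant sharing its arguments."""
    groups: Dict[Tuple[str, Tuple[str, ...]], Group] = {}
    # First pass: every overload without `out` starts its own group.
    for o in overloads:
        if not o.has_out:
            groups[(o.base, o.args)] = Group(base=o)
    # Second pass: attach each out variant to the group with the same key.
    for o in overloads:
        if o.has_out:
            key = (o.base, o.args)
            if key not in groups:
                raise ValueError(f"no base overload for {o.base}.{o.overload_name}")
            groups[key].outplace = o
    return list(groups.values())


# Assumed sample input: only the overloads that the sketch should consider.
overloads = [
    Overload("rand", "generator", ("size", "generator")),
    Overload("rand", "generator_out", ("size", "generator"), has_out=True),
    Overload("rand", "names", ("size", "names")),
]

for g in pair_overloads(overloads):
    out_name = g.outplace.overload_name if g.outplace else None
    print(f"{g.base.base}.{g.base.overload_name} -> outplace={out_name}")
```

In this sketch `rand.names` ends up with `outplace=None` simply because no matching out overload was passed in, which mirrors what the dumps show for the groups whose out variant appears only in the `autogen` list.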

rand.names & rand.names_out

PythonSignatureGroup(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(PythonArgument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), size=None)), default=None, default_init=None),), output_args=None, returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), base=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),
overload_name='names'), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(Argument(name='names', type=OptionalType(elem=ListType(elem=BaseType(name=<BaseTy.Dimname: 5>), size=None)), default=None, annotation=None),), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=False, device_check=<DeviceCheckType.NoCheck: 0>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4254), autogen=[OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),
overload_name='names_out')], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}), outplace=None)
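Reprs of this size are hard to scan by eye. As a reading aid, one could print only the few fields that distinguish the groups from each other. The sketch below does this on hypothetical stand-in dataclasses whose field names are copied from the dumps (`signature`, `input_args`, `input_kwargs`, `base`, `outplace`, `func.name.overload_name`). It assumes the real objects expose attributes under the same names as their repr, and `summarize` and `qualified_name` are helper names made up for this example.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


# Stand-ins that keep only the fields read by the summary below.
@dataclass(frozen=True)
class PythonArgument:
    name: str


@dataclass(frozen=True)
class PythonSignature:
    name: str
    input_args: Tuple[PythonArgument, ...]
    input_kwargs: Tuple[PythonArgument, ...]


@dataclass(frozen=True)
class BaseOperatorName:
    base: str


@dataclass(frozen=True)
class OperatorName:
    name: BaseOperatorName
    overload_name: str


@dataclass(frozen=True)
class FunctionSchema:
    name: OperatorName


@dataclass(frozen=True)
class NativeFunction:
    func: FunctionSchema


@dataclass(frozen=True)
class PythonSignatureGroup:
    signature: PythonSignature
    base: NativeFunction
    outplace: Optional[NativeFunction]


def qualified_name(fn: Optional[NativeFunction]) -> Optional[str]:
    """Return 'base.overload' (or just 'base' for the empty overload name)."""
    if fn is None:
        return None
    op = fn.func.name
    return f"{op.name.base}.{op.overload_name}" if op.overload_name else op.name.base


def summarize(group: PythonSignatureGroup) -> str:
    """Condense a group into one line: parameters, base overload, out overload."""
    sig = group.signature
    params = [a.name for a in sig.input_args]
    if sig.input_kwargs:
        # Keyword-only arguments are shown after a bare '*', as in Python syntax.
        params += ["*"] + [a.name for a in sig.input_kwargs]
    return (
        f"{sig.name}({', '.join(params)})"
        f" | base={qualified_name(group.base)}"
        f" | outplace={qualified_name(group.outplace)}"
    )


# Hand-built equivalent of the `rand.names` group shown above.
names_group = PythonSignatureGroup(
    signature=PythonSignature(
        name="rand",
        input_args=(PythonArgument("size"),),
        input_kwargs=(PythonArgument("names"),),
    ),
    base=NativeFunction(FunctionSchema(OperatorName(BaseOperatorName("rand"), "names"))),
    outplace=None,
)

print(summarize(names_group))
# rand(size, *, names) | base=rand.names | outplace=None
```

The summary deliberately omits `tensor_options_args`, since the dumps show the same five entries (`dtype`, `layout`, `device`, `pin_memory`, `requires_grad`) in every `rand` group.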

rand & rand.out

PythonSignatureGroup(signature=PythonSignature(name='rand', input_args=(PythonArgument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, default_init=None),), input_kwargs=(), output_args=PythonOutArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default='None', default_init=None, outputs=(PythonArgument(name='out', type=BaseType(name=<BaseTy.Tensor: 3>), default=None, default_init=None),)), returns=PythonReturns(returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=Annotation(alias_set=('a',), is_write=True, alias_set_after=())),)), tensor_options_args=(PythonArgument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', default_init=None), PythonArgument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', default_init=None), PythonArgument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', default_init='torch::tensors::get_default_device()'), PythonArgument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None), PythonArgument(name='requires_grad', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='False', default_init=None)), method=False), base=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),
overload_name=''), arguments=Arguments(pre_self_positional=(), self_arg=None, post_self_positional=(Argument(name='size', type=ListType(elem=BaseType(name=<BaseTy.SymInt: 17>), size=None), default=None, annotation=None),), pre_tensor_options_kwarg_only=(), tensor_options=TensorOptionsArguments(dtype=Argument(name='dtype', type=OptionalType(elem=BaseType(name=<BaseTy.ScalarType: 2>)), default='None', annotation=None), layout=Argument(name='layout', type=OptionalType(elem=BaseType(name=<BaseTy.Layout: 10>)), default='None', annotation=None), device=Argument(name='device', type=OptionalType(elem=BaseType(name=<BaseTy.Device: 11>)), default='None', annotation=None), pin_memory=Argument(name='pin_memory', type=OptionalType(elem=BaseType(name=<BaseTy.bool: 9>)), default='None', annotation=None)), post_tensor_options_kwarg_only=(), out=()), returns=(Return(name=None, type=BaseType(name=<BaseTy.Tensor: 3>), annotation=None),)), use_const_ref_for_mutable_tensors=False, device_guard=True, device_check=<DeviceCheckType.ExactSame: 1>, python_module=None, category_override=None, variants={<Variant.function: 1>}, manual_kernel_registration=False, manual_cpp_binding=False, loc=Location(file='aten/src/ATen/native/native_functions.yaml', line=4270), autogen=[], ufunc_inner_loop={}, structured=False, structured_delegate=None, structured_inherits=None, precomputed=None, cpp_no_default_args=set(), is_abstract=True, has_composite_implicit_autograd_kernel=False, has_composite_implicit_autograd_nested_tensor_kernel=False, has_composite_explicit_autograd_kernel=True, has_composite_explicit_autograd_non_functional_kernel=False, tags={'nondeterministic_seeded'}), outplace=NativeFunction(namespace='aten', func=FunctionSchema(name=OperatorName(name=BaseOperatorName(base='rand', inplace=False, dunder_method=False, functional_overload=False),