Enabling SASL authentication for ZooKeeper, Kafka, and Storm in the Storm statistics service

Deploying the Storm statistics service with SASL authentication enabled on ZooKeeper, Kafka, and Storm.

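- Current test results:

- Configuring ACLs (username and password) on ZooKeeper alone works with both Storm and Kafka, without changing Storm's code.

- When ACLs are enabled on Kafka, it is not yet known how to configure a username and password for Storm and the ccod modules.

- A Python script that connects to Kafka with a username and password can send messages successfully.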

- Service versions in the current deployment environment

| Server IP | Module | Version |
|---|---|---|
| 10.130.41.42 | zookeeper | zookeeper-3.6.3 |
| 10.130.41.43 | kafka | kafka_2.11-2.3.1 |
| 10.130.41.44 | storm | apache-storm-1.2.4 |

ZooKeeper deployment

- Deploy ZooKeeper on the mongodb_1 server

- Upload the installation package and configuration files

[root@mongodb_1 ~]# su - storm

[storm@mongodb_1 ~]$ rz -be    # upload storm_node1.tar.gz

[storm@mongodb_1 ~]$ tar xvf storm_node1.tar.gz

[storm@mongodb_1 ~]$ cd storm_node1

[storm@mongodb_1 storm_node1]$ mv * .bash_profile ../

[storm@mongodb_1 ~]$ source .bash_profile ;java -version

java version "1.8.0_91"

Java(TM) SE Runtime Environment (build 1.8.0_91-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.91-b14, mixed mode)

- Edit the ZooKeeper configuration files, then start ZooKeeper

[storm@mongodb_1 ~]$ cd zookeeper-3.6.3/conf/

[storm@mongodb_1 conf]$ vim zoo.cfg

dataDir=/home/storm/zookeeper-3.6.3/data

dataLogDir=/home/storm/zookeeper-3.6.3/log

server.1=mongodb_1:2182:3181

server.2=mongodb_2:2182:3181

server.3=mongodb_3:2182:3181

#peerType=observer

autopurge.purgeInterval=1

autopurge.snapRetainCount=3

4lw.commands.whitelist=*

jaasLoginRenew=3600000

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

requireClientAuthScheme=sasl
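All three nodes need an identical `server.N=host:peerPort:electionPort` list. Each node's `data/myid` must hold its own N: 1 on mongodb_1, 2 on mongodb_2, 3 on mongodb_3.

The minimal Python sketch below generates both from one host list, so they cannot drift apart between hosts. It assumes the hostnames and ports used in this guide.

```python
# Sketch: render the shared zoo.cfg server lines and each host's myid.
# Hostnames and ports are the ones used in this guide (assumption for other clusters).
HOSTS = ["mongodb_1", "mongodb_2", "mongodb_3"]
PEER_PORT = 2182      # followers connect to the leader on this port
ELECTION_PORT = 3181  # leader election port


def server_lines(hosts, peer_port=PEER_PORT, election_port=ELECTION_PORT):
    """Return the server.N lines that every node's zoo.cfg must contain (N starts at 1)."""
    return [
        f"server.{n}={host}:{peer_port}:{election_port}"
        for n, host in enumerate(hosts, start=1)
    ]


def myid_for(host, hosts=HOSTS):
    """A host's myid is the N of its own server.N line."""
    return hosts.index(host) + 1


if __name__ == "__main__":
    print("\n".join(server_lines(HOSTS)))
    for h in HOSTS:
        print(f'{h}: echo "{myid_for(h)}" > ~/zookeeper-3.6.3/data/myid')
```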

[storm@mongodb_1 conf]$ vim zk_server_jaas.conf

Server {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="zk_cluster"
    password="zk_cluster_passwd"
    user_admin="Admin@123";
};

# Copy the Kafka client libraries (kafka-clients provides PlainLoginModule)

[storm@mongodb_1 conf]$ cd /home/storm/zookeeper-3.6.3

[storm@mongodb_1 zookeeper-3.6.3]$ mkdir zk_sasl_dependencies

[storm@mongodb_1 zookeeper-3.6.3]$ cd /home/storm/kafka_2.11-2.3.1/libs

[storm@mongodb_1 libs]$ cp kafka-clients-2.3.1.jar lz4-java-1.6.0.jar slf4j-api-1.7.26.jar snappy-java-1.1.7.3.jar ~/zookeeper-3.6.3/zk_sasl_dependencies/

[storm@mongodb_1 ~]$ mkdir -p /home/storm/zookeeper-3.6.3/log /home/storm/zookeeper-3.6.3/data

[storm@mongodb_1 ~]$ echo "1" > /home/storm/zookeeper-3.6.3/data/myid

[storm@mongodb_1 ~]$ cd ~/zookeeper-3.6.3/bin

[storm@mongodb_1 bin]$ vim zkEnv.sh    # append the following lines at the end of the file

# Add SASL support to ZooKeeper
for i in ~/zookeeper-3.6.3/zk_sasl_dependencies/*.jar; do
    CLASSPATH="$i:$CLASSPATH"
done

SERVER_JVMFLAGS=" -Djava.security.auth.login.config=$HOME/zookeeper-3.6.3/conf/zk_server_jaas.conf"

[storm@mongodb_1 bin]$ ./zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# Check the status only after ZooKeeper has been started on all three servers

[storm@mongodb_1 bin]$ ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower

- Deploy ZooKeeper on the mongodb_2 server

- Upload the installation package and configuration files

[root@mongodb_2 ~]# su - storm

[storm@mongodb_2 ~]$ rz -be    # upload storm_node2.tar.gz

[storm@mongodb_2 ~]$ tar xvf storm_node2.tar.gz

[storm@mongodb_2 ~]$ cd storm_node2

[storm@mongodb_2 storm_node2]$ mv * .bash_profile ../

[storm@mongodb_2 ~]$ source .bash_profile ;java -version

java version "1.8.0_91"

Java(TM) SE Runtime Environment (build 1.8.0_91-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.91-b14, mixed mode)

- Edit the ZooKeeper configuration files, then start ZooKeeper

[storm@mongodb_2 ~]$ cd zookeeper-3.6.3/conf/

[storm@mongodb_2 conf]$ vim zoo.cfg

dataDir=/home/storm/zookeeper-3.6.3/data

dataLogDir=/home/storm/zookeeper-3.6.3/log

server.1=mongodb_1:2182:3181

server.2=mongodb_2:2182:3181

server.3=mongodb_3:2182:3181

#peerType=observer

autopurge.purgeInterval=1

autopurge.snapRetainCount=3

4lw.commands.whitelist=*

jaasLoginRenew=3600000

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

requireClientAuthScheme=sasl

[storm@mongodb_2 conf]$ vim zk_server_jaas.conf

Server {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="zk_cluster"
    password="zk_cluster_passwd"
    user_admin="Admin@123";
};

# Copy the Kafka client libraries (kafka-clients provides PlainLoginModule)

[storm@mongodb_2 conf]$ cd /home/storm/zookeeper-3.6.3

[storm@mongodb_2 zookeeper-3.6.3]$ mkdir zk_sasl_dependencies

[storm@mongodb_2 zookeeper-3.6.3]$ cd /home/storm/kafka_2.11-2.3.1/libs

[storm@mongodb_2 libs]$ cp kafka-clients-2.3.1.jar lz4-java-1.6.0.jar slf4j-api-1.7.26.jar snappy-java-1.1.7.3.jar ~/zookeeper-3.6.3/zk_sasl_dependencies/

[storm@mongodb_2 ~]$ mkdir -p /home/storm/zookeeper-3.6.3/log /home/storm/zookeeper-3.6.3/data

[storm@mongodb_2 ~]$ echo "2" > /home/storm/zookeeper-3.6.3/data/myid

[storm@mongodb_2 ~]$ cd ~/zookeeper-3.6.3/bin

[storm@mongodb_2 bin]$ vim zkEnv.sh    # append the following lines at the end of the file

# Add SASL support to ZooKeeper
for i in ~/zookeeper-3.6.3/zk_sasl_dependencies/*.jar; do
    CLASSPATH="$i:$CLASSPATH"
done

SERVER_JVMFLAGS=" -Djava.security.auth.login.config=$HOME/zookeeper-3.6.3/conf/zk_server_jaas.conf"

[storm@mongodb_2 bin]$ ./zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# Check the status only after ZooKeeper has been started on all three servers

[storm@mongodb_2 bin]$ ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: leader

- Deploy ZooKeeper on the mongodb_3 server

- Upload the installation package and configuration files

[root@mongodb_3 ~]# su - storm

[storm@mongodb_3 ~]$ rz -be    # upload storm_node3.tar.gz

[storm@mongodb_3 ~]$ tar xvf storm_node3.tar.gz

[storm@mongodb_3 ~]$ cd storm_node3

[storm@mongodb_3 storm_node3]$ mv * .bash_profile ../

[storm@mongodb_3 ~]$ source .bash_profile ;java -version

java version "1.8.0_91"

Java(TM) SE Runtime Environment (build 1.8.0_91-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.91-b14, mixed mode)

- Edit the ZooKeeper configuration files, then start ZooKeeper

[storm@mongodb_3 ~]$ cd zookeeper-3.6.3/conf/

[storm@mongodb_3 conf]$ vim zoo.cfg

dataDir=/home/storm/zookeeper-3.6.3/data

dataLogDir=/home/storm/zookeeper-3.6.3/log

server.1=mongodb_1:2182:3181

server.2=mongodb_2:2182:3181

server.3=mongodb_3:2182:3181

#peerType=observer

autopurge.purgeInterval=1

autopurge.snapRetainCount=3

4lw.commands.whitelist=*

jaasLoginRenew=3600000

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

requireClientAuthScheme=sasl

[storm@mongodb_3 conf]$ vim zk_server_jaas.conf

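Server {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="zk_cluster"
    password="zk_cluster_passwd"
    user_admin="Admin@123";
};

# Copy the Kafka client libraries (kafka-clients provides PlainLoginModule)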

[storm@mongodb_3 conf]$ cd /home/storm/zookeeper-3.6.3

[storm@mongodb_3 zookeeper-3.6.3]$ mkdir zk_sasl_dependencies

[storm@mongodb_3 zookeeper-3.6.3]$ cd /home/storm/kafka_2.11-2.3.1/libs

[storm@mongodb_3 libs]$ cp kafka-clients-2.3.1.jar lz4-java-1.6.0.jar slf4j-api-1.7.26.jar snappy-java-1.1.7.3.jar ~/zookeeper-3.6.3/zk_sasl_dependencies/

[storm@mongodb_3 ~]$ mkdir -p /home/storm/zookeeper-3.6.3/log /home/storm/zookeeper-3.6.3/data

[storm@mongodb_3 ~]$ echo "3" > /home/storm/zookeeper-3.6.3/data/myid

[storm@mongodb_3 ~]$ cd ~/zookeeper-3.6.3/bin

[storm@mongodb_3 bin]$ vim zkEnv.sh    # append the following lines at the end of the file

# Add SASL support to ZooKeeper
for i in ~/zookeeper-3.6.3/zk_sasl_dependencies/*.jar; do
    CLASSPATH="$i:$CLASSPATH"
done

SERVER_JVMFLAGS=" -Djava.security.auth.login.config=$HOME/zookeeper-3.6.3/conf/zk_server_jaas.conf"

[storm@mongodb_3 bin]$ ./zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED

# Check the status only after ZooKeeper has been started on all three servers

[storm@mongodb_3 bin]$ ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /home/storm/zookeeper-3.6.3/bin/../conf/zoo.cfg

Client port found: 2181. Client address: localhost. Client SSL: false.

Mode: follower
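Because `4lw.commands.whitelist=*` is set, you can check every node's role from a single machine using the `srvr` four-letter command. It returns the same `Mode:` line as `zkServer.sh status`. A healthy three-node ensemble should report one leader and two followers.

The minimal Python sketch below assumes the three hostnames resolve and client port 2181 is reachable.

```python
# Sketch: query each ZooKeeper node with the "srvr" four-letter command.
import socket


def four_letter_word(host, port=2181, cmd=b"srvr", timeout=3.0):
    """Send a four-letter command and return the full text reply."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(cmd)
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection after replying
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_mode(reply):
    """Extract the value of the 'Mode:' line (leader, follower, standalone, ...)."""
    for line in reply.splitlines():
        if line.startswith("Mode:"):
            return line.split(":", 1)[1].strip()
    return None


def cluster_modes(hosts, port=2181):
    """Map each host to its mode, or to an error string if it cannot be reached."""
    modes = {}
    for host in hosts:
        try:
            modes[host] = parse_mode(four_letter_word(host, port))
        except OSError as exc:  # covers refused connections, timeouts and DNS errors
            modes[host] = f"error: {exc}"
    return modes


if __name__ == "__main__":
    modes = cluster_modes(["mongodb_1", "mongodb_2", "mongodb_3"])
    print(modes)
    print("leaders:", sum(1 for m in modes.values() if m == "leader"))
```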

- Set a username and password on ZooKeeper and whitelist the hosts allowed to connect. (If SASL authentication is already enabled, skip this ACL step.)

- On the newly deployed platform, the following ZooKeeper steps were not performed.

addauth

[storm@mongodb_2 bin]$ ./zkCli.sh -server mongodb_2:2181,mongodb_1:2181,mongodb_3:2181

[zk: localhost:2181(CONNECTED) 0] addauth digest admin:123456    # admin is the username, 123456 is the password

[zk: localhost:2181(CONNECTED) 1] setAcl / auth:admin:123456:cdrwa

[zk: localhost:2181(CONNECTED) 2] getAcl /

'digest,'admin:0uek/hZ/V9fgiM35b0Z2226acMQ=
: cdrwa

[storm@mongodb_2 bin]$ ./zkCli.sh    # from now on, every new connection must first run: addauth digest admin:123456

[zk: localhost:2181(CONNECTED) 0] ls /

Insufficient permission : /

[zk: localhost:2181(CONNECTED) 1] addauth digest admin:123456

[zk: localhost:2181(CONNECTED) 2] ls /

[test, zookeeper]

[zk: localhost:2181(CONNECTED) 8] setAcl / ip:10.130.41.42:cdrwa,ip:10.130.41.43:cdrwa,ip:10.130.41.44:cdrwa,ip:10.130.41.86:cdrwa,ip:10.130.41.119:cdrwa,ip:127.0.0.1:cdrwa,auth:kafka:cdrwa    # whitelist of clients allowed to connect to ZooKeeper
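The `'digest,'admin:...` value printed by `getAcl` is not the password itself. ZooKeeper's DigestAuthenticationProvider stores `user:base64(SHA-1("user:password"))`. The short Python sketch below computes that id. This is useful, for example, to set a `digest:` ACL without running `addauth` first.

```python
# Sketch: compute the id ZooKeeper stores for a digest ACL.
import base64
import hashlib


def zk_digest(user, password):
    """Return user:base64(sha1("user:password")), the form shown by getAcl."""
    raw = f"{user}:{password}".encode("utf-8")
    return f"{user}:" + base64.b64encode(hashlib.sha1(raw).digest()).decode("ascii")


if __name__ == "__main__":
    ident = zk_digest("admin", "123456")
    print(ident)  # compare with the value printed by getAcl /
    print(f"setAcl / digest:{ident}:cdrwa")
```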

Initializing the Storm configuration

- Log in to any one of the three ZooKeeper servers

[storm@mongodb_1 ~]$ cd ~/zookeeper-3.6.3/bin

[storm@mongodb_1 bin]$ ./zkCli.sh

[zk: localhost:2181(CONNECTED) 2] addauth digest admin:123456

create /storm config

create /storm/config 1

create /umg_cloud cluster

create /umg_cloud/cluster 1

create /umg_cloud/cfg 1

create /umg_cloud/cfg/notify 1

create /storm/config/ENT_CALL_RECORD_LIST 0103290030,0103290035,0103290023,0103290021,0103290022,0103290031

create /storm/config/KAFKA_BROKER_LIST mongodb_1:9092,mongodb_2:9092,mongodb_3:9092

create /storm/config/KAFKA_ZOOKEEPER_CONNECT mongodb_1:2181,mongodb_2:2181,mongodb_3:2181

create /storm/config/MAX_SPOUT_PENDING 100

create /storm/config/MONGO_ADMIN admin

create /storm/config/MONGO_BLOCKSIZE 100

create /storm/config/MONGO_HOST mongodb_1:30000;mongodb_2:30000;mongodb_3:30000

create /storm/config/MONGO_NAME ""

create /storm/config/MONGO_PASSWORD ""

create /storm/config/MONGO_POOLSIZE 100

create /storm/config/MONGO_SWITCH 0

create /storm/config/OFF_ON_DTS 0

create /storm/config/REDIS_HOST mongodb_3:27381

create /storm/config/REDIS_PASSWORD ""

create /storm/config/SEND_MESSAGE 0

create /storm/config/TOPO_COMPUBOLT_NUM 4

create /storm/config/TOPO_MONGOBOLT_NUM 16

create /storm/config/TOPO_SPOUT_NUM 3

create /storm/config/TOPO_WORK_NUM 2

create /storm/config/ENTID_MESSAGE 1

create /storm/config/MESSAGE_TOPIC 1
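ZooKeeper's `create` fails with a "node does not exist" error if the parent znode is missing. That is why `/storm` and `/storm/config` are created before the keys under them.

The small Python sketch below emits the commands parents first, so the list can be pasted into zkCli.sh. `CONFIG` holds only a subset of this guide's keys. The `parent_value` placeholder for unlisted parents is a hypothetical choice.

```python
# Sketch: print zkCli.sh "create" commands for config znodes, parents first.
CONFIG = {
    "/storm": "config",
    "/storm/config": "1",
    "/storm/config/KAFKA_BROKER_LIST": "mongodb_1:9092,mongodb_2:9092,mongodb_3:9092",
    "/storm/config/TOPO_WORK_NUM": "2",
    "/storm/config/REDIS_PASSWORD": "",
    "/umg_cloud/cfg/notify": "1",
}


def create_commands(nodes, parent_value="1"):
    """Return create commands ordered so every parent znode precedes its children."""
    paths = {}
    for path, value in nodes.items():
        parts = path.strip("/").split("/")
        # Register each ancestor that is not listed explicitly.
        for i in range(1, len(parts)):
            paths.setdefault("/" + "/".join(parts[:i]), parent_value)
        paths[path] = value  # an explicitly listed value always wins
    ordered = sorted(paths, key=lambda p: (p.count("/"), p))
    commands = []
    for p in ordered:
        value = paths[p] if paths[p] else '""'  # the guide writes empty values as ""
        commands.append(f"create {p} {value}")
    return commands


if __name__ == "__main__":
    print("\n".join(create_commands(CONFIG)))
```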

Kafka deployment

- Deploy Kafka on the mongodb_1 server

[storm@mongodb_1 ~]$ cd ~/kafka_2.11-2.3.1/config/

[storm@mongodb_1 config]$ cat server.properties

broker.id=1

listeners=SASL_PLAINTEXT://10.130.41.42:9092

sasl.mechanism.inter.broker.protocol=PLAIN

sasl.enabled.mechanisms=PLAIN

security.inter.broker.protocol=SASL_PLAINTEXT

allow.everyone.if.no.acl.found=false

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer

super.users=User:admin;User:kafkacluster

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/home/storm//kafka_2.11-2.3.1/logs

num.partitions=3

default.replication.factor=3

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=mongodb_1:2181,mongodb_2:2181,mongodb_3:2181

compression.type=producer

zookeeper.set.acl=true

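# zookeeper.defaultAcl and zookeeper.digest.enabled do not appear to be Kafka 2.3 broker settings; zookeeper.set.acl is what makes Kafka protect its znodes
zookeeper.defaultAcl=root;123456:rwcd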

zookeeper.connection.timeout.ms=6000

zookeeper.digest.enabled=true

group.initial.rebalance.delay.ms=0

[storm@mongodb_1 config]$ more sasl.conf

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="Admin@123";

[storm@mongodb_1 config]$ cat kafka_server_jaas.conf

KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="kafkacluster"
    password="Admin@123"
    user_kafkacluster="Admin@123"
    user_admin="Admin@123";
};
Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="admin"
    password="Admin@123";
};

[storm@mongodb_1 ~]$ mkdir -p /home/storm/kafka_2.11-2.3.1/log

[storm@mongodb_1 ~]$ cd /home/storm/kafka_2.11-2.3.1/bin

[storm@mongodb_1 bin]$ vim kafka-server-start.sh    # load kafka_server_jaas.conf into the startup environment: add the line below before the script's final exec line

export KAFKA_HEAP_OPTS=$KAFKA_HEAP_OPTS" -Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf"

[storm@mongodb_1 bin]$ ./kafka-server-start.sh -daemon ../config/server.properties
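JAAS files have strict punctuation:

- The options of a login module are whitespace-separated `key="value"` pairs.
- The module entry ends with `;`.
- Each section closes with `};`.

For PlainLoginModule in `KafkaServer`, `username`/`password` are the credentials this broker uses to connect to other brokers. Each `user_<name>="<password>"` entry defines a login the broker accepts.

The minimal Python sketch below renders such sections so the terminators are always in place. The values are the ones from this guide.

```python
# Sketch: render a JAAS login section with the punctuation JAAS requires.
PLAIN = "org.apache.kafka.common.security.plain.PlainLoginModule"


def jaas_section(name, login_module, options, flag="required"):
    """Return one JAAS section whose single entry ends with ';' and closes with '};'."""
    lines = [f"{name} {{", f"    {login_module} {flag}"]
    items = list(options.items())
    if not items:
        lines[-1] += ";"  # an entry without options still needs its terminator
    for i, (key, value) in enumerate(items):
        terminator = ";" if i == len(items) - 1 else ""
        lines.append(f'    {key}="{value}"{terminator}')
    lines.append("};")
    return "\n".join(lines)


if __name__ == "__main__":
    print(jaas_section("KafkaServer", PLAIN, {
        "username": "kafkacluster",        # identity this broker uses toward other brokers
        "password": "Admin@123",
        "user_kafkacluster": "Admin@123",  # user_<name>: a login the broker accepts
        "user_admin": "Admin@123",
    }))
    print(jaas_section("Client", PLAIN, {"username": "admin", "password": "Admin@123"}))
```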

- Deploy Kafka on the mongodb_2 server

[storm@mongodb_2 ~]$ cd ~/kafka_2.11-2.3.1/config/

[storm@mongodb_2 config]$ cat server.properties

broker.id=2

# must be this broker's own address
listeners=SASL_PLAINTEXT://mongodb_2:9092

sasl.mechanism.inter.broker.protocol=PLAIN

sasl.enabled.mechanisms=PLAIN

security.inter.broker.protocol=SASL_PLAINTEXT

allow.everyone.if.no.acl.found=false

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer

super.users=User:admin;User:kafkacluster

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/home/storm//kafka_2.11-2.3.1/logs

num.partitions=3

default.replication.factor=3

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=mongodb_1:2181,mongodb_2:2181,mongodb_3:2181

compression.type=producer

zookeeper.set.acl=true

zookeeper.defaultAcl=root;123456:rwcd

zookeeper.connection.timeout.ms=6000

zookeeper.digest.enabled=true

group.initial.rebalance.delay.ms=0

[storm@mongodb_2 config]$ more sasl.conf

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="Admin@123";

[storm@mongodb_2 config]$ cat kafka_server_jaas.conf

KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="kafkacluster"
    password="Admin@123"
    user_kafkacluster="Admin@123"
    user_admin="Admin@123";
};
Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="admin"
    password="Admin@123";
};

[storm@mongodb_2 ~]$ mkdir -p /home/storm/kafka_2.11-2.3.1/log

[storm@mongodb_2 ~]$ cd /home/storm/kafka_2.11-2.3.1/bin

[storm@mongodb_2 bin]$ vim kafka-server-start.sh    # load kafka_server_jaas.conf into the startup environment: add the line below before the script's final exec line

export KAFKA_HEAP_OPTS=$KAFKA_HEAP_OPTS" -Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf"

[storm@mongodb_2 bin]$ ./kafka-server-start.sh -daemon ../config/server.properties

- Deploy Kafka on the mongodb_3 server

[storm@mongodb_3 ~]$ cd ~/kafka_2.11-2.3.1/config/

[storm@mongodb_3 config]$ cat server.properties

broker.id=3

# must be this broker's own address
listeners=SASL_PLAINTEXT://mongodb_3:9092

sasl.mechanism.inter.broker.protocol=PLAIN

sasl.enabled.mechanisms=PLAIN

security.inter.broker.protocol=SASL_PLAINTEXT

allow.everyone.if.no.acl.found=false

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer

super.users=User:admin;User:kafkacluster

num.network.threads=3

num.io.threads=8

socket.send.buffer.bytes=102400

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

log.dirs=/home/storm//kafka_2.11-2.3.1/logs

num.partitions=3

default.replication.factor=3

num.recovery.threads.per.data.dir=1

offsets.topic.replication.factor=1

transaction.state.log.replication.factor=1

transaction.state.log.min.isr=1

log.retention.hours=168

log.segment.bytes=1073741824

log.retention.check.interval.ms=300000

zookeeper.connect=mongodb_1:2181,mongodb_2:2181,mongodb_3:2181

compression.type=producer

zookeeper.set.acl=true

zookeeper.defaultAcl=root;123456:rwcd

zookeeper.connection.timeout.ms=6000

zookeeper.digest.enabled=true

group.initial.rebalance.delay.ms=0

[storm@mongodb_3 config]$ more sasl.conf

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="admin" password="Admin@123";

[storm@mongodb_3 config]$ cat kafka_server_jaas.conf

KafkaServer {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="kafkacluster"
    password="Admin@123"
    user_kafkacluster="Admin@123"
    user_admin="Admin@123";
};
Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="admin"
    password="Admin@123";
};

[storm@mongodb_3 ~]$ mkdir -p /home/storm/kafka_2.11-2.3.1/log

[storm@mongodb_3 ~]$ cd /home/storm/kafka_2.11-2.3.1/bin

[storm@mongodb_3 bin]$ vim kafka-server-start.sh    # load kafka_server_jaas.conf into the startup environment: add the line below before the script's final exec line

export KAFKA_HEAP_OPTS=$KAFKA_HEAP_OPTS" -Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf"

[storm@mongodb_3 bin]$ ./kafka-server-start.sh -daemon ../config/server.properties
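The three `server.properties` files are meant to be identical except for two settings:

- `broker.id` must be unique per broker.
- `listeners` must be the broker's own address.

`zookeeper.connect` should list the same three nodes everywhere.

The small Python sketch below derives these per-broker values from one host list and rejects duplicates. It assumes each hostname resolves to its own server's IP; explicit IPs, as on mongodb_1, work just as well.

```python
# Sketch: derive the per-broker lines of server.properties from one host list.
HOSTS = ["mongodb_1", "mongodb_2", "mongodb_3"]


def broker_overrides(hosts, port=9092, zk_port=2181):
    """Unique broker.id, own listener, and a shared zookeeper.connect for each host."""
    zk_connect = ",".join(f"{h}:{zk_port}" for h in hosts)
    return {
        host: {
            "broker.id": str(n),
            "listeners": f"SASL_PLAINTEXT://{host}:{port}",
            "zookeeper.connect": zk_connect,
        }
        for n, host in enumerate(hosts, start=1)
    }


def check(overrides):
    """Raise ValueError if two brokers share a broker.id or a listener."""
    for key in ("broker.id", "listeners"):
        values = [props[key] for props in overrides.values()]
        if len(set(values)) != len(values):
            raise ValueError(f"duplicate {key}: {values}")


if __name__ == "__main__":
    cfg = broker_overrides(HOSTS)
    check(cfg)
    for host, props in cfg.items():
        print(f"# {host}")
        for key, value in props.items():
            print(f"{key}={value}")
```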

- Create the topic (run this on any one of the Kafka brokers)

[storm@mongodb_1 ~]$ cd kafka_2.11-2.3.1/

[storm@mongodb_1 kafka_2.11-2.3.1]$ bin/kafka-topics.sh --create --zookeeper mongodb_1:2181,mongodb_2:2181,mongodb_3:2181 --replication-factor 3 --partitions 1 --topic ent_record_fastdfs_url

- Grant ACL permissions on the topic

[storm@mongodb_1 bin]$ ./kafka-acls.sh --authorizer-properties zookeeper.connect=10.130.41.43:2181 --add --allow-principal User:admin --producer --topic ent_record_fastdfs_url

- List the topics that have ACLs set

[storm@mongodb_1 bin]$ ./kafka-acls.sh --authorizer-properties zookeeper.connect=10.130.41.43:2181 --list

Current ACLs for resource `Topic:LITERAL:ent_record_fastdfs_url`:
        User:admin has Allow permission for operations: Describe from hosts: *
        User:admin has Allow permission for operations: Create from hosts: *
        User:admin has Allow permission for operations: Write from hosts: *

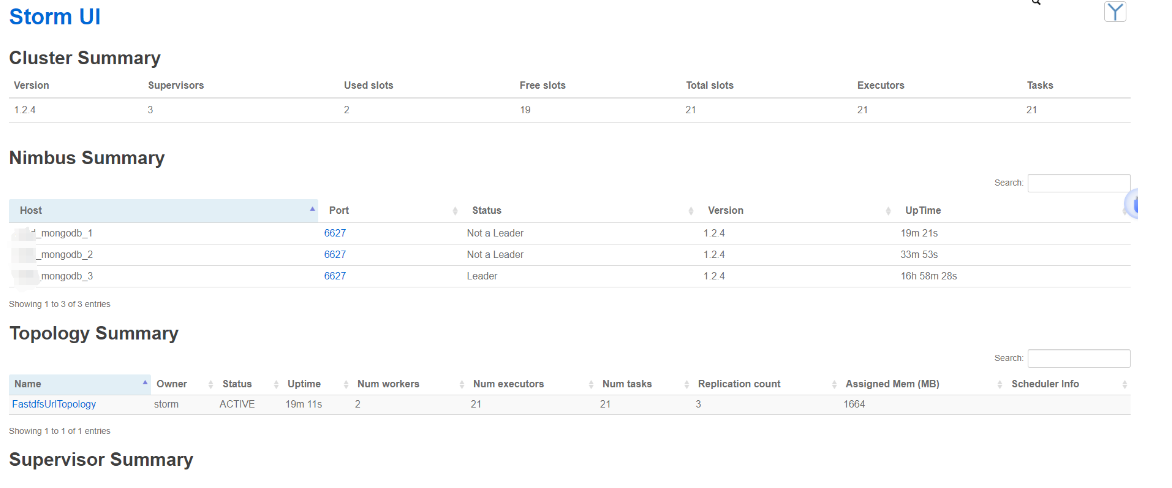
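With `security.protocol=SASL_PLAINTEXT` and `sasl.mechanism=PLAIN`, the principal in these ACLs (`User:admin`) is the username the client sends in the PLAIN exchange. Per RFC 4616, the client's single message is `authzid NUL authcid NUL password`. On a plaintext listener that message is not encrypted, so anyone capturing traffic can read the credentials.

The Python sketch below shows how the message is built and read back. It illustrates the wire format only and is not a Kafka client.

```python
# Sketch of the SASL/PLAIN client message defined in RFC 4616.
def plain_message(username, password, authzid=""):
    """Build the single PLAIN client response: authzid NUL authcid NUL passwd."""
    return "\0".join([authzid, username, password]).encode("utf-8")


def parse_plain(message):
    """Split a PLAIN message back into (authzid, username, password)."""
    parts = message.decode("utf-8").split("\0")
    if len(parts) != 3:
        raise ValueError("PLAIN message must contain exactly two NUL separators")
    authzid, username, password = parts
    return authzid, username, password


if __name__ == "__main__":
    msg = plain_message("admin", "Admin@123")
    print(msg)  # the password is readable: SASL_PLAINTEXT does not encrypt it
    print("Kafka principal:", "User:" + parse_plain(msg)[1])
```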

Storm deployment

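- The current version does not support connecting to Kafka with ACLs. The Storm jar has to be modified, and we are waiting for R&D to release a version that supports it.

- The configuration below already supports connecting to ZooKeeper with ACLs.

- Deploy Storm on the mongodb_1 server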

[storm@mongodb_1 ~]$ cd ~/apache-storm-1.2.4/conf/

[storm@mongodb_1 conf]$ vim storm.yaml

storm.zookeeper.factory.class: "org.apache.storm.security.auth.SASLAuthenticationProvider"

storm.zookeeper.auth.provider.1: "org.apache.storm.security.auth.digest.DigestSaslServerCallbackHandler"

storm.zookeeper.auth.provider.2: "org.apache.zookeeper.server.auth.SASLAuthenticationProvider"

storm.zookeeper.superACL: "sasl:admin"

storm.zookeeper.servers:
  - "10.130.41.42"
  - "10.130.41.43"
  - "10.130.41.44"

nimbus.seeds: ["10.130.41.42","10.130.41.43","10.130.41.44"]

ui.port: 8888

storm.local.dir: "/home/storm/apache-storm-1.2.4/data"

supervisor.slots.ports:
  - 6700
  - 6701
  - 6702
  - 6703
  - 6704
  - 6705
  - 6706

storm.zookeeper.authProvider.1: org.apache.zookeeper.server.auth.DigestAuthenticationProvider

storm.zookeeper.auth.user: admin

storm.zookeeper.auth.password: Admin@123

storm.zookeeper.auth.digest.1: admin=Admin@123

java.security.auth.login.config: /home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf

worker.childopts: '-Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf'

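# YAML allows only one worker.childopts key, and jaas.conf is not created in this guide; the Client section of kafka_server_jaas.conf already carries the ZooKeeper credentials
# worker.childopts: '-Djava.security.auth.login.config=/home/storm/zookeeper-3.6.3/conf/jaas.conf'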
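A YAML mapping cannot hold the same key twice. Depending on the parser, the later value silently wins or loading fails, so two `worker.childopts` lines never both take effect.

The Python sketch below flags repeated top-level keys before Storm is started. It assumes a flat storm.yaml like the one above and only inspects unindented `key: value` lines; it is not a full YAML parser.

```python
# Sketch: report top-level keys that occur more than once in a storm.yaml-style file.
import re
from collections import Counter

TOP_LEVEL_KEY = re.compile(r"^([A-Za-z0-9_.\-]+)\s*:")


def duplicate_keys(text):
    """Return the sorted top-level keys that appear more than once."""
    keys = []
    for line in text.splitlines():
        if line.lstrip().startswith("#"):
            continue  # commented-out lines do not count
        match = TOP_LEVEL_KEY.match(line)  # indented list items never match
        if match:
            keys.append(match.group(1))
    return sorted(key for key, count in Counter(keys).items() if count > 1)


if __name__ == "__main__":
    sample = (
        "worker.childopts: '-Djava.security.auth.login.config=a.conf'\n"
        "ui.port: 8888\n"
        "worker.childopts: '-Djava.security.auth.login.config=b.conf'\n"
    )
    print(duplicate_keys(sample))
```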

[storm@mongodb_1 ~]$ mkdir -p /home/storm/apache-storm-1.2.4/data

[storm@mongodb_1 ~]$ cd /home/storm/apache-storm-1.2.4/bin

[storm@mongodb_1 bin]$ nohup ./storm nimbus &

[storm@mongodb_1 bin]$ nohup ./storm ui &

[storm@mongodb_1 bin]$ nohup ./storm supervisor &

[storm@mongodb_1 bin]$ jps    # check that all processes are running

1808 worker

1792 LogWriter

1907 worker

29171 Supervisor

1891 LogWriter

20328 core

25224 Kafka

31867 ZooKeeperMain

2444 Jps

18957 QuorumPeerMain

32334 nimbus
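The same check can be scripted by comparing `jps` output against the processes this host should run. In the listing above, `QuorumPeerMain` is ZooKeeper, and `core` appears to be the Storm UI. The expected set below is an assumption based on that listing. `jps` only shows JVMs visible to the current user, so run it as `storm`.

```python
# Sketch: check that the expected JVM processes appear in `jps` output.
import subprocess

EXPECTED = {"QuorumPeerMain", "Kafka", "nimbus", "Supervisor", "core"}


def running_names(jps_output):
    """Collect the name column from lines shaped like '<pid> <name>'."""
    names = set()
    for line in jps_output.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0].isdigit():
            names.add(parts[1])
    return names


def missing(jps_output, expected=EXPECTED):
    """Return the expected process names that are not running, sorted."""
    return sorted(expected - running_names(jps_output))


if __name__ == "__main__":
    try:
        out = subprocess.run(["jps"], capture_output=True, text=True, check=True).stdout
    except (OSError, subprocess.CalledProcessError) as exc:
        print(f"could not run jps: {exc}")
    else:
        print("missing:", missing(out) or "none")
```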

- Deploy Storm on the mongodb_2 server

[storm@mongodb_2 ~]$ cd ~/apache-storm-1.2.4/conf/

[storm@mongodb_2 conf]$ vim storm.yaml

storm.zookeeper.factory.class: "org.apache.storm.security.auth.SASLAuthenticationProvider"

storm.zookeeper.auth.provider.1: "org.apache.storm.security.auth.digest.DigestSaslServerCallbackHandler"

storm.zookeeper.auth.provider.2: "org.apache.zookeeper.server.auth.SASLAuthenticationProvider"

storm.zookeeper.superACL: "sasl:admin"

storm.zookeeper.servers:
  - "10.130.41.42"
  - "10.130.41.43"
  - "10.130.41.44"

nimbus.seeds: ["10.130.41.42","10.130.41.43","10.130.41.44"]

ui.port: 8888

storm.local.dir: "/home/storm/apache-storm-1.2.4/data"

supervisor.slots.ports:
  - 6700
  - 6701
  - 6702
  - 6703
  - 6704
  - 6705
  - 6706

storm.zookeeper.authProvider.1: org.apache.zookeeper.server.auth.DigestAuthenticationProvider

storm.zookeeper.auth.user: admin

storm.zookeeper.auth.password: Admin@123

storm.zookeeper.auth.digest.1: admin=Admin@123

java.security.auth.login.config: /home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf

worker.childopts: '-Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf'

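# YAML allows only one worker.childopts key, and jaas.conf is not created in this guide; the Client section of kafka_server_jaas.conf already carries the ZooKeeper credentials
# worker.childopts: '-Djava.security.auth.login.config=/home/storm/zookeeper-3.6.3/conf/jaas.conf'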

[storm@mongodb_2 ~]$ mkdir -p /home/storm/apache-storm-1.2.4/data

[storm@mongodb_2 ~]$ cd /home/storm/apache-storm-1.2.4/bin

[storm@mongodb_2 bin]$ nohup ./storm nimbus &

[storm@mongodb_2 bin]$ nohup ./storm ui &

[storm@mongodb_2 bin]$ nohup ./storm supervisor &

[storm@mongodb_2 bin]$ jps    # check that all processes are running

1808 worker

1792 LogWriter

1907 worker

29171 Supervisor

1891 LogWriter

20328 core

25224 Kafka

31867 ZooKeeperMain

2444 Jps

18957 QuorumPeerMain

32334 nimbus

- Deploy Storm on the mongodb_3 server

[storm@mongodb_3 ~]$ cd ~/apache-storm-1.2.4/conf/

[storm@mongodb_3 conf]$ vim storm.yaml

storm.zookeeper.factory.class: "org.apache.storm.security.auth.SASLAuthenticationProvider"

storm.zookeeper.auth.provider.1: "org.apache.storm.security.auth.digest.DigestSaslServerCallbackHandler"

storm.zookeeper.auth.provider.2: "org.apache.zookeeper.server.auth.SASLAuthenticationProvider"

storm.zookeeper.superACL: "sasl:admin"

storm.zookeeper.servers:
  - "10.130.41.42"
  - "10.130.41.43"
  - "10.130.41.44"

nimbus.seeds: ["10.130.41.42","10.130.41.43","10.130.41.44"]

ui.port: 8888

storm.local.dir: "/home/storm/apache-storm-1.2.4/data"

supervisor.slots.ports:
  - 6700
  - 6701
  - 6702
  - 6703
  - 6704
  - 6705
  - 6706

storm.zookeeper.authProvider.1: org.apache.zookeeper.server.auth.DigestAuthenticationProvider

storm.zookeeper.auth.user: admin

storm.zookeeper.auth.password: Admin@123

storm.zookeeper.auth.digest.1: admin=Admin@123

java.security.auth.login.config: /home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf

worker.childopts: '-Djava.security.auth.login.config=/home/storm/kafka_2.11-2.3.1/config/kafka_server_jaas.conf'

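# YAML allows only one worker.childopts key, and jaas.conf is not created in this guide; the Client section of kafka_server_jaas.conf already carries the ZooKeeper credentials
# worker.childopts: '-Djava.security.auth.login.config=/home/storm/zookeeper-3.6.3/conf/jaas.conf'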

[storm@mongodb_3 ~]$ mkdir -p /home/storm/apache-storm-1.2.4/data

[storm@mongodb_3 ~]$ cd /home/storm/apache-storm-1.2.4/bin

[storm@mongodb_3 bin]$ nohup ./storm nimbus &

[storm@mongodb_3 bin]$ nohup ./storm ui &

[storm@mongodb_3 bin]$ nohup ./storm supervisor &

[storm@mongodb_3 bin]$ jps    # check that all processes are running

1808 worker

1792 LogWriter

1907 worker

29171 Supervisor

1891 LogWriter

20328 core

25224 Kafka

31867 ZooKeeperMain

2444 Jps

18957 QuorumPeerMain

32334 nimbus

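- Submit the topology on the first server, which runs the Storm master node (nimbus)

- Submitting the topology requires the stormStatics.jar package. If a fresh Storm installation package does not include it, ask the test department for stormStatics.jar.

[storm@mongodb_1 bin]$ cd /home/storm/apache-storm-1.2.4/bin

[storm@mongodb_1 bin]$ ./storm jar ../lib/stormStatics.jar com.channelsoft.stormStatics.topology.FastdfsUrlTopology FastdfsUrlTopology mongodb_1,mongodb_2,mongodb_3 2181 ent_record_fastdfs_url /brokers

- Use the Storm UI to check that the newly submitted topology is running normally

- The UI is served on the first server's IP at port 8888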
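The same check can be done without a browser through the Storm UI's REST API. The minimal Python sketch below assumes the endpoint `/api/v1/topology/summary`, which Storm 1.x's UI documents. It assumes the response has a `topologies` list whose entries carry `name` and `status`. The host and topology name come from this guide.

```python
# Sketch: ask the Storm UI REST API whether a topology is ACTIVE.
import json
import urllib.request


def topology_status(summary, name):
    """Return the status string of the named topology, or None if it is absent."""
    for topo in summary.get("topologies", []):
        if topo.get("name") == name:
            return topo.get("status")
    return None


def fetch_summary(host, port=8888, timeout=5.0):
    """GET /api/v1/topology/summary from the Storm UI and decode the JSON body."""
    url = f"http://{host}:{port}/api/v1/topology/summary"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        status = topology_status(fetch_summary("mongodb_1"), "FastdfsUrlTopology")
    except OSError as exc:  # URLError is a subclass of OSError
        print(f"Storm UI not reachable: {exc}")
    else:
        print("FastdfsUrlTopology:", status)
```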

This article was published via mdnice.

相关文章:

storm统计服务开启zookeeper、kafka 、Storm(sasl认证)

部署storm统计服务开启zookeeper、kafka 、Storm(sasl认证) 当前测试验证结果: 单独配置zookeeper 支持acl 设置用户和密码,在storm不修改代码情况下和kafka支持当kafka 开启ACL时,storm 和ccod模块不清楚配置用户和密…...

YOLOv8加入AIFI模块,附带项目源码链接

YOLOv8" 是一个新一代的对象检测框架,属于YOLO(You Only Look Once)系列的最新版本。YOLOv8中提及的AIFI(Attention-based Intrascale Feature Interaction)模块是一种用于增强对象检测性能的机制,它是…...

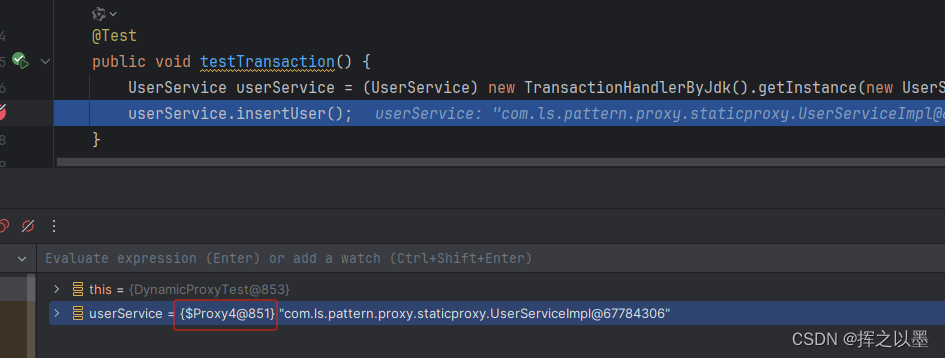

【设计模式】代理模式的实现方式与使用场景

1. 概述 代理模式是一种结构型设计模式,它通过创建一个代理对象来控制对另一个对象的访问,代理对象在客户端和目标对象之间充当了中介的角色,客户端不再直接访问目标对象,而是通过代理对象间接访问目标对象。 那在中间加一层代理…...

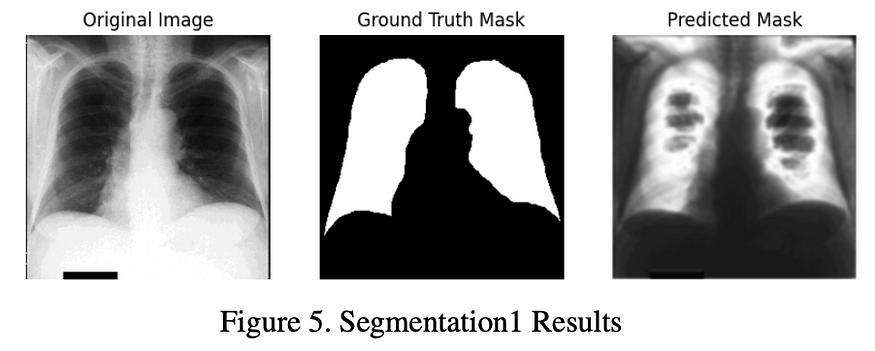

医学图像的图像处理、分割、分类和定位-1

一、说明 本报告全面探讨了应用于医学图像的图像处理和分类技术。开展了四项不同的任务来展示这些方法的多功能性和有效性。任务 1 涉及读取、写入和显示 PNG、JPG 和 DICOM 图像。任务 2 涉及基于定向变化的多类图像分类。此外,我们在任务 3 中包括了胸部 X 光图像…...

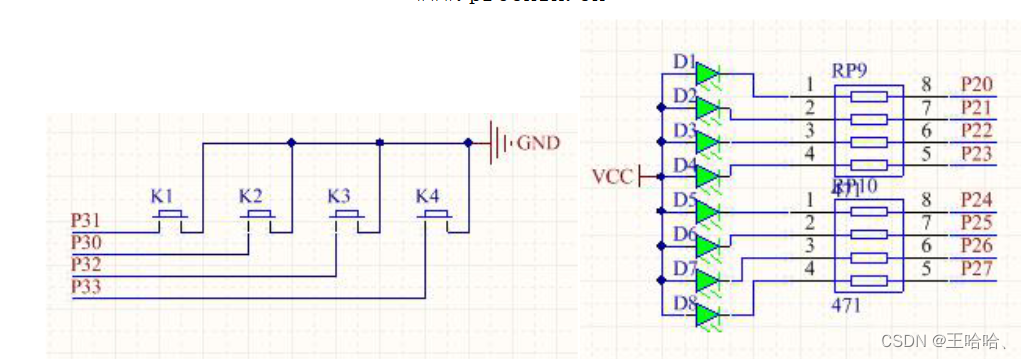

【51单片机】外部中断

0、前言 参考:普中 51 单片机开发攻略 第16章 及17章 1、硬件 2、软件 #include <reg52.h> #include <intrins.h> #include "delayms.h"typedef unsigned char u8; typedef unsigned int u16;sbit led P2^0; sbit key3 P3^2;//外部中断…...

fastapi框架

fastapi框架 fastapi,一个用于构建 API 的现代、快速(高性能)的异步web框架。 fastapi是建立在Starlette和Pydantic基础上的 Pydantic是一个基于Python类型提示来定义数据验证、序列化和文档的库。Starlette是一种轻量级的ASGI框架/工具包…...

2023 年顶级前端工具

谁不喜欢一个好的前端工具?在本综述中,您将找到去年流行的有用的前端工具,它们将帮助您加快开发工作流程。让我们深入了解一下! 在过去的 12 个月里,我在我的时事通讯 Web Tools Weekly 中分享了数百种工具。我为前端…...

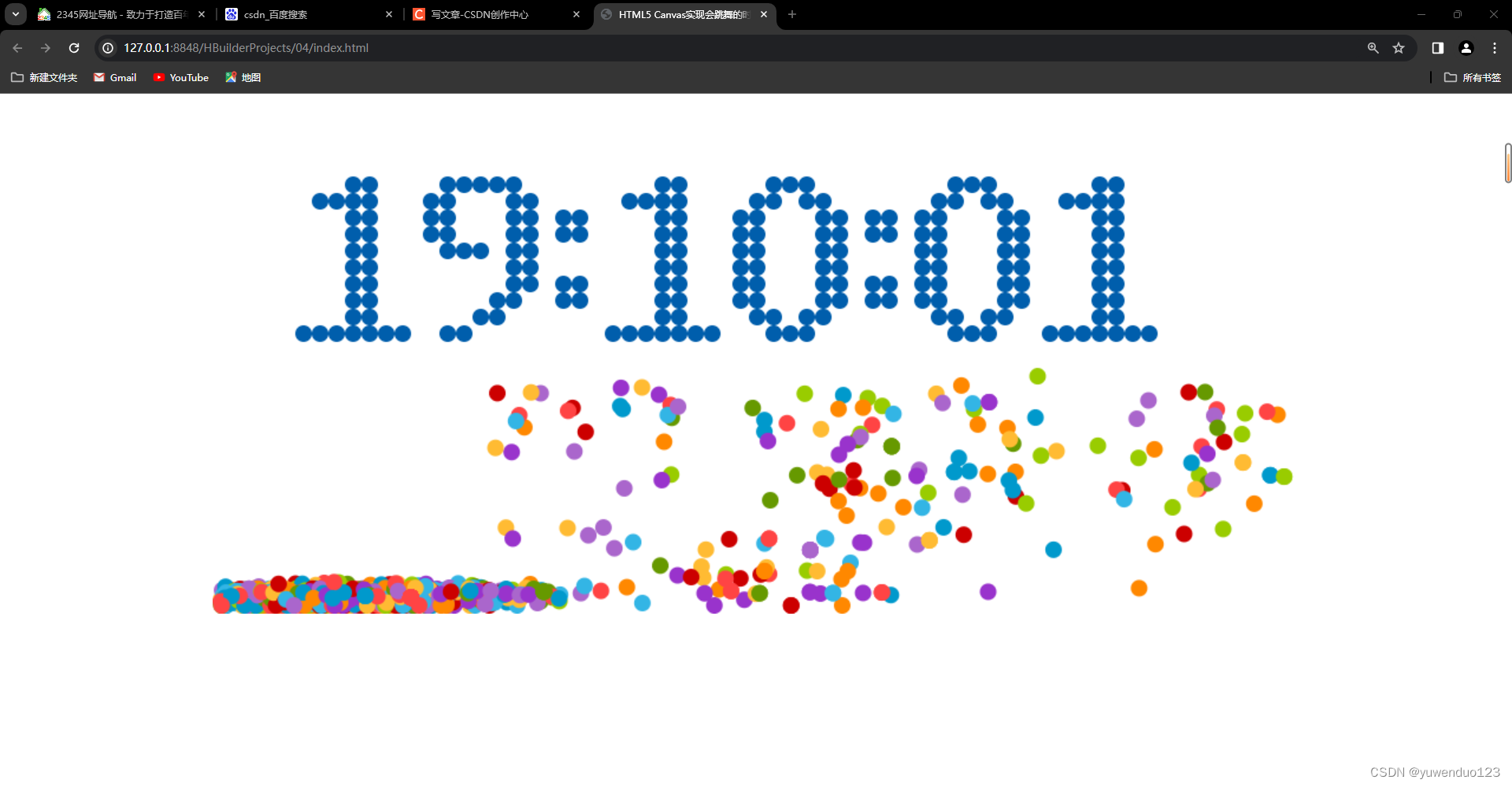

html 会跳舞的时间动画特效

下面是是代码: <!DOCTYPE html PUBLIC "-//W3C//DTD XHTML 1.0 Transitional//EN" "http://www.w3.org/TR/xhtml1/DTD/xhtml1-transitional.dtd"> <html xmlns"http://www.w3.org/1999/xhtml"> <head> <meta h…...

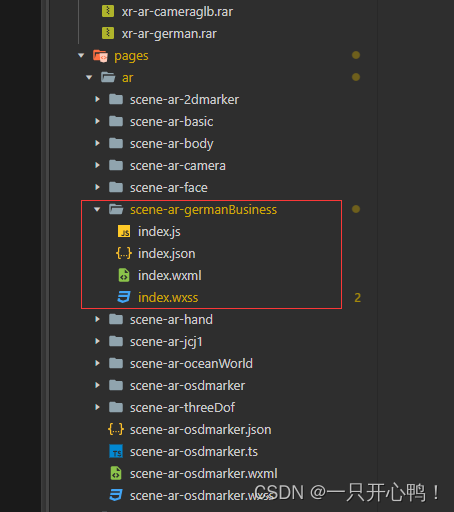

微信AR实现识别手部展示glb模型

1.效果 2.微信小程序手势识别只支持以下几个动作,和识别点位,官方文档 因为AR识别手部一直在识别,所以会出现闪动问题。可以将微信开发者调试基础库设置到3.3.2以上,可能要稳定一些 3.3.代码展示,我用的是微信官方文…...

MYSQL自连接、子查询

自连接: # board表 mysql> select * from board; --------------------------------- | id | name | intro | parent_id | --------------------------------- | 1 | 后端 | NULL | NULL | | 2 | 前端 | NULL | NULL | | 3 | 移…...

docker搭建hbase 全部流程(包含本地API访问)

一、使用docker下载并安装hbase 1、搜索:docker search hbase 2、下载:docker pull harisekhon/hbase(一定要下载这个,下面都是围绕此展开的) 3、启动容器: docker run -d -p 2181:2181 -p 16000:16000…...

Mybatis之关联

一、一对多关联 eg:一个用户对应多个订单 建表语句 CREATE TABLE t_customer (customer_id INT NOT NULL AUTO_INCREMENT, customer_name CHAR(100), PRIMARY KEY (customer_id) ); CREATE TABLE t_order ( order_id INT NOT NULL AUTO_INCREMENT, order_name C…...

Labview实现用户界面切换的几种方式---通过VI间相互调用

在做用户界面时我们的程序往往面对的对象是程序使用者,复杂程序如果放在同一个页面中,往往会导致程序冗长卡顿,此时通过多个VI之间的切换就可以实现多个界面之间的转换,也会显得程序更加的高大上。 本文所有程序均可下载ÿ…...

)

点云从入门到精通技术详解100篇-基于点云和图像融合的智能驾驶目标检测(中)

目录 2.1.2 数据源选型分析 2.2 环境感知系统分析 2.2.1 传感器布置方案分析...

Apache-iotdb物联网数据库的安装及使用

一、简介 >Apache IoTDB (Database for Internet of Things) is an IoT native database with high performance for data management and analysis, deployable on the edge and the cloud. Due to its light-weight architecture, high performance and rich feature set…...

项目管理流程

优质博文 IT-BLOG-CN 一、简介 项目是为提供某项独特产品【独特指:创造出与以往不同或者多个方面与以往有所区别产品或服务,所以日复一日重复的工作就不属于项目】、服务或成果所做的临时性【临时性指:项目有明确的开始时间和明确的结束时间,不会无限期…...

0004.电脑开机提示按F1

常用的电脑主板不知道什么原因,莫名其妙的启动不了了。尝试了很多方法,没有奏效。没有办法我就只能把硬盘拆了下来,装到了另一台电脑上面。但是开机以后却提示F1,如下图: 根据上面的提示,应该是驱动有问题…...

中国电子学会2022年12月份青少年软件编程Scratch图形化等级考试试卷一级真题(含答案)

一、单选题(共25题,共50分) 1. 小明想在开始表演之前向大家问好并做自我介绍,应运行下列哪个程序?(2分) A. B. C. D. 2. 舞台有两个不同的背景,小猫角色的哪个积木能够切换舞台背景?(2分) A. B. C. D. 3. …...

C语言第二弹---C语言基本概念(下)

✨个人主页: 熬夜学编程的小林 💗系列专栏: 【C语言详解】 【数据结构详解】 C语言基本概念 1、字符串和\02、转义字符3、语句和语句分类3.1、空语句3.2、表达式语句3.3、函数调⽤语句3.4、复合语句3.5、控制语句 4、注释4.1、注释的两种形…...

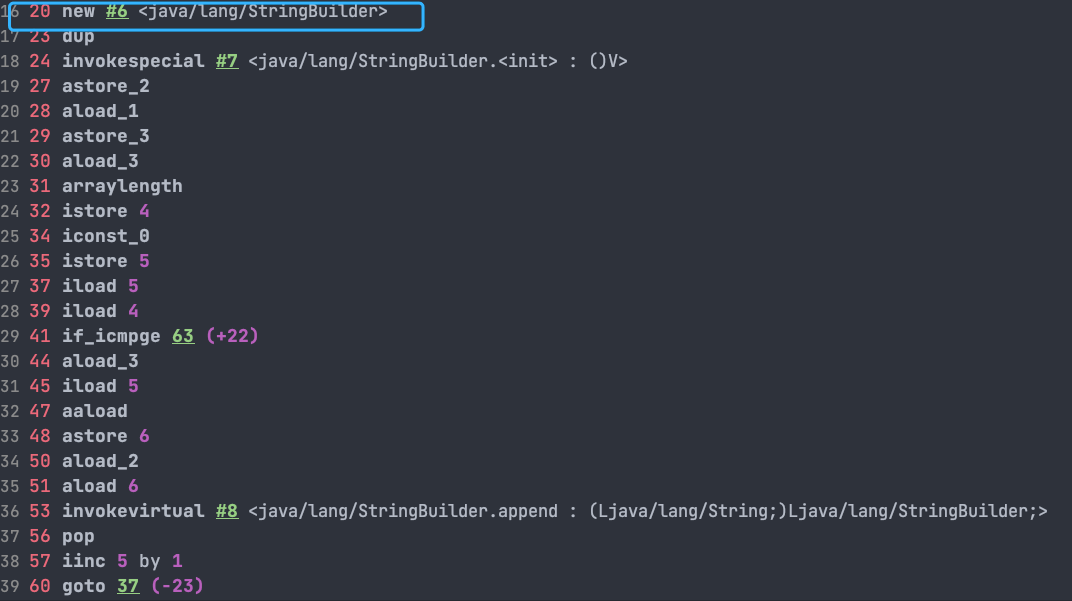

Java 基础面试题 String(一)

Java 基础面试题 String(一) 文章目录 Java 基础面试题 String(一)String、StringBuffer、StringBuilder 的区别?String 为什么是不可变的?字符串拼接用“” 还是 StringBuilder? 文章来自Java Guide 用于学习如有侵…...

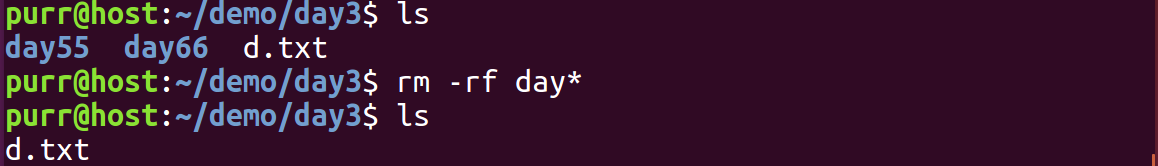

Linux 文件类型,目录与路径,文件与目录管理

文件类型 后面的字符表示文件类型标志 普通文件:-(纯文本文件,二进制文件,数据格式文件) 如文本文件、图片、程序文件等。 目录文件:d(directory) 用来存放其他文件或子目录。 设备…...

使用rpicam-app通过网络流式传输视频)

树莓派超全系列教程文档--(62)使用rpicam-app通过网络流式传输视频

使用rpicam-app通过网络流式传输视频 使用 rpicam-app 通过网络流式传输视频UDPTCPRTSPlibavGStreamerRTPlibcamerasrc GStreamer 元素 文章来源: http://raspberry.dns8844.cn/documentation 原文网址 使用 rpicam-app 通过网络流式传输视频 本节介绍来自 rpica…...

MongoDB学习和应用(高效的非关系型数据库)

一丶 MongoDB简介 对于社交类软件的功能,我们需要对它的功能特点进行分析: 数据量会随着用户数增大而增大读多写少价值较低非好友看不到其动态信息地理位置的查询… 针对以上特点进行分析各大存储工具: mysql:关系型数据库&am…...

Java - Mysql数据类型对应

Mysql数据类型java数据类型备注整型INT/INTEGERint / java.lang.Integer–BIGINTlong/java.lang.Long–––浮点型FLOATfloat/java.lang.FloatDOUBLEdouble/java.lang.Double–DECIMAL/NUMERICjava.math.BigDecimal字符串型CHARjava.lang.String固定长度字符串VARCHARjava.lang…...

成都鼎讯硬核科技!雷达目标与干扰模拟器,以卓越性能制胜电磁频谱战

在现代战争中,电磁频谱已成为继陆、海、空、天之后的 “第五维战场”,雷达作为电磁频谱领域的关键装备,其干扰与抗干扰能力的较量,直接影响着战争的胜负走向。由成都鼎讯科技匠心打造的雷达目标与干扰模拟器,凭借数字射…...

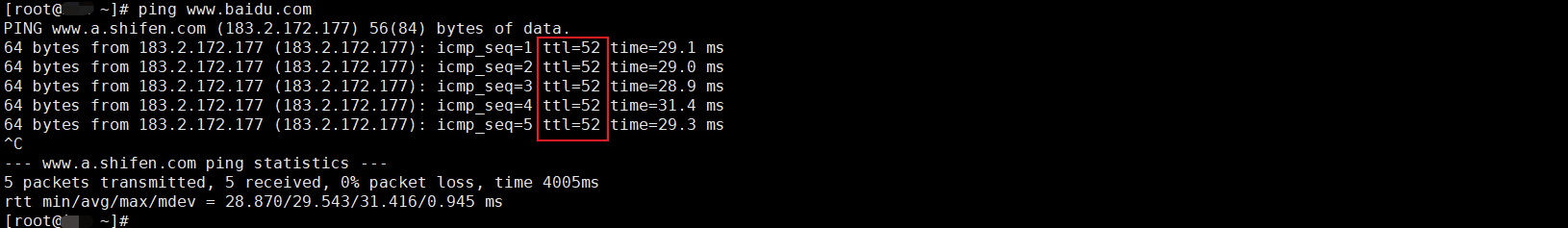

如何理解 IP 数据报中的 TTL?

目录 前言理解 前言 面试灵魂一问:说说对 IP 数据报中 TTL 的理解?我们都知道,IP 数据报由首部和数据两部分组成,首部又分为两部分:固定部分和可变部分,共占 20 字节,而即将讨论的 TTL 就位于首…...

2025年渗透测试面试题总结-腾讯[实习]科恩实验室-安全工程师(题目+回答)

安全领域各种资源,学习文档,以及工具分享、前沿信息分享、POC、EXP分享。不定期分享各种好玩的项目及好用的工具,欢迎关注。 目录 腾讯[实习]科恩实验室-安全工程师 一、网络与协议 1. TCP三次握手 2. SYN扫描原理 3. HTTPS证书机制 二…...

Scrapy-Redis分布式爬虫架构的可扩展性与容错性增强:基于微服务与容器化的解决方案

在大数据时代,海量数据的采集与处理成为企业和研究机构获取信息的关键环节。Scrapy-Redis作为一种经典的分布式爬虫架构,在处理大规模数据抓取任务时展现出强大的能力。然而,随着业务规模的不断扩大和数据抓取需求的日益复杂,传统…...

第一篇:Liunx环境下搭建PaddlePaddle 3.0基础环境(Liunx Centos8.5安装Python3.10+pip3.10)

第一篇:Liunx环境下搭建PaddlePaddle 3.0基础环境(Liunx Centos8.5安装Python3.10pip3.10) 一:前言二:安装编译依赖二:安装Python3.10三:安装PIP3.10四:安装Paddlepaddle基础框架4.1…...

大数据驱动企业决策智能化的路径与实践

📝个人主页🌹:慌ZHANG-CSDN博客 🌹🌹期待您的关注 🌹🌹 一、引言:数据驱动的企业竞争力重构 在这个瞬息万变的商业时代,“快者胜”的竞争逻辑愈发明显。企业如何在复杂环…...