14. rk3588自带的RKNNLite检测yolo模型(python)

首先将文件夹~/rknpu2/runtime/RK3588/Linux/librknn_api/aarch64/下的文件librknnrt.so复制到文件夹/usr/lib/下(该文件夹下原有的文件librknnrt.so是用来测试resnet50模型的,所以要替换成yolo模型的librknnrt.so),如下图所示:

![]()

然后在文件夹/home/rpdzkj/rknn-toolkit-lit2-examples/inference_with_lite/下放入以下3个文件:

bus.jpg best.rknn des.py

其中bus.jpg是要检测的图片,best.rknn是我们转换的yolov5的rknn模型,des.py是要运行的py代码,其代码如下:

# -*- coding: utf-8 -*-

# coding:utf-8import os

import urllib

import traceback

import time

import sys

import numpy as np

import cv2

import platform

from rknnlite.api import RKNNLite

from PIL import Image# Model from https://github.com/airockchip/rknn_model_zoo

RKNN_MODEL = 'best.rknn'

IMG_PATH = './bus.jpg'

DATASET = './dataset.txt'QUANTIZE_ON = True

BOX_THRESH = 0.5

OBJ_THRESH = 0.45

NMS_THRESH = 0.65

IMG_SIZE = 640CLASSES = ("car", "moto", "persons")# decice tree for rk356x/rk3588

DEVICE_COMPATIBLE_NODE = '/proc/device-tree/compatible'def get_host():# get platform and device typesystem = platform.system()machine = platform.machine()os_machine = system + '-' + machineif os_machine == 'Linux-aarch64':try:with open(DEVICE_COMPATIBLE_NODE) as f:device_compatible_str = f.read()host = 'RK3588'except IOError:print('Read device node {} failed.'.format(DEVICE_COMPATIBLE_NODE))exit(-1)else:host = os_machinereturn hostINPUT_SIZE = 640RK3588_RKNN_MODEL = 'best.rknn'def sigmoid(x):return 1 / (1 + np.exp(-x))def xywh2xyxy(x):# Convert [x, y, w, h] to [x1, y1, x2, y2]y = np.copy(x)y[:, 0] = x[:, 0] - x[:, 2] / 2 # top left xy[:, 1] = x[:, 1] - x[:, 3] / 2 # top left yy[:, 2] = x[:, 0] + x[:, 2] / 2 # bottom right xy[:, 3] = x[:, 1] + x[:, 3] / 2 # bottom right yreturn ydef process(input, mask, anchors):anchors = [anchors[i] for i in mask]grid_h, grid_w = map(int, input.shape[0:2])box_confidence = sigmoid(input[..., 4])box_confidence = np.expand_dims(box_confidence, axis=-1)box_class_probs = sigmoid(input[..., 5:])box_xy = sigmoid(input[..., :2]) * 2 - 0.5col = np.tile(np.arange(0, grid_w), grid_w).reshape(-1, grid_w)row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_h)col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)grid = np.concatenate((col, row), axis=-1)box_xy += gridbox_xy *= int(IMG_SIZE/grid_h)box_wh = pow(sigmoid(input[..., 2:4]) * 2, 2)box_wh = box_wh * anchorsbox = np.concatenate((box_xy, box_wh), axis=-1)return box, box_confidence, box_class_probsdef filter_boxes(boxes, box_confidences, box_class_probs):"""Filter boxes with box threshold. It's a bit different with origin yolov5 post process!# Argumentsboxes: ndarray, boxes of objects.box_confidences: ndarray, confidences of objects.box_class_probs: ndarray, class_probs of objects.# Returnsboxes: ndarray, filtered boxes.classes: ndarray, classes for boxes.scores: ndarray, scores for boxes."""boxes = boxes.reshape(-1, 4)box_confidences = box_confidences.reshape(-1)box_class_probs = box_class_probs.reshape(-1, box_class_probs.shape[-1])_box_pos = np.where(box_confidences >= OBJ_THRESH)boxes = boxes[_box_pos]box_confidences = box_confidences[_box_pos]box_class_probs = box_class_probs[_box_pos]class_max_score = np.max(box_class_probs, axis=-1)classes = np.argmax(box_class_probs, axis=-1)_class_pos = np.where(class_max_score >= OBJ_THRESH)boxes = boxes[_class_pos]classes = classes[_class_pos]scores = (class_max_score* box_confidences)[_class_pos]return boxes, classes, scoresdef nms_boxes(boxes, scores):"""Suppress non-maximal boxes.# Argumentsboxes: ndarray, boxes of objects.scores: ndarray, scores of objects.# Returnskeep: ndarray, index of effective boxes."""x = boxes[:, 0]y = boxes[:, 1]w = boxes[:, 2] - boxes[:, 0]h = boxes[:, 3] - boxes[:, 1]areas = w * horder = scores.argsort()[::-1]keep = []while order.size > 0:i = order[0]keep.append(i)xx1 = np.maximum(x[i], x[order[1:]])yy1 = np.maximum(y[i], y[order[1:]])xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)inter = w1 * h1ovr = inter / (areas[i] + areas[order[1:]] - inter)inds = np.where(ovr <= NMS_THRESH)[0]order = order[inds + 1]keep = np.array(keep)return keepdef yolov5_post_process(input_data):masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]anchors = [[15,20], [20, 75], [28, 25], [31,136], [44,42],[53,215], [75,76], [98,421], [148,226]]#anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],#[59, 119], [116, 90], [156, 198], [373, 326]]boxes, classes, scores = [], [], []for input, mask in zip(input_data, masks):b, c, s = process(input, mask, anchors)b, c, s = filter_boxes(b, c, s)boxes.append(b)classes.append(c)scores.append(s)boxes = np.concatenate(boxes)boxes = xywh2xyxy(boxes)classes = np.concatenate(classes)scores = np.concatenate(scores)nboxes, nclasses, nscores = [], [], []for c in set(classes):inds = np.where(classes == c)b = boxes[inds]c = classes[inds]s = scores[inds]keep = nms_boxes(b, s)nboxes.append(b[keep])nclasses.append(c[keep])nscores.append(s[keep])if not nclasses and not nscores:return None, None, Noneboxes = np.concatenate(nboxes)classes = np.concatenate(nclasses)scores = np.concatenate(nscores)return boxes, classes, scoresdef draw(image, boxes, scores, classes):"""Draw the boxes on the image.# Argument:image: original image.boxes: ndarray, boxes of objects.classes: ndarray, classes of objects.scores: ndarray, scores of objects.all_classes: all classes name."""for box, score, cl in zip(boxes, scores, classes):top, left, right, bottom = boxprint('class: {}, score: {}'.format(CLASSES[cl], score))print('box coordinate left,top,right,down: [{}, {}, {}, {}]'.format(top, left, right, bottom))top = int(top)left = int(left)right = int(right)bottom = int(bottom)cv2.rectangle(image, (top, left), (right, bottom), (255, 0, 0), 2)cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),(top, left - 6),cv2.FONT_HERSHEY_SIMPLEX,0.6, (0, 0, 255), 2)def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):# Resize and pad image while meeting stride-multiple constraintsshape = im.shape[:2] # current shape [height, width]if isinstance(new_shape, int):new_shape = (new_shape, new_shape)# Scale ratio (new / old)r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])# Compute paddingratio = r, r # width, height ratiosnew_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1] # wh paddingdw /= 2 # divide padding into 2 sidesdh /= 2if shape[::-1] != new_unpad: # resizeim = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))left, right = int(round(dw - 0.1)), int(round(dw + 0.1))im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color) # add borderreturn im, ratio, (dw, dh)if __name__ == '__main__':host_name = get_host()rknn_model = RK3588_RKNN_MODELrknn_lite = RKNNLite()# load RKNN modelprint('--> Load RKNN model')ret = rknn_lite.load_rknn(rknn_model)if ret != 0:print('Load RKNN model failed')exit(ret)print('done')#####ori_img = cv2.imread('./bus.jpg')######img = cv2.cvtColor(ori_img, cv2.COLOR_BGR2RGB)# init runtime environmentprint('--> Init runtime environment')# run on RK356x/RK3588 with Debian OS, do not need specify target.ret = rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_0)if ret != 0:print('Init runtime environment failed')exit(ret)print('done')# Set inputsimg = cv2.imread(IMG_PATH)img, ratio, (dw, dh) = letterbox(img, new_shape=(IMG_SIZE, IMG_SIZE))img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)img = cv2.resize(img, (IMG_SIZE, IMG_SIZE))# Inferenceprint('--> Running model')outputs = rknn_lite.inference(inputs=[img])#np.save('./onnx_yolov5_0.npy', outputs[0])#np.save('./onnx_yolov5_1.npy', outputs[1])#np.save('./onnx_yolov5_2.npy', outputs[2])print('done')# post processinput0_data = outputs[0]input1_data = outputs[1]input2_data = outputs[2]input0_data = input0_data.reshape([3, -1]+list(input0_data.shape[-2:]))input1_data = input1_data.reshape([3, -1]+list(input1_data.shape[-2:]))input2_data = input2_data.reshape([3, -1]+list(input2_data.shape[-2:]))input_data = list()input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))boxes, classes, scores = yolov5_post_process(input_data)img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)if boxes is not None:draw(img_1, boxes, scores, classes)cv2.imwrite('result.jpg', img_1)rknn_lite.release()代码运行结果如下:

这里没有出车,是我训练的模型问题。

大家对以上代码是不是很熟悉,其实这部分代码的很多函数都是将onnx文件转换为rknn文件的代码,只是把它的from rknn.api import RKNN修改为from rknnlite.api import RKNNLite,并且将载入rknn模型的内容修改即可,数据的处理完全没变。

用这个代码的好处是,我们以后对相机进行检测目标时,只需要下载一次rknn模型,后续只需要处理图片即可。

二、下面再结合lidar数据的获取,我们可以同时获取雷达数据和检测rknn模型,代码如下:

#!/usr/bin/env python

# -*- coding: utf-8 -*-

# coding:utf-8

import cv2import rospy

from sensor_msgs.msg import PointCloud2

import sensor_msgs.point_cloud2 as pc2

from std_msgs.msg import Header

from visualization_msgs.msg import Marker, MarkerArray

from geometry_msgs.msg import Point#import torch

import numpy as np

import sys

import time,datetime

print(sys.version)

#from recon_barriers_model import recon_barriers

#from pclpy import pcl

from queue import Queue

import open3d as o3dimport matplotlib

import matplotlib.pyplot as plt

from mpl_toolkits.mplot3d import Axes3D

#%matplotlib#from create_date_file import data_time

import threading

import time,datetime

import os#先初始化ros,再from rknnlite.api import RKNNLite,否则会报错

rospy.init_node('lidar_node')import os

import urllib

import traceback

import sys

import numpy as np

import platform

from rknnlite.api import RKNNLite

from PIL import Image#######################################################################################################

#1.==================================rknn模型检测=====================================================#

#######################################################################################################QUANTIZE_ON = True

BOX_THRESH = 0.5

OBJ_THRESH = 0.45

NMS_THRESH = 0.65

IMG_SIZE = 640CLASSES = ("car", "moto", "persons")# decice tree for rk356x/rk3588

DEVICE_COMPATIBLE_NODE = '/proc/device-tree/compatible'def get_host():# get platform and device typesystem = platform.system()machine = platform.machine()os_machine = system + '-' + machineif os_machine == 'Linux-aarch64':try:with open(DEVICE_COMPATIBLE_NODE) as f:device_compatible_str = f.read()host = 'RK3588'except IOError:print('Read device node {} failed.'.format(DEVICE_COMPATIBLE_NODE))exit(-1)else:host = os_machinereturn hostINPUT_SIZE = 640RK3588_RKNN_MODEL = 'best.rknn'def sigmoid(x):return 1 / (1 + np.exp(-x))def xywh2xyxy(x):# Convert [x, y, w, h] to [x1, y1, x2, y2]y = np.copy(x)y[:, 0] = x[:, 0] - x[:, 2] / 2 # top left xy[:, 1] = x[:, 1] - x[:, 3] / 2 # top left yy[:, 2] = x[:, 0] + x[:, 2] / 2 # bottom right xy[:, 3] = x[:, 1] + x[:, 3] / 2 # bottom right yreturn ydef process(input, mask, anchors):anchors = [anchors[i] for i in mask]grid_h, grid_w = map(int, input.shape[0:2])box_confidence = sigmoid(input[..., 4])box_confidence = np.expand_dims(box_confidence, axis=-1)box_class_probs = sigmoid(input[..., 5:])box_xy = sigmoid(input[..., :2]) * 2 - 0.5col = np.tile(np.arange(0, grid_w), grid_w).reshape(-1, grid_w)row = np.tile(np.arange(0, grid_h).reshape(-1, 1), grid_h)col = col.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)row = row.reshape(grid_h, grid_w, 1, 1).repeat(3, axis=-2)grid = np.concatenate((col, row), axis=-1)box_xy += gridbox_xy *= int(IMG_SIZE/grid_h)box_wh = pow(sigmoid(input[..., 2:4]) * 2, 2)box_wh = box_wh * anchorsbox = np.concatenate((box_xy, box_wh), axis=-1)return box, box_confidence, box_class_probsdef filter_boxes(boxes, box_confidences, box_class_probs):"""Filter boxes with box threshold. It's a bit different with origin yolov5 post process!# Argumentsboxes: ndarray, boxes of objects.box_confidences: ndarray, confidences of objects.box_class_probs: ndarray, class_probs of objects.# Returnsboxes: ndarray, filtered boxes.classes: ndarray, classes for boxes.scores: ndarray, scores for boxes."""boxes = boxes.reshape(-1, 4)box_confidences = box_confidences.reshape(-1)box_class_probs = box_class_probs.reshape(-1, box_class_probs.shape[-1])_box_pos = np.where(box_confidences >= OBJ_THRESH)boxes = boxes[_box_pos]box_confidences = box_confidences[_box_pos]box_class_probs = box_class_probs[_box_pos]class_max_score = np.max(box_class_probs, axis=-1)classes = np.argmax(box_class_probs, axis=-1)_class_pos = np.where(class_max_score >= OBJ_THRESH)boxes = boxes[_class_pos]classes = classes[_class_pos]scores = (class_max_score* box_confidences)[_class_pos]return boxes, classes, scoresdef nms_boxes(boxes, scores):"""Suppress non-maximal boxes.# Argumentsboxes: ndarray, boxes of objects.scores: ndarray, scores of objects.# Returnskeep: ndarray, index of effective boxes."""x = boxes[:, 0]y = boxes[:, 1]w = boxes[:, 2] - boxes[:, 0]h = boxes[:, 3] - boxes[:, 1]areas = w * horder = scores.argsort()[::-1]keep = []while order.size > 0:i = order[0]keep.append(i)xx1 = np.maximum(x[i], x[order[1:]])yy1 = np.maximum(y[i], y[order[1:]])xx2 = np.minimum(x[i] + w[i], x[order[1:]] + w[order[1:]])yy2 = np.minimum(y[i] + h[i], y[order[1:]] + h[order[1:]])w1 = np.maximum(0.0, xx2 - xx1 + 0.00001)h1 = np.maximum(0.0, yy2 - yy1 + 0.00001)inter = w1 * h1ovr = inter / (areas[i] + areas[order[1:]] - inter)inds = np.where(ovr <= NMS_THRESH)[0]order = order[inds + 1]keep = np.array(keep)return keepdef yolov5_post_process(input_data):masks = [[0, 1, 2], [3, 4, 5], [6, 7, 8]]anchors = [[15,20], [20, 75], [28, 25], [31,136], [44,42],[53,215], [75,76], [98,421], [148,226]]#anchors = [[10, 13], [16, 30], [33, 23], [30, 61], [62, 45],#[59, 119], [116, 90], [156, 198], [373, 326]]boxes, classes, scores = [], [], []for input, mask in zip(input_data, masks):b, c, s = process(input, mask, anchors)b, c, s = filter_boxes(b, c, s)boxes.append(b)classes.append(c)scores.append(s)boxes = np.concatenate(boxes)boxes = xywh2xyxy(boxes)classes = np.concatenate(classes)scores = np.concatenate(scores)nboxes, nclasses, nscores = [], [], []for c in set(classes):inds = np.where(classes == c)b = boxes[inds]c = classes[inds]s = scores[inds]keep = nms_boxes(b, s)nboxes.append(b[keep])nclasses.append(c[keep])nscores.append(s[keep])if not nclasses and not nscores:return None, None, Noneboxes = np.concatenate(nboxes)classes = np.concatenate(nclasses)scores = np.concatenate(nscores)return boxes, classes, scoresdef draw(image, boxes, scores, classes):"""Draw the boxes on the image.# Argument:image: original image.boxes: ndarray, boxes of objects.classes: ndarray, classes of objects.scores: ndarray, scores of objects.all_classes: all classes name."""for box, score, cl in zip(boxes, scores, classes):top, left, right, bottom = boxprint('class: {}, score: {}'.format(CLASSES[cl], score))print('box coordinate left,top,right,down: [{}, {}, {}, {}]'.format(top, left, right, bottom))top = int(top)left = int(left)right = int(right)bottom = int(bottom)cv2.rectangle(image, (top, left), (right, bottom), (255, 0, 0), 2)cv2.putText(image, '{0} {1:.2f}'.format(CLASSES[cl], score),(top, left - 6),cv2.FONT_HERSHEY_SIMPLEX,0.6, (0, 0, 255), 2)def letterbox(im, new_shape=(640, 640), color=(0, 0, 0)):# Resize and pad image while meeting stride-multiple constraintsshape = im.shape[:2] # current shape [height, width]if isinstance(new_shape, int):new_shape = (new_shape, new_shape)# Scale ratio (new / old)r = min(new_shape[0] / shape[0], new_shape[1] / shape[1])# Compute paddingratio = r, r # width, height ratiosnew_unpad = int(round(shape[1] * r)), int(round(shape[0] * r))dw, dh = new_shape[1] - new_unpad[0], new_shape[0] - new_unpad[1] # wh paddingdw /= 2 # divide padding into 2 sidesdh /= 2if shape[::-1] != new_unpad: # resizeim = cv2.resize(im, new_unpad, interpolation=cv2.INTER_LINEAR)top, bottom = int(round(dh - 0.1)), int(round(dh + 0.1))left, right = int(round(dw - 0.1)), int(round(dw + 0.1))im = cv2.copyMakeBorder(im, top, bottom, left, right, cv2.BORDER_CONSTANT, value=color) # add borderreturn im, ratio, (dw, dh)host_name = get_host()

rknn_model = RK3588_RKNN_MODELrknn_lite = RKNNLite()# load RKNN model

print('--> Load RKNN model')

ret = rknn_lite.load_rknn(rknn_model)

if ret != 0:print('Load RKNN model failed')exit(ret)

print('done')# init runtime environment

print('--> Init runtime environment')# run on RK356x/RK3588 with Debian OS, do not need specify target.

ret = rknn_lite.init_runtime(core_mask=RKNNLite.NPU_CORE_0)

if ret != 0:print('Init runtime environment failed')exit(ret)

print('done')#######################################################################################################

#1.==================================rknn模型检测=====================================================#

##############################################################################################################################################################################################################

#2.==================================雷达数据获取=====================================================#

########################################################################################################2.打开相机

cap = cv2.VideoCapture("/dev/video61")#1.根据时间自动创建文件夹

def data_time(root_path="img_lidar_save/"):# 1.鑾峰彇褰撳墠鏃堕棿瀛楃涓叉垨鏃堕棿鎴筹紙閮藉彲绮剧‘鍒板井绉掞級start_time=datetime.datetime.now().strftime(f'%Y-%m-%d %H:%M:%S{r".%f"}')times=start_time.split(" ")# 2.data_files锛氭牴鎹棩鏈熻幏鍙栬鍒涘缓鐨勬枃浠跺す鍚嶇О锛屾瘮濡備粖澶╂槸2023_12_07data_files=times[0]#3.鑾峰彇鏂囦欢澶硅矾寰勶細img_lidar_save/2023_12_07file_path=root_path+data_filescamera_file = file_path + "/" + "camera_data"lidar_file = file_path + "/" + "lidar_data"#4.濡傛灉浠婂ぉ杩樻病鏈夋枃浠跺す锛屽垯鍒涘缓鏂囦欢澶?鏂囦欢澶瑰悕绉颁负 2023_12_07if not os.path.exists(file_path):os.makedirs(file_path)#5.寤虹珛camera鍜宭idar鏂囦欢澶癸紝瀛樺彇鍚勮嚜鐨勬暟鎹?if not os.path.exists(camera_file):os.makedirs(camera_file)if not os.path.exists(lidar_file):os.makedirs(lidar_file)#6.寤虹珛鍚勮嚜鐨勫瓨鍙栧浘鐗囧拰瑙嗛鐨勬枃浠跺すimg_file=camera_file+ "/" +"image"vedios=camera_file+ "/" +"vedios"lidar_videos=lidar_file +"/" +"vedios"lidar_pcd=lidar_file +"/" +"image"if not os.path.exists(img_file):os.makedirs(img_file)if not os.path.exists(vedios):os.makedirs(vedios)if not os.path.exists(lidar_videos):os.makedirs(lidar_videos)if not os.path.exists(lidar_pcd):os.makedirs(lidar_pcd)return img_file,vedios,lidar_videos,lidar_pcd#1.聚类的数据处理

def cluster(points, radius=0.2):"""points: pointcloudradius: max cluster range"""items = []while len(points)>1:item = np.array([points[0]])base = points[0]points = np.delete(points, 0, 0)distance = (points[:,0]-base[0])**2+(points[:,1]-base[1])**2+(points[:,2]-base[2])**2infected_points = np.where(distance <= radius**2)item = np.append(item, points[infected_points], axis=0)border_points = points[infected_points]points = np.delete(points, infected_points, 0)while len(border_points) > 0:border_base = border_points[0]border_points = np.delete(border_points, 0, 0)border_distance = (points[:,0]-border_base[0])**2+(points[:,1]-border_base[1])**2border_infected_points = np.where(border_distance <= radius**2)item = np.append(item, points[border_infected_points], axis=0)border_points = points[border_infected_points]points = np.delete(points, border_infected_points, 0)items.append(item)return items#2.保存点云

def save_pointcloud(pointcloud_np, file_name="pointcloud.pcd"):point_cloud_o3d = o3d.geometry.PointCloud()point_cloud_o3d.points = o3d.utility.Vector3dVector(pointcloud_np[:, 0:3])o3d.io.write_point_cloud(file_name, point_cloud_o3d, write_ascii=False, compressed=True)#3.点云数据的处理

def lidars(msg,pcd_path):#4.点云数据的获取pcl_msg = pc2.read_points(msg, skip_nans=False, field_names=("x", "y", "z", "intensity","ring"))#5.点云数据的过滤np_p_2 = np.array(list(pcl_msg), dtype=np.float32)#print(np_p_2)#6.将过滤后的点云数据保存为pcd文件#print(pcd_path)save_pointcloud(np_p_2, file_name=pcd_path)#7.根据条件过滤点云数据ss=np.where([s[0]>2 and s[1]<3 and s[-1]>-3 and s[2]>-0.5 for s in np_p_2])ans=np_p_2[ss]#8.点云的聚类算法 item=cluster(ans, radius=0.2)m_item=[]#9.求每个类的均值,之后与目标进行匹配#hh=np.where([s.shape[0]>20 for s in item])#print("//",len(hh),len(item))for items in item:#print("..............",items.shape)#x,y,z=int(items[:,:1].sum().mean()) x,y,z,r=items[:,:1].mean(),items[:,1:2].mean(),items[:,2:3].mean(),items[:,3:4].mean()m_item.append([x,y,z]) #4.相机图片数据的处理

def images(frame,jpg_path):#1.保存jpg文件cv2.imwrite(jpg_path,frame)#5.对相机图片和点云数据的汇总处理

def velo_callback(msg):#1.自动生成当天保存文件的文件夹及保存文件的路径#img_file:存放图片的路径(jpg或者png文件)#vedios:存放相机视频的路径(mp4文件)#lidar_videos:存放雷达视频的路径(bag文件)#lidar_pcd:存放点云数据的pcd文件的视频(pcd文件)img_file,vedios,lidar_videos,lidar_pcd=data_time(root_path="img_lidar_save/")vedio_time=day_time()vedio_path=vedios+"/"+vedio_time+".avi"lidar_path=lidar_videos+"/"+vedio_time+".bag"ret, frame = cap.read()frame = cv2.rotate(frame, 0, dst=None) # 视频是倒着的,要对视频进行两次90度的翻转frame = cv2.rotate(frame, 0, dst=None) # 视频是倒着的,要对视频进行两次90度的翻转########################################################################################################1.==================================rknn模型检测=====================================================########################################################################################################img, ratio, (dw, dh) = letterbox(frame, new_shape=(IMG_SIZE, IMG_SIZE))img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)img = cv2.resize(img, (IMG_SIZE, IMG_SIZE))# Inferenceprint('--> Running model')outputs = rknn_lite.inference(inputs=[img])#np.save('./onnx_yolov5_0.npy', outputs[0])#np.save('./onnx_yolov5_1.npy', outputs[1])#np.save('./onnx_yolov5_2.npy', outputs[2])print('done')# post processinput0_data = outputs[0]input1_data = outputs[1]input2_data = outputs[2]input0_data = input0_data.reshape([3, -1]+list(input0_data.shape[-2:]))input1_data = input1_data.reshape([3, -1]+list(input1_data.shape[-2:]))input2_data = input2_data.reshape([3, -1]+list(input2_data.shape[-2:]))input_data = list()input_data.append(np.transpose(input0_data, (2, 3, 0, 1)))input_data.append(np.transpose(input1_data, (2, 3, 0, 1)))input_data.append(np.transpose(input2_data, (2, 3, 0, 1)))boxes, classes, scores = yolov5_post_process(input_data)img_1 = cv2.cvtColor(img, cv2.COLOR_RGB2BGR)if boxes is not None:draw(img_1, boxes, scores, classes)cv2.imwrite('result.jpg', img_1)cv2.imshow("src_image", img_1)cv2.waitKey(1)########################################################################################################1.==================================rknn模型检测=====================================================#########################################################################################################显示视频#cv2.imshow("src_image", frame)#cv2.waitKey(1)#3.获取实时时间,作为保存jpg和pcd文件的名称now_time=day_time()print("------>",now_time)#4.获得存取pcd文件和jpg文件的路径pcd_path=lidar_pcd+"/"+now_time+".pcd"jpg_path=img_file+"/"+now_time+".jpg"#4.lidar和images数据的处理及保存,两个函数放在两个线程同时运行lidars(msg,pcd_path)#lidar数据的处理及保存images(frame,jpg_path)#images数据的处理及保存#根据时间给jpg和pcd文件命名

def day_time():start_time=datetime.datetime.now().strftime(f'%Y-%m-%d %H:%M:%S{r".%f"}')times=start_time.split(" ")mins=times[1].split(":")day_names=mins[0]+"_"+mins[1]+"_"+mins[2][:2]+"_"+mins[2][3:5]return day_namesif __name__ == '__main__':sub_ = rospy.Subscriber("livox/lidar", PointCloud2,velo_callback)print("ros_node has started!")rospy.spin()rknn_lite.release()注意,在该文件的同栏目需要建立一个文件夹 img_lidar_save,这个文件夹下面保存相机的jpg文件和pcd文件。效果如下:

以上是根据当天的实际时间自动保存的jig和pcd文件。下面是rknn模型检测的结果。

相关文章:

14. rk3588自带的RKNNLite检测yolo模型(python)

首先将文件夹~/rknpu2/runtime/RK3588/Linux/librknn_api/aarch64/下的文件librknnrt.so复制到文件夹/usr/lib/下(该文件夹下原有的文件librknnrt.so是用来测试resnet50模型的,所以要替换成yolo模型的librknnrt.so),如下图所示&am…...

心理辅导|高校心理教育辅导系统|基于Springboot的高校心理教育辅导系统设计与实现(源码+数据库+文档)

高校心理教育辅导系统目录 目录 基于Springboot的高校心理教育辅导系统设计与实现 一、前言 二、系统功能设计 三、系统实现 1、学生功能模块的实现 (1)学生登录界面 (2)留言反馈界面 (3)试卷列表界…...

字符串方法挑战

题目 编写一个程序,接收一个使用下划线命名法(underscore_case)编写的变量名列表,并将它们转换为驼峰命名法(camelCase)。 输入将来自插入到DOM中的文本区域(请参见下面的代码)&…...

vivado FIR Filters

Vivado合成直接从RTL中推导出乘加级联来组成FIR滤波器。这种滤波器有几种可能的实现方式;一个例子是收缩滤波器在7系列DSP48E1 Slice用户指南(UG479)中进行了描述,并在8抽头偶数中显示对称收缩FIR(Verilog)…...

c# Contains方法-检查集合中是否包含指定的元素

Contains 是 .NET 集合框架中许多集合类(如 List、Array、HashSet 等)提供的一种方法,用于检查集合中是否包含指定的元素。对于 List<int> 类型,Contains 方法会遍历列表中的所有元素,并判断传入的方法参数是否存…...

【开源】在线办公系统 JAVA+Vue.js+SpringBoot+MySQL

目录 1 功能模块1.1 员工管理模块1.2 邮件管理模块1.3 人事档案模块1.4 公告管理模块 2 系统展示3 核心代码3.1 查询用户3.2 导入用户3.3 新增公告 4 免责声明 本文项目编号: T 001 。 \color{red}{本文项目编号:T001。} 本文项目编号:T001。…...

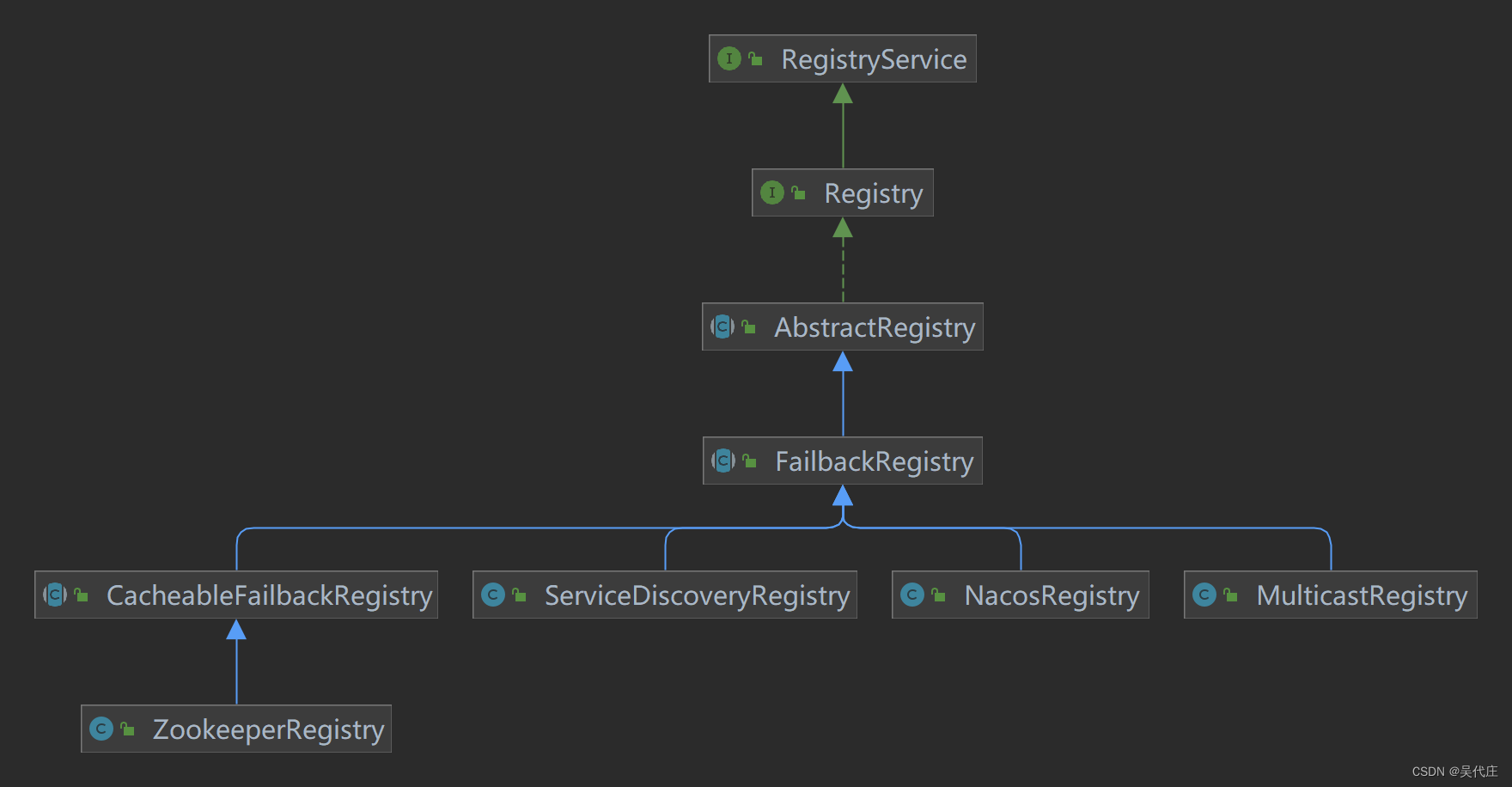

dubbo源码中设计模式——注册中心中工厂模式的应用

工厂模式的介绍 工厂模式提供了一种创建对象的方式,而无需指定要创建的具体类。 工厂模式属于创建型模式,它在创建对象时提供了一种封装机制,将实际创建对象的代码与使用代码分离。 应用场景:定义一个创建对象的接口࿰…...

T-Dongle-S3开发笔记——移植LVGL

添加lvgl组件 idf.py add-dependency lvgl/lvgl>8.* 新建终端执行命令后出现了新的文件: 清除再编译后才会出现lvgl库 优化为本地组件 以上方式修改了组件文件内容重新编译后文件又会变回去。 所以我们要把lvgl变成本地组件 1、要把 idf_component.yml 文…...

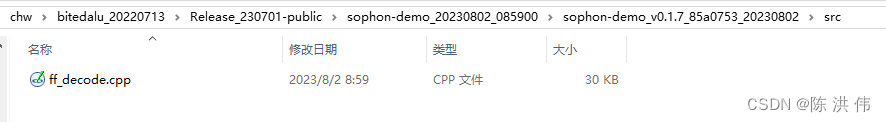

SOPHON算能科技新版SDK环境配置以及C++ demo使用过程

目录 1 SDK大包下载 2 获取SDK中的库文件和头文件 2.1 注意事项 2.2 交叉编译环境搭建 2.2.1 首先安装工具链 2.2.2 解压sophon-img包里的libsophon_soc__aarch64.tar.gz,将lib和include的所有内容拷贝到soc-sdk文件夹 2.2.3 解压sophon-mw包里的sophon-mw-s…...

Linux-SSH被攻击-解决方案

文章目录 一、检查攻击来源二、防范措施三、Fail2banfirewallcmd-ipset安装Fail2ban:安装firewalld:配置Fail2ban:配置firewalld以使用fail2ban:测试配置: SSH端口暴露在公网上很可能被黑客扫描,并尝试登入…...

)

第1章 计算机系统概述(2)

1.4操作系统结构 随着操作系统功能的不断增多和代码规模的不断变大,合理的操作系统结构,对于降低操作系统复杂度,提升操作系统安全与可靠性来说变得尤为重要。 分层法: 优点: 1.便于系统调试和验证,简化系统的设计和实现 2.易于扩充和维护 缺点: 1.合理定义各层较难(依赖关系比…...

【Java中23种设计模式-单例模式--饿汉式】

加油,新时代打工人! 简单粗暴,直接上代码。 23种设计模式定义介绍 Java中23种设计模式-单例模式 Java中23种设计模式-单例模式2–懒汉式线程不安全 Java中23种设计模式-单例模式2–懒汉式2线程安全 package mode;/*** author wenhao* dat…...

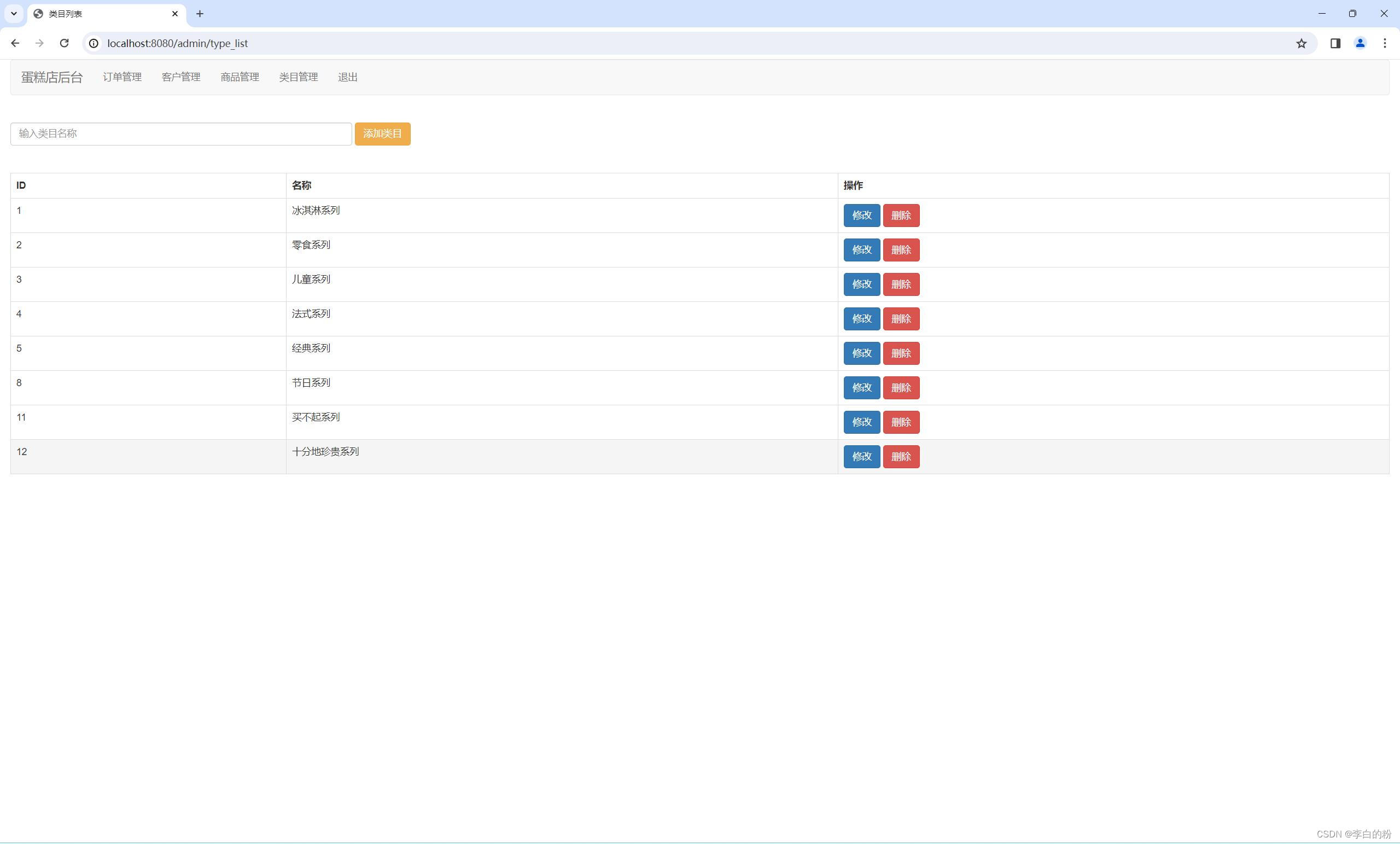

基于JavaWeb实现的在线蛋糕商城

一、系统架构 前端:jsp | bootstrap | js | css 后端:servlet | mybatis 环境:jdk1.7 | mysql | maven | tomcat 二、代码及数据库 三、功能介绍 01. web页-首页 02. web页-商品分类 03. web页-热销 04. web页-新品 05. w…...

【Pytorch】各种维度变换函数总结

维度变换千万不要混着用,尤其是交换维度的transpose和更改观察视角的view或者reshape!混用了以后虽然不会报错,但是数据是乱的, 建议用einops中的rearrange,符合人的直观,不容易出错。 一个例子: >>…...

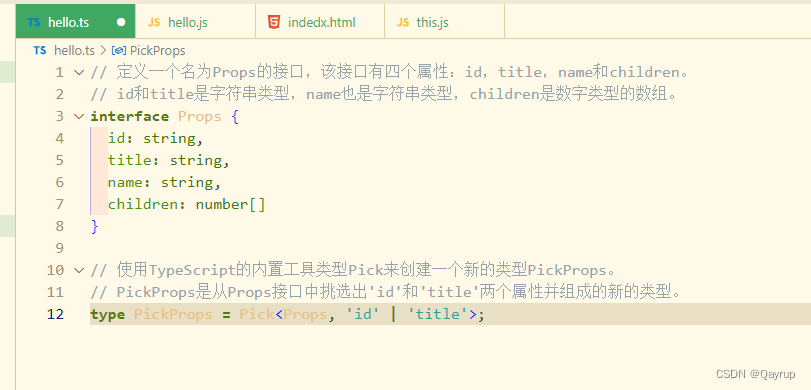

typescript 泛型详解

typescript 泛型 泛型是可以在保证类型安全前提下,让函数等与多种类型一起工作,从而实现复用,常用于: 函数、接口、class中。 需求:创建一个id 函数,传入什么数据就返回该数据本身(也就是说,参数和返回值类型相同)。 …...

【Ubuntu内核】解决Ubuntu 20.04更新内核后无法联网的问题

最近在使用Ubuntu 20.04时,在更新内核后无法进行WiFi联网。我的电脑上装载的是AX211型号的无线网卡,之前安装了相应的驱动,并且一直正常使用。但不小心更新到了Linux 5.15.0-94-generic后,突然发现无法连接网络了。 于是首先怀疑…...

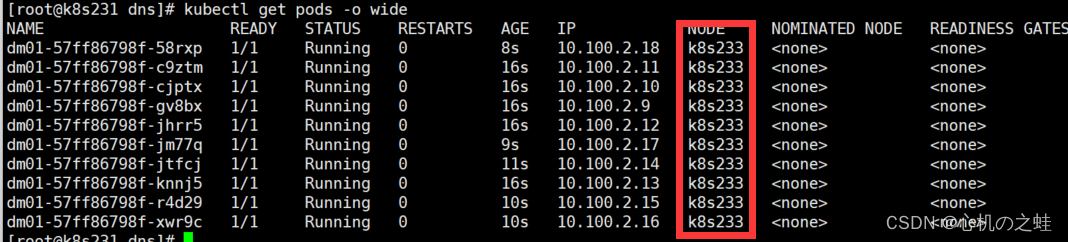

20-k8s中pod的调度-nodeSelector节点选择器

一、概念 我们先创建一个普通的deploy资源,设置为10个副本 [rootk8s231 dns]# cat deploy.yaml apiVersion: apps/v1 kind: Deployment metadata: name: dm01 spec: replicas: 10 selector: matchLabels: k8s: k8s template: metadata: …...

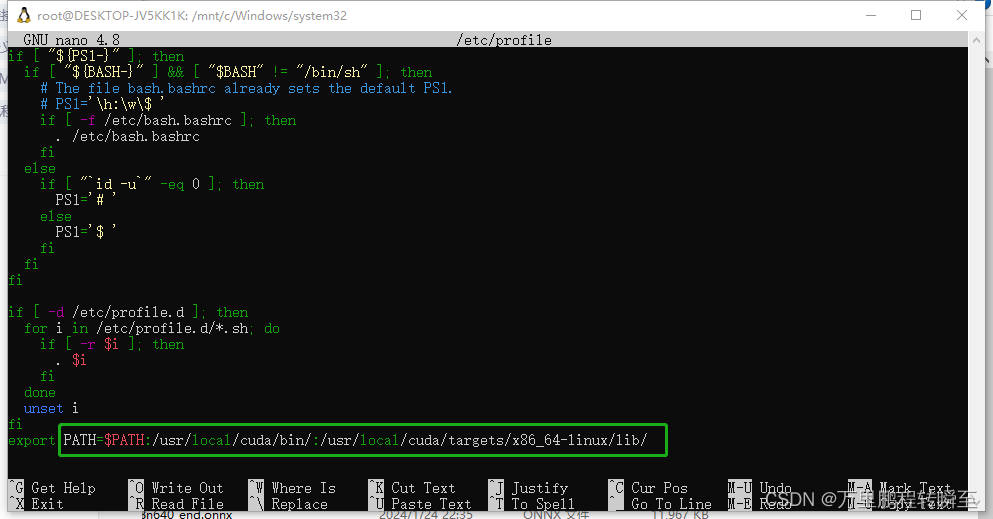

win10下wsl2使用记录(系统迁移到D盘、配置国内源、安装conda环境、配置pip源、安装pytorch-gpu环境、安装paddle-gpu环境)

wsl2 安装好后环境测试效果如下,支持命令nvidia-smi,不支持命令nvcc,usr/local目录下没有cuda文件夹。 系统迁移到非C盘 wsl安装的系统默认在c盘,为节省c盘空间进行迁移。 1、输出wsl -l 查看要迁移的系统名称 2、执行导出命…...

数据结构与算法:栈

朋友们大家好啊,在链表的讲解过后,我们本节内容来介绍一个特殊的线性表:栈,在讲解后也会以例题来加深对本节内容的理解 栈 栈的介绍栈进出栈的变化形式 栈的顺序存储结构的有关操作栈的结构定义与初始化压栈操作出栈操作获取栈顶元…...

Newtonsoft.Json设置忽略某些字段

using Newtonsoft.Json; using Newtonsoft.Json.Serialization; using System; using System.Collections.Generic; using System.Linq; using System.Text; using System.Threading.Tasks;namespace TestProject1 {/// <summary>/// 输出json时,设置忽略哪些…...

React 第五十五节 Router 中 useAsyncError的使用详解

前言 useAsyncError 是 React Router v6.4 引入的一个钩子,用于处理异步操作(如数据加载)中的错误。下面我将详细解释其用途并提供代码示例。 一、useAsyncError 用途 处理异步错误:捕获在 loader 或 action 中发生的异步错误替…...

Linux链表操作全解析

Linux C语言链表深度解析与实战技巧 一、链表基础概念与内核链表优势1.1 为什么使用链表?1.2 Linux 内核链表与用户态链表的区别 二、内核链表结构与宏解析常用宏/函数 三、内核链表的优点四、用户态链表示例五、双向循环链表在内核中的实现优势5.1 插入效率5.2 安全…...

【ROS】Nav2源码之nav2_behavior_tree-行为树节点列表

1、行为树节点分类 在 Nav2(Navigation2)的行为树框架中,行为树节点插件按照功能分为 Action(动作节点)、Condition(条件节点)、Control(控制节点) 和 Decorator(装饰节点) 四类。 1.1 动作节点 Action 执行具体的机器人操作或任务,直接与硬件、传感器或外部系统…...

鸿蒙中用HarmonyOS SDK应用服务 HarmonyOS5开发一个生活电费的缴纳和查询小程序

一、项目初始化与配置 1. 创建项目 ohpm init harmony/utility-payment-app 2. 配置权限 // module.json5 {"requestPermissions": [{"name": "ohos.permission.INTERNET"},{"name": "ohos.permission.GET_NETWORK_INFO"…...

)

OpenLayers 分屏对比(地图联动)

注:当前使用的是 ol 5.3.0 版本,天地图使用的key请到天地图官网申请,并替换为自己的key 地图分屏对比在WebGIS开发中是很常见的功能,和卷帘图层不一样的是,分屏对比是在各个地图中添加相同或者不同的图层进行对比查看。…...

高效线程安全的单例模式:Python 中的懒加载与自定义初始化参数

高效线程安全的单例模式:Python 中的懒加载与自定义初始化参数 在软件开发中,单例模式(Singleton Pattern)是一种常见的设计模式,确保一个类仅有一个实例,并提供一个全局访问点。在多线程环境下,实现单例模式时需要注意线程安全问题,以防止多个线程同时创建实例,导致…...

蓝桥杯 冶炼金属

原题目链接 🔧 冶炼金属转换率推测题解 📜 原题描述 小蓝有一个神奇的炉子用于将普通金属 O O O 冶炼成为一种特殊金属 X X X。这个炉子有一个属性叫转换率 V V V,是一个正整数,表示每 V V V 个普通金属 O O O 可以冶炼出 …...

【笔记】WSL 中 Rust 安装与测试完整记录

#工作记录 WSL 中 Rust 安装与测试完整记录 1. 运行环境 系统:Ubuntu 24.04 LTS (WSL2)架构:x86_64 (GNU/Linux)Rust 版本:rustc 1.87.0 (2025-05-09)Cargo 版本:cargo 1.87.0 (2025-05-06) 2. 安装 Rust 2.1 使用 Rust 官方安…...

【Linux】Linux 系统默认的目录及作用说明

博主介绍:✌全网粉丝23W,CSDN博客专家、Java领域优质创作者,掘金/华为云/阿里云/InfoQ等平台优质作者、专注于Java技术领域✌ 技术范围:SpringBoot、SpringCloud、Vue、SSM、HTML、Nodejs、Python、MySQL、PostgreSQL、大数据、物…...

Proxmox Mail Gateway安装指南:从零开始配置高效邮件过滤系统

💝💝💝欢迎莅临我的博客,很高兴能够在这里和您见面!希望您在这里可以感受到一份轻松愉快的氛围,不仅可以获得有趣的内容和知识,也可以畅所欲言、分享您的想法和见解。 推荐:「storms…...