[TVM Tutorial] Using External Libraries in Relay

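Apache TVM is an end-to-end deep learning compiler framework for CPUs, GPUs, and a variety of machine learning accelerators. More TVM documentation in Chinese is available at https://tvm.hyper.ai/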

Authors: Masahiro Masuda, Truman Tian

This article shows how to use external libraries such as cuDNN or cuBLAS with Relay.

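Relay uses TVM internally to generate target-specific code. For example, with the CUDA backend, TVM generates CUDA kernels for every layer in the user-provided network. Sometimes it is also helpful to bring external libraries developed by various vendors into Relay, and TVM has a mechanism to call into these libraries transparently. For Relay users, all that is needed is to set an appropriate target string.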
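As a minimal sketch of what "an appropriate target string" can look like: TVM target strings accept a `-libs=` option that lists external libraries, for example `cuda -libs=cudnn`. The helper `target_with_libs` below is a hypothetical convenience function written for this illustration, not part of TVM. It only assembles the string.

```python
def target_with_libs(backend, libs=()):
    """Build a TVM target string such as "cuda -libs=cudnn,cublas".

    `target_with_libs` is a hypothetical helper for illustration only;
    TVM itself just receives the resulting string.
    """
    # Drop empty entries and surrounding whitespace.
    libs = [lib.strip() for lib in libs if lib.strip()]
    if not libs:
        return backend
    return f"{backend} -libs={','.join(libs)}"


print(target_with_libs("cuda"))                       # cuda
print(target_with_libs("cuda", ["cudnn"]))            # cuda -libs=cudnn
print(target_with_libs("cuda", ["cudnn", "cublas"]))  # cuda -libs=cudnn,cublas
```

The resulting string would be passed as the `target` argument when building the network, in place of the plain `"cuda"` target.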

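Before external libraries can be used from Relay, TVM must be built with the libraries you want to use. For example, to use cuDNN, enable the USE_CUDNN option in cmake/config.cmake and, if necessary, specify the cuDNN include and library directories.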
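One way to confirm that a TVM build has the required options enabled is to inspect its compile-time flags. The sketch below assumes that `tvm.support.libinfo()` returns a dictionary keyed by the CMake option names (`USE_CUDA`, `USE_CUDNN`). The helpers `flag_enabled` and `missing_flags` are hypothetical names introduced for this example, and the hand-written dictionary stands in for a real build.

```python
def flag_enabled(value):
    """Return True if a CMake-style option value means "enabled".

    Options are usually ON/OFF, but may also hold a path (for example a
    cuDNN install directory), which is treated as enabled here.
    """
    return str(value).strip().upper() not in {"", "OFF", "0", "FALSE", "NO"}


def missing_flags(build_info, required=("USE_CUDA", "USE_CUDNN")):
    """List the required options that are absent or disabled in build_info."""
    return [name for name in required
            if not flag_enabled(build_info.get(name, "OFF"))]


# A hand-written dictionary standing in for the real build information.
example_info = {"USE_CUDA": "ON", "USE_CUDNN": "OFF"}
print(missing_flags(example_info))  # ['USE_CUDNN']

# With TVM installed, the real flags could be checked the same way
# (assumption: tvm.support.libinfo() reports these option names).
try:
    import tvm
    print(missing_flags(tvm.support.libinfo()))
except (ImportError, AttributeError):
    print("TVM build information is not available in this environment")
```

An empty list means every required option is enabled. Anything else names the options to turn on in cmake/config.cmake before rebuilding.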

To begin with, import Relay and TVM.

import tvm

from tvm import te

import numpy as np

from tvm.contrib import graph_executor as runtime

from tvm import relay

from tvm.relay import testing

import tvm.testing

Create a simple network

Let's create a very simple network for demonstration. It consists of convolution, batch normalization, and ReLU activation.

out_channels = 16

batch_size = 1

data = relay.var("data", relay.TensorType((batch_size, 3, 224, 224), "float32"))

weight = relay.var("weight")

bn_gamma = relay.var("bn_gamma")

bn_beta = relay.var("bn_beta")

bn_mmean = relay.var("bn_mean")

bn_mvar = relay.var("bn_var")

simple_net = relay.nn.conv2d(data=data, weight=weight, kernel_size=(3, 3), channels=out_channels, padding=(1, 1))

simple_net = relay.nn.batch_norm(simple_net, bn_gamma, bn_beta, bn_mmean, bn_mvar)[0]

simple_net = relay.nn.relu(simple_net)

simple_net = relay.Function(relay.analysis.free_vars(simple_net), simple_net)

data_shape = (batch_size, 3, 224, 224)

net, params = testing.create_workload(simple_net)
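The output shape of this network follows from standard convolution arithmetic. The sketch below assumes stride 1 and dilation 1 (the defaults of `relay.nn.conv2d`) and uses a hypothetical helper, `conv2d_out_size`, to show why a 3x3 kernel with padding 1 keeps the 224x224 spatial size. Batch normalization and ReLU are element-wise, so they leave the shape unchanged.

```python
def conv2d_out_size(in_size, kernel, padding, stride=1, dilation=1):
    """Output length along one spatial axis of a 2-D convolution."""
    effective_kernel = dilation * (kernel - 1) + 1
    return (in_size + 2 * padding - effective_kernel) // stride + 1


batch_size, out_channels = 1, 16
height = conv2d_out_size(224, kernel=3, padding=1)
width = conv2d_out_size(224, kernel=3, padding=1)

# NCHW layout: (batch, channels, height, width)
out_shape = (batch_size, out_channels, height, width)
print(out_shape)  # (1, 16, 224, 224)
```

This matches the `(batch_size, out_channels, 224, 224)` output buffer allocated when the compiled module is run.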

Build and run with the CUDA backend

Build and run this network with the CUDA backend as usual. With the logging level set to DEBUG, the result of Relay graph compilation is dumped as pseudo code.

import logging

logging.basicConfig(level=logging.DEBUG)  # to dump TVM IR after fusion

target = "cuda"

lib = relay.build_module.build(net, target, params=params)

dev = tvm.device(target, 0)

data = np.random.uniform(-1, 1, size=data_shape).astype("float32")

module = runtime.GraphModule(lib["default"](dev))

module.set_input("data", data)

module.run()

out_shape = (batch_size, out_channels, 224, 224)

out = module.get_output(0, tvm.nd.empty(out_shape))

out_cuda = out.numpy()

Output:

/workspace/python/tvm/driver/build_module.py:268: UserWarning: target_host parameter is going to be deprecated. Please pass in tvm.target.Target(target, host=target_host) instead.
  "target_host parameter is going to be deprecated. "

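The generated pseudo code should look like the following. Note how bias add, batch normalization, and ReLU activation are fused into the convolution kernel. TVM generates a single fused kernel from this representation.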
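Before the dump itself, here is a small sketch of why this kind of fusion is possible. It is a pure-Python illustration, not the code TVM generates. At inference time, batch normalization reduces to a per-channel scale and shift, and ReLU is a per-element maximum, so both can be applied to each convolution result as it is written out. The function names and the sample statistics are hypothetical, and `eps=1e-5` is assumed to match the default epsilon of `relay.nn.batch_norm`.

```python
import math


def batch_norm(x, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode batch normalization of one value in one channel."""
    return gamma * (x - mean) / math.sqrt(var + eps) + beta


def relu(x):
    """Rectified linear unit for a single value."""
    return max(x, 0.0)


def fused_epilogue(gamma, beta, mean, var, eps=1e-5):
    """Fold batch norm and ReLU into one function per convolution output."""
    scale = gamma / math.sqrt(var + eps)
    shift = beta - mean * scale
    return lambda conv_out: max(conv_out * scale + shift, 0.0)


# Hypothetical per-channel statistics and a few convolution outputs.
gamma, beta, mean, var = 1.5, 0.1, 0.2, 0.9
conv_outputs = [-2.0, -0.1, 0.0, 0.3, 4.0]

epilogue = fused_epilogue(gamma, beta, mean, var)
separate = [relu(batch_norm(v, gamma, beta, mean, var)) for v in conv_outputs]
fused = [epilogue(v) for v in conv_outputs]

# Both paths agree up to floating-point rounding.
assert all(math.isclose(a, b, abs_tol=1e-9) for a, b in zip(separate, fused))
print(fused)
```

Because the folded form needs only one multiply, one add, and one maximum per element, it can run inside the same kernel that accumulates the convolution, which avoids writing intermediate tensors to memory. The TVM dump follows.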

produce tensor {// attr [iter_var(blockIdx.z, , blockIdx.z)] thread_extent = 1// attr [compute] storage_scope = "local"allocate compute[float32 * 32]// attr [pad_temp.shared] storage_scope = "shared"allocate pad_temp.shared[float32 * 180]// attr [placeholder.shared] storage_scope = "shared"allocate placeholder.shared[float32 * 144]// attr [iter_var(blockIdx.y, , blockIdx.y)] thread_extent = 28// attr [iter_var(blockIdx.x, , blockIdx.x)] thread_extent = 14// attr [iter_var(threadIdx.z, , threadIdx.z)] thread_extent = 4// attr [iter_var(threadIdx.y, , threadIdx.y)] thread_extent = 1// attr [iter_var(threadIdx.x, , threadIdx.x)] thread_extent = 16produce compute {compute[0] = 0.000000fcompute[1] = 0.000000fcompute[2] = 0.000000fcompute[3] = 0.000000fcompute[4] = 0.000000fcompute[5] = 0.000000fcompute[6] = 0.000000fcompute[7] = 0.000000fcompute[8] = 0.000000fcompute[9] = 0.000000fcompute[10] = 0.000000fcompute[11] = 0.000000fcompute[12] = 0.000000fcompute[13] = 0.000000fcompute[14] = 0.000000fcompute[15] = 0.000000fcompute[16] = 0.000000fcompute[17] = 0.000000fcompute[18] = 0.000000fcompute[19] = 0.000000fcompute[20] = 0.000000fcompute[21] = 0.000000fcompute[22] = 0.000000fcompute[23] = 0.000000fcompute[24] = 0.000000fcompute[25] = 0.000000fcompute[26] = 0.000000fcompute[27] = 0.000000fcompute[28] = 0.000000fcompute[29] = 0.000000fcompute[30] = 0.000000fcompute[31] = 0.000000ffor (rc.outer, 0, 3) {produce pad_temp.shared {// attr [iter_var(threadIdx.z, , threadIdx.z)] thread_extent = 4// attr [iter_var(threadIdx.y, , threadIdx.y)] thread_extent = 1// attr [iter_var(threadIdx.x, , threadIdx.x)] thread_extent = 16if (likely(((threadIdx.z*15) < (60 - threadIdx.x)))) {if (likely((threadIdx.x < 15))) {pad_temp.shared[(((((threadIdx.z*15) + threadIdx.x)/60)*180) + ((((((threadIdx.z*15) + threadIdx.x)/6) % 10)*18) + ((((threadIdx.z*3) + threadIdx.x)*3) % 18)))] = tvm_if_then_else((((((1 - ((((threadIdx.z*15) + threadIdx.x)/6) % 10)) <= (blockIdx.y*8)) && ((blockIdx.y*8) < 
(225 - ((((threadIdx.z*15) + threadIdx.x)/6) % 10)))) && ((1 - ((((threadIdx.z*3) + threadIdx.x)*3) % 18)) <= (blockIdx.x*16))) && ((blockIdx.x*16) < (225 - ((((threadIdx.z*3) + threadIdx.x)*3) % 18)))), placeholder[((((((((blockIdx.y*112) + blockIdx.x) + (rc.outer*3136)) + ((((threadIdx.z*15) + threadIdx.x)/60)*9408))*16) + ((((threadIdx.z*3) + threadIdx.x)*3) % 18)) + (((((threadIdx.z*15) + threadIdx.x)/6) % 10)*224)) + -225)], 0.000000f)pad_temp.shared[(((((((threadIdx.z*15) + threadIdx.x)*3) + 1)/180)*180) + ((((((((threadIdx.z*15) + threadIdx.x)*3) + 1)/18) % 10)*18) + (((((threadIdx.z*3) + threadIdx.x)*3) + 1) % 18)))] = tvm_if_then_else((((((1 - ((((((threadIdx.z*15) + threadIdx.x)*3) + 1)/18) % 10)) <= (blockIdx.y*8)) && ((blockIdx.y*8) < (225 - ((((((threadIdx.z*15) + threadIdx.x)*3) + 1)/18) % 10)))) && ((1 - (((((threadIdx.z*3) + threadIdx.x)*3) + 1) % 18)) <= (blockIdx.x*16))) && ((blockIdx.x*16) < (225 - (((((threadIdx.z*3) + threadIdx.x)*3) + 1) % 18)))), placeholder[((((((((blockIdx.y*112) + blockIdx.x) + (rc.outer*3136)) + ((((((threadIdx.z*15) + threadIdx.x)*3) + 1)/180)*9408))*16) + (((((threadIdx.z*3) + threadIdx.x)*3) + 1) % 18)) + (((((((threadIdx.z*15) + threadIdx.x)*3) + 1)/18) % 10)*224)) + -225)], 0.000000f)pad_temp.shared[(((((((threadIdx.z*15) + threadIdx.x)*3) + 2)/180)*180) + ((((((((threadIdx.z*15) + threadIdx.x)*3) + 2)/18) % 10)*18) + (((((threadIdx.z*3) + threadIdx.x)*3) + 2) % 18)))] = tvm_if_then_else((((((1 - ((((((threadIdx.z*15) + threadIdx.x)*3) + 2)/18) % 10)) <= (blockIdx.y*8)) && ((blockIdx.y*8) < (225 - ((((((threadIdx.z*15) + threadIdx.x)*3) + 2)/18) % 10)))) && ((1 - (((((threadIdx.z*3) + threadIdx.x)*3) + 2) % 18)) <= (blockIdx.x*16))) && ((blockIdx.x*16) < (225 - (((((threadIdx.z*3) + threadIdx.x)*3) + 2) % 18)))), placeholder[((((((((blockIdx.y*112) + blockIdx.x) + (rc.outer*3136)) + ((((((threadIdx.z*15) + threadIdx.x)*3) + 2)/180)*9408))*16) + (((((threadIdx.z*3) + threadIdx.x)*3) + 2) % 18)) + 
(((((((threadIdx.z*15) + threadIdx.x)*3) + 2)/18) % 10)*224)) + -225)], 0.000000f)}}}produce placeholder.shared {// attr [iter_var(threadIdx.z, , threadIdx.z)] thread_extent = 4// attr [iter_var(threadIdx.y, , threadIdx.y)] thread_extent = 1// attr [iter_var(threadIdx.x, , threadIdx.x)] thread_extent = 16if (likely(((threadIdx.z*4) < (16 - (threadIdx.x/3))))) {if (likely(((threadIdx.z*12) < (48 - threadIdx.x)))) {if (likely((threadIdx.x < 12))) {placeholder.shared[(((((threadIdx.z*4) + (threadIdx.x/3))*3) + (threadIdx.x % 3))*3)] = placeholder[(((((rc.outer + (threadIdx.z*12)) + ((threadIdx.x/3)*3))*3) + (threadIdx.x % 3))*3)]placeholder.shared[((((((threadIdx.z*4) + (threadIdx.x/3))*3) + (threadIdx.x % 3))*3) + 1)] = placeholder[((((((rc.outer + (threadIdx.z*12)) + ((threadIdx.x/3)*3))*3) + (threadIdx.x % 3))*3) + 1)]placeholder.shared[((((((threadIdx.z*4) + (threadIdx.x/3))*3) + (threadIdx.x % 3))*3) + 2)] = placeholder[((((((rc.outer + (threadIdx.z*12)) + ((threadIdx.x/3)*3))*3) + (threadIdx.x % 3))*3) + 2)]}}}}compute[0] = (compute[0] + (pad_temp.shared[threadIdx.x]*placeholder.shared[(threadIdx.z*36)]))compute[1] = (compute[1] + (pad_temp.shared[(threadIdx.x + 18)]*placeholder.shared[(threadIdx.z*36)]))compute[2] = (compute[2] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[(threadIdx.z*36)]))compute[3] = (compute[3] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[(threadIdx.z*36)]))compute[4] = (compute[4] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[(threadIdx.z*36)]))compute[5] = (compute[5] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[(threadIdx.z*36)]))compute[6] = (compute[6] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[(threadIdx.z*36)]))compute[7] = (compute[7] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[(threadIdx.z*36)]))compute[8] = (compute[8] + (pad_temp.shared[threadIdx.x]*placeholder.shared[((threadIdx.z*36) + 9)]))compute[9] = (compute[9] + (pad_temp.shared[(threadIdx.x 
+ 18)]*placeholder.shared[((threadIdx.z*36) + 9)]))compute[10] = (compute[10] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 9)]))compute[11] = (compute[11] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 9)]))compute[12] = (compute[12] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 9)]))compute[13] = (compute[13] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 9)]))compute[14] = (compute[14] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 9)]))compute[15] = (compute[15] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 9)]))compute[16] = (compute[16] + (pad_temp.shared[threadIdx.x]*placeholder.shared[((threadIdx.z*36) + 18)]))compute[17] = (compute[17] + (pad_temp.shared[(threadIdx.x + 18)]*placeholder.shared[((threadIdx.z*36) + 18)]))compute[18] = (compute[18] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 18)]))compute[19] = (compute[19] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 18)]))compute[20] = (compute[20] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 18)]))compute[21] = (compute[21] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 18)]))compute[22] = (compute[22] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 18)]))compute[23] = (compute[23] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 18)]))compute[24] = (compute[24] + (pad_temp.shared[threadIdx.x]*placeholder.shared[((threadIdx.z*36) + 27)]))compute[25] = (compute[25] + (pad_temp.shared[(threadIdx.x + 18)]*placeholder.shared[((threadIdx.z*36) + 27)]))compute[26] = (compute[26] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 27)]))compute[27] = (compute[27] + (pad_temp.shared[(threadIdx.x + 
54)]*placeholder.shared[((threadIdx.z*36) + 27)]))compute[28] = (compute[28] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 27)]))compute[29] = (compute[29] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 27)]))compute[30] = (compute[30] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 27)]))compute[31] = (compute[31] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 27)]))compute[0] = (compute[0] + (pad_temp.shared[(threadIdx.x + 1)]*placeholder.shared[((threadIdx.z*36) + 1)]))compute[1] = (compute[1] + (pad_temp.shared[(threadIdx.x + 19)]*placeholder.shared[((threadIdx.z*36) + 1)]))compute[2] = (compute[2] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 1)]))compute[3] = (compute[3] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 1)]))compute[4] = (compute[4] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 1)]))compute[5] = (compute[5] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 1)]))compute[6] = (compute[6] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 1)]))compute[7] = (compute[7] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 1)]))compute[8] = (compute[8] + (pad_temp.shared[(threadIdx.x + 1)]*placeholder.shared[((threadIdx.z*36) + 10)]))compute[9] = (compute[9] + (pad_temp.shared[(threadIdx.x + 19)]*placeholder.shared[((threadIdx.z*36) + 10)]))compute[10] = (compute[10] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 10)]))compute[11] = (compute[11] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 10)]))compute[12] = (compute[12] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 10)]))compute[13] = (compute[13] + (pad_temp.shared[(threadIdx.x + 
91)]*placeholder.shared[((threadIdx.z*36) + 10)]))compute[14] = (compute[14] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 10)]))compute[15] = (compute[15] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 10)]))compute[16] = (compute[16] + (pad_temp.shared[(threadIdx.x + 1)]*placeholder.shared[((threadIdx.z*36) + 19)]))compute[17] = (compute[17] + (pad_temp.shared[(threadIdx.x + 19)]*placeholder.shared[((threadIdx.z*36) + 19)]))compute[18] = (compute[18] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 19)]))compute[19] = (compute[19] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 19)]))compute[20] = (compute[20] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 19)]))compute[21] = (compute[21] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 19)]))compute[22] = (compute[22] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 19)]))compute[23] = (compute[23] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 19)]))compute[24] = (compute[24] + (pad_temp.shared[(threadIdx.x + 1)]*placeholder.shared[((threadIdx.z*36) + 28)]))compute[25] = (compute[25] + (pad_temp.shared[(threadIdx.x + 19)]*placeholder.shared[((threadIdx.z*36) + 28)]))compute[26] = (compute[26] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 28)]))compute[27] = (compute[27] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 28)]))compute[28] = (compute[28] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 28)]))compute[29] = (compute[29] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 28)]))compute[30] = (compute[30] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 28)]))compute[31] = (compute[31] + (pad_temp.shared[(threadIdx.x 
+ 127)]*placeholder.shared[((threadIdx.z*36) + 28)]))compute[0] = (compute[0] + (pad_temp.shared[(threadIdx.x + 2)]*placeholder.shared[((threadIdx.z*36) + 2)]))compute[1] = (compute[1] + (pad_temp.shared[(threadIdx.x + 20)]*placeholder.shared[((threadIdx.z*36) + 2)]))compute[2] = (compute[2] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 2)]))compute[3] = (compute[3] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 2)]))compute[4] = (compute[4] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 2)]))compute[5] = (compute[5] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 2)]))compute[6] = (compute[6] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 2)]))compute[7] = (compute[7] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 2)]))compute[8] = (compute[8] + (pad_temp.shared[(threadIdx.x + 2)]*placeholder.shared[((threadIdx.z*36) + 11)]))compute[9] = (compute[9] + (pad_temp.shared[(threadIdx.x + 20)]*placeholder.shared[((threadIdx.z*36) + 11)]))compute[10] = (compute[10] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 11)]))compute[11] = (compute[11] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 11)]))compute[12] = (compute[12] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 11)]))compute[13] = (compute[13] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 11)]))compute[14] = (compute[14] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 11)]))compute[15] = (compute[15] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 11)]))compute[16] = (compute[16] + (pad_temp.shared[(threadIdx.x + 2)]*placeholder.shared[((threadIdx.z*36) + 20)]))compute[17] = (compute[17] + (pad_temp.shared[(threadIdx.x + 
20)]*placeholder.shared[((threadIdx.z*36) + 20)]))compute[18] = (compute[18] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 20)]))compute[19] = (compute[19] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 20)]))compute[20] = (compute[20] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 20)]))compute[21] = (compute[21] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 20)]))compute[22] = (compute[22] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 20)]))compute[23] = (compute[23] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 20)]))compute[24] = (compute[24] + (pad_temp.shared[(threadIdx.x + 2)]*placeholder.shared[((threadIdx.z*36) + 29)]))compute[25] = (compute[25] + (pad_temp.shared[(threadIdx.x + 20)]*placeholder.shared[((threadIdx.z*36) + 29)]))compute[26] = (compute[26] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 29)]))compute[27] = (compute[27] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 29)]))compute[28] = (compute[28] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 29)]))compute[29] = (compute[29] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 29)]))compute[30] = (compute[30] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 29)]))compute[31] = (compute[31] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 29)]))compute[0] = (compute[0] + (pad_temp.shared[(threadIdx.x + 18)]*placeholder.shared[((threadIdx.z*36) + 3)]))compute[1] = (compute[1] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 3)]))compute[2] = (compute[2] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 3)]))compute[3] = (compute[3] + (pad_temp.shared[(threadIdx.x + 
72)]*placeholder.shared[((threadIdx.z*36) + 3)]))compute[4] = (compute[4] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 3)]))compute[5] = (compute[5] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 3)]))compute[6] = (compute[6] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 3)]))compute[7] = (compute[7] + (pad_temp.shared[(threadIdx.x + 144)]*placeholder.shared[((threadIdx.z*36) + 3)]))compute[8] = (compute[8] + (pad_temp.shared[(threadIdx.x + 18)]*placeholder.shared[((threadIdx.z*36) + 12)]))compute[9] = (compute[9] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 12)]))compute[10] = (compute[10] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 12)]))compute[11] = (compute[11] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 12)]))compute[12] = (compute[12] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 12)]))compute[13] = (compute[13] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 12)]))compute[14] = (compute[14] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 12)]))compute[15] = (compute[15] + (pad_temp.shared[(threadIdx.x + 144)]*placeholder.shared[((threadIdx.z*36) + 12)]))compute[16] = (compute[16] + (pad_temp.shared[(threadIdx.x + 18)]*placeholder.shared[((threadIdx.z*36) + 21)]))compute[17] = (compute[17] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 21)]))compute[18] = (compute[18] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 21)]))compute[19] = (compute[19] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 21)]))compute[20] = (compute[20] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 21)]))compute[21] = (compute[21] + (pad_temp.shared[(threadIdx.x + 
108)]*placeholder.shared[((threadIdx.z*36) + 21)]))compute[22] = (compute[22] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 21)]))compute[23] = (compute[23] + (pad_temp.shared[(threadIdx.x + 144)]*placeholder.shared[((threadIdx.z*36) + 21)]))compute[24] = (compute[24] + (pad_temp.shared[(threadIdx.x + 18)]*placeholder.shared[((threadIdx.z*36) + 30)]))compute[25] = (compute[25] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 30)]))compute[26] = (compute[26] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 30)]))compute[27] = (compute[27] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 30)]))compute[28] = (compute[28] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 30)]))compute[29] = (compute[29] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 30)]))compute[30] = (compute[30] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 30)]))compute[31] = (compute[31] + (pad_temp.shared[(threadIdx.x + 144)]*placeholder.shared[((threadIdx.z*36) + 30)]))compute[0] = (compute[0] + (pad_temp.shared[(threadIdx.x + 19)]*placeholder.shared[((threadIdx.z*36) + 4)]))compute[1] = (compute[1] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 4)]))compute[2] = (compute[2] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 4)]))compute[3] = (compute[3] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 4)]))compute[4] = (compute[4] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 4)]))compute[5] = (compute[5] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 4)]))compute[6] = (compute[6] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 4)]))compute[7] = (compute[7] + (pad_temp.shared[(threadIdx.x + 
145)]*placeholder.shared[((threadIdx.z*36) + 4)]))compute[8] = (compute[8] + (pad_temp.shared[(threadIdx.x + 19)]*placeholder.shared[((threadIdx.z*36) + 13)]))compute[9] = (compute[9] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 13)]))compute[10] = (compute[10] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 13)]))compute[11] = (compute[11] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 13)]))compute[12] = (compute[12] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 13)]))compute[13] = (compute[13] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 13)]))compute[14] = (compute[14] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 13)]))compute[15] = (compute[15] + (pad_temp.shared[(threadIdx.x + 145)]*placeholder.shared[((threadIdx.z*36) + 13)]))compute[16] = (compute[16] + (pad_temp.shared[(threadIdx.x + 19)]*placeholder.shared[((threadIdx.z*36) + 22)]))compute[17] = (compute[17] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 22)]))compute[18] = (compute[18] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 22)]))compute[19] = (compute[19] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 22)]))compute[20] = (compute[20] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 22)]))compute[21] = (compute[21] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 22)]))compute[22] = (compute[22] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 22)]))compute[23] = (compute[23] + (pad_temp.shared[(threadIdx.x + 145)]*placeholder.shared[((threadIdx.z*36) + 22)]))compute[24] = (compute[24] + (pad_temp.shared[(threadIdx.x + 19)]*placeholder.shared[((threadIdx.z*36) + 31)]))compute[25] = (compute[25] + (pad_temp.shared[(threadIdx.x + 
37)]*placeholder.shared[((threadIdx.z*36) + 31)]))compute[26] = (compute[26] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 31)]))compute[27] = (compute[27] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 31)]))compute[28] = (compute[28] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 31)]))compute[29] = (compute[29] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 31)]))compute[30] = (compute[30] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 31)]))compute[31] = (compute[31] + (pad_temp.shared[(threadIdx.x + 145)]*placeholder.shared[((threadIdx.z*36) + 31)]))compute[0] = (compute[0] + (pad_temp.shared[(threadIdx.x + 20)]*placeholder.shared[((threadIdx.z*36) + 5)]))compute[1] = (compute[1] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 5)]))compute[2] = (compute[2] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 5)]))compute[3] = (compute[3] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 5)]))compute[4] = (compute[4] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 5)]))compute[5] = (compute[5] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 5)]))compute[6] = (compute[6] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 5)]))compute[7] = (compute[7] + (pad_temp.shared[(threadIdx.x + 146)]*placeholder.shared[((threadIdx.z*36) + 5)]))compute[8] = (compute[8] + (pad_temp.shared[(threadIdx.x + 20)]*placeholder.shared[((threadIdx.z*36) + 14)]))compute[9] = (compute[9] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 14)]))compute[10] = (compute[10] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 14)]))compute[11] = (compute[11] + (pad_temp.shared[(threadIdx.x + 
74)]*placeholder.shared[((threadIdx.z*36) + 14)]))compute[12] = (compute[12] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 14)]))compute[13] = (compute[13] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 14)]))compute[14] = (compute[14] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 14)]))compute[15] = (compute[15] + (pad_temp.shared[(threadIdx.x + 146)]*placeholder.shared[((threadIdx.z*36) + 14)]))compute[16] = (compute[16] + (pad_temp.shared[(threadIdx.x + 20)]*placeholder.shared[((threadIdx.z*36) + 23)]))compute[17] = (compute[17] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 23)]))compute[18] = (compute[18] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 23)]))compute[19] = (compute[19] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 23)]))compute[20] = (compute[20] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 23)]))compute[21] = (compute[21] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 23)]))compute[22] = (compute[22] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 23)]))compute[23] = (compute[23] + (pad_temp.shared[(threadIdx.x + 146)]*placeholder.shared[((threadIdx.z*36) + 23)]))compute[24] = (compute[24] + (pad_temp.shared[(threadIdx.x + 20)]*placeholder.shared[((threadIdx.z*36) + 32)]))compute[25] = (compute[25] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 32)]))compute[26] = (compute[26] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 32)]))compute[27] = (compute[27] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 32)]))compute[28] = (compute[28] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 32)]))compute[29] = (compute[29] + 
(pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 32)]))compute[30] = (compute[30] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 32)]))compute[31] = (compute[31] + (pad_temp.shared[(threadIdx.x + 146)]*placeholder.shared[((threadIdx.z*36) + 32)]))compute[0] = (compute[0] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 6)]))compute[1] = (compute[1] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 6)]))compute[2] = (compute[2] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 6)]))compute[3] = (compute[3] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 6)]))compute[4] = (compute[4] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 6)]))compute[5] = (compute[5] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 6)]))compute[6] = (compute[6] + (pad_temp.shared[(threadIdx.x + 144)]*placeholder.shared[((threadIdx.z*36) + 6)]))compute[7] = (compute[7] + (pad_temp.shared[(threadIdx.x + 162)]*placeholder.shared[((threadIdx.z*36) + 6)]))compute[8] = (compute[8] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 15)]))compute[9] = (compute[9] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 15)]))compute[10] = (compute[10] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 15)]))compute[11] = (compute[11] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 15)]))compute[12] = (compute[12] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 15)]))compute[13] = (compute[13] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 15)]))compute[14] = (compute[14] + (pad_temp.shared[(threadIdx.x + 144)]*placeholder.shared[((threadIdx.z*36) + 15)]))compute[15] = (compute[15] + 
(pad_temp.shared[(threadIdx.x + 162)]*placeholder.shared[((threadIdx.z*36) + 15)]))compute[16] = (compute[16] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 24)]))compute[17] = (compute[17] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 24)]))compute[18] = (compute[18] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 24)]))compute[19] = (compute[19] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 24)]))compute[20] = (compute[20] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 24)]))compute[21] = (compute[21] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 24)]))compute[22] = (compute[22] + (pad_temp.shared[(threadIdx.x + 144)]*placeholder.shared[((threadIdx.z*36) + 24)]))compute[23] = (compute[23] + (pad_temp.shared[(threadIdx.x + 162)]*placeholder.shared[((threadIdx.z*36) + 24)]))compute[24] = (compute[24] + (pad_temp.shared[(threadIdx.x + 36)]*placeholder.shared[((threadIdx.z*36) + 33)]))compute[25] = (compute[25] + (pad_temp.shared[(threadIdx.x + 54)]*placeholder.shared[((threadIdx.z*36) + 33)]))compute[26] = (compute[26] + (pad_temp.shared[(threadIdx.x + 72)]*placeholder.shared[((threadIdx.z*36) + 33)]))compute[27] = (compute[27] + (pad_temp.shared[(threadIdx.x + 90)]*placeholder.shared[((threadIdx.z*36) + 33)]))compute[28] = (compute[28] + (pad_temp.shared[(threadIdx.x + 108)]*placeholder.shared[((threadIdx.z*36) + 33)]))compute[29] = (compute[29] + (pad_temp.shared[(threadIdx.x + 126)]*placeholder.shared[((threadIdx.z*36) + 33)]))compute[30] = (compute[30] + (pad_temp.shared[(threadIdx.x + 144)]*placeholder.shared[((threadIdx.z*36) + 33)]))compute[31] = (compute[31] + (pad_temp.shared[(threadIdx.x + 162)]*placeholder.shared[((threadIdx.z*36) + 33)]))compute[0] = (compute[0] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 7)]))compute[1] = (compute[1] 
+ (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 7)]))compute[2] = (compute[2] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 7)]))compute[3] = (compute[3] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 7)]))compute[4] = (compute[4] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 7)]))compute[5] = (compute[5] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 7)]))compute[6] = (compute[6] + (pad_temp.shared[(threadIdx.x + 145)]*placeholder.shared[((threadIdx.z*36) + 7)]))compute[7] = (compute[7] + (pad_temp.shared[(threadIdx.x + 163)]*placeholder.shared[((threadIdx.z*36) + 7)]))compute[8] = (compute[8] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 16)]))compute[9] = (compute[9] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 16)]))compute[10] = (compute[10] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 16)]))compute[11] = (compute[11] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 16)]))compute[12] = (compute[12] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 16)]))compute[13] = (compute[13] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 16)]))compute[14] = (compute[14] + (pad_temp.shared[(threadIdx.x + 145)]*placeholder.shared[((threadIdx.z*36) + 16)]))compute[15] = (compute[15] + (pad_temp.shared[(threadIdx.x + 163)]*placeholder.shared[((threadIdx.z*36) + 16)]))compute[16] = (compute[16] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 25)]))compute[17] = (compute[17] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 25)]))compute[18] = (compute[18] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 25)]))compute[19] = (compute[19] + 
(pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 25)]))compute[20] = (compute[20] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 25)]))compute[21] = (compute[21] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 25)]))compute[22] = (compute[22] + (pad_temp.shared[(threadIdx.x + 145)]*placeholder.shared[((threadIdx.z*36) + 25)]))compute[23] = (compute[23] + (pad_temp.shared[(threadIdx.x + 163)]*placeholder.shared[((threadIdx.z*36) + 25)]))compute[24] = (compute[24] + (pad_temp.shared[(threadIdx.x + 37)]*placeholder.shared[((threadIdx.z*36) + 34)]))compute[25] = (compute[25] + (pad_temp.shared[(threadIdx.x + 55)]*placeholder.shared[((threadIdx.z*36) + 34)]))compute[26] = (compute[26] + (pad_temp.shared[(threadIdx.x + 73)]*placeholder.shared[((threadIdx.z*36) + 34)]))compute[27] = (compute[27] + (pad_temp.shared[(threadIdx.x + 91)]*placeholder.shared[((threadIdx.z*36) + 34)]))compute[28] = (compute[28] + (pad_temp.shared[(threadIdx.x + 109)]*placeholder.shared[((threadIdx.z*36) + 34)]))compute[29] = (compute[29] + (pad_temp.shared[(threadIdx.x + 127)]*placeholder.shared[((threadIdx.z*36) + 34)]))compute[30] = (compute[30] + (pad_temp.shared[(threadIdx.x + 145)]*placeholder.shared[((threadIdx.z*36) + 34)]))compute[31] = (compute[31] + (pad_temp.shared[(threadIdx.x + 163)]*placeholder.shared[((threadIdx.z*36) + 34)]))compute[0] = (compute[0] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 8)]))compute[1] = (compute[1] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 8)]))compute[2] = (compute[2] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 8)]))compute[3] = (compute[3] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 8)]))compute[4] = (compute[4] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 8)]))compute[5] = (compute[5] + 
(pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 8)]))compute[6] = (compute[6] + (pad_temp.shared[(threadIdx.x + 146)]*placeholder.shared[((threadIdx.z*36) + 8)]))compute[7] = (compute[7] + (pad_temp.shared[(threadIdx.x + 164)]*placeholder.shared[((threadIdx.z*36) + 8)]))compute[8] = (compute[8] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 17)]))compute[9] = (compute[9] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 17)]))compute[10] = (compute[10] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 17)]))compute[11] = (compute[11] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 17)]))compute[12] = (compute[12] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 17)]))compute[13] = (compute[13] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 17)]))compute[14] = (compute[14] + (pad_temp.shared[(threadIdx.x + 146)]*placeholder.shared[((threadIdx.z*36) + 17)]))compute[15] = (compute[15] + (pad_temp.shared[(threadIdx.x + 164)]*placeholder.shared[((threadIdx.z*36) + 17)]))compute[16] = (compute[16] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 26)]))compute[17] = (compute[17] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 26)]))compute[18] = (compute[18] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 26)]))compute[19] = (compute[19] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 26)]))compute[20] = (compute[20] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 26)]))compute[21] = (compute[21] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 26)]))compute[22] = (compute[22] + (pad_temp.shared[(threadIdx.x + 146)]*placeholder.shared[((threadIdx.z*36) + 26)]))compute[23] = (compute[23] + 
(pad_temp.shared[(threadIdx.x + 164)]*placeholder.shared[((threadIdx.z*36) + 26)]))compute[24] = (compute[24] + (pad_temp.shared[(threadIdx.x + 38)]*placeholder.shared[((threadIdx.z*36) + 35)]))compute[25] = (compute[25] + (pad_temp.shared[(threadIdx.x + 56)]*placeholder.shared[((threadIdx.z*36) + 35)]))compute[26] = (compute[26] + (pad_temp.shared[(threadIdx.x + 74)]*placeholder.shared[((threadIdx.z*36) + 35)]))compute[27] = (compute[27] + (pad_temp.shared[(threadIdx.x + 92)]*placeholder.shared[((threadIdx.z*36) + 35)]))compute[28] = (compute[28] + (pad_temp.shared[(threadIdx.x + 110)]*placeholder.shared[((threadIdx.z*36) + 35)]))compute[29] = (compute[29] + (pad_temp.shared[(threadIdx.x + 128)]*placeholder.shared[((threadIdx.z*36) + 35)]))compute[30] = (compute[30] + (pad_temp.shared[(threadIdx.x + 146)]*placeholder.shared[((threadIdx.z*36) + 35)]))compute[31] = (compute[31] + (pad_temp.shared[(threadIdx.x + 164)]*placeholder.shared[((threadIdx.z*36) + 35)]))}}tensor[(((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x)] = max(((compute[0]*placeholder[(threadIdx.z*4)]) + placeholder[(threadIdx.z*4)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 224)] = max(((compute[1]*placeholder[(threadIdx.z*4)]) + placeholder[(threadIdx.z*4)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 448)] = max(((compute[2]*placeholder[(threadIdx.z*4)]) + placeholder[(threadIdx.z*4)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 672)] = max(((compute[3]*placeholder[(threadIdx.z*4)]) + placeholder[(threadIdx.z*4)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 896)] = max(((compute[4]*placeholder[(threadIdx.z*4)]) + placeholder[(threadIdx.z*4)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 1120)] = 
max(((compute[5]*placeholder[(threadIdx.z*4)]) + placeholder[(threadIdx.z*4)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 1344)] = max(((compute[6]*placeholder[(threadIdx.z*4)]) + placeholder[(threadIdx.z*4)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 1568)] = max(((compute[7]*placeholder[(threadIdx.z*4)]) + placeholder[(threadIdx.z*4)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 50176)] = max(((compute[8]*placeholder[((threadIdx.z*4) + 1)]) + placeholder[((threadIdx.z*4) + 1)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 50400)] = max(((compute[9]*placeholder[((threadIdx.z*4) + 1)]) + placeholder[((threadIdx.z*4) + 1)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 50624)] = max(((compute[10]*placeholder[((threadIdx.z*4) + 1)]) + placeholder[((threadIdx.z*4) + 1)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 50848)] = max(((compute[11]*placeholder[((threadIdx.z*4) + 1)]) + placeholder[((threadIdx.z*4) + 1)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 51072)] = max(((compute[12]*placeholder[((threadIdx.z*4) + 1)]) + placeholder[((threadIdx.z*4) + 1)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 51296)] = max(((compute[13]*placeholder[((threadIdx.z*4) + 1)]) + placeholder[((threadIdx.z*4) + 1)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 51520)] = max(((compute[14]*placeholder[((threadIdx.z*4) + 1)]) + placeholder[((threadIdx.z*4) + 1)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 51744)] = 
max(((compute[15]*placeholder[((threadIdx.z*4) + 1)]) + placeholder[((threadIdx.z*4) + 1)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 100352)] = max(((compute[16]*placeholder[((threadIdx.z*4) + 2)]) + placeholder[((threadIdx.z*4) + 2)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 100576)] = max(((compute[17]*placeholder[((threadIdx.z*4) + 2)]) + placeholder[((threadIdx.z*4) + 2)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 100800)] = max(((compute[18]*placeholder[((threadIdx.z*4) + 2)]) + placeholder[((threadIdx.z*4) + 2)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 101024)] = max(((compute[19]*placeholder[((threadIdx.z*4) + 2)]) + placeholder[((threadIdx.z*4) + 2)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 101248)] = max(((compute[20]*placeholder[((threadIdx.z*4) + 2)]) + placeholder[((threadIdx.z*4) + 2)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 101472)] = max(((compute[21]*placeholder[((threadIdx.z*4) + 2)]) + placeholder[((threadIdx.z*4) + 2)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 101696)] = max(((compute[22]*placeholder[((threadIdx.z*4) + 2)]) + placeholder[((threadIdx.z*4) + 2)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 101920)] = max(((compute[23]*placeholder[((threadIdx.z*4) + 2)]) + placeholder[((threadIdx.z*4) + 2)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 150528)] = max(((compute[24]*placeholder[((threadIdx.z*4) + 3)]) + placeholder[((threadIdx.z*4) + 3)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 
150752)] = max(((compute[25]*placeholder[((threadIdx.z*4) + 3)]) + placeholder[((threadIdx.z*4) + 3)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 150976)] = max(((compute[26]*placeholder[((threadIdx.z*4) + 3)]) + placeholder[((threadIdx.z*4) + 3)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 151200)] = max(((compute[27]*placeholder[((threadIdx.z*4) + 3)]) + placeholder[((threadIdx.z*4) + 3)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 151424)] = max(((compute[28]*placeholder[((threadIdx.z*4) + 3)]) + placeholder[((threadIdx.z*4) + 3)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 151648)] = max(((compute[29]*placeholder[((threadIdx.z*4) + 3)]) + placeholder[((threadIdx.z*4) + 3)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 151872)] = max(((compute[30]*placeholder[((threadIdx.z*4) + 3)]) + placeholder[((threadIdx.z*4) + 3)]), 0.000000f)tensor[((((((blockIdx.y*112) + blockIdx.x) + (threadIdx.z*12544))*16) + threadIdx.x) + 152096)] = max(((compute[31]*placeholder[((threadIdx.z*4) + 3)]) + placeholder[((threadIdx.z*4) + 3)]), 0.000000f)

}

Use cuDNN for a convolutional layer

To replace TVM's generated convolution kernels with cuDNN ones, append the option "-libs=cudnn" to the target string.

net, params = testing.create_workload(simple_net)

target = "cuda -libs=cudnn" # use cudnn for convolution

lib = relay.build_module.build(net, target, params=params)

dev = tvm.device(target, 0)

data = np.random.uniform(-1, 1, size=data_shape).astype("float32")

module = runtime.GraphModule(lib["default"](dev))

module.set_input("data", data)

module.run()

out_shape = (batch_size, out_channels, 224, 224)

out = module.get_output(0, tvm.nd.empty(out_shape))

out_cudnn = out.numpy()

Output:

/workspace/python/tvm/driver/build_module.py:268: UserWarning: target_host parameter is going to be deprecated. Please pass in tvm.target.Target(target, host=target_host) instead.
  "target_host parameter is going to be deprecated. "

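Note that when cuDNN is used, Relay cannot fuse the convolution with the layers that follow it. Layer fusion happens at the level of the TVM internal representation (IR), and Relay treats external libraries as black boxes, so there is no way to fuse them with TVM IR.

The pseudocode below shows that cuDNN convolution + bias add + batch norm + ReLU turned into two stages of computation: one for the cuDNN call and the other for the remaining operations.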

// attr [y] storage_scope = "global"

allocate y[float32 * 802816]

produce y {
  // attr [0] extern_scope = 0
  tvm_call_packed("tvm.contrib.cudnn.conv2d.forward", 1, 0, 1, 1, 1, 1, 1, 1, 1, tvm_stack_make_array(placeholder, tvm_stack_make_shape(1, 3, 224, 224), 0, 4, 0.000000f, 0), tvm_stack_make_array(placeholder, tvm_stack_make_shape(16, 3, 3, 3), 0, 4, 0.000000f, 0), tvm_stack_make_array(y, tvm_stack_make_shape(1, 16, 224, 224), 0, 4, 0.000000f, 0))
}

produce tensor {
  // attr [iter_var(blockIdx.x, , blockIdx.x)] thread_extent = 256
  // attr [iter_var(threadIdx.x, , threadIdx.x)] thread_extent = 512
  for (ax0.ax1.fused.ax2.fused.ax3.fused.outer, 0, 7) {
    if (likely(((blockIdx.x*512) < ((802816 - (ax0.ax1.fused.ax2.fused.ax3.fused.outer*131072)) - threadIdx.x)))) {
      tensor[(((((((blockIdx.x*512) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*131072))/802816)*802816) + (((((((blockIdx.x*512) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*131072))/224) % 224)*224) + ((((blockIdx.x*64) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*32)) % 224))) + ((((((blockIdx.x*512) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*131072))/50176) % 16)*50176))] = max(((y[(((((((blockIdx.x*512) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*131072))/802816)*802816) + (((((((blockIdx.x*512) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*131072))/224) % 224)*224) + ((((blockIdx.x*64) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*32)) % 224))) + ((((((blockIdx.x*512) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*131072))/50176) % 16)*50176))]*placeholder[(((((blockIdx.x*512) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*131072))/50176) % 16)]) + placeholder[(((((blockIdx.x*512) + threadIdx.x) + (ax0.ax1.fused.ax2.fused.ax3.fused.outer*131072))/50176) % 16)]), 0.000000f)
    }
  }
}
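The second stage in the dump is a plain elementwise kernel: it reads the cuDNN output y back from global memory, applies a per-channel scale and shift, and clamps the result at zero. A minimal NumPy sketch of that stage follows, assuming the shapes shown in the dump. The names second_stage, scale, and shift are illustrative only and do not come from TVM, and the random array merely stands in for the cuDNN output.

```python
import numpy as np

# Shapes taken from the dump: y holds 1 * 16 * 224 * 224 = 802816 floats.
N, C, H, W = 1, 16, 224, 224


def second_stage(y, scale, shift):
    """Per-channel scale and shift followed by ReLU (hypothetical helper)."""
    # Broadcast the per-channel vectors over the batch and spatial axes.
    return np.maximum(y * scale.reshape(1, C, 1, 1) + shift.reshape(1, C, 1, 1), 0.0)


rng = np.random.default_rng(0)
y = rng.uniform(-1, 1, size=(N, C, H, W)).astype("float32")  # stand-in for the cuDNN output
scale = rng.uniform(0.5, 1.5, size=C).astype("float32")
shift = rng.uniform(-0.5, 0.5, size=C).astype("float32")

out = second_stage(y, scale, shift)

# The dump recovers the channel from a flat index with (index / 50176) % 16,
# where 50176 = 224 * 224. Check one element by hand the same way.
flat = 3 * H * W + 5 * W + 7  # flat offset of element (0, 3, 5, 7)
channel = (flat // (H * W)) % C
expected = max(y.reshape(-1)[flat] * scale[channel] + shift[channel], 0.0)
```

Because y must be fully written before this stage starts, the intermediate buffer of 802816 floats makes a round trip through global memory, which is the cost that fusion would otherwise avoid.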

Verify the result

Check that the results of the two runs match.

tvm.testing.assert_allclose(out_cuda, out_cudnn, rtol=1e-5)
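When a check like this fails, it helps to know how far apart the two outputs actually are. The sketch below uses NumPy only: np.testing.assert_allclose stands in for tvm.testing.assert_allclose, max_errors is a hypothetical helper, and the two arrays are synthetic substitutes for out_cuda and out_cudnn rather than real results.

```python
import numpy as np


def max_errors(actual, desired):
    """Return the largest absolute and relative differences (hypothetical helper)."""
    abs_err = np.abs(actual - desired)
    # Guard against division by zero where the reference value is exactly 0.
    denom = np.maximum(np.abs(desired), np.finfo(desired.dtype).tiny)
    return float(abs_err.max()), float((abs_err / denom).max())


rng = np.random.default_rng(0)
reference = rng.uniform(1, 2, size=(1, 16, 8, 8)).astype("float32")
# Simulate a second backend whose results differ by about one part in a million.
perturbed = reference * np.float32(1 + 1e-6)

max_abs, max_rel = max_errors(perturbed, reference)

# Passes because every element agrees within the relative tolerance.
np.testing.assert_allclose(perturbed, reference, rtol=1e-5)
```

Small float32 differences between a generated kernel and a vendor library are expected, since the two may accumulate sums in a different order, so a relative tolerance is a better fit than exact equality.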

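Conclusion

This tutorial covered the use of cuDNN with Relay. cuBLAS is also supported: if it is enabled, it is used for fully connected layers (relay.dense). To use cuBLAS, set the target string to "cuda -libs=cublas". To use cuDNN and cuBLAS together, set it to "cuda -libs=cudnn,cublas".

For the ROCm backend, MIOpen and rocBLAS are supported. Set the target to "rocm -libs=miopen,rocblas" to enable them.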
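The target strings above all follow the same pattern: a backend name, optionally followed by -libs= and a comma-separated list of libraries. A small sketch of a builder for such strings is shown below. make_target is a hypothetical convenience function, not part of TVM, and it does not check that TVM was actually built with the requested libraries.

```python
def make_target(backend, libs=()):
    """Build a target string such as "cuda -libs=cudnn,cublas" (hypothetical helper)."""
    libs = list(libs)
    if not libs:
        # No external libraries: TVM generates every kernel itself.
        return backend
    return f"{backend} -libs={','.join(libs)}"


targets = [
    make_target("cuda"),
    make_target("cuda", ["cudnn"]),
    make_target("cuda", ["cublas"]),
    make_target("cuda", ["cudnn", "cublas"]),
    make_target("rocm", ["miopen", "rocblas"]),
]
```

Each resulting string can be passed as the target argument of relay.build_module.build, in the same way as the literal strings used earlier in the tutorial.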

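Keep the following caveats in mind when using external libraries:

First, using external libraries may restrict how you can use TVM and Relay. For example, MIOpen currently supports only the NCHW layout and the fp32 data type, so other layouts or data types cannot be used in TVM with it.

Second, as shown above, external libraries limit the opportunities for operator fusion during graph compilation. TVM and Relay aim to achieve the best performance on a variety of hardware through joint operator-level and graph-level optimization. To reach that goal, development of better optimizations for TVM and Relay should continue, with external libraries serving as a fallback to existing implementations when necessary.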

Download Python source code: using_external_lib.py

Download Jupyter Notebook: using_external_lib.ipynb

相关文章:

【TVM 教程】在 Relay 中使用外部库

Apache TVM 是一个端到端的深度学习编译框架,适用于 CPU、GPU 和各种机器学习加速芯片。更多 TVM 中文文档可访问 → https://tvm.hyper.ai/ 作者:Masahiro Masuda,Truman Tian 本文介绍如何将 cuDNN 或 cuBLAS 等外部库与 Relay 一起使用。…...

2024最新大厂面试:汇川嵌入式面试题及参考答案

目录 结合汇川业务,谈谈你对嵌入式开发的理解。 你使用过哪些芯片?请介绍它们的架构,例如 CORTEX-M3。 请描述项目的软件架构及其难点。 请介绍 SPI 的驱动和时序,包括 CS 拉低后到 CLK 第一个跳变沿的时间。同时,也请简要介绍数据链路层的相关知识。 栈溢出的原理是…...

tcp 流量控制

TCP流量控制是TCP/IP协议中用于控制发送方和接收方之间数据传输速率的一种机制,以防止网络拥塞和确保网络资源的有效利用。流量控制主要通过调整TCP窗口大小来实现,确保发送方不会发送超出接收方处理能力的数据量。以下是TCP流量控制的关键概念和工作原理…...

linux离线安装nacos

1、打开 Nacos-GitHub ,点击 Release 可以看到 Nacos 的各版本跟新信息和安装包之类的 点击下载nacos-server-2.4.1.tar.gz,在linux创建nacos文件夹,把下载好的文件上传到nacos文件夹,并通过命令解压:tar -zxvf nacos-server-2.4.…...

云原生 | 在 Kubernetes 中使用 Cilium 替代 Calico 网络插件实践指南!

[ 知识是人生的灯塔,只有不断学习,才能照亮前行的道路 ] 0x00 简述介绍 什么是 Cilium? Cilium 是一款开源软件,它基于一种名为eBPF的新的Linux内核技术提供动力,用于透明地保护使用 Docker 和 Kubernetes 等Linux 容器管理平台中部署的应用程序服务之间的网络连接,Ciliu…...

【重学 MySQL】十一、SQL 概述

【重学 MySQL】十一、SQL 概述 SQL 背景知识产生与发展主要特点主要应用SQL语言的发展趋势 SQL 语言排行榜SQL 分类数据查询语言(DQL, Data Query Language)数据操纵语言(DML, Data Manipulation Language)数据定义语言࿰…...

(一)模式识别——基于SVM的道路分割实验(附资源)

写在前面:本报告所有代码公开在附带资源中,无法下载代码资源的伙伴私信留下邮箱,小编24小时内回复 一、实验目的 1、实验目标 学习掌握SVM(Support Vector Machine)算法思想,利用MATLAB的特定工具箱和库函…...

Python | Leetcode Python题解之第391题完美矩形

题目: 题解: class Solution:def isRectangleCover(self, rectangles: List[List[int]]) -> bool:area, minX, minY, maxX, maxY 0, rectangles[0][0], rectangles[0][1], rectangles[0][2], rectangles[0][3]cnt defaultdict(int)for rect in rec…...

Rust模块std::thread

【图书介绍】《Rust编程与项目实战》-CSDN博客 《Rust编程与项目实战》(朱文伟,李建英)【摘要 书评 试读】- 京东图书 (jd.com) Rust到底值不值得学,之一 -CSDN博客 Rust到底值不值得学,之二-CSDN博客 Rust多线程编程概述-CSDN博客 12.…...

Leetcode Day20 打家劫舍

198 最基础 class Solution:def rob(self, nums: List[int]) -> int:dp1 [0] * len(nums)dp2 [0] * len(nums)# dp1指第i天偷了, dp2指第i天没有偷dp1[0] nums[0]for i in range(1, len(nums)):dp1[i] dp2[i - 1] nums[i]dp2[i] max(dp1[i - 1], dp2[i - 1])return m…...

云计算之数据库

目录 一、RDS产品介绍及排障思路 1.1 云RDS数据库及其特点 1.2 云RDS数据库-规格 1.3 云RDS数据库-存储 1.4 云RDS数据库-安全 1.5 云RDS数据库-整体架构 1.6 RDS常见问题排查 1.6.1 如何解决无法链接RDS实例的问题 1.6.2 RDS实例存储空间使用率高,怎…...

开发软件,什么类型的重要信息的日志要存到数据库表里面

在开发软件时,选择将哪些类型的重要信息日志存储到数据库表里面,主要取决于这些日志的用途、查询需求、性能考虑以及系统架构。以下是一些通常会选择存储到数据库表中的重要信息日志类型: 1. 业务日志: 交易记录:记录…...

websocket和轮询的区别?

问: websocket和轮询的区别? 回答: WebSocket 和定时轮询(每隔几秒发送一次请求)是两种不同的实时通信方法,各有优缺点,适用于不同的场景。以下是它们的主要区别及适用场景: WebSo…...

2024 年全国大学生数学建模竞赛(国赛)浅析

需要完整资料,请关注WX:“小何数模”! (需要完整B、C和E题资料请关注WX:“小何数模”,获取资料链接!) 本次万众瞩目的全国大学生数学建模赛题已正式出炉,无论是赛题难度…...

持续集成与持续部署(CI/CD)的深入探讨

在现代软件开发中,持续集成(CI)和持续部署(CD)已成为不可或缺的实践。这些方法旨在加快软件交付的速度,同时提高软件的质量和稳定性。通过CI/CD,开发团队可以频繁地将代码更改集成到主分支&…...

hyperf json-rpc

安装 安装docker hyperf 安装 hyperf-rpc-server-v8 (服务端) docker run --name hyperf-rpc-server-v8 \ -v /www/docker/hyperf-rpc-server:/data/project \ -w /data/project \ -p 9508:9501 -it \ --privileged -u root \ --entrypoint /bin/sh \…...

基于SpringBoot的外卖点餐系统

你好呀,我是计算机学姐码农小野!如果有相关需求,可以私信联系我。 开发语言:Java 数据库:MySQL 技术:SpringBootJSP 工具:IDEA/Eclipse、Navicat、Maven、Tomcat 系统展示 首页 用户管理界…...

网络编程day02(字节序、TCP编程)

目录 【1】字节序 1》大小端转换 2》端口转换 3》IP地址转换 主机字节序转换为网络字节序 (小端序->大端序) 网络字节序转换为主机字节序(大端序->小端序) 【2】TCP编程 1》流程 2》函数接口 1> socket 2> …...

)

萌新6:临场发挥(区间dp)

题目描述 小x和室友总共 nnn 人,组团去打一款游戏,总共有 nnn 台电脑供他们使用,一人一台,最开始,第 iii 个人使用第 iii 台电脑。 小x评估了每个人的能力值和临场发挥值。 第 iii 个人的能力值为 aia_iai。 而他们…...

《数字信号处理》学习04-离散时间系统中的线性时不变系统

目录 一,系统及离散时间系统 二,离散时间系统中的线性时不变系统 1,线性系统 1) 可加性 2) 比例性(齐次性) 3)叠加原理(叠加性质) 2,时不变系统(移不变系统) 通过前几篇文章的学习,此时我对序列的相关概…...

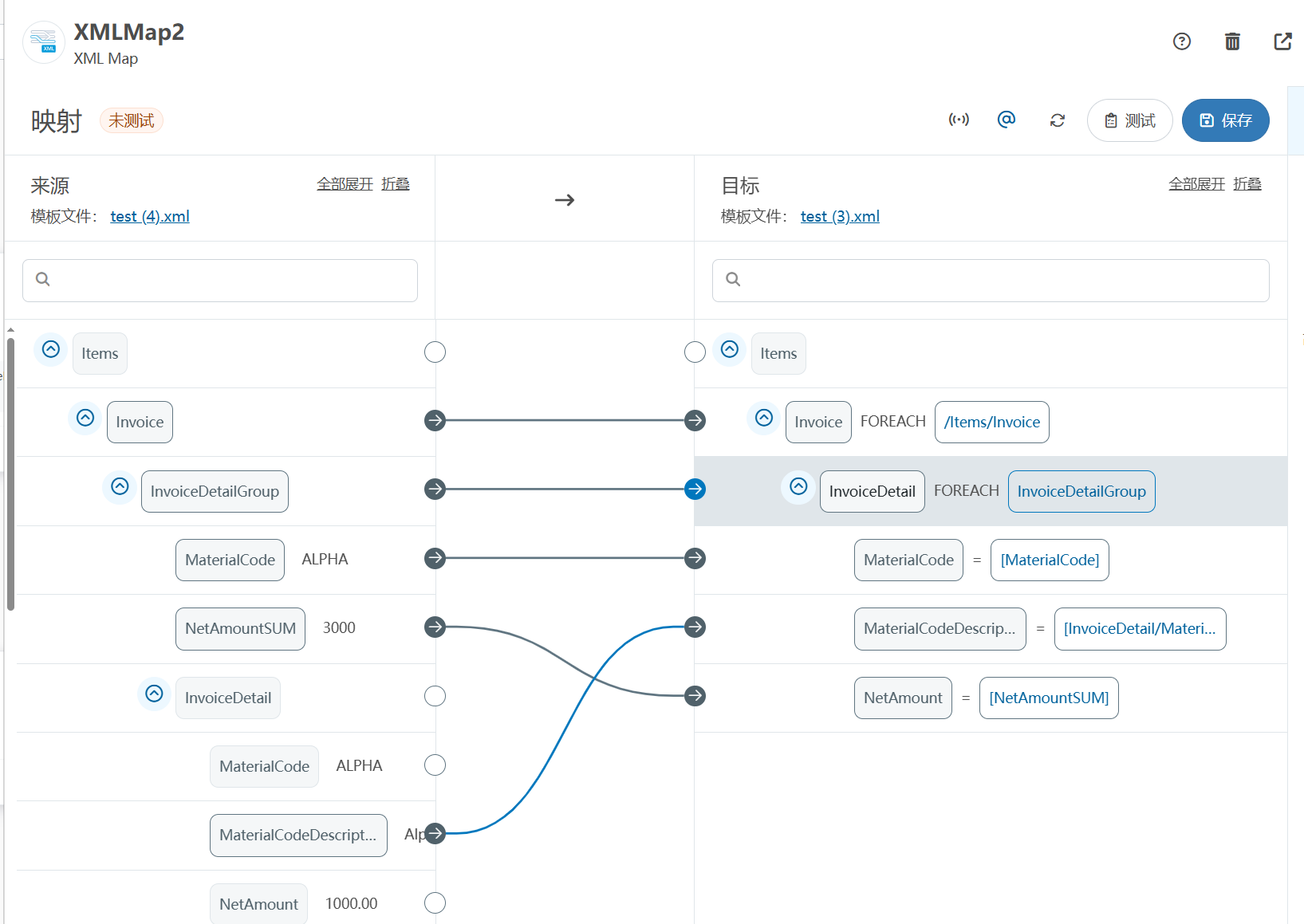

XML Group端口详解

在XML数据映射过程中,经常需要对数据进行分组聚合操作。例如,当处理包含多个物料明细的XML文件时,可能需要将相同物料号的明细归为一组,或对相同物料号的数量进行求和计算。传统实现方式通常需要编写脚本代码,增加了开…...

零门槛NAS搭建:WinNAS如何让普通电脑秒变私有云?

一、核心优势:专为Windows用户设计的极简NAS WinNAS由深圳耘想存储科技开发,是一款收费低廉但功能全面的Windows NAS工具,主打“无学习成本部署” 。与其他NAS软件相比,其优势在于: 无需硬件改造:将任意W…...

基于大模型的 UI 自动化系统

基于大模型的 UI 自动化系统 下面是一个完整的 Python 系统,利用大模型实现智能 UI 自动化,结合计算机视觉和自然语言处理技术,实现"看屏操作"的能力。 系统架构设计 #mermaid-svg-2gn2GRvh5WCP2ktF {font-family:"trebuchet ms",verdana,arial,sans-…...

)

椭圆曲线密码学(ECC)

一、ECC算法概述 椭圆曲线密码学(Elliptic Curve Cryptography)是基于椭圆曲线数学理论的公钥密码系统,由Neal Koblitz和Victor Miller在1985年独立提出。相比RSA,ECC在相同安全强度下密钥更短(256位ECC ≈ 3072位RSA…...

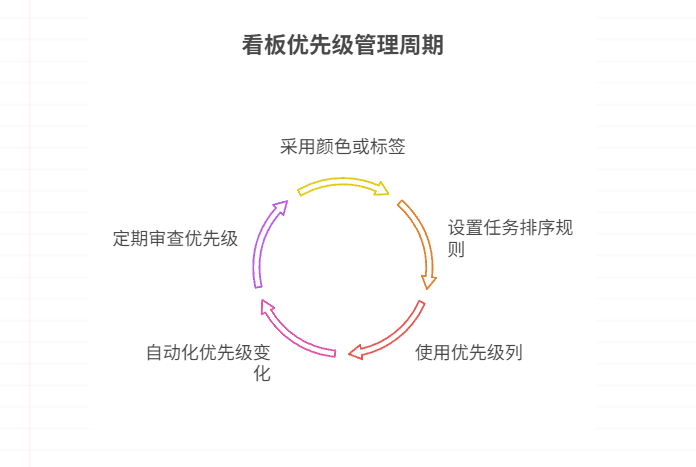

如何在看板中体现优先级变化

在看板中有效体现优先级变化的关键措施包括:采用颜色或标签标识优先级、设置任务排序规则、使用独立的优先级列或泳道、结合自动化规则同步优先级变化、建立定期的优先级审查流程。其中,设置任务排序规则尤其重要,因为它让看板视觉上直观地体…...

【SpringBoot】100、SpringBoot中使用自定义注解+AOP实现参数自动解密

在实际项目中,用户注册、登录、修改密码等操作,都涉及到参数传输安全问题。所以我们需要在前端对账户、密码等敏感信息加密传输,在后端接收到数据后能自动解密。 1、引入依赖 <dependency><groupId>org.springframework.boot</groupId><artifactId...

使用分级同态加密防御梯度泄漏

抽象 联邦学习 (FL) 支持跨分布式客户端进行协作模型训练,而无需共享原始数据,这使其成为在互联和自动驾驶汽车 (CAV) 等领域保护隐私的机器学习的一种很有前途的方法。然而,最近的研究表明&…...

-----深度优先搜索(DFS)实现)

c++ 面试题(1)-----深度优先搜索(DFS)实现

操作系统:ubuntu22.04 IDE:Visual Studio Code 编程语言:C11 题目描述 地上有一个 m 行 n 列的方格,从坐标 [0,0] 起始。一个机器人可以从某一格移动到上下左右四个格子,但不能进入行坐标和列坐标的数位之和大于 k 的格子。 例…...

解决本地部署 SmolVLM2 大语言模型运行 flash-attn 报错

出现的问题 安装 flash-attn 会一直卡在 build 那一步或者运行报错 解决办法 是因为你安装的 flash-attn 版本没有对应上,所以报错,到 https://github.com/Dao-AILab/flash-attention/releases 下载对应版本,cu、torch、cp 的版本一定要对…...

Spring AI 入门:Java 开发者的生成式 AI 实践之路

一、Spring AI 简介 在人工智能技术快速迭代的今天,Spring AI 作为 Spring 生态系统的新生力量,正在成为 Java 开发者拥抱生成式 AI 的最佳选择。该框架通过模块化设计实现了与主流 AI 服务(如 OpenAI、Anthropic)的无缝对接&…...