爆改YOLOv8|使用MobileNetV4替换yolov8的Backbone

1,本文介绍

MobileNetV4 是最新的 MobileNet 系列模型,专为移动设备优化。它引入了通用反转瓶颈(UIB)和 Mobile MQA 注意力机制,提升了推理速度和效率。通过改进的神经网络架构搜索(NAS)和蒸馏技术,MobileNetV4 在多种硬件平台上实现了高效和准确的表现,在 ImageNet-1K 数据集上达到 87% 的准确率,同时在 Pixel 8 EdgeTPU 上的运行时间为 3.8 毫秒。

关于MobileNetV4的详细介绍可以看论文:[2404.10518] MobileNetV4 - Universal Models for the Mobile Ecosystem

本文将讲解如何将MobileNetV4融合进yolov8

话不多说,上代码!

2, 将MobileNetV4融合进yolov8

2.1 步骤一

首先找到如下的目录'ultralytics/nn/modules',然后在这个目录下创建一个MobileNetV4.py文件,文件名字可以根据你自己的习惯起,然后将MobileNetV4的核心代码复制进去。

from typing import Optional

import torch

import torch.nn as nn

import torch.nn.functional as F__all__ = ['MobileNetV4ConvLarge', 'MobileNetV4ConvSmall', 'MobileNetV4ConvMedium', 'MobileNetV4HybridMedium', 'MobileNetV4HybridLarge']MNV4ConvSmall_BLOCK_SPECS = {"conv0": {"block_name": "convbn","num_blocks": 1,"block_specs": [[3, 32, 3, 2]]},"layer1": {"block_name": "convbn","num_blocks": 2,"block_specs": [[32, 32, 3, 2],[32, 32, 1, 1]]},"layer2": {"block_name": "convbn","num_blocks": 2,"block_specs": [[32, 96, 3, 2],[96, 64, 1, 1]]},"layer3": {"block_name": "uib","num_blocks": 6,"block_specs": [[64, 96, 5, 5, True, 2, 3],[96, 96, 0, 3, True, 1, 2],[96, 96, 0, 3, True, 1, 2],[96, 96, 0, 3, True, 1, 2],[96, 96, 0, 3, True, 1, 2],[96, 96, 3, 0, True, 1, 4],]},"layer4": {"block_name": "uib","num_blocks": 6,"block_specs": [[96, 128, 3, 3, True, 2, 6],[128, 128, 5, 5, True, 1, 4],[128, 128, 0, 5, True, 1, 4],[128, 128, 0, 5, True, 1, 3],[128, 128, 0, 3, True, 1, 4],[128, 128, 0, 3, True, 1, 4],]},"layer5": {"block_name": "convbn","num_blocks": 2,"block_specs": [[128, 960, 1, 1],[960, 1280, 1, 1]]}

}MNV4ConvMedium_BLOCK_SPECS = {"conv0": {"block_name": "convbn","num_blocks": 1,"block_specs": [[3, 32, 3, 2]]},"layer1": {"block_name": "fused_ib","num_blocks": 1,"block_specs": [[32, 48, 2, 4.0, True]]},"layer2": {"block_name": "uib","num_blocks": 2,"block_specs": [[48, 80, 3, 5, True, 2, 4],[80, 80, 3, 3, True, 1, 2]]},"layer3": {"block_name": "uib","num_blocks": 8,"block_specs": [[80, 160, 3, 5, True, 2, 6],[160, 160, 3, 3, True, 1, 4],[160, 160, 3, 3, True, 1, 4],[160, 160, 3, 5, True, 1, 4],[160, 160, 3, 3, True, 1, 4],[160, 160, 3, 0, True, 1, 4],[160, 160, 0, 0, True, 1, 2],[160, 160, 3, 0, True, 1, 4]]},"layer4": {"block_name": "uib","num_blocks": 11,"block_specs": [[160, 256, 5, 5, True, 2, 6],[256, 256, 5, 5, True, 1, 4],[256, 256, 3, 5, True, 1, 4],[256, 256, 3, 5, True, 1, 4],[256, 256, 0, 0, True, 1, 4],[256, 256, 3, 0, True, 1, 4],[256, 256, 3, 5, True, 1, 2],[256, 256, 5, 5, True, 1, 4],[256, 256, 0, 0, True, 1, 4],[256, 256, 0, 0, True, 1, 4],[256, 256, 5, 0, True, 1, 2]]},"layer5": {"block_name": "convbn","num_blocks": 2,"block_specs": [[256, 960, 1, 1],[960, 1280, 1, 1]]}

}MNV4ConvLarge_BLOCK_SPECS = {"conv0": {"block_name": "convbn","num_blocks": 1,"block_specs": [[3, 24, 3, 2]]},"layer1": {"block_name": "fused_ib","num_blocks": 1,"block_specs": [[24, 48, 2, 4.0, True]]},"layer2": {"block_name": "uib","num_blocks": 2,"block_specs": [[48, 96, 3, 5, True, 2, 4],[96, 96, 3, 3, True, 1, 4]]},"layer3": {"block_name": "uib","num_blocks": 11,"block_specs": [[96, 192, 3, 5, True, 2, 4],[192, 192, 3, 3, True, 1, 4],[192, 192, 3, 3, True, 1, 4],[192, 192, 3, 3, True, 1, 4],[192, 192, 3, 5, True, 1, 4],[192, 192, 5, 3, True, 1, 4],[192, 192, 5, 3, True, 1, 4],[192, 192, 5, 3, True, 1, 4],[192, 192, 5, 3, True, 1, 4],[192, 192, 5, 3, True, 1, 4],[192, 192, 3, 0, True, 1, 4]]},"layer4": {"block_name": "uib","num_blocks": 13,"block_specs": [[192, 512, 5, 5, True, 2, 4],[512, 512, 5, 5, True, 1, 4],[512, 512, 5, 5, True, 1, 4],[512, 512, 5, 5, True, 1, 4],[512, 512, 5, 0, True, 1, 4],[512, 512, 5, 3, True, 1, 4],[512, 512, 5, 0, True, 1, 4],[512, 512, 5, 0, True, 1, 4],[512, 512, 5, 3, True, 1, 4],[512, 512, 5, 5, True, 1, 4],[512, 512, 5, 0, True, 1, 4],[512, 512, 5, 0, True, 1, 4],[512, 512, 5, 0, True, 1, 4]]},"layer5": {"block_name": "convbn","num_blocks": 2,"block_specs": [[512, 960, 1, 1],[960, 1280, 1, 1]]}

}def mhsa(num_heads, key_dim, value_dim, px):if px == 24:kv_strides = 2elif px == 12:kv_strides = 1query_h_strides = 1query_w_strides = 1use_layer_scale = Trueuse_multi_query = Trueuse_residual = Truereturn [num_heads, key_dim, value_dim, query_h_strides, query_w_strides, kv_strides,use_layer_scale, use_multi_query, use_residual]MNV4HybridConvMedium_BLOCK_SPECS = {"conv0": {"block_name": "convbn","num_blocks": 1,"block_specs": [[3, 32, 3, 2]]},"layer1": {"block_name": "fused_ib","num_blocks": 1,"block_specs": [[32, 48, 2, 4.0, True]]},"layer2": {"block_name": "uib","num_blocks": 2,"block_specs": [[48, 80, 3, 5, True, 2, 4],[80, 80, 3, 3, True, 1, 2]]},"layer3": {"block_name": "uib","num_blocks": 8,"block_specs": [[80, 160, 3, 5, True, 2, 6],[160, 160, 0, 0, True, 1, 2],[160, 160, 3, 3, True, 1, 4],[160, 160, 3, 5, True, 1, 4, mhsa(4, 64, 64, 24)],[160, 160, 3, 3, True, 1, 4, mhsa(4, 64, 64, 24)],[160, 160, 3, 0, True, 1, 4, mhsa(4, 64, 64, 24)],[160, 160, 3, 3, True, 1, 4, mhsa(4, 64, 64, 24)],[160, 160, 3, 0, True, 1, 4]]},"layer4": {"block_name": "uib","num_blocks": 12,"block_specs": [[160, 256, 5, 5, True, 2, 6],[256, 256, 5, 5, True, 1, 4],[256, 256, 3, 5, True, 1, 4],[256, 256, 3, 5, True, 1, 4],[256, 256, 0, 0, True, 1, 2],[256, 256, 3, 5, True, 1, 2],[256, 256, 0, 0, True, 1, 2],[256, 256, 0, 0, True, 1, 4, mhsa(4, 64, 64, 12)],[256, 256, 3, 0, True, 1, 4, mhsa(4, 64, 64, 12)],[256, 256, 5, 5, True, 1, 4, mhsa(4, 64, 64, 12)],[256, 256, 5, 0, True, 1, 4, mhsa(4, 64, 64, 12)],[256, 256, 5, 0, True, 1, 4]]},"layer5": {"block_name": "convbn","num_blocks": 2,"block_specs": [[256, 960, 1, 1],[960, 1280, 1, 1]]}

}MNV4HybridConvLarge_BLOCK_SPECS = {"conv0": {"block_name": "convbn","num_blocks": 1,"block_specs": [[3, 24, 3, 2]]},"layer1": {"block_name": "fused_ib","num_blocks": 1,"block_specs": [[24, 48, 2, 4.0, True]]},"layer2": {"block_name": "uib","num_blocks": 2,"block_specs": [[48, 96, 3, 5, True, 2, 4],[96, 96, 3, 3, True, 1, 4]]},"layer3": {"block_name": "uib","num_blocks": 11,"block_specs": [[96, 192, 3, 5, True, 2, 4],[192, 192, 3, 3, True, 1, 4],[192, 192, 3, 3, True, 1, 4],[192, 192, 3, 3, True, 1, 4],[192, 192, 3, 5, True, 1, 4],[192, 192, 5, 3, True, 1, 4],[192, 192, 5, 3, True, 1, 4, mhsa(8, 48, 48, 24)],[192, 192, 5, 3, True, 1, 4, mhsa(8, 48, 48, 24)],[192, 192, 5, 3, True, 1, 4, mhsa(8, 48, 48, 24)],[192, 192, 5, 3, True, 1, 4, mhsa(8, 48, 48, 24)],[192, 192, 3, 0, True, 1, 4]]},"layer4": {"block_name": "uib","num_blocks": 14,"block_specs": [[192, 512, 5, 5, True, 2, 4],[512, 512, 5, 5, True, 1, 4],[512, 512, 5, 5, True, 1, 4],[512, 512, 5, 5, True, 1, 4],[512, 512, 5, 0, True, 1, 4],[512, 512, 5, 3, True, 1, 4],[512, 512, 5, 0, True, 1, 4],[512, 512, 5, 0, True, 1, 4],[512, 512, 5, 3, True, 1, 4],[512, 512, 5, 5, True, 1, 4, mhsa(8, 64, 64, 12)],[512, 512, 5, 0, True, 1, 4, mhsa(8, 64, 64, 12)],[512, 512, 5, 0, True, 1, 4, mhsa(8, 64, 64, 12)],[512, 512, 5, 0, True, 1, 4, mhsa(8, 64, 64, 12)],[512, 512, 5, 0, True, 1, 4]]},"layer5": {"block_name": "convbn","num_blocks": 2,"block_specs": [[512, 960, 1, 1],[960, 1280, 1, 1]]}

}MODEL_SPECS = {"MobileNetV4ConvSmall": MNV4ConvSmall_BLOCK_SPECS,"MobileNetV4ConvMedium": MNV4ConvMedium_BLOCK_SPECS,"MobileNetV4ConvLarge": MNV4ConvLarge_BLOCK_SPECS,"MobileNetV4HybridMedium": MNV4HybridConvMedium_BLOCK_SPECS,"MobileNetV4HybridLarge": MNV4HybridConvLarge_BLOCK_SPECS

}def make_divisible(value: float,divisor: int,min_value: Optional[float] = None,round_down_protect: bool = True,

) -> int:"""This function is copied from here"https://github.com/tensorflow/models/blob/master/official/vision/modeling/layers/nn_layers.py"This is to ensure that all layers have channels that are divisible by 8.Args:value: A `float` of original value.divisor: An `int` of the divisor that need to be checked upon.min_value: A `float` of minimum value threshold.round_down_protect: A `bool` indicating whether round down more than 10%will be allowed.Returns:The adjusted value in `int` that is divisible against divisor."""if min_value is None:min_value = divisornew_value = max(min_value, int(value + divisor / 2) // divisor * divisor)# Make sure that round down does not go down by more than 10%.if round_down_protect and new_value < 0.9 * value:new_value += divisorreturn int(new_value)def conv_2d(inp, oup, kernel_size=3, stride=1, groups=1, bias=False, norm=True, act=True):conv = nn.Sequential()padding = (kernel_size - 1) // 2conv.add_module('conv', nn.Conv2d(inp, oup, kernel_size, stride, padding, bias=bias, groups=groups))if norm:conv.add_module('BatchNorm2d', nn.BatchNorm2d(oup))if act:conv.add_module('Activation', nn.ReLU6())return convclass InvertedResidual(nn.Module):def __init__(self, inp, oup, stride, expand_ratio, act=False, squeeze_excitation=False):super(InvertedResidual, self).__init__()self.stride = strideassert stride in [1, 2]hidden_dim = int(round(inp * expand_ratio))self.block = nn.Sequential()if expand_ratio != 1:self.block.add_module('exp_1x1', conv_2d(inp, hidden_dim, kernel_size=3, stride=stride))if squeeze_excitation:self.block.add_module('conv_3x3',conv_2d(hidden_dim, hidden_dim, kernel_size=3, stride=stride, groups=hidden_dim))self.block.add_module('red_1x1', conv_2d(hidden_dim, oup, kernel_size=1, stride=1, act=act))self.use_res_connect = self.stride == 1 and inp == oupdef forward(self, x):if self.use_res_connect:return x + self.block(x)else:return self.block(x)class UniversalInvertedBottleneckBlock(nn.Module):def __init__(self,inp,oup,start_dw_kernel_size,middle_dw_kernel_size,middle_dw_downsample,stride,expand_ratio):"""An inverted bottleneck block with optional depthwises.Referenced from here https://github.com/tensorflow/models/blob/master/official/vision/modeling/layers/nn_blocks.py"""super().__init__()# Starting depthwise conv.self.start_dw_kernel_size = start_dw_kernel_sizeif self.start_dw_kernel_size:stride_ = stride if not middle_dw_downsample else 1self._start_dw_ = conv_2d(inp, inp, kernel_size=start_dw_kernel_size, stride=stride_, groups=inp, act=False)# Expansion with 1x1 convs.expand_filters = make_divisible(inp * expand_ratio, 8)self._expand_conv = conv_2d(inp, expand_filters, kernel_size=1)# Middle depthwise conv.self.middle_dw_kernel_size = middle_dw_kernel_sizeif self.middle_dw_kernel_size:stride_ = stride if middle_dw_downsample else 1self._middle_dw = conv_2d(expand_filters, expand_filters, kernel_size=middle_dw_kernel_size, stride=stride_,groups=expand_filters)# Projection with 1x1 convs.self._proj_conv = conv_2d(expand_filters, oup, kernel_size=1, stride=1, act=False)# Ending depthwise conv.# this not used# _end_dw_kernel_size = 0# self._end_dw = conv_2d(oup, oup, kernel_size=_end_dw_kernel_size, stride=stride, groups=inp, act=False)def forward(self, x):if self.start_dw_kernel_size:x = self._start_dw_(x)# print("_start_dw_", x.shape)x = self._expand_conv(x)# print("_expand_conv", x.shape)if self.middle_dw_kernel_size:x = self._middle_dw(x)# print("_middle_dw", x.shape)x = self._proj_conv(x)# print("_proj_conv", x.shape)return xclass MultiQueryAttentionLayerWithDownSampling(nn.Module):def __init__(self, inp, num_heads, key_dim, value_dim, query_h_strides, query_w_strides, kv_strides,dw_kernel_size=3, dropout=0.0):"""Multi Query Attention with spatial downsampling.Referenced from here https://github.com/tensorflow/models/blob/master/official/vision/modeling/layers/nn_blocks.py3 parameters are introduced for the spatial downsampling:1. kv_strides: downsampling factor on Key and Values only.2. query_h_strides: vertical strides on Query only.3. query_w_strides: horizontal strides on Query only.This is an optimized version.1. Projections in Attention is explict written out as 1x1 Conv2D.2. Additional reshapes are introduced to bring a up to 3x speed up."""super().__init__()self.num_heads = num_headsself.key_dim = key_dimself.value_dim = value_dimself.query_h_strides = query_h_stridesself.query_w_strides = query_w_stridesself.kv_strides = kv_stridesself.dw_kernel_size = dw_kernel_sizeself.dropout = dropoutself.head_dim = key_dim // num_headsif self.query_h_strides > 1 or self.query_w_strides > 1:self._query_downsampling_norm = nn.BatchNorm2d(inp)self._query_proj = conv_2d(inp, num_heads * key_dim, 1, 1, norm=False, act=False)if self.kv_strides > 1:self._key_dw_conv = conv_2d(inp, inp, dw_kernel_size, kv_strides, groups=inp, norm=True, act=False)self._value_dw_conv = conv_2d(inp, inp, dw_kernel_size, kv_strides, groups=inp, norm=True, act=False)self._key_proj = conv_2d(inp, key_dim, 1, 1, norm=False, act=False)self._value_proj = conv_2d(inp, key_dim, 1, 1, norm=False, act=False)self._output_proj = conv_2d(num_heads * key_dim, inp, 1, 1, norm=False, act=False)self.dropout = nn.Dropout(p=dropout)def forward(self, x):batch_size, seq_length, _, _ = x.size()if self.query_h_strides > 1 or self.query_w_strides > 1:q = F.avg_pool2d(self.query_h_stride, self.query_w_stride)q = self._query_downsampling_norm(q)q = self._query_proj(q)else:q = self._query_proj(x)px = q.size(2)q = q.view(batch_size, self.num_heads, -1, self.key_dim) # [batch_size, num_heads, seq_length, key_dim]if self.kv_strides > 1:k = self._key_dw_conv(x)k = self._key_proj(k)v = self._value_dw_conv(x)v = self._value_proj(v)else:k = self._key_proj(x)v = self._value_proj(x)k = k.view(batch_size, self.key_dim, -1) # [batch_size, key_dim, seq_length]v = v.view(batch_size, -1, self.key_dim) # [batch_size, seq_length, key_dim]# calculate attn scoreattn_score = torch.matmul(q, k) / (self.head_dim ** 0.5)attn_score = self.dropout(attn_score)attn_score = F.softmax(attn_score, dim=-1)context = torch.matmul(attn_score, v)context = context.view(batch_size, self.num_heads * self.key_dim, px, px)output = self._output_proj(context)return outputclass MNV4LayerScale(nn.Module):def __init__(self, init_value):"""LayerScale as introduced in CaiT: https://arxiv.org/abs/2103.17239Referenced from here https://github.com/tensorflow/models/blob/master/official/vision/modeling/layers/nn_blocks.pyAs used in MobileNetV4.Attributes:init_value (float): value to initialize the diagonal matrix of LayerScale."""super().__init__()self.init_value = init_valuedef forward(self, x):gamma = self.init_value * torch.ones(x.size(-1), dtype=x.dtype, device=x.device)return x * gammaclass MultiHeadSelfAttentionBlock(nn.Module):def __init__(self,inp,num_heads,key_dim,value_dim,query_h_strides,query_w_strides,kv_strides,use_layer_scale,use_multi_query,use_residual=True):super().__init__()self.query_h_strides = query_h_stridesself.query_w_strides = query_w_stridesself.kv_strides = kv_stridesself.use_layer_scale = use_layer_scaleself.use_multi_query = use_multi_queryself.use_residual = use_residualself._input_norm = nn.BatchNorm2d(inp)if self.use_multi_query:self.multi_query_attention = MultiQueryAttentionLayerWithDownSampling(inp, num_heads, key_dim, value_dim, query_h_strides, query_w_strides, kv_strides)else:self.multi_head_attention = nn.MultiheadAttention(inp, num_heads, kdim=key_dim)if self.use_layer_scale:self.layer_scale_init_value = 1e-5self.layer_scale = MNV4LayerScale(self.layer_scale_init_value)def forward(self, x):# Not using CPE, skipped# input normshortcut = xx = self._input_norm(x)# multi queryif self.use_multi_query:x = self.multi_query_attention(x)else:x = self.multi_head_attention(x, x)# layer scaleif self.use_layer_scale:x = self.layer_scale(x)# use residualif self.use_residual:x = x + shortcutreturn xdef build_blocks(layer_spec):if not layer_spec.get('block_name'):return nn.Sequential()block_names = layer_spec['block_name']layers = nn.Sequential()if block_names == "convbn":schema_ = ['inp', 'oup', 'kernel_size', 'stride']for i in range(layer_spec['num_blocks']):args = dict(zip(schema_, layer_spec['block_specs'][i]))layers.add_module(f"convbn_{i}", conv_2d(**args))elif block_names == "uib":schema_ = ['inp', 'oup', 'start_dw_kernel_size', 'middle_dw_kernel_size', 'middle_dw_downsample', 'stride','expand_ratio', 'msha']for i in range(layer_spec['num_blocks']):args = dict(zip(schema_, layer_spec['block_specs'][i]))msha = args.pop("msha") if "msha" in args else 0layers.add_module(f"uib_{i}", UniversalInvertedBottleneckBlock(**args))if msha:msha_schema_ = ["inp", "num_heads", "key_dim", "value_dim", "query_h_strides", "query_w_strides", "kv_strides","use_layer_scale", "use_multi_query", "use_residual"]args = dict(zip(msha_schema_, [args['oup']] + (msha)))layers.add_module(f"msha_{i}", MultiHeadSelfAttentionBlock(**args))elif block_names == "fused_ib":schema_ = ['inp', 'oup', 'stride', 'expand_ratio', 'act']for i in range(layer_spec['num_blocks']):args = dict(zip(schema_, layer_spec['block_specs'][i]))layers.add_module(f"fused_ib_{i}", InvertedResidual(**args))else:raise NotImplementedErrorreturn layersclass MobileNetV4(nn.Module):def __init__(self, model):# MobileNetV4ConvSmall MobileNetV4ConvMedium MobileNetV4ConvLarge# MobileNetV4HybridMedium MobileNetV4HybridLarge"""Params to initiate MobilenNetV4Args:model : support 5 types of models as indicated in"https://github.com/tensorflow/models/blob/master/official/vision/modeling/backbones/mobilenet.py""""super().__init__()assert model in MODEL_SPECS.keys()self.model = modelself.spec = MODEL_SPECS[self.model]# conv0self.conv0 = build_blocks(self.spec['conv0'])# layer1self.layer1 = build_blocks(self.spec['layer1'])# layer2self.layer2 = build_blocks(self.spec['layer2'])# layer3self.layer3 = build_blocks(self.spec['layer3'])# layer4self.layer4 = build_blocks(self.spec['layer4'])# layer5self.layer5 = build_blocks(self.spec['layer5'])self.width_list = [i.size(1) for i in self.forward(torch.randn(1, 3, 640, 640))]def forward(self, x):x0 = self.conv0(x)x1 = self.layer1(x0)x2 = self.layer2(x1)x3 = self.layer3(x2)x4 = self.layer4(x3)# x5 = self.layer5(x4)# x5 = nn.functional.adaptive_avg_pool2d(x5, 1)return [x1, x2, x3, x4]def MobileNetV4ConvSmall():model = MobileNetV4('MobileNetV4ConvSmall')return modeldef MobileNetV4ConvMedium():model = MobileNetV4('MobileNetV4ConvMedium')return modeldef MobileNetV4ConvLarge():model = MobileNetV4('MobileNetV4ConvLarge')return modeldef MobileNetV4HybridMedium():model = MobileNetV4('MobileNetV4HybridMedium')return modeldef MobileNetV4HybridLarge():model = MobileNetV4('MobileNetV4HybridLarge')return model2.2 步骤二

在task.py导入我们的模块

2.3 步骤三

如下图标注框所示,添加两行代码

2.4 步骤四

在task.py如下图所示位置,添加标注框内所示代码

elif m in {MobileNetV4ConvLarge, MobileNetV4ConvSmall, \MobileNetV4ConvMedium, MobileNetV4HybridMedium, MobileNetV4HybridLarge}:m = m(*args)c2 = m.width_listbackbone = True2.5 步骤五

在task.py如下图所示位置,添加标注框内所示代码

2.6 步骤六

在task.py如下图所示位置的代码需要替换

替换为下图所示代码

if verbose:LOGGER.info(f'{i:>3}{str(f):>20}{n_:>3}{m.np:10.0f} {t:<45}{str(args):<30}') # printsave.extend(x % (i + 4 if backbone else i) for x in ([f] if isinstance(f, int) else f) if x != -1) # append to savelistlayers.append(m_)if i == 0:ch = []if isinstance(c2, list):ch.extend(c2)if len(c2) != 5:ch.insert(0, 0)else:ch.append(c2)2.7 步骤七

这次修改在base_model的predict_once方法里面,在task.py的前面部分代码中。

在task.py如下图所示位置的代码需要替换

替换为下图所示代码

def _predict_once(self, x, profile=False, visualize=False, embed=None):y, dt, embeddings = [], [], [] # outputsfor m in self.model:if m.f != -1: # if not from previous layerx = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layersif profile:self._profile_one_layer(m, x, dt)if hasattr(m, 'backbone'):x = m(x)if len(x) != 5: # 0 - 5x.insert(0, None)for index, i in enumerate(x):if index in self.save:y.append(i)else:y.append(None)x = x[-1] # 最后一个输出传给下一层else:x = m(x) # runy.append(x if m.i in self.save else None) # save outputif visualize:feature_visualization(x, m.type, m.i, save_dir=visualize)if embed and m.i in embed:embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1)) # flattenif m.i == max(embed):return torch.unbind(torch.cat(embeddings, 1), dim=0)return x2.8 步骤八

将下图所示代码注释掉,在ultralytics/utils/torch_utils.py中

修改为下图所示

2.9 步骤九

将下图所示代码注释掉,在task.py中,改为s=640

到这里完成修改,但是这里面细节很多,大家一定要注意,仔细修改,步骤比较多,出现错误很难找出来

复制下面的yaml文件运行即可

yaml文件

# Ultralytics YOLO 🚀, AGPL-3.0 license

# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'# [depth, width, max_channels]n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPss: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPsm: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPsl: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPsx: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs# YOLOv8.0n backbone

backbone:# [from, repeats, module, args]# MobileNetV4ConvSmall, MobileNetV4ConvLarge, MobileNetV4ConvMedium,# MobileNetV4HybridMedium, MobileNetV4HybridLarge 支持这五种版本- [-1, 1, MobileNetV4ConvSmall, []] # 4 将左面的MobileNetV4ConvSmall改为上面任意一个即替换对应的MobileNetV4版本- [-1, 1, SPPF, [1024, 5]] # 5# YOLOv8.0n head

head:- [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 6- [[-1, 3], 1, Concat, [1]] # 7 cat backbone P4- [-1, 3, C2f, [512]] # 8- [-1, 1, nn.Upsample, [None, 2, 'nearest']] # 9- [[-1, 2], 1, Concat, [1]] # 10 cat backbone P3- [-1, 3, C2f, [256]] # 11 (P3/8-small)- [-1, 1, Conv, [256, 3, 2]] # 12- [[-1, 8], 1, Concat, [1]] # 13 cat head P4- [-1, 3, C2f, [512]] # 14 (P4/16-medium)- [-1, 1, Conv, [512, 3, 2]] # 15- [[-1, 5], 1, Concat, [1]] # 16 cat head P5- [-1, 3, C2f, [1024]] # 17 (P5/32-large)- [[11, 14, 17], 1, Detect, [nc]] # Detect(P3, P4, P5)# 今天这个修改的地方比较多,大家一定要仔细检查

不知不觉已经看完了哦,动动小手留个点赞吧--_--

相关文章:

爆改YOLOv8|使用MobileNetV4替换yolov8的Backbone

1,本文介绍 MobileNetV4 是最新的 MobileNet 系列模型,专为移动设备优化。它引入了通用反转瓶颈(UIB)和 Mobile MQA 注意力机制,提升了推理速度和效率。通过改进的神经网络架构搜索(NAS)和蒸馏…...

C语言 | Leetcode C语言题解之第406题根据身高重建队列

题目: 题解: int cmp(const void* _a, const void* _b) {int *a *(int**)_a, *b *(int**)_b;return a[0] b[0] ? a[1] - b[1] : b[0] - a[0]; }int** reconstructQueue(int** people, int peopleSize, int* peopleColSize, int* returnSize, int** …...

【Git】初识Git

本篇文章的环境是在 Ubuntu/Linux 环境下编写的 文章目录 版本控制器Git 基本操作安装 Git创建 Git 本地仓库配置 Git认识工作区、暂存区、版本库添加文件修改文件版本回退撤销修改删除文件 版本控制器 在日常工作和学习中,老板/老师要求我们修改文档,…...

vue3 透传 Attributes

前言 Vue 3 现在正式支持了多根节点的组件,也就是片段! Vue 2.x 遵循单根节点组件的规则,即一个组件的模板必须有且仅有一个根元素。 为了满足单根节点的要求,开发者会将原本多根节点的内容包裹在一个<div>元素中&#x…...

4.接口测试基础(Jmter工具/场景二:一个项目由多个人负责接口测试,我只负责其中三个模块,协同)

一、场景二:一个项目由多个人负责接口测试,我只负责其中三个模块,协同 1.什么是测试片段? 1)就相当于只是项目的一部分用例,不能单独运行,必须要和控制器(include,模块)一…...

electron react离线使用monaco-editor

目录 1.搭建一个 electron-vite 项目 2.安装monaco-editor/react和monaco-editor 3.引入并做monaco-editor离线配置 4.react中使用 5.完整代码示例 6.monaco-editor离线配置官方说明 7.测试 1.搭建一个 electron-vite 项目 pnpm create quick-start/electron 参考链接…...

Python 的 WSGI 简单了解

从 flask 的 hello world 说起 直接讨论 WSGI,很多人可能没有概念,我们还是先从一个简单的 hello world 程序开始吧。 from flask import Flaskapp Flask(__name__)app.route("/", methods[GET]) def index():return "Hello world!&q…...

基于stm32使用ucgui+GUIBuilder开发ui实例

1 项目需求 1.1 基于Tft 触摸屏实现一个自锁按键 1.2 按键在按下后背景色需要进行变化,以凸显当前按键状态(选中or 未选中) 1.3 按键选中时对某一gpio输出低电平,非选中时输出高电平 2 移植 ucgui UCGUI的文件数量很大&#x…...

Spring扩展点系列-ApplicationContextAwareProcessor

文章目录 简介源码分析示例代码示例一:扩展点的执行顺序运行示例一 示例二:获取配置文件值配置文件application.properties内容定义工具类ConfigUtilcontroller测试调用运行示例二 示例三:实现ResourceLoaderAware读取文件ExtendResourceLoad…...

)

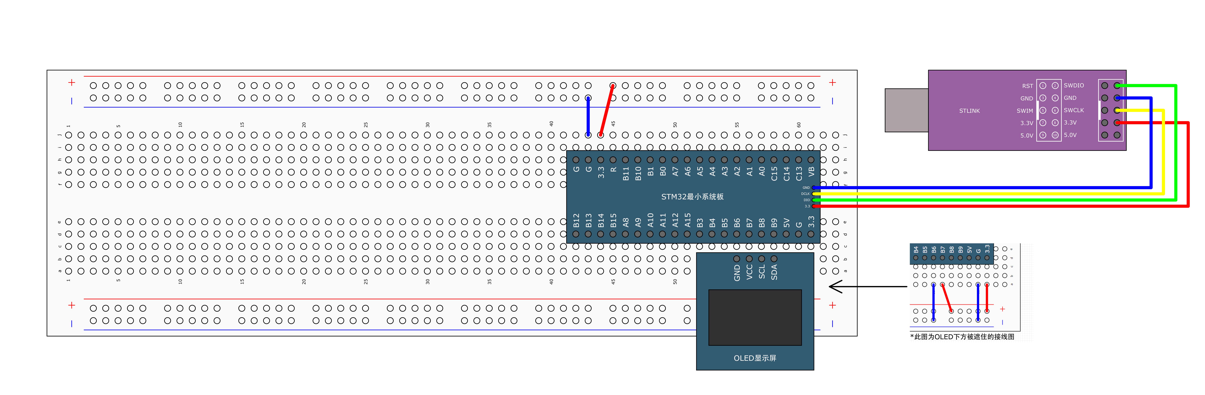

基于Keil软件实现实时时钟(江协科技HAL库)

实时时钟实验是基于江协科技STM32的HAL库工程模板创建的(可以在作品“基于江科大STM32创建的HAL库工程模板”中的结尾处获取工程模板的百度网盘链接) 复制“OLED显示”的工程文件——“4-1 OLED显示屏”,并命名为“12-2 实时时钟 ”。打开工程,把下面的程序复制到相应的文…...

dedecms靶场(四种webshell姿势)

进入靶场 姿势一:过文件管理器上传WebShell 步骤一:登录后台 /dede 步骤二:核心-》文件式管理-》文件上传-》上传一句话木马 点击 步骤三:进行蚁剑连接 姿势二:修改模板文件拿WebShell 步骤一:模板-》默认…...

PHP:强大的Web开发语言

PHP:强大的Web开发语言 一、PHP 简介及优势 PHP 的基本概念 PHP(PHP: Hypertext Preprocessor)即 “超文本预处理器”,是一种通用开源脚本语言,最初由 Rasmus Lerdorf 于 1994 年创建。它可以在服务器上执行…...

06_Python数据类型_元组

Python的基础数据类型 数值类型:整数、浮点数、复数、布尔字符串容器类型:列表、元祖、字典、集合 元组 元组(Tuple)是一种不可变的序列类型,与列表类似,但有一些关键的区别。本质:只读的列表…...

【Vue】- ref获取DOM元素和购物车案例分析

文章目录 知识回顾前言源码分析1. ref2. 购物车案例分析3. 购物车计算、全选 拓展知识数据持久化localStorage 总结 知识回顾 前言 元素上使用 ref属性关联响应式数据,获取DOM元素 步骤 ● 创建 ref > const hRef ref(null) ● 模板中建立关联 > <h1 re…...

【AI大模型】ChatGPT模型原理介绍(下)

目录 🍔 GPT-3介绍 1.1 GPT-3模型架构 1.2 GPT-3训练核心思想 1.3 GPT-3数据集 1.4 GPT-3模型的特点 1.5 GPT-3模型总结 🍔 ChatGPT介绍 2.1 ChatGPT原理 2.2 什么是强化学习 2.3 ChatGPT强化学习步骤 2.4 监督调优模型 2.5 训练奖励模型 2.…...

Python数据分析与可视化实战指南

在数据驱动的时代,Python因其简洁的语法、强大的库生态系统以及活跃的社区,成为了数据分析与可视化的首选语言。本文将通过一个详细的案例,带领大家学习如何使用Python进行数据分析,并通过可视化来直观呈现分析结果。 一、环境准…...

react18基础教程系列-- 框架基础理论知识mvc/jsx/createRoot

react的设计模式 React 是 mvc 体系,vue 是 mvvm 体系 mvc: model(数据)-view(视图)-controller(控制器) 我们需要按照专业的语法去构建 app 页面,react 使用的是 jsx 语法构建数据层,需要动态处理的的数据都要数据层支持控制层: 当我们需要…...

)

牛客周赛 Round 60 折返跑(组合数学)

题目链接:题目 大意: 在 1 1 1到 n n n之间往返跑m趟,推 m − 1 m-1 m−1次杆子,每次都向中间推,不能推零次,问有多少种推法(mod 1e97)。 思路: 一个高中学过的组合数…...

深入浅出Java匿名内部类:用法详解与实例演示

匿名内部类(Anonymous Inner Class)在Java中是一种非常有用的特性,它允许你在一个类的定义中直接创建并实例化一个内部类,而不需要为这个内部类指定一个名字。匿名内部类通常用于以下几种情况: 实现接口:当…...

数据库MySQL、Mariadb、PostgreSQL、MangoDB、Memcached和Redis详细介绍

以下是一些常见的后端开发数据库选型: 关系型数据库(RDBMS):关系型数据库是最常见的数据库类型,使用表格和关系模型来存储和管理数据。常见的关系型数据库包括MySQL、PostgreSQL和Oracle等。这些数据库适合处理结构化数…...

AI-调查研究-01-正念冥想有用吗?对健康的影响及科学指南

点一下关注吧!!!非常感谢!!持续更新!!! 🚀 AI篇持续更新中!(长期更新) 目前2025年06月05日更新到: AI炼丹日志-28 - Aud…...

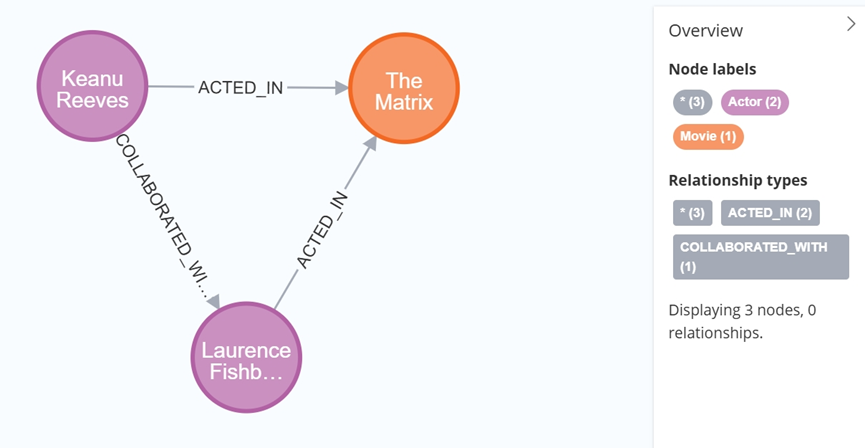

大数据学习栈记——Neo4j的安装与使用

本文介绍图数据库Neofj的安装与使用,操作系统:Ubuntu24.04,Neofj版本:2025.04.0。 Apt安装 Neofj可以进行官网安装:Neo4j Deployment Center - Graph Database & Analytics 我这里安装是添加软件源的方法 最新版…...

SkyWalking 10.2.0 SWCK 配置过程

SkyWalking 10.2.0 & SWCK 配置过程 skywalking oap-server & ui 使用Docker安装在K8S集群以外,K8S集群中的微服务使用initContainer按命名空间将skywalking-java-agent注入到业务容器中。 SWCK有整套的解决方案,全安装在K8S群集中。 具体可参…...

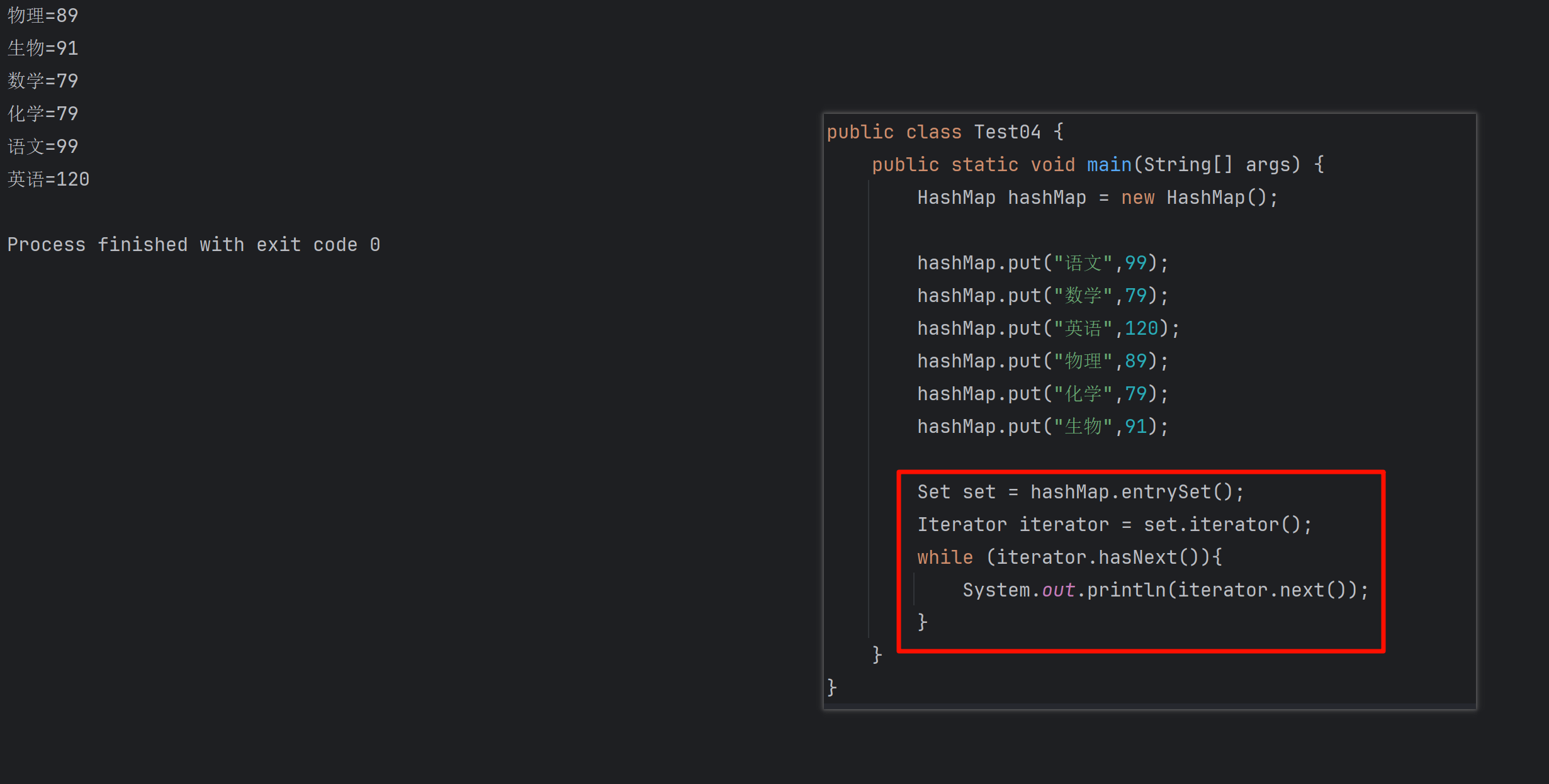

遍历 Map 类型集合的方法汇总

1 方法一 先用方法 keySet() 获取集合中的所有键。再通过 gey(key) 方法用对应键获取值 import java.util.HashMap; import java.util.Set;public class Test {public static void main(String[] args) {HashMap hashMap new HashMap();hashMap.put("语文",99);has…...

STM32标准库-DMA直接存储器存取

文章目录 一、DMA1.1简介1.2存储器映像1.3DMA框图1.4DMA基本结构1.5DMA请求1.6数据宽度与对齐1.7数据转运DMA1.8ADC扫描模式DMA 二、数据转运DMA2.1接线图2.2代码2.3相关API 一、DMA 1.1简介 DMA(Direct Memory Access)直接存储器存取 DMA可以提供外设…...

聊一聊接口测试的意义有哪些?

目录 一、隔离性 & 早期测试 二、保障系统集成质量 三、验证业务逻辑的核心层 四、提升测试效率与覆盖度 五、系统稳定性的守护者 六、驱动团队协作与契约管理 七、性能与扩展性的前置评估 八、持续交付的核心支撑 接口测试的意义可以从四个维度展开,首…...

动态 Web 开发技术入门篇

一、HTTP 协议核心 1.1 HTTP 基础 协议全称 :HyperText Transfer Protocol(超文本传输协议) 默认端口 :HTTP 使用 80 端口,HTTPS 使用 443 端口。 请求方法 : GET :用于获取资源,…...

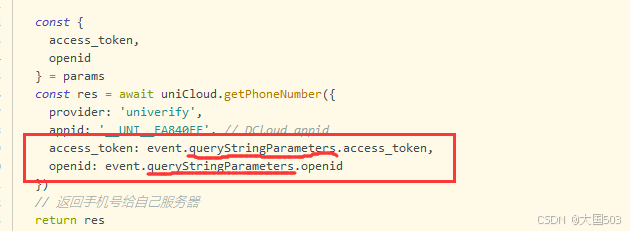

uniapp手机号一键登录保姆级教程(包含前端和后端)

目录 前置条件创建uniapp项目并关联uniClound云空间开启一键登录模块并开通一键登录服务编写云函数并上传部署获取手机号流程(第一种) 前端直接调用云函数获取手机号(第三种)后台调用云函数获取手机号 错误码常见问题 前置条件 手机安装有sim卡手机开启…...

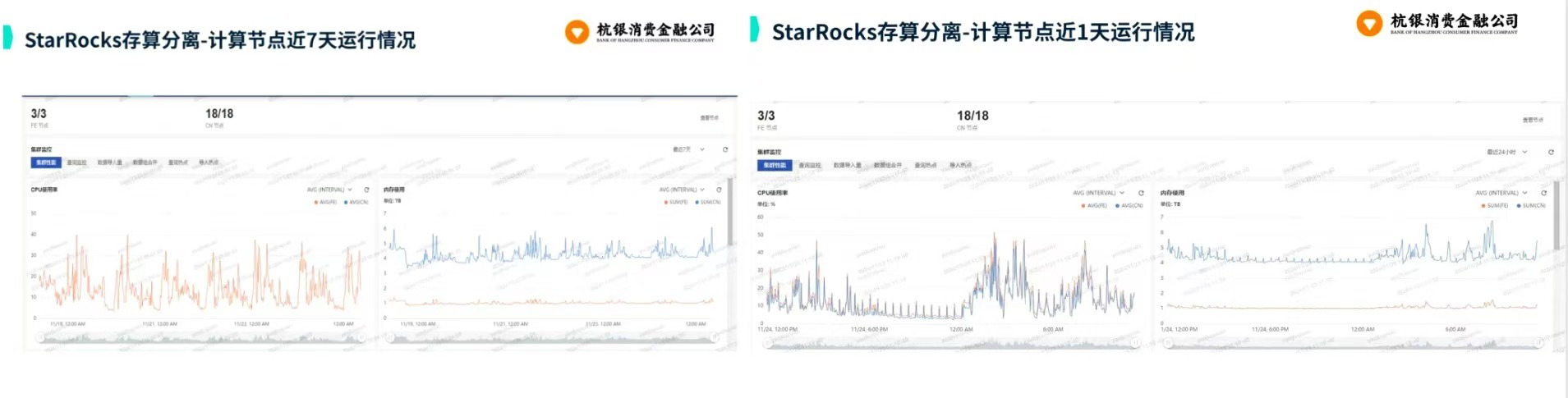

从 GreenPlum 到镜舟数据库:杭银消费金融湖仓一体转型实践

作者:吴岐诗,杭银消费金融大数据应用开发工程师 本文整理自杭银消费金融大数据应用开发工程师在StarRocks Summit Asia 2024的分享 引言:融合数据湖与数仓的创新之路 在数字金融时代,数据已成为金融机构的核心竞争力。杭银消费金…...

在树莓派上添加音频输入设备的几种方法

在树莓派上添加音频输入设备可以通过以下步骤完成,具体方法取决于设备类型(如USB麦克风、3.5mm接口麦克风或HDMI音频输入)。以下是详细指南: 1. 连接音频输入设备 USB麦克风/声卡:直接插入树莓派的USB接口。3.5mm麦克…...