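[YOLOv8 Improvements] Replacing the YOLOv8 Backbone with GhostNetV3: A Step-by-Step Guide

The YOLOv8 source version used here is ultralytics-8.2.54.

GhostNetV3 source download: https://codeload.github.com/huawei-noah/Efficient-AI-Backbones

Copy ghostnetv3.py into the ./ultralytics-8.2.54/ultralytics/nn/modules directory of the source tree.

Following the MobileNetV4 tutorial, I modified the following parts of ghostnetv3.py: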

class GhostNet(nn.Module):
    def __init__(self, block_specs, num_classes=1000):
        super(GhostNet, self).__init__()
        width = 1.6
        dropout = 0.2
        block = GhostBottleneck
        # setting of inverted residual blocks
        self.dropout = dropout

        # building first layer
        output_channel = _make_divisible(16 * width, 4)
        self.conv_stem = nn.Conv2d(3, output_channel, 3, 2, 1, bias=False)
        self.bn1 = nn.BatchNorm2d(output_channel)
        self.act1 = nn.ReLU(inplace=True)
        input_channel = output_channel

        # building inverted residual blocks
        stages = []
        layer_id = 0
        for block_cfg in block_specs:
            layers = []
            for k, exp_size, c, se_ratio, s in block_cfg:
                output_channel = _make_divisible(c * width, 4)
                hidden_channel = _make_divisible(exp_size * width, 4)
                if block == GhostBottleneck:
                    layers.append(block(input_channel, hidden_channel, output_channel, k, s,
                                        se_ratio=se_ratio, layer_id=layer_id))
                input_channel = output_channel
                layer_id += 1
            stages.append(nn.Sequential(*layers))

        output_channel = _make_divisible(exp_size * width, 4)
        stages.append(nn.Sequential(ConvBnAct(input_channel, output_channel, 1)))
        input_channel = output_channel

        self.blocks = nn.Sequential(*stages)
        del self.blocks[9]

        # building last several layers
        output_channel = 1280
        self.global_pool = nn.AdaptiveAvgPool2d((1, 1))
        self.conv_head = nn.Conv2d(input_channel, output_channel, 1, 1, 0, bias=True)
        self.act2 = nn.ReLU(inplace=True)
        self.classifier = nn.Linear(output_channel, num_classes)
        self.layers_out_filters = [16, 24, 40, 112, 160]
        self.channels = [40, 64, 180, 256]

    def forward(self, x):
        x = self.conv_stem(x)
        x = self.bn1(x)
        x = self.act1(x)
        feature_maps = []
        for idx, block in enumerate(self.blocks):
            x = block(x)
            if idx in [2, 4, 6, 8]:
                feature_maps.append(x)
        return feature_maps

    # def forward_ori(self, x):
    #     x = self.conv_stem(x)
    #     x = self.bn1(x)
    #     x = self.act1(x)
    #     x = self.blocks(x)
    #     x = self.global_pool(x)
    #     x = self.conv_head(x)
    #     x = self.act2(x)
    #     x = x.view(x.size(0), -1)
    #     if self.dropout > 0.:
    #         x = F.dropout(x, p=self.dropout, training=self.training)
    #     x = self.classifier(x)
    #     x = x.squeeze()
    #     return x

    def reparameterize(self):
        for _, module in self.named_modules():
            if isinstance(module, GhostModule):
                module.reparameterize()
            if isinstance(module, GhostBottleneck):
                module.reparameterize()


@register_model
def ghostnetv3(**kwargs):
    """Constructs a GhostNet model"""
    block_specs = [
        # k, t, c, SE, s
        # stage1
        [[3, 16, 16, 0, 1]],
        # stage2
        [[3, 48, 24, 0, 2]],
        [[3, 72, 24, 0, 1]],
        # stage3
        [[5, 72, 40, 0.25, 2]],
        [[5, 120, 40, 0.25, 1]],
        # stage4
        [[3, 240, 80, 0, 2]],
        [[3, 200, 80, 0, 1],
         [3, 184, 80, 0, 1],
         [3, 184, 80, 0, 1],
         [3, 480, 112, 0.25, 1],
         [3, 672, 112, 0.25, 1]],
        # stage5
        [[5, 672, 160, 0.25, 2]],
        [[5, 960, 160, 0, 1],
         [5, 960, 160, 0.25, 1],
         [5, 960, 160, 0, 1],
         [5, 960, 160, 0.25, 1]],
    ]
    model = GhostNet(block_specs, **kwargs)  # num_classes=4, width=1.6, dropout=0.2)
    return model

Model registration and import
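Before wiring the backbone into Ultralytics, it can help to check where the hard-coded `self.channels = [40, 64, 180, 256]` in the modified class comes from, since `parse_model` reads that list as the backbone's output channels. The sketch below recomputes it from `block_specs` and `width = 1.6` without needing PyTorch. The `make_divisible` helper is an assumption: it is the usual MobileNet-style rounding function and is presumed to behave like `_make_divisible` in ghostnetv3.py. The `STAGES` table is hand-copied from `block_specs` for illustration.

```python
def make_divisible(value, divisor, min_value=None):
    """Round value to a multiple of divisor (assumed to mirror _make_divisible)."""
    if min_value is None:
        min_value = divisor
    rounded = max(min_value, int(value + divisor / 2) // divisor * divisor)
    if rounded < 0.9 * value:  # never round down by more than 10%
        rounded += divisor
    return rounded


WIDTH = 1.6
# One entry per stage of block_specs:
# (stride of the stage's first block, output channels c of its last block)
STAGES = [
    (1, 16), (2, 24), (1, 24), (2, 40), (1, 40),
    (2, 80), (1, 112), (2, 160), (1, 160),
]
TAPPED_STAGES = (2, 4, 6, 8)  # indices collected in GhostNet.forward


def tapped_channels_and_strides(width=WIDTH):
    """Return the channel counts and total strides of the tapped feature maps."""
    stride = 2  # conv_stem already downsamples by 2
    channels, strides = [], []
    for idx, (stage_stride, c) in enumerate(STAGES):
        stride *= stage_stride
        if idx in TAPPED_STAGES:
            channels.append(make_divisible(c * width, 4))
            strides.append(stride)
    return channels, strides


channels, strides = tapped_channels_and_strides()
print(channels)  # [40, 64, 180, 256]
print(strides)   # [4, 8, 16, 32]
```

If `width` is changed, the hard-coded `self.channels` list has to be updated to match, otherwise the head would be built with the wrong input channel counts.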

ghostnetv3 must be imported in ./ultralytics-8.2.54/ultralytics/nn/modules/__init__.py.
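The import matters because `parse_model` in tasks.py turns the module name written in the model YAML into a Python object with `globals()[m]`. If the name is not in that namespace, the lookup fails with a `KeyError`. The sketch below imitates the lookup with an ordinary dictionary. The placeholder `ghostnetv3` function and the `resolve_module` helper are hypothetical and exist only for illustration.

```python
def ghostnetv3():
    """Placeholder standing in for the real backbone constructor."""
    return "ghostnetv3 backbone"


def resolve_module(name, namespace):
    """Look a module name up by string, as parse_model does with globals()[m]."""
    if name not in namespace:
        raise KeyError(f"'{name}' is not imported, so the YAML entry cannot be resolved")
    return namespace[name]


# Stand-in for the namespace of tasks.py after the import has been added.
namespace = {"ghostnetv3": ghostnetv3}

builder = resolve_module("ghostnetv3", namespace)
print(builder())  # ghostnetv3 backbone
```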

Modifying tasks.py

(If you are not sure what this file does, I recommend watching https://www.bilibili.com/video/BV1QC4y1R74t/?spm_id_from=333.999.top_right_bar_window_default_collection.content.click)
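One change in the listing that follows is in `_predict_once`: a layer flagged with a `backbone` attribute returns a list of feature maps instead of a single tensor. The list is padded at the front with `None` until it has five entries, each entry is stored in `y` only if its index is in the save list, and the last feature map is passed on. A minimal sketch of that bookkeeping, using strings in place of tensors (the function name `store_backbone_outputs` and the sample save list are made up for illustration), could look like this:

```python
def store_backbone_outputs(features, save, y):
    """Pad backbone outputs to five slots, record the saved ones, return the last map."""
    features = list(features)
    for _ in range(5 - len(features)):
        features.insert(0, None)  # left-pad so the deepest map lands in slot 4
    for idx, feature in enumerate(features):
        y.append(feature if idx in save else None)
    return features[-1]


y = []
x = store_backbone_outputs(["s4", "s8", "s16", "s32"], save=[2, 3, 4], y=y)
print(y)  # [None, None, 's8', 's16', 's32']
print(x)  # s32
```

With this layout the single backbone layer fills slots 0 to 4 of `y`. This reading suggests the head section of the YAML would address the backbone outputs by those indices, but that is an inference from the code rather than something the listing states.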

# Ultralytics YOLO 🚀, AGPL-3.0 license

import contextlib
from copy import deepcopy
from pathlib import Path

import torch
import torch.nn as nn

from ultralytics.nn.modules import (
    C2f_UIB,
    mobilenetv4_conv_large,
    ghostnetv3,
    AIFI,
    C1,
    C2,
    C3,
    C3TR,
    ELAN1,
    OBB,
    PSA,
    SPP,
    SPPELAN,
    SPPF,
    AConv,
    ADown,
    Bottleneck,
    BottleneckCSP,
    C2f,
    C2fAttn,
    C2fCIB,
    C3Ghost,
    C3x,
    CBFuse,
    CBLinear,
    Classify,
    Concat,
    Conv,
    Conv2,
    ConvTranspose,
    Detect,
    DWConv,
    DWConvTranspose2d,
    Focus,
    GhostBottleneck,
    GhostConv,
    HGBlock,
    HGStem,
    ImagePoolingAttn,
    Pose,
    RepC3,
    RepConv,
    RepNCSPELAN4,
    RepVGGDW,
    ResNetLayer,
    RTDETRDecoder,
    SCDown,
    Segment,
    WorldDetect,
    v10Detect,
)
from ultralytics.utils import DEFAULT_CFG_DICT, DEFAULT_CFG_KEYS, LOGGER, colorstr, emojis, yaml_load
from ultralytics.utils.checks import check_requirements, check_suffix, check_yaml
from ultralytics.utils.loss import (
    E2EDetectLoss,
    v8ClassificationLoss,
    v8DetectionLoss,
    v8OBBLoss,
    v8PoseLoss,
    v8SegmentationLoss,
)
from ultralytics.utils.plotting import feature_visualization
from ultralytics.utils.torch_utils import (
    fuse_conv_and_bn,
    fuse_deconv_and_bn,
    initialize_weights,
    intersect_dicts,
    make_divisible,
    model_info,
    scale_img,
    time_sync,
)

try:
    import thop

except ImportError:
    thop = None


class BaseModel(nn.Module):
    """The BaseModel class serves as a base class for all the models in the Ultralytics YOLO family."""

    def forward(self, x, *args, **kwargs):
        """
        Forward pass of the model on a single scale. Wrapper for `_forward_once` method.

        Args:
            x (torch.Tensor | dict): The input image tensor or a dict including image tensor and gt labels.

        Returns:
            (torch.Tensor): The output of the network.
        """
        if isinstance(x, dict):  # for cases of training and validating while training.
            return self.loss(x, *args, **kwargs)
        return self.predict(x, *args, **kwargs)

    def predict(self, x, profile=False, visualize=False, augment=False, embed=None):
        """
        Perform a forward pass through the network.

        Args:
            x (torch.Tensor): The input tensor to the model.
            profile (bool): Print the computation time of each layer if True, defaults to False.
            visualize (bool): Save the feature maps of the model if True, defaults to False.
            augment (bool): Augment image during prediction, defaults to False.
            embed (list, optional): A list of feature vectors/embeddings to return.

        Returns:
            (torch.Tensor): The last output of the model.
        """
        if augment:
            return self._predict_augment(x)
        return self._predict_once(x, profile, visualize, embed)

    def _predict_once(self, x, profile=False, visualize=False, embed=None):
        """
        Perform a forward pass through the network.

        Args:
            x (torch.Tensor): The input tensor to the model.
            profile (bool): Print the computation time of each layer if True, defaults to False.
            visualize (bool): Save the feature maps of the model if True, defaults to False.
            embed (list, optional): A list of feature vectors/embeddings to return.

        Returns:
            (torch.Tensor): The last output of the model.
        """
        y, dt, embeddings = [], [], []  # outputs
        for m in self.model:
            if m.f != -1:  # if not from previous layer
                x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]  # from earlier layers
            if profile:
                self._profile_one_layer(m, x, dt)
            if hasattr(m, 'backbone'):
                x = m(x)
                for _ in range(5 - len(x)):
                    x.insert(0, None)
                for i_idx, i in enumerate(x):
                    if i_idx in self.save:
                        y.append(i)
                    else:
                        y.append(None)
                x = x[-1]
            else:
                x = m(x)  # run
                y.append(x if m.i in self.save else None)  # save output
            if visualize:
                feature_visualization(x, m.type, m.i, save_dir=visualize)
            if embed and m.i in embed:
                embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1))  # flatten
                if m.i == max(embed):
                    return torch.unbind(torch.cat(embeddings, 1), dim=0)
        return x

    def _predict_augment(self, x):
        """Perform augmentations on input image x and return augmented inference."""
        LOGGER.warning(
            f"WARNING ⚠️ {self.__class__.__name__} does not support augmented inference yet. "
            f"Reverting to single-scale inference instead."
        )
        return self._predict_once(x)

    def _profile_one_layer(self, m, x, dt):
        """
        Profile the computation time and FLOPs of a single layer of the model on a given input. Appends the results to
        the provided list.

        Args:
            m (nn.Module): The layer to be profiled.
            x (torch.Tensor): The input data to the layer.
            dt (list): A list to store the computation time of the layer.

        Returns:
            None
        """
        c = m == self.model[-1] and isinstance(x, list)  # is final layer list, copy input as inplace fix
        flops = thop.profile(m, inputs=[x.copy() if c else x], verbose=False)[0] / 1e9 * 2 if thop else 0  # GFLOPs
        t = time_sync()
        for _ in range(10):
            m(x.copy() if c else x)
        dt.append((time_sync() - t) * 100)
        if m == self.model[0]:
            LOGGER.info(f"{'time (ms)':>10s} {'GFLOPs':>10s} {'params':>10s} module")
        LOGGER.info(f"{dt[-1]:10.2f} {flops:10.2f} {m.np:10.0f} {m.type}")
        if c:
            LOGGER.info(f"{sum(dt):10.2f} {'-':>10s} {'-':>10s} Total")

    def fuse(self, verbose=True):
        """
        Fuse the `Conv2d()` and `BatchNorm2d()` layers of the model into a single layer, in order to improve the
        computation efficiency.

        Returns:
            (nn.Module): The fused model is returned.
        """
        if not self.is_fused():
            for m in self.model.modules():
                if isinstance(m, (Conv, Conv2, DWConv)) and hasattr(m, "bn"):
                    if isinstance(m, Conv2):
                        m.fuse_convs()
                    m.conv = fuse_conv_and_bn(m.conv, m.bn)  # update conv
                    delattr(m, "bn")  # remove batchnorm
                    m.forward = m.forward_fuse  # update forward
                if isinstance(m, ConvTranspose) and hasattr(m, "bn"):
                    m.conv_transpose = fuse_deconv_and_bn(m.conv_transpose, m.bn)
                    delattr(m, "bn")  # remove batchnorm
                    m.forward = m.forward_fuse  # update forward
                if isinstance(m, RepConv):
                    m.fuse_convs()
                    m.forward = m.forward_fuse  # update forward
                if isinstance(m, RepVGGDW):
                    m.fuse()
                    m.forward = m.forward_fuse
            self.info(verbose=verbose)

        return self

    def is_fused(self, thresh=10):
        """
        Check if the model has less than a certain threshold of BatchNorm layers.

        Args:
            thresh (int, optional): The threshold number of BatchNorm layers. Default is 10.

        Returns:
            (bool): True if the number of BatchNorm layers in the model is less than the threshold, False otherwise.
        """
        bn = tuple(v for k, v in nn.__dict__.items() if "Norm" in k)  # normalization layers, i.e. BatchNorm2d()
        return sum(isinstance(v, bn) for v in self.modules()) < thresh  # True if < 'thresh' BatchNorm layers in model

    def info(self, detailed=False, verbose=True, imgsz=640):
        """
        Prints model information.

        Args:
            detailed (bool): if True, prints out detailed information about the model. Defaults to False
            verbose (bool): if True, prints out the model information. Defaults to False
            imgsz (int): the size of the image that the model will be trained on. Defaults to 640
        """
        return model_info(self, detailed=detailed, verbose=verbose, imgsz=imgsz)

    def _apply(self, fn):
        """
        Applies a function to all the tensors in the model that are not parameters or registered buffers.

        Args:
            fn (function): the function to apply to the model

        Returns:
            (BaseModel): An updated BaseModel object.
        """
        self = super()._apply(fn)
        m = self.model[-1]  # Detect()
        if isinstance(m, Detect):  # includes all Detect subclasses like Segment, Pose, OBB, WorldDetect
            m.stride = fn(m.stride)
            m.anchors = fn(m.anchors)
            m.strides = fn(m.strides)
        return self

    def load(self, weights, verbose=True):
        """
        Load the weights into the model.

        Args:
            weights (dict | torch.nn.Module): The pre-trained weights to be loaded.
            verbose (bool, optional): Whether to log the transfer progress. Defaults to True.
        """
        model = weights["model"] if isinstance(weights, dict) else weights  # torchvision models are not dicts
        csd = model.float().state_dict()  # checkpoint state_dict as FP32
        csd = intersect_dicts(csd, self.state_dict())  # intersect
        self.load_state_dict(csd, strict=False)  # load
        if verbose:
            LOGGER.info(f"Transferred {len(csd)}/{len(self.model.state_dict())} items from pretrained weights")

    def loss(self, batch, preds=None):
        """
        Compute loss.

        Args:
            batch (dict): Batch to compute loss on
            preds (torch.Tensor | List[torch.Tensor]): Predictions.
        """
        if not hasattr(self, "criterion"):
            self.criterion = self.init_criterion()

        preds = self.forward(batch["img"]) if preds is None else preds
        return self.criterion(preds, batch)

    def init_criterion(self):
        """Initialize the loss criterion for the BaseModel."""
        raise NotImplementedError("compute_loss() needs to be implemented by task heads")


class DetectionModel(BaseModel):
    """YOLOv8 detection model."""

    def __init__(self, cfg="yolov8n.yaml", ch=3, nc=None, verbose=True):  # model, input channels, number of classes
        """Initialize the YOLOv8 detection model with the given config and parameters."""
        super().__init__()
        self.yaml = cfg if isinstance(cfg, dict) else yaml_model_load(cfg)  # cfg dict
        if self.yaml["backbone"][0][2] == "Silence":
            LOGGER.warning(
                "WARNING ⚠️ YOLOv9 `Silence` module is deprecated in favor of nn.Identity. "
                "Please delete local *.pt file and re-download the latest model checkpoint."
            )
            self.yaml["backbone"][0][2] = "nn.Identity"

        # Define model
        ch = self.yaml["ch"] = self.yaml.get("ch", ch)  # input channels
        if nc and nc != self.yaml["nc"]:
            LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}")
            self.yaml["nc"] = nc  # override YAML value
        self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose)  # model, savelist
        self.names = {i: f"{i}" for i in range(self.yaml["nc"])}  # default names dict
        self.inplace = self.yaml.get("inplace", True)
        self.end2end = getattr(self.model[-1], "end2end", False)

        # Build strides
        m = self.model[-1]  # Detect()
        if isinstance(m, (Detect, Segment, Pose, OBB)):
            s = 640  # 2x min stride
            m.inplace = self.inplace
            # NOTE: this stride pass is hard-coded to CUDA, so it raises an error on CPU-only machines
            self.model.to(torch.device('cuda'))
            forward = lambda x: self.forward(x)[0] if isinstance(m, (Segment, Pose, OBB)) else self.forward(x)
            m.stride = torch.tensor(
                [s / x.shape[-2] for x in forward(torch.zeros(1, ch, s, s).to(torch.device('cuda')))]
            ).cpu()  # forward
            self.model.cpu()
            self.stride = m.stride
            m.bias_init()  # only run once
        # if isinstance(m, Detect):  # includes all Detect subclasses like Segment, Pose, OBB, WorldDetect
        #     s = 256  # 2x min stride
        #     m.inplace = self.inplace
        #
        #     def _forward(x):
        #         """Performs a forward pass through the model, handling different Detect subclass types accordingly."""
        #         if self.end2end:
        #             return self.forward(x)["one2many"]
        #         return self.forward(x)[0] if isinstance(m, (Segment, Pose, OBB)) else self.forward(x)
        #
        #     m.stride = torch.tensor([s / x.shape[-2] for x in _forward(torch.zeros(1, ch, s, s))])  # forward
        #     self.stride = m.stride
        #     m.bias_init()  # only run once
        # else:
        #     self.stride = torch.Tensor([32])  # default stride for i.e. RTDETR

        # Init weights, biases
        initialize_weights(self)
        if verbose:
            self.info()
            LOGGER.info("")

    def _predict_augment(self, x):
        """Perform augmentations on input image x and return augmented inference and train outputs."""
        img_size = x.shape[-2:]  # height, width
        s = [1, 0.83, 0.67]  # scales
        f = [None, 3, None]  # flips (2-ud, 3-lr)
        y = []  # outputs
        for si, fi in zip(s, f):
            xi = scale_img(x.flip(fi) if fi else x, si, gs=int(self.stride.max()))
            yi = super().predict(xi)[0]  # forward
            yi = self._descale_pred(yi, fi, si, img_size)
            y.append(yi)
        y = self._clip_augmented(y)  # clip augmented tails
        return torch.cat(y, -1), None  # augmented inference, train

    @staticmethod
    def _descale_pred(p, flips, scale, img_size, dim=1):
        """De-scale predictions following augmented inference (inverse operation)."""
        p[:, :4] /= scale  # de-scale
        x, y, wh, cls = p.split((1, 1, 2, p.shape[dim] - 4), dim)
        if flips == 2:
            y = img_size[0] - y  # de-flip ud
        elif flips == 3:
            x = img_size[1] - x  # de-flip lr
        return torch.cat((x, y, wh, cls), dim)

    def _clip_augmented(self, y):
        """Clip YOLO augmented inference tails."""
        nl = self.model[-1].nl  # number of detection layers (P3-P5)
        g = sum(4**x for x in range(nl))  # grid points
        e = 1  # exclude layer count
        i = (y[0].shape[-1] // g) * sum(4**x for x in range(e))  # indices
        y[0] = y[0][..., :-i]  # large
        i = (y[-1].shape[-1] // g) * sum(4 ** (nl - 1 - x) for x in range(e))  # indices
        y[-1] = y[-1][..., i:]  # small
        return y

    def init_criterion(self):
        """Initialize the loss criterion for the DetectionModel."""
        return E2EDetectLoss(self) if self.end2end else v8DetectionLoss(self)


class OBBModel(DetectionModel):
    """YOLOv8 Oriented Bounding Box (OBB) model."""

    def __init__(self, cfg="yolov8n-obb.yaml", ch=3, nc=None, verbose=True):
        """Initialize YOLOv8 OBB model with given config and parameters."""
        super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose)

    def init_criterion(self):
        """Initialize the loss criterion for the model."""
        return v8OBBLoss(self)


class SegmentationModel(DetectionModel):
    """YOLOv8 segmentation model."""

    def __init__(self, cfg="yolov8n-seg.yaml", ch=3, nc=None, verbose=True):
        """Initialize YOLOv8 segmentation model with given config and parameters."""
        super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose)

    def init_criterion(self):
        """Initialize the loss criterion for the SegmentationModel."""
        return v8SegmentationLoss(self)


class PoseModel(DetectionModel):
    """YOLOv8 pose model."""

    def __init__(self, cfg="yolov8n-pose.yaml", ch=3, nc=None, data_kpt_shape=(None, None), verbose=True):
        """Initialize YOLOv8 Pose model."""
        if not isinstance(cfg, dict):
            cfg = yaml_model_load(cfg)  # load model YAML
        if any(data_kpt_shape) and list(data_kpt_shape) != list(cfg["kpt_shape"]):
            LOGGER.info(f"Overriding model.yaml kpt_shape={cfg['kpt_shape']} with kpt_shape={data_kpt_shape}")
            cfg["kpt_shape"] = data_kpt_shape
        super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose)

    def init_criterion(self):
        """Initialize the loss criterion for the PoseModel."""
        return v8PoseLoss(self)


class ClassificationModel(BaseModel):
    """YOLOv8 classification model."""

    def __init__(self, cfg="yolov8n-cls.yaml", ch=3, nc=None, verbose=True):
        """Init ClassificationModel with YAML, channels, number of classes, verbose flag."""
        super().__init__()
        self._from_yaml(cfg, ch, nc, verbose)

    def _from_yaml(self, cfg, ch, nc, verbose):
        """Set YOLOv8 model configurations and define the model architecture."""
        self.yaml = cfg if isinstance(cfg, dict) else yaml_model_load(cfg)  # cfg dict

        # Define model
        ch = self.yaml["ch"] = self.yaml.get("ch", ch)  # input channels
        if nc and nc != self.yaml["nc"]:
            LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}")
            self.yaml["nc"] = nc  # override YAML value
        elif not nc and not self.yaml.get("nc", None):
            raise ValueError("nc not specified. Must specify nc in model.yaml or function arguments.")
        self.model, self.save = parse_model(deepcopy(self.yaml), ch=ch, verbose=verbose)  # model, savelist
        self.stride = torch.Tensor([1])  # no stride constraints
        self.names = {i: f"{i}" for i in range(self.yaml["nc"])}  # default names dict
        self.info()

    @staticmethod
    def reshape_outputs(model, nc):
        """Update a TorchVision classification model to class count 'n' if required."""
        name, m = list((model.model if hasattr(model, "model") else model).named_children())[-1]  # last module
        if isinstance(m, Classify):  # YOLO Classify() head
            if m.linear.out_features != nc:
                m.linear = nn.Linear(m.linear.in_features, nc)
        elif isinstance(m, nn.Linear):  # ResNet, EfficientNet
            if m.out_features != nc:
                setattr(model, name, nn.Linear(m.in_features, nc))
        elif isinstance(m, nn.Sequential):
            types = [type(x) for x in m]
            if nn.Linear in types:
                i = len(types) - 1 - types[::-1].index(nn.Linear)  # last nn.Linear index
                if m[i].out_features != nc:
                    m[i] = nn.Linear(m[i].in_features, nc)
            elif nn.Conv2d in types:
                i = len(types) - 1 - types[::-1].index(nn.Conv2d)  # last nn.Conv2d index
                if m[i].out_channels != nc:
                    m[i] = nn.Conv2d(m[i].in_channels, nc, m[i].kernel_size, m[i].stride, bias=m[i].bias is not None)

    def init_criterion(self):
        """Initialize the loss criterion for the ClassificationModel."""
        return v8ClassificationLoss()


class RTDETRDetectionModel(DetectionModel):
    """
    RTDETR (Real-time DEtection and Tracking using Transformers) Detection Model class.

    This class is responsible for constructing the RTDETR architecture, defining loss functions, and facilitating both
    the training and inference processes. RTDETR is an object detection and tracking model that extends from the
    DetectionModel base class.

    Attributes:
        cfg (str): The configuration file path or preset string. Default is 'rtdetr-l.yaml'.
        ch (int): Number of input channels. Default is 3 (RGB).
        nc (int, optional): Number of classes for object detection. Default is None.
        verbose (bool): Specifies if summary statistics are shown during initialization. Default is True.

    Methods:
        init_criterion: Initializes the criterion used for loss calculation.
        loss: Computes and returns the loss during training.
        predict: Performs a forward pass through the network and returns the output.
    """

    def __init__(self, cfg="rtdetr-l.yaml", ch=3, nc=None, verbose=True):
        """
        Initialize the RTDETRDetectionModel.

        Args:
            cfg (str): Configuration file name or path.
            ch (int): Number of input channels.
            nc (int, optional): Number of classes. Defaults to None.
            verbose (bool, optional): Print additional information during initialization. Defaults to True.
        """
        super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose)

    def init_criterion(self):
        """Initialize the loss criterion for the RTDETRDetectionModel."""
        from ultralytics.models.utils.loss import RTDETRDetectionLoss

        return RTDETRDetectionLoss(nc=self.nc, use_vfl=True)

    def loss(self, batch, preds=None):
        """
        Compute the loss for the given batch of data.

        Args:
            batch (dict): Dictionary containing image and label data.
            preds (torch.Tensor, optional): Precomputed model predictions. Defaults to None.

        Returns:
            (tuple): A tuple containing the total loss and main three losses in a tensor.
        """
        if not hasattr(self, "criterion"):
            self.criterion = self.init_criterion()

        img = batch["img"]
        # NOTE: preprocess gt_bbox and gt_labels to list.
        bs = len(img)
        batch_idx = batch["batch_idx"]
        gt_groups = [(batch_idx == i).sum().item() for i in range(bs)]
        targets = {
            "cls": batch["cls"].to(img.device, dtype=torch.long).view(-1),
            "bboxes": batch["bboxes"].to(device=img.device),
            "batch_idx": batch_idx.to(img.device, dtype=torch.long).view(-1),
            "gt_groups": gt_groups,
        }

        preds = self.predict(img, batch=targets) if preds is None else preds
        dec_bboxes, dec_scores, enc_bboxes, enc_scores, dn_meta = preds if self.training else preds[1]
        if dn_meta is None:
            dn_bboxes, dn_scores = None, None
        else:
            dn_bboxes, dec_bboxes = torch.split(dec_bboxes, dn_meta["dn_num_split"], dim=2)
            dn_scores, dec_scores = torch.split(dec_scores, dn_meta["dn_num_split"], dim=2)

        dec_bboxes = torch.cat([enc_bboxes.unsqueeze(0), dec_bboxes])  # (7, bs, 300, 4)
        dec_scores = torch.cat([enc_scores.unsqueeze(0), dec_scores])

        loss = self.criterion(
            (dec_bboxes, dec_scores), targets, dn_bboxes=dn_bboxes, dn_scores=dn_scores, dn_meta=dn_meta
        )
        # NOTE: There are like 12 losses in RTDETR, backward with all losses but only show the main three losses.
        return sum(loss.values()), torch.as_tensor(
            [loss[k].detach() for k in ["loss_giou", "loss_class", "loss_bbox"]], device=img.device
        )

    def predict(self, x, profile=False, visualize=False, batch=None, augment=False, embed=None):
        """
        Perform a forward pass through the model.

        Args:
            x (torch.Tensor): The input tensor.
            profile (bool, optional): If True, profile the computation time for each layer. Defaults to False.
            visualize (bool, optional): If True, save feature maps for visualization. Defaults to False.
            batch (dict, optional): Ground truth data for evaluation. Defaults to None.
            augment (bool, optional): If True, perform data augmentation during inference. Defaults to False.
            embed (list, optional): A list of feature vectors/embeddings to return.

        Returns:
            (torch.Tensor): Model's output tensor.
        """
        y, dt, embeddings = [], [], []  # outputs
        for m in self.model[:-1]:  # except the head part
            if m.f != -1:  # if not from previous layer
                x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]  # from earlier layers
            if profile:
                self._profile_one_layer(m, x, dt)
            x = m(x)  # run
            y.append(x if m.i in self.save else None)  # save output
            if visualize:
                feature_visualization(x, m.type, m.i, save_dir=visualize)
            if embed and m.i in embed:
                embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1))  # flatten
                if m.i == max(embed):
                    return torch.unbind(torch.cat(embeddings, 1), dim=0)
        head = self.model[-1]
        x = head([y[j] for j in head.f], batch)  # head inference
        return x


class WorldModel(DetectionModel):
    """YOLOv8 World Model."""

    def __init__(self, cfg="yolov8s-world.yaml", ch=3, nc=None, verbose=True):
        """Initialize YOLOv8 world model with given config and parameters."""
        self.txt_feats = torch.randn(1, nc or 80, 512)  # features placeholder
        self.clip_model = None  # CLIP model placeholder
        super().__init__(cfg=cfg, ch=ch, nc=nc, verbose=verbose)

    def set_classes(self, text, batch=80, cache_clip_model=True):
        """Set classes in advance so that model could do offline-inference without clip model."""
        try:
            import clip
        except ImportError:
            check_requirements("git+https://github.com/ultralytics/CLIP.git")
            import clip

        if (
            not getattr(self, "clip_model", None) and cache_clip_model
        ):  # for backwards compatibility of models lacking clip_model attribute
            self.clip_model = clip.load("ViT-B/32")[0]
        model = self.clip_model if cache_clip_model else clip.load("ViT-B/32")[0]
        device = next(model.parameters()).device
        text_token = clip.tokenize(text).to(device)
        txt_feats = [model.encode_text(token).detach() for token in text_token.split(batch)]
        txt_feats = txt_feats[0] if len(txt_feats) == 1 else torch.cat(txt_feats, dim=0)
        txt_feats = txt_feats / txt_feats.norm(p=2, dim=-1, keepdim=True)
        self.txt_feats = txt_feats.reshape(-1, len(text), txt_feats.shape[-1])
        self.model[-1].nc = len(text)

    def predict(self, x, profile=False, visualize=False, txt_feats=None, augment=False, embed=None):
        """
        Perform a forward pass through the model.

        Args:
            x (torch.Tensor): The input tensor.
            profile (bool, optional): If True, profile the computation time for each layer. Defaults to False.
            visualize (bool, optional): If True, save feature maps for visualization. Defaults to False.
            txt_feats (torch.Tensor): The text features, use it if it's given. Defaults to None.
            augment (bool, optional): If True, perform data augmentation during inference. Defaults to False.
            embed (list, optional): A list of feature vectors/embeddings to return.

        Returns:
            (torch.Tensor): Model's output tensor.
        """
        txt_feats = (self.txt_feats if txt_feats is None else txt_feats).to(device=x.device, dtype=x.dtype)
        if len(txt_feats) != len(x):
            txt_feats = txt_feats.repeat(len(x), 1, 1)
        ori_txt_feats = txt_feats.clone()
        y, dt, embeddings = [], [], []  # outputs
        for m in self.model:  # except the head part
            if m.f != -1:  # if not from previous layer
                x = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f]  # from earlier layers
            if profile:
                self._profile_one_layer(m, x, dt)
            if isinstance(m, C2fAttn):
                x = m(x, txt_feats)
            elif isinstance(m, WorldDetect):
                x = m(x, ori_txt_feats)
            elif isinstance(m, ImagePoolingAttn):
                txt_feats = m(x, txt_feats)
            else:
                x = m(x)  # run

            y.append(x if m.i in self.save else None)  # save output
            if visualize:
                feature_visualization(x, m.type, m.i, save_dir=visualize)
            if embed and m.i in embed:
                embeddings.append(nn.functional.adaptive_avg_pool2d(x, (1, 1)).squeeze(-1).squeeze(-1))  # flatten
                if m.i == max(embed):
                    return torch.unbind(torch.cat(embeddings, 1), dim=0)
        return x

    def loss(self, batch, preds=None):
        """
        Compute loss.

        Args:
            batch (dict): Batch to compute loss on.
            preds (torch.Tensor | List[torch.Tensor]): Predictions.
        """
        if not hasattr(self, "criterion"):
            self.criterion = self.init_criterion()

        if preds is None:
            preds = self.forward(batch["img"], txt_feats=batch["txt_feats"])
        return self.criterion(preds, batch)


class Ensemble(nn.ModuleList):
    """Ensemble of models."""

    def __init__(self):
        """Initialize an ensemble of models."""
        super().__init__()

    def forward(self, x, augment=False, profile=False, visualize=False):
        """Function generates the YOLO network's final layer."""
        y = [module(x, augment, profile, visualize)[0] for module in self]
        # y = torch.stack(y).max(0)[0]  # max ensemble
        # y = torch.stack(y).mean(0)  # mean ensemble
        y = torch.cat(y, 2)  # nms ensemble, y shape(B, HW, C)
        return y, None  # inference, train output


# Functions ------------------------------------------------------------------------------------------------------------


@contextlib.contextmanager

def temporary_modules(modules=None, attributes=None):"""Context manager for temporarily adding or modifying modules in Python's module cache (`sys.modules`).This function can be used to change the module paths during runtime. It's useful when refactoring code,where you've moved a module from one location to another, but you still want to support the old importpaths for backwards compatibility.Args:modules (dict, optional): A dictionary mapping old module paths to new module paths.attributes (dict, optional): A dictionary mapping old module attributes to new module attributes.Example:```pythonwith temporary_modules({'old.module': 'new.module'}, {'old.module.attribute': 'new.module.attribute'}):import old.module # this will now import new.modulefrom old.module import attribute # this will now import new.module.attribute```Note:The changes are only in effect inside the context manager and are undone once the context manager exits.Be aware that directly manipulating `sys.modules` can lead to unpredictable results, especially in largerapplications or libraries. Use this function with caution."""if modules is None:modules = {}if attributes is None:attributes = {}import sysfrom importlib import import_moduletry:# Set attributes in sys.modules under their old namefor old, new in attributes.items():old_module, old_attr = old.rsplit(".", 1)new_module, new_attr = new.rsplit(".", 1)setattr(import_module(old_module), old_attr, getattr(import_module(new_module), new_attr))# Set modules in sys.modules under their old namefor old, new in modules.items():sys.modules[old] = import_module(new)yieldfinally:# Remove the temporary module pathsfor old in modules:if old in sys.modules:del sys.modules[old]def torch_safe_load(weight):"""This function attempts to load a PyTorch model with the torch.load() function. If a ModuleNotFoundError is raised,it catches the error, logs a warning message, and attempts to install the missing module via thecheck_requirements() function. 
After installation, the function again attempts to load the model using torch.load().Args:weight (str): The file path of the PyTorch model.Returns:(dict): The loaded PyTorch model."""from ultralytics.utils.downloads import attempt_download_assetcheck_suffix(file=weight, suffix=".pt")file = attempt_download_asset(weight) # search online if missing locallytry:with temporary_modules(modules={"ultralytics.yolo.utils": "ultralytics.utils","ultralytics.yolo.v8": "ultralytics.models.yolo","ultralytics.yolo.data": "ultralytics.data",},attributes={"ultralytics.nn.modules.block.Silence": "torch.nn.Identity", # YOLOv9e"ultralytics.nn.tasks.YOLOv10DetectionModel": "ultralytics.nn.tasks.DetectionModel", # YOLOv10},):ckpt = torch.load(file, map_location="cpu")except ModuleNotFoundError as e: # e.name is missing module nameif e.name == "models":raise TypeError(emojis(f"ERROR ❌️ {weight} appears to be an Ultralytics YOLOv5 model originally trained "f"with https://github.com/ultralytics/yolov5.\nThis model is NOT forwards compatible with "f"YOLOv8 at https://github.com/ultralytics/ultralytics."f"\nRecommend fixes are to train a new model using the latest 'ultralytics' package or to "f"run a command with an official YOLOv8 model, i.e. 'yolo predict model=yolov8n.pt'")) from eLOGGER.warning(f"WARNING ⚠️ {weight} appears to require '{e.name}', which is not in ultralytics requirements."f"\nAutoInstall will run now for '{e.name}' but this feature will be removed in the future."f"\nRecommend fixes are to train a new model using the latest 'ultralytics' package or to "f"run a command with an official YOLOv8 model, i.e. 'yolo predict model=yolov8n.pt'")check_requirements(e.name) # install missing moduleckpt = torch.load(file, map_location="cpu")if not isinstance(ckpt, dict):# File is likely a YOLO instance saved with i.e. torch.save(model, "saved_model.pt")LOGGER.warning(f"WARNING ⚠️ The file '{weight}' appears to be improperly saved or formatted. 
"f"For optimal results, use model.save('filename.pt') to correctly save YOLO models.")ckpt = {"model": ckpt.model}return ckpt, file # loaddef attempt_load_weights(weights, device=None, inplace=True, fuse=False):"""Loads an ensemble of models weights=[a,b,c] or a single model weights=[a] or weights=a."""ensemble = Ensemble()for w in weights if isinstance(weights, list) else [weights]:ckpt, w = torch_safe_load(w) # load ckptargs = {**DEFAULT_CFG_DICT, **ckpt["train_args"]} if "train_args" in ckpt else None # combined argsmodel = (ckpt.get("ema") or ckpt["model"]).to(device).float() # FP32 model# Model compatibility updatesmodel.args = args # attach args to modelmodel.pt_path = w # attach *.pt file path to modelmodel.task = guess_model_task(model)if not hasattr(model, "stride"):model.stride = torch.tensor([32.0])# Appendensemble.append(model.fuse().eval() if fuse and hasattr(model, "fuse") else model.eval()) # model in eval mode# Module updatesfor m in ensemble.modules():if hasattr(m, "inplace"):m.inplace = inplaceelif isinstance(m, nn.Upsample) and not hasattr(m, "recompute_scale_factor"):m.recompute_scale_factor = None # torch 1.11.0 compatibility# Return modelif len(ensemble) == 1:return ensemble[-1]# Return ensembleLOGGER.info(f"Ensemble created with {weights}\n")for k in "names", "nc", "yaml":setattr(ensemble, k, getattr(ensemble[0], k))ensemble.stride = ensemble[int(torch.argmax(torch.tensor([m.stride.max() for m in ensemble])))].strideassert all(ensemble[0].nc == m.nc for m in ensemble), f"Models differ in class counts {[m.nc for m in ensemble]}"return ensembledef attempt_load_one_weight(weight, device=None, inplace=True, fuse=False):"""Loads a single model weights."""ckpt, weight = torch_safe_load(weight) # load ckptargs = {**DEFAULT_CFG_DICT, **(ckpt.get("train_args", {}))} # combine model and default args, preferring model argsmodel = (ckpt.get("ema") or ckpt["model"]).to(device).float() # FP32 model# Model compatibility updatesmodel.args = {k: v for k, v in 
        args.items() if k in DEFAULT_CFG_KEYS}  # attach args to model
    model.pt_path = weight  # attach *.pt file path to model
    model.task = guess_model_task(model)
    if not hasattr(model, "stride"):
        model.stride = torch.tensor([32.0])

    model = model.fuse().eval() if fuse and hasattr(model, "fuse") else model.eval()  # model in eval mode

    # Module updates
    for m in model.modules():
        if hasattr(m, "inplace"):
            m.inplace = inplace
        elif isinstance(m, nn.Upsample) and not hasattr(m, "recompute_scale_factor"):
            m.recompute_scale_factor = None  # torch 1.11.0 compatibility

    # Return model and ckpt
    return model, ckpt


def parse_model(d, ch, verbose=True):  # model_dict, input_channels(3)
    """Parse a YOLO model.yaml dictionary into a PyTorch model."""
    import ast

    # Args
    max_channels = float("inf")
    nc, act, scales = (d.get(x) for x in ("nc", "activation", "scales"))
    depth, width, kpt_shape = (d.get(x, 1.0) for x in ("depth_multiple", "width_multiple", "kpt_shape"))
    if scales:
        scale = d.get("scale")
        if not scale:
            scale = tuple(scales.keys())[0]
            LOGGER.warning(f"WARNING ⚠️ no model scale passed. Assuming scale='{scale}'.")
        depth, width, max_channels = scales[scale]

    if act:
        Conv.default_act = eval(act)  # redefine default activation, i.e. Conv.default_act = nn.SiLU()
        if verbose:
            LOGGER.info(f"{colorstr('activation:')} {act}")  # print

    if verbose:
        LOGGER.info(f"\n{'':>3}{'from':>20}{'n':>3}{'params':>10} {'module':<45}{'arguments':<30}")
    ch = [ch]
    is_backbone = False
    layers, save, c2 = [], [], ch[-1]  # layers, savelist, ch out
    for i, (f, n, m, args) in enumerate(d["backbone"] + d["head"]):  # from, number, module, args
        m = getattr(torch.nn, m[3:]) if "nn." in m else globals()[m]  # get module
        for j, a in enumerate(args):
            if isinstance(a, str):
                with contextlib.suppress(ValueError):
                    args[j] = locals()[a] if a in locals() else ast.literal_eval(a)

        n = n_ = max(round(n * depth), 1) if n > 1 else n  # depth gain
        if m in (
            Classify,
            Conv,
            ConvTranspose,
            GhostConv,
            Bottleneck,
            GhostBottleneck,
            SPP,
            SPPF,
            DWConv,
            Focus,
            BottleneckCSP,
            C1,
            C2,
            C2f,
            RepNCSPELAN4,
            ELAN1,
            ADown,
            AConv,
            SPPELAN,
            C2fAttn,
            C3,
            C3TR,
            C3Ghost,
            nn.ConvTranspose2d,
            DWConvTranspose2d,
            C3x,
            RepC3,
            PSA,
            SCDown,
            C2fCIB,
            C2f_UIB,
        ):
            c1, c2 = ch[f], args[0]
            if c2 != nc:  # if c2 not equal to number of classes (i.e. for Classify() output)
                c2 = make_divisible(min(c2, max_channels) * width, 8)
            if m is C2fAttn:
                args[1] = make_divisible(min(args[1], max_channels // 2) * width, 8)  # embed channels
                args[2] = int(max(round(min(args[2], max_channels // 2 // 32)) * width, 1) if args[2] > 1 else args[2])  # num heads

            args = [c1, c2, *args[1:]]
            if m in (BottleneckCSP, C1, C2, C2f, C2fAttn, C3, C3TR, C3Ghost, C3x, RepC3, C2fCIB, C2f_UIB):
                args.insert(2, n)  # number of repeats
                n = 1
        elif m is AIFI:
            args = [ch[f], *args]
        elif m in (HGStem, HGBlock):
            c1, cm, c2 = ch[f], args[0], args[1]
            args = [c1, cm, c2, *args[2:]]
            if m is HGBlock:
                args.insert(4, n)  # number of repeats
                n = 1
        elif m in (mobilenetv4_conv_large, ghostnetv3):
            m = m(*args)  # build the backbone once; it returns a list of feature maps
            c2 = m.channels  # list of output channels, one entry per feature map
        elif m is ResNetLayer:
            c2 = args[1] if args[3] else args[1] * 4
        elif m is nn.BatchNorm2d:
            args = [ch[f]]
        elif m is Concat:
            c2 = sum(ch[x] for x in f)
        elif m in (Detect, WorldDetect, Segment, Pose, OBB, ImagePoolingAttn, v10Detect):
            args.append([ch[x] for x in f])
            if m is Segment:
                args[2] = make_divisible(min(args[2], max_channels) * width, 8)
        elif m is RTDETRDecoder:  # special case, channels arg must be passed in index 1
            args.insert(1, [ch[x] for x in f])
        elif m is CBLinear:
            c2 = args[0]
            c1 = ch[f]
            args = [c1, c2, *args[1:]]
        elif m is CBFuse:
            c2 = ch[f[-1]]
        else:
            c2 = ch[f]

        if isinstance(c2, list):
            is_backbone = True
            m_ = m
            m_.backbone = True
        else:
            m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args)  # module
        t = str(m)[8:-2].replace('__main__.', '')  # module type
        m.np = sum(x.numel() for x in m_.parameters())  # number params
        m_.i, m_.f, m_.type, m_.np = i + 4 if is_backbone else i, f, t, m.np  # attach index, 'from' index, type, number params
        if verbose:
            LOGGER.info(f'{i:>3}{str(f):>20}{n_:>3}{m.np:10.0f} {t:<45}{str(args):<30}')  # print
        save.extend(x % (i + 4 if is_backbone else i) for x in ([f] if isinstance(f, int) else f) if x != -1)  # append to savelist
        layers.append(m_)
        if i == 0:
            ch = []
        if isinstance(c2, list):
            ch.extend(c2)
            for _ in range(5 - len(ch)):
                ch.insert(0, 0)
        else:
            ch.append(c2)
    return nn.Sequential(*layers), sorted(save)


def yaml_model_load(path):
    """Load a YOLOv8 model from a YAML file."""
    import re

    path = Path(path)
    if path.stem in (f"yolov{d}{x}6" for x in "nsmlx" for d in (5, 8)):
        new_stem = re.sub(r"(\d+)([nslmx])6(.+)?$", r"\1\2-p6\3", path.stem)
        LOGGER.warning(f"WARNING ⚠️ Ultralytics YOLO P6 models now use -p6 suffix. Renaming {path.stem} to {new_stem}.")
        path = path.with_name(new_stem + path.suffix)

    unified_path = re.sub(r"(\d+)([nslmx])(.+)?$", r"\1\3", str(path))  # i.e. yolov8x.yaml -> yolov8.yaml
    yaml_file = check_yaml(unified_path, hard=False) or check_yaml(path)
    d = yaml_load(yaml_file)  # model dict
    d["scale"] = guess_model_scale(path)
    d["yaml_file"] = str(path)
    return d


def guess_model_scale(model_path):
    """
    Takes a path to a YOLO model's YAML file as input and extracts the size character of the model's scale. The function
    uses regular expression matching to find the pattern of the model scale in the YAML file name, which is denoted by
    n, s, m, l, or x. The function returns the size character of the model scale as a string.

    Args:
        model_path (str | Path): The path to the YOLO model's YAML file.

    Returns:
        (str): The size character of the model's scale, which can be n, s, m, l, or x.
    """
    with contextlib.suppress(AttributeError):
        import re

        return re.search(r"yolov\d+([nslmx])", Path(model_path).stem).group(1)  # n, s, m, l, or x
    return ""


def guess_model_task(model):
    """
    Guess the task of a PyTorch model from its architecture or configuration.

    Args:
        model (nn.Module | dict): PyTorch model or model configuration in YAML format.

    Returns:
        (str): Task of the model ('detect', 'segment', 'classify', 'pose').

    Raises:
        SyntaxError: If the task of the model could not be determined.
    """

    def cfg2task(cfg):
        """Guess from YAML dictionary."""
        m = cfg["head"][-1][-2].lower()  # output module name
        if m in {"classify", "classifier", "cls", "fc"}:
            return "classify"
        if "detect" in m:
            return "detect"
        if m == "segment":
            return "segment"
        if m == "pose":
            return "pose"
        if m == "obb":
            return "obb"

    # Guess from model cfg
    if isinstance(model, dict):
        with contextlib.suppress(Exception):
            return cfg2task(model)

    # Guess from PyTorch model
    if isinstance(model, nn.Module):  # PyTorch model
        for x in "model.args", "model.model.args", "model.model.model.args":
            with contextlib.suppress(Exception):
                return eval(x)["task"]
        for x in "model.yaml", "model.model.yaml", "model.model.model.yaml":
            with contextlib.suppress(Exception):
                return cfg2task(eval(x))

        for m in model.modules():
            if isinstance(m, Segment):
                return "segment"
            elif isinstance(m, Classify):
                return "classify"
            elif isinstance(m, Pose):
                return "pose"
            elif isinstance(m, OBB):
                return "obb"
            elif isinstance(m, (Detect, WorldDetect, v10Detect)):
                return "detect"

    # Guess from model filename
    if isinstance(model, (str, Path)):
        model = Path(model)
        if "-seg" in model.stem or "segment" in model.parts:
            return "segment"
        elif "-cls" in model.stem or "classify" in model.parts:
            return "classify"
        elif "-pose" in model.stem or "pose" in model.parts:
            return "pose"
        elif "-obb" in model.stem or "obb" in model.parts:
            return "obb"
        elif "detect" in model.parts:
            return "detect"

    # Unable to determine task from model
    LOGGER.warning(
        "WARNING ⚠️ Unable to automatically guess model task, assuming 'task=detect'. "
        "Explicitly define task for your model, i.e. 'task=detect', 'segment', 'classify','pose' or 'obb'."
    )
    return "detect"  # assume detect

YAML file configuration

# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 4 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, ghostnetv3, []] # 0-P1/2
  - [-1, 1, SPPF, [1024, 5]] # 5

# YOLOv8.0n head
head:
  - [-1, 1, Classify, [nc]] # Classify
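To make the index and channel bookkeeping of the modified parse_model easier to follow, here is a minimal pure-Python sketch that replays it for the three layers in this YAML. It is an illustration only, not the Ultralytics implementation: `trace_channels` and its `(from, name, args)` tuple format are hypothetical, `make_divisible` is a simplified stand-in for the Ultralytics helper, the `n` scale (`width=0.25`, `max_channels=1024`) is assumed, and `nc` is passed as the already-resolved value 4.

```python
import math


def make_divisible(x, divisor):
    """Round x up to the nearest multiple of divisor (simplified stand-in)."""
    return math.ceil(x / divisor) * divisor


def trace_channels(layers, backbone_channels, nc, width, max_channels):
    """Replay the channel list and layer-index logic of the modified parse_model.

    layers: list of (from, module_name, args) tuples (hypothetical simplified form).
    Returns (trace, ch), where trace holds (layer_index, module_name, out_channels).
    """
    ch = [3]  # input channels, as in parse_model(d, ch=3)
    is_backbone = False
    trace = []
    for i, (f, name, args) in enumerate(layers):
        if name == "ghostnetv3":
            c2 = list(backbone_channels)  # the backbone reports a list of channels
        elif name in ("SPPF", "Classify"):
            c2 = args[0]
            if c2 != nc:  # Classify keeps nc unscaled
                c2 = make_divisible(min(c2, max_channels) * width, 8)
        else:
            c2 = ch[f]
        if isinstance(c2, list):
            is_backbone = True
        index = i + 4 if is_backbone else i  # later layers are shifted by 4
        trace.append((index, name, c2))
        if i == 0:
            ch = []
        if isinstance(c2, list):
            ch.extend(c2)
            for _ in range(5 - len(ch)):
                ch.insert(0, 0)  # pad so the backbone outputs sit at indices 1..4
        else:
            ch.append(c2)
    return trace, ch


layers = [(-1, "ghostnetv3", []), (-1, "SPPF", [1024, 5]), (-1, "Classify", [4])]
trace, ch = trace_channels(layers, [40, 64, 180, 256], nc=4, width=0.25, max_channels=1024)
for row in trace:
    print(row)
print(ch)
```

Under these assumptions the backbone is registered at index 4, which is why SPPF lands at index 5 as the YAML comment says, and SPPF reads 256 input channels from the last backbone feature map.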

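With these changes the model ran successfully for me, but I am not sure whether any problems remain. Corrections and criticism are welcome!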
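One thing that may be worth checking is which scale the loader actually applies, since yaml_model_load and guess_model_scale in the listing above derive it from the YAML file name. The sketch below reuses the two regular expressions from that listing in standalone form. The file names are hypothetical (the post does not state the YAML's name), and the explicit `match` check replaces the original `contextlib.suppress(AttributeError)` with the same result.

```python
import re
from pathlib import Path


def guess_model_scale(model_path):
    """Return the scale letter found after 'yolov<digits>', or '' if there is none."""
    match = re.search(r"yolov\d+([nslmx])", Path(model_path).stem)
    return match.group(1) if match else ""


def unified_yaml_name(path):
    """Strip the scale letter, as yaml_model_load does before looking up the YAML file."""
    return re.sub(r"(\d+)([nslmx])(.+)?$", r"\1\3", str(path))


# Hypothetical file names, for illustration only
for name in ("yolov8n-ghostnetv3.yaml", "yolov8s-ghostnetv3.yaml", "yolov8-ghostnetv3.yaml"):
    print(name, repr(guess_model_scale(name)), unified_yaml_name(name))
```

When no scale letter is present, the function returns an empty string, and parse_model then falls back to the first key under `scales` (here `n`) and logs a warning. Including the letter in the name passed to the loader is one way to pick a different width for layers such as SPPF.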

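References:

YOLOv8 Improvement Strategies | Replacing the Backbone with MobileNetV4 https://blog.csdn.net/m0_61698839/article/details/138537529

Neural Network Study Notes 60: A Detailed PyTorch Reimplementation of the GhostNet Model https://blog.csdn.net/weixin_44791964/article/details/120884617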

相关文章:

【YOLOv8改进】 YOLOv8 更换骨干网络之GhostNetV3步骤详解

这里yolov8源码版本是 ultralytics-8.2.54 GhostNetV3 源码下载 https://codeload.github.com/huawei-noah/Efficient-AI-Backbones 将ghostnetv3.py文件复制一份到源码./ultralytics-8.2.54/ultralytics/nn/modules路径下 我根据mobilenetv4的教程,修改了ghostne…...

成绩查询小程序,家长查分超方便~

这都马上2025年了,我不相信还有老师不知道怎么发成绩,如果你不知道,那么这篇文章不要错过,推荐给大家我用了7年的发成绩工具 易查分,新版本更新之后,发成绩只需要一分钟的时间即可生成一个成绩查询系统。 …...

鸿蒙开发(NEXT/API 12)【上传下载文件】远场通信场景

场景介绍 本协议栈框架支持将文件上传到服务器或者从服务器下载文件。 开发步骤 导包。 import { rcp } from kit.RemoteCommunicationKit; import {fileIo} from kit.CoreFileKit;下载文件。 let SESSION_CONFIG: rcp.SessionConfiguration {// 此处请根据业务设置合适的…...

快速理解AUTOSAR CP的软件架构层次以及各层的作用

在 AUTOSAR CP 的架构中,软件分为 应用层 (App)、运行时环境 (RTE) 和 基础软件层 (BSW) 三个主要层级。下面是每一层的主要功能与简单的代码示例来展示它们之间的关系。 1. 概述 应用层 (App):包含应用程序代码,主要实现业务逻辑。应用层通…...

【Unity】Unity中接入Admob聚合广告平台,可通过中介接入 AppLovin,Unity Ads,Meta等渠道的广告

一、下载Google Admob的SDK插件 到Google Admob官网中,切换到Unity平台 进来之后是这样,注意后面有Unity标识,然后点击下载,跳转到github中,下载最新的Admob插件sdk,导入到Unity中 二、阅读官方文档&…...

PythonExcel批量pingIP地址

问题: 作为一个电气工程师(PLC),当设备掉线的时候,需要用ping工具来检查网线物理层是否可靠连接,当项目体量过大时,就不能一个手动输入命令了。 解决方案一: 使用CMD命令 for /L %…...

软媒市场新蓝海:软文媒体自助发布与自助发稿的崛起

在信息时代的浪潮中,软媒市场以其独特的魅力和无限的潜力,成为了企业营销的新宠。随着互联网的飞速发展,软文媒体自助发布平台应运而生,为企业提供了更加高效、便捷的营销方式。而自助发稿功能的加入,更是让软媒市场的蓝海变得更加广阔。 软媒市场的独特价值 软媒市场之所以能…...

【笔记】Day2.5.1查询运费模板列表(未完

(一)代码编写 1.阅读需求,确保理解其中的每一个要素: 获取全部运费模板:这意味着我需要从数据库中查询所有运费模板数据。按创建时间倒序排序:这意味着查询结果需要根据模板的创建时间进行排序࿰…...

阿基米德螺旋线等距取点

曲线公式 极坐标形式: 笛卡尔坐标形式: 弧长公式 对极坐标形式积分可得弧长为: 将上式转换为一元二次方程: 解此一元二次方程可得: 等距取点 弧长L等距递增,代入公式,再利用笛卡尔坐标公式即…...

市场分析报告)

2024年全球增强现实(AR)市场分析报告

一、增强现实统计数据(2024) 市场价值:2024年,全球AR市场价值超过320亿美元,并预计到2027年将突破500亿美元。用户基础:目前约有14亿活跃的AR用户设备,这一数字预计将在2024年增长至17.3亿。消费者认知:大约四分之三的44岁以下成年人对AR有所了解。购物体验:基于AR的购物…...

探索 NetworkX:Python中的网络分析利器

文章目录 **探索 NetworkX:Python中的网络分析利器**一、背景介绍二、NetworkX是什么?三、如何安装NetworkX?四、NetworkX的五个简单函数五、NetworkX的三个应用场景六、常见问题及解决方案七、总结 探索 NetworkX:Python中的网络…...

Python知识点:基于Python技术,如何使用AirSim进行无人机模拟

开篇,先说一个好消息,截止到2025年1月1日前,翻到文末找到我,赠送定制版的开题报告和任务书,先到先得!过期不候! 如何使用Python和AirSim进行无人机模拟 无人机技术的发展为许多行业带来了革命性…...

《中国林业产业》是什么级别的期刊?是正规期刊吗?能评职称吗?

问题解答 问:《中国林业产业》是不是核心期刊? 答:不是,是知网收录的正规学术期刊。 问:《中国林业产业》级别? 答:国家级。主管单位:国家林业和草原局 …...

私域流量下的白酒新传奇:半年破五千万的营销策略揭秘

在当今的数字化浪潮中,某白酒品牌独树一帜,摒弃了实体店和传统电商的常规路径,仅凭其精心构建的私域流量生态,在短短六个月内创造了超过五千万元的销售额奇迹。这一非凡成就背后,蕴含着一套独特的营销策略。 重塑营销&…...

Tomcat 配置:方便运行 Java Web 项目

目录 一、作用 二、安装 三、配置环境 四、启动 五、访问 一、作用 是一个轻量级的web服务器,可使用Tomcat运行Java Web项目。 二、安装 1. 基于JDK(安装Tomcat之前,先安装JDK,并配置环境变量JAVA_HOME) 2. apache-tom…...

Spring Boot知识管理:机器学习与AI集成

5系统详细实现 5.1 管理员模块的实现 5.1.1 用户管理 知识管理系统的管理员可以对用户新增,修改,删除,查询操作。具体界面的展示如图5.1所示。 图5.1 用户管理管理界面 5.1.2 文章分类 管理员登录可以在文章分类新增,修改&#…...

Superset SQL模板使用

使用背景 有时想让表的时间索引生效,而不是在最外层配置报表时,再套多一层时间范围。这时可以使用SQL模板 参考官方文档 https://superset.apache.org/docs/configuration/sql-templating/#:~:textSQL%20Lab%20and%20Explore%20supports%20Jinja 我…...

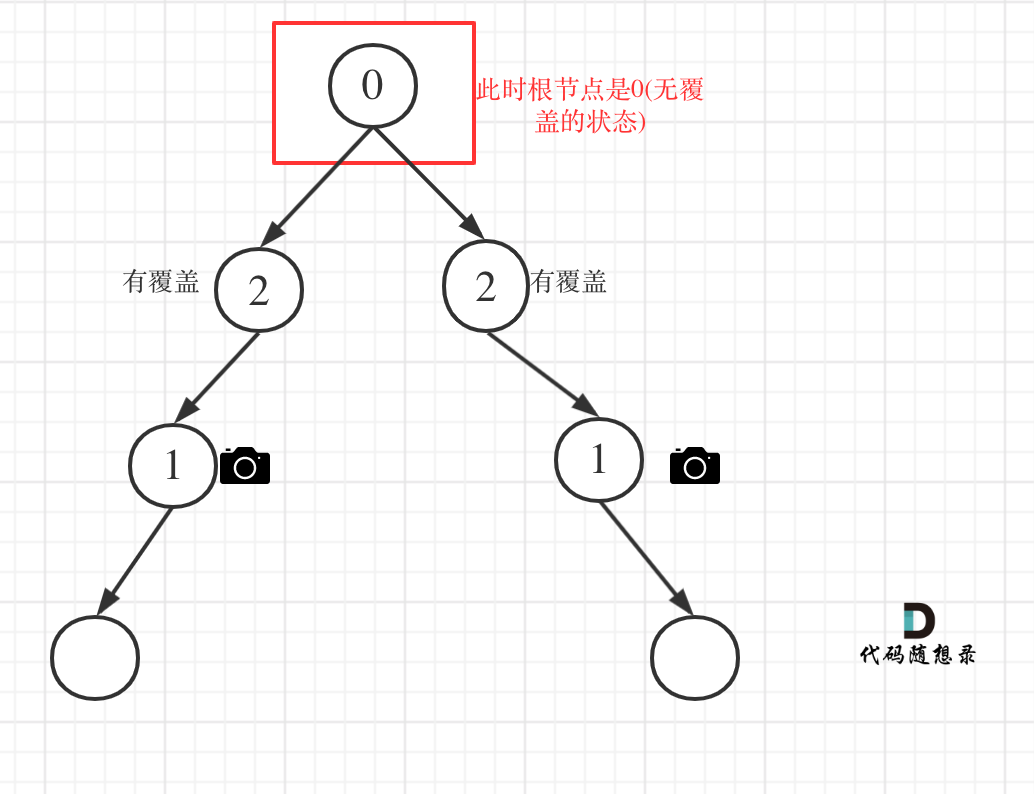

算法工程师重生之第二十七天(合并区间 单调递增的数字 监控二叉树 总结)

参考文献 代码随想录 一、合并区间 以数组 intervals 表示若干个区间的集合,其中单个区间为 intervals[i] [starti, endi] 。请你合并所有重叠的区间,并返回 一个不重叠的区间数组,该数组需恰好覆盖输入中的所有区间 。 示例 1:…...

前端开发基础NodeJS+NPM基本使用(零基础入门)

文章目录 1、Nodejs基础1.1、NodeJs简介1.2、下载安装文件1.3、安装NodeJS1.4、验证安装2、Node.js 创建第一个应用2.1、说明2.2、创建服务脚本2.3、执行运行代码2.4、测试访问3、npm 基本使用3.1、测试安装3.2、配置淘宝npm镜像3.3.1、本地安装3.3.2、全局安装3.4、查看安装信…...

)

深度学习 nd.random.normal()

nd.random.normal() 是 MXNet 中用于生成符合正态分布(高斯分布)随机数的函数。它允许用户指定均值、标准差以及生成的随机数的形状。 函数签名 mx.nd.random.normal(loc0.0, scale1.0, shape(1,)) 参数 loc: 生成的随机数的均值,默认为 …...

)

React Native 导航系统实战(React Navigation)

导航系统实战(React Navigation) React Navigation 是 React Native 应用中最常用的导航库之一,它提供了多种导航模式,如堆栈导航(Stack Navigator)、标签导航(Tab Navigator)和抽屉…...

学校招生小程序源码介绍

基于ThinkPHPFastAdminUniApp开发的学校招生小程序源码,专为学校招生场景量身打造,功能实用且操作便捷。 从技术架构来看,ThinkPHP提供稳定可靠的后台服务,FastAdmin加速开发流程,UniApp则保障小程序在多端有良好的兼…...

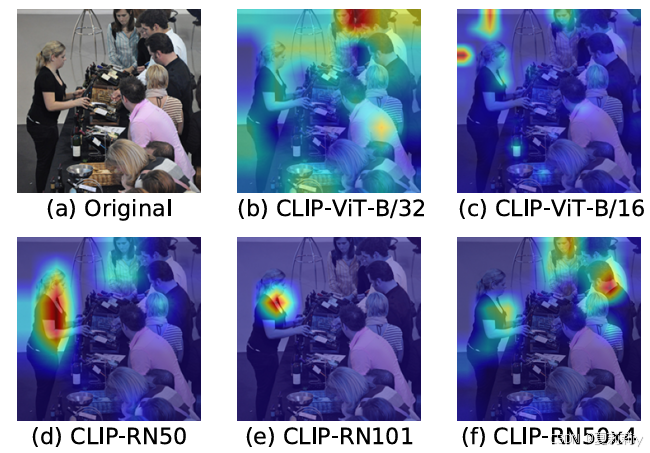

[ICLR 2022]How Much Can CLIP Benefit Vision-and-Language Tasks?

论文网址:pdf 英文是纯手打的!论文原文的summarizing and paraphrasing。可能会出现难以避免的拼写错误和语法错误,若有发现欢迎评论指正!文章偏向于笔记,谨慎食用 目录 1. 心得 2. 论文逐段精读 2.1. Abstract 2…...

镜像里切换为普通用户

如果你登录远程虚拟机默认就是 root 用户,但你不希望用 root 权限运行 ns-3(这是对的,ns3 工具会拒绝 root),你可以按以下方法创建一个 非 root 用户账号 并切换到它运行 ns-3。 一次性解决方案:创建非 roo…...

linux 下常用变更-8

1、删除普通用户 查询用户初始UID和GIDls -l /home/ ###家目录中查看UID cat /etc/group ###此文件查看GID删除用户1.编辑文件 /etc/passwd 找到对应的行,YW343:x:0:0::/home/YW343:/bin/bash 2.将标红的位置修改为用户对应初始UID和GID: YW3…...

)

相机Camera日志分析之三十一:高通Camx HAL十种流程基础分析关键字汇总(后续持续更新中)

【关注我,后续持续新增专题博文,谢谢!!!】 上一篇我们讲了:有对最普通的场景进行各个日志注释讲解,但相机场景太多,日志差异也巨大。后面将展示各种场景下的日志。 通过notepad++打开场景下的日志,通过下列分类关键字搜索,即可清晰的分析不同场景的相机运行流程差异…...

【JavaSE】绘图与事件入门学习笔记

-Java绘图坐标体系 坐标体系-介绍 坐标原点位于左上角,以像素为单位。 在Java坐标系中,第一个是x坐标,表示当前位置为水平方向,距离坐标原点x个像素;第二个是y坐标,表示当前位置为垂直方向,距离坐标原点y个像素。 坐标体系-像素 …...

RNN避坑指南:从数学推导到LSTM/GRU工业级部署实战流程

本文较长,建议点赞收藏,以免遗失。更多AI大模型应用开发学习视频及资料,尽在聚客AI学院。 本文全面剖析RNN核心原理,深入讲解梯度消失/爆炸问题,并通过LSTM/GRU结构实现解决方案,提供时间序列预测和文本生成…...

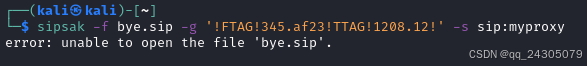

sipsak:SIP瑞士军刀!全参数详细教程!Kali Linux教程!

简介 sipsak 是一个面向会话初始协议 (SIP) 应用程序开发人员和管理员的小型命令行工具。它可以用于对 SIP 应用程序和设备进行一些简单的测试。 sipsak 是一款 SIP 压力和诊断实用程序。它通过 sip-uri 向服务器发送 SIP 请求,并检查收到的响应。它以以下模式之一…...

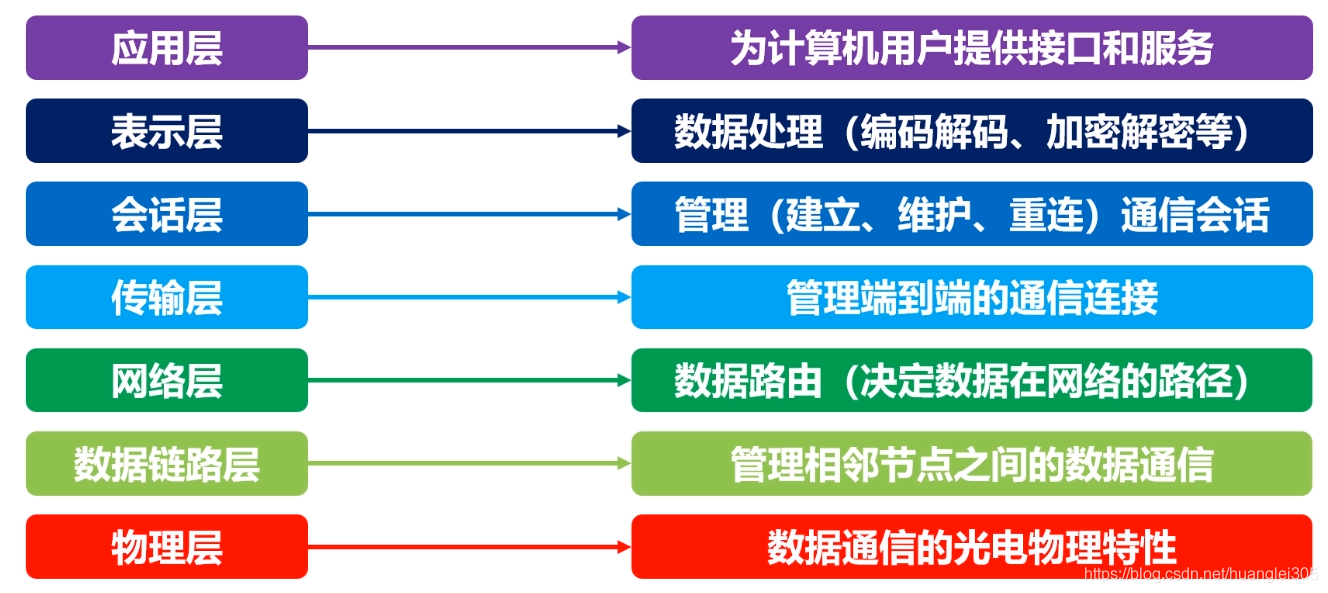

计算机基础知识解析:从应用到架构的全面拆解

目录 前言 1、 计算机的应用领域:无处不在的数字助手 2、 计算机的进化史:从算盘到量子计算 3、计算机的分类:不止 “台式机和笔记本” 4、计算机的组件:硬件与软件的协同 4.1 硬件:五大核心部件 4.2 软件&#…...