GraphRAG: Connecting GraphRAG to Milvus (LLM series)

Preface

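Microsoft's GraphRAG currently feels more like a demo and does not cope well with large data volumes, so I connected Milvus to it. After reading this article, connecting other databases should be straightforward as well.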

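Note: this article only plugs Milvus into the search (query) stage. Integrating it into the indexing stage, or into the whole pipeline, will get its own post when I have time.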

Connecting the database

In graphrag\query\cli.py, go to the run_local_search function and find this call:

```python
store_entity_semantic_embeddings(entities=entities, vectorstore=description_embedding_store)
```

Comment it out and add the following in its place:

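```python
if vector_store_type == VectorStoreType.Milvus:
    # Custom implementation: store the text units instead of entity descriptions
    store_text_semantic_embeddings(
        Texts=text_units,
        vectorstore=description_embedding_store,
        final_documents=final_documents,
    )
else:
    store_entity_semantic_embeddings(
        entities=entities, vectorstore=description_embedding_store
    )
```

Here vector_store_type is the vector database GraphRAG has been configured to use. The available types are defined by the VectorStoreType enum in graphrag\vector_stores\typing.py, and we need to add Milvus = 'milvus' to it by hand: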

```python
class VectorStoreType(str, Enum):
    """The supported vector store types."""

    LanceDB = "lancedb"
    AzureAISearch = "azure_ai_search"
    Milvus = "milvus"
```

In the same file, also modify get_vector_store to add a case VectorStoreType.Milvus branch.

MilvusVectorStore is a custom class that implements the Milvus interface; it is covered later.

```python
# At the top of graphrag\vector_stores\typing.py:
from .milvus import MilvusVectorStore


# Inside the factory class in the same file:
@classmethod
def get_vector_store(
    cls, vector_store_type: VectorStoreType | str, kwargs: dict
) -> LanceDBVectorStore | AzureAISearch | MilvusVectorStore:
    """Get the vector store type from a string."""
    match vector_store_type:
        case VectorStoreType.LanceDB:
            return LanceDBVectorStore(**kwargs)
        case VectorStoreType.AzureAISearch:
            return AzureAISearch(**kwargs)
        case VectorStoreType.Milvus:
            return MilvusVectorStore(**kwargs)
        case _:
            if vector_store_type in cls.vector_store_types:
                return cls.vector_store_types[vector_store_type](**kwargs)
            msg = f"Unknown vector store type: {vector_store_type}"
            raise ValueError(msg)
```

Next, store_text_semantic_embeddings is written to mirror store_entity_semantic_embeddings and goes in graphrag\query\input\loaders\dfs.py:

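```python
import pandas as pd

from graphrag.model import TextUnit
from graphrag.vector_stores import BaseVectorStore, VectorStoreDocument


def store_text_semantic_embeddings(
    Texts: list[TextUnit],
    vectorstore: BaseVectorStore,
    final_documents: pd.DataFrame,
) -> BaseVectorStore:
    """Store text-unit semantic embeddings in a vectorstore."""
    documents = []
    for text_unit in Texts:
        matching_rows = final_documents[
            final_documents["id"] == text_unit.document_ids[0]
        ]
        if not matching_rows.empty:
            # The source document has a title: store the title
            document_title = matching_rows["title"].values[0]
        else:
            # Otherwise fall back to the document id GraphRAG generated
            document_title = text_unit.document_ids[0]
        # Besides the title, keep the ids of the entities extracted from this chunk
        attributes_dict = {
            "document_title": document_title,
            "entity_ids": text_unit.entity_ids,
        }
        if text_unit.attributes:
            attributes_dict.update({**text_unit.attributes})
        documents.append(
            VectorStoreDocument(
                id=text_unit.id,
                text=text_unit.text,
                vector=text_unit.text_embedding,
                attributes=attributes_dict,
            )
        )
    # Load the text units into the Milvus collection
    vectorstore.load_documents(documents=documents)
    return vectorstore
```

The modified code in cli.py looks like this: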

```python
from graphrag.query.input.loaders.dfs import (
    store_entity_semantic_embeddings,
    store_text_semantic_embeddings,
)


def run_local_search(
    data_dir: str | None,
    root_dir: str | None,
    community_level: int,
    response_type: str,
    query: str,
    method_type: str | None = None,  # used below, so it must be a parameter
):
    """Run a local search with the given query."""
    data_dir, root_dir, config = _configure_paths_and_settings(data_dir, root_dir)
    data_path = Path(data_dir)

    final_documents = pd.read_parquet(data_path / "create_final_documents.parquet")
    final_text_units = pd.read_parquet(data_path / "create_final_text_units.parquet")
    final_community_reports = pd.read_parquet(
        data_path / "create_final_community_reports.parquet"
    )
    final_relationships = pd.read_parquet(
        data_path / "create_final_relationships.parquet"
    )
    final_nodes = pd.read_parquet(data_path / "create_final_nodes.parquet")
    final_entities = pd.read_parquet(data_path / "create_final_entities.parquet")
    final_covariates_path = data_path / "create_final_covariates.parquet"
    final_covariates = (
        pd.read_parquet(final_covariates_path)
        if final_covariates_path.exists()
        else None
    )

    # Unchanged; defaults to {}
    vector_store_args = (
        config.embeddings.vector_store if config.embeddings.vector_store else {}
    )
    # Vector store type; defaults to VectorStoreType.LanceDB
    vector_store_type = vector_store_args.get("type", VectorStoreType.LanceDB)
    # Initialize the store (LanceDB by default)
    description_embedding_store = __get_embedding_description_store(
        vector_store_type=vector_store_type,
        config_args=vector_store_args,
    )

    # Load entities
    entities = read_indexer_entities(final_nodes, final_entities, community_level)
    # Covariates; empty by default
    covariates = (
        read_indexer_covariates(final_covariates)
        if final_covariates is not None
        else []
    )
    reports = read_indexer_reports(final_community_reports, final_nodes, community_level)
    text_units = read_indexer_text_units(final_text_units)
    relationships = read_indexer_relationships(final_relationships)
    covariates = {"claims": covariates}

    if vector_store_type == VectorStoreType.Milvus:
        # Custom: store the text units in Milvus
        store_text_semantic_embeddings(
            Texts=text_units,
            vectorstore=description_embedding_store,
            final_documents=final_documents,
        )
    else:
        store_entity_semantic_embeddings(
            entities=entities, vectorstore=description_embedding_store
        )

    search_engine = get_local_search_engine(
        config,
        reports=reports,
        text_units=text_units,
        entities=entities,
        relationships=relationships,
        covariates=covariates,
        description_embedding_store=description_embedding_store,
        response_type=response_type,
    )
    result = search_engine.search(query=query, method_type=method_type)
    reporter.success(f"Local Search Response: {result.response}")
    return result
```

Next, create a milvus.py file in graphrag\vector_stores and implement a MilvusVectorStore class in it:

```python
from datetime import datetime
from typing import Any

from pymilvus import (
    AnnSearchRequest,
    Collection,
    CollectionSchema,
    DataType,
    FieldSchema,
    connections,
    utility,
)
from xinference.client import Client  # optional: only used to embed the query

from graphrag.model.types import TextEmbedder

from .base import (
    BaseVectorStore,
    VectorStoreDocument,
    VectorStoreSearchResult,
)

# Xinference server hosting bge-m3 (runs at import time; adjust the address)
client = Client("http://0.0.0.0:9997")
list_models_run = client.list_models()
model_uid = list_models_run["bge-m3"]["id"]
embedding_client = client.get_model(model_uid)


class MilvusVectorStore(BaseVectorStore):
    def __init__(
        self,
        url: str = "0.0.0.0",
        collection_name: str = "data_store",
        recrate: bool = False,  # drop and recreate the collection
        # False by default: load_documents only fills the text fields,
        # and connect() also defaults to False
        key_word_flag: bool = False,
        **kwargs: Any,  # absorbs other config keys such as "type"
    ):
        self.key_word_flag = key_word_flag
        connections.connect(host=url, port="19530")
        # Does the collection already exist?
        self.has_collection = utility.has_collection(collection_name)
        print(f"has_collection={self.has_collection}")
        if recrate and self.has_collection:
            s = input(f"Are you sure you want to delete {collection_name}? yes or no\n")
            if s == "yes":
                self.delete_collection(collection_name)
                self.has_collection = False
                print(f"Deleted {collection_name}")
        if not recrate and self.has_collection:
            self.collection = Collection(name=collection_name)
        else:
            self.collection = Collection(name=collection_name, schema=self.get_schema())

    def get_schema(self):
        # Auto-generated primary key
        id_field = FieldSchema(name="id", dtype=DataType.INT64, is_primary=True, auto_id=True)
        graph_id = FieldSchema(name="graph_id", dtype=DataType.VARCHAR, max_length=128)
        text = FieldSchema(name="text", dtype=DataType.VARCHAR, max_length=58192)
        file_name = FieldSchema(name="file_name", dtype=DataType.VARCHAR, max_length=512)
        # dim must equal the embedding size (1024 for bge-m3)
        text_embedding = FieldSchema(name="text_embedding", dtype=DataType.FLOAT_VECTOR, dim=1024)
        fields = [id_field, graph_id, text, file_name, text_embedding]
        if self.key_word_flag:
            fields += [
                FieldSchema(name="key_word", dtype=DataType.VARCHAR, max_length=8192),
                FieldSchema(name="key_word_embedding", dtype=DataType.FLOAT_VECTOR, dim=1024),
            ]
        return CollectionSchema(fields=fields, description="Text and text-embedding store")

    def change_collection(self, collection_name):
        self.collection = Collection(name=collection_name, schema=self.get_schema())

    def delete_collection(self, collection_name):
        utility.drop_collection(collection_name)

    def release_collection(self):
        self.collection.release()

    def list_collections(self):
        return utility.list_collections()

    def create_index(self, metric_type="L2", index_name="L2"):
        index_params = {"index_type": "AUTOINDEX", "metric_type": metric_type, "params": {}}
        self.collection.create_index(
            field_name="text_embedding", index_params=index_params, index_name="text_embedding"
        )
        if self.key_word_flag:
            self.collection.create_index(
                field_name="key_word_embedding",
                index_params=index_params,
                index_name="key_word_embedding",
            )
        self.collection.load()  # load into memory so it can be searched

    def drop_index(self):
        self.collection.release()
        self.collection.drop_index()

    def insert_data(self, data_dict: dict):
        start = datetime.now()
        self.collection.insert(data_dict)
        print(f"Insert took {datetime.now() - start}")

    def search(self, query_embedding, top_k=10, metric_type="L2"):
        search_params = {"metric_type": metric_type, "params": {"level": 2}}
        return self.collection.search(
            [query_embedding],
            anns_field="text_embedding",
            param=search_params,
            limit=top_k,
            output_fields=["graph_id", "text", "file_name", "text_embedding"],
        )[0]  # hits for the single query vector

    def hybrid_search(self, query_dense_embedding, query_sparse_embedding, rerank, top_k=10, metric_type="L2"):
        # Needs key_word_flag=True; key_word_embedding is also a dense vector
        search_params = {"metric_type": metric_type, "params": {}}
        dense_req = AnnSearchRequest([query_dense_embedding], "text_embedding", search_params, limit=top_k)
        sparse_req = AnnSearchRequest([query_sparse_embedding], "key_word_embedding", search_params, limit=top_k)
        return self.collection.hybrid_search(
            [dense_req, sparse_req], rerank=rerank, limit=top_k, output_fields=["text", "file_name"]
        )[0]

    def reranker_init(self, model_name_or_path, device="cpu"):
        # Requires: pip install "pymilvus[model]"
        from pymilvus.model.reranker import BGERerankFunction

        self.reranker = BGERerankFunction(
            model_name=model_name_or_path,  # defaults to BAAI/bge-reranker-v2-m3
            device=device,  # e.g. "cpu" or "cuda:0"
        )

    def rereank(self, query, serach_result, top_k, rerank_client=None):
        documents_list = [hit.entity.get("text") for hit in serach_result]
        # An external reranker not integrated with Milvus
        if rerank_client:
            response = rerank_client.rerank(query=query, documents=documents_list, top_n=top_k)
            return [serach_result[item["index"]] for item in response["results"]]
        return self.reranker(query=query, documents=documents_list, top_k=top_k)

    def filter_by_id(self, include_ids: list[str] | list[int]) -> Any:
        """Build a query filter to filter documents by id."""
        if len(include_ids) == 0:
            self.query_filter = None
        elif isinstance(include_ids[0], str):
            id_filter = ", ".join([f"'{i}'" for i in include_ids])
            self.query_filter = f"id in ({id_filter})"
        else:
            self.query_filter = f"id in ({', '.join([str(i) for i in include_ids])})"
        return self.query_filter

    def connect(self, url: str = "0.0.0.0", collection_name: str = "data_store", recrate: bool = False, key_word_flag: bool = False, **kwargs: Any) -> Any:
        self.key_word_flag = key_word_flag
        connections.connect(host=url, port="19530")
        has_collection = utility.has_collection(collection_name)
        if recrate and has_collection:
            s = input(f"Are you sure you want to delete {collection_name}? yes or no\n")
            if s == "yes":
                self.delete_collection(collection_name)
                print(f"Deleted {collection_name}")
        if not recrate and has_collection:
            self.collection = Collection(name=collection_name)
        else:
            self.collection = Collection(name=collection_name, schema=self.get_schema())
        self.create_index()

    def load_documents(self, documents: list[VectorStoreDocument], overwrite: bool = True) -> None:
        """Load documents into vector storage."""
        documents = [document for document in documents if document.vector is not None]
        # If the collection already existed, ask first to avoid duplicate inserts
        if self.has_collection and input("Do you want to insert data? yes or no\n") != "yes":
            return
        batch = 100  # Milvus can't take too much data in one insert, so insert in batches
        data_list = []
        start = datetime.now()
        print("Inserting data...")
        for document in documents:
            title = document.attributes.get("document_title")
            # The title may be a string or a list of document ids
            file_name = title[0] if isinstance(title, list) else title
            data_list.append({
                "graph_id": document.id,
                "text": document.text,
                "text_embedding": document.vector,
                "file_name": file_name,
            })
            if len(data_list) >= batch:
                self.collection.insert(data_list)
                data_list = []
        if data_list:  # insert whatever is left
            self.collection.insert(data_list)
        print(f"Insert took {datetime.now() - start}")

    def similarity_search_by_text(self, text: str, text_embedder: TextEmbedder, k: int = 10, **kwargs: Any) -> list[VectorStoreSearchResult]:
        """Perform a similarity search using a given input text."""
        # Embeds with the Xinference bge-m3 model instead of text_embedder;
        # it must be the model that produced the stored vectors
        query_embedding = embedding_client.create_embedding([text])["data"][0]["embedding"]
        if query_embedding:
            return self.similarity_search_by_vector(query_embedding, k)
        return []

    def similarity_search_by_vector(self, query_embedding: list[float], k: int = 10, **kwargs: Any) -> list[VectorStoreSearchResult]:
        docs = self.search(query_embedding=query_embedding, top_k=k)
        result = []
        for doc in docs:
            attributes = {"document_title": doc.entity.get("file_name"), "entity": []}
            result.append(
                VectorStoreSearchResult(
                    document=VectorStoreDocument(
                        id=doc.entity.get("graph_id"),  # GraphRAG text-unit id
                        text=doc.entity.get("text"),
                        vector=doc.entity.get("text_embedding"),
                        attributes=attributes,
                    ),
                    score=abs(float(doc.score)),  # raw L2 distance: smaller is closer
                )
            )
        return result

    def similarity_search_by_query(self, query: str, text_embedder: TextEmbedder, k: int = 10, **kwargs: Any) -> list[VectorStoreSearchResult]:
        raise NotImplementedError  # placeholder, not implemented yet

    def similarity_search_by_hybrid(self, query: str, text_embedder: TextEmbedder, k: int = 10, oversample_scaler: int = 10, **kwargs: Any) -> list[VectorStoreSearchResult]:
        raise NotImplementedError  # placeholder, not implemented yet
```
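The batching inside load_documents is just "collect rows, flush every `batch` rows, flush the remainder at the end". The sketch below isolates that step without Milvus so it can be checked on its own. Doc is a hypothetical stand-in for VectorStoreDocument, and iter_row_batches is an illustrative helper name; in the real class each yielded batch would be passed to self.collection.insert(...).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Iterator


@dataclass
class Doc:
    """Hypothetical stand-in for graphrag's VectorStoreDocument."""

    id: str
    text: str
    vector: list[float] | None
    attributes: dict[str, Any] = field(default_factory=dict)


def iter_row_batches(documents: list[Doc], batch: int = 100) -> Iterator[list[dict[str, Any]]]:
    """Yield lists of at most `batch` Milvus row dicts, skipping docs without a vector."""
    rows: list[dict[str, Any]] = []
    for doc in documents:
        if doc.vector is None:
            continue
        title = doc.attributes.get("document_title")
        # The title may be a string or a list of document ids; store one string
        file_name = title[0] if isinstance(title, list) else title
        rows.append({
            "graph_id": doc.id,
            "text": doc.text,
            "text_embedding": doc.vector,
            "file_name": file_name,
        })
        if len(rows) >= batch:
            yield rows
            rows = []
    if rows:  # final partial batch
        yield rows


docs = [Doc(f"t{i}", f"chunk {i}", [0.0] * 4, {"document_title": "report.txt"}) for i in range(250)]
print([len(b) for b in iter_row_batches(docs)])  # [100, 100, 50]
```

Keeping the batch size fixed (rather than deriving it from the total number of documents) bounds every insert call at the same size regardless of how large the corpus is.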

Modifying the search code

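Open graphrag\query\structured_search\local_search\mixed_context.py.

Alternatively, start from the get_local_search_engine call in run_local_search (graphrag\query\cli.py) and jump to its definition.

The return statement of get_local_search_engine constructs a LocalSearchMixedContext; jumping to that class takes you to its implementation.

In its build_context method, locate the call to map_query_to_entities and jump to its implementation in graphrag\query\context_builder\entity_extraction.py. Find: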

```python
matched = get_entity_by_key(
    entities=all_entities,  # all entities
    key=embedding_vectorstore_key,
    value=result.document.id,
)
```

and change it to:

```python
entity_ids = result.document.attributes.get("entity_ids")
if entity_ids:
    for entity_id in entity_ids:
        matched = get_entity_by_key(
            entities=all_entities,  # all entities
            key=embedding_vectorstore_key,
            value=entity_id,
        )
        if matched:
            matched_entities.append(matched)
```

If you would rather keep GraphRAG's original search logic for this part as well, you can do what I did:

```python
for result in search_results:
    if method_type == "text_match":
        entity_ids = result.document.attributes.get("entity_ids")
        if entity_ids:
            for entity_id in entity_ids:
                matched = get_entity_by_key(
                    entities=all_entities,  # all entities
                    key=embedding_vectorstore_key,
                    value=entity_id,
                )
                if matched:
                    matched_entities.append(matched)
    else:
        matched = get_entity_by_key(
            entities=all_entities,  # all entities
            key=embedding_vectorstore_key,
            value=result.document.id,
        )
        if matched:
            matched_entities.append(matched)
```

That is, switch between the two with an extra parameter (method_type here), or based on vector_store_type. Finally, change the return statement of map_query_to_entities so that it also returns search_results:

```python
# Additional imports needed in entity_extraction.py
from datetime import datetime

from graphrag.vector_stores import VectorStoreSearchResult


def map_query_to_entities(
    query: str,
    text_embedding_vectorstore: BaseVectorStore,
    text_embedder: BaseTextEmbedding,
    all_entities: list[Entity],
    embedding_vectorstore_key: str = EntityVectorStoreKey.ID,
    include_entity_names: list[str] | None = None,
    exclude_entity_names: list[str] | None = None,
    k: int = 10,
    oversample_scaler: int = 2,
    method_type: str | None = None,
) -> tuple[list[Entity], list[VectorStoreSearchResult]]:
    """Extract entities that match a given query using semantic similarity of text embeddings of query and entity descriptions."""
    if include_entity_names is None:
        include_entity_names = []
    if exclude_entity_names is None:
        exclude_entity_names = []
    matched_entities = []
    search_results = []  # stays empty when query == ""
    if query != "":
        # get entities with highest semantic similarity to query
        # oversample to account for excluded entities
        # (the default implementation is in lancedb.py under graphrag\vector_stores)
        print("Embedding the query...")
        start_time = datetime.now()
        # Returns the most similar vectors
        search_results = text_embedding_vectorstore.similarity_search_by_text(
            text=query,
            text_embedder=lambda t: text_embedder.embed(t),
            k=k * oversample_scaler,
        )
        print(f"Took {datetime.now() - start_time}")
        for result in search_results:
            if method_type == "text_match":
                entity_ids = result.document.attributes.get("entity_ids")
                if entity_ids:
                    for entity_id in entity_ids:
                        matched = get_entity_by_key(
                            entities=all_entities,  # all entities
                            key=embedding_vectorstore_key,
                            value=entity_id,
                        )
                        if matched:
                            matched_entities.append(matched)
            else:
                matched = get_entity_by_key(
                    entities=all_entities,  # all entities
                    key=embedding_vectorstore_key,
                    value=result.document.id,
                )
                if matched:
                    matched_entities.append(matched)
    else:
        all_entities.sort(key=lambda x: x.rank if x.rank else 0, reverse=True)
        matched_entities = all_entities[:k]

    # filter out excluded entities (exclude_entity_names defaults to [])
    if exclude_entity_names:
        matched_entities = [
            entity
            for entity in matched_entities
            if entity.title not in exclude_entity_names
        ]

    # add entities in the include_entity list (include_entity_names defaults to [])
    included_entities = []
    for entity_name in include_entity_names:
        included_entities.extend(get_entity_by_name(all_entities, entity_name))
    # The original returned only the entity list
    return included_entities + matched_entities, search_results
```

Don't forget to update the call in the build_context method of graphrag\query\structured_search\local_search\mixed_context.py: map_query_to_entities now returns two values instead of one.

```python
selected_entities, search_results = map_query_to_entities(
    query=query,
    text_embedding_vectorstore=self.entity_text_embeddings,
    text_embedder=self.text_embedder,
    all_entities=list(self.entities.values()),
    embedding_vectorstore_key=self.embedding_vectorstore_key,
    include_entity_names=include_entity_names,
    exclude_entity_names=exclude_entity_names,
    k=top_k_mapped_entities,
    oversample_scaler=20,  # originally 2
    method_type=method_type,
)
```

At the end of build_context, find the call to self._build_text_unit_context and pass search_results in as a new argument:

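```python
text_unit_context, text_unit_context_data, document_id_context = self._build_text_unit_context(
    selected_entities=selected_entities,
    max_tokens=text_unit_tokens,
    return_candidate_context=return_candidate_context,
    search_results=search_results,
    method_type=method_type,
)
```

This call unpacks three values, whereas the stock _build_text_unit_context returns only the context text and its data, so it assumes the function was also extended to return document_id_context. Jump to the function's implementation, still in mixed_context.py, and modify or replace the following code: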

```python
for index, entity in enumerate(selected_entities):
    if entity.text_unit_ids:
        for text_id in entity.text_unit_ids:
            if (
                text_id not in [unit.id for unit in selected_text_units]
                and text_id in self.text_units
            ):
                selected_unit = self.text_units[text_id]
                num_relationships = count_relationships(
                    selected_unit, entity, self.relationships
                )
                if selected_unit.attributes is None:
                    selected_unit.attributes = {}
                selected_unit.attributes["entity_order"] = index
                selected_unit.attributes["num_relationships"] = num_relationships
                selected_text_units.append(selected_unit)

# sort selected text units by ascending order of entity order and descending order of number of relationships
selected_text_units.sort(
    key=lambda x: (
        x.attributes["entity_order"],  # type: ignore
        -x.attributes["num_relationships"],  # type: ignore
    )
)

for unit in selected_text_units:
    del unit.attributes["entity_order"]  # type: ignore
    del unit.attributes["num_relationships"]  # type: ignore
```

I'd suggest keeping this code as a fallback branch. In any case, here is what I changed it to:

```python
if method_type == "text_match":
    # Use the text units Milvus returned directly, in search order
    for result in search_results:
        text_id = result.document.id
        if (
            text_id not in [unit.id for unit in selected_text_units]
            and text_id in self.text_units
        ):
            selected_unit = self.text_units[text_id]
            if selected_unit.attributes is None:
                selected_unit.attributes = {
                    "document_title": result.document.attributes["document_title"]
                }
            selected_text_units.append(selected_unit)
else:
    # Original GraphRAG logic: collect the text units of the selected entities
    for index, entity in enumerate(selected_entities):
        if entity.text_unit_ids:
            for text_id in entity.text_unit_ids:
                if (
                    text_id not in [unit.id for unit in selected_text_units]
                    and text_id in self.text_units
                ):
                    selected_unit = self.text_units[text_id]
                    num_relationships = count_relationships(
                        selected_unit, entity, self.relationships
                    )
                    if selected_unit.attributes is None:
                        selected_unit.attributes = {}
                    selected_unit.attributes["entity_order"] = index
                    selected_unit.attributes["num_relationships"] = num_relationships
                    selected_text_units.append(selected_unit)

    # sort selected text units by ascending order of entity order and descending order of number of relationships
    selected_text_units.sort(
        key=lambda x: (
            x.attributes["entity_order"],  # type: ignore
            -x.attributes["num_relationships"],  # type: ignore
        )
    )

    for unit in selected_text_units:
        del unit.attributes["entity_order"]  # type: ignore
        del unit.attributes["num_relationships"]  # type: ignore
```

With that, the vector database has been swapped out. Loading the graph data into a database as well takes considerably more code, which is already fairly long as it is, so I'll tackle that when I have time.
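The two branches of the text-unit selection above order their results differently: the text_match branch keeps Milvus's ranking and only drops duplicates and unknown ids, while the original branch sorts by entity order ascending and then by relationship count descending. The sketch below isolates both orderings in plain Python. Unit, select_by_search_order and sort_by_entity_rank are hypothetical names standing in for TextUnit and the inline loops.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Unit:
    """Hypothetical stand-in for a GraphRAG TextUnit."""

    id: str
    entity_order: int = 0
    num_relationships: int = 0


def select_by_search_order(result_ids: list[str], units: dict[str, Unit]) -> list[Unit]:
    """text_match branch: keep the search ranking, skip duplicates and unknown ids."""
    selected: list[Unit] = []
    seen: set[str] = set()
    for text_id in result_ids:
        if text_id in units and text_id not in seen:
            seen.add(text_id)
            selected.append(units[text_id])
    return selected


def sort_by_entity_rank(candidates: list[Unit]) -> list[Unit]:
    """Default branch: ascending entity order, then descending relationship count."""
    return sorted(candidates, key=lambda u: (u.entity_order, -u.num_relationships))


units = {"a": Unit("a", 1, 3), "b": Unit("b", 0, 1), "c": Unit("c", 0, 5)}
print([u.id for u in select_by_search_order(["c", "x", "a", "c"], units)])  # ['c', 'a']
print([u.id for u in sort_by_entity_rank(list(units.values()))])  # ['c', 'b', 'a']
```

Using a set for the duplicate check keeps it O(1) per id, whereas the inline version rebuilds the list of selected ids on every iteration; for a few dozen search hits either is fine.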

欢迎大家点赞或收藏~

大家的点赞或收藏可以鼓励作者加快更新~

相关文章:

GraphRAG:LLM之Graphrag接入milvus

前言 微软目前的graphrag更像个demo,数据量大的时候不是很友好的啊,所以将milvus接入了graphrag,看完这篇文章,其他数据库接入应该也没问题 注:这篇文章只是在search的时候接入进来,index过程或者说整个流…...

adb使用及常用命令

目录 介绍 组成 启用adb调试 常用命令 连接设备 版本信息 安装应用 卸载应用 文件操作 日志查看 屏幕截图和录制 设备重启 端口转发 调试相关 设置属性 设备信息查询 获取帮助 模拟输入 介绍 adb全称为 Android Debug Bridge(Android调试桥),是 A…...

omnipeek分析beacon帧

omnipeek查询设备发送beacon时同一信道两个beacon发送间隔 目录 用例要求分析抓包数据 1.用例要求 Beacon帧发送频率符合规范要求。参数-【同一个信道两个beacon发送间隔不能超过100ms】 2.分析抓包数据 打开becon.pkt文件(用omnipeek工具提前抓取包)…...

Java数组问题

题目2: 定义一个数组,存储1,2,3,4,5,6,7,8,9,10 遍历数组得到的每一个元素,统计数组里面一共多少个能被3整除的数字 package com.s…...

salesforce 可以为同一个简档的同一个 recordtype 的对象设置多种页面布局吗

在 Salesforce 中,对于同一个 Record Type(记录类型),默认情况下,每个 Profile(用户简档) 只能分配一个 Page Layout(页面布局)。也就是说,页面布局的分配规则…...

使用vue项目中,使用webpack模板和直接用vue.config来配置相关插件 区别是什么,具体有哪些提现呢

在 Vue 项目中,使用 Webpack 模板 和 vue.config.js 来配置相关插件的主要区别在于配置的复杂度、灵活性和易用性。以下是两者的详细对比: 1. Webpack 模板 Webpack 模板是 Vue CLI 早期版本(如 Vue CLI 2.x)中提供的项目初始化模…...

五、包图

包图 、基本概念 概念: 用来描述模型中的包和其所含元素的组织方式的图,是维护和控制系统总体结构的重要内容。 包可以把所建立的各种模型组织起来,形成各种功能或用途的模块,并可以控制包中元素的可见性以及描述包之间的依赖…...

关于重构一点简单想法

关于重构一点简单想法 当前工作的组内,由于业务开启的时间正好处于集团php-》go技术栈全面迁移的时间点,组内语言技术栈存在:php、go两套。 因此需求开发过程中通常要考虑两套技术栈的逻辑,一些基础的逻辑也没有办法复用。 在这…...

kafka使用以及基于zookeeper集群搭建集群环境

一、环境介绍 zookeeper下载地址:https://zookeeper.apache.org/releases.html kafka下载地址:https://kafka.apache.org/downloads 192.168.142.129 apache-zookeeper-3.8.4-bin.tar.gz kafka_2.13-3.6.0.tgz 192.168.142.130 apache-zookee…...

GAN对抗生成网络(二)——算法及Python实现

1 算法步骤 上一篇提到的GAN的最优化问题是,本文记录如何求解这一问题。 首先为了表示方便,记,这里让最大的可视作常量。 第一步,给定初始的,使用梯度上升找到 ,最大化。关于梯度下降,可以参考笔者另一篇…...

——线程池)

并发线程(21)——线程池

文章目录 二十一、day211. 线程池实现1.1 完整代码1.2 解释 二十一、day21 我们之前在学习std::future、std::async、std::promise相关的知识时,通过std::promise和packaged_task构建了一个可用的线程池,可参考文章:并发编程(6&a…...

基于32单片机的智能语音家居

一、主要功能介绍 以STM32F103C8T6单片机为控制核心,设计一款智能远程家电控制系统,该系统能实现如下功能: 1、可通过语音命令控制照明灯、空调、加热器、窗户及窗帘的开关; 2、可通过手机显示和控制照明灯、空调、窗户及窗帘的开…...

VScode怎么重启

原文链接:【vscode】vscode重新启动 键盘按下 Ctrl Shift p 打开命令行,如下图: 输入Reload Window,如下图:...

分析服务器 systemctl 启动gozero项目报错的解决方案

### 分析 systemctl start beisen.service 报错 在 Linux 系统中,systemctl 是管理系统和服务的主要工具。当我们尝试重启某个服务时,如果服务启动失败,systemctl 会输出错误信息,帮助我们诊断和解决问题。 本文将通过一个实际的…...

大模型LLM-Prompt-OPTIMAL

1 OPTIMAL OPTIMAL 具体每项内容解释如下: Objective Clarity(目标清晰):明确定义任务的最终目标和预期成果。 Purpose Definition(目的定义):阐述任务的目的和它的重要性。 Information Gat…...

3. 多线程(1) --- 创建线程,Thread类

文章目录 前言1. API2. 创建线程2.1. 继承 Thread类2.2. 实现 Runnable 接口2.3. 匿名内部类2.4. lambda2.5.其他方法 3. Thread类及其常见的方法和属性3.1. Thread 的常见构造方法3.2. Thread 的常见属性3.3. start() --- 启动一个线程3.4. 中断一个线程3.5. 等待线程3.6. 休眠…...

简单的jmeter数据请求学习

简单的jmeter数据请求学习 1.需求 我们的流程服务由原来的workflow-server调用wfms进行了优化,将wfms服务操作并入了workflow-server中,去除了原来的webservice服务调用形式,增加了并发处理,现在想测试模拟一下,在一…...

智能水文:ChatGPT等大语言模型如何提升水资源分析和模型优化的效率

大语言模型与水文水资源领域的融合具有多种具体应用,以下是一些主要的应用实例: 1、时间序列水文数据自动化处理及机器学习模型: ●自动分析流量或降雨量的异常值 ●参数估计,例如PIII型曲线的参数 ●自动分析降雨频率及重现期 ●…...

民宿酒店预订系统小程序+uniapp全开源+搭建教程

一.介绍 一.系统介绍 基于ThinkPHPuniappuView开发的多门店民宿酒店预订管理系统,快速部署属于自己民宿酒店的预订小程序,包含预订、退房、WIFI连接、吐槽、周边信息等功能。提供全部无加密源代码,支持私有化部署。 二.搭建环境 系统环境…...

计算机网络掩码、最小地址、最大地址计算、IP地址个数

一、必备知识 1.无分类地址IPV4地址网络前缀主机号 2.每个IPV4地址由32位二进制数组成 3. /15这个地址表示网络前缀有15位,那么主机号32-1517位。 4.IP地址的个数:2**n (n表示主机号的位数) 5.可用(可分配)IP地址个数&#x…...

应用升级/灾备测试时使用guarantee 闪回点迅速回退

1.场景 应用要升级,当升级失败时,数据库回退到升级前. 要测试系统,测试完成后,数据库要回退到测试前。 相对于RMAN恢复需要很长时间, 数据库闪回只需要几分钟。 2.技术实现 数据库设置 2个db_recovery参数 创建guarantee闪回点,不需要开启数据库闪回。…...

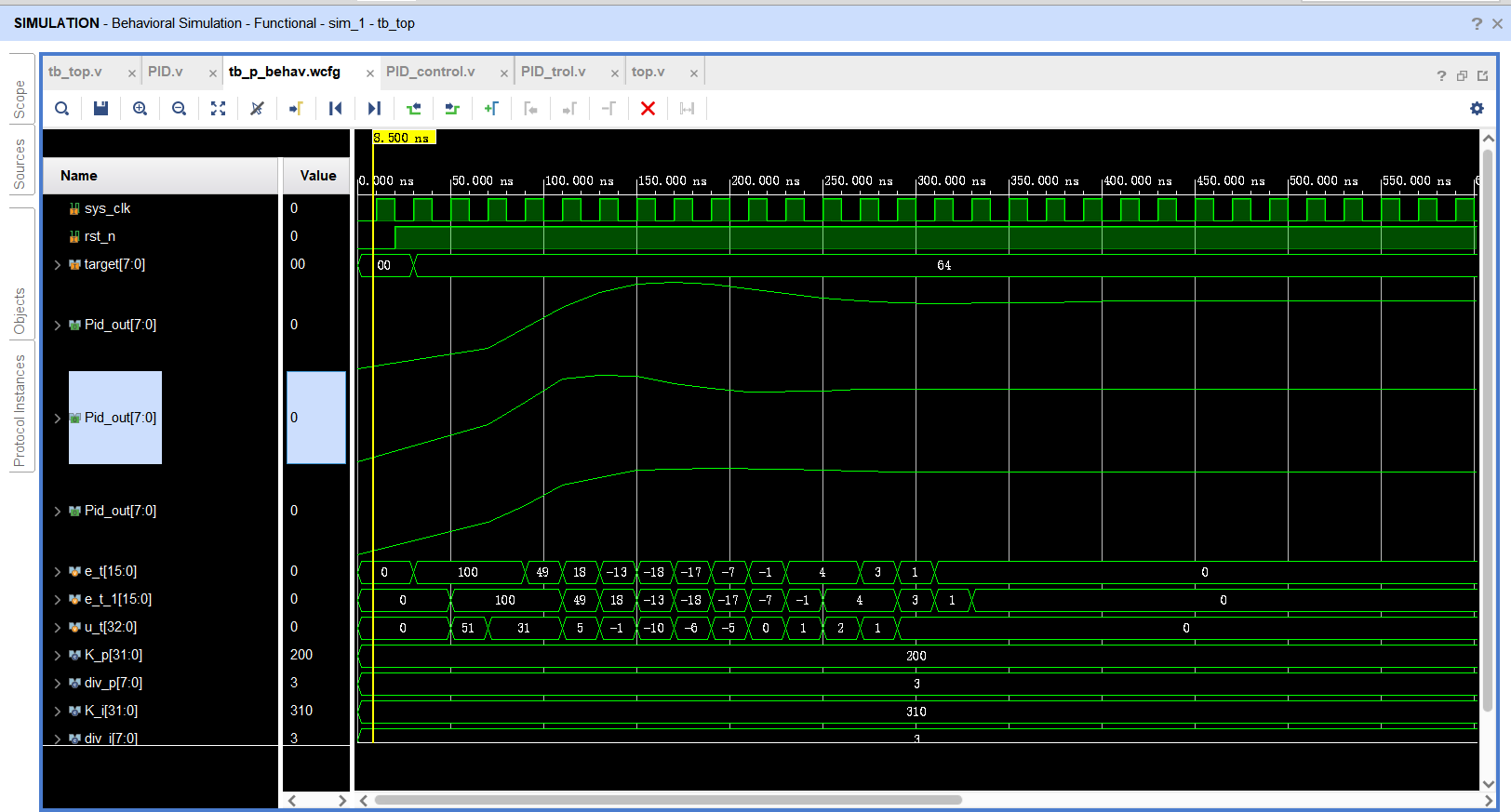

基于FPGA的PID算法学习———实现PID比例控制算法

基于FPGA的PID算法学习 前言一、PID算法分析二、PID仿真分析1. PID代码2.PI代码3.P代码4.顶层5.测试文件6.仿真波形 总结 前言 学习内容:参考网站: PID算法控制 PID即:Proportional(比例)、Integral(积分&…...

指令的指南)

在Ubuntu中设置开机自动运行(sudo)指令的指南

在Ubuntu系统中,有时需要在系统启动时自动执行某些命令,特别是需要 sudo权限的指令。为了实现这一功能,可以使用多种方法,包括编写Systemd服务、配置 rc.local文件或使用 cron任务计划。本文将详细介绍这些方法,并提供…...

鸿蒙中用HarmonyOS SDK应用服务 HarmonyOS5开发一个生活电费的缴纳和查询小程序

一、项目初始化与配置 1. 创建项目 ohpm init harmony/utility-payment-app 2. 配置权限 // module.json5 {"requestPermissions": [{"name": "ohos.permission.INTERNET"},{"name": "ohos.permission.GET_NETWORK_INFO"…...

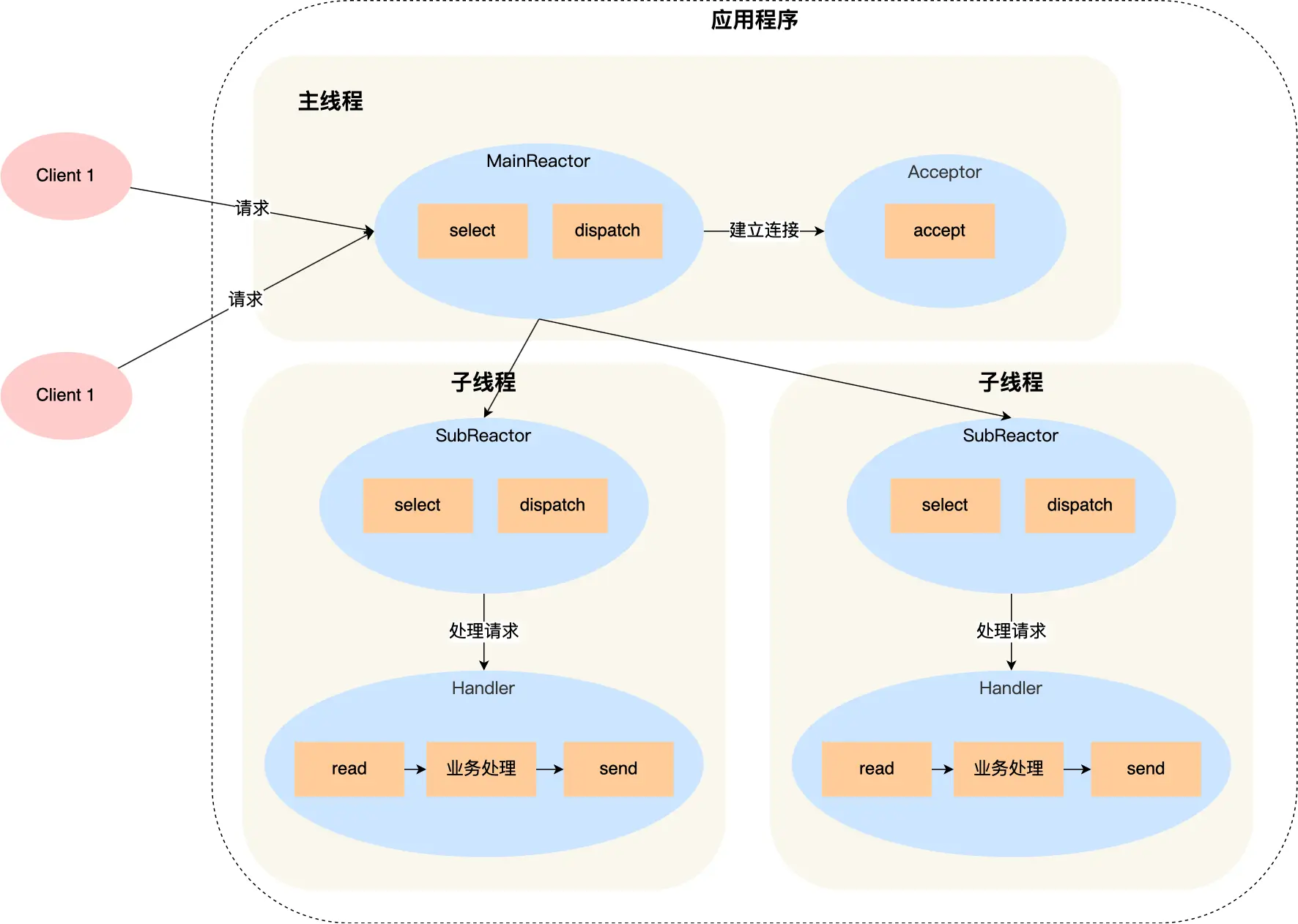

select、poll、epoll 与 Reactor 模式

在高并发网络编程领域,高效处理大量连接和 I/O 事件是系统性能的关键。select、poll、epoll 作为 I/O 多路复用技术的代表,以及基于它们实现的 Reactor 模式,为开发者提供了强大的工具。本文将深入探讨这些技术的底层原理、优缺点。 一、I…...

Spring Cloud Gateway 中自定义验证码接口返回 404 的排查与解决

Spring Cloud Gateway 中自定义验证码接口返回 404 的排查与解决 问题背景 在一个基于 Spring Cloud Gateway WebFlux 构建的微服务项目中,新增了一个本地验证码接口 /code,使用函数式路由(RouterFunction)和 Hutool 的 Circle…...

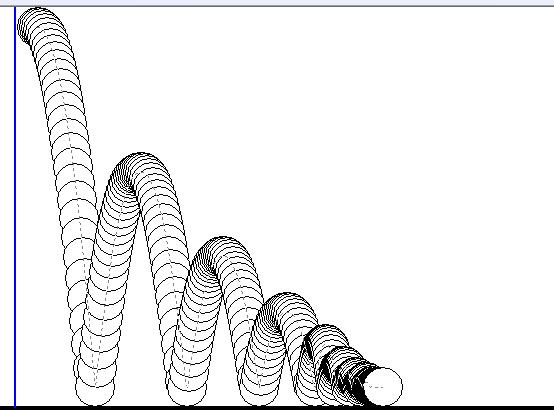

MFC 抛体运动模拟:常见问题解决与界面美化

在 MFC 中开发抛体运动模拟程序时,我们常遇到 轨迹残留、无效刷新、视觉单调、物理逻辑瑕疵 等问题。本文将针对这些痛点,详细解析原因并提供解决方案,同时兼顾界面美化,让模拟效果更专业、更高效。 问题一:历史轨迹与小球残影残留 现象 小球运动后,历史位置的 “残影”…...

Git 3天2K星标:Datawhale 的 Happy-LLM 项目介绍(附教程)

引言 在人工智能飞速发展的今天,大语言模型(Large Language Models, LLMs)已成为技术领域的焦点。从智能写作到代码生成,LLM 的应用场景不断扩展,深刻改变了我们的工作和生活方式。然而,理解这些模型的内部…...

基于Java+VUE+MariaDB实现(Web)仿小米商城

仿小米商城 环境安装 nodejs maven JDK11 运行 mvn clean install -DskipTestscd adminmvn spring-boot:runcd ../webmvn spring-boot:runcd ../xiaomi-store-admin-vuenpm installnpm run servecd ../xiaomi-store-vuenpm installnpm run serve 注意:运行前…...

Bean 作用域有哪些?如何答出技术深度?

导语: Spring 面试绕不开 Bean 的作用域问题,这是面试官考察候选人对 Spring 框架理解深度的常见方式。本文将围绕“Spring 中的 Bean 作用域”展开,结合典型面试题及实战场景,帮你厘清重点,打破模板式回答,…...