docker安装elk6.7.1-搜集java日志

docker安装elk6.7.1-搜集java日志

如果对运维课程感兴趣,可以在b站上、A站或csdn上搜索我的账号: 运维实战课程,可以关注我,学习更多免费的运维实战技术视频

0.规划

192.168.171.130 tomcat日志+filebeat

192.168.171.131 tomcat日志+filebeat

192.168.171.128 redis

192.168.171.129 logstash

192.168.171.128 es1

192.168.171.129 es2

192.168.171.132 kibana

1.docker安装es集群-6.7.1 和head插件(在192.168.171.128-es1和192.168.171.129-es2)

在192.168.171.128上安装es6.7.1和es6.7.1-head插件:

1)安装docker19.03.2:

[root@localhost ~]# docker info

.......

Server Version: 19.03.2

[root@localhost ~]# sysctl -w vm.max_map_count=262144 #设置elasticsearch用户拥有的内存权限太小,至少需要262144

[root@localhost ~]# sysctl -a |grep vm.max_map_count #查看

vm.max_map_count = 262144

[root@localhost ~]# vim /etc/sysctl.conf

vm.max_map_count=262144

2)安装es6.7.1:

上传相关es的压缩包到/data目录:

[root@localhost ~]# cd /data/

[root@localhost data]# ls es-6.7.1.tar.gz

es-6.7.1.tar.gz

[root@localhost data]# tar -zxf es-6.7.1.tar.gz

[root@localhost data]# cd es-6.7.1

[root@localhost es-6.7.1]# ls

config image scripts

[root@localhost es-6.7.1]# ls config/

es.yml

[root@localhost es-6.7.1]# ls image/

elasticsearch_6.7.1.tar

[root@localhost es-6.7.1]# ls scripts/

run_es_6.7.1.sh

[root@localhost es-6.7.1]# docker load -i image/elasticsearch_6.7.1.tar

[root@localhost es-6.7.1]# docker images |grep elasticsearch

elasticsearch 6.7.1 e2667f5db289 11 months ago 812MB

[root@localhost es-6.7.1]# cat config/es.yml

cluster.name: elasticsearch-cluster

node.name: es-node1

network.host: 0.0.0.0

network.publish_host: 192.168.171.128

http.port: 9200

transport.tcp.port: 9300

http.cors.enabled: true

http.cors.allow-origin: "*"

node.master: true

node.data: true

discovery.zen.ping.unicast.hosts: ["192.168.171.128:9300","192.168.171.129:9300"]

discovery.zen.minimum_master_nodes: 1

#cluster.name 集群的名称,可以自定义名字,但两个es必须一样,就是通过是不是同一个名称判断是不是一个集群

#node.name 本机的节点名,可自定义,没必要必须hosts解析或配置该主机名

#下面两个是默认基础上新加的,允许跨域访问

#http.cors.enabled: true

#http.cors.allow-origin: '*'

##注意:容器里有两个端口,9200是:ES节点和外部通讯使用,9300是:ES节点之间通讯使用

[root@localhost es-6.7.1]# cat scripts/run_es_6.7.1.sh

#!/bin/bash

docker run -e ES_JAVA_OPTS="-Xms1024m -Xmx1024m" -d --net=host --restart=always -v /data/es-6.7.1/config/es.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /data/es6.7.1_data:/usr/share/elasticsearch/data -v /data/es6.7.1_logs:/usr/share/elasticsearch/logs --name es6.7.1 elasticsearch:6.7.1

#注意:容器里有两个端口,9200是:ES节点和外部通讯使用,9300是:ES节点之间通讯使用

[root@localhost es-6.7.1]# mkdir /data/es6.7.1_data

[root@localhost es-6.7.1]# mkdir /data/es6.7.1_logs

[root@localhost es-6.7.1]# chmod -R 777 /data/es6.7.1_data/ #需要es用户能写入,否则无法映射

[root@localhost es-6.7.1]# chmod -R 777 /data/es6.7.1_logs/ #需要es用户能写入,否则无法映射

[root@localhost es-6.7.1]# sh scripts/run_es_6.7.1.sh

[root@localhost es-6.7.1]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

988abe7eedac elasticsearch:6.7.1 "/usr/local/bin/dock…" 23 seconds ago Up 19 seconds es6.7.1

[root@localhost es-6.7.1]# netstat -anput |grep 9200

tcp6 0 0 :::9200 :::* LISTEN 16196/java

[root@localhost es-6.7.1]# netstat -anput |grep 9300

tcp6 0 0 :::9300 :::* LISTEN 16196/java

[root@localhost es-6.7.1]# cd

浏览器访问es服务:http://192.168.171.128:9200/

3)安装es6.7.1-head插件:

上传相关es-head插件的压缩包到/data目录

[root@localhost ~]# cd /data/

[root@localhost data]# ls es-6.7.1-head.tar.gz

es-6.7.1-head.tar.gz

[root@localhost data]# tar -zxf es-6.7.1-head.tar.gz

[root@localhost data]# cd es-6.7.1-head

[root@localhost es-6.7.1-head]# ls

conf image scripts

[root@localhost es-6.7.1-head]# ls conf/

app.js Gruntfile.js

[root@localhost es-6.7.1-head]# ls image/

elasticsearch-head_6.7.1.tar

[root@localhost es-6.7.1-head]# ls scripts/

run_es-head.sh

[root@localhost es-6.7.1-head]# docker load -i image/elasticsearch-head_6.7.1.tar

[root@localhost es-6.7.1-head]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

elasticsearch 6.7.1 e2667f5db289 11 months ago 812MB

elasticsearch-head 6.7.1 b19a5c98e43b 3 years ago 824MB

[root@localhost es-6.7.1-head]# vim conf/app.js

.....

this.base_uri = this.config.base_uri || this.prefs.get("app-base_uri") || "http://192.168.171.128:9200"; #修改为本机ip

....

[root@localhost es-6.7.1-head]# vim conf/Gruntfile.js

....

connect: {

server: {

options: {

hostname: '*', #添加

port: 9100,

base: '.',

keepalive: true

}

}

....

[root@localhost es-6.7.1-head]# cat scripts/run_es-head.sh

#!/bin/bash

docker run -d --name es-head-6.7.1 --net=host --restart=always -v /data/es-6.7.1-head/conf/Gruntfile.js:/usr/src/app/Gruntfile.js -v /data/es-6.7.1-head/conf/app.js:/usr/src/app/_site/app.js elasticsearch-head:6.7.1

#容器端口是9100,是es的管理端口

[root@localhost es-6.7.1-head]# sh scripts/run_es-head.sh

[root@localhost es-6.7.1-head]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

c46189c3338b elasticsearch-head:6.7.1 "/bin/sh -c 'grunt s…" 42 seconds ago Up 37 seconds es-head-6.7.1

988abe7eedac elasticsearch:6.7.1 "/usr/local/bin/dock…" 9 minutes ago Up 9 minutes es6.7.1

[root@localhost es-6.7.1-head]# netstat -anput |grep 9100

tcp6 0 0 :::9100 :::* LISTEN 16840/grunt

浏览器访问es-head插件:http://192.168.171.128:9100/

在192.168.171.129上安装es6.7.1和es6.7.1-head插件:

1)安装docker19.03.2:

[root@localhost ~]# docker info

Client:

Debug Mode: false

Server:

Containers: 2

Running: 2

Paused: 0

Stopped: 0

Images: 2

Server Version: 19.03.2

[root@localhost ~]# sysctl -w vm.max_map_count=262144 #设置elasticsearch用户拥有的内存权限太小,至少需要262144

[root@localhost ~]# sysctl -a |grep vm.max_map_count #查看

vm.max_map_count = 262144

[root@localhost ~]# vim /etc/sysctl.conf

vm.max_map_count=262144

2)安装es6.7.1:

上传相关es的压缩包到/data目录:

[root@localhost ~]# cd /data/

[root@localhost data]# ls es-6.7.1.tar.gz

es-6.7.1.tar.gz

[root@localhost data]# tar -zxf es-6.7.1.tar.gz

[root@localhost data]# cd es-6.7.1

[root@localhost es-6.7.1]# ls

config image scripts

[root@localhost es-6.7.1]# ls config/

es.yml

[root@localhost es-6.7.1]# ls image/

elasticsearch_6.7.1.tar

[root@localhost es-6.7.1]# ls scripts/

run_es_6.7.1.sh

[root@localhost es-6.7.1]# docker load -i image/elasticsearch_6.7.1.tar

[root@localhost es-6.7.1]# docker images |grep elasticsearch

elasticsearch 6.7.1 e2667f5db289 11 months ago 812MB

[root@localhost es-6.7.1]# vim config/es.yml

cluster.name: elasticsearch-cluster

node.name: es-node2

network.host: 0.0.0.0

network.publish_host: 192.168.171.129

http.port: 9200

transport.tcp.port: 9300

http.cors.enabled: true

http.cors.allow-origin: "*"

node.master: true

node.data: true

discovery.zen.ping.unicast.hosts: ["192.168.171.128:9300","192.168.171.129:9300"]

discovery.zen.minimum_master_nodes: 1

#cluster.name 集群的名称,可以自定义名字,但两个es必须一样,就是通过是不是同一个名称判断是不是一个集群

#node.name 本机的节点名,可自定义,没必要必须hosts解析或配置该主机名

#下面两个是默认基础上新加的,允许跨域访问

#http.cors.enabled: true

#http.cors.allow-origin: '*'

##注意:容器里有两个端口,9200是:ES节点和外部通讯使用,9300是:ES节点之间通讯使用

[root@localhost es-6.7.1]# cat scripts/run_es_6.7.1.sh

#!/bin/bash

docker run -e ES_JAVA_OPTS="-Xms1024m -Xmx1024m" -d --net=host --restart=always -v /data/es-6.7.1/config/es.yml:/usr/share/elasticsearch/config/elasticsearch.yml -v /data/es6.7.1_data:/usr/share/elasticsearch/data -v /data/es6.7.1_logs:/usr/share/elasticsearch/logs --name es6.7.1 elasticsearch:6.7.1

#注意:容器里有两个端口,9200是:ES节点和外部通讯使用,9300是:ES节点之间通讯使用

[root@localhost es-6.7.1]# mkdir /data/es6.7.1_data

[root@localhost es-6.7.1]# mkdir /data/es6.7.1_logs

[root@localhost es-6.7.1]# chmod -R 777 /data/es6.7.1_data/ #需要es用户能写入,否则无法映射

[root@localhost es-6.7.1]# chmod -R 777 /data/es6.7.1_logs/ #需要es用户能写入,否则无法映射

[root@localhost es-6.7.1]# sh scripts/run_es_6.7.1.sh

[root@localhost es-6.7.1]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

a3b0a0187db8 elasticsearch:6.7.1 "/usr/local/bin/dock…" 9 seconds ago Up 7 seconds es6.7.1

[root@localhost es-6.7.1]# netstat -anput |grep 9200

tcp6 0 0 :::9200 :::* LISTEN 14171/java

[root@localhost es-6.7.1]# netstat -anput |grep 9300

tcp6 0 0 :::9300 :::* LISTEN 14171/java

[root@localhost es-6.7.1]# cd

浏览器访问es服务:http://192.168.171.129:9200/

3)安装es6.7.1-head插件:

上传相关es-head插件的压缩包到/data目录

[root@localhost ~]# cd /data/

[root@localhost data]# ls es-6.7.1-head.tar.gz

es-6.7.1-head.tar.gz

[root@localhost data]# tar -zxf es-6.7.1-head.tar.gz

[root@localhost data]# cd es-6.7.1-head

[root@localhost es-6.7.1-head]# ls

conf image scripts

[root@localhost es-6.7.1-head]# ls conf/

app.js Gruntfile.js

[root@localhost es-6.7.1-head]# ls image/

elasticsearch-head_6.7.1.tar

[root@localhost es-6.7.1-head]# ls scripts/

run_es-head.sh

[root@localhost es-6.7.1-head]# docker load -i image/elasticsearch-head_6.7.1.tar

[root@localhost es-6.7.1-head]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

elasticsearch 6.7.1 e2667f5db289 11 months ago 812MB

elasticsearch-head 6.7.1 b19a5c98e43b 3 years ago 824MB

[root@localhost es-6.7.1-head]# vim conf/app.js

.....

this.base_uri = this.config.base_uri || this.prefs.get("app-base_uri") || "http://192.168.171.129:9200"; #修改为本机ip

....

[root@localhost es-6.7.1-head]# vim conf/Gruntfile.js

....

connect: {

server: {

options: {

hostname: '*', #添加

port: 9100,

base: '.',

keepalive: true

}

}

....

[root@localhost es-6.7.1-head]# cat scripts/run_es-head.sh

#!/bin/bash

docker run -d --name es-head-6.7.1 --net=host --restart=always -v /data/es-6.7.1-head/conf/Gruntfile.js:/usr/src/app/Gruntfile.js -v /data/es-6.7.1-head/conf/app.js:/usr/src/app/_site/app.js elasticsearch-head:6.7.1

#容器端口是9100,是es的管理端口

[root@localhost es-6.7.1-head]# sh scripts/run_es-head.sh

[root@localhost es-6.7.1-head]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f4f5c967754b elasticsearch-head:6.7.1 "/bin/sh -c 'grunt s…" 12 seconds ago Up 7 seconds es-head-6.7.1

a3b0a0187db8 elasticsearch:6.7.1 "/usr/local/bin/dock…" 7 minutes ago Up 7 minutes es6.7.1

[root@localhost es-6.7.1-head]# netstat -anput |grep 9100

tcp6 0 0 :::9100 :::* LISTEN 14838/grunt

浏览器访问es-head插件:http://192.168.171.129:9100/

同样在机器192.168.171.128的head插件也能查看到状态,因为插件管理工具都是一样的,如下:

http://192.168.171.128:9100/

2.docker安装redis4.0.10(在192.168.171.128上)

上传redis4.0.10镜像:

[root@localhost ~]# ls redis_4.0.10.tar

redis_4.0.10.tar

[root@localhost ~]# docker load -i redis_4.0.10.tar

[root@localhost ~]# docker images |grep redis

gmprd.baiwang-inner.com/redis 4.0.10 f713a14c7f9b 13 months ago 425MB

[root@localhost ~]# mkdir -p /data/redis/conf #创建配置文件目录

[root@localhost ~]# vim /data/redis/conf/redis.conf #自定义配置文件

protected-mode no

port 6379

bind 0.0.0.0

tcp-backlog 511

timeout 0

tcp-keepalive 300

supervised no

pidfile "/usr/local/redis/redis_6379.pid"

loglevel notice

logfile "/opt/redis/logs/redis.log"

databases 16

save 900 1

save 300 10

save 60 10000

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename "dump.rdb"

dir "/"

slave-serve-stale-data yes

slave-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 5

repl-disable-tcp-nodelay no

slave-priority 100

requirepass 123456

appendonly yes

dir "/opt/redis/data"

logfile "/opt/redis/logs/redis.log"

appendfilename "appendonly.aof"

appendfsync everysec

no-appendfsync-on-rewrite no

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

lua-time-limit 5000

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit slave 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

aof-rewrite-incremental-fsync yes

maxclients 4064

#appendonly yes 是开启数据持久化

#dir "/opt/redis/data" #持久化到的容器里的目录

#logfile "/opt/redis/logs/redis.log" #持久化到的容器里的目录,此处写的必须是文件路径,目录路径不行

[root@localhost ~]# docker run -d --net=host --restart=always --name=redis4.0.10 -v /data/redis/conf/redis.conf:/opt/redis/conf/redis.conf -v /data/redis_data:/opt/redis/data -v /data/redis_logs:/opt/redis/logs gmprd.baiwang-inner.com/redis:4.0.10

[root@localhost ~]# docker ps |grep redis

735fb213ee41 gmprd.baiwang-inner.com/redis:4.0.10 "redis-server /opt/r…" 9 seconds ago Up 8 seconds redis4.0.10

[root@localhost ~]# netstat -anput |grep 6379

tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 16988/redis-server

[root@localhost ~]# ls /data/redis_data/

appendonly.aof

[root@localhost ~]# ls /data/redis_logs/

redis.log

[root@localhost ~]# docker exec -it redis4.0.10 bash

[root@localhost /]# redis-cli -a 123456

127.0.0.1:6379> set k1 v1

OK

127.0.0.1:6379> keys *

1) "k1"

127.0.0.1:6379> get k1

"v1"

127.0.0.1:6379> quit

[root@localhost /]# exit

3.docker安装tomcat(不安装,仅创建模拟tomcat和其他java日志)和filebeat6.7.1 (192.168.171.130和192.168.171.131)

在192.168.171.130上:

模拟创建各类java日志,将各类java日志用filebeat写入redis中,在用logstash以多行匹配模式,写入es中:

注意:下面日志不能提前生成,需要先启动filebeat开始收集后,在vim编写下面的日志,否则filebeat不能读取已经有的日志.

a)创建模拟tomcat日志:

[root@localhost ~]# mkdir /data/java-logs

[root@localhost ~]# mkdir /data/java-logs/{tomcat_logs,es_logs,message_logs}

[root@localhost ~]# vim /data/java-logs/tomcat_logs/catalina.out

2020-03-09 13:07:48|ERROR|org.springframework.web.context.ContextLoader:351|Context initialization failed

org.springframework.beans.factory.parsing.BeanDefinitionParsingException: Configuration problem: Unable to locate Spring NamespaceHandler for XML schema namespace [http://www.springframework.org/schema/aop]

Offending resource: URL [file:/usr/local/apache-tomcat-8.0.32/webapps/ROOT/WEB-INF/classes/applicationContext.xml]

at org.springframework.beans.factory.parsing.FailFastProblemReporter.error(FailFastProblemReporter.java:70) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.parsing.ReaderContext.error(ReaderContext.java:85) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.parsing.ReaderContext.error(ReaderContext.java:80) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.BeanDefinitionParserDelegate.error(BeanDefinitionParserDelegate.java:301) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.BeanDefinitionParserDelegate.parseCustomElement(BeanDefinitionParserDelegate.java:1408) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.BeanDefinitionParserDelegate.parseCustomElement(BeanDefinitionParserDelegate.java:1401) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.DefaultBeanDefinitionDocumentReader.parseBeanDefinitions(DefaultBeanDefinitionDocumentReader.java:168) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.DefaultBeanDefinitionDocumentReader.doRegisterBeanDefinitions(DefaultBeanDefinitionDocumentReader.java:138) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.DefaultBeanDefinitionDocumentReader.registerBeanDefinitions(DefaultBeanDefinitionDocumentReader.java:94) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.XmlBeanDefinitionReader.registerBeanDefinitions(XmlBeanDefinitionReader.java:508) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.XmlBeanDefinitionReader.doLoadBeanDefinitions(XmlBeanDefinitionReader.java:392) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.XmlBeanDefinitionReader.loadBeanDefinitions(XmlBeanDefinitionReader.java:336) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.XmlBeanDefinitionReader.loadBeanDefinitions(XmlBeanDefinitionReader.java:304) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.support.AbstractBeanDefinitionReader.loadBeanDefinitions(AbstractBeanDefinitionReader.java:181) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.support.AbstractBeanDefinitionReader.loadBeanDefinitions(AbstractBeanDefinitionReader.java:217) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.support.AbstractBeanDefinitionReader.loadBeanDefinitions(AbstractBeanDefinitionReader.java:188) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.support.XmlWebApplicationContext.loadBeanDefinitions(XmlWebApplicationContext.java:125) ~[spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.support.XmlWebApplicationContext.loadBeanDefinitions(XmlWebApplicationContext.java:94) ~[spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.context.support.AbstractRefreshableApplicationContext.refreshBeanFactory(AbstractRefreshableApplicationContext.java:129) ~[spring-context-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.obtainFreshBeanFactory(AbstractApplicationContext.java:609) ~[spring-context-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:510) ~[spring-context-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.ContextLoader.configureAndRefreshWebApplicationContext(ContextLoader.java:444) ~[spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.ContextLoader.initWebApplicationContext(ContextLoader.java:326) ~[spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.ContextLoaderListener.contextInitialized(ContextLoaderListener.java:107) [spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.apache.catalina.core.StandardContext.listenerStart(StandardContext.java:4812) [catalina.jar:8.0.32]

at org.apache.catalina.core.StandardContext.startInternal(StandardContext.java:5255) [catalina.jar:8.0.32]

at org.apache.catalina.util.LifecycleBase.start(LifecycleBase.java:147) [catalina.jar:8.0.32]

at org.apache.catalina.core.ContainerBase.addChildInternal(ContainerBase.java:725) [catalina.jar:8.0.32]

at org.apache.catalina.core.ContainerBase.addChild(ContainerBase.java:701) [catalina.jar:8.0.32]

at org.apache.catalina.core.StandardHost.addChild(StandardHost.java:717) [catalina.jar:8.0.32]

at org.apache.catalina.startup.HostConfig.deployDirectory(HostConfig.java:1091) [catalina.jar:8.0.32]

at org.apache.catalina.startup.HostConfig$DeployDirectory.run(HostConfig.java:1830) [catalina.jar:8.0.32]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_144]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [na:1.8.0_144]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [na:1.8.0_144]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [na:1.8.0_144]

at java.lang.Thread.run(Thread.java:748) [na:1.8.0_144]

13-Oct-2020 13:07:48.990 SEVERE [localhost-startStop-3] org.apache.catalina.core.StandardContext.startInternal One or more listeners failed to start. Full details will be found in the appropriate container log file

13-Oct-2020 13:07:48.991 SEVERE [localhost-startStop-3] org.apache.catalina.core.StandardContext.startInternal Context [] startup failed due to previous errors

2020-03-09 13:07:48|INFO|org.springframework.context.support.AbstractApplicationContext:960|Closing Root WebApplicationContext: startup date [Sun Oct 13 13:07:43 CST 2020]; root of context hierarchy

2020-03-09 13:09:41|INFO|org.springframework.context.support.AbstractApplicationContext:960|Closing Root WebApplicationContext: startup date [Sun Oct 13 13:07:43 CST 2020]; root of context hierarchy error test1

2020-03-09 13:10:41|INFO|org.springframework.context.support.AbstractApplicationContext:960|Closing Root WebApplicationContext: startup date [Sun Oct 13 13:07:43 CST 2020]; root of context hierarchy error test2

2020-03-09 13:11:41|INFO|org.springframework.context.support.AbstractApplicationContext:960|Closing Root WebApplicationContext: startup date [Sun Oct 13 13:07:43 CST 2020]; root of context hierarchy error test3

b)制造系统日志(将/var/log/messages部分弄出来) 系统日志

[root@localhost ~]# vim /data/java-logs/message_logs/messages

Mar 09 14:19:06 localhost systemd: Removed slice system-selinux\x2dpolicy\x2dmigrate\x2dlocal\x2dchanges.slice.

Mar 09 14:19:06 localhost systemd: Stopping system-selinux\x2dpolicy\x2dmigrate\x2dlocal\x2dchanges.slice.

Mar 09 14:19:06 localhost systemd: Stopped target Network is Online.

Mar 09 14:19:06 localhost systemd: Stopping Network is Online.

Mar 09 14:19:06 localhost systemd: Stopping Authorization Manager...

Mar 09 14:20:38 localhost kernel: Initializing cgroup subsys cpuset

Mar 09 14:20:38 localhost kernel: Initializing cgroup subsys cpu

Mar 09 14:20:38 localhost kernel: Initializing cgroup subsys cpuacct

Mar 09 14:20:38 localhost kernel: Linux version 3.10.0-693.el7.x86_64 (builder@kbuilder.dev.centos.org) (gcc version 4.8.5 20150623 (Red Hat 4.8.5-16) (GCC) ) #1 SMP Tue Aug 22 21:09:27 UTC 2017

Mar 09 14:20:38 localhost kernel: Command line: BOOT_IMAGE=/vmlinuz-3.10.0-693.el7.x86_64 root=/dev/mapper/centos-root ro crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet LANG=en_US.UTF-8

c)制造es日志:

[root@localhost ~]# vim /data/java-logs/es_logs/es_log

[2020-03-09T21:44:58,440][ERROR][o.e.b.Bootstrap ] Exception

java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:035) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:172) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:323) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:091) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:109) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.EnvironmentAwareCommand.execute(EnvironmentAwareCommand.java:86) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:094) [elasticsearch-cli-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.Command.main(Command.java:90) [elasticsearch-cli-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:92) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:85) [elasticsearch-6.2.4.jar:6.2.4]

[2020-03-09T21:44:58,549][WARN ][o.e.b.ElasticsearchUncaughtExceptionHandler] [] uncaught exception in thread [main]

org.elasticsearch.bootstrap.StartupException: java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:095) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:109) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.EnvironmentAwareCommand.execute(EnvironmentAwareCommand.java:86) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:094) ~[elasticsearch-cli-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.Command.main(Command.java:90) ~[elasticsearch-cli-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:92) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:85) ~[elasticsearch-6.2.4.jar:6.2.4]

Caused by: java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:035) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:172) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:323) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:091) ~[elasticsearch-6.2.4.jar:6.2.4]

... 6 more

[2020-03-09T21:46:32,174][INFO ][o.e.n.Node ] [] initializing ...

[2020-03-09T21:46:32,467][INFO ][o.e.e.NodeEnvironment ] [koccs5f] using [1] data paths, mounts [[/ (rootfs)]], net usable_space [48gb], net total_space [49.9gb], types [rootfs]

[2020-03-09T21:46:32,468][INFO ][o.e.e.NodeEnvironment ] [koccs5f] heap size [0315.6mb], compressed ordinary object pointers [true]

d)制造tomcat访问日志

[root@localhost ~]# vim /data/java-logs/tomcat_logs/localhost_access_log.2020-03-09.txt

192.168.171.1 - - [09/Mar/2020:09:07:59 +0800] "GET /favicon.ico HTTP/1.1" 404 -

Caused by: java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:105) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:172) ~[elasticsearch-6.2.4.jar:6.2.4]

192.168.171.2 - - [09/Mar/2020:09:07:59 +0800] "GET / HTTP/1.1" 404 -

192.168.171.1 - - [09/Mar/2020:15:09:12 +0800] "GET / HTTP/1.1" 200 11250

Caused by: java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives

192.168.171.2 - - [09/Mar/2020:15:09:12 +0800] "GET /tomcat.png HTTP/1.1" 200 5103

192.168.171.3 - - [09/Mar/2020:15:09:12 +0800] "GET /tomcat.css HTTP/1.1" 200 5576

192.168.171.5 - - [09/Mar/2020:15:09:09 +0800] "GET /bg-nav.png HTTP/1.1" 200 1401

192.168.171.1 - - [09/Mar/2020:15:09:09 +0800] "GET /bg-upper.png HTTP/1.1" 200 3103

安装filebeat6.7.1:

[root@localhost ~]# cd /data/

[root@localhost data]# ls filebeat6.7.1.tar.gz

filebeat6.7.1.tar.gz

[root@localhost data]# tar -zxf filebeat6.7.1.tar.gz

[root@localhost data]# cd filebeat6.7.1

[root@localhost filebeat6.7.1]# ls

conf image scripts

[root@localhost filebeat6.7.1]# ls conf/

filebeat.yml filebeat.yml.bak

[root@localhost filebeat6.7.1]# ls image/

filebeat_6.7.1.tar

[root@localhost filebeat6.7.1]# ls scripts/

run_filebeat6.7.1.sh

[root@localhost filebeat6.7.1]# docker load -i image/filebeat_6.7.1.tar

[root@localhost filebeat6.7.1]# docker images |grep filebeat

docker.elastic.co/beats/filebeat 6.7.1 04fcff75b160 11 months ago 279MB

[root@localhost filebeat6.7.1]# cat conf/filebeat.yml

filebeat.inputs:

#下面为添加:——————————————

#系统日志:

- type: log

enabled: true

paths:

- /usr/share/filebeat/logs/message_logs/messages

fields:

log_source: system-171.130

#tomcat的catalina日志:

- type: log

enabled: true

paths:

- /usr/share/filebeat/logs/tomcat_logs/catalina.out

fields:

log_source: catalina-log-171.130

multiline.pattern: '^[0-9]{4}-(((0[13578]|(10|12))-(0[1-9]|[1-2][0-9]|3[0-1]))|(02-(0[1-9]|[1-2][0-9]))|((0[469]|11)-(0[1-9]|[1-2][0-9]|30)))'

multiline.negate: true

multiline.match: after

# 上面正则是匹配日期开头正则,类似:2004-02-29开头的

# log_source: xxx 表示: 因为存入redis的只有一个索引名,logstash对多种类型日志无法区分,定义该项可以让logstash以此来判断日志来源,当是这种类型日志,输出相应的索引名存入es,当时另一种类型日志,输出相应索引名存入es

#es日志:

- type: log

enabled: true

paths:

- /usr/share/filebeat/logs/es_logs/es_log

fields:

log_source: es-log-171.130

multiline.pattern: '^\['

multiline.negate: true

multiline.match: after

#上面正则是是匹配以[开头的,\表示转义.

#tomcat的访问日志:

- type: log

enabled: true

paths:

- /usr/share/filebeat/logs/tomcat_logs/localhost_access_log.2020-03-09.txt

fields:

log_source: tomcat-access-log-171.130

multiline.pattern: '^((2(5[0-5]|[0-4]\d))|[0-1]?\d{1,2})(\.((2(5[0-5]|[0-4]\d))|[0-1]?\d{1,2})){3}'

multiline.negate: true

multiline.match: after

#上面为添加:—————————————————————

filebeat.config.modules:

path: ${path.config}/modules.d/*.yml

reload.enabled: false

setup.template.settings:

index.number_of_shards: 3

setup.kibana:

#下面是直接写入es中:

#output.elasticsearch:

# hosts: ["192.168.171.128:9200"]

#下面是写入redis中:

#下面的filebeat-common是自定的key,要和logstash中从redis里对应的key要要一致,多个节点的nginx的都可以该key写入,但需要定义log_source以作为区分,logstash读取的时候以区分的标志来分开存放索引到es中

output.redis:

hosts: ["192.168.171.128"]

port: 6379

password: "123456"

key: "filebeat-common"

db: 0

datatype: list

processors:

- add_host_metadata: ~

- add_cloud_metadata: ~

#注意:因为默认情况下,宿主机日志路径和容器内日志路径是不一致的,所以配置文件里配置的路径如果是宿主机日志路径,容器里则找不到

##所以采取措施是:配置文件里配置成容器里的日志路径,再把宿主机的日志目录和容器日志目录做一个映射就可以了

#/usr/share/filebeat/logs/*.log 是容器里的日志路径

[root@localhost filebeat6.7.1]# cat scripts/run_filebeat6.7.1.sh

#!/bin/bash

docker run -d --name filebeat6.7.1 --net=host --restart=always --user=root -v /data/filebeat6.7.1/conf/filebeat.yml:/usr/share/filebeat/filebeat.yml -v /data/java-logs:/usr/share/filebeat/logs docker.elastic.co/beats/filebeat:6.7.1

#注意:因为默认情况下,宿主机日志路径和容器内日志路径是不一致的,所以配置文件里配置的路径如果是宿主机日志路径,容器里则找不到

#所以采取措施是:配置文件里配置成容器里的日志路径,再把宿主机的日志目录和容器日志目录做一个映射就可以了

[root@localhost filebeat6.7.1]# sh scripts/run_filebeat6.7.1.sh #运行后则开始收集日志到redis

[root@localhost filebeat6.7.1]# docker ps |grep filebeat

1f2bbd450e7e docker.elastic.co/beats/filebeat:6.7.1 "/usr/local/bin/dock…" 8 seconds ago Up 7 seconds filebeat6.7.1

[root@localhost filebeat6.7.1]# cd

在192.168.171.131上:

模拟创建各类java日志,将各类java日志用filebeat写入redis中,在用logstash以多行匹配模式,写入es中:

注意:下面日志不能提前生成,需要先启动filebeat开始收集后,在vim编写下面的日志,否则filebeat不能读取已经有的日志.

a)创建模拟tomcat日志:

[root@localhost ~]# mkdir /data/java-logs

[root@localhost ~]# mkdir /data/java-logs/{tomcat_logs,es_logs,message_logs}

[root@localhost ~]# vim /data/java-logs/tomcat_logs/catalina.out

2050-05-09 13:07:48|ERROR|org.springframework.web.context.ContextLoader:351|Context initialization failed

org.springframework.beans.factory.parsing.BeanDefinitionParsingException: Configuration problem: Unable to locate Spring NamespaceHandler for XML schema namespace [http://www.springframework.org/schema/aop]

Offending resource: URL [file:/usr/local/apache-tomcat-8.0.32/webapps/ROOT/WEB-INF/classes/applicationContext.xml]

at org.springframework.beans.factory.parsing.FailFastProblemReporter.error(FailFastProblemReporter.java:70) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.parsing.ReaderContext.error(ReaderContext.java:85) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.parsing.ReaderContext.error(ReaderContext.java:80) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.BeanDefinitionParserDelegate.error(BeanDefinitionParserDelegate.java:301) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.BeanDefinitionParserDelegate.parseCustomElement(BeanDefinitionParserDelegate.java:1408) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.BeanDefinitionParserDelegate.parseCustomElement(BeanDefinitionParserDelegate.java:1401) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.DefaultBeanDefinitionDocumentReader.parseBeanDefinitions(DefaultBeanDefinitionDocumentReader.java:168) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.DefaultBeanDefinitionDocumentReader.doRegisterBeanDefinitions(DefaultBeanDefinitionDocumentReader.java:138) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.DefaultBeanDefinitionDocumentReader.registerBeanDefinitions(DefaultBeanDefinitionDocumentReader.java:94) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.XmlBeanDefinitionReader.registerBeanDefinitions(XmlBeanDefinitionReader.java:508) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.XmlBeanDefinitionReader.doLoadBeanDefinitions(XmlBeanDefinitionReader.java:392) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.XmlBeanDefinitionReader.loadBeanDefinitions(XmlBeanDefinitionReader.java:336) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.xml.XmlBeanDefinitionReader.loadBeanDefinitions(XmlBeanDefinitionReader.java:304) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.support.AbstractBeanDefinitionReader.loadBeanDefinitions(AbstractBeanDefinitionReader.java:181) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.support.AbstractBeanDefinitionReader.loadBeanDefinitions(AbstractBeanDefinitionReader.java:217) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.beans.factory.support.AbstractBeanDefinitionReader.loadBeanDefinitions(AbstractBeanDefinitionReader.java:188) ~[spring-beans-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.support.XmlWebApplicationContext.loadBeanDefinitions(XmlWebApplicationContext.java:125) ~[spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.support.XmlWebApplicationContext.loadBeanDefinitions(XmlWebApplicationContext.java:94) ~[spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.context.support.AbstractRefreshableApplicationContext.refreshBeanFactory(AbstractRefreshableApplicationContext.java:129) ~[spring-context-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.obtainFreshBeanFactory(AbstractApplicationContext.java:609) ~[spring-context-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:510) ~[spring-context-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.ContextLoader.configureAndRefreshWebApplicationContext(ContextLoader.java:444) ~[spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.ContextLoader.initWebApplicationContext(ContextLoader.java:326) ~[spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.springframework.web.context.ContextLoaderListener.contextInitialized(ContextLoaderListener.java:107) [spring-web-4.2.6.RELEASE.jar:4.2.6.RELEASE]

at org.apache.catalina.core.StandardContext.listenerStart(StandardContext.java:4812) [catalina.jar:8.0.32]

at org.apache.catalina.core.StandardContext.startInternal(StandardContext.java:5255) [catalina.jar:8.0.32]

at org.apache.catalina.util.LifecycleBase.start(LifecycleBase.java:147) [catalina.jar:8.0.32]

at org.apache.catalina.core.ContainerBase.addChildInternal(ContainerBase.java:725) [catalina.jar:8.0.32]

at org.apache.catalina.core.ContainerBase.addChild(ContainerBase.java:701) [catalina.jar:8.0.32]

at org.apache.catalina.core.StandardHost.addChild(StandardHost.java:717) [catalina.jar:8.0.32]

at org.apache.catalina.startup.HostConfig.deployDirectory(HostConfig.java:1091) [catalina.jar:8.0.32]

at org.apache.catalina.startup.HostConfig$DeployDirectory.run(HostConfig.java:1830) [catalina.jar:8.0.32]

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511) [na:1.8.0_144]

at java.util.concurrent.FutureTask.run(FutureTask.java:266) [na:1.8.0_144]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [na:1.8.0_144]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [na:1.8.0_144]

at java.lang.Thread.run(Thread.java:748) [na:1.8.0_144]

13-Oct-2050 13:07:48.990 SEVERE [localhost-startStop-3] org.apache.catalina.core.StandardContext.startInternal One or more listeners failed to start. Full details will be found in the appropriate container log file

13-Oct-2050 13:07:48.991 SEVERE [localhost-startStop-3] org.apache.catalina.core.StandardContext.startInternal Context [] startup failed due to previous errors

2050-05-09 13:07:48|INFO|org.springframework.context.support.AbstractApplicationContext:960|Closing Root WebApplicationContext: startup date [Sun Oct 13 13:07:43 CST 2050]; root of context hierarchy

2050-05-09 13:09:41|INFO|org.springframework.context.support.AbstractApplicationContext:960|Closing Root WebApplicationContext: startup date [Sun Oct 13 13:07:43 CST 2050]; root of context hierarchy error test1

2050-05-09 13:10:41|INFO|org.springframework.context.support.AbstractApplicationContext:960|Closing Root WebApplicationContext: startup date [Sun Oct 13 13:07:43 CST 2050]; root of context hierarchy error test2

2050-05-09 13:11:41|INFO|org.springframework.context.support.AbstractApplicationContext:960|Closing Root WebApplicationContext: startup date [Sun Oct 13 13:07:43 CST 2050]; root of context hierarchy error test3

b)制造系统日志(将/var/log/messages部分弄出来) 系统日志

[root@localhost ~]# vim /data/java-logs/message_logs/messages

Mar 50 50:50:06 localhost systemd: Removed slice system-selinux\x2dpolicy\x2dmigrate\x2dlocal\x2dchanges.slice.

Mar 50 50:50:06 localhost systemd: Stopping system-selinux\x2dpolicy\x2dmigrate\x2dlocal\x2dchanges.slice.

Mar 50 50:50:06 localhost systemd: Stopped target Network is Online.

Mar 50 50:50:06 localhost systemd: Stopping Network is Online.

Mar 50 50:50:06 localhost systemd: Stopping Authorization Manager...

Mar 50 50:20:38 localhost kernel: Initializing cgroup subsys cpuset

Mar 50 50:20:38 localhost kernel: Initializing cgroup subsys cpu

Mar 50 50:20:38 localhost kernel: Initializing cgroup subsys cpuacct

Mar 50 50:20:38 localhost kernel: Linux version 3.10.0-693.el7.x86_64 (builder@kbuilder.dev.centos.org) (gcc version 4.8.5 20150623 (Red Hat 4.8.5-16) (GCC) ) #1 SMP Tue Aug 22 21:50:27 UTC 2050

Mar 50 50:20:38 localhost kernel: Command line: BOOT_IMAGE=/vmlinuz-3.10.0-693.el7.x86_64 root=/dev/mapper/centos-root ro crashkernel=auto rd.lvm.lv=centos/root rd.lvm.lv=centos/swap rhgb quiet LANG=en_US.UTF-8

c)制造es日志:

[root@localhost ~]# vim /data/java-logs/es_logs/es_log

[2050-50-09T21:44:58,440][ERROR][o.e.b.Bootstrap ] Exception

java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:505) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:172) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:323) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:091) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:109) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.EnvironmentAwareCommand.execute(EnvironmentAwareCommand.java:86) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:094) [elasticsearch-cli-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.Command.main(Command.java:90) [elasticsearch-cli-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:92) [elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:85) [elasticsearch-6.2.4.jar:6.2.4]

[2050-50-09T21:44:58,549][WARN ][o.e.b.ElasticsearchUncaughtExceptionHandler] [] uncaught exception in thread [main]

org.elasticsearch.bootstrap.StartupException: java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:095) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.execute(Elasticsearch.java:109) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.EnvironmentAwareCommand.execute(EnvironmentAwareCommand.java:86) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.Command.mainWithoutErrorHandling(Command.java:094) ~[elasticsearch-cli-6.2.4.jar:6.2.4]

at org.elasticsearch.cli.Command.main(Command.java:90) ~[elasticsearch-cli-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:92) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.main(Elasticsearch.java:85) ~[elasticsearch-6.2.4.jar:6.2.4]

Caused by: java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:505) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:172) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.init(Bootstrap.java:323) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Elasticsearch.init(Elasticsearch.java:091) ~[elasticsearch-6.2.4.jar:6.2.4]

... 6 more

[2050-50-09T21:46:32,174][INFO ][o.e.n.Node ] [] initializing ...

[2050-50-09T21:46:32,467][INFO ][o.e.e.NodeEnvironment ] [koccs5f] using [1] data paths, mounts [[/ (rootfs)]], net usable_space [48gb], net total_space [49.9gb], types [rootfs]

[2050-50-09T21:46:32,468][INFO ][o.e.e.NodeEnvironment ] [koccs5f] heap size [5015.6mb], compressed ordinary object pointers [true]

d)制造tomcat访问日志

[root@localhost ~]# vim /data/java-logs/tomcat_logs/localhost_access_log.2050-50-09.txt

192.168.150.1 - - [09/Mar/2050:09:07:59 +0800] "GET /favicon.ico HTTP/1.1" 404 -

Caused by: java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives(Bootstrap.java:105) ~[elasticsearch-6.2.4.jar:6.2.4]

at org.elasticsearch.bootstrap.Bootstrap.setup(Bootstrap.java:172) ~[elasticsearch-6.2.4.jar:6.2.4]

192.168.150.2 - - [09/Mar/2050:09:07:59 +0800] "GET / HTTP/1.1" 404 -

192.168.150.1 - - [09/Mar/2050:15:09:12 +0800] "GET / HTTP/1.1" 200 11250

Caused by: java.lang.RuntimeException: can not run elasticsearch as root

at org.elasticsearch.bootstrap.Bootstrap.initializeNatives

192.168.150.2 - - [09/Mar/2050:15:09:12 +0800] "GET /tomcat.png HTTP/1.1" 200 5103

192.168.150.3 - - [09/Mar/2050:15:09:12 +0800] "GET /tomcat.css HTTP/1.1" 200 5576

192.168.150.5 - - [09/Mar/2050:15:09:09 +0800] "GET /bg-nav.png HTTP/1.1" 200 1401

192.168.150.1 - - [09/Mar/2050:15:09:09 +0800] "GET /bg-upper.png HTTP/1.1" 200 3103

安装filebeat6.7.1:

[root@localhost ~]# cd /data/

[root@localhost data]# ls filebeat6.7.1.tar.gz

filebeat6.7.1.tar.gz

[root@localhost data]# tar -zxf filebeat6.7.1.tar.gz

[root@localhost data]# cd filebeat6.7.1

[root@localhost filebeat6.7.1]# ls

conf image scripts

[root@localhost filebeat6.7.1]# ls conf/

filebeat.yml filebeat.yml.bak

[root@localhost filebeat6.7.1]# ls image/

filebeat_6.7.1.tar

[root@localhost filebeat6.7.1]# ls scripts/

run_filebeat6.7.1.sh

[root@localhost filebeat6.7.1]# docker load -i image/filebeat_6.7.1.tar

[root@localhost filebeat6.7.1]# docker images |grep filebeat

docker.elastic.co/beats/filebeat 6.7.1 04fcff75b160 11 months ago 279MB

[root@localhost filebeat6.7.1]# cat conf/filebeat.yml

filebeat.inputs:

#下面为添加:——————————————

#系统日志:

- type: log

enabled: true

paths:

- /usr/share/filebeat/logs/message_logs/messages

fields:

log_source: system-171.131

#tomcat的catalina日志:

- type: log

enabled: true

paths:

- /usr/share/filebeat/logs/tomcat_logs/catalina.out

fields:

log_source: catalina-log-171.131

multiline.pattern: '^[0-9]{4}-(((0[13578]|(10|12))-(0[1-9]|[1-2][0-9]|3[0-1]))|(02-(0[1-9]|[1-2][0-9]))|((0[469]|11)-(0[1-9]|[1-2][0-9]|30)))'

multiline.negate: true

multiline.match: after

# 上面正则是匹配日期开头正则,类似:2004-02-29开头的

# log_source: xxx 表示: 因为存入redis的只有一个索引名,logstash对多种类型日志无法区分,定义该项可以让logstash以此来判断日志来源,当是这种类型日志,输出相应的索引名存入es,当时另一种类型日志,输出相应索引名存入es

#es日志:

- type: log

enabled: true

paths:

- /usr/share/filebeat/logs/es_logs/es_log

fields:

log_source: es-log-171.131

multiline.pattern: '^\['

multiline.negate: true

multiline.match: after

#上面正则是是匹配以[开头的,\表示转义.

#tomcat的访问日志:

- type: log

enabled: true

paths:

- /usr/share/filebeat/logs/tomcat_logs/localhost_access_log.2050-50-09.txt

fields:

log_source: tomcat-access-log-171.131

multiline.pattern: '^((2(5[0-5]|[0-4]\d))|[0-1]?\d{1,2})(\.((2(5[0-5]|[0-4]\d))|[0-1]?\d{1,2})){3}'

multiline.negate: true

multiline.match: after

#上面为添加:—————————————————————

filebeat.config.modules:

path: ${path.config}/modules.d/*.yml

reload.enabled: false

setup.template.settings:

index.number_of_shards: 3

setup.kibana:

#下面是直接写入es中:

#output.elasticsearch:

# hosts: ["192.168.171.128:9200"]

#下面是写入redis中:

#下面的filebeat-common是自定的key,要和logstash中从redis里对应的key要要一致,多个节点的nginx的都可以该key写入,但需要定义log_source以作为区分,logstash读取的时候以区分的标志来分开存放索引到es中

output.redis:

hosts: ["192.168.171.128"]

port: 6379

password: "123456"

key: "filebeat-common"

db: 0

datatype: list

processors:

- add_host_metadata: ~

- add_cloud_metadata: ~

#注意:因为默认情况下,宿主机日志路径和容器内日志路径是不一致的,所以配置文件里配置的路径如果是宿主机日志路径,容器里则找不到

##所以采取措施是:配置文件里配置成容器里的日志路径,再把宿主机的日志目录和容器日志目录做一个映射就可以了

#/usr/share/filebeat/logs/*.log 是容器里的日志路径

[root@localhost filebeat6.7.1]# cat scripts/run_filebeat6.7.1.sh

#!/bin/bash

docker run -d --name filebeat6.7.1 --net=host --restart=always --user=root -v /data/filebeat6.7.1/conf/filebeat.yml:/usr/share/filebeat/filebeat.yml -v /data/java-logs:/usr/share/filebeat/logs docker.elastic.co/beats/filebeat:6.7.1

#注意:因为默认情况下,宿主机日志路径和容器内日志路径是不一致的,所以配置文件里配置的路径如果是宿主机日志路径,容器里则找不到

#所以采取措施是:配置文件里配置成容器里的日志路径,再把宿主机的日志目录和容器日志目录做一个映射就可以了

[root@localhost filebeat6.7.1]# sh scripts/run_filebeat6.7.1.sh #运行后则开始收集日志到redis

[root@localhost filebeat6.7.1]# docker ps |grep filebeat

3cc559a84904 docker.elastic.co/beats/filebeat:6.7.1 "/usr/local/bin/dock…" 8 seconds ago Up 7 seconds filebeat6.7.1

[root@localhost filebeat6.7.1]# cd

到redis里查看是否以写入日志:(192.168.171.128,两台都以同一个key写入redis,所以只有一个key名,筛选进入es时再根据标识筛选)

[root@localhost ~]# docker exec -it redis4.0.10 bash

[root@localhost /]# redis-cli -a 123456

127.0.0.1:6379> KEYS *

1)"filebeat-common"

127.0.0.1:6379> quit

[root@localhost /]# exit

4.docker安装logstash6.7.1(在192.168.171.129上)——从redis读出日志,写入es集群

[root@localhost ~]# cd /data/

[root@localhost data]# ls logstash6.7.1.tar.gz

logstash6.7.1.tar.gz

[root@localhost data]# tar -zxf logstash6.7.1.tar.gz

[root@localhost data]# cd logstash6.7.1

[root@localhost logstash6.7.1]# ls

config image scripts

[root@localhost logstash6.7.1]# ls config/

GeoLite2-City.mmdb log4j2.properties logstash.yml pipelines.yml_bak startup.options

jvm.options logstash-sample.conf pipelines.yml redis_out_es_in.conf

[root@localhost logstash6.7.1]# ls image/

logstash_6.7.1.tar

[root@localhost logstash6.7.1]# ls scripts/

run_logstash6.7.1.sh

[root@localhost logstash6.7.1]# docker load -i image/logstash_6.7.1.tar

[root@localhost logstash6.7.1]# docker images |grep logstash

logstash 6.7.1 1f5e249719fc 11 months ago 778MB

[root@localhost logstash6.7.1]# cat config/pipelines.yml #确认配置,引用的conf目录

# This file is where you define your pipelines. You can define multiple.

# For more information on multiple pipelines, see the documentation:

# https://www.elastic.co/guide/en/logstash/current/multiple-pipelines.html

- pipeline.id: main

path.config: "/usr/share/logstash/config/*.conf" #容器内的目录

pipeline.workers: 3

[root@localhost logstash6.7.1]# cat config/redis_out_es_in.conf #查看和确认配置

input {

redis {

host => "192.168.171.128"

port => "6379"

password => "123456"

db => "0"

data_type => "list"

key => "filebeat-common"

}

}

#默认target是@timestamp,所以time_local会更新@timestamp时间。下面filter的date插件作用: 当第一次收集或使用缓存写入时候,会发现入库时间比日志实际时间有延时,导致时间不准确,最好加入date插件,使得>入库时间和日志实际时间保持一致.

filter {

date {

locale => "en"

match => ["time_local", "dd/MMM/yyyy:HH:mm:ss Z"]

}

}

output {

if [fields][log_source] == 'system-171.130' {

elasticsearch {

hosts => ["192.168.171.128:9200"]

index => "logstash-system-171.130-log-%{+YYYY.MM.dd}"

}

}

if [fields][log_source] == 'system-171.131' {

elasticsearch {

hosts => ["192.168.171.128:9200"]

index => "logstash-system-171.131-log-%{+YYYY.MM.dd}"

}

}

if [fields][log_source] == 'catalina-log-171.130' {

elasticsearch {

hosts => ["192.168.171.128:9200"]

index => "logstash-catalina-171.130-log-%{+YYYY.MM.dd}"

}

}

if [fields][log_source] == 'catalina-log-171.131' {

elasticsearch {

hosts => ["192.168.171.128:9200"]

index => "logstash-catalina-171.131-log-%{+YYYY.MM.dd}"

}

}

if [fields][log_source] == 'es-log-171.130' {

elasticsearch {

hosts => ["192.168.171.128:9200"]

index => "logstash-es-log-171.130-%{+YYYY.MM.dd}"

}

}

if [fields][log_source] == 'es-log-171.131' {

elasticsearch {

hosts => ["192.168.171.128:9200"]

index => "logstash-es-log-171.131-%{+YYYY.MM.dd}"

}

}

if [fields][log_source] == 'tomcat-access-log-171.130' {

elasticsearch {

hosts => ["192.168.171.128:9200"]

index => "logstash-tomcat-access-171.130-log-%{+YYYY.MM.dd}"

}

}

if [fields][log_source] == 'tomcat-access-log-171.131' {

elasticsearch {

hosts => ["192.168.171.128:9200"]

index => "logstash-tomcat-access-171.131-log-%{+YYYY.MM.dd}"

}

}

stdout { codec=> rubydebug }

#codec=> rubydebug 调试使用,能将信息输出到控制台

}

[root@localhost logstash6.7.1]# cat scripts/run_logstash6.7.1.sh

#!/bin/bash

docker run -d --name logstash6.7.1 --net=host --restart=always -v /data/logstash6.7.1/config:/usr/share/logstash/config logstash:6.7.1

[root@localhost logstash6.7.1]# sh scripts/run_logstash6.7.1.sh #从redis读取日志,写入es

[root@localhost logstash6.7.1]# docker ps |grep logstash

980aefbc077e logstash:6.7.1 "/usr/local/bin/dock…" 9 seconds ago Up 7 seconds logstash6.7.1

到es集群查看,如下:

到redis查看,数据已经读取走,为空了:

[root@localhost ~]# docker exec -it redis4.0.10 bash

[root@localhost /]# redis-cli -a 123456

127.0.0.1:6379> KEYS *

(empty list or set)

127.0.0.1:6379> quit

5.docker安装kibana6.7.1(在192.168.171.132上)从es中读取日志展示出来

[root@localhost ~]# cd /data/

[root@localhost data]# ls kibana6.7.1.tar.gz

kibana6.7.1.tar.gz

[root@localhost data]# tar -zxf kibana6.7.1.tar.gz

[root@localhost data]# cd kibana6.7.1

[root@localhost kibana6.7.1]# ls

config image scripts

[root@localhost kibana6.7.1]# ls config/

kibana.yml

[root@localhost kibana6.7.1]# ls image/

kibana_6.7.1.tar

[root@localhost kibana6.7.1]# ls scripts/

run_kibana6.7.1.sh

[root@localhost kibana6.7.1]# docker load -i image/kibana_6.7.1.tar

[root@localhost kibana6.7.1]# docker images |grep kibana

kibana 6.7.1 860831fbf9e7 11 months ago 677MB

[root@localhost kibana6.7.1]# cat config/kibana.yml

#

# ** THIS IS AN AUTO-GENERATED FILE **

#

# Default Kibana configuration for docker target

server.name: kibana

server.host: "0"

elasticsearch.hosts: [ "http://192.168.171.128:9200" ]

xpack.monitoring.ui.container.elasticsearch.enabled: true

[root@localhost kibana6.7.1]# cat scripts/run_kibana6.7.1.sh

#!/bin/bash

docker run -d --name kibana6.7.1 --net=host --restart=always -v /data/kibana6.7.1/config/kibana.yml:/usr/share/kibana/config/kibana.yml kibana:6.7.1

[root@localhost kibana6.7.1]# sh scripts/run_kibana6.7.1.sh #运行,从es读取展示到kibana中

[root@localhost kibana6.7.1]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

bf16aaeaf4d9 kibana:6.7.1 "/usr/local/bin/kiba…" 16 seconds ago Up 15 seconds kibana6.7.1

[root@localhost kibana6.7.1]# netstat -anput |grep 5601 #kibana端口

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 2418/node

浏览器访问kibana: http://192.168.171.132:5601

kibana依次创建索引(尽量和es里索引名对应,方便查找)——查询和展示es里的数据

(1)先创建-*索引:logstash-catalina-* 点击management,如下:

输入索引名:logstash-catalina-*,点击下一步,如下:

选择时间戳: @timestamp,点击创建索引,如下:

(2)先创建-*索引:logstash-es-log-*

点击下一步,如下:

选择时间戳,点击创建索引,如下:

(3)创建-*索引:logstash-system-*

点击下一步,如下:

选择时间戳,点击创建索引,如下:

(4)创建-*索引:logstash-tomcat-access-*

点击下一步,如下:

点击创建索引,如下:

查看日志,点击discover,如下: #注意:由于之前测试访问日志量少,后面又多写了些日志,方便测试。

随便选择几个点击箭头,即可展开,如下:

如果对运维课程感兴趣,可以在b站上、A站或csdn上搜索我的账号: 运维实战课程,可以关注我,学习更多免费的运维实战技术视频

相关文章:

docker安装elk6.7.1-搜集java日志

docker安装elk6.7.1-搜集java日志 如果对运维课程感兴趣,可以在b站上、A站或csdn上搜索我的账号: 运维实战课程,可以关注我,学习更多免费的运维实战技术视频 0.规划 192.168.171.130 tomcat日志filebeat 192.168.171.131 …...

入门:基础概念与应用场景)

自然语言处理(NLP)入门:基础概念与应用场景

什么是自然语言处理(NLP)? 自然语言处理(Natural Language Processing, NLP)是人工智能(AI)的一个重要分支,研究如何让计算机理解、生成、分析和与人类语言进行交互。换句话说&…...

AI News(1/21/2025):OpenAI 安全疏忽:ChatGPT漏洞引发DDoS风险/OpenAI 代理工具即将发布

1、OpenAI 的安全疏忽:ChatGPT API 漏洞引发DDoS风险 德国安全研究员 Benjamin Flesch 发现了一个严重的安全漏洞:攻击者可以通过向 ChatGPT API 发送一个 HTTP 请求,利用 ChatGPT 的爬虫对目标网站发起 DDoS 攻击。该漏洞源于 OpenAI 在处理…...

Linux——包源管理工具

一、概要 Linux下的包/源管理命令:主要任务就是完成在Linux环境下的安装/卸载/维护软件。 1.rpm 是最基础的rpm包的安装命令,需要提前下载相关安装包和依赖包。 2.yum/dnf (最好用)是基于rpm包的自动安装命令,可以自动…...

C++解决走迷宫问题:DFS、BFS算法应用

文章目录 思路:DFSBFSBFS和DFS的特点BFS 与 DFS 的区别BFS 的优点BFS 时间复杂度深度优先搜索(DFS)的优点深度优先搜索(DFS)的时间复杂度解释:空间复杂度总结:例如下面的迷宫: // 迷宫的表示:0表示可以走,1表示障碍 vector<vector<int>> maze = {{0, 0,…...

机器学习09-Pytorch功能拆解

机器学习09-Pytorch功能拆解 我个人是Java程序员,关于Python代码的使用过程中的相关代码事项,在此进行记录 文章目录 机器学习09-Pytorch功能拆解1-核心逻辑脉络2-个人备注3-Pytorch软件包拆解1-Python有参和无参构造构造方法的基本语法示例解释注意事项…...

BLE透传方案,IoT短距无线通信的“中坚力量”

在物联网(IoT)短距无线通信生态系统中,低功耗蓝牙(BLE)数据透传是一种无需任何网络或基础设施即可完成双向通信的技术。其主要通过简单操作串口的方式进行无线数据传输,最高能满足2Mbps的数据传输速率&…...

Linux 中的poll、select和epoll有什么区别?

poll 和 select 是Linux 系统中用于多路复用 I/O 的系统调用,它们允许一个程序同时监视多个文件描述符,以便在任何一个文件描述符准备好进行 I/O 操作时得到通知。 一、select select 是一种较早的 I/O 多路复用机制,具有以下特点ÿ…...

单片机-STM32 WIFI模块--ESP8266 (十二)

1.WIFI模块--ESP8266 名字由来: Wi-Fi这个术语被人们普遍误以为是指无线保真(Wireless Fidelity),并且即便是Wi-Fi联盟本身也经常在新闻稿和文件中使用“Wireless Fidelity”这个词,Wi-Fi还出现在ITAA的一个论文中。…...

linux日志排查相关命令

实时查看日志 tail -f -n 100 文件名 -f:实时查看 -n:查看多少行 直接查看日志文件 .log文件 cat 文件名 .gz文件 zgcat 文件名 在日志文件搜索指定内容 .log文件 grep -A 3 “呀1” 文件名 -A:向后查看 3:向后查看行数 “呀1”:搜…...

每日一题-二叉搜索树与双向链表

将二叉搜索树转化为排序双向链表 问题描述 输入一棵二叉搜索树,将该二叉搜索树转换成一个排序的双向链表,要求空间复杂度为 O(1),时间复杂度为 O(n),并且不能创建新的结点,只能调整树中结点的指针指向。 数据范围 …...

【多视图学习】Self-Weighted Contrastive Fusion for Deep Multi-View Clustering

Self-Weighted Contrastive Fusion for Deep Multi-View Clustering 用于深度多视图聚类的自加权对比融合 TMM 2024 代码链接 论文链接 0.摘要 多视图聚类可以从多个视图中探索共识信息,在过去二十年中越来越受到关注。然而,现有的工作面临两个主要挑…...

ASK-HAR:多尺度特征提取的深度学习模型

一、探索多尺度特征提取方法 在近年来,随着智能家居智能系统和传感技术的快速发展,人类活动识别(HAR)技术已经成为一个备受瞩目的研究领域。HAR技术的核心在于通过各种跟踪设备和测量手段,如传感器和摄像头࿰…...

C语言:数据的存储

本文重点: 1. 数据类型详细介绍 2. 整形在内存中的存储:原码、反码、补码 3. 大小端字节序介绍及判断 4. 浮点型在内存中的存储解析 数据类型结构的介绍: 类型的基本归类: 整型家族 浮点家族 构造类型: 指针类型&…...

深入理解动态规划(dp)--(提前要对dfs有了解)

前言:对于动态规划:该算法思维是在dfs基础上演化发展来的,所以我不想讲的是看到一个题怎样直接用动态规划来解决,而是说先用dfs搜索,一步步优化,这个过程叫做动态规划。(该文章教你怎样一步步的…...

)

单片机基础模块学习——数码管(二)

一、数码管模块代码 这部分包括将数码管想要显示的字符转换成对应段码的函数,另外还包括数码管显示函数 值得注意的是对于小数点和不显示部分的处理方式 由于小数点没有单独占一位,所以这里用到了两个变量i,j用于跳过小数点导致的占据其他字符显示在数…...

【大数据】机器学习----------强化学习机器学习阶段尾声

一、强化学习的基本概念 注: 圈图与折线图引用知乎博主斜杠青年 1. 任务与奖赏 任务:强化学习的目标是让智能体(agent)在一个环境(environment)中采取一系列行动(actions)以完成一个…...

flink写parquet解决timestamp时间格式字段问题

背景 Apache Parquet 是一种开源的列式数据文件格式,旨在实现高效的数据存储和检索。它提供高性能压缩和编码方案(encoding schemes)来批量处理复杂数据,并且受到许多编程语言和分析工具的支持。 在我们通过flink写入parquet文件的时候,会遇到timestamp时间格式写入的问题。…...

redis实现lamp架构缓存

redis服务器环境下mysql实现lamp架构缓存 ip角色环境192.168.242.49缓存服务器Redis2.2.7192.168.242.50mysql服务器mysql192.168.242.51web端php ***默认已安装好redis,mysql 三台服务器时间同步(非常重要) # 下载ntpdate yum -y install…...

正则表达式中常见的贪婪词

1. * 含义:匹配前面的元素零次或者多次。示例:对于正则表达式 a*,在字符串 "aaaa" 中,它会匹配整个 "aaaa",因为它会尽可能多地匹配 a 字符。代码示例(Python):…...

QMC5883L的驱动

简介 本篇文章的代码已经上传到了github上面,开源代码 作为一个电子罗盘模块,我们可以通过I2C从中获取偏航角yaw,相对于六轴陀螺仪的yaw,qmc5883l几乎不会零飘并且成本较低。 参考资料 QMC5883L磁场传感器驱动 QMC5883L磁力计…...

)

IGP(Interior Gateway Protocol,内部网关协议)

IGP(Interior Gateway Protocol,内部网关协议) 是一种用于在一个自治系统(AS)内部传递路由信息的路由协议,主要用于在一个组织或机构的内部网络中决定数据包的最佳路径。与用于自治系统之间通信的 EGP&…...

第25节 Node.js 断言测试

Node.js的assert模块主要用于编写程序的单元测试时使用,通过断言可以提早发现和排查出错误。 稳定性: 5 - 锁定 这个模块可用于应用的单元测试,通过 require(assert) 可以使用这个模块。 assert.fail(actual, expected, message, operator) 使用参数…...

[10-3]软件I2C读写MPU6050 江协科技学习笔记(16个知识点)

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16...

Psychopy音频的使用

Psychopy音频的使用 本文主要解决以下问题: 指定音频引擎与设备;播放音频文件 本文所使用的环境: Python3.10 numpy2.2.6 psychopy2025.1.1 psychtoolbox3.0.19.14 一、音频配置 Psychopy文档链接为Sound - for audio playback — Psy…...

Mysql中select查询语句的执行过程

目录 1、介绍 1.1、组件介绍 1.2、Sql执行顺序 2、执行流程 2.1. 连接与认证 2.2. 查询缓存 2.3. 语法解析(Parser) 2.4、执行sql 1. 预处理(Preprocessor) 2. 查询优化器(Optimizer) 3. 执行器…...

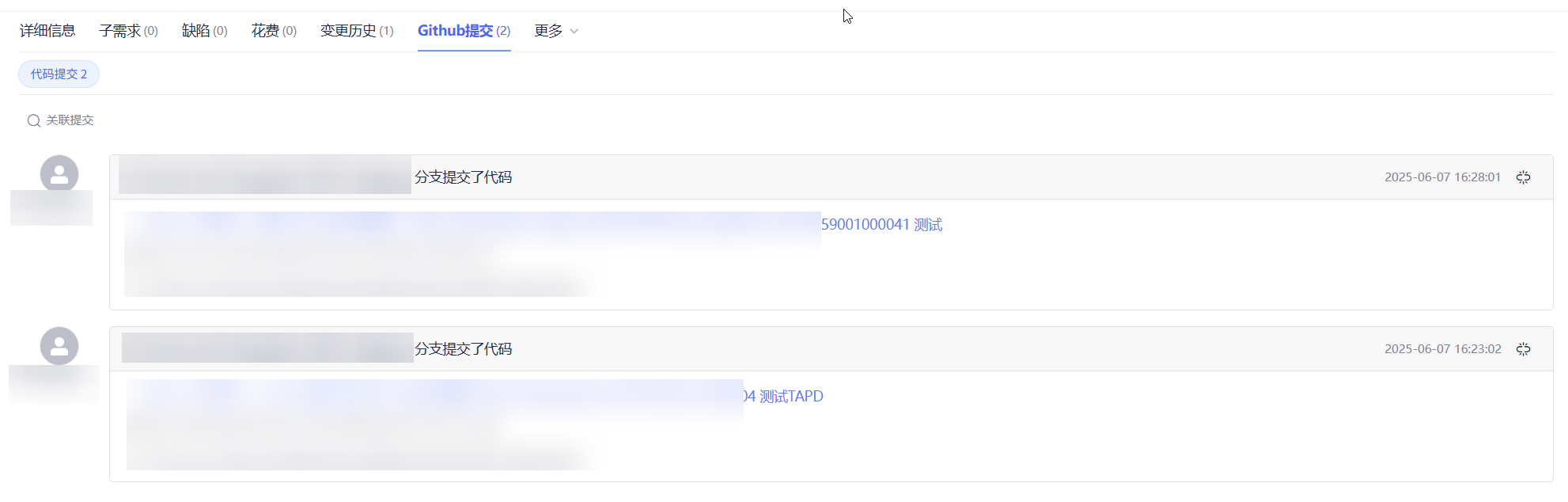

基于 TAPD 进行项目管理

起因 自己写了个小工具,仓库用的Github。之前在用markdown进行需求管理,现在随着功能的增加,感觉有点难以管理了,所以用TAPD这个工具进行需求、Bug管理。 操作流程 注册 TAPD,需要提供一个企业名新建一个项目&#…...

如何更改默认 Crontab 编辑器 ?

在 Linux 领域中,crontab 是您可能经常遇到的一个术语。这个实用程序在类 unix 操作系统上可用,用于调度在预定义时间和间隔自动执行的任务。这对管理员和高级用户非常有益,允许他们自动执行各种系统任务。 编辑 Crontab 文件通常使用文本编…...

【JavaSE】多线程基础学习笔记

多线程基础 -线程相关概念 程序(Program) 是为完成特定任务、用某种语言编写的一组指令的集合简单的说:就是我们写的代码 进程 进程是指运行中的程序,比如我们使用QQ,就启动了一个进程,操作系统就会为该进程分配内存…...

Go语言多线程问题

打印零与奇偶数(leetcode 1116) 方法1:使用互斥锁和条件变量 package mainimport ("fmt""sync" )type ZeroEvenOdd struct {n intzeroMutex sync.MutexevenMutex sync.MutexoddMutex sync.Mutexcurrent int…...