Kubernetes实战(九)-kubeadm安装k8s集群

1 环境准备

1.1 主机信息

| ip | hostname |

| 10.220.43.203 | master |

| 10.220.43.204 | node1 |

1.2 系统信息

$ cat /etc/redhat-release

Alibaba Cloud Linux (Aliyun Linux) release 2.1903 LTS (Hunting Beagle)2 部署准备

master/与slave主机均需要设置。

2.1 设置主机名

# master

hostnamectl set-hostname master# slave

hostnamectl set-hostname slave2.2 设置hosts

$ vim /etc/hosts

#添加如下内容:

10.220.43.203 master

10.220.43.204 slave

#保存退出,重新登录主机2.3 网络配置

# 桥接设置(master/node)$ cat > /etc/sysctl.d/k8s.conf << EOF

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

$ sysctl --system3 安装部署

master/slave均安装

3.1 安装docker

docker二进制安装参考:docker部署及常用命令-CSDN博客

3.2 配置kubernetes加速yum源

为kubernetes添加国内阿里云YUM软件源。

$ cat > /etc/yum.repos.d/kubernetes.repo << EOF

[k8s]

name=k8s

enabled=1

gpgcheck=0

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

EOF3.3 安装kubeadm/kubelet/kubectl

#版本可以选择自己要安装的版本号

$ yum install -y kubelet-1.25.0 kubectl-1.25.0 kubeadm-1.25.0

# 此时,还不能启动kubelet,因为此时配置还不能,现在仅仅可以设置开机自启动

$ systemctl enable kubelet3.4 安装容器运行时

如果k8s版本低于1.24版,可以忽略此步骤。

由于1.24版本不能直接兼容docker引擎,

Docker Engine 没有实现 CRI, 而这是容器运行时在 Kubernetes 中工作所需要的。 为此,必须安装一个额外的服务cri-dockerd。 cri-dockerd 是一个基于传统的内置 Docker 引擎支持的项目, 它在 1.24 版本从 kubelet 中移除。

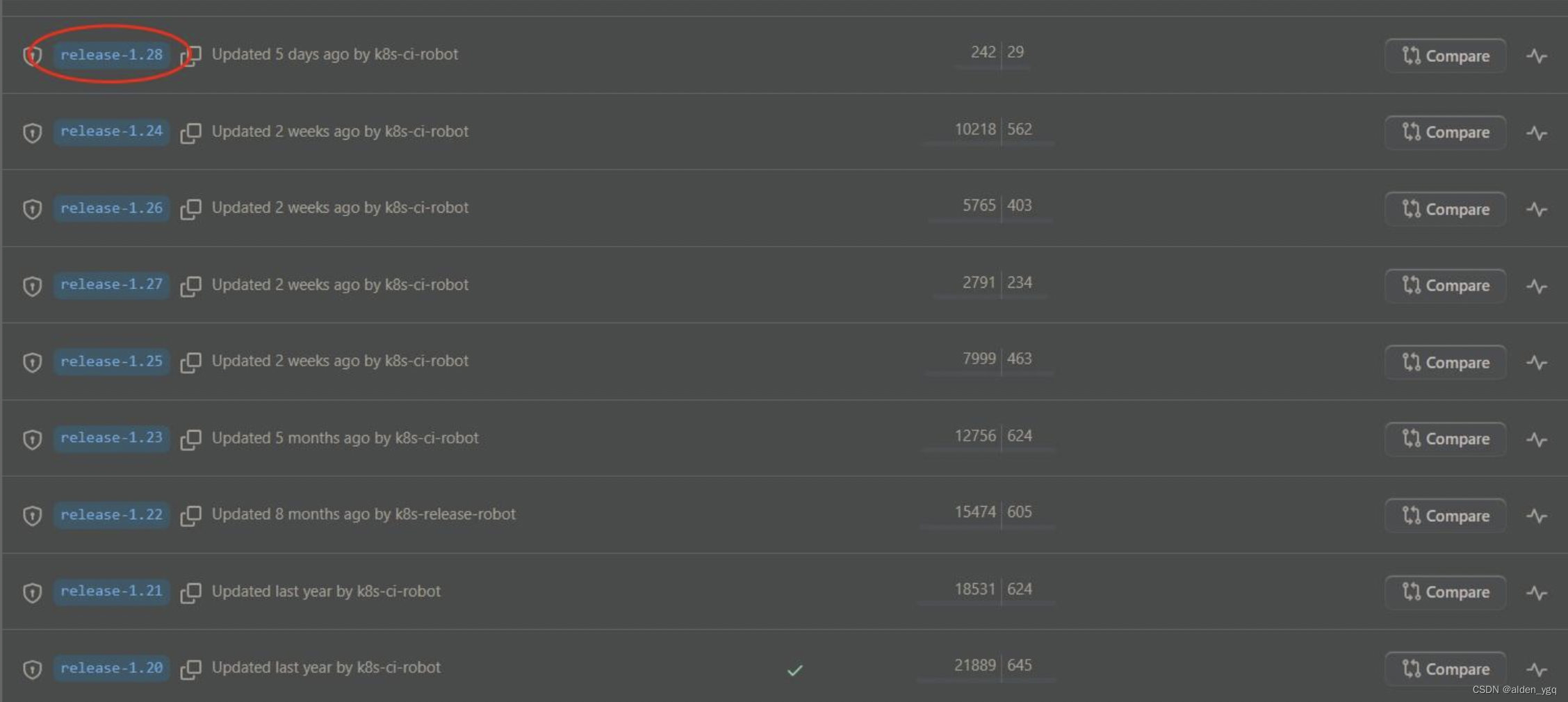

目前最新k8s版本为1.28.x。

需要在集群内每个节点上安装一个容器运行时以使Pod可以运行在上面。高版本Kubernetes要求使用符合容器运行时接口(CRI)的运行时。

以下是几款 Kubernetes 中几个常见的容器运行时的用法:

- containerd

- CRI-O

- Docker Engine

- Mirantis Container Runtime

以下是使用 cri-dockerd 适配器来将 Docker Engine 与 Kubernetes 集成。

3.4.1 安装cri-dockerd

$ wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.2.6/cri-dockerd-0.2.6.amd64.tgz

$ tar -xf cri-dockerd-0.2.6.amd64.tgz

$ cp cri-dockerd/cri-dockerd /usr/bin/

$ chmod +x /usr/bin/cri-dockerd3.4.2 配置启动服务

$ cat <<"EOF" > /usr/lib/systemd/system/cri-docker.service

> [Unit]

> Description=CRI Interface for Docker Application Container Engine

> Documentation=https://docs.mirantis.com

> After=network-online.target firewalld.service docker.service

> Wants=network-online.target

> Requires=cri-docker.socket

> [Service]

> Type=notify

> ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8

> ExecReload=/bin/kill -s HUP $MAINPID

> TimeoutSec=0

> RestartSec=2

> Restart=always

> StartLimitBurst=3

> StartLimitInterval=60s

> LimitNOFILE=infinity

> LimitNPROC=infinity

> LimitCORE=infinity

> TasksMax=infinity

> Delegate=yes

> KillMode=process

> [Install]

> WantedBy=multi-user.target

> EOF主要是以下命令:ExecStart=/usr/bin/cri-dockerd --network-plugin=cni --pod-infra-container-image=http://registry.aliyuncs.com/google_containers/pause:3.8

pause容器的版本可以通过kubeadm config images list查看:

$ kubeadm config images list

W1210 17:27:44.009895 31608 version.go:104] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get "https://cdn.dl.k8s.io/release/stable-1.txt": context deadline exceeded (Client.Timeout exceeded while awaiting headers)

W1210 17:27:44.009935 31608 version.go:105] falling back to the local client version: v1.25.0

registry.k8s.io/kube-apiserver:v1.25.0

registry.k8s.io/kube-controller-manager:v1.25.0

registry.k8s.io/kube-scheduler:v1.25.0

registry.k8s.io/kube-proxy:v1.25.0

registry.k8s.io/pause:3.8

registry.k8s.io/etcd:3.5.4-0

registry.k8s.io/coredns/coredns:v1.9.33.4.3 ⽣成 socket ⽂件

$ cat <<"EOF" > /usr/lib/systemd/system/cri-docker.socket

[Unit]

Description=CRI Docker Socket for the API

PartOf=cri-docker.service

[Socket]

ListenStream=%t/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF3.4.4 启动 cri-docker 服务并配置开机启动

$ systemctl daemon-reload

$ systemctl enable cri-docker

$ systemctl start cri-docker

$ systemctl is-active cri-docker3.5 部署Kubernetes

master需要部署 ,slave node节点不需要执行kubeadm init。

创建kubeadm.yaml文件,内容如下:

kubeadm init \

--apiserver-advertise-address=10.220.43.203 \

--image-repository registry.aliyuncs.com/google_containers \

--kubernetes-version v1.25.0 \

--service-cidr=192.168.0.0/16 \

--pod-network-cidr=172.25.0.0/16 \

--ignore-preflight-errors=all \

--cri-socket unix:///var/run/cri-dockerd.sock- --apiserver-advertise-address=master节点IP

- --pod-network-cidr=10.244.0.0/16,要与后面kube-flannel.yml里的ip一致也就是使用10.244.0.0/16不要改它。

输出:

[init] Using Kubernetes version: v1.25.0

[preflight] Running pre-flight checks[WARNING CRI]: container runtime is not running: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 runtime API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService"

, error: exit status 1

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-apiserver:v1.25.0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-controller-manager:v1.25.0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-scheduler:v1.25.0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/kube-proxy:v1.25.0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/pause:3.8: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/etcd:3.5.4-0: output: time="2023-12-10T17:38:57+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1[WARNING ImagePull]: failed to pull image registry.aliyuncs.com/google_containers/coredns:v1.9.3: output: time="2023-12-10T17:38:58+08:00" level=fatal msg="validate service connection: CRI v1 image API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.ImageService"

, error: exit status 1

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [192.168.0.1 10.220.43.203]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [localhost master] and IPs [10.220.43.203 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [localhost master] and IPs [10.220.43.203 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 28.001898 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the labels: [node-role.kubernetes.io/control-plane node.kubernetes.io/exclude-from-external-load-balancers]

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/control-plane:NoSchedule]

[bootstrap-token] Using token: 3u2q8d.u899qmv8lsm7sxyz

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] Configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] Configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxyYour Kubernetes control-plane has initialized successfully!To start using your cluster, you need to run the following as a regular user:mkdir -p $HOME/.kubesudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/configsudo chown $(id -u):$(id -g) $HOME/.kube/configAlternatively, if you are the root user, you can run:export KUBECONFIG=/etc/kubernetes/admin.confYou should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:https://kubernetes.io/docs/concepts/cluster-administration/addons/Then you can join any number of worker nodes by running the following on each as root:kubeadm join 10.220.43.203:6443 --token 3u2q8d.u899qmv8lsm7sxyz \--discovery-token-ca-cert-hash sha256:d7b2a47417fbff13e11a50ae92aaa0666448a92eb4c8deaaae9e9aa5c0cbc930 这里是通过kubeadm init安装,所以执行后会下载相应的docker镜像,一般会发现在控制台卡着不动很久,这时就是在下载镜像,可以使用docker images命令查看是不是有新的镜像增加。

3.6 测试kubectl工具

master/slave均执行。

kubeadm安装好后,控制台也会有提示执行以下命令,照着执行(也就是第11步最后控制台输出的)。

3.6.1 配置kubeconfig

master执行。

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

$ scp /etc/kubernetes/admin.conf 10.220.43.204:/etc/kubernetes

root@10.220.43.204's password:

admin.conf 100% 5641 19.2MB/s 00:00 slave执行。

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config3.6.2 配置变量

$ vim /etc/profile

#加入以下变量

export KUBECONFIG=/etc/kubernetes/admin.conf

$ source /etc/profile3.6.3 测试kubectl命令

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master NotReady control-plane 21m v1.25.0 10.220.43.203 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.21

一般来说状态先会是NotReady ,可能程序还在启动中,过一会再看看就会变成Ready

3.7 安装网络插件

常用的cni网络插件有calico和flannel,两者区别为:

- flannel不支持复杂的网络策略

- calico支持网络策略

3.7.1 安装Pod CNI网络插件flannel

master/slave均执行

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created报错:The connection to the server http://raw.githubusercontent.com was refused - did you specify the right host or port?

原因:国外资源访问不了

解决办法:host配置可以访问的ip

vim /etc/hosts

#在/etc/hosts增加以下这条

199.232.28.133 raw.githubusercontent.com重新执行上面命令,便可成功安装!

3.7.2 部署Pod CNI网络插件calico

官网:About Calico | Calico Documentation

3.7.2.1 下载calico.yaml文件

$ curl https://raw.githubusercontent.com/projectcalico/calico/v3.26.1/manifests/calico.yaml -O3.7.2.2 拉取calico镜像

$ grep -w image calico.yaml| uniq image: docker.io/calico/cni:v3.26.1image: docker.io/calico/node:v3.26.1image: docker.io/calico/kube-controllers:v3.26.1

$ docker pull docker.io/calico/cni:v3.26.1

$ docker pull docker.io/calico/node:v3.26.1

$ docker pull docker.io/calico/kube-controllers:v3.26.13.7.2.3 修改calico网段信息

修改calico.yaml 文件中CALICO_IPV4POOL_CIDR的IP段要和kubeadm初始化时候的pod网段一致,注意格式要对齐,不然会报错。

$ vim calico.yaml - name: CALICO_IPV4POOL_CIDRvalue: "172.16.0.0/16"3.7.2.4 加载calico.yaml文件

$ kubectl apply -f calico.yaml

poddisruptionbudget.policy/calico-kube-controllers configured

serviceaccount/calico-kube-controllers unchanged

serviceaccount/calico-node unchanged

serviceaccount/calico-cni-plugin unchanged

configmap/calico-config unchanged

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org configured

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org configured

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrole.rbac.authorization.k8s.io/calico-node unchanged

clusterrole.rbac.authorization.k8s.io/calico-cni-plugin unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-node unchanged

clusterrolebinding.rbac.authorization.k8s.io/calico-cni-plugin unchanged3.8 slave节点加入master

此步骤需要用到第3.5 部署Kubernetes控制台输出内容:

kubeadm join 10.220.43.203:6443 --token 3u2q8d.u899qmv8lsm7sxyz \--discovery-token-ca-cert-hash sha256:d7b2a47417fbff13e11a50ae92aaa0666448a92eb4c8deaaae9e9aa5c0cbc930 加入命令为:

kubeadm join 10.220.43.203:6443 --token 3u2q8d.u899qmv8lsm7sxyz \--discovery-token-ca-cert-hash sha256:d7b2a47417fbff13e11a50ae92aaa0666448a92eb4c8deaaae9e9aa5c0cbc930 \--ignore-preflight-errors=all \

--cri-socket unix:///var/run/cri-dockerd.sock- --ignore-preflight-errors=all

- --cri-socket unix:///var/run/cri-dockerd.sock

这两行一定要加上不然就会报各种错:

[preflight] Running pre-flight checks

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR CRI]: container runtime is not running: output: time="2023-08-31T16:42:23+08:00" level=fatal msg="validate service connection: CRI v1 runtime API is not implemented for endpoint \"unix:///var/run/cri-dockerd.sock\": rpc error: code = Unimplemented desc = unknown service runtime.v1.RuntimeService"

, error: exit status 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

To see the stack trace of this error execute with --v=5 or higher

Found multiple CRI endpoints on the host. Please define which one do you wish to use by setting the 'criSocket' field in the kubeadm configuration file: unix:///var/run/containerd/containerd.sock, unix:///var/run/cri-dockerd.sock

To see the stack trace of this error execute with --v=5 or higher

3.9 验证

master节点:

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready control-plane 49m v1.25.0 10.220.43.203 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.21

slave Ready <none> 10m v1.25.0 10.220.43.204 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.21slavea节点:

$ kubectl get nodes -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

master Ready control-plane 50m v1.25.0 10.220.43.203 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.21

slave Ready <none> 11m v1.25.0 10.220.43.204 <none> Alibaba Cloud Linux (Aliyun Linux) 2.1903 LTS (Hunting Beagle) 4.19.91-27.6.al7.x86_64 docker://20.10.214 常见使用问题

4.1 K8S在kubeadm init后,没有记录kubeadm join如何查询?

#再生成一个token即可

kubeadm token create --print-join-command

#下在的命令可以查看历史的token

kubeadm token list4.2 node节点kubeadm join失败后,要重新join怎么办?

#再生成一个token即可

kubeadm token create --print-join-command

#下在的命令可以查看历史的token

kubeadm token list4.3 重启kubelet

systemctl daemon-reload

systemctl restart kubelet4.4 查询系统组件

#查询节点

kubectl get nodes

#查询pods 一般要带上"-n"即命名空间。不带等同 -n dafault

kubectl get pods -n kube-system5 异常问题处理

5.1 kubeadm init报错

[root@k8s centos]# kubeadm init

I1205 06:44:01.459391 12097 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL "https://dl.k8s.io/release/stable-1.txt": Get https://dl.k8s.io/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

I1205 06:44:01.459549 12097 version.go:95] falling back to the local client version: v1.13.0

[init] Using Kubernetes version: v1.13.0

[preflight] Running pre-flight checks[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'[WARNING Hostname]: hostname "k8s.novalocal" could not be reached[WARNING Hostname]: hostname "k8s.novalocal": lookup k8s.novalocal on 10.32.148.99:53: no such host[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'

error execution phase preflight: [preflight] Some fatal errors occurred:[ERROR FileContent--proc-sys-net-bridge-bridge-nf-call-iptables]: /proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 1

[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`5.1.1 网络设置问题

5.1.1.1 错误内容

/proc/sys/net/bridge/bridge-nf-call-iptables contents are not set to 15.1.1.2 解决方法

$ echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables5.1.2 Enable docker

5.1.2.1 错误内容

[WARNING Service-Docker]: docker service is not enabled, please run 'systemctl enable docker.service'5.1.2.2 解决方法

$ systemctl enable docker.service5.1.3 hostname问题

5.1.3.1 错误内容

[WARNING Hostname]: hostname "slave" could not be reached

[WARNING Hostname]: hostname "slave": lookup slave on 10.32.148.99:53: no such host5.1.3.2 解决方法

1)修改主机名

$ hostnamectl set-hostname slave2)更改/etc/hostname

$ echo k8s > /etc/hostname5.1.4 Enable kubelet

5.1.4.1 错误内容

[WARNING Service-Kubelet]: kubelet service is not enabled, please run 'systemctl enable kubelet.service'5.1.4.2 错误内容

$ systemctl enable kubelet.service6 配置kubectl命令tab键自动补全

$ kubectl --help | grep bashcompletion Output shell completion code for the specified shell (bash or zsh)添加source <(kubectl completion bash)到/etc/profile,并使配置生效:

$ cat /etc/profile | head -2

# /etc/profile

source <(kubectl completion bash)$ source /etc/profile验证kubectl是否可以自动补全。

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

ops-master-1 Ready control-plane,master 33m v1.21.0

ops-worker-1 Ready <none> 30m v1.21.0

ops-worker-2 Ready <none> 30m v1.21.0#注意:需要bash-completion-2.1-6.el7.noarch包,不然不能自动补全命令

$ rpm -qa | grep bash bash-completion-2.1-6.el7.noarch bash-4.2.46-30.el7.x86_64 bash-doc-4.2.46-30.el7.x86_64

相关文章:

Kubernetes实战(九)-kubeadm安装k8s集群

1 环境准备 1.1 主机信息 iphostname10.220.43.203master10.220.43.204node1 1.2 系统信息 $ cat /etc/redhat-release Alibaba Cloud Linux (Aliyun Linux) release 2.1903 LTS (Hunting Beagle) 2 部署准备 master/与slave主机均需要设置。 2.1 设置主机名 # master h…...

【HarmonyOS开发】拖拽动画的实现

动画的原理是在一个时间段内,多次改变UI外观,由于人眼会产生视觉暂留,所以最终看到的就是一个“连续”的动画。UI的一次改变称为一个动画帧,对应一次屏幕刷新,而决定动画流畅度的一个重要指标就是帧率FPS(F…...

提高问卷填写率的策略与方法

在现代社会的研究中,问卷调研是一种常见的数据收集方式。但是,随着数据的快速传播和竞争激烈的市场环境,怎样吸引大量的人填好问卷成为了科研人员关心的问题。本文将介绍一些方式和策略,以帮助你吸引大量的人填好问卷,…...

软件工程考试复习

第一章、软件工程概述 🌟软件程序数据文档(考点) 🌟计算机程序及其说明程序的各种文档称为 ( 文件 ) 。计算任务的处理对象和处理规则的描述称为 ( 程序 )。有关计算机程序功能、…...

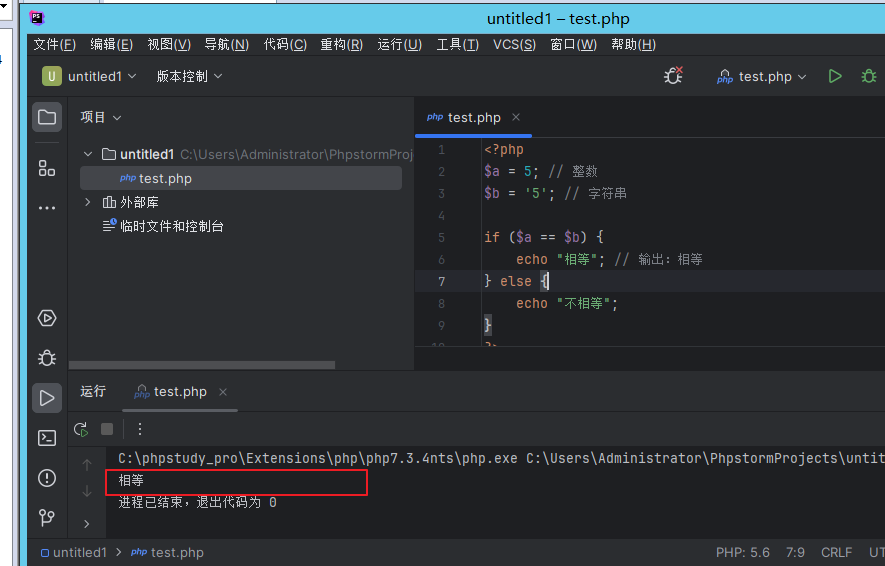

PHP基础 - 类型比较

在 PHP 中,作为一种弱类型语言,它提供了松散比较和严格比较两种方式来比较变量的值和类型。 松散比较: 使用两个等号(==)进行比较,只会比较变量的值,而不会考虑它们的数据类型。例如: $a = 5; // 整数 $b = 5; // 字符串if ($a == $b) {echo "相等"; // 输…...

张正友相机标定法原理与实现

张正友相机标定法是张正友教授1998年提出的单平面棋盘格的相机标定方法。传统标定法的标定板是需要三维的,需要非常精确,这很难制作,而张正友教授提出的方法介于传统标定法和自标定法之间,但克服了传统标定法需要的高精度标定物的缺点,而仅需使用一个打印出来的棋盘格就可…...

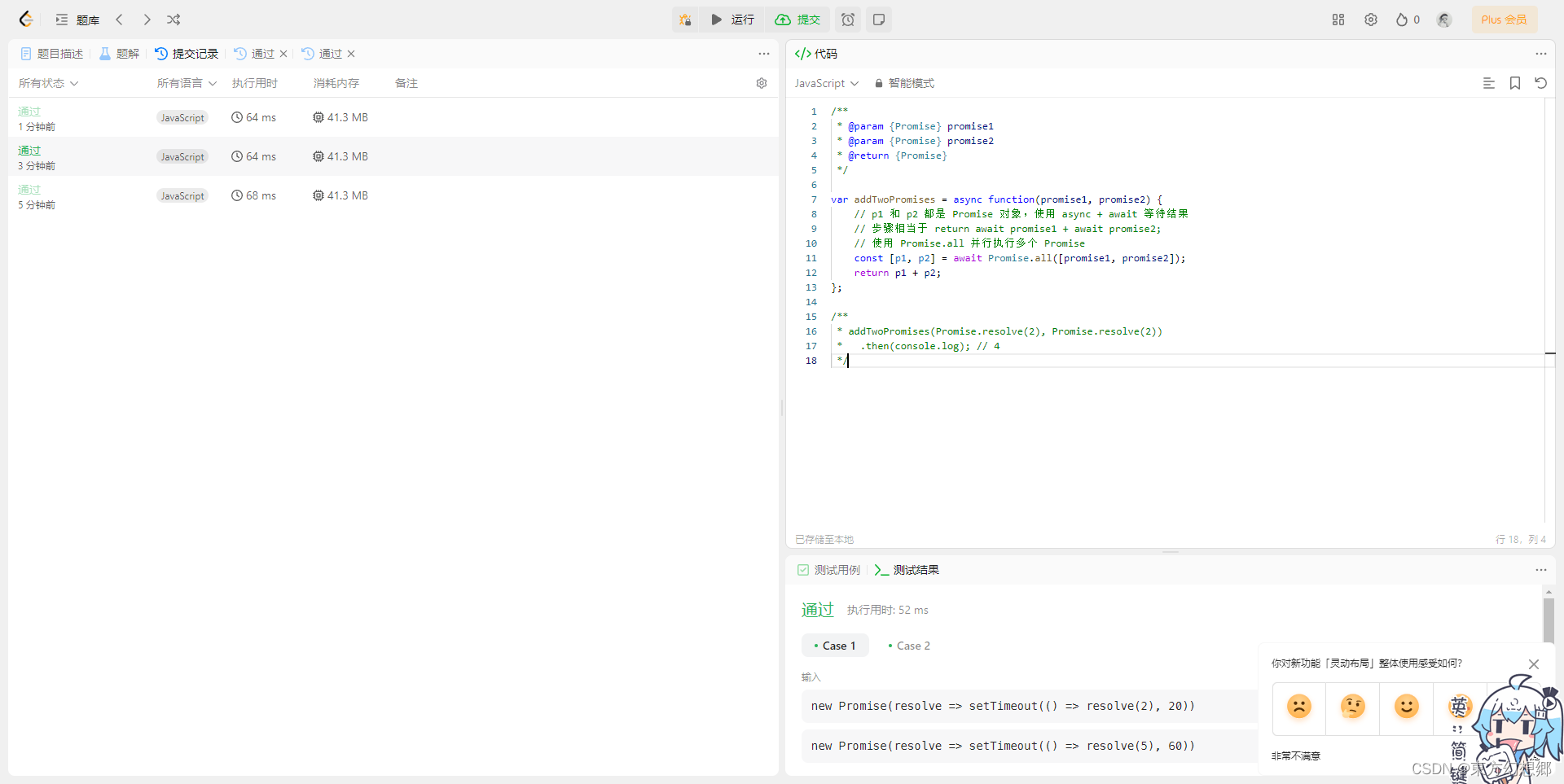

【LeetCode】2723. 两个 Promise 对象相加

两个 Promise 对象相加 题目题解 题目 给定两个 promise 对象 promise1 和 promise2,返回一个新的 promise。promise1 和 promise2 都会被解析为一个数字。返回的 Promise 应该解析为这两个数字的和。 示例 1: 输入: promise1 new Promise…...

设计模式--命令模式的简单例子

引入:以一个对数组的增删改查为例。通过命令模式可以对数组进行增删改查以及撤销回滚。 一、基本概念 命令模式有多种分法,在本文中主要分为CommandMgr、Command、Receiver. CommandMgr主要用于控制命令执行等操作、Command为具体的命令、Receiver为命…...

排序算法之六:快速排序(非递归)

快速排序是非常适合使用递归的,但是同时我们也要掌握非递归的算法 因为操作系统的栈空间很小,如果递归的深度太深,容易造成栈溢出 递归改非递归一般有两种改法: 改循环借助栈(数据结构) 图示算法 不是…...

【概率方法】重要性采样

从一个极简分布出发 假设我们有一个关于随机变量 X X X 的函数 f ( X ) f(X) f(X),满足如下分布 p ( X ) p(X) p(X)0.90.1 f ( X ) f(X) f(X)0.10.9 如果我们要对 f ( X ) f(X) f(X) 的期望 E p [ f ( X ) ] \mathbb{E}_p[f(X)] Ep[f(X)] 进行估计࿰…...

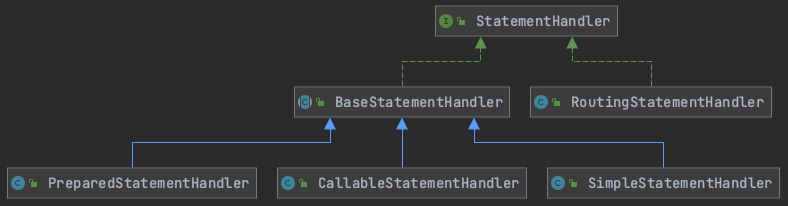

MyBatis 四大核心组件之 StatementHandler 源码解析

🚀 作者主页: 有来技术 🔥 开源项目: youlai-mall 🍃 vue3-element-admin 🍃 youlai-boot 🌺 仓库主页: Gitee 💫 Github 💫 GitCode 💖 欢迎点赞…...

用Guava做本地缓存示例

缓存的作用 提升系统性能,暂时在内存中保存业务系统的数据处理结果,并且等待下次访问使用 本地缓存和分布式缓存 缓存分为本地缓存与分布式缓存。本地缓存为了保证线程安全问题,一般使用ConcurrentMap的方式保存在内存之中,而常…...

Django多对多ManyToManyField字段

Django是一个支持多对多关系的Web框架,可以在模型中定义多对多关系。多对多关系通常涉及两个实体之间的复杂交互,例如用户和组之间的关系,或者课程和学生之间的关系。在Django中,可以使用ManyToManyField字段来定义多对多关系。 …...

docker-centos中基于keepalived+niginx模拟主从热备完整过程

文章目录 一、环境准备二、主机1、环境搭建1.1 镜像拉取1.2 创建网桥1.3 启动容器1.4 配置镜像源1.5 下载工具包1.6 下载keepalived1.7 下载nginx 2、配置2.1 配置keepalived2.2 配置nginx2.2.1 查看nginx.conf2.2.2 修改index.html 3、启动3.1 启动nginx3.2 启动keepalived 4、…...

软件科技成果鉴定测试需提供哪些材料?

为了有效评估科技成果的质量,促进科技理论向实际应用转化,所以需要进行科技成果鉴定测试。申请鉴定的科技成果范围是指列入国家和省、自治区、直辖市以及国务院有关部门科技计划内的应用技术成果,以及少数科技计划外的重大应用技术成果。 …...

办公word-从不是第一页添加页码

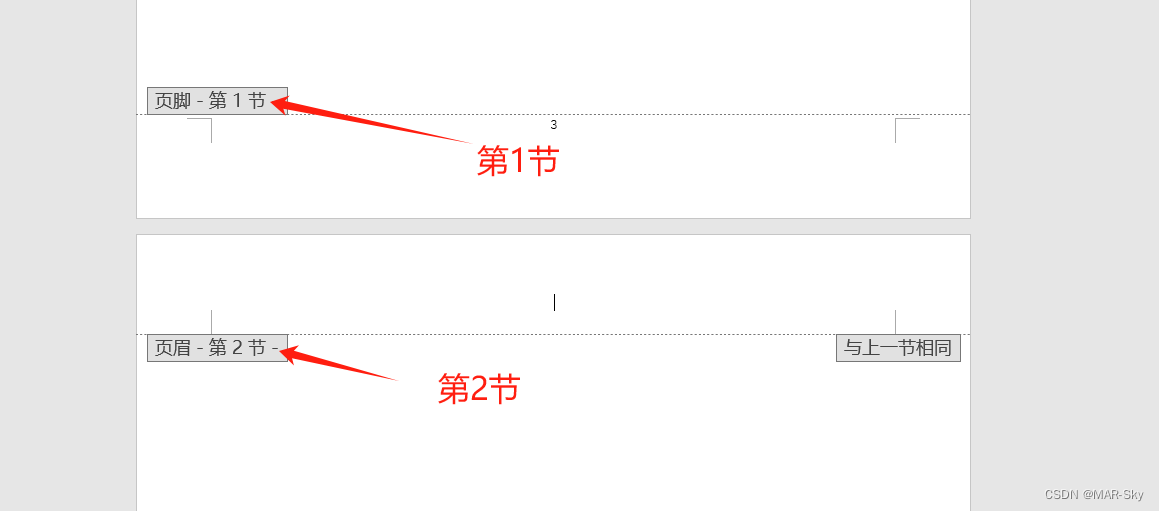

总结 实际需要注意的是,分隔符、分节符和分页符并不是一个含义 分隔符包含其他两个;分页符:是增加一页;分节符:指将文档分为几部分。 从不是第一页插入页码1步骤 1,插入默认页码 自己可以测试时通过**…...

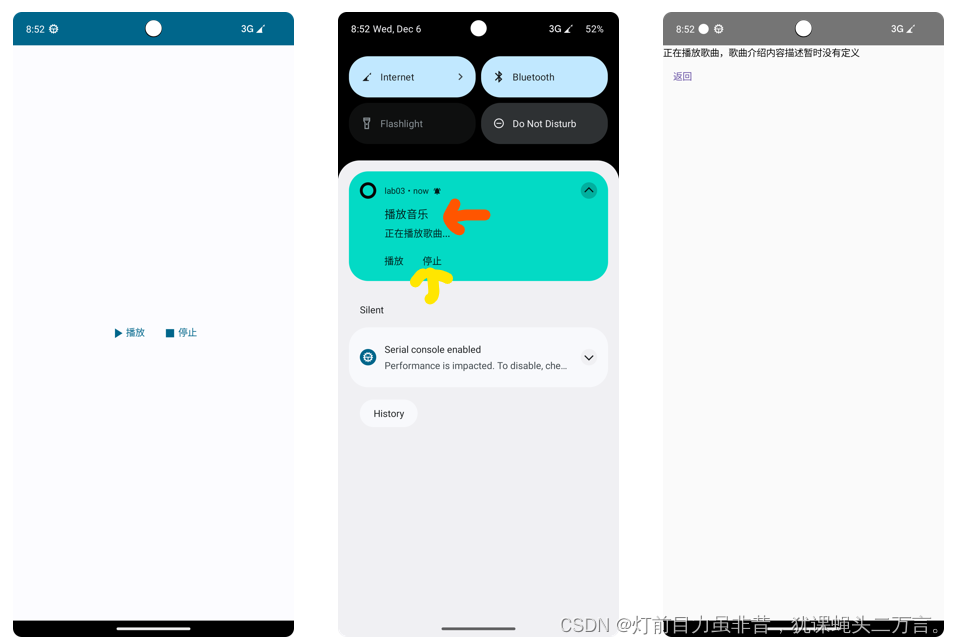

Android笔记(十七):PendingIntent简介

PendingIntent翻译成中文为“待定意图”,这个翻译很好地表示了它的涵义。PendingIntent描述了封装Intent意图以及该意图要执行的目标操作。PendingIntent封装Intent的目标行为的执行是必须满足一定条件,只有条件满足,才会触发意图的目标操作。…...

为 Compose MultiPlatform 添加 C/C++ 支持(2):在 jvm 平台使用 jni 实现桌面端与 C/C++ 互操作

前言 在上篇文章中我们已经介绍了实现 Compose MultiPlatform 对 C/C 互操作的基本思路。 并且先介绍了在 kotlin native 平台使用 cinterop 实现与 C/C 的互操作。 今天这篇文章将补充在 jvm 平台使用 jni。 在 Compose MultiPlatform 中,使用 jvm 平台的是 An…...

【PyTorch】卷积神经网络

文章目录 1. 理论介绍1.1. 从全连接层到卷积层1.1.1. 背景1.1.2. 从全连接层推导出卷积层 1.2. 卷积层1.2.1. 图像卷积1.2.2. 填充和步幅1.2.3. 多通道 1.3. 池化层(又称汇聚层)1.3.1. 背景1.3.2. 池化运算1.3.3. 填充和步幅1.3.4. 多通道 1.4. 卷积神经…...

qt可以详细写的项目或技术

1.QT 图形视图框架 2.QT 模型视图结构 3.QT列表显示大量信息 4.QT播放器 5.QT 编解码 6.QT opencv...

UE5 学习系列(二)用户操作界面及介绍

这篇博客是 UE5 学习系列博客的第二篇,在第一篇的基础上展开这篇内容。博客参考的 B 站视频资料和第一篇的链接如下: 【Note】:如果你已经完成安装等操作,可以只执行第一篇博客中 2. 新建一个空白游戏项目 章节操作,重…...

地震勘探——干扰波识别、井中地震时距曲线特点

目录 干扰波识别反射波地震勘探的干扰波 井中地震时距曲线特点 干扰波识别 有效波:可以用来解决所提出的地质任务的波;干扰波:所有妨碍辨认、追踪有效波的其他波。 地震勘探中,有效波和干扰波是相对的。例如,在反射波…...

Cesium1.95中高性能加载1500个点

一、基本方式: 图标使用.png比.svg性能要好 <template><div id"cesiumContainer"></div><div class"toolbar"><button id"resetButton">重新生成点</button><span id"countDisplay&qu…...

《Playwright:微软的自动化测试工具详解》

Playwright 简介:声明内容来自网络,将内容拼接整理出来的文档 Playwright 是微软开发的自动化测试工具,支持 Chrome、Firefox、Safari 等主流浏览器,提供多语言 API(Python、JavaScript、Java、.NET)。它的特点包括&a…...

服务器硬防的应用场景都有哪些?

服务器硬防是指一种通过硬件设备层面的安全措施来防御服务器系统受到网络攻击的方式,避免服务器受到各种恶意攻击和网络威胁,那么,服务器硬防通常都会应用在哪些场景当中呢? 硬防服务器中一般会配备入侵检测系统和预防系统&#x…...

智能在线客服平台:数字化时代企业连接用户的 AI 中枢

随着互联网技术的飞速发展,消费者期望能够随时随地与企业进行交流。在线客服平台作为连接企业与客户的重要桥梁,不仅优化了客户体验,还提升了企业的服务效率和市场竞争力。本文将探讨在线客服平台的重要性、技术进展、实际应用,并…...

【配置 YOLOX 用于按目录分类的图片数据集】

现在的图标点选越来越多,如何一步解决,采用 YOLOX 目标检测模式则可以轻松解决 要在 YOLOX 中使用按目录分类的图片数据集(每个目录代表一个类别,目录下是该类别的所有图片),你需要进行以下配置步骤&#x…...

leetcodeSQL解题:3564. 季节性销售分析

leetcodeSQL解题:3564. 季节性销售分析 题目: 表:sales ---------------------- | Column Name | Type | ---------------------- | sale_id | int | | product_id | int | | sale_date | date | | quantity | int | | price | decimal | -…...

Axios请求超时重发机制

Axios 超时重新请求实现方案 在 Axios 中实现超时重新请求可以通过以下几种方式: 1. 使用拦截器实现自动重试 import axios from axios;// 创建axios实例 const instance axios.create();// 设置超时时间 instance.defaults.timeout 5000;// 最大重试次数 cons…...

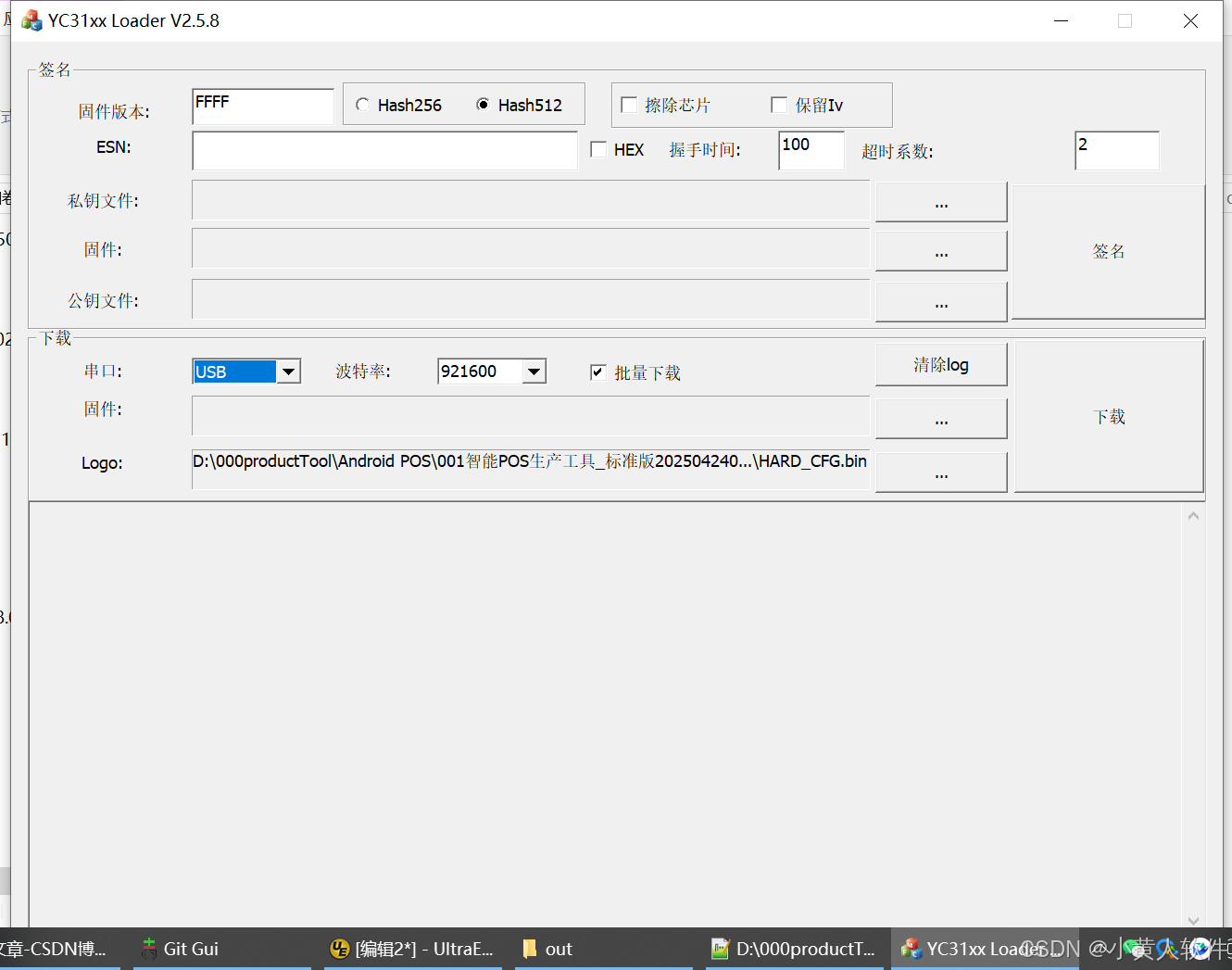

C++ Visual Studio 2017厂商给的源码没有.sln文件 易兆微芯片下载工具加开机动画下载。

1.先用Visual Studio 2017打开Yichip YC31xx loader.vcxproj,再用Visual Studio 2022打开。再保侟就有.sln文件了。 易兆微芯片下载工具加开机动画下载 ExtraDownloadFile1Info.\logo.bin|0|0|10D2000|0 MFC应用兼容CMD 在BOOL CYichipYC31xxloaderDlg::OnIni…...