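J7 - Thoughts on the ResNeXt-50 Algorithm

- 🍨 This post is a learning-log blog from the 🔗365天深度学习训练营 (365-Day Deep Learning Training Camp)

- 🍖 Original author: K同学啊 | tutoring and custom projects available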

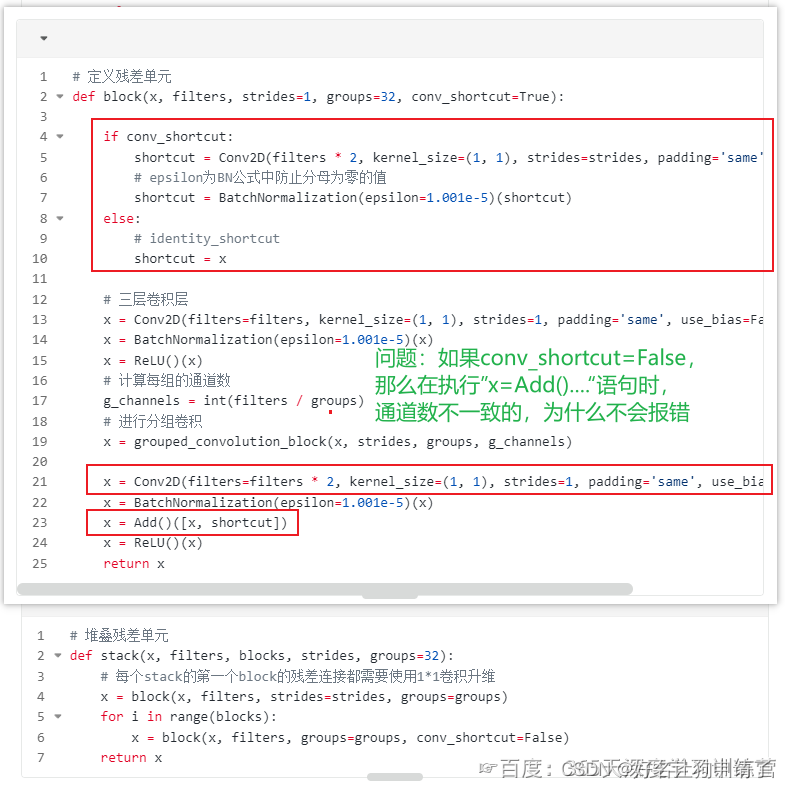

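Week J6 contained the following piece of code.

Thought process

- On first reading the problem description, my guess was that the broadcasting mechanism of tensor operations was being used

- The next step was to find a way to verify this, and the simplest idea was to run the J6 TensorFlow code directly

- I printed the information for every layer with model.summary and processed it into a form that is easier to read (removing the large pile of layers that belong to the grouped convolutions)

- The channel counts turned out to match, so broadcasting is not involved

- A careful analysis of the model's flow then led to the explanation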
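The broadcasting hypothesis from the steps above can be checked on shapes alone. The following is a minimal sketch, assuming NumPy-style broadcasting rules (dimensions are compared from the trailing axis and must be equal, or one of them must be 1). The helper name broadcast_compatible and the example shapes are illustrative assumptions and are not part of the J6 code.

```python
from itertools import zip_longest


def broadcast_compatible(shape_a, shape_b):
    """Return True if two shapes can be broadcast together under NumPy-style rules."""
    # Compare dimensions starting from the trailing axis; a missing axis counts as 1.
    for a, b in zip_longest(reversed(shape_a), reversed(shape_b), fillvalue=1):
        if a != b and a != 1 and b != 1:
            return False
    return True


# Hypothetical shapes: a 64-channel block input versus a 256-channel block output.
print(broadcast_compatible((1, 56, 56, 64), (1, 56, 56, 256)))   # False
# Two tensors with identical shapes are trivially compatible.
print(broadcast_compatible((1, 56, 56, 256), (1, 56, 56, 256)))  # True
```

Under these rules a 64-channel tensor and a 256-channel tensor cannot be combined element-wise, so if the model runs at all, broadcasting along the channel axis is unlikely to be the explanation.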

Verification

The raw output printed by summary (too large to paste in full, so only part of it is shown):

Model: "model"

__________________________________________________________________________________________________Layer (type) Output Shape Param # Connected to

==================================================================================================input_4 (InputLayer) [(None, 224, 224, 3)] 0 [] zero_padding2d_6 (ZeroPadd (None, 230, 230, 3) 0 ['input_4[0][0]'] ing2D) conv2d_555 (Conv2D) (None, 112, 112, 64) 9472 ['zero_padding2d_6[0][0]'] batch_normalization_59 (Ba (None, 112, 112, 64) 256 ['conv2d_555[0][0]'] tchNormalization) re_lu_53 (ReLU) (None, 112, 112, 64) 0 ['batch_normalization_59[0][0]'] zero_padding2d_7 (ZeroPadd (None, 114, 114, 64) 0 ['re_lu_53[0][0]'] ing2D) max_pooling2d_3 (MaxPoolin (None, 56, 56, 64) 0 ['zero_padding2d_7[0][0]'] g2D) conv2d_557 (Conv2D) (None, 56, 56, 128) 8192 ['max_pooling2d_3[0][0]'] batch_normalization_61 (Ba (None, 56, 56, 128) 512 ['conv2d_557[0][0]'] tchNormalization) re_lu_54 (ReLU) (None, 56, 56, 128) 0 ['batch_normalization_61[0][0]'] lambda_514 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_515 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_516 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_517 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_518 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_519 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_520 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_521 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_522 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_523 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_524 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_525 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_526 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_527 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_528 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_529 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_530 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_531 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_532 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_533 (Lambda) (None, 56, 56, 
4) 0 ['re_lu_54[0][0]'] lambda_534 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_535 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_536 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_537 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_538 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_539 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_540 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_541 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_542 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_543 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_544 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] lambda_545 (Lambda) (None, 56, 56, 4) 0 ['re_lu_54[0][0]'] conv2d_558 (Conv2D) (None, 56, 56, 4) 144 ['lambda_514[0][0]'] conv2d_559 (Conv2D) (None, 56, 56, 4) 144 ['lambda_515[0][0]'] conv2d_560 (Conv2D) (None, 56, 56, 4) 144 ['lambda_516[0][0]'] conv2d_561 (Conv2D) (None, 56, 56, 4) 144 ['lambda_517[0][0]'] conv2d_562 (Conv2D) (None, 56, 56, 4) 144 ['lambda_518[0][0]'] conv2d_563 (Conv2D) (None, 56, 56, 4) 144 ['lambda_519[0][0]'] conv2d_564 (Conv2D) (None, 56, 56, 4) 144 ['lambda_520[0][0]'] conv2d_565 (Conv2D) (None, 56, 56, 4) 144 ['lambda_521[0][0]'] conv2d_566 (Conv2D) (None, 56, 56, 4) 144 ['lambda_522[0][0]'] conv2d_567 (Conv2D) (None, 56, 56, 4) 144 ['lambda_523[0][0]'] conv2d_568 (Conv2D) (None, 56, 56, 4) 144 ['lambda_524[0][0]'] conv2d_569 (Conv2D) (None, 56, 56, 4) 144 ['lambda_525[0][0]'] conv2d_570 (Conv2D) (None, 56, 56, 4) 144 ['lambda_526[0][0]'] conv2d_571 (Conv2D) (None, 56, 56, 4) 144 ['lambda_527[0][0]'] conv2d_572 (Conv2D) (None, 56, 56, 4) 144 ['lambda_528[0][0]'] conv2d_573 (Conv2D) (None, 56, 56, 4) 144 ['lambda_529[0][0]'] conv2d_574 (Conv2D) (None, 56, 56, 4) 144 ['lambda_530[0][0]'] conv2d_575 (Conv2D) (None, 56, 56, 4) 144 ['lambda_531[0][0]'] conv2d_576 (Conv2D) (None, 56, 56, 4) 144 ['lambda_532[0][0]'] conv2d_577 (Conv2D) (None, 56, 56, 4) 144 ['lambda_533[0][0]'] 
conv2d_578 (Conv2D) (None, 56, 56, 4) 144 ['lambda_534[0][0]'] conv2d_579 (Conv2D) (None, 56, 56, 4) 144 ['lambda_535[0][0]'] conv2d_580 (Conv2D) (None, 56, 56, 4) 144 ['lambda_536[0][0]'] conv2d_581 (Conv2D) (None, 56, 56, 4) 144 ['lambda_537[0][0]'] conv2d_582 (Conv2D) (None, 56, 56, 4) 144 ['lambda_538[0][0]'] conv2d_583 (Conv2D) (None, 56, 56, 4) 144 ['lambda_539[0][0]'] conv2d_584 (Conv2D) (None, 56, 56, 4) 144 ['lambda_540[0][0]'] conv2d_585 (Conv2D) (None, 56, 56, 4) 144 ['lambda_541[0][0]'] conv2d_586 (Conv2D) (None, 56, 56, 4) 144 ['lambda_542[0][0]'] conv2d_587 (Conv2D) (None, 56, 56, 4) 144 ['lambda_543[0][0]'] conv2d_588 (Conv2D) (None, 56, 56, 4) 144 ['lambda_544[0][0]'] conv2d_589 (Conv2D) (None, 56, 56, 4) 144 ['lambda_545[0][0]'] concatenate_16 (Concatenat (None, 56, 56, 128) 0 ['conv2d_558[0][0]', e) 'conv2d_559[0][0]', 'conv2d_560[0][0]', 'conv2d_561[0][0]', 'conv2d_562[0][0]', 'conv2d_563[0][0]', 'conv2d_564[0][0]', 'conv2d_565[0][0]', 'conv2d_566[0][0]', 'conv2d_567[0][0]', 'conv2d_568[0][0]', 'conv2d_569[0][0]', 'conv2d_570[0][0]', 'conv2d_571[0][0]', 'conv2d_572[0][0]', 'conv2d_573[0][0]', 'conv2d_574[0][0]', 'conv2d_575[0][0]', 'conv2d_576[0][0]', 'conv2d_577[0][0]', 'conv2d_578[0][0]', 'conv2d_579[0][0]', 'conv2d_580[0][0]', 'conv2d_581[0][0]', 'conv2d_582[0][0]', 'conv2d_583[0][0]', 'conv2d_584[0][0]', 'conv2d_585[0][0]', 'conv2d_586[0][0]', 'conv2d_587[0][0]', 'conv2d_588[0][0]', 'conv2d_589[0][0]'] batch_normalization_62 (Ba (None, 56, 56, 128) 512 ['concatenate_16[0][0]'] tchNormalization) re_lu_55 (ReLU) (None, 56, 56, 128) 0 ['batch_normalization_62[0][0]'] conv2d_590 (Conv2D) (None, 56, 56, 256) 32768 ['re_lu_55[0][0]'] conv2d_556 (Conv2D) (None, 56, 56, 256) 16384 ['max_pooling2d_3[0][0]'] batch_normalization_63 (Ba (None, 56, 56, 256) 1024 ['conv2d_590[0][0]'] tchNormalization) batch_normalization_60 (Ba (None, 56, 56, 256) 1024 ['conv2d_556[0][0]'] tchNormalization) add_16 (Add) (None, 56, 56, 256) 0 
['batch_normalization_63[0][0]', 'batch_normalization_60[0][0]'] re_lu_56 (ReLU) (None, 56, 56, 256) 0 ['add_16[0][0]'] conv2d_591 (Conv2D) (None, 56, 56, 128) 32768 ['re_lu_56[0][0]'] batch_normalization_64 (Ba (None, 56, 56, 128) 512 ['conv2d_591[0][0]'] tchNormalization) re_lu_57 (ReLU) (None, 56, 56, 128) 0 ['batch_normalization_64[0][0]'] lambda_546 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_547 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_548 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_549 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_550 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_551 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_552 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_553 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_554 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_555 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_556 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_557 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_558 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_559 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_560 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_561 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_562 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_563 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_564 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_565 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_566 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_567 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_568 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_569 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_570 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_571 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_572 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_573 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] 
lambda_574 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_575 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_576 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] lambda_577 (Lambda) (None, 56, 56, 4) 0 ['re_lu_57[0][0]'] conv2d_592 (Conv2D) (None, 56, 56, 4) 144 ['lambda_546[0][0]'] conv2d_593 (Conv2D) (None, 56, 56, 4) 144 ['lambda_547[0][0]'] conv2d_594 (Conv2D) (None, 56, 56, 4) 144 ['lambda_548[0][0]'] conv2d_595 (Conv2D) (None, 56, 56, 4) 144 ['lambda_549[0][0]'] conv2d_596 (Conv2D) (None, 56, 56, 4) 144 ['lambda_550[0][0]'] conv2d_597 (Conv2D) (None, 56, 56, 4) 144 ['lambda_551[0][0]'] conv2d_598 (Conv2D) (None, 56, 56, 4) 144 ['lambda_552[0][0]'] conv2d_599 (Conv2D) (None, 56, 56, 4) 144 ['lambda_553[0][0]'] conv2d_600 (Conv2D) (None, 56, 56, 4) 144 ['lambda_554[0][0]'] conv2d_601 (Conv2D) (None, 56, 56, 4) 144 ['lambda_555[0][0]'] conv2d_602 (Conv2D) (None, 56, 56, 4) 144 ['lambda_556[0][0]'] conv2d_603 (Conv2D) (None, 56, 56, 4) 144 ['lambda_557[0][0]'] conv2d_604 (Conv2D) (None, 56, 56, 4) 144 ['lambda_558[0][0]'] conv2d_605 (Conv2D) (None, 56, 56, 4) 144 ['lambda_559[0][0]'] conv2d_606 (Conv2D) (None, 56, 56, 4) 144 ['lambda_560[0][0]'] conv2d_607 (Conv2D) (None, 56, 56, 4) 144 ['lambda_561[0][0]'] conv2d_608 (Conv2D) (None, 56, 56, 4) 144 ['lambda_562[0][0]'] conv2d_609 (Conv2D) (None, 56, 56, 4) 144 ['lambda_563[0][0]'] conv2d_610 (Conv2D) (None, 56, 56, 4) 144 ['lambda_564[0][0]'] conv2d_611 (Conv2D) (None, 56, 56, 4) 144 ['lambda_565[0][0]'] conv2d_612 (Conv2D) (None, 56, 56, 4) 144 ['lambda_566[0][0]'] conv2d_613 (Conv2D) (None, 56, 56, 4) 144 ['lambda_567[0][0]'] conv2d_614 (Conv2D) (None, 56, 56, 4) 144 ['lambda_568[0][0]'] conv2d_615 (Conv2D) (None, 56, 56, 4) 144 ['lambda_569[0][0]'] conv2d_616 (Conv2D) (None, 56, 56, 4) 144 ['lambda_570[0][0]'] conv2d_617 (Conv2D) (None, 56, 56, 4) 144 ['lambda_571[0][0]'] conv2d_618 (Conv2D) (None, 56, 56, 4) 144 ['lambda_572[0][0]'] conv2d_619 (Conv2D) (None, 56, 56, 4) 144 ['lambda_573[0][0]'] 
conv2d_620 (Conv2D) (None, 56, 56, 4) 144 ['lambda_574[0][0]'] conv2d_621 (Conv2D) (None, 56, 56, 4) 144 ['lambda_575[0][0]'] conv2d_622 (Conv2D) (None, 56, 56, 4) 144 ['lambda_576[0][0]'] conv2d_623 (Conv2D) (None, 56, 56, 4) 144 ['lambda_577[0][0]'] concatenate_17 (Concatenat (None, 56, 56, 128) 0 ['conv2d_592[0][0]', e) 'conv2d_593[0][0]', 'conv2d_594[0][0]', 'conv2d_595[0][0]', 'conv2d_596[0][0]', 'conv2d_597[0][0]', 'conv2d_598[0][0]', 'conv2d_599[0][0]', 'conv2d_600[0][0]', 'conv2d_601[0][0]', 'conv2d_602[0][0]', 'conv2d_603[0][0]', 'conv2d_604[0][0]', 'conv2d_605[0][0]', 'conv2d_606[0][0]', 'conv2d_607[0][0]', 'conv2d_608[0][0]', 'conv2d_609[0][0]', 'conv2d_610[0][0]', 'conv2d_611[0][0]', 'conv2d_612[0][0]', 'conv2d_613[0][0]', 'conv2d_614[0][0]', 'conv2d_615[0][0]', 'conv2d_616[0][0]', 'conv2d_617[0][0]', 'conv2d_618[0][0]', 'conv2d_619[0][0]', 'conv2d_620[0][0]', 'conv2d_621[0][0]', 'conv2d_622[0][0]', 'conv2d_623[0][0]'] batch_normalization_65 (Ba (None, 56, 56, 128) 512 ['concatenate_17[0][0]'] tchNormalization) re_lu_58 (ReLU) (None, 56, 56, 128) 0 ['batch_normalization_65[0][0]']

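The printed layers contain a large number of lambda layers. Checking against the source code, the lambda operations sit inside the grouped convolution, so everything from a run of lambda layers down to the concatenate that follows can be treated as one grouped convolution. Here the grouped convolution does not change the channel count (128 in, 128 out); it only reduces the number of parameters.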
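The parameter saving can be checked with a little arithmetic against the numbers in the summary. This is a rough sketch: the 3x3 kernel size and the absence of a bias term are assumptions inferred from the 144 parameters reported for each branch conv2d, and conv_params is a hypothetical helper, not a Keras function.

```python
def conv_params(kernel, in_channels, out_channels):
    """Weight count of a bias-free 2D convolution with a square kernel."""
    return kernel * kernel * in_channels * out_channels


groups = 32                      # lambda/conv2d branches per block in the summary
channels = 128                   # channels entering and leaving the grouped convolution
per_group = channels // groups   # channels handled by each branch

per_branch = conv_params(3, per_group, per_group)
grouped = groups * per_branch
standard = conv_params(3, channels, channels)

print(per_group)   # 4, the last dimension of every lambda output
print(per_branch)  # 144, the Param # of every branch conv2d
print(grouped)     # 4608
print(standard)    # 147456
```

With 32 groups, the grouped version uses 1/32 of the weights of an ordinary 3x3 convolution over the same 128 channels, while the concatenated output still has 128 channels.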

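# Write the summary output to a file, then use a Python script to strip out the pile of lambda layers

# Open the file and read its contents
with open('summary') as f:
    content = f.read()

# Split on newlines
lines = content.split('\n')
clean_lines = []

# Filter the lines
for line in lines:
    # Skip blank lines
    if len(line.strip()) == 0:
        continue
    # Skip the continuation rows of concatenate's "Connected to" column,
    # which carry 78 or 79 characters of surrounding whitespace
    if len(line) - len(line.strip()) == 78 or len(line) - len(line.strip()) == 79:
        continue
    # Skip every row that mentions a lambda layer
    # (this also drops the branch conv2d rows, whose input is a lambda)
    if 'lambda' in line:
        continue
    clean_lines.append(line)

for line in clean_lines:
    print(line)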

The model structure after processing is as follows:

Model: "model"

__________________________________________________________________________________________________Layer (type) Output Shape Param # Connected to

==================================================================================================input_4 (InputLayer) [(None, 224, 224, 3)] 0 []zero_padding2d_6 (ZeroPadd (None, 230, 230, 3) 0 ['input_4[0][0]']ing2D)conv2d_555 (Conv2D) (None, 112, 112, 64) 9472 ['zero_padding2d_6[0][0]']batch_normalization_59 (Ba (None, 112, 112, 64) 256 ['conv2d_555[0][0]']tchNormalization)re_lu_53 (ReLU) (None, 112, 112, 64) 0 ['batch_normalization_59[0][0]']zero_padding2d_7 (ZeroPadd (None, 114, 114, 64) 0 ['re_lu_53[0][0]']ing2D)max_pooling2d_3 (MaxPoolin (None, 56, 56, 64) 0 ['zero_padding2d_7[0][0]']g2D)conv2d_557 (Conv2D) (None, 56, 56, 128) 8192 ['max_pooling2d_3[0][0]']batch_normalization_61 (Ba (None, 56, 56, 128) 512 ['conv2d_557[0][0]']tchNormalization)re_lu_54 (ReLU) (None, 56, 56, 128) 0 ['batch_normalization_61[0][0]']concatenate_16 (Concatenat (None, 56, 56, 128) 0 ['conv2d_558[0][0]',e) 'conv2d_559[0][0]',batch_normalization_62 (Ba (None, 56, 56, 128) 512 ['concatenate_16[0][0]']tchNormalization)re_lu_55 (ReLU) (None, 56, 56, 128) 0 ['batch_normalization_62[0][0]']conv2d_590 (Conv2D) (None, 56, 56, 256) 32768 ['re_lu_55[0][0]']conv2d_556 (Conv2D) (None, 56, 56, 256) 16384 ['max_pooling2d_3[0][0]']batch_normalization_63 (Ba (None, 56, 56, 256) 1024 ['conv2d_590[0][0]']tchNormalization)batch_normalization_60 (Ba (None, 56, 56, 256) 1024 ['conv2d_556[0][0]']tchNormalization)add_16 (Add) (None, 56, 56, 256) 0 ['batch_normalization_63[0][0]','batch_normalization_60[0][0]']re_lu_56 (ReLU) (None, 56, 56, 256) 0 ['add_16[0][0]']conv2d_591 (Conv2D) (None, 56, 56, 128) 32768 ['re_lu_56[0][0]']batch_normalization_64 (Ba (None, 56, 56, 128) 512 ['conv2d_591[0][0]']tchNormalization)re_lu_57 (ReLU) (None, 56, 56, 128) 0 ['batch_normalization_64[0][0]']concatenate_17 (Concatenat (None, 56, 56, 128) 0 ['conv2d_592[0][0]',e) 'conv2d_593[0][0]',batch_normalization_65 (Ba (None, 56, 56, 128) 512 ['concatenate_17[0][0]']tchNormalization)re_lu_58 (ReLU) (None, 56, 56, 128) 0 
['batch_normalization_65[0][0]']conv2d_624 (Conv2D) (None, 56, 56, 256) 32768 ['re_lu_58[0][0]']batch_normalization_66 (Ba (None, 56, 56, 256) 1024 ['conv2d_624[0][0]']tchNormalization)add_17 (Add) (None, 56, 56, 256) 0 ['batch_normalization_66[0][0]','re_lu_56[0][0]']re_lu_59 (ReLU) (None, 56, 56, 256) 0 ['add_17[0][0]']conv2d_625 (Conv2D) (None, 56, 56, 128) 32768 ['re_lu_59[0][0]']batch_normalization_67 (Ba (None, 56, 56, 128) 512 ['conv2d_625[0][0]']tchNormalization)re_lu_60 (ReLU) (None, 56, 56, 128) 0 ['batch_normalization_67[0][0]']concatenate_18 (Concatenat (None, 56, 56, 128) 0 ['conv2d_626[0][0]',e) 'conv2d_627[0][0]',batch_normalization_68 (Ba (None, 56, 56, 128) 512 ['concatenate_18[0][0]']tchNormalization)re_lu_61 (ReLU) (None, 56, 56, 128) 0 ['batch_normalization_68[0][0]']conv2d_658 (Conv2D) (None, 56, 56, 256) 32768 ['re_lu_61[0][0]']batch_normalization_69 (Ba (None, 56, 56, 256) 1024 ['conv2d_658[0][0]']tchNormalization)add_18 (Add) (None, 56, 56, 256) 0 ['batch_normalization_69[0][0]','re_lu_59[0][0]']re_lu_62 (ReLU) (None, 56, 56, 256) 0 ['add_18[0][0]']conv2d_660 (Conv2D) (None, 56, 56, 256) 65536 ['re_lu_62[0][0]']batch_normalization_71 (Ba (None, 56, 56, 256) 1024 ['conv2d_660[0][0]']tchNormalization)re_lu_63 (ReLU) (None, 56, 56, 256) 0 ['batch_normalization_71[0][0]']concatenate_19 (Concatenat (None, 28, 28, 256) 0 ['conv2d_661[0][0]',e) 'conv2d_662[0][0]',batch_normalization_72 (Ba (None, 28, 28, 256) 1024 ['concatenate_19[0][0]']tchNormalization)re_lu_64 (ReLU) (None, 28, 28, 256) 0 ['batch_normalization_72[0][0]']conv2d_693 (Conv2D) (None, 28, 28, 512) 131072 ['re_lu_64[0][0]']conv2d_659 (Conv2D) (None, 28, 28, 512) 131072 ['re_lu_62[0][0]']batch_normalization_73 (Ba (None, 28, 28, 512) 2048 ['conv2d_693[0][0]']tchNormalization)batch_normalization_70 (Ba (None, 28, 28, 512) 2048 ['conv2d_659[0][0]']tchNormalization)add_19 (Add) (None, 28, 28, 512) 0 ['batch_normalization_73[0][0]','batch_normalization_70[0][0]']re_lu_65 (ReLU) (None, 28, 
28, 512) 0 ['add_19[0][0]']conv2d_694 (Conv2D) (None, 28, 28, 256) 131072 ['re_lu_65[0][0]']batch_normalization_74 (Ba (None, 28, 28, 256) 1024 ['conv2d_694[0][0]']tchNormalization)re_lu_66 (ReLU) (None, 28, 28, 256) 0 ['batch_normalization_74[0][0]']concatenate_20 (Concatenat (None, 28, 28, 256) 0 ['conv2d_695[0][0]',e) 'conv2d_696[0][0]',batch_normalization_75 (Ba (None, 28, 28, 256) 1024 ['concatenate_20[0][0]']tchNormalization)re_lu_67 (ReLU) (None, 28, 28, 256) 0 ['batch_normalization_75[0][0]']conv2d_727 (Conv2D) (None, 28, 28, 512) 131072 ['re_lu_67[0][0]']batch_normalization_76 (Ba (None, 28, 28, 512) 2048 ['conv2d_727[0][0]']tchNormalization)add_20 (Add) (None, 28, 28, 512) 0 ['batch_normalization_76[0][0]','re_lu_65[0][0]']re_lu_68 (ReLU) (None, 28, 28, 512) 0 ['add_20[0][0]']conv2d_728 (Conv2D) (None, 28, 28, 256) 131072 ['re_lu_68[0][0]']batch_normalization_77 (Ba (None, 28, 28, 256) 1024 ['conv2d_728[0][0]']tchNormalization)re_lu_69 (ReLU) (None, 28, 28, 256) 0 ['batch_normalization_77[0][0]']concatenate_21 (Concatenat (None, 28, 28, 256) 0 ['conv2d_729[0][0]',e) 'conv2d_730[0][0]',batch_normalization_78 (Ba (None, 28, 28, 256) 1024 ['concatenate_21[0][0]']tchNormalization)re_lu_70 (ReLU) (None, 28, 28, 256) 0 ['batch_normalization_78[0][0]']conv2d_761 (Conv2D) (None, 28, 28, 512) 131072 ['re_lu_70[0][0]']batch_normalization_79 (Ba (None, 28, 28, 512) 2048 ['conv2d_761[0][0]']tchNormalization)add_21 (Add) (None, 28, 28, 512) 0 ['batch_normalization_79[0][0]','re_lu_68[0][0]']re_lu_71 (ReLU) (None, 28, 28, 512) 0 ['add_21[0][0]']conv2d_762 (Conv2D) (None, 28, 28, 256) 131072 ['re_lu_71[0][0]']batch_normalization_80 (Ba (None, 28, 28, 256) 1024 ['conv2d_762[0][0]']tchNormalization)re_lu_72 (ReLU) (None, 28, 28, 256) 0 ['batch_normalization_80[0][0]']concatenate_22 (Concatenat (None, 28, 28, 256) 0 ['conv2d_763[0][0]',e) 'conv2d_764[0][0]',batch_normalization_81 (Ba (None, 28, 28, 256) 1024 ['concatenate_22[0][0]']tchNormalization)re_lu_73 (ReLU) (None, 
28, 28, 256) 0 ['batch_normalization_81[0][0]']conv2d_795 (Conv2D) (None, 28, 28, 512) 131072 ['re_lu_73[0][0]']batch_normalization_82 (Ba (None, 28, 28, 512) 2048 ['conv2d_795[0][0]']tchNormalization)add_22 (Add) (None, 28, 28, 512) 0 ['batch_normalization_82[0][0]','re_lu_71[0][0]']re_lu_74 (ReLU) (None, 28, 28, 512) 0 ['add_22[0][0]']conv2d_797 (Conv2D) (None, 28, 28, 512) 262144 ['re_lu_74[0][0]']batch_normalization_84 (Ba (None, 28, 28, 512) 2048 ['conv2d_797[0][0]']tchNormalization)re_lu_75 (ReLU) (None, 28, 28, 512) 0 ['batch_normalization_84[0][0]']concatenate_23 (Concatenat (None, 14, 14, 512) 0 ['conv2d_798[0][0]',e) 'conv2d_799[0][0]',batch_normalization_85 (Ba (None, 14, 14, 512) 2048 ['concatenate_23[0][0]']tchNormalization)re_lu_76 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_85[0][0]']conv2d_830 (Conv2D) (None, 14, 14, 1024) 524288 ['re_lu_76[0][0]']conv2d_796 (Conv2D) (None, 14, 14, 1024) 524288 ['re_lu_74[0][0]']batch_normalization_86 (Ba (None, 14, 14, 1024) 4096 ['conv2d_830[0][0]']tchNormalization)batch_normalization_83 (Ba (None, 14, 14, 1024) 4096 ['conv2d_796[0][0]']tchNormalization)add_23 (Add) (None, 14, 14, 1024) 0 ['batch_normalization_86[0][0]','batch_normalization_83[0][0]']re_lu_77 (ReLU) (None, 14, 14, 1024) 0 ['add_23[0][0]']conv2d_831 (Conv2D) (None, 14, 14, 512) 524288 ['re_lu_77[0][0]']batch_normalization_87 (Ba (None, 14, 14, 512) 2048 ['conv2d_831[0][0]']tchNormalization)re_lu_78 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_87[0][0]']concatenate_24 (Concatenat (None, 14, 14, 512) 0 ['conv2d_832[0][0]',e) 'conv2d_833[0][0]',batch_normalization_88 (Ba (None, 14, 14, 512) 2048 ['concatenate_24[0][0]']tchNormalization)re_lu_79 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_88[0][0]']conv2d_864 (Conv2D) (None, 14, 14, 1024) 524288 ['re_lu_79[0][0]']batch_normalization_89 (Ba (None, 14, 14, 1024) 4096 ['conv2d_864[0][0]']tchNormalization)add_24 (Add) (None, 14, 14, 1024) 0 
['batch_normalization_89[0][0]','re_lu_77[0][0]']re_lu_80 (ReLU) (None, 14, 14, 1024) 0 ['add_24[0][0]']conv2d_865 (Conv2D) (None, 14, 14, 512) 524288 ['re_lu_80[0][0]']batch_normalization_90 (Ba (None, 14, 14, 512) 2048 ['conv2d_865[0][0]']tchNormalization)re_lu_81 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_90[0][0]']concatenate_25 (Concatenat (None, 14, 14, 512) 0 ['conv2d_866[0][0]',e) 'conv2d_867[0][0]',batch_normalization_91 (Ba (None, 14, 14, 512) 2048 ['concatenate_25[0][0]']tchNormalization)re_lu_82 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_91[0][0]']conv2d_898 (Conv2D) (None, 14, 14, 1024) 524288 ['re_lu_82[0][0]']batch_normalization_92 (Ba (None, 14, 14, 1024) 4096 ['conv2d_898[0][0]']tchNormalization)add_25 (Add) (None, 14, 14, 1024) 0 ['batch_normalization_92[0][0]','re_lu_80[0][0]']re_lu_83 (ReLU) (None, 14, 14, 1024) 0 ['add_25[0][0]']conv2d_899 (Conv2D) (None, 14, 14, 512) 524288 ['re_lu_83[0][0]']batch_normalization_93 (Ba (None, 14, 14, 512) 2048 ['conv2d_899[0][0]']tchNormalization)re_lu_84 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_93[0][0]']concatenate_26 (Concatenat (None, 14, 14, 512) 0 ['conv2d_900[0][0]',e) 'conv2d_901[0][0]',batch_normalization_94 (Ba (None, 14, 14, 512) 2048 ['concatenate_26[0][0]']tchNormalization)re_lu_85 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_94[0][0]']conv2d_932 (Conv2D) (None, 14, 14, 1024) 524288 ['re_lu_85[0][0]']batch_normalization_95 (Ba (None, 14, 14, 1024) 4096 ['conv2d_932[0][0]']tchNormalization)add_26 (Add) (None, 14, 14, 1024) 0 ['batch_normalization_95[0][0]','re_lu_83[0][0]']re_lu_86 (ReLU) (None, 14, 14, 1024) 0 ['add_26[0][0]']conv2d_933 (Conv2D) (None, 14, 14, 512) 524288 ['re_lu_86[0][0]']batch_normalization_96 (Ba (None, 14, 14, 512) 2048 ['conv2d_933[0][0]']tchNormalization)re_lu_87 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_96[0][0]']concatenate_27 (Concatenat (None, 14, 14, 512) 0 ['conv2d_934[0][0]',e) 'conv2d_935[0][0]',batch_normalization_97 (Ba 
(None, 14, 14, 512) 2048 ['concatenate_27[0][0]']tchNormalization)re_lu_88 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_97[0][0]']conv2d_966 (Conv2D) (None, 14, 14, 1024) 524288 ['re_lu_88[0][0]']batch_normalization_98 (Ba (None, 14, 14, 1024) 4096 ['conv2d_966[0][0]']tchNormalization)add_27 (Add) (None, 14, 14, 1024) 0 ['batch_normalization_98[0][0]','re_lu_86[0][0]']re_lu_89 (ReLU) (None, 14, 14, 1024) 0 ['add_27[0][0]']conv2d_967 (Conv2D) (None, 14, 14, 512) 524288 ['re_lu_89[0][0]']batch_normalization_99 (Ba (None, 14, 14, 512) 2048 ['conv2d_967[0][0]']tchNormalization)re_lu_90 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_99[0][0]']concatenate_28 (Concatenat (None, 14, 14, 512) 0 ['conv2d_968[0][0]',e) 'conv2d_969[0][0]',batch_normalization_100 (B (None, 14, 14, 512) 2048 ['concatenate_28[0][0]']atchNormalization)re_lu_91 (ReLU) (None, 14, 14, 512) 0 ['batch_normalization_100[0][0]']conv2d_1000 (Conv2D) (None, 14, 14, 1024) 524288 ['re_lu_91[0][0]']batch_normalization_101 (B (None, 14, 14, 1024) 4096 ['conv2d_1000[0][0]']atchNormalization)add_28 (Add) (None, 14, 14, 1024) 0 ['batch_normalization_101[0][0]','re_lu_89[0][0]']re_lu_92 (ReLU) (None, 14, 14, 1024) 0 ['add_28[0][0]']conv2d_1002 (Conv2D) (None, 14, 14, 1024) 1048576 ['re_lu_92[0][0]']batch_normalization_103 (B (None, 14, 14, 1024) 4096 ['conv2d_1002[0][0]']atchNormalization)re_lu_93 (ReLU) (None, 14, 14, 1024) 0 ['batch_normalization_103[0][0]']concatenate_29 (Concatenat (None, 7, 7, 1024) 0 ['conv2d_1003[0][0]',e) 'conv2d_1004[0][0]',batch_normalization_104 (B (None, 7, 7, 1024) 4096 ['concatenate_29[0][0]']atchNormalization)re_lu_94 (ReLU) (None, 7, 7, 1024) 0 ['batch_normalization_104[0][0]']conv2d_1035 (Conv2D) (None, 7, 7, 2048) 2097152 ['re_lu_94[0][0]']conv2d_1001 (Conv2D) (None, 7, 7, 2048) 2097152 ['re_lu_92[0][0]']batch_normalization_105 (B (None, 7, 7, 2048) 8192 ['conv2d_1035[0][0]']atchNormalization)batch_normalization_102 (B (None, 7, 7, 2048) 8192 
['conv2d_1001[0][0]']atchNormalization)add_29 (Add) (None, 7, 7, 2048) 0 ['batch_normalization_105[0][0]','batch_normalization_102[0][0]']re_lu_95 (ReLU) (None, 7, 7, 2048) 0 ['add_29[0][0]']conv2d_1036 (Conv2D) (None, 7, 7, 1024) 2097152 ['re_lu_95[0][0]']batch_normalization_106 (B (None, 7, 7, 1024) 4096 ['conv2d_1036[0][0]']atchNormalization)re_lu_96 (ReLU) (None, 7, 7, 1024) 0 ['batch_normalization_106[0][0]']concatenate_30 (Concatenat (None, 7, 7, 1024) 0 ['conv2d_1037[0][0]',e) 'conv2d_1038[0][0]',batch_normalization_107 (B (None, 7, 7, 1024) 4096 ['concatenate_30[0][0]']atchNormalization)re_lu_97 (ReLU) (None, 7, 7, 1024) 0 ['batch_normalization_107[0][0]']conv2d_1069 (Conv2D) (None, 7, 7, 2048) 2097152 ['re_lu_97[0][0]']batch_normalization_108 (B (None, 7, 7, 2048) 8192 ['conv2d_1069[0][0]']atchNormalization)add_30 (Add) (None, 7, 7, 2048) 0 ['batch_normalization_108[0][0]','re_lu_95[0][0]']re_lu_98 (ReLU) (None, 7, 7, 2048) 0 ['add_30[0][0]']conv2d_1070 (Conv2D) (None, 7, 7, 1024) 2097152 ['re_lu_98[0][0]']batch_normalization_109 (B (None, 7, 7, 1024) 4096 ['conv2d_1070[0][0]']atchNormalization)re_lu_99 (ReLU) (None, 7, 7, 1024) 0 ['batch_normalization_109[0][0]']concatenate_31 (Concatenat (None, 7, 7, 1024) 0 ['conv2d_1071[0][0]',e) 'conv2d_1072[0][0]',batch_normalization_110 (B (None, 7, 7, 1024) 4096 ['concatenate_31[0][0]']atchNormalization)re_lu_100 (ReLU) (None, 7, 7, 1024) 0 ['batch_normalization_110[0][0]']conv2d_1103 (Conv2D) (None, 7, 7, 2048) 2097152 ['re_lu_100[0][0]']batch_normalization_111 (B (None, 7, 7, 2048) 8192 ['conv2d_1103[0][0]']atchNormalization)add_31 (Add) (None, 7, 7, 2048) 0 ['batch_normalization_111[0][0]','re_lu_98[0][0]']re_lu_101 (ReLU) (None, 7, 7, 2048) 0 ['add_31[0][0]']global_average_pooling2d_1 (None, 2048) 0 ['re_lu_101[0][0]'](GlobalAveragePooling2D)dense_1 (Dense) (None, 1000) 2049000 ['global_average_pooling2d_1[0][0]']

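Looking at the "Connected to" column of every Add layer, the two inputs always have the same shape, and no mismatch ever appears. Contrary to what I expected, no broadcasting is used. Only after examining the model's flow carefully did I notice what happens: in the first block of each stack, x and filters have different channel counts, so adding them directly would raise an error. The first block therefore applies a convolution that outputs filters*2 channels on the shortcut branch. Because filters does not change for the remaining blocks of the stack, every output has filters*2 channels, so the later blocks can add their inputs directly.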
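The explanation can be replayed as a channel-count trace. The sketch below is a simplification of what the summary shows, not the J6 code itself: trace_stack is a hypothetical helper, and it tracks only channel counts under the assumption that each block's residual path ends in a convolution with filters*2 output channels.

```python
def trace_stack(in_channels, filters, blocks):
    """Return the (residual, shortcut) channel pair seen by each Add in one stack."""
    pairs = []
    x = in_channels
    for i in range(blocks):
        if i == 0:
            # First block: the shortcut passes through a convolution
            # that outputs filters * 2 channels.
            shortcut = filters * 2
        else:
            # Later blocks: the shortcut is the previous block's output, unchanged.
            shortcut = x
        residual = filters * 2  # output of the last convolution on the residual path
        assert residual == shortcut, "Add would fail: channel mismatch"
        pairs.append((residual, shortcut))
        x = residual
    return pairs


# First stack in the summary: 64 channels in, filters = 128, three Add layers.
print(trace_stack(64, 128, 3))  # [(256, 256), (256, 256), (256, 256)]
# Second stack: 256 channels in, filters = 256, four Add layers.
print(trace_stack(256, 256, 4)[-1])  # (512, 512)
```

The pairs agree with the Add rows in the processed summary, where add_16 to add_18 have 256 channels and add_19 to add_22 have 512.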

相关文章:

J7 - 对于ResNeXt-50算法的思考

🍨 本文为🔗365天深度学习训练营 中的学习记录博客🍖 原作者:K同学啊 | 接辅导、项目定制 J6周有一段代码如下 思考过程 首先看到这个问题的描述,想到的是可能使用了向量操作的广播机制然后就想想办法验证一下&…...

基础篇)

R3F(React Three Fiber)基础篇

之前一直在做ThreeJS方向,整理了两篇R3F(React Three Fiber)的文档,这是基础篇,如果您的业务场景需要使用R3F,您又对R3F不太了解,或者不想使用R3F全英文文档,您可以参考一下这篇&…...

torch\tensorflow在大语言模型LLM中的作用

文章目录 torch\tensorflow在大语言模型LLM中的作用 torch\tensorflow在大语言模型LLM中的作用 在大型语言模型(LLM)中,PyTorch和TensorFlow这两个深度学习框架起着至关重要的作用。它们为构建、训练和部署LLM提供了必要的工具和基础设施。 …...

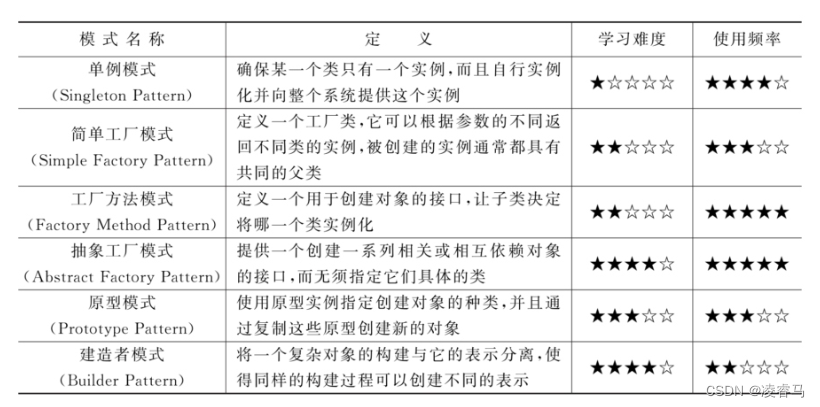

设计模式-创建型模式-单例模式

0 引言 创建型模式(Creational Pattern)关注对象的创建过程,是一类最常用的设计模式,每个创建型模式都通过采用不同的解决方案来回答3个问题:创建什么(What),由谁创建(W…...

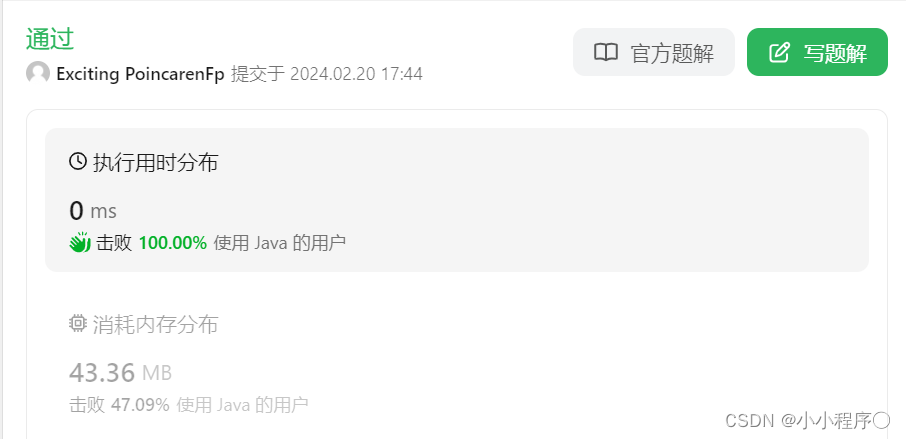

备战蓝桥杯—— 双指针技巧巧答链表1

对于单链表相关的问题,双指针技巧是一种非常广泛且有效的解决方法。以下是一些常见问题以及使用双指针技巧解决: 合并两个有序链表: 使用两个指针分别指向两个链表的头部,逐一比较节点的值,将较小的节点链接到结果链表…...

微信小程序返回上一级页面并自动刷新数据

文章目录 前言一、获取小程序栈二、生命周期触发总结 前言 界面由A到B,在由B返回A,触发刷新动作 一、获取小程序栈 界面A代码 shuaxin(){//此处可进行接口请求从而实现更新数据的效果console.log("刷新本页面数据啦")},界面B代码 // 返回触…...

Spring⼯⼚创建复杂对象

文章目录 5. Spring⼯⼚创建复杂对象5.1 什么是复杂对象5.2 Spring⼯⼚创建复杂对象的3种⽅式5.2.1 FactoryBean 接口5.2.2 实例⼯⼚5.2.3 静态工厂 5.3 Spring 工厂的总结 6. 控制Spring⼯⼚创建对象的次数6.1 如何控制简单对象的创建次数6.2 如何控制复杂对象的创建次数6.3 为…...

Top-N 泛型工具类

一、代码实现 通过封装 PriorityQueue 实现,PriorityQueue 本质上是完全二叉树实现的小根堆(相对来说,如果比较器反向比较则是大根堆)。 public class TopNUtil<E extends Comparable<E>> {private final PriorityQ…...

Java 后端面试指南

面试指南 TMD,一个后端为什么要了解那么多的知识,真是服了。啥啥都得了解 MySQL MySQL索引可能在以下几种情况下失效: 不遵循最左匹配原则:在联合索引中,如果没有使用索引的最左前缀,即查询条件中没有包含…...

142.环形链表 ||

给定一个链表的头节点 head ,返回链表开始入环的第一个节点。 如果链表无环,则返回 null。 如果链表中有某个节点,可以通过连续跟踪 next 指针再次到达,则链表中存在环。 为了表示给定链表中的环,评测系统内部使用整…...

Nacos、Eureka、Zookeeper注册中心的区别

Nacos、Eureka和Zookeeper都是常用的注册中心,它们在功能和实现方式上存在一些不同。 Nacos除了作为注册中心外,还提供了配置管理、服务发现和事件通知等功能。Nacos默认情况下采用AP架构保证服务可用性,CP架构底层采用Raft协议保证数据的一…...

CSS重点知识整理1

目录 1 平面位移 1.1 基本使用 1.2 单独方向的位移 1.3 使用平面位移实现绝对位置居中 2 平面旋转 2.1 基本使用 2.2 圆点转换 2.3 多重转换 3 平面缩放 3.1 基本使用 3.2 渐变的使用 4 空间转换 4.1 空间位移 4.1.1 基本使用 4.1.2 透视 4.2 空间旋转 4.3 立…...

【Langchain多Agent实践】一个有推销功能的旅游聊天机器人

【LangchainStreamlit】旅游聊天机器人_langchain streamlit-CSDN博客 视频讲解地址:【Langchain Agent】带推销功能的旅游聊天机器人_哔哩哔哩_bilibili 体验地址: http://101.33.225.241:8503/ github地址:GitHub - jerry1900/langcha…...

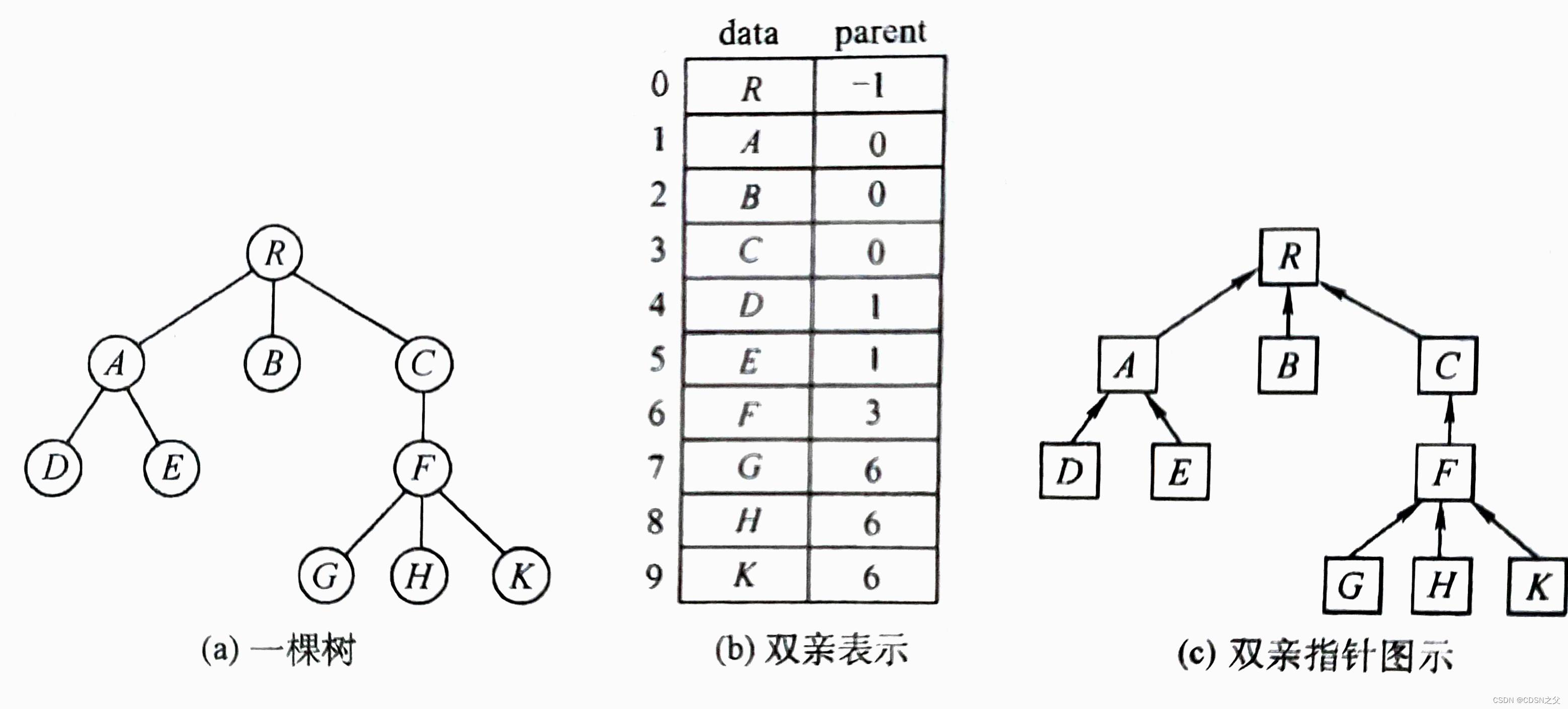

算法学习(十二)并查集

并查集 1. 概念 并查集主要用于解决一些 元素分组 问题,通过以下操作管理一系列不相交的集合: 合并(Union):把两个不相交的集合合并成一个集合 查询(Find):查询两个元素是否在同一…...

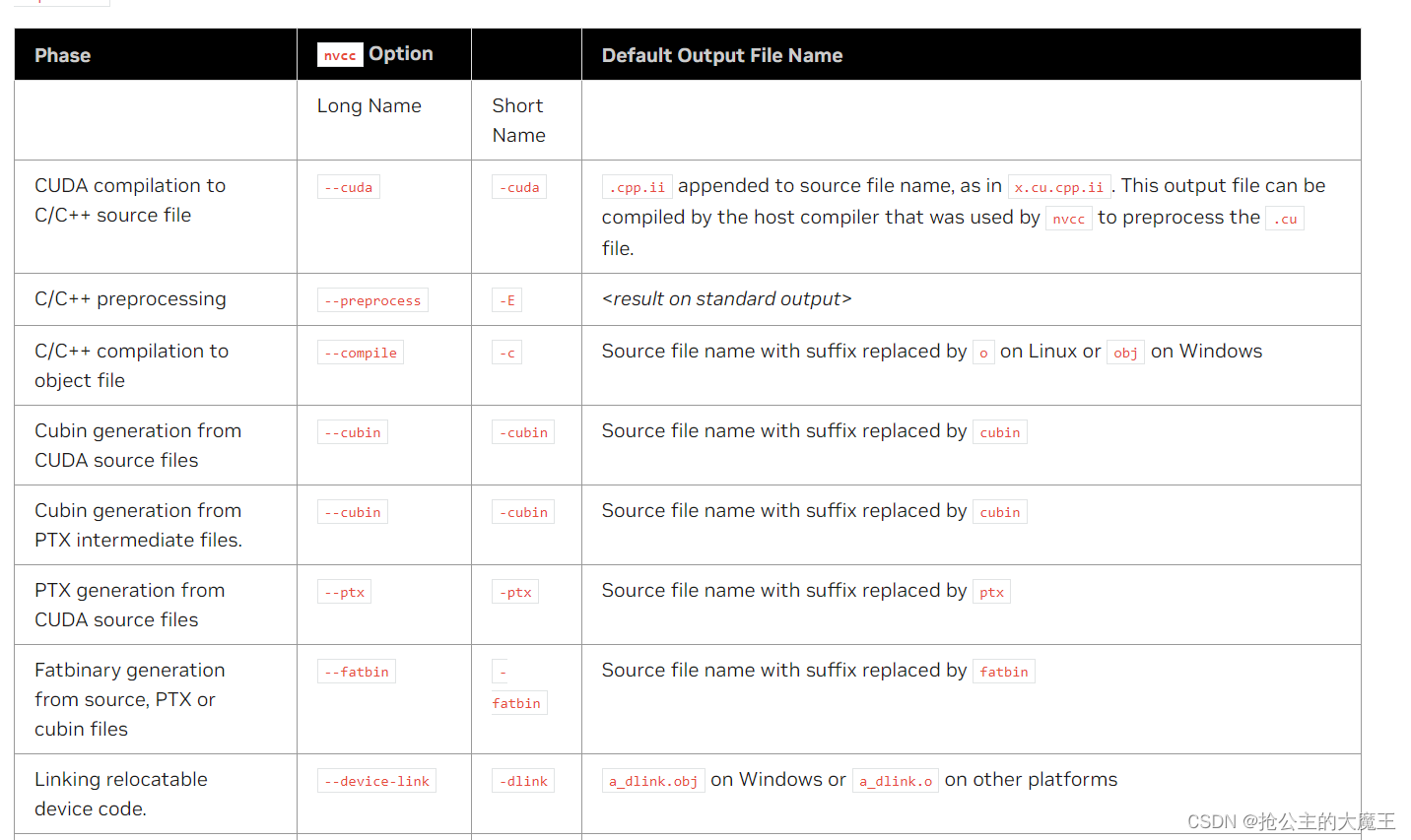

TensorRT及CUDA自学笔记003 NVCC及其命令行参数

TensorRT及CUDA自学笔记003 NVCC及其命令行参数 各位大佬,这是我的自学笔记,如有错误请指正,也欢迎在评论区学习交流,谢谢! NVCC是一种编译器,基于一些命令行参数可以将使用PTX或C语言编写的代码编译成可…...

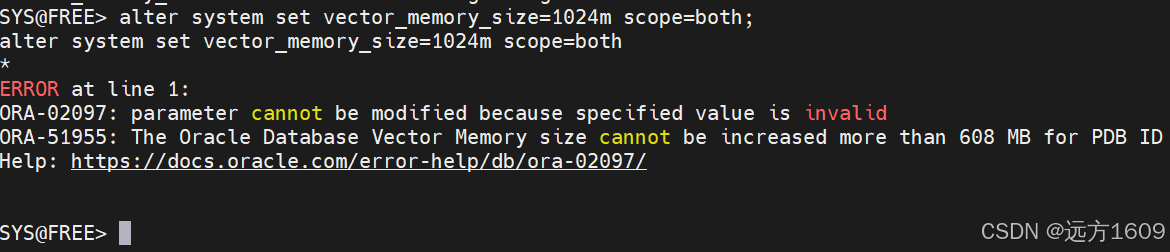

数据库管理-第154期 Oracle Vector DB AI-06(20240223)

数据库管理154期 2024-02-23 数据库管理-第154期 Oracle Vector DB & AI-06(20240223)1 环境准备创建表空间及用户TNSNAME配置 2 Oracle Vector的DML操作创建示例表插入基础数据DML操作UPDATE操作DELETE操作 3 多Vector列表4 固定维度的向量操作5 不…...

解决uni-app vue3 nvue中使用pinia页面空白问题

main.js中,最关键的就是Pinia要return出去的问题,至于原因嘛! 很忙啊,先用着吧 import App from ./App import * as Pinia from pinia import { createSSRApp } from vue export function createApp() {const app createSSRApp(App);app.us…...

不用加减乘除做加法

1.题目: 写一个函数,求两个整数之和,要求在函数体内不得使用、-、*、/四则运算符号。 数据范围:两个数都满足 −10≤�≤1000−10≤n≤1000 进阶:空间复杂度 �(1)O(1),时间复杂度 &am…...

旅游组团自驾游拼团系统 微信小程序python+java+node.js+php

随着社会的发展,旅游业已成为全球经济中发展势头最强劲和规模最大的产业之一。为方便驴友出行,寻找旅游伙伴,更好的规划旅游计划,开发一款自驾游拼团小程序,通过微信小程序发起自驾游拼团,吸收有车或无车驴…...

LeetCode 第41天 | 背包问题 二维数组 一维数组 416.分割等和子集 动态规划

46. 携带研究材料(第六期模拟笔试) 题目描述 小明是一位科学家,他需要参加一场重要的国际科学大会,以展示自己的最新研究成果。他需要带一些研究材料,但是他的行李箱空间有限。这些研究材料包括实验设备、文献资料和实…...

Python如何给视频添加音频和字幕

在Python中,给视频添加音频和字幕可以使用电影文件处理库MoviePy和字幕处理库Subtitles。下面将详细介绍如何使用这些库来实现视频的音频和字幕添加,包括必要的代码示例和详细解释。 环境准备 在开始之前,需要安装以下Python库:…...

HTML前端开发:JavaScript 常用事件详解

作为前端开发的核心,JavaScript 事件是用户与网页交互的基础。以下是常见事件的详细说明和用法示例: 1. onclick - 点击事件 当元素被单击时触发(左键点击) button.onclick function() {alert("按钮被点击了!&…...

MySQL中【正则表达式】用法

MySQL 中正则表达式通过 REGEXP 或 RLIKE 操作符实现(两者等价),用于在 WHERE 子句中进行复杂的字符串模式匹配。以下是核心用法和示例: 一、基础语法 SELECT column_name FROM table_name WHERE column_name REGEXP pattern; …...

UR 协作机器人「三剑客」:精密轻量担当(UR7e)、全能协作主力(UR12e)、重型任务专家(UR15)

UR协作机器人正以其卓越性能在现代制造业自动化中扮演重要角色。UR7e、UR12e和UR15通过创新技术和精准设计满足了不同行业的多样化需求。其中,UR15以其速度、精度及人工智能准备能力成为自动化领域的重要突破。UR7e和UR12e则在负载规格和市场定位上不断优化…...

Caliper 配置文件解析:config.yaml

Caliper 是一个区块链性能基准测试工具,用于评估不同区块链平台的性能。下面我将详细解释你提供的 fisco-bcos.json 文件结构,并说明它与 config.yaml 文件的关系。 fisco-bcos.json 文件解析 这个文件是针对 FISCO-BCOS 区块链网络的 Caliper 配置文件,主要包含以下几个部…...

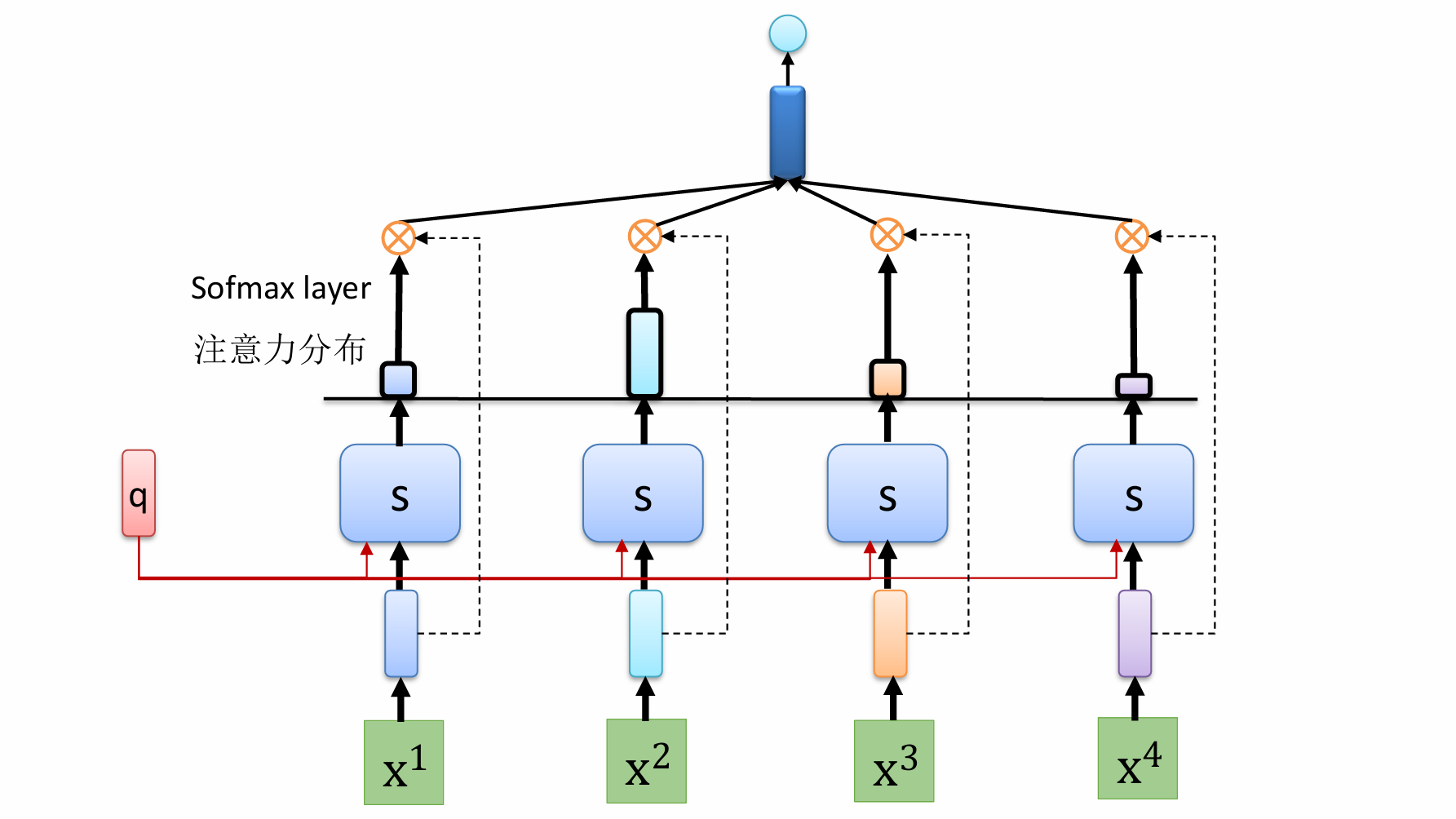

自然语言处理——循环神经网络

自然语言处理——循环神经网络 循环神经网络应用到基于机器学习的自然语言处理任务序列到类别同步的序列到序列模式异步的序列到序列模式 参数学习和长程依赖问题基于门控的循环神经网络门控循环单元(GRU)长短期记忆神经网络(LSTM)…...

在web-view 加载的本地及远程HTML中调用uniapp的API及网页和vue页面是如何通讯的?

uni-app 中 Web-view 与 Vue 页面的通讯机制详解 一、Web-view 简介 Web-view 是 uni-app 提供的一个重要组件,用于在原生应用中加载 HTML 页面: 支持加载本地 HTML 文件支持加载远程 HTML 页面实现 Web 与原生的双向通讯可用于嵌入第三方网页或 H5 应…...

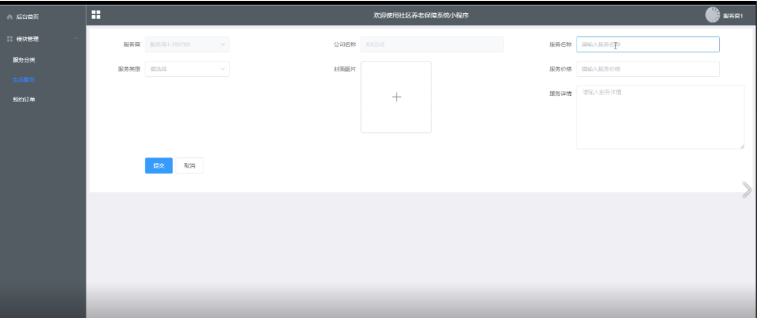

Springboot社区养老保险系统小程序

一、前言 随着我国经济迅速发展,人们对手机的需求越来越大,各种手机软件也都在被广泛应用,但是对于手机进行数据信息管理,对于手机的各种软件也是备受用户的喜爱,社区养老保险系统小程序被用户普遍使用,为方…...

10-Oracle 23 ai Vector Search 概述和参数

一、Oracle AI Vector Search 概述 企业和个人都在尝试各种AI,使用客户端或是内部自己搭建集成大模型的终端,加速与大型语言模型(LLM)的结合,同时使用检索增强生成(Retrieval Augmented Generation &#…...

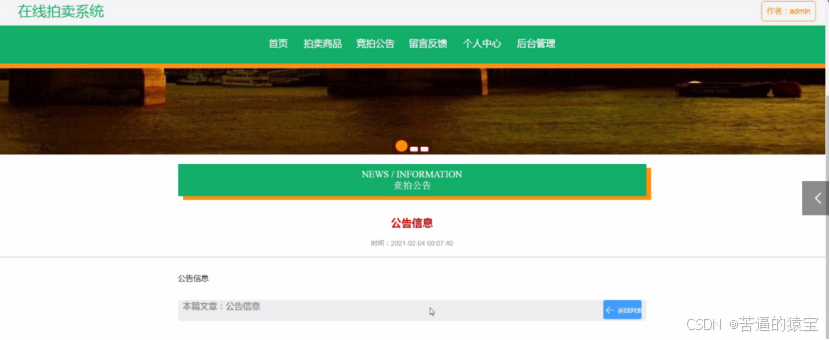

基于SpringBoot在线拍卖系统的设计和实现

摘 要 随着社会的发展,社会的各行各业都在利用信息化时代的优势。计算机的优势和普及使得各种信息系统的开发成为必需。 在线拍卖系统,主要的模块包括管理员;首页、个人中心、用户管理、商品类型管理、拍卖商品管理、历史竞拍管理、竞拍订单…...