K8S中Prometheus+Grafana监控

1.介绍

phometheus:当前一套非常流行的开源监控和报警系统。

运行原理:通过HTTP协议周期性抓取被监控组件的状态。输出被监控组件信息的HTTP接口称为exporter。

常用组件大部分都有exporter可以直接使用,比如haproxy,nginx,Mysql,Linux系统信息(包括磁盘、内存、CPU、网络等待)。

prometheus主要特点:

- 一个多维数据模型(时间序列由metrics指标名字和设置key/value键/值的labels构成)。

- 非常高效的存储,平均一个采样数据占~3.5字节左右,320万的时间序列,每30秒采样,保持60天,消耗磁盘大概228G。

- 一种灵活的查询语言(PromQL)。

- 无依赖存储,支持local和remote不同模型。

- 采用http协议,使用pull模式,拉取数据。

- 监控目标,可以采用服务器发现或静态配置的方式。

- 多种模式的图像和仪表板支持,图形化友好。

- 通过中间网关支持推送时间。

Grafana:是一个用于可视化大型测量数据的开源系统,可以对Prometheus 的指标数据进行可视化。

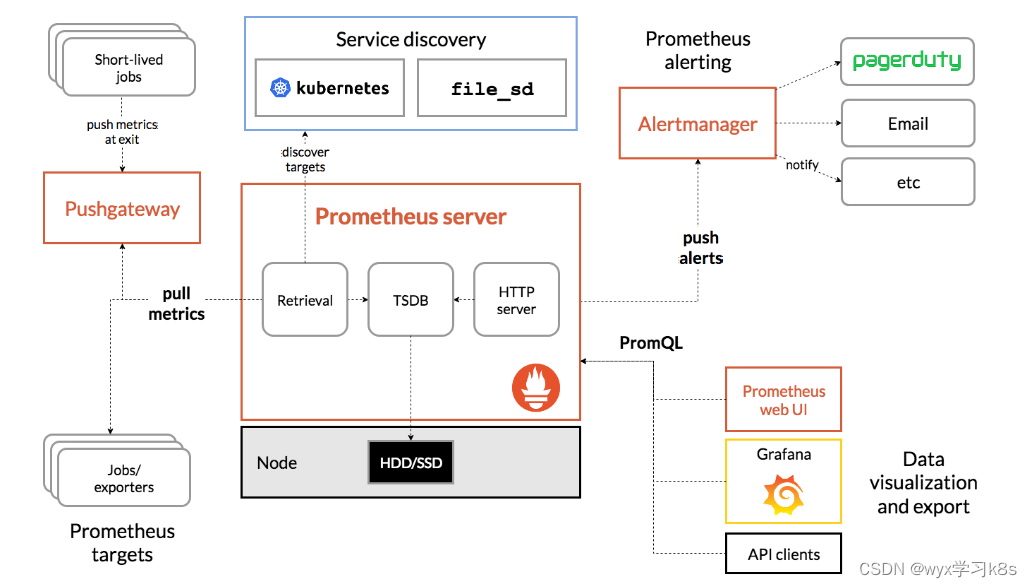

Prometheus的体系结构图:

Prometheus直接或通过中间推送网关从已检测的作业中删除指标,以处理短暂的作业。它在本地存储所有报废的样本,并对这些数据运行规则,以汇总和记录现有数据中的新时间序列,或生成警报。Grafana或其他API使用者可以用来可视化收集的数据。

2.部署prometheus

2.1 使用RBAC进行授权

[root@k8s-node01 k8s-prometheus]# cat prometheus-rbac.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: prometheusnamespace: kube-systemlabels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:name: prometheuslabels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

rules:- apiGroups:- ""resources:- nodes- nodes/metrics- services- endpoints- podsverbs:- get- list- watch- apiGroups:- ""resources:- configmapsverbs:- get- nonResourceURLs:- "/metrics"verbs:- get

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:name: prometheuslabels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

roleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRolename: prometheus

subjects:

- kind: ServiceAccountname: prometheusnamespace: kube-system[root@k8s-node01 k8s-prometheus]# kubectl apply -f prometheus-rbac.yaml

serviceaccount/prometheus created

clusterrole.rbac.authorization.k8s.io/prometheus created

clusterrolebinding.rbac.authorization.k8s.io/prometheus created2.2 配置管理

使用Configmap保存不需要加密配置信息,yaml中修改对应的NODE IP即可。

[root@k8s-node01 k8s-prometheus]# cat prometheus-configmap.yaml

# Prometheus configuration format https://prometheus.io/docs/prometheus/latest/configuration/configuration/

apiVersion: v1

kind: ConfigMap

metadata:name: prometheus-confignamespace: kube-system labels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: EnsureExists

data:prometheus.yml: |rule_files:- /etc/config/rules/*.rulesscrape_configs:- job_name: prometheusstatic_configs:- targets:- localhost:9090- job_name: kubernetes-nodesscrape_interval: 30sstatic_configs:- targets:- 11.0.1.13:9100- 11.0.1.14:9100- job_name: kubernetes-apiserverskubernetes_sd_configs:- role: endpointsrelabel_configs:- action: keepregex: default;kubernetes;httpssource_labels:- __meta_kubernetes_namespace- __meta_kubernetes_service_name- __meta_kubernetes_endpoint_port_namescheme: httpstls_config:ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crtinsecure_skip_verify: truebearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token- job_name: kubernetes-nodes-kubeletkubernetes_sd_configs:- role: noderelabel_configs:- action: labelmapregex: __meta_kubernetes_node_label_(.+)scheme: httpstls_config:ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crtinsecure_skip_verify: truebearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token- job_name: kubernetes-nodes-cadvisorkubernetes_sd_configs:- role: noderelabel_configs:- action: labelmapregex: __meta_kubernetes_node_label_(.+)- target_label: __metrics_path__replacement: /metrics/cadvisorscheme: httpstls_config:ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crtinsecure_skip_verify: truebearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token- job_name: kubernetes-service-endpointskubernetes_sd_configs:- role: endpointsrelabel_configs:- action: keepregex: truesource_labels:- __meta_kubernetes_service_annotation_prometheus_io_scrape- action: replaceregex: (https?)source_labels:- __meta_kubernetes_service_annotation_prometheus_io_schemetarget_label: __scheme__- action: replaceregex: (.+)source_labels:- __meta_kubernetes_service_annotation_prometheus_io_pathtarget_label: __metrics_path__- action: replaceregex: ([^:]+)(?::\d+)?;(\d+)replacement: $1:$2source_labels:- __address__- __meta_kubernetes_service_annotation_prometheus_io_porttarget_label: __address__- action: labelmapregex: __meta_kubernetes_service_label_(.+)- action: replacesource_labels:- __meta_kubernetes_namespacetarget_label: kubernetes_namespace- action: replacesource_labels:- __meta_kubernetes_service_nametarget_label: kubernetes_name- job_name: kubernetes-serviceskubernetes_sd_configs:- role: servicemetrics_path: /probeparams:module:- http_2xxrelabel_configs:- action: keepregex: truesource_labels:- __meta_kubernetes_service_annotation_prometheus_io_probe- source_labels:- __address__target_label: __param_target- replacement: blackboxtarget_label: __address__- source_labels:- __param_targettarget_label: instance- action: labelmapregex: __meta_kubernetes_service_label_(.+)- source_labels:- __meta_kubernetes_namespacetarget_label: kubernetes_namespace- source_labels:- __meta_kubernetes_service_nametarget_label: kubernetes_name- job_name: kubernetes-podskubernetes_sd_configs:- role: podrelabel_configs:- action: keepregex: truesource_labels:- __meta_kubernetes_pod_annotation_prometheus_io_scrape- action: replaceregex: (.+)source_labels:- __meta_kubernetes_pod_annotation_prometheus_io_pathtarget_label: __metrics_path__- action: replaceregex: ([^:]+)(?::\d+)?;(\d+)replacement: $1:$2source_labels:- __address__- __meta_kubernetes_pod_annotation_prometheus_io_porttarget_label: __address__- action: labelmapregex: __meta_kubernetes_pod_label_(.+)- action: replacesource_labels:- __meta_kubernetes_namespacetarget_label: kubernetes_namespace- action: replacesource_labels:- __meta_kubernetes_pod_nametarget_label: kubernetes_pod_namealerting:alertmanagers:- static_configs:- targets: ["alertmanager:80"][root@k8s-node01 k8s-prometheus]# kubectl apply -f prometheus-configmap.yaml

configmap/prometheus-config created2.3 有状态部署prometheus

这里使用storageclass进行动态供给,给prometheus的数据进行持久化

[root@k8s-node01 k8s-prometheus]# cat prometheus-statefulset.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:name: prometheus namespace: kube-systemlabels:k8s-app: prometheuskubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcileversion: v2.2.1

spec:serviceName: "prometheus"replicas: 1podManagementPolicy: "Parallel"updateStrategy:type: "RollingUpdate"selector:matchLabels:k8s-app: prometheustemplate:metadata:labels:k8s-app: prometheusannotations:scheduler.alpha.kubernetes.io/critical-pod: ''spec:priorityClassName: system-cluster-criticalserviceAccountName: prometheusinitContainers:- name: "init-chown-data"image: "busybox:latest"imagePullPolicy: "IfNotPresent"command: ["chown", "-R", "65534:65534", "/data"]volumeMounts:- name: prometheus-datamountPath: /datasubPath: ""containers:- name: prometheus-server-configmap-reloadimage: "jimmidyson/configmap-reload:v0.1"imagePullPolicy: "IfNotPresent"args:- --volume-dir=/etc/config- --webhook-url=http://localhost:9090/-/reloadvolumeMounts:- name: config-volumemountPath: /etc/configreadOnly: trueresources:limits:cpu: 10mmemory: 10Mirequests:cpu: 10mmemory: 10Mi- name: prometheus-serverimage: "prom/prometheus:v2.2.1"imagePullPolicy: "IfNotPresent"args:- --config.file=/etc/config/prometheus.yml- --storage.tsdb.path=/data- --web.console.libraries=/etc/prometheus/console_libraries- --web.console.templates=/etc/prometheus/consoles- --web.enable-lifecycleports:- containerPort: 9090readinessProbe:httpGet:path: /-/readyport: 9090initialDelaySeconds: 30timeoutSeconds: 30livenessProbe:httpGet:path: /-/healthyport: 9090initialDelaySeconds: 30timeoutSeconds: 30# based on 10 running nodes with 30 pods eachresources:limits:cpu: 200mmemory: 1000Mirequests:cpu: 200mmemory: 1000MivolumeMounts:- name: config-volumemountPath: /etc/config- name: prometheus-datamountPath: /datasubPath: ""- name: prometheus-rulesmountPath: /etc/config/rulesterminationGracePeriodSeconds: 300volumes:- name: config-volumeconfigMap:name: prometheus-config- name: prometheus-rulesconfigMap:name: prometheus-rulesvolumeClaimTemplates:- metadata:name: prometheus-dataspec:storageClassName: managed-nfs-storage accessModes:- ReadWriteOnceresources:requests:storage: "1Gi"[root@k8s-node01 k8s-prometheus]# kubectl apply -f prometheus-statefulset.yaml

Warning: spec.template.metadata.annotations[scheduler.alpha.kubernetes.io/critical-pod]: non-functional in v1.16+; use the "priorityClassName" field instead

statefulset.apps/prometheus created[root@k8s-node01 k8s-prometheus]#kubectl get pod -n kube-system |grep prometheus

NAME READY STATUS RESTARTS AGE

prometheus-0 2/2 Running 6 1d2.4 创建service暴露访问端口

[root@k8s-node01 k8s-prometheus]# cat prometheus-service.yaml

kind: Service

apiVersion: v1

metadata: name: prometheusnamespace: kube-systemlabels: kubernetes.io/name: "Prometheus"kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

spec: type: NodePortports: - name: http port: 9090protocol: TCPtargetPort: 9090nodePort: 30090selector: k8s-app: prometheus[root@k8s-master prometheus-k8s]# kubectl apply -f prometheus-service.yaml2.5 web访问

使用任意一个NodeIP加端口进行访问,访问地址:http://NodeIP:Port

3.部署Grafana

[root@k8s-master prometheus-k8s]# cat grafana.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:name: grafananamespace: kube-system

spec:serviceName: "grafana"replicas: 1selector:matchLabels:app: grafanatemplate:metadata:labels:app: grafanaspec:containers:- name: grafanaimage: grafana/grafanaports:- containerPort: 3000protocol: TCPresources:limits:cpu: 100mmemory: 256Mirequests:cpu: 100mmemory: 256MivolumeMounts:- name: grafana-datamountPath: /var/lib/grafanasubPath: grafanasecurityContext:fsGroup: 472runAsUser: 472volumeClaimTemplates:- metadata:name: grafana-dataspec:storageClassName: managed-nfs-storage #和prometheus使用同一个存储类accessModes:- ReadWriteOnceresources:requests:storage: "1Gi"---apiVersion: v1

kind: Service

metadata:name: grafananamespace: kube-system

spec:type: NodePortports:- port : 80targetPort: 3000nodePort: 30091selector:app: grafana[root@k8s-master prometheus-k8s]#kubectl apply -f grafana.yaml访问方式:

使用任意一个NodeIP加端口进行访问,访问地址:http://NodeIP:Port ,默认账号密码为admin

4.监控K8S集群中Pod、Node、资源对象数据的方法

Pod:

kubelet的节点使用cAdvisor提供的metrics接口获取该节点所有Pod和容器相关的性能指标数据,安装kubelet默认就开启了

Node:

需要使用node_exporter收集器采集节点资源利用率。

使用node_exporter.sh脚本分别在所有服务器上部署node_exporter收集器,不需要修改可直接运行脚本

[root@k8s-master prometheus-k8s]# cat node_exporter.sh

#!/bin/bashwget https://github.com/prometheus/node_exporter/releases/download/v0.17.0/node_exporter-0.17.0.linux-amd64.tar.gztar zxf node_exporter-0.17.0.linux-amd64.tar.gz

mv node_exporter-0.17.0.linux-amd64 /usr/local/node_exportercat <<EOF >/usr/lib/systemd/system/node_exporter.service

[Unit]

Description=https://prometheus.io[Service]

Restart=on-failure

ExecStart=/usr/local/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service[Install]

WantedBy=multi-user.target

EOFsystemctl daemon-reload

systemctl enable node_exporter

systemctl restart node_exporter

[root@k8s-master prometheus-k8s]# ./node_exporter.sh[root@k8s-master prometheus-k8s]# ps -ef|grep node_exporter

root 6227 1 0 Oct08 ? 00:06:43 /usr/local/node_exporter/node_exporter --collector.systemd --collector.systemd.unit-whitelist=(docker|kubelet|kube-proxy|flanneld).service

root 118269 117584 0 23:27 pts/0 00:00:00 grep --color=auto node_exporter资源对象:

kube-state-metrics采集了k8s中各种资源对象的状态信息,只需要在master节点部署就行

1.创建rbac的yaml对metrics进行授权

[root@k8s-master prometheus-k8s]# cat kube-state-metrics-rbac.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: kube-state-metricsnamespace: kube-systemlabels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:name: kube-state-metricslabels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups: [""]resources:- configmaps- secrets- nodes- pods- services- resourcequotas- replicationcontrollers- limitranges- persistentvolumeclaims- persistentvolumes- namespaces- endpointsverbs: ["list", "watch"]

- apiGroups: ["extensions"]resources:- daemonsets- deployments- replicasetsverbs: ["list", "watch"]

- apiGroups: ["apps"]resources:- statefulsetsverbs: ["list", "watch"]

- apiGroups: ["batch"]resources:- cronjobs- jobsverbs: ["list", "watch"]

- apiGroups: ["autoscaling"]resources:- horizontalpodautoscalersverbs: ["list", "watch"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:name: kube-state-metrics-resizernamespace: kube-systemlabels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups: [""]resources:- podsverbs: ["get"]

- apiGroups: ["extensions"]resources:- deploymentsresourceNames: ["kube-state-metrics"]verbs: ["get", "update"]

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:name: kube-state-metricslabels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

roleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRolename: kube-state-metrics

subjects:

- kind: ServiceAccountname: kube-state-metricsnamespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:name: kube-state-metricsnamespace: kube-systemlabels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

roleRef:apiGroup: rbac.authorization.k8s.iokind: Rolename: kube-state-metrics-resizer

subjects:

- kind: ServiceAccountname: kube-state-metricsnamespace: kube-system

[root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-rbac.yaml2.编写Deployment和ConfigMap的yaml进行metrics pod部署,不需要进行修改

[root@k8s-master prometheus-k8s]# cat kube-state-metrics-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:name: kube-state-metricsnamespace: kube-systemlabels:k8s-app: kube-state-metricskubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcileversion: v1.3.0

spec:selector:matchLabels:k8s-app: kube-state-metricsversion: v1.3.0replicas: 1template:metadata:labels:k8s-app: kube-state-metricsversion: v1.3.0annotations:scheduler.alpha.kubernetes.io/critical-pod: ''spec:priorityClassName: system-cluster-criticalserviceAccountName: kube-state-metricscontainers:- name: kube-state-metricsimage: lizhenliang/kube-state-metrics:v1.3.0ports:- name: http-metricscontainerPort: 8080- name: telemetrycontainerPort: 8081readinessProbe:httpGet:path: /healthzport: 8080initialDelaySeconds: 5timeoutSeconds: 5- name: addon-resizerimage: lizhenliang/addon-resizer:1.8.3resources:limits:cpu: 100mmemory: 30Mirequests:cpu: 100mmemory: 30Mienv:- name: MY_POD_NAMEvalueFrom:fieldRef:fieldPath: metadata.name- name: MY_POD_NAMESPACEvalueFrom:fieldRef:fieldPath: metadata.namespacevolumeMounts:- name: config-volumemountPath: /etc/configcommand:- /pod_nanny- --config-dir=/etc/config- --container=kube-state-metrics- --cpu=100m- --extra-cpu=1m- --memory=100Mi- --extra-memory=2Mi- --threshold=5- --deployment=kube-state-metricsvolumes:- name: config-volumeconfigMap:name: kube-state-metrics-config

---

# Config map for resource configuration.

apiVersion: v1

kind: ConfigMap

metadata:name: kube-state-metrics-confignamespace: kube-systemlabels:k8s-app: kube-state-metricskubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcile

data:NannyConfiguration: |-apiVersion: nannyconfig/v1alpha1kind: NannyConfiguration

[root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-deployment.yaml3.编写Service的yaml对metrics进行端口暴露

[root@k8s-master prometheus-k8s]# cat kube-state-metrics-service.yaml

apiVersion: v1

kind: Service

metadata:name: kube-state-metricsnamespace: kube-systemlabels:kubernetes.io/cluster-service: "true"addonmanager.kubernetes.io/mode: Reconcilekubernetes.io/name: "kube-state-metrics"annotations:prometheus.io/scrape: 'true'

spec:ports:- name: http-metricsport: 8080targetPort: http-metricsprotocol: TCP- name: telemetryport: 8081targetPort: telemetryprotocol: TCPselector:k8s-app: kube-state-metrics

[root@k8s-master prometheus-k8s]# kubectl apply -f kube-state-metrics-service.yaml[root@k8s-master prometheus-k8s]# kubectl get pod,svc -n kube-system

NAME READY STATUS RESTARTS AGE

pod/alertmanager-5d75d5688f-fmlq6 2/2 Running 0 9dpod/coredns-5bd5f9dbd9-wv45t 1/1 Running 1 9dpod/grafana-0 1/1 Running 2 15dpod/kube-state-metrics-7c76bdbf68-kqqgd 2/2 Running 6 14dpod/kubernetes-dashboard-7d77666777-d5ng4 1/1 Running 5 16dpod/prometheus-0 2/2 Running 6 15dNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/alertmanager ClusterIP 10.0.0.207 <none> 80/TCP 13dservice/grafana NodePort 10.0.0.74 <none> 80:30091/TCP 15dservice/kube-dns ClusterIP 10.0.0.2 <none> 53/UDP,53/TCP 14dservice/kube-state-metrics ClusterIP 10.0.0.194 <none> 8080/TCP,8081/TCP 14dservice/kubernetes-dashboard NodePort 10.0.0.127 <none> 443:30001/TCP 17dservice/prometheus NodePort 10.0.0.33 <none> 9090:30090/TCP 14d报错一:进行2.1步骤时报错:ensure CRDs are installed first

[root@k8s-node01 k8s-prometheus]# kubectl apply -f prometheus-rbac.yaml

serviceaccount/prometheus unchanged

resource mapping not found for name: "prometheus" namespace: "" from "prometheus-rbac.yaml": no matches for kind "ClusterRole" in version "rbac.authorization.k8s.io/v1beta1"

ensure CRDs are installed first

resource mapping not found for name: "prometheus" namespace: "" from "prometheus-rbac.yaml": no matches for kind "ClusterRoleBinding" in version "rbac.authorization.k8s.io/v1beta1"

ensure CRDs are installed first使用附件的原yaml会报错,原因是因为api过期,需要手动修改 apiVersion: rbac.authorization.k8s.io/v1beta1为apiVersion: rbac.authorization.k8s.io/v1

相关文章:

K8S中Prometheus+Grafana监控

1.介绍 phometheus:当前一套非常流行的开源监控和报警系统。 运行原理:通过HTTP协议周期性抓取被监控组件的状态。输出被监控组件信息的HTTP接口称为exporter。 常用组件大部分都有exporter可以直接使用,比如haproxy,nginx,Mysql,Linux系统信…...

)

题解:CF1968F(Equal XOR Segments)

题解:CF1968F(Equal XOR Segments) 题目翻译:定义一个序列是好,当且仅当可以将其分成大于 1 1 1 份,使得每个部分的异或和相等。现在给定一个长度为 n n n 的序列 a a a,以及 q q q 次查询…...

Python操作MySQL实战

文章导读 本文用于巩固Pymysql操作MySQL与MySQL操作的知识点,实现一个简易的音乐播放器,拟实现的功能包括:用户登录,窗口显示,加载本地音乐,加入和删除播放列表,播放音乐。 点击此处获取参考源…...

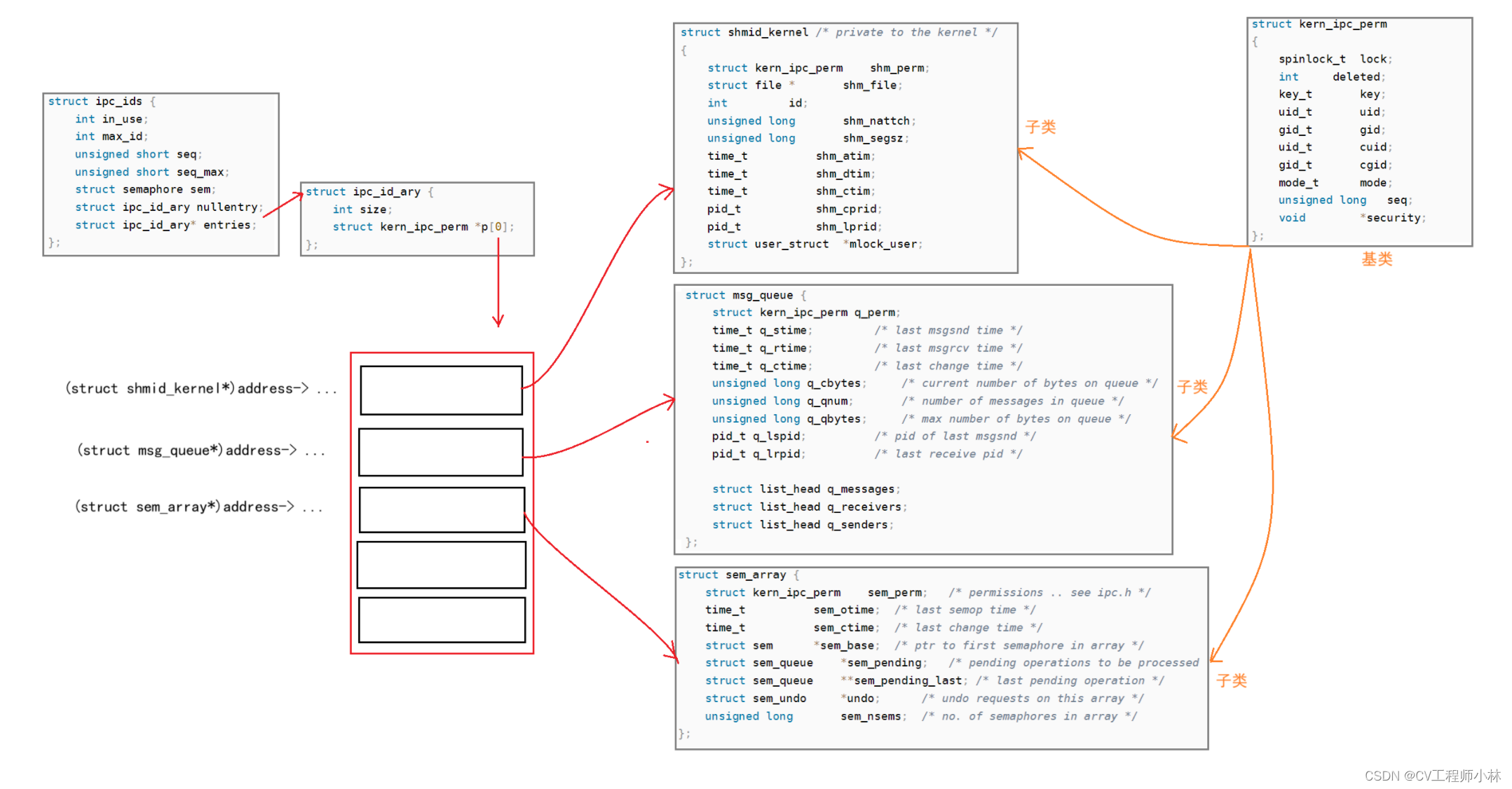

【Linux系统】进程间通信

本篇博客整理了进程间通信的方式管道、 system V IPC的原理,结合大量的系统调用接口,和代码示例,旨在让读者透过进程间通信去体会操作系统的设计思想和管理手段。 目录 一、进程间通信 二、管道 1.匿名管道 1.1-通信原理 1.2-系统调用 …...

北大国际医院腹膜后纤维化课题组 多学科协作开辟治疗新径

腹膜后纤维化(Retroperitoneal Fibrosis,简称RPF)是一种罕见的自身免疫性疾病,其核心特征是纤维组织的异常增生与硬化。这种疾病主要影响肾脏下方的腹主动脉和髂动脉区域,增生的纤维组织会逐渐压迫周围的输尿管和下腔静脉,从而导致一系列并发症,包括主动脉瘤、肾功能衰竭等,甚至…...

面试数据库八股文十问十答第七期

面试数据库八股文十问十答第七期 作者:程序员小白条,个人博客 相信看了本文后,对你的面试是有一定帮助的!关注专栏后就能收到持续更新! ⭐点赞⭐收藏⭐不迷路!⭐ 1)索引是越多越好吗ÿ…...

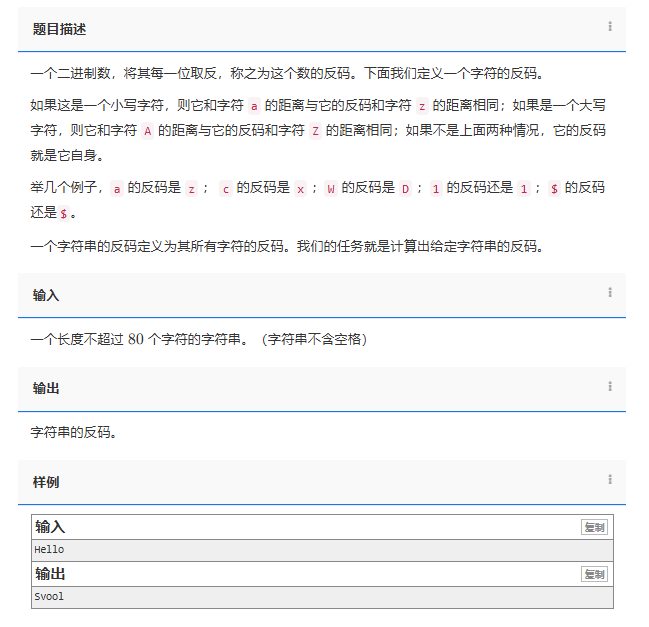

【C++题解】1133. 字符串的反码

问题:1133. 字符串的反码 类型:字符串 题目描述: 一个二进制数,将其每一位取反,称之为这个数的反码。下面我们定义一个字符的反码。 如果这是一个小写字符,则它和字符 a 的距离与它的反码和字符 z 的距离…...

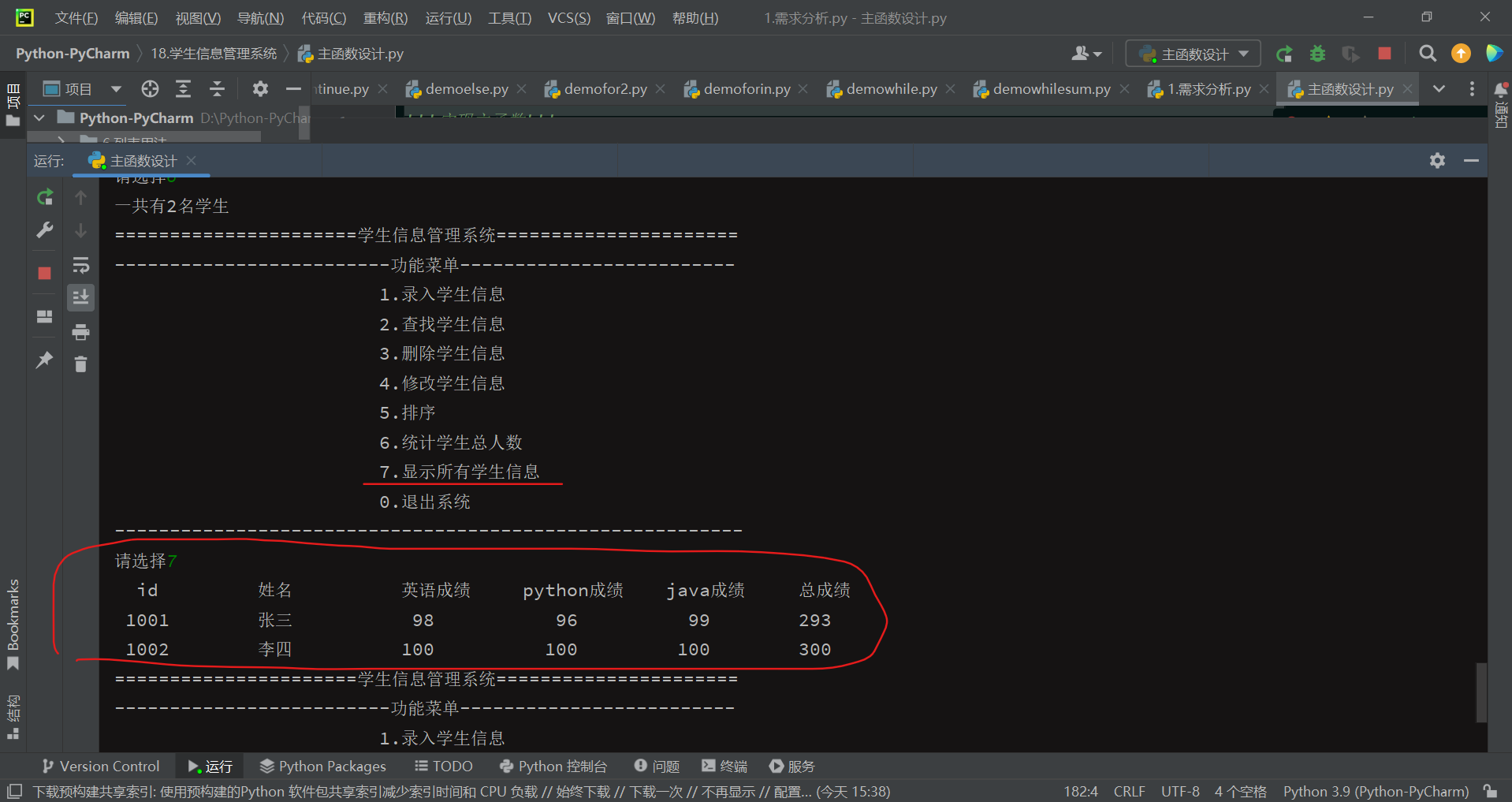

【Python编程实战】基于Python语言实现学生信息管理系统

🎩 欢迎来到技术探索的奇幻世界👨💻 📜 个人主页:一伦明悦-CSDN博客 ✍🏻 作者简介: C软件开发、Python机器学习爱好者 🗣️ 互动与支持:💬评论 &…...

AI网络爬虫:批量爬取电视猫上面的《庆余年》分集剧情

电视猫上面有《庆余年》分集剧情,如何批量爬取下来呢? 先找到每集的链接地址,都在这个class"epipage clear"的div标签里面的li标签下面的a标签里面: <a href"/drama/Yy0wHDA/episode">1</a> 这个…...

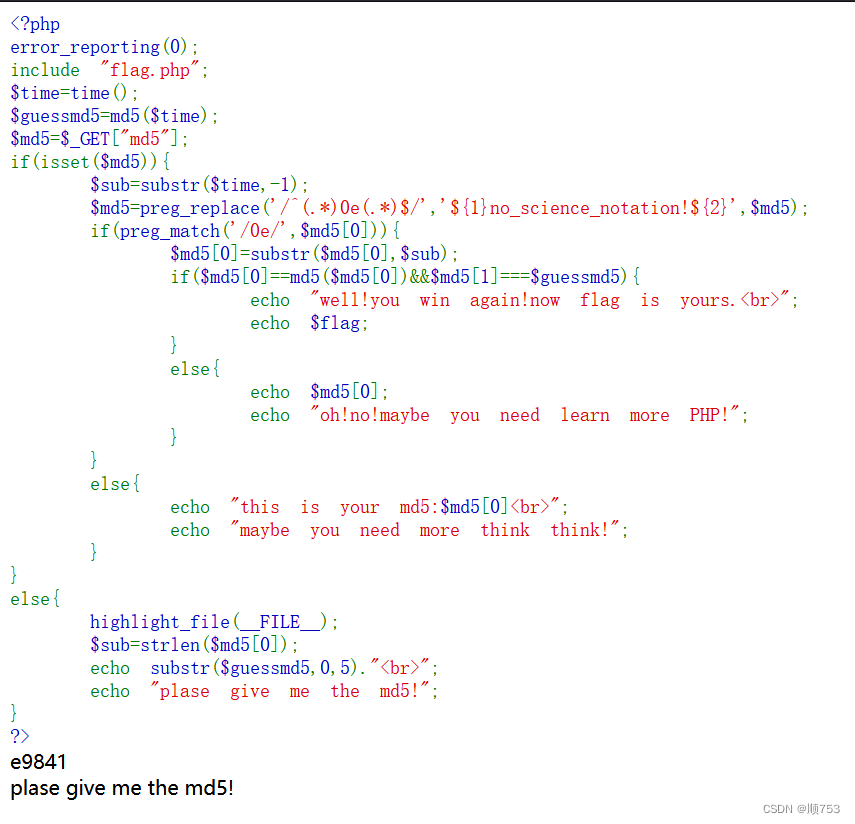

md5强弱碰撞

一,类型。 1.弱比较 php中的""和""在进行比较时,数字和字符串比较或者涉及到数字内容的字符串,则字符串会被转换为数值并且比较按照数值来进行。按照此理,我们可以上传md5编码后是0e的字符串,在…...

【Docker故障处理篇】运行容器报错“docker: failed to register layer...file exists.”解决方法

【Docker故障处理篇】运行容器报错“docker: failed to register layer...file exists.” 一、Docker环境介绍2.1 本次环境介绍2.2 本次实践介绍二、故障现象2.1 运行容器消失2.2 重新运行容器报错三、故障分析四、故障处理4.1 停止 Docker 服务:4.2 备份重要数据4.3 清理冲突…...

一面面经)

小红书-社区搜索部 (NLP、CV算法实习生) 一面面经

😄 整个流程按如下问题展开,用时60min左右面试官人挺好,前半部分问问题,后半部分coding一道题。 各位有什么问题可以直接评论区留言,24小时内必回信息,放心~ 文章目录 1、自我介绍2、介绍下项目:微信-多模态小视频分类2.1、看你用了cross-att来融合多模态信息,cross…...

解读makefile中的.PHONY

在 Makefile 中,.PHONY 是一个特殊的目标,用于声明伪目标(phony target)。伪目标是指并不代表实际构建结果的目标,而是用来触发特定动作或命令的标识。通常情况下,.PHONY 会被用来声明一组需要执行的动作&a…...

linux配置防火墙端口

配置防火墙,添加或删除端口,需要有root权限。 防火墙常用命令如下: 1.查看防火墙状态: systemctl status firewalld active(running):开启状态,正在运行中 inactive(dead):关闭状态ÿ…...

sklearn线性回归--岭回归

sklearn线性回归--岭回归 岭回归也是一种用于回归的线性模型,因此它的预测公式与普通最小二乘法相同。但在岭回归中,对系数(w)的选择不仅要在训练数据上得到好的预测结果,而且还要拟合附加约束,使系数尽量小…...

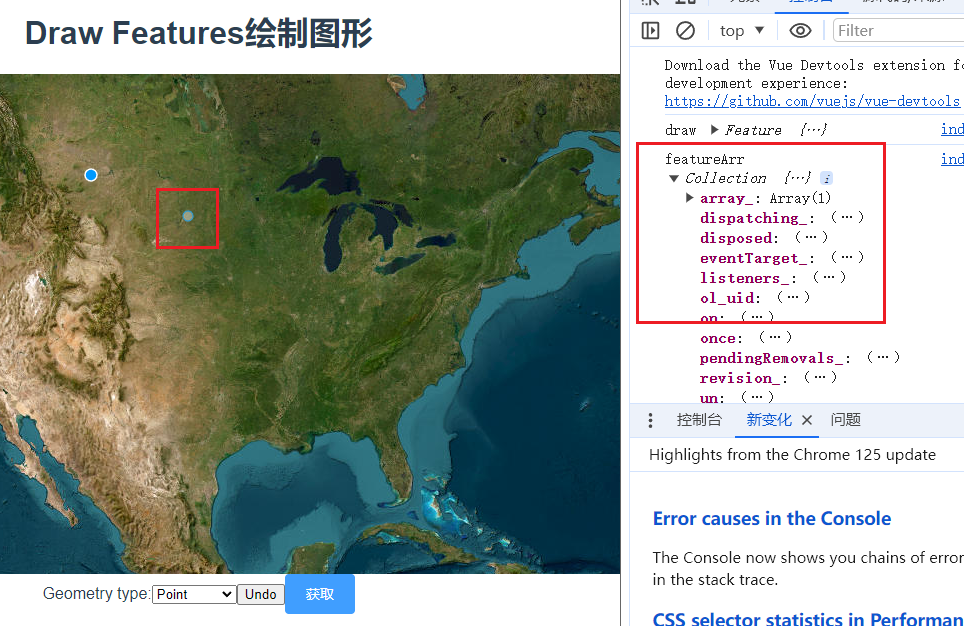

三十一、openlayers官网示例Draw Features解析——在地图上自定义绘制点、线、多边形、圆形并获取图形数据

官网demo地址: Draw Features 先初始化地图,准备一个空的矢量图层,用于显示绘制的图形。 initLayers() {const raster new TileLayer({source: new XYZ({url: "https://server.arcgisonline.com/ArcGIS/rest/services/World_Imagery/…...

医疗科技:UWB模块为智能医疗设备带来的变革

随着医疗科技的不断发展和人们健康意识的提高,智能医疗设备的应用越来越广泛。超宽带(UWB)技术作为一种新兴的定位技术,正在引领着智能医疗设备的变革。UWB模块作为UWB技术的核心组成部分,在智能医疗设备中发挥着越来越…...

)

Java面试题大全(从基础到框架,中间件,持续更新~~~)

从Java基础到数据库,Spring,MyBatis,消息中间件,微服务解决全部Java面试过程中的问题。(持续更新~~) Java基础 2024最新Java面试题——java基础 MySQL基础 mysql基础知识——适合不太熟悉数据库知识的小…...

零知识证明在隐私保护和身份验证中的应用

PrimiHub一款由密码学专家团队打造的开源隐私计算平台,专注于分享数据安全、密码学、联邦学习、同态加密等隐私计算领域的技术和内容。 隐私保护和身份验证是现代社会中的关键问题,尤其是在数字化时代。零知识证明(Zero-Knowledge Proofs&…...

15.微信小程序之async-validator 基本使用

async-validator是一个基于 JavaScript 的表单验证库,支持异步验证规则和自定义验证规则 主流的 UI 组件库 Ant-design 和 Element中的表单验证都是基于 async-validator 使用 async-validator 可以方便地构建表单验证逻辑,使得错误提示信息更加友好和…...

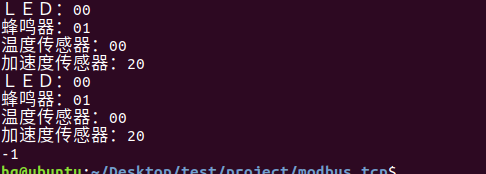

网络编程(Modbus进阶)

思维导图 Modbus RTU(先学一点理论) 概念 Modbus RTU 是工业自动化领域 最广泛应用的串行通信协议,由 Modicon 公司(现施耐德电气)于 1979 年推出。它以 高效率、强健性、易实现的特点成为工业控制系统的通信标准。 包…...

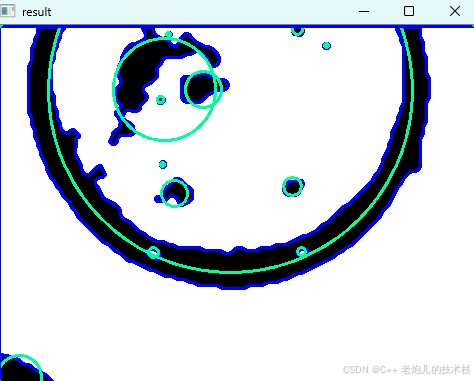

利用最小二乘法找圆心和半径

#include <iostream> #include <vector> #include <cmath> #include <Eigen/Dense> // 需安装Eigen库用于矩阵运算 // 定义点结构 struct Point { double x, y; Point(double x_, double y_) : x(x_), y(y_) {} }; // 最小二乘法求圆心和半径 …...

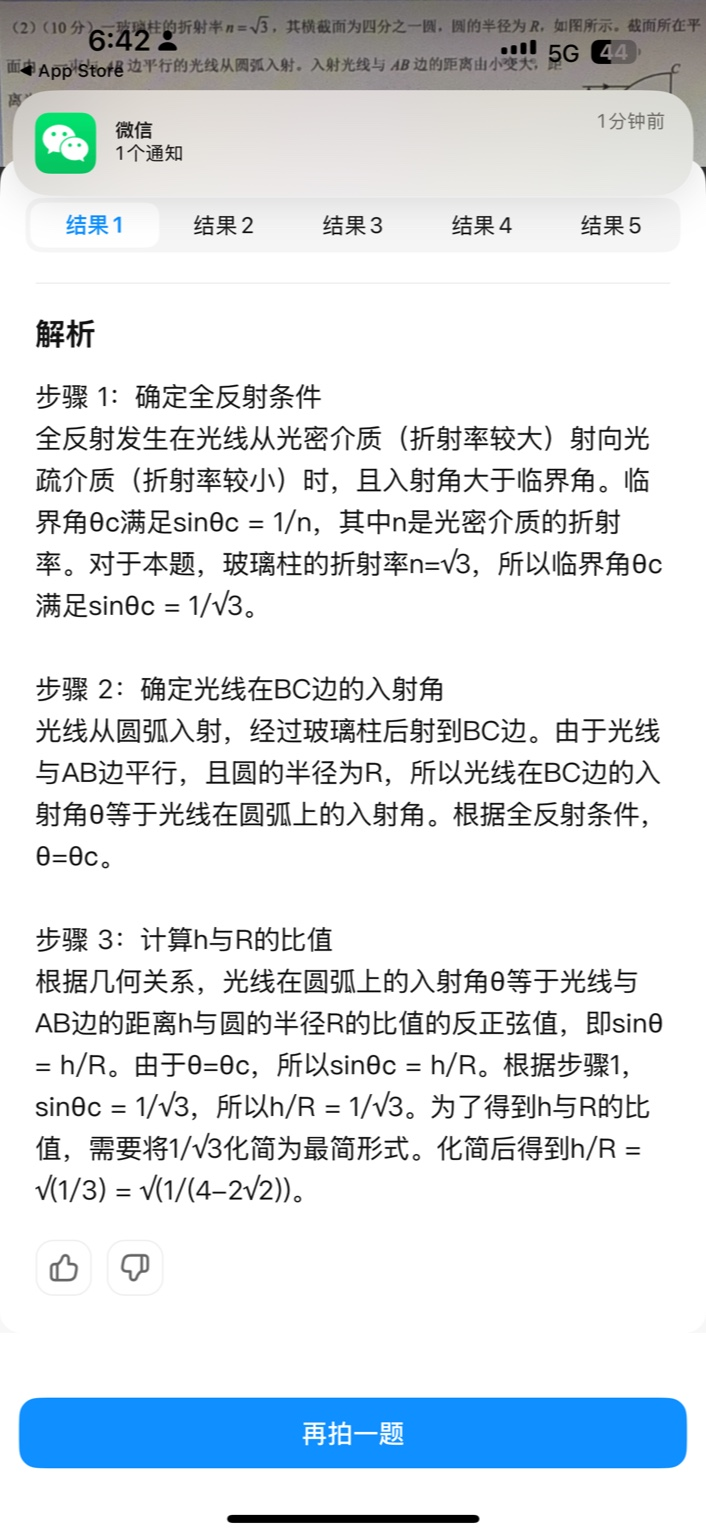

【大模型RAG】拍照搜题技术架构速览:三层管道、两级检索、兜底大模型

摘要 拍照搜题系统采用“三层管道(多模态 OCR → 语义检索 → 答案渲染)、两级检索(倒排 BM25 向量 HNSW)并以大语言模型兜底”的整体框架: 多模态 OCR 层 将题目图片经过超分、去噪、倾斜校正后,分别用…...

超短脉冲激光自聚焦效应

前言与目录 强激光引起自聚焦效应机理 超短脉冲激光在脆性材料内部加工时引起的自聚焦效应,这是一种非线性光学现象,主要涉及光学克尔效应和材料的非线性光学特性。 自聚焦效应可以产生局部的强光场,对材料产生非线性响应,可能…...

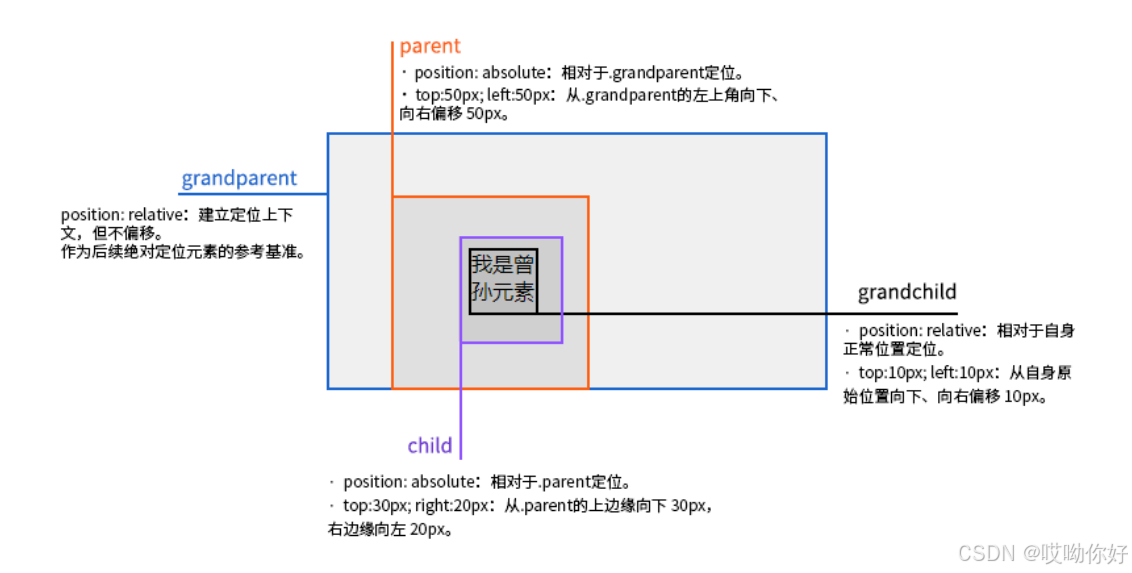

【CSS position 属性】static、relative、fixed、absolute 、sticky详细介绍,多层嵌套定位示例

文章目录 ★ position 的五种类型及基本用法 ★ 一、position 属性概述 二、position 的五种类型详解(初学者版) 1. static(默认值) 2. relative(相对定位) 3. absolute(绝对定位) 4. fixed(固定定位) 5. sticky(粘性定位) 三、定位元素的层级关系(z-i…...

macOS多出来了:Google云端硬盘、YouTube、表格、幻灯片、Gmail、Google文档等应用

文章目录 问题现象问题原因解决办法 问题现象 macOS启动台(Launchpad)多出来了:Google云端硬盘、YouTube、表格、幻灯片、Gmail、Google文档等应用。 问题原因 很明显,都是Google家的办公全家桶。这些应用并不是通过独立安装的…...

【OSG学习笔记】Day 16: 骨骼动画与蒙皮(osgAnimation)

骨骼动画基础 骨骼动画是 3D 计算机图形中常用的技术,它通过以下两个主要组件实现角色动画。 骨骼系统 (Skeleton):由层级结构的骨头组成,类似于人体骨骼蒙皮 (Mesh Skinning):将模型网格顶点绑定到骨骼上,使骨骼移动…...

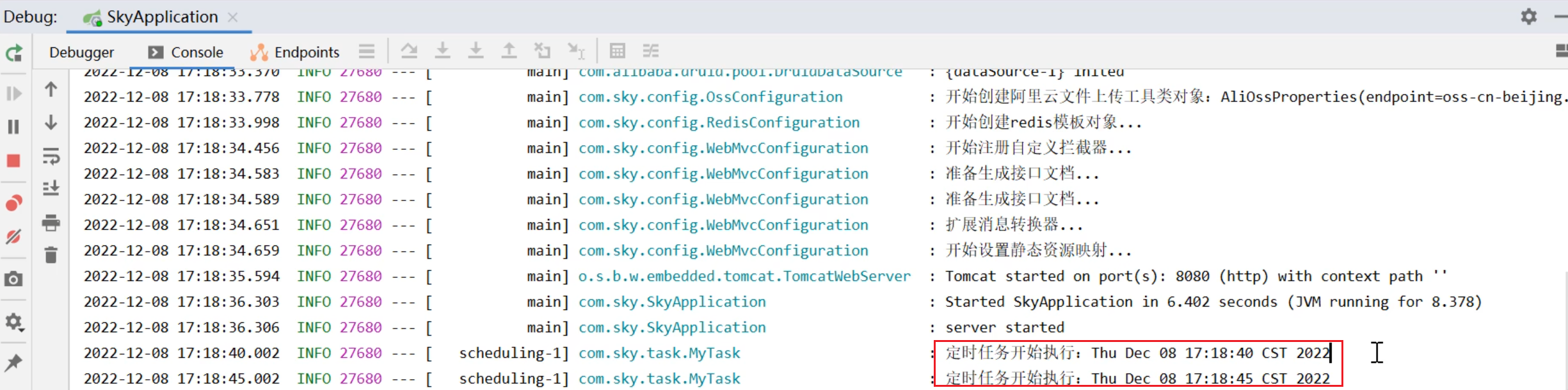

SpringTask-03.入门案例

一.入门案例 启动类: package com.sky;import lombok.extern.slf4j.Slf4j; import org.springframework.boot.SpringApplication; import org.springframework.boot.autoconfigure.SpringBootApplication; import org.springframework.cache.annotation.EnableCach…...

python报错No module named ‘tensorflow.keras‘

是由于不同版本的tensorflow下的keras所在的路径不同,结合所安装的tensorflow的目录结构修改from语句即可。 原语句: from tensorflow.keras.layers import Conv1D, MaxPooling1D, LSTM, Dense 修改后: from tensorflow.python.keras.lay…...

智能AI电话机器人系统的识别能力现状与发展水平

一、引言 随着人工智能技术的飞速发展,AI电话机器人系统已经从简单的自动应答工具演变为具备复杂交互能力的智能助手。这类系统结合了语音识别、自然语言处理、情感计算和机器学习等多项前沿技术,在客户服务、营销推广、信息查询等领域发挥着越来越重要…...