尚硅谷k8s 2

p54-56 k8s核心实战 service服务发现

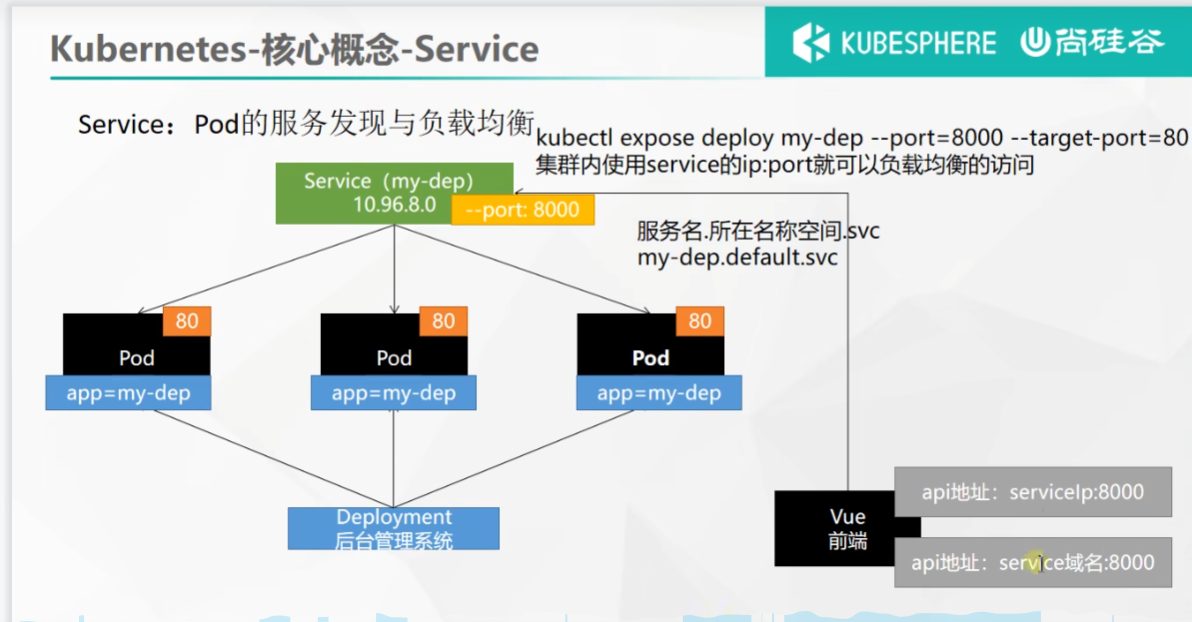

Service:将一组 Pods 公开为网络服务的抽象方法。

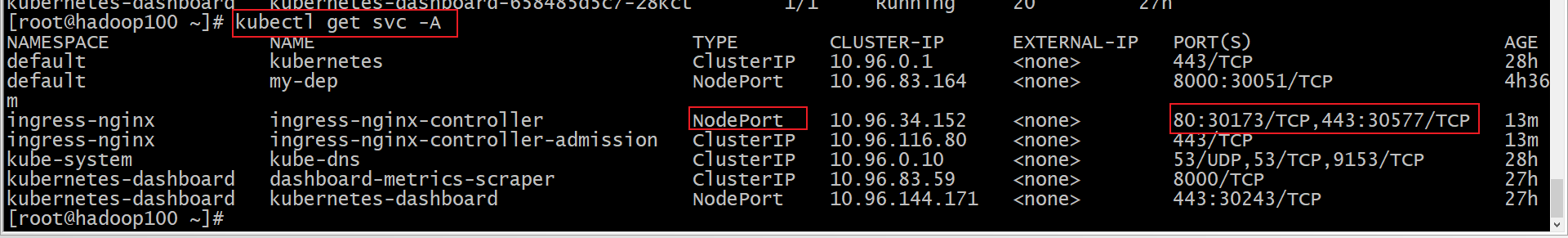

#暴露Deploy,暴露deploy会出现在svc

kubectl expose deployment my-dep --port=8000 --target-port=80#使用标签检索Pod

kubectl get pod -l app=my-dep

apiVersion: v1

kind: Service

metadata:labels:app: my-depname: my-dep

spec:selector:app: my-depports:- port: 8000protocol: TCPtargetPort: 80

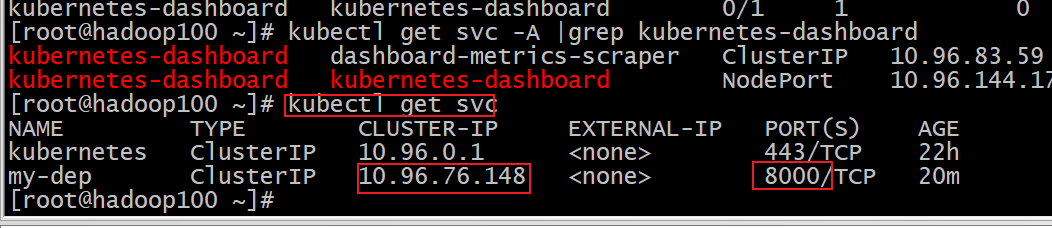

ClusterIP

拿到service的ip和port

# 等同于没有--type的

kubectl expose deployment my-dep --port=8000 --target-port=80 --type=ClusterIP

apiVersion: v1

kind: Service

metadata:labels:app: my-depname: my-dep

spec:ports:- port: 8000protocol: TCPtargetPort: 80selector:app: my-deptype: ClusterIP

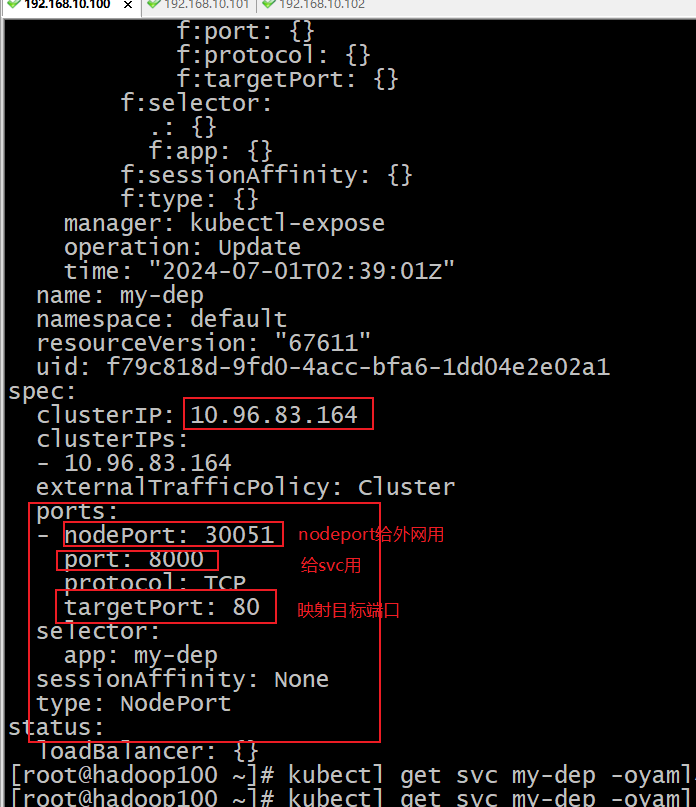

NodePort

kubectl expose deployment my-dep --port=8000 --target-port=80 --type=NodePort

apiVersion: v1

kind: Service

metadata:labels:app: my-depname: my-dep

spec:ports:- port: 8000protocol: TCPtargetPort: 80selector:app: my-deptype: NodePort区别

区别:nodeport模式在可以访问公有网络也可以通过service网络访问 ,cluster只能够通过service网络访问

kubectl get svc my-dep -oyaml

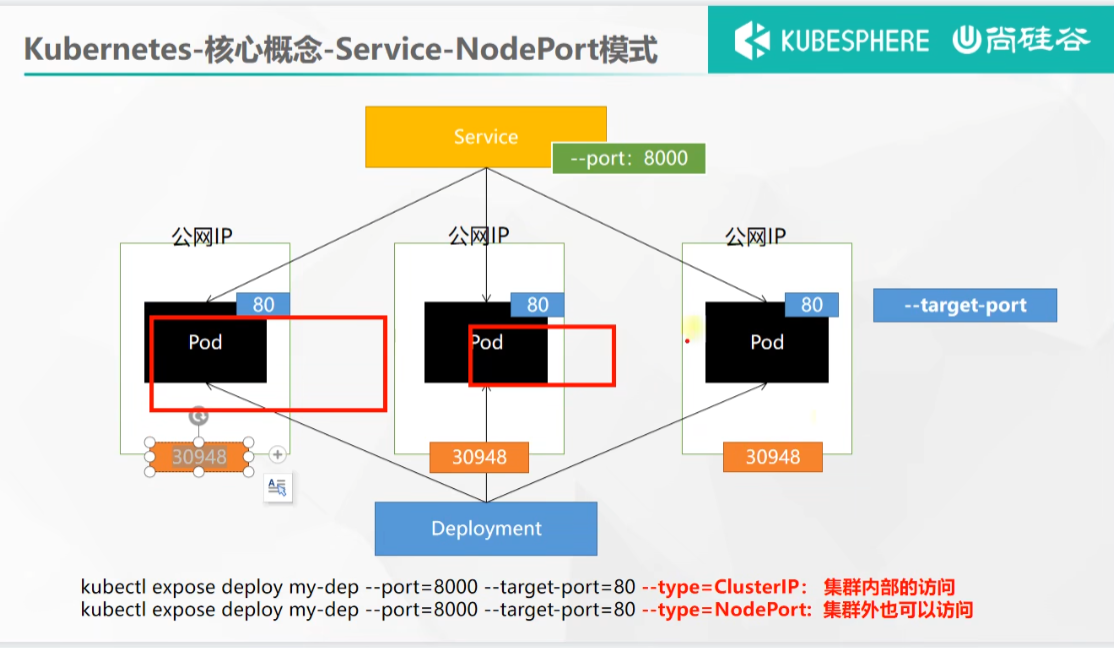

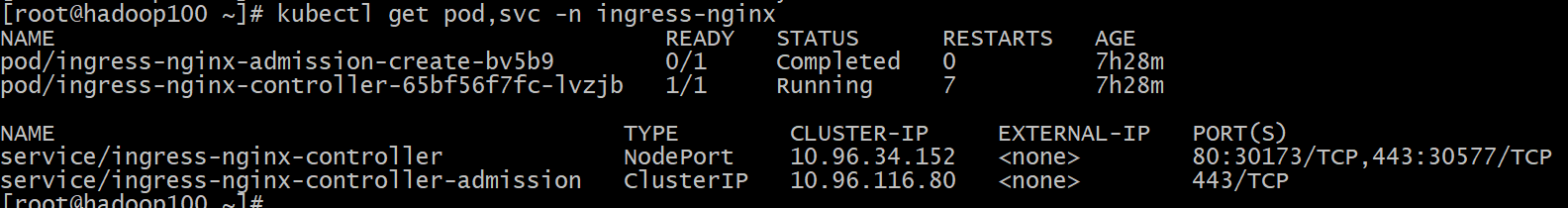

p57-61 ingress网络模型

1、安装

wget https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.47.0/deploy/static/provider/baremetal/deploy.yaml#修改镜像

vi deploy.yaml

#将image的值改为如下值:

registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0# 检查安装的结果

kubectl get pod,svc -n ingress-nginx# 最后别忘记把svc暴露的端口要放行

如果下载不到,用以下文件

apiVersion: v1

kind: Namespace

metadata:name: ingress-nginxlabels:app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginx---

# Source: ingress-nginx/templates/controller-serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: controllername: ingress-nginxnamespace: ingress-nginx

automountServiceAccountToken: true

---

# Source: ingress-nginx/templates/controller-configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: controllername: ingress-nginx-controllernamespace: ingress-nginx

data:

---

# Source: ingress-nginx/templates/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmname: ingress-nginx

rules:- apiGroups:- ''resources:- configmaps- endpoints- nodes- pods- secretsverbs:- list- watch- apiGroups:- ''resources:- nodesverbs:- get- apiGroups:- ''resources:- servicesverbs:- get- list- watch- apiGroups:- extensions- networking.k8s.io # k8s 1.14+resources:- ingressesverbs:- get- list- watch- apiGroups:- ''resources:- eventsverbs:- create- patch- apiGroups:- extensions- networking.k8s.io # k8s 1.14+resources:- ingresses/statusverbs:- update- apiGroups:- networking.k8s.io # k8s 1.14+resources:- ingressclassesverbs:- get- list- watch

---

# Source: ingress-nginx/templates/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmname: ingress-nginx

roleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRolename: ingress-nginx

subjects:- kind: ServiceAccountname: ingress-nginxnamespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: controllername: ingress-nginxnamespace: ingress-nginx

rules:- apiGroups:- ''resources:- namespacesverbs:- get- apiGroups:- ''resources:- configmaps- pods- secrets- endpointsverbs:- get- list- watch- apiGroups:- ''resources:- servicesverbs:- get- list- watch- apiGroups:- extensions- networking.k8s.io # k8s 1.14+resources:- ingressesverbs:- get- list- watch- apiGroups:- extensions- networking.k8s.io # k8s 1.14+resources:- ingresses/statusverbs:- update- apiGroups:- networking.k8s.io # k8s 1.14+resources:- ingressclassesverbs:- get- list- watch- apiGroups:- ''resources:- configmapsresourceNames:- ingress-controller-leader-nginxverbs:- get- update- apiGroups:- ''resources:- configmapsverbs:- create- apiGroups:- ''resources:- eventsverbs:- create- patch

---

# Source: ingress-nginx/templates/controller-rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: controllername: ingress-nginxnamespace: ingress-nginx

roleRef:apiGroup: rbac.authorization.k8s.iokind: Rolename: ingress-nginx

subjects:- kind: ServiceAccountname: ingress-nginxnamespace: ingress-nginx

---

# Source: ingress-nginx/templates/controller-service-webhook.yaml

apiVersion: v1

kind: Service

metadata:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: controllername: ingress-nginx-controller-admissionnamespace: ingress-nginx

spec:type: ClusterIPports:- name: https-webhookport: 443targetPort: webhookselector:app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-service.yaml

apiVersion: v1

kind: Service

metadata:annotations:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: controllername: ingress-nginx-controllernamespace: ingress-nginx

spec:type: NodePortports:- name: httpport: 80protocol: TCPtargetPort: http- name: httpsport: 443protocol: TCPtargetPort: httpsselector:app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/component: controller

---

# Source: ingress-nginx/templates/controller-deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: controllername: ingress-nginx-controllernamespace: ingress-nginx

spec:selector:matchLabels:app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/component: controllerrevisionHistoryLimit: 10minReadySeconds: 0template:metadata:labels:app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/component: controllerspec:dnsPolicy: ClusterFirstcontainers:- name: controllerimage: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/ingress-nginx-controller:v0.46.0imagePullPolicy: IfNotPresentlifecycle:preStop:exec:command:- /wait-shutdownargs:- /nginx-ingress-controller- --election-id=ingress-controller-leader- --ingress-class=nginx- --configmap=$(POD_NAMESPACE)/ingress-nginx-controller- --validating-webhook=:8443- --validating-webhook-certificate=/usr/local/certificates/cert- --validating-webhook-key=/usr/local/certificates/keysecurityContext:capabilities:drop:- ALLadd:- NET_BIND_SERVICErunAsUser: 101allowPrivilegeEscalation: trueenv:- name: POD_NAMEvalueFrom:fieldRef:fieldPath: metadata.name- name: POD_NAMESPACEvalueFrom:fieldRef:fieldPath: metadata.namespace- name: LD_PRELOADvalue: /usr/local/lib/libmimalloc.solivenessProbe:failureThreshold: 5httpGet:path: /healthzport: 10254scheme: HTTPinitialDelaySeconds: 10periodSeconds: 10successThreshold: 1timeoutSeconds: 1readinessProbe:failureThreshold: 3httpGet:path: /healthzport: 10254scheme: HTTPinitialDelaySeconds: 10periodSeconds: 10successThreshold: 1timeoutSeconds: 1ports:- name: httpcontainerPort: 80protocol: TCP- name: httpscontainerPort: 443protocol: TCP- name: webhookcontainerPort: 8443protocol: TCPvolumeMounts:- name: webhook-certmountPath: /usr/local/certificates/readOnly: trueresources:requests:cpu: 100mmemory: 90MinodeSelector:kubernetes.io/os: linuxserviceAccountName: ingress-nginxterminationGracePeriodSeconds: 300volumes:- name: webhook-certsecret:secretName: ingress-nginx-admission

---

# Source: ingress-nginx/templates/admission-webhooks/validating-webhook.yaml

# before changing this value, check the required kubernetes version

# https://kubernetes.io/docs/reference/access-authn-authz/extensible-admission-controllers/#prerequisites

apiVersion: admissionregistration.k8s.io/v1

kind: ValidatingWebhookConfiguration

metadata:labels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhookname: ingress-nginx-admission

webhooks:- name: validate.nginx.ingress.kubernetes.iomatchPolicy: Equivalentrules:- apiGroups:- networking.k8s.ioapiVersions:- v1beta1operations:- CREATE- UPDATEresources:- ingressesfailurePolicy: FailsideEffects: NoneadmissionReviewVersions:- v1- v1beta1clientConfig:service:namespace: ingress-nginxname: ingress-nginx-controller-admissionpath: /networking/v1beta1/ingresses

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: ingress-nginx-admissionannotations:helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgradehelm.sh/hook-delete-policy: before-hook-creation,hook-succeededlabels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhooknamespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:name: ingress-nginx-admissionannotations:helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgradehelm.sh/hook-delete-policy: before-hook-creation,hook-succeededlabels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhook

rules:- apiGroups:- admissionregistration.k8s.ioresources:- validatingwebhookconfigurationsverbs:- get- update

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/clusterrolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:name: ingress-nginx-admissionannotations:helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgradehelm.sh/hook-delete-policy: before-hook-creation,hook-succeededlabels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhook

roleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRolename: ingress-nginx-admission

subjects:- kind: ServiceAccountname: ingress-nginx-admissionnamespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:name: ingress-nginx-admissionannotations:helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgradehelm.sh/hook-delete-policy: before-hook-creation,hook-succeededlabels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhooknamespace: ingress-nginx

rules:- apiGroups:- ''resources:- secretsverbs:- get- create

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:name: ingress-nginx-admissionannotations:helm.sh/hook: pre-install,pre-upgrade,post-install,post-upgradehelm.sh/hook-delete-policy: before-hook-creation,hook-succeededlabels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhooknamespace: ingress-nginx

roleRef:apiGroup: rbac.authorization.k8s.iokind: Rolename: ingress-nginx-admission

subjects:- kind: ServiceAccountname: ingress-nginx-admissionnamespace: ingress-nginx

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-createSecret.yaml

apiVersion: batch/v1

kind: Job

metadata:name: ingress-nginx-admission-createannotations:helm.sh/hook: pre-install,pre-upgradehelm.sh/hook-delete-policy: before-hook-creation,hook-succeededlabels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhooknamespace: ingress-nginx

spec:template:metadata:name: ingress-nginx-admission-createlabels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhookspec:containers:- name: createimage: docker.io/jettech/kube-webhook-certgen:v1.5.1imagePullPolicy: IfNotPresentargs:- create- --host=ingress-nginx-controller-admission,ingress-nginx-controller-admission.$(POD_NAMESPACE).svc- --namespace=$(POD_NAMESPACE)- --secret-name=ingress-nginx-admissionenv:- name: POD_NAMESPACEvalueFrom:fieldRef:fieldPath: metadata.namespacerestartPolicy: OnFailureserviceAccountName: ingress-nginx-admissionsecurityContext:runAsNonRoot: truerunAsUser: 2000

---

# Source: ingress-nginx/templates/admission-webhooks/job-patch/job-patchWebhook.yaml

apiVersion: batch/v1

kind: Job

metadata:name: ingress-nginx-admission-patchannotations:helm.sh/hook: post-install,post-upgradehelm.sh/hook-delete-policy: before-hook-creation,hook-succeededlabels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhooknamespace: ingress-nginx

spec:template:metadata:name: ingress-nginx-admission-patchlabels:helm.sh/chart: ingress-nginx-3.33.0app.kubernetes.io/name: ingress-nginxapp.kubernetes.io/instance: ingress-nginxapp.kubernetes.io/version: 0.47.0app.kubernetes.io/managed-by: Helmapp.kubernetes.io/component: admission-webhookspec:containers:- name: patchimage: docker.io/jettech/kube-webhook-certgen:v1.5.1imagePullPolicy: IfNotPresentargs:- patch- --webhook-name=ingress-nginx-admission- --namespace=$(POD_NAMESPACE)- --patch-mutating=false- --secret-name=ingress-nginx-admission- --patch-failure-policy=Failenv:- name: POD_NAMESPACEvalueFrom:fieldRef:fieldPath: metadata.namespacerestartPolicy: OnFailureserviceAccountName: ingress-nginx-admissionsecurityContext:runAsNonRoot: truerunAsUser: 2000

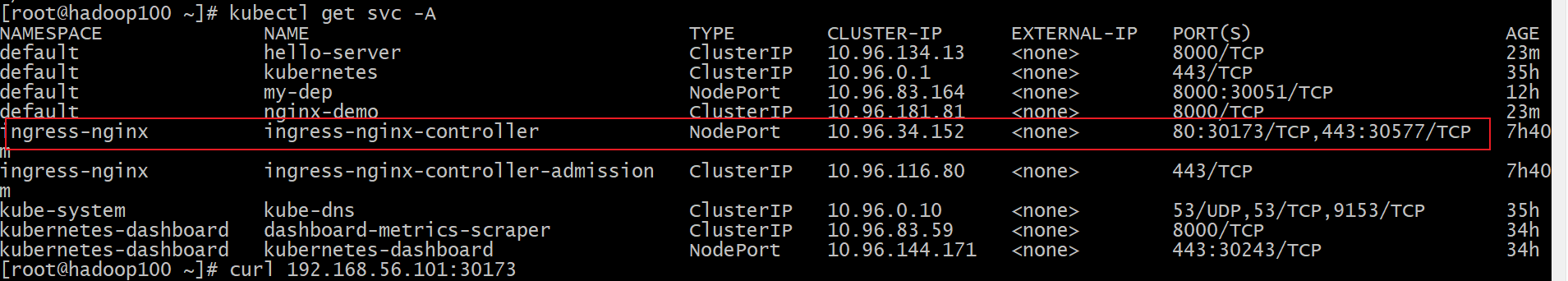

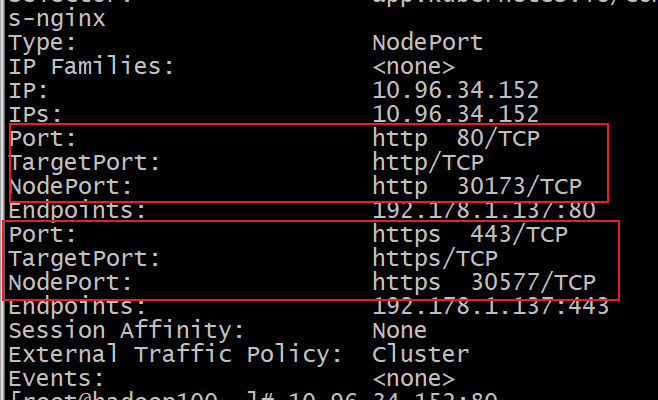

2、使用

官网地址:https://kubernetes.github.io/ingress-nginx/

就是nginx做的

port私网用,nodeport公网用.targetport是目标端口

集群私网访问

http://10.96.34.152:80

https://10.96.34.152:443

NodePort模式下公网访问

http://192.168.10.101:30173/

https://192.168.10.101:30577/

测试环境

应用如下test.yaml,准备好测试环境 kubectl apply -f test.yaml,

部署pod和service

apiVersion: apps/v1

kind: Deployment

metadata:name: hello-server

spec:replicas: 2selector:matchLabels:app: hello-servertemplate:metadata:labels:app: hello-serverspec:containers:- name: hello-serverimage: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/hello-serverports:- containerPort: 9000

---

apiVersion: apps/v1

kind: Deployment

metadata:labels:app: nginx-demoname: nginx-demo

spec:replicas: 2selector:matchLabels:app: nginx-demotemplate:metadata:labels:app: nginx-demospec:containers:- image: nginxname: nginx

---

apiVersion: v1

kind: Service

metadata:labels:app: nginx-demoname: nginx-demo

spec:selector:app: nginx-demoports:- port: 8000protocol: TCPtargetPort: 80

---

apiVersion: v1

kind: Service

metadata:labels:app: hello-servername: hello-server

spec:selector:app: hello-serverports:- port: 8000protocol: TCPtargetPort: 9000

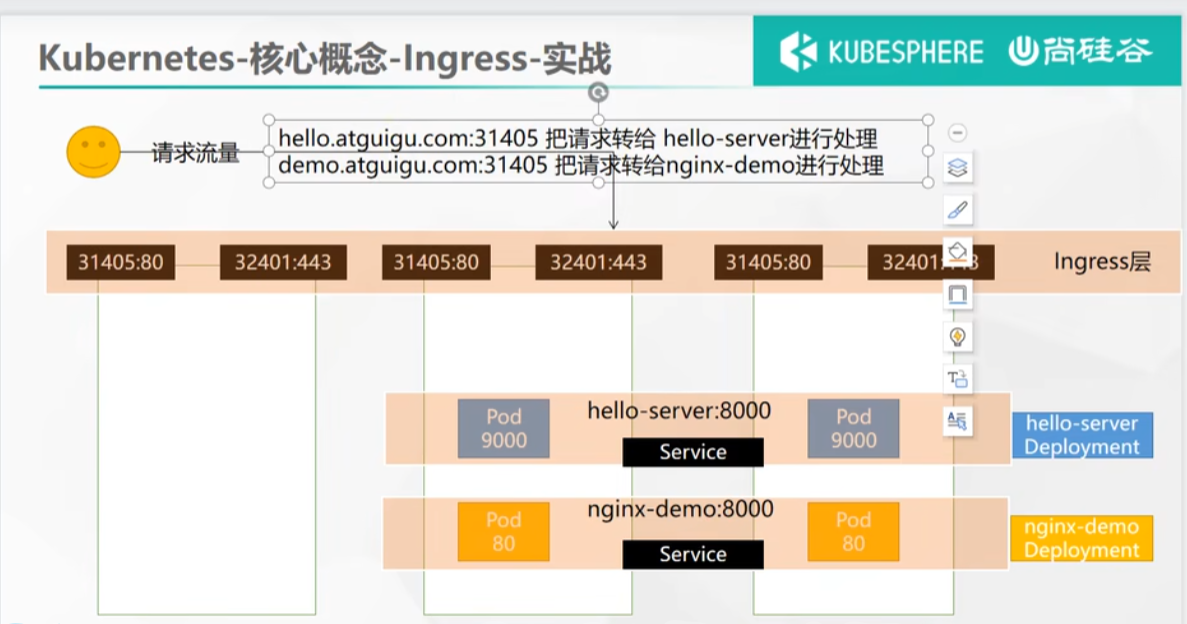

现在想要根据不同的域名访问不同的service

1、域名访问

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:name: ingress-host-bar

spec:ingressClassName: nginxrules:- host: "hello.atguigu.com"http:paths:- pathType: Prefixpath: "/"backend:service:name: hello-serverport:number: 8000- host: "demo.atguigu.com"http:paths:- pathType: Prefixpath: "/nginx" # 把请求会转给下面的服务,下面的服务一定要能处理这个路径,不能处理就是404backend:service:name: nginx-demo ## java,比如使用路径重写,去掉前缀nginxport:number: 8000

2、路径重写

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:annotations:nginx.ingress.kubernetes.io/rewrite-target: /$2name: ingress-host-bar

spec:ingressClassName: nginxrules:- host: "hello.atguigu.com"http:paths:- pathType: Prefixpath: "/"backend:service:name: hello-serverport:number: 8000- host: "demo.atguigu.com"http:paths:- pathType: Prefixpath: "/nginx(/|$)(.*)" # 把请求会转给下面的服务,下面的服务一定要能处理这个路径,不能处理就是404backend:service:name: nginx-demo ## java,比如使用路径重写,去掉前缀nginxport:number: 8000

3、流量限制

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:name: ingress-limit-rateannotations:nginx.ingress.kubernetes.io/limit-rps: "1"

spec:ingressClassName: nginxrules:- host: "haha.atguigu.com"http:paths:- pathType: Exactpath: "/"backend:service:name: nginx-demoport:number: 8000

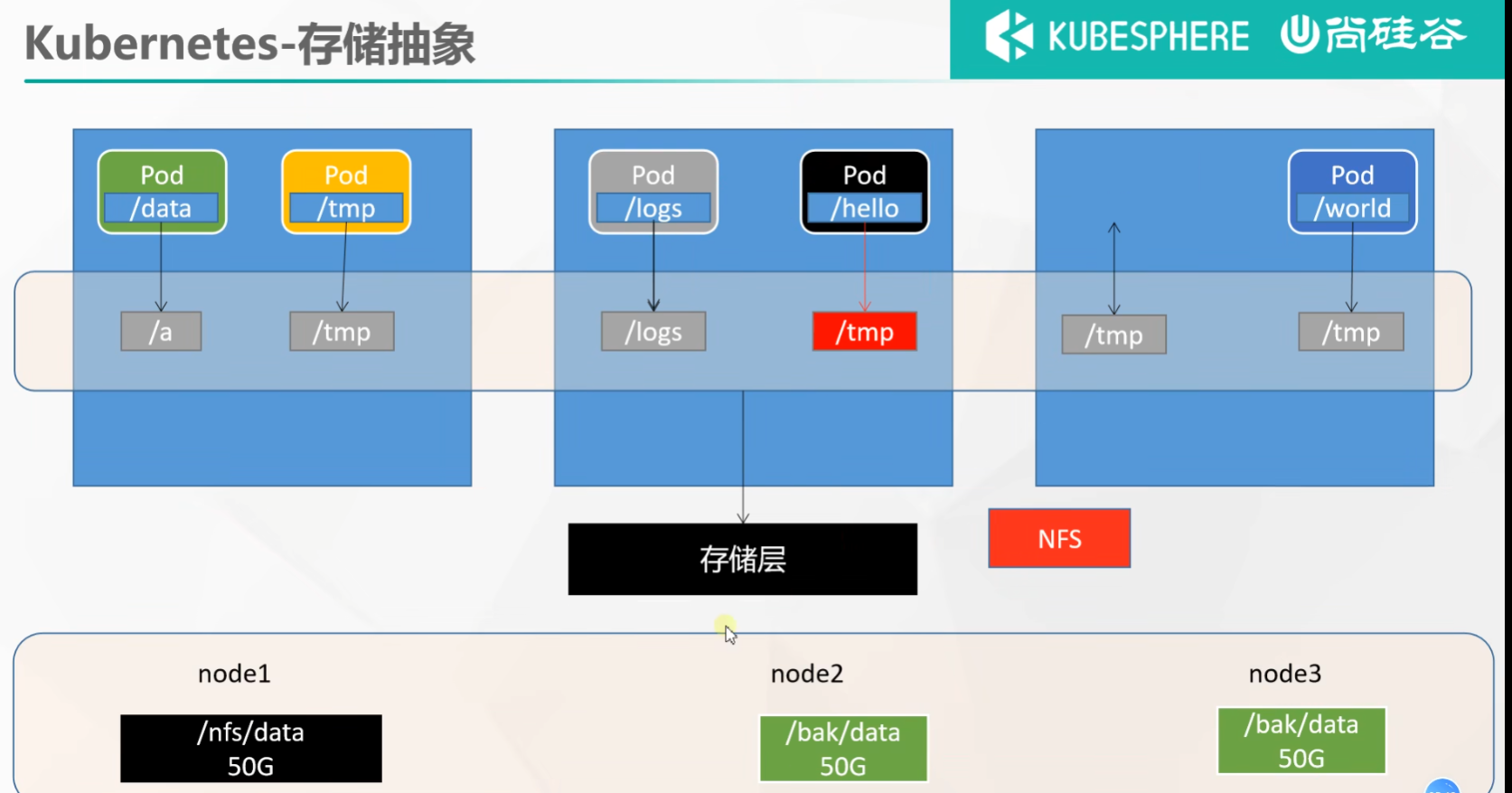

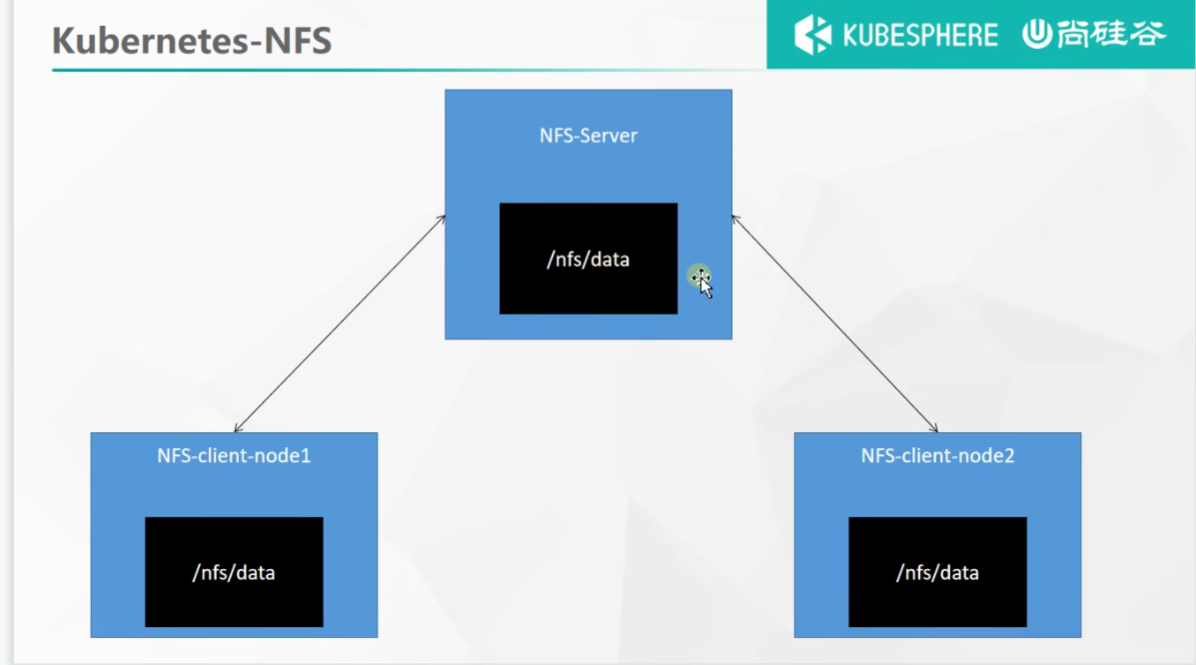

62 存储抽象基本概念 NFS搭建

传统的docker挂载不能解决分布式下问题,使用NFS

环境准备

1、所有节点

#所有机器安装

yum install -y nfs-utils

2、主节点

#nfs主节点

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exportsmkdir -p /nfs/data

systemctl enable rpcbind --now

systemctl enable nfs-server --now

#配置生效

exportfs -r

3、从节点

showmount -e 172.31.0.4#执行以下命令挂载 nfs 服务器上的共享目录到本机路径 /root/nfsmount

mkdir -p /nfs/datamount -t nfs 172.31.0.4:/nfs/data /nfs/data

# 写入一个测试文件

echo "hello nfs server" > /nfs/data/test.txt

4、原生方式数据挂载

apiVersion: apps/v1

kind: Deployment

metadata:labels:app: nginx-pv-demoname: nginx-pv-demo

spec:replicas: 2selector:matchLabels:app: nginx-pv-demotemplate:metadata:labels:app: nginx-pv-demospec:containers:- image: nginxname: nginxvolumeMounts:- name: htmlmountPath: /usr/share/nginx/htmlvolumes:- name: htmlnfs:server: 192.168.10.100path: /nfs/data/nginx-pv

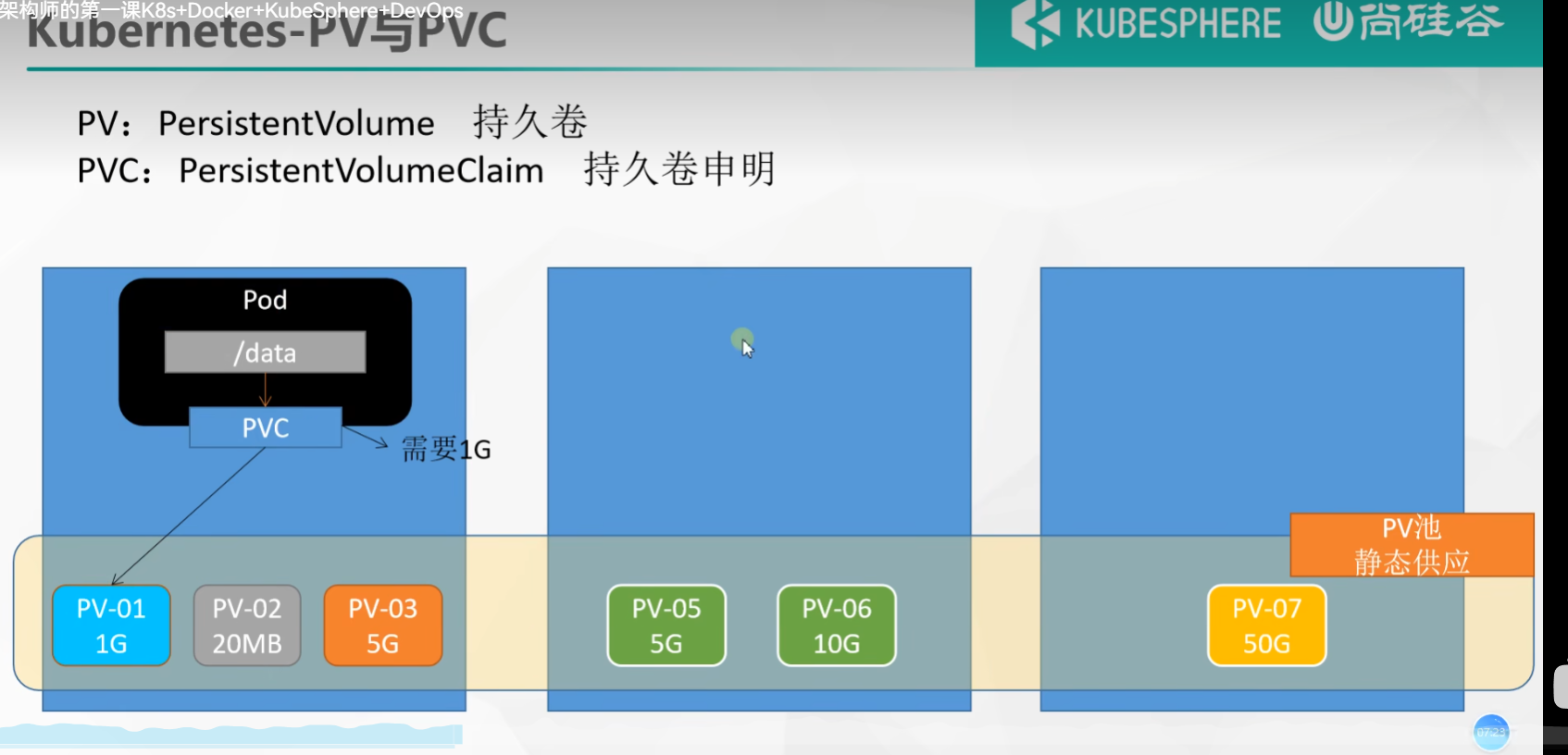

p64 pv 与pvc

1、PV&PVC

PV:持久卷(Persistent Volume),将应用需要持久化的数据保存到指定位置

PVC:持久卷申明(Persistent Volume Claim),申明需要使用的持久卷规格

1、创建pv池

静态供应

#nfs主节点

mkdir -p /nfs/data/01

mkdir -p /nfs/data/02

mkdir -p /nfs/data/03

创建PV

apiVersion: v1

kind: PersistentVolume

metadata:name: pv01-10m

spec:capacity:storage: 10MaccessModes:- ReadWriteManystorageClassName: nfsnfs:path: /nfs/data/01server: 192.168.10.100

---

apiVersion: v1

kind: PersistentVolume

metadata:name: pv02-1gi

spec:capacity:storage: 1GiaccessModes:- ReadWriteManystorageClassName: nfsnfs:path: /nfs/data/02server: 192.168.10.100

---

apiVersion: v1

kind: PersistentVolume

metadata:name: pv03-3gi

spec:capacity:storage: 3GiaccessModes:- ReadWriteManystorageClassName: nfsnfs:path: /nfs/data/03server: 192.168.10.100

2、PVC创建与绑定

创建PVC

kind: PersistentVolumeClaim

apiVersion: v1

metadata:name: nginx-pvc

spec:accessModes:- ReadWriteManyresources:requests:storage: 200MistorageClassName: nfs

创建Pod绑定PVC

apiVersion: apps/v1

kind: Deployment

metadata:labels:app: nginx-deploy-pvcname: nginx-deploy-pvc

spec:replicas: 2selector:matchLabels:app: nginx-deploy-pvctemplate:metadata:labels:app: nginx-deploy-pvcspec:containers:- image: nginxname: nginxvolumeMounts:- name: htmlmountPath: /usr/share/nginx/htmlvolumes:- name: htmlpersistentVolumeClaim:claimName: nginx-pvc

p65 66 ConfigMap重抽取配置

之前使用docker 挂载配置

现在使用pod挂载configmap配置

1、redis示例

1、把之前的配置文件创建为配置集

#创建配置,redis保存到k8s的etcd;

kubectl create cm redis-conf --from-file=redis.conf

ConfigMap详情

apiVersion: v1

data: #data是所有真正的数据,key:默认是文件名 value:配置文件的内容redis.conf: |appendonly yes

kind: ConfigMap

metadata:name: redis-confnamespace: default

2、创建Pod

apiVersion: v1

kind: Pod

metadata:name: redis

spec:containers:- name: redisimage: rediscommand:- redis-server- "/redis-master/redis.conf" #指的是redis容器内部的位置ports:- containerPort: 6379volumeMounts:- mountPath: /dataname: data- mountPath: /redis-mastername: configvolumes:- name: dataemptyDir: {}- name: configconfigMap:name: redis-confitems:- key: redis.confpath: redis.conf

3、Secret

Secret 对象类型用来保存敏感信息,例如密码、OAuth 令牌和 SSH 密钥。 将这些信息放在 secret 中比放在 Pod 的定义或者 容器镜像 中来说更加安全和灵活。

kubectl create secret docker-registry leifengyang-docker \

--docker-username=leifengyang \

--docker-password=Lfy123456 \

--docker-email=534096094@qq.com##命令格式

kubectl create secret docker-registry regcred \--docker-server=<你的镜像仓库服务器> \--docker-username=<你的用户名> \--docker-password=<你的密码> \--docker-email=<你的邮箱地址>

apiVersion: v1

kind: Pod

metadata:name: private-nginx

spec:containers:- name: private-nginximage: leifengyang/guignginx:v1.0imagePullSecrets:- name: leifengyang-docker

p 71 kubesphere 平台安装 安装前置环境 配置默认存储

前面已经安装的就不安装了,只安装要加的

1、nfs文件系统

配置默认存储

## 创建了一个存储类

apiVersion: storage.k8s.io/v1

kind: StorageClass

metadata:name: nfs-storageannotations:storageclass.kubernetes.io/is-default-class: "true"

provisioner: k8s-sigs.io/nfs-subdir-external-provisioner

parameters:archiveOnDelete: "true" ## 删除pv的时候,pv的内容是否要备份---

apiVersion: apps/v1

kind: Deployment

metadata:name: nfs-client-provisionerlabels:app: nfs-client-provisioner# replace with namespace where provisioner is deployednamespace: default

spec:replicas: 1strategy:type: Recreateselector:matchLabels:app: nfs-client-provisionertemplate:metadata:labels:app: nfs-client-provisionerspec:serviceAccountName: nfs-client-provisionercontainers:- name: nfs-client-provisionerimage: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/nfs-subdir-external-provisioner:v4.0.2# resources:# limits:# cpu: 10m# requests:# cpu: 10mvolumeMounts:- name: nfs-client-rootmountPath: /persistentvolumesenv:- name: PROVISIONER_NAMEvalue: k8s-sigs.io/nfs-subdir-external-provisioner- name: NFS_SERVERvalue: 192.168.10.100 ## 指定自己nfs服务器地址- name: NFS_PATH value: /nfs/data ## nfs服务器共享的目录volumes:- name: nfs-client-rootnfs:server: 192.168.10.100path: /nfs/data

---

apiVersion: v1

kind: ServiceAccount

metadata:name: nfs-client-provisioner# replace with namespace where provisioner is deployednamespace: default

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:name: nfs-client-provisioner-runner

rules:- apiGroups: [""]resources: ["nodes"]verbs: ["get", "list", "watch"]- apiGroups: [""]resources: ["persistentvolumes"]verbs: ["get", "list", "watch", "create", "delete"]- apiGroups: [""]resources: ["persistentvolumeclaims"]verbs: ["get", "list", "watch", "update"]- apiGroups: ["storage.k8s.io"]resources: ["storageclasses"]verbs: ["get", "list", "watch"]- apiGroups: [""]resources: ["events"]verbs: ["create", "update", "patch"]

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:name: run-nfs-client-provisioner

subjects:- kind: ServiceAccountname: nfs-client-provisioner# replace with namespace where provisioner is deployednamespace: default

roleRef:kind: ClusterRolename: nfs-client-provisioner-runnerapiGroup: rbac.authorization.k8s.io

---

kind: Role

apiVersion: rbac.authorization.k8s.io/v1

metadata:name: leader-locking-nfs-client-provisioner# replace with namespace where provisioner is deployednamespace: default

rules:- apiGroups: [""]resources: ["endpoints"]verbs: ["get", "list", "watch", "create", "update", "patch"]

---

kind: RoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:name: leader-locking-nfs-client-provisioner# replace with namespace where provisioner is deployednamespace: default

subjects:- kind: ServiceAccountname: nfs-client-provisioner# replace with namespace where provisioner is deployednamespace: default

roleRef:kind: Rolename: leader-locking-nfs-client-provisionerapiGroup: rbac.authorization.k8s.io

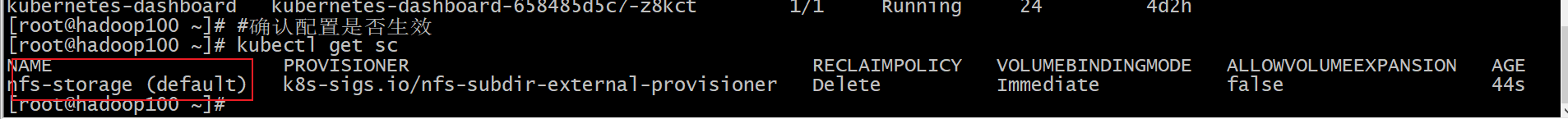

#确认配置是否生效

kubectl get sc

2、metrics-server

集群指标监控组件

apiVersion: v1

kind: ServiceAccount

metadata:labels:k8s-app: metrics-servername: metrics-servernamespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:labels:k8s-app: metrics-serverrbac.authorization.k8s.io/aggregate-to-admin: "true"rbac.authorization.k8s.io/aggregate-to-edit: "true"rbac.authorization.k8s.io/aggregate-to-view: "true"name: system:aggregated-metrics-reader

rules:

- apiGroups:- metrics.k8s.ioresources:- pods- nodesverbs:- get- list- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:labels:k8s-app: metrics-servername: system:metrics-server

rules:

- apiGroups:- ""resources:- pods- nodes- nodes/stats- namespaces- configmapsverbs:- get- list- watch

---

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:labels:k8s-app: metrics-servername: metrics-server-auth-readernamespace: kube-system

roleRef:apiGroup: rbac.authorization.k8s.iokind: Rolename: extension-apiserver-authentication-reader

subjects:

- kind: ServiceAccountname: metrics-servernamespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:labels:k8s-app: metrics-servername: metrics-server:system:auth-delegator

roleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRolename: system:auth-delegator

subjects:

- kind: ServiceAccountname: metrics-servernamespace: kube-system

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:labels:k8s-app: metrics-servername: system:metrics-server

roleRef:apiGroup: rbac.authorization.k8s.iokind: ClusterRolename: system:metrics-server

subjects:

- kind: ServiceAccountname: metrics-servernamespace: kube-system

---

apiVersion: v1

kind: Service

metadata:labels:k8s-app: metrics-servername: metrics-servernamespace: kube-system

spec:ports:- name: httpsport: 443protocol: TCPtargetPort: httpsselector:k8s-app: metrics-server

---

apiVersion: apps/v1

kind: Deployment

metadata:labels:k8s-app: metrics-servername: metrics-servernamespace: kube-system

spec:selector:matchLabels:k8s-app: metrics-serverstrategy:rollingUpdate:maxUnavailable: 0template:metadata:labels:k8s-app: metrics-serverspec:containers:- args:- --cert-dir=/tmp- --kubelet-insecure-tls- --secure-port=4443- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname- --kubelet-use-node-status-portimage: registry.cn-hangzhou.aliyuncs.com/lfy_k8s_images/metrics-server:v0.4.3imagePullPolicy: IfNotPresentlivenessProbe:failureThreshold: 3httpGet:path: /livezport: httpsscheme: HTTPSperiodSeconds: 10name: metrics-serverports:- containerPort: 4443name: httpsprotocol: TCPreadinessProbe:failureThreshold: 3httpGet:path: /readyzport: httpsscheme: HTTPSperiodSeconds: 10securityContext:readOnlyRootFilesystem: truerunAsNonRoot: truerunAsUser: 1000volumeMounts:- mountPath: /tmpname: tmp-dirnodeSelector:kubernetes.io/os: linuxpriorityClassName: system-cluster-criticalserviceAccountName: metrics-servervolumes:- emptyDir: {}name: tmp-dir

---

apiVersion: apiregistration.k8s.io/v1

kind: APIService

metadata:labels:k8s-app: metrics-servername: v1beta1.metrics.k8s.io

spec:group: metrics.k8s.iogroupPriorityMinimum: 100insecureSkipTLSVerify: trueservice:name: metrics-servernamespace: kube-systemversion: v1beta1versionPriority: 100

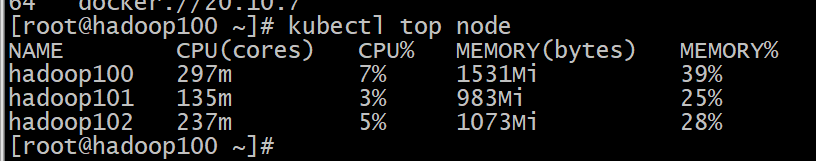

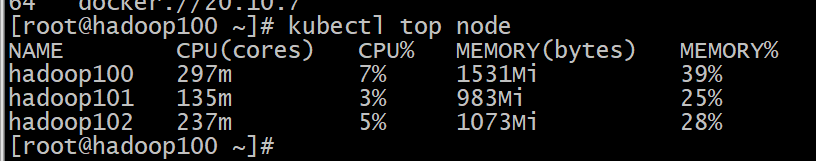

# 查看资源使用情况kubectl top nodekubectl top pod -A

p73全功能安装完成

安装KubeSphere

1、下载核心文件

wget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/kubesphere-installer.yamlwget https://github.com/kubesphere/ks-installer/releases/download/v3.1.1/cluster-configuration.yaml

2、修改cluster-configuration

在 cluster-configuration.yaml中指定我们需要开启的功能

参照官网“启用可插拔组件”

https://kubesphere.com.cn/docs/pluggable-components/overview/

3、执行安装

kubectl apply -f kubesphere-installer.yamlkubectl apply -f cluster-configuration.yaml4、查看安装进度

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f访问任意机器的 30880端口

账号 : admin

密码 : P@88w0rd

解决etcd监控证书找不到问题

kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key

附录 略

相关文章:

尚硅谷k8s 2

p54-56 k8s核心实战 service服务发现 Service:将一组 Pods 公开为网络服务的抽象方法。 #暴露Deploy,暴露deploy会出现在svc kubectl expose deployment my-dep --port8000 --target-port80#使用标签检索Pod kubectl get pod -l appmy-depapiVersion: v1 kind: Service metad…...

机器学习---线性回归

1、线性回归 例如:对于一个房子的价格,其影响因素有很多,例如房子的面积、房子的卧室数量、房子的卫生间数量等等都会影响房子的价格。这些影响因子不妨用 x i x_{i} xi表示,那么房价 y y y可以用如下公式表示: y …...

字符串去重、集合遍历 题目

题目 JAVA38 字符串去重描述输入描述:输出描述: 示例:分析:代码:大佬代码: JAVA39 集合遍历描述输入描述:输出描述: 示例:分析:代码: JAVA38 字符串去重 描述 从键盘获取…...

SQL窗口函数详解

详细说明在sql中窗口函数是什么,为什么需要窗口函数,有普通的聚合函数了那窗口函数的意义在哪,窗口函数的执行逻辑是什么,over中的字句是如何使用和理解的(是不是句句戳到你的痛点,哼哼~&#x…...

如何用Java写一个整理Java方法调用关系网络的程序

大家好,我是猿码叔叔,一位 Java 语言工作者,也是一位算法学习刚入门的小学生。很久没有为大家带来干货了。 最近遇到了一个问题,大致是这样的:如果给你一个 java 方法,如何找到有哪些菜单在使用。我的第一想…...

176)

基于STM32设计的管道有害气体检测装置(ESP8266局域网)176

基于STM32设计的管道有害气体检测装置(176) 文章目录 一、前言1.1 项目介绍【1】项目功能介绍【2】项目硬件模块组成【3】ESP8266模块配置【4】上位机开发思路【5】项目模块划分【6】LCD显示屏界面布局【7】上位机界面布局1.2 项目功能需求1.3 项目开发背景1.4 开发工具的选择1…...

iCloud照片库全指南:云端存储与智能管理

iCloud照片库全指南:云端存储与智能管理 在数字化时代,照片和视频成为了我们生活中不可或缺的一部分。随着手机摄像头质量的提升,我们记录生活点滴的方式也越来越丰富。然而,这也带来了一个问题:如何有效管理和存储日…...

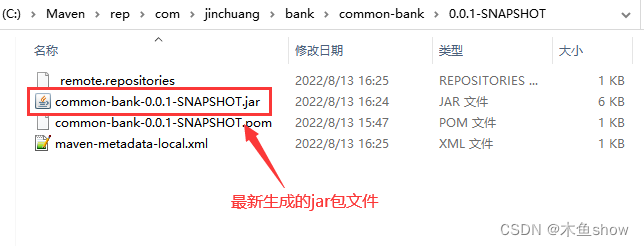

IDEA中使用Maven打包及碰到的问题

1. 项目打包 IDEA中,maven打包的方式有两种,分别是 install 和 package ,他们的区别如下: install 方式 install 打包时做了两件事,① 将项目打包成 jar 或者 war,打包结果存放在项目的 target 目录下。…...

TreeMap、HashMap 和 LinkedHashMap 的区别

TreeMap、HashMap 和 LinkedHashMap 的区别 1、HashMap2、LinkedHashMap3、TreeMap4、总结 💖The Begin💖点点关注,收藏不迷路💖 在 Java 中,TreeMap、HashMap 和 LinkedHashMap 是三种常用的集合类,它们在…...

【跟我学K8S】45天入门到熟练详细学习计划

目录 一、什么是K8S 核心功能 架构组件 使用场景 二、入门到熟练的学习计划 第一周:K8s基础和概念 第二周:核心对象和网络 第三周:进阶使用和管理 第四周:CI/CD集成和监控 第五周:实战模拟和案例分析 第六周…...

ubuntu下载Nginx

一、Nginx下载安装(Ubuntu系统) 1.nginx下载 sudo apt-get install nginx2.nginx启动 启动命令 sudo nginx重新编译(每次更改完nginx配置文件后运行): sudo nginx -s reload3.测试nginx是否启动成功 打开浏览器访问本机80端口…...

【区分vue2和vue3下的element UI Dialog 对话框组件,分别详细介绍属性,事件,方法如何使用,并举例】

在 Vue 2 和 Vue 3 中,Element UI(针对 Vue 2)和 Element Plus(针对 Vue 3)提供了 Dialog 对话框组件,用于在页面中显示模态对话框。这两个库中的 Dialog 组件在属性、事件和方法的使用上有所相似ÿ…...

docker push 推送镜像到阿里云仓库

1.登陆阿里云 镜像服务,跟着指引操作就行 创建个人实例,创建命名空间、镜像仓库,绑定代码源头 2.将镜像推送到Registry $ docker login --username*** registry.cn-beijing.aliyuncs.com $ docker tag [ImageId] registry.cn-beijing.aliy…...

伯克利、斯坦福和CMU面向具身智能端到端操作联合发布开源通用机器人Policy,可支持多种机器人执行多种任务

不同于LLM或者MLLM那样用于上百亿甚至上千亿参数量的大模型,具身智能端到端大模型并不追求参数规模上的大,而是指其能吸收大量的数据,执行多种任务,并能具备一定的泛化能力,如笔者前博客里的RT1。目前该领域一个前沿工…...

昇思25天学习打卡营第17天(+1)|Diffusion扩散模型

1. 学习内容复盘 本文基于Hugging Face:The Annotated Diffusion Model一文翻译迁移而来,同时参考了由浅入深了解Diffusion Model一文。 本教程在Jupyter Notebook上成功运行。如您下载本文档为Python文件,执行Python文件时,请确…...

【Leetcode笔记】406.根据身高重建队列

文章目录 1. 题目要求2.解题思路 注意3.ACM模式代码 1. 题目要求 2.解题思路 首先,按照每个人的身高属性(即people[i][0])来排队,顺序是从大到小降序排列,如果遇到同身高的,按照另一个属性(即p…...

)

Linux 安装pdfjam (PDF文件尺寸调整)

跟Ghostscript搭配使用,这样就可以将不同尺寸的PDF调整到相同尺寸合并了。 在 CentOS 上安装 pdfjam 需要安装 TeX Live,因为 pdfjam 是基于 TeX Live 的。以下是详细的步骤来安装 pdfjam: ### 步骤 1: 安装 EPEL 仓库 首先,安…...

python+playwright 学习-90 and_ 和 or_ 定位

前言 playwright 从v1.34 版本以后支持and_ 和 or_ 定位 XPath 中的and和or xpath 语法中我们常用的有text()、contains() 、ends_with()、starts_with() //*[text()="文本"] //*[contains(@id, "xx")] //...

亲子时光里的打脸高手,贾乃亮与甜馨的父爱如山

贾乃亮这波操作,简直是“实力打脸”界的MVP啊! 7月5号,他一甩手,甩出张合照, 瞬间让多少猜测纷飞的小伙伴直呼:“脸疼不?”带着咱家小甜心甜馨, 回了哈尔滨老家,这趟亲…...

MySQL篇-SQL优化实战

SQL优化措施 通过我们日常开发的经验可以整理出以下高效SQL的守则 表主键使用自增长bigint加适当的表索引,需要强关联字段建表时就加好索引,常见的有更新时间,单号等字段减少子查询,能用表关联的方式就不用子查询,可…...

Leetcode 3576. Transform Array to All Equal Elements

Leetcode 3576. Transform Array to All Equal Elements 1. 解题思路2. 代码实现 题目链接:3576. Transform Array to All Equal Elements 1. 解题思路 这一题思路上就是分别考察一下是否能将其转化为全1或者全-1数组即可。 至于每一种情况是否可以达到…...

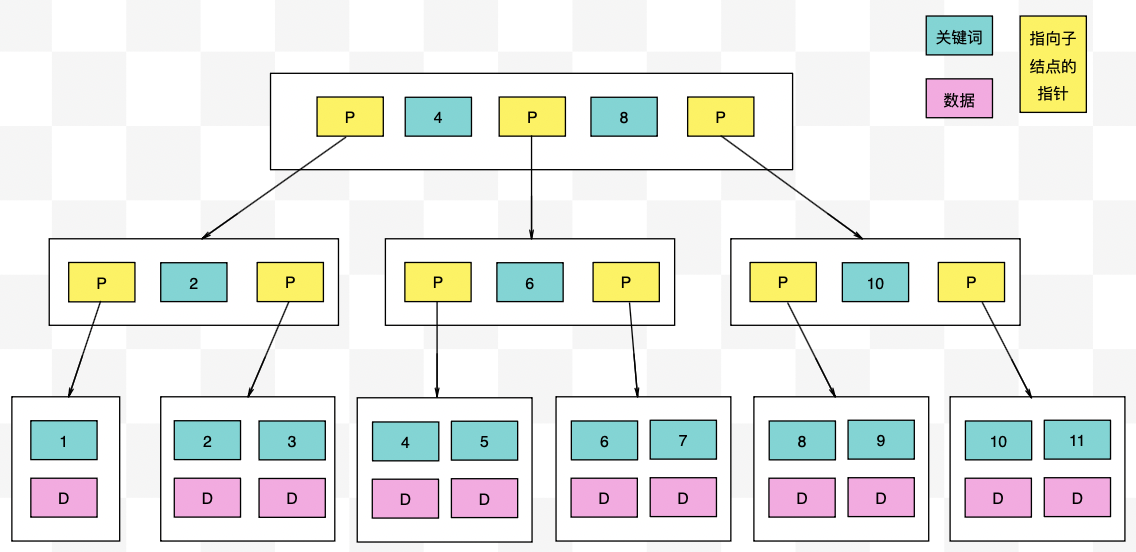

【力扣数据库知识手册笔记】索引

索引 索引的优缺点 优点1. 通过创建唯一性索引,可以保证数据库表中每一行数据的唯一性。2. 可以加快数据的检索速度(创建索引的主要原因)。3. 可以加速表和表之间的连接,实现数据的参考完整性。4. 可以在查询过程中,…...

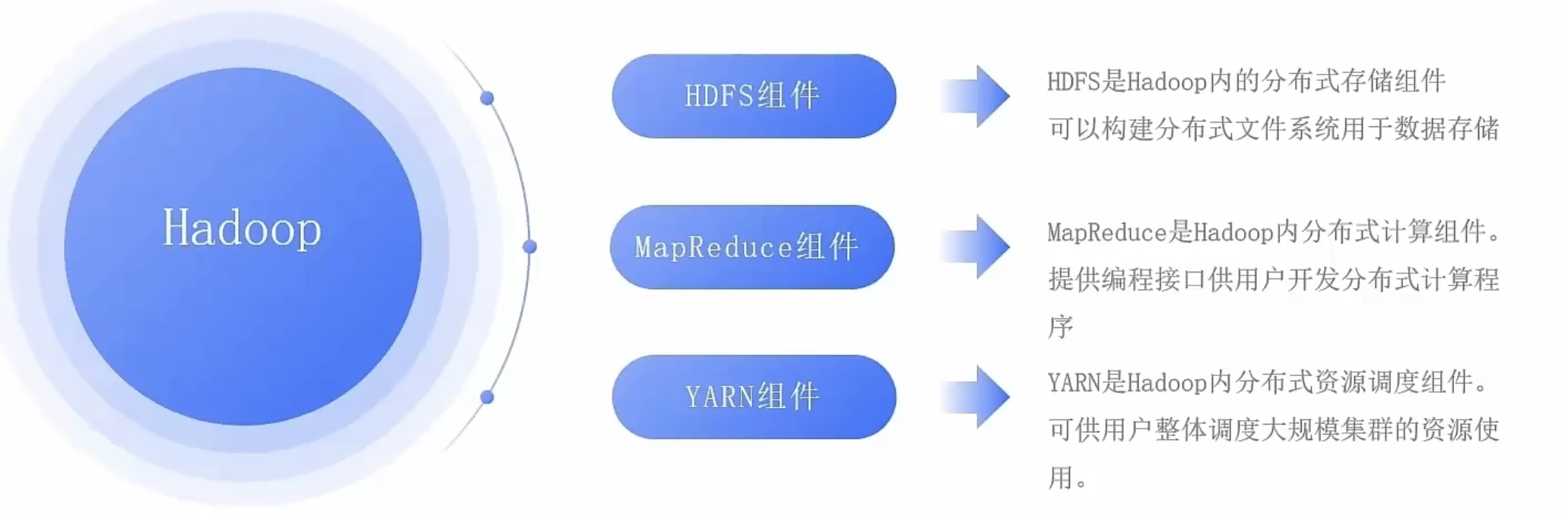

大数据零基础学习day1之环境准备和大数据初步理解

学习大数据会使用到多台Linux服务器。 一、环境准备 1、VMware 基于VMware构建Linux虚拟机 是大数据从业者或者IT从业者的必备技能之一也是成本低廉的方案 所以VMware虚拟机方案是必须要学习的。 (1)设置网关 打开VMware虚拟机,点击编辑…...

质量体系的重要

质量体系是为确保产品、服务或过程质量满足规定要求,由相互关联的要素构成的有机整体。其核心内容可归纳为以下五个方面: 🏛️ 一、组织架构与职责 质量体系明确组织内各部门、岗位的职责与权限,形成层级清晰的管理网络…...

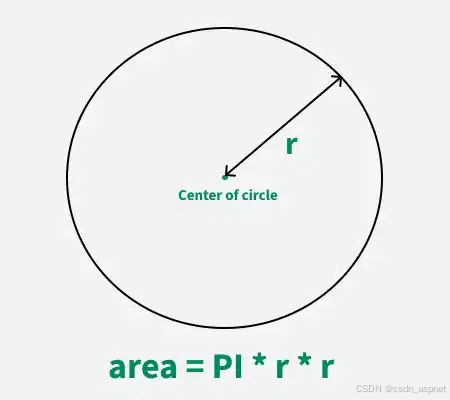

C++ 求圆面积的程序(Program to find area of a circle)

给定半径r,求圆的面积。圆的面积应精确到小数点后5位。 例子: 输入:r 5 输出:78.53982 解释:由于面积 PI * r * r 3.14159265358979323846 * 5 * 5 78.53982,因为我们只保留小数点后 5 位数字。 输…...

鸿蒙DevEco Studio HarmonyOS 5跑酷小游戏实现指南

1. 项目概述 本跑酷小游戏基于鸿蒙HarmonyOS 5开发,使用DevEco Studio作为开发工具,采用Java语言实现,包含角色控制、障碍物生成和分数计算系统。 2. 项目结构 /src/main/java/com/example/runner/├── MainAbilitySlice.java // 主界…...

LeetCode - 199. 二叉树的右视图

题目 199. 二叉树的右视图 - 力扣(LeetCode) 思路 右视图是指从树的右侧看,对于每一层,只能看到该层最右边的节点。实现思路是: 使用深度优先搜索(DFS)按照"根-右-左"的顺序遍历树记录每个节点的深度对于…...

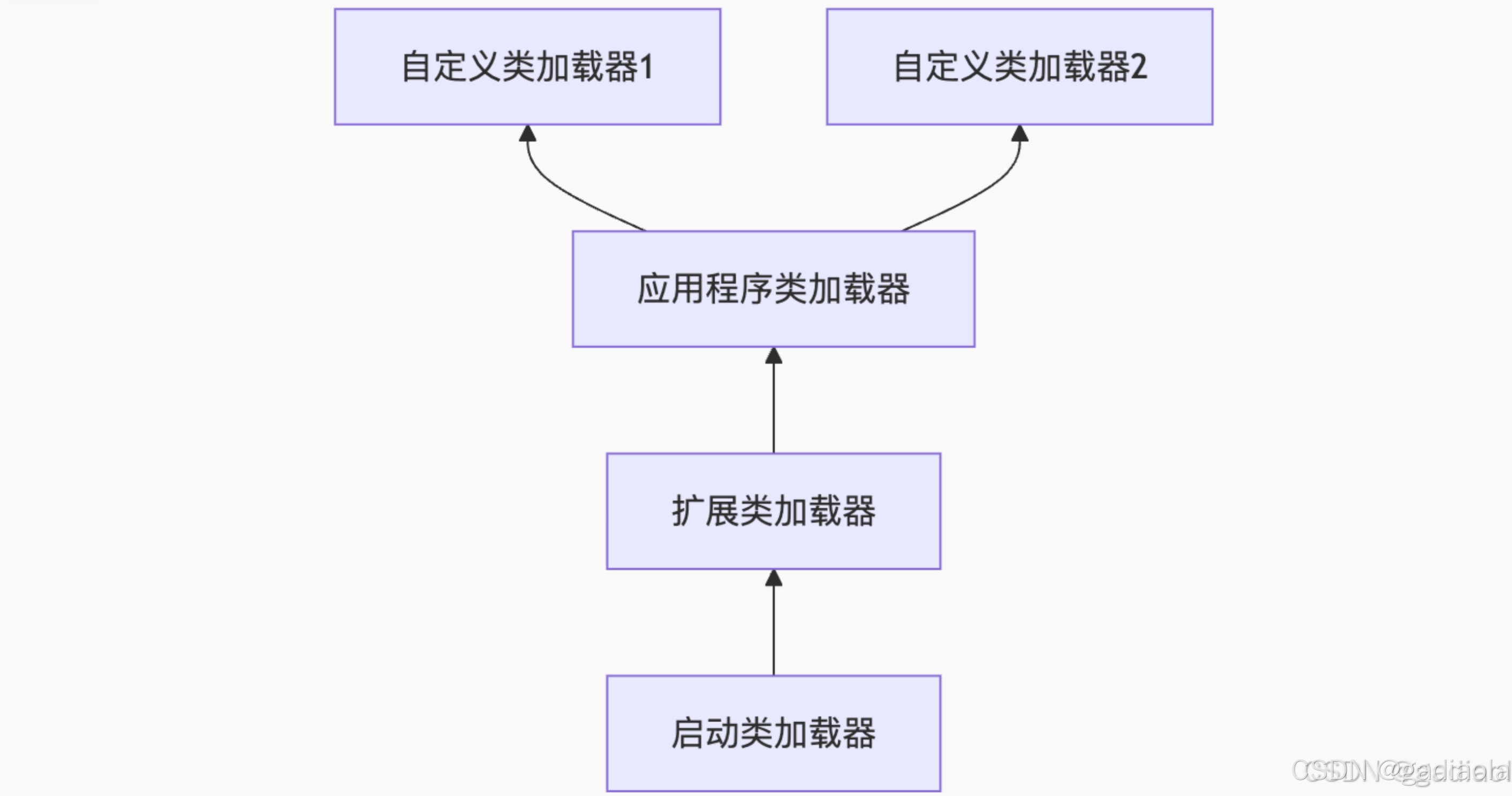

【JVM面试篇】高频八股汇总——类加载和类加载器

目录 1. 讲一下类加载过程? 2. Java创建对象的过程? 3. 对象的生命周期? 4. 类加载器有哪些? 5. 双亲委派模型的作用(好处)? 6. 讲一下类的加载和双亲委派原则? 7. 双亲委派模…...

莫兰迪高级灰总结计划简约商务通用PPT模版

莫兰迪高级灰总结计划简约商务通用PPT模版,莫兰迪调色板清新简约工作汇报PPT模版,莫兰迪时尚风极简设计PPT模版,大学生毕业论文答辩PPT模版,莫兰迪配色总结计划简约商务通用PPT模版,莫兰迪商务汇报PPT模版,…...

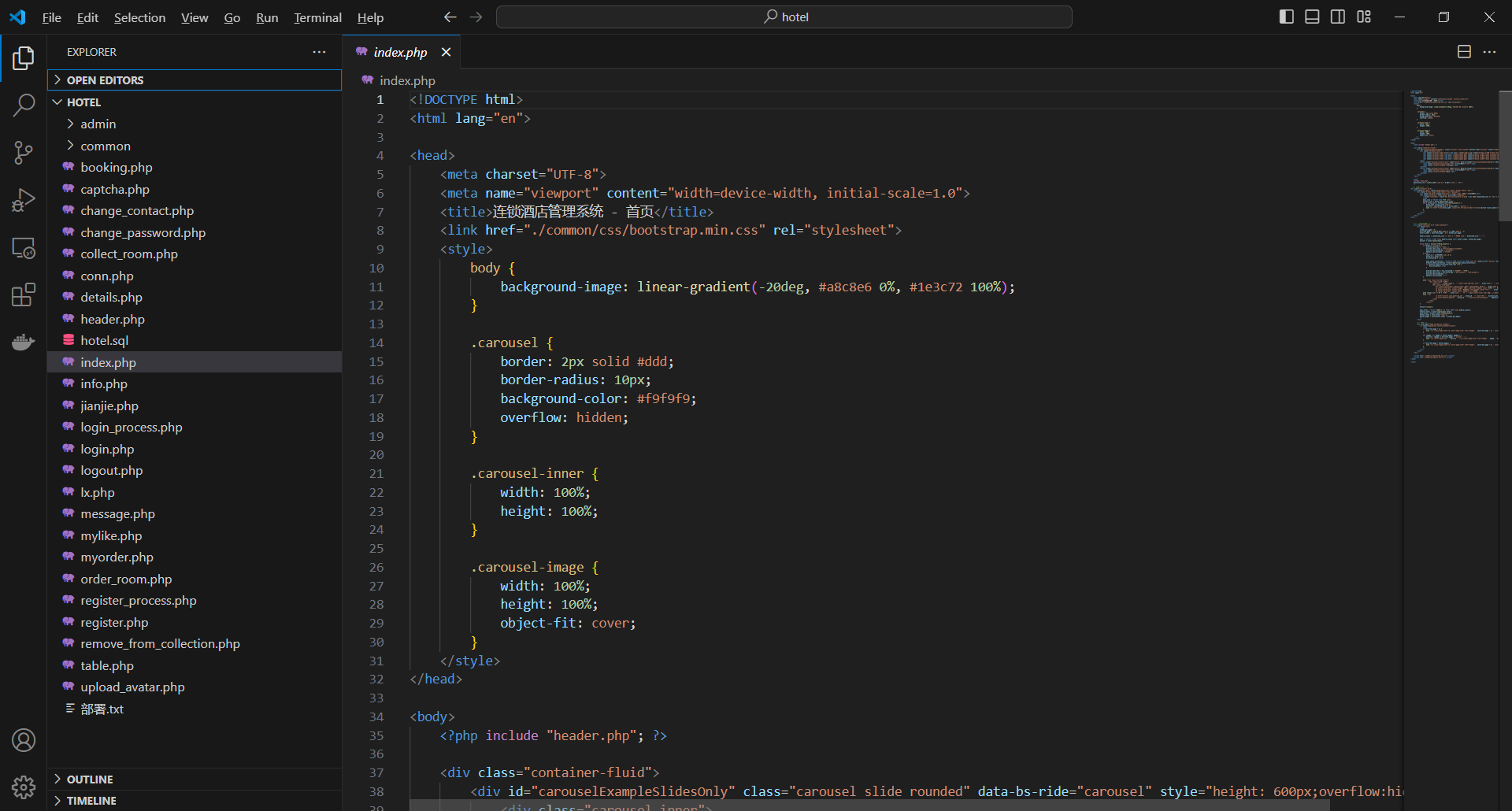

基于PHP的连锁酒店管理系统

有需要请加文章底部Q哦 可远程调试 基于PHP的连锁酒店管理系统 一 介绍 连锁酒店管理系统基于原生PHP开发,数据库mysql,前端bootstrap。系统角色分为用户和管理员。 技术栈 phpmysqlbootstrapphpstudyvscode 二 功能 用户 1 注册/登录/注销 2 个人中…...