Running the C++ version of YOLOv8 (TensorRT) on NVIDIA JetPack 4.5.1

How I got here (feel free to skip)

To get YOLOv8 running on arm64 I tried a great many codebases, and most of them were too demanding about library versions.

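One of them depends on the ONNX Runtime library (https://github.com/UNeedCryDear/yolov8-opencv-onnxruntime-cpp).

Building it failed with: error: 'OrtSessionOptionsAppendExecutionProvider_CUDA' was not declared in this scope. Looking into it, the plain ONNX Runtime library is not enough; the GPU build of ONNX Runtime is required,

because this function exists only in the GPU build: OrtStatus* status = OrtSessionOptionsAppendExecutionProvider_CUDA(_OrtSessionOptions, cudaID);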

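The official ONNX Runtime download page (https://github.com/microsoft/onnxruntime/releases/)

has only one release I could use, and it is not the GPU build. According to forum posts I found, the ONNX Runtime project does not publish a prebuilt NVIDIA GPU library for this platform, so you have to compile it yourself.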

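I tried compiling it myself and ran into all sorts of version mismatches: first the OpenCV version was reported as too old, then a string of other problems. I upgraded everything as requested, and then the build demanded a newer GCC as well. At that point I had to give up, because the base environment on this hardware cannot be changed, so I abandoned the ONNX Runtime based YOLOv8 code above. (The lesson: for large libraries like this, download the official prebuilt binaries whenever you can. Building them yourself is very painful, so unless you truly have no alternative, switch to different code instead. Version matching and compilation problems are the hardest to resolve.)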

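Luckily I found a lightweight C++ YOLOv8 implementation that runs on NVIDIA arm64 hardware. It is concise and easy to use, does not depend on a pile of extra libraries, and builds with nothing more than the libraries that ship with JetPack. It was not easy to find; the complete code follows.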

- JetPack version

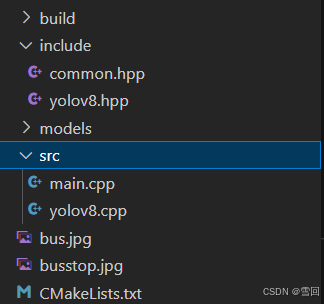

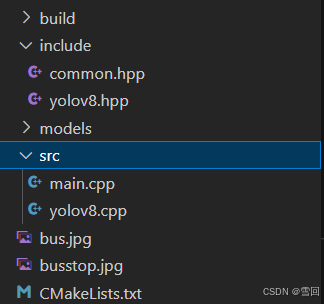

- File structure

- main.cpp

    //
    // Created by triple-Mu on 24-1-2023.
    // Modified by Q-engineering on 6-3-2024
    //

    #include "chrono"
    #include "opencv2/opencv.hpp"
    #include "yolov8.hpp"

    using namespace std;
    using namespace cv;

    //#define VIDEO

    cv::Size im_size(640, 640);
    const int num_labels = 80;
    const int topk = 100;
    const float score_thres = 0.25f;
    const float iou_thres = 0.65f;

    int main(int argc, char** argv)
    {
        float f;
        float FPS[16];
        int i, Fcnt=0;
        cv::Mat image;
        std::chrono::steady_clock::time_point Tbegin, Tend;

        if (argc < 3) {
            fprintf(stderr,"Usage: ./YoloV8_RT [model_trt.engine] [image or video path] \n");
            return -1;
        }
        const string engine_file_path = argv[1];
        const string imagepath = argv[2];

        for(i=0;i<16;i++) FPS[i]=0.0;

        cout << "Set CUDA...\n" << endl;

        //wjp
        // cudaSetDevice(0);
        cudaStream_t(0);

        cout << "Loading TensorRT model " << engine_file_path << endl;
        cout << "\nWait a second...." << std::flush;
        auto yolov8 = new YOLOv8(engine_file_path);

        cout << "\rLoading the pipe... " << string(10, ' ')<< "\n\r" ;
        cout << endl;
        yolov8->MakePipe(true);

    #ifdef VIDEO
        VideoCapture cap(imagepath);
        if (!cap.isOpened()) {
            cerr << "ERROR: Unable to open the stream " << imagepath << endl;
            return 0;
        }
    #endif // VIDEO

        while(1){
    #ifdef VIDEO
            cap >> image;
            if (image.empty()) {
                cerr << "ERROR: Unable to grab from the camera" << endl;
                break;
            }
    #else
            image = cv::imread(imagepath);
    #endif
            yolov8->CopyFromMat(image, im_size);

            std::vector<Object> objs;

            Tbegin = std::chrono::steady_clock::now();
            yolov8->Infer();
            Tend = std::chrono::steady_clock::now();

            yolov8->PostProcess(objs, score_thres, iou_thres, topk, num_labels);
            yolov8->DrawObjects(image, objs);

            //calculate frame rate
            f = std::chrono::duration_cast <std::chrono::milliseconds> (Tend - Tbegin).count();
            cout << "Infer time " << f << endl;
            if(f>0.0) FPS[((Fcnt++)&0x0F)]=1000.0/f;
            for(f=0.0, i=0;i<16;i++){ f+=FPS[i]; }
            putText(image, cv::format("FPS %0.2f", f/16),cv::Point(10,20),cv::FONT_HERSHEY_SIMPLEX,0.6, cv::Scalar(0, 0, 255));

            //show output
            // imshow("Jetson Orin Nano- 8 Mb RAM", image);
            // char esc = cv::waitKey(1);
            // if(esc == 27) break;

            // write the annotated frame to disk and exit after the first frame
            imwrite("./out.jpg", image);
            return 0;
        }
        cv::destroyAllWindows();

        delete yolov8;

        return 0;
    }

- yolov8.cpp

    //
    // Created by triple-Mu on 24-1-2023.
    // Modified by Q-engineering on 6-3-2024
    //

    #include "yolov8.hpp"
    #include <cuda_runtime_api.h>
    #include <cuda.h>

    //----------------------------------------------------------------------------------------
    //using namespace det;
    //----------------------------------------------------------------------------------------
    const char* class_names[] = {
        "person", "bicycle", "car", "motorcycle", "airplane", "bus", "train", "truck", "boat", "traffic light",
        "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
        "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee",
        "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard",
        "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple",
        "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "couch",
        "potted plant", "bed", "dining table", "toilet", "tv", "laptop", "mouse", "remote", "keyboard", "cell phone",
        "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear",
        "hair drier", "toothbrush"
    };
    //----------------------------------------------------------------------------------------
    YOLOv8::YOLOv8(const std::string& engine_file_path)
    {
        std::ifstream file(engine_file_path, std::ios::binary);
        assert(file.good());
        file.seekg(0, std::ios::end);
        auto size = file.tellg();
        file.seekg(0, std::ios::beg);
        char* trtModelStream = new char[size];
        assert(trtModelStream);
        file.read(trtModelStream, size);
        file.close();
        initLibNvInferPlugins(&this->gLogger, "");
        this->runtime = nvinfer1::createInferRuntime(this->gLogger);
        assert(this->runtime != nullptr);

        this->engine = this->runtime->deserializeCudaEngine(trtModelStream, size);
        assert(this->engine != nullptr);
        delete[] trtModelStream;
        this->context = this->engine->createExecutionContext();

        assert(this->context != nullptr);
        cudaStreamCreate(&this->stream);
        this->num_bindings = this->engine->getNbBindings();

        for (int i = 0; i < this->num_bindings; ++i) {
            Binding binding;
            nvinfer1::Dims dims;
            nvinfer1::DataType dtype = this->engine->getBindingDataType(i);
            std::string name = this->engine->getBindingName(i);
            binding.name = name;
            binding.dsize = type_to_size(dtype);

            bool IsInput = engine->bindingIsInput(i);
            if (IsInput) {
                this->num_inputs += 1;
                dims = this->engine->getProfileDimensions(i, 0, nvinfer1::OptProfileSelector::kMAX);
                binding.size = get_size_by_dims(dims);
                binding.dims = dims;
                this->input_bindings.push_back(binding);
                // set max opt shape
                this->context->setBindingDimensions(i, dims);
            }
            else {
                dims = this->context->getBindingDimensions(i);
                binding.size = get_size_by_dims(dims);
                binding.dims = dims;
                this->output_bindings.push_back(binding);
                this->num_outputs += 1;
            }
        }
    }
    //----------------------------------------------------------------------------------------
    YOLOv8::~YOLOv8()
    {
        this->context->destroy();
        this->engine->destroy();
        this->runtime->destroy();
        cudaStreamDestroy(this->stream);
        for (auto& ptr : this->device_ptrs) {
            CHECK(cudaFree(ptr));
        }

        for (auto& ptr : this->host_ptrs) {
            CHECK(cudaFreeHost(ptr));
        }
    }
    //----------------------------------------------------------------------------------------
    void YOLOv8::MakePipe(bool warmup)
    {
    #ifndef CUDART_VERSION
    #error CUDART_VERSION Undefined!
    #endif

        for (auto& bindings : this->input_bindings) {
            void* d_ptr;
    #if(CUDART_VERSION < 11000)
            CHECK(cudaMalloc(&d_ptr, bindings.size * bindings.dsize));
    #else
            CHECK(cudaMallocAsync(&d_ptr, bindings.size * bindings.dsize, this->stream));
    #endif
            this->device_ptrs.push_back(d_ptr);
        }

        for (auto& bindings : this->output_bindings) {
            void * d_ptr, *h_ptr;
            size_t size = bindings.size * bindings.dsize;
    #if(CUDART_VERSION < 11000)
            CHECK(cudaMalloc(&d_ptr, bindings.size * bindings.dsize));
    #else
            CHECK(cudaMallocAsync(&d_ptr, bindings.size * bindings.dsize, this->stream));
    #endif
            CHECK(cudaHostAlloc(&h_ptr, size, 0));
            this->device_ptrs.push_back(d_ptr);
            this->host_ptrs.push_back(h_ptr);
        }

        if (warmup) {
            for (int i = 0; i < 10; i++) {
                for (auto& bindings : this->input_bindings) {
                    size_t size = bindings.size * bindings.dsize;
                    void* h_ptr = malloc(size);
                    memset(h_ptr, 0, size);
                    CHECK(cudaMemcpyAsync(this->device_ptrs[0], h_ptr, size, cudaMemcpyHostToDevice, this->stream));
                    free(h_ptr);
                }
                this->Infer();
            }
        }
    }
    //----------------------------------------------------------------------------------------
    void YOLOv8::Letterbox(const cv::Mat& image, cv::Mat& out, cv::Size& size)
    {
        const float inp_h = size.height;
        const float inp_w = size.width;
        float height = image.rows;
        float width = image.cols;

        float r = std::min(inp_h / height, inp_w / width);
        int padw = std::round(width * r);
        int padh = std::round(height * r);

        cv::Mat tmp;
        if ((int)width != padw || (int)height != padh) {
            cv::resize(image, tmp, cv::Size(padw, padh));
        }
        else {
            tmp = image.clone();
        }

        float dw = inp_w - padw;
        float dh = inp_h - padh;

        dw /= 2.0f;
        dh /= 2.0f;
        int top = int(std::round(dh - 0.1f));
        int bottom = int(std::round(dh + 0.1f));
        int left = int(std::round(dw - 0.1f));
        int right = int(std::round(dw + 0.1f));

        cv::copyMakeBorder(tmp, tmp, top, bottom, left, right, cv::BORDER_CONSTANT, {114, 114, 114});

        cv::dnn::blobFromImage(tmp, out, 1 / 255.f, cv::Size(), cv::Scalar(0, 0, 0), true, false, CV_32F);
        this->pparam.ratio = 1 / r;
        this->pparam.dw = dw;
        this->pparam.dh = dh;
        this->pparam.height = height;
        this->pparam.width = width;
    }
    //----------------------------------------------------------------------------------------
    void YOLOv8::CopyFromMat(const cv::Mat& image)
    {
        cv::Mat nchw;
        auto& in_binding = this->input_bindings[0];
        auto width = in_binding.dims.d[3];
        auto height = in_binding.dims.d[2];
        cv::Size size{width, height};
        this->Letterbox(image, nchw, size);

        this->context->setBindingDimensions(0, nvinfer1::Dims{4, {1, 3, height, width}});

        CHECK(cudaMemcpyAsync(this->device_ptrs[0], nchw.ptr<float>(), nchw.total() * nchw.elemSize(), cudaMemcpyHostToDevice, this->stream));
    }
    //----------------------------------------------------------------------------------------
    void YOLOv8::CopyFromMat(const cv::Mat& image, cv::Size& size)
    {
        cv::Mat nchw;
        this->Letterbox(image, nchw, size);
        this->context->setBindingDimensions(0, nvinfer1::Dims{4, {1, 3, size.height, size.width}});
        CHECK(cudaMemcpyAsync(this->device_ptrs[0], nchw.ptr<float>(), nchw.total() * nchw.elemSize(), cudaMemcpyHostToDevice, this->stream));
    }
    //----------------------------------------------------------------------------------------
    void YOLOv8::Infer()
    {
        this->context->enqueueV2(this->device_ptrs.data(), this->stream, nullptr);
        for (int i = 0; i < this->num_outputs; i++) {
            size_t osize = this->output_bindings[i].size * this->output_bindings[i].dsize;
            CHECK(cudaMemcpyAsync(this->host_ptrs[i], this->device_ptrs[i + this->num_inputs], osize, cudaMemcpyDeviceToHost, this->stream));
        }
        cudaStreamSynchronize(this->stream);
    }
    //----------------------------------------------------------------------------------------
    void YOLOv8::PostProcess(std::vector<Object>& objs, float score_thres, float iou_thres, int topk, int num_labels)
    {
        objs.clear();
        auto num_channels = this->output_bindings[0].dims.d[1];
        auto num_anchors = this->output_bindings[0].dims.d[2];

        auto& dw = this->pparam.dw;
        auto& dh = this->pparam.dh;
        auto& width = this->pparam.width;
        auto& height = this->pparam.height;
        auto& ratio = this->pparam.ratio;

        std::vector<cv::Rect> bboxes;
        std::vector<float> scores;
        std::vector<int> labels;
        std::vector<int> indices;

        cv::Mat output = cv::Mat(num_channels, num_anchors, CV_32F, static_cast<float*>(this->host_ptrs[0]));
        output = output.t();
        for (int i = 0; i < num_anchors; i++) {
            auto row_ptr = output.row(i).ptr<float>();
            auto bboxes_ptr = row_ptr;
            auto scores_ptr = row_ptr + 4;
            auto max_s_ptr = std::max_element(scores_ptr, scores_ptr + num_labels);
            float score = *max_s_ptr;
            if (score > score_thres) {
                float x = *bboxes_ptr++ - dw;
                float y = *bboxes_ptr++ - dh;
                float w = *bboxes_ptr++;
                float h = *bboxes_ptr;

                float x0 = clamp((x - 0.5f * w) * ratio, 0.f, width);
                float y0 = clamp((y - 0.5f * h) * ratio, 0.f, height);
                float x1 = clamp((x + 0.5f * w) * ratio, 0.f, width);
                float y1 = clamp((y + 0.5f * h) * ratio, 0.f, height);

                int label = max_s_ptr - scores_ptr;
                cv::Rect_<float> bbox;
                bbox.x = x0;
                bbox.y = y0;
                bbox.width = x1 - x0;
                bbox.height = y1 - y0;

                bboxes.push_back(bbox);
                labels.push_back(label);
                scores.push_back(score);
            }
        }

    #ifdef BATCHED_NMS
        cv::dnn::NMSBoxesBatched(bboxes, scores, labels, score_thres, iou_thres, indices);
    #else
        cv::dnn::NMSBoxes(bboxes, scores, score_thres, iou_thres, indices);
    #endif

        int cnt = 0;
        for (auto& i : indices) {
            if (cnt >= topk) {
                break;
            }
            Object obj;
            obj.rect = bboxes[i];
            obj.prob = scores[i];
            obj.label = labels[i];
            objs.push_back(obj);
            cnt += 1;
        }
    }
    //----------------------------------------------------------------------------------------
    void YOLOv8::DrawObjects(cv::Mat& bgr, const std::vector<Object>& objs)
    {
        char text[256];

        for (auto& obj : objs) {
            cv::rectangle(bgr, obj.rect, cv::Scalar(255, 0, 0));

            sprintf(text, "%s %.1f%%", class_names[obj.label], obj.prob * 100);

            int baseLine = 0;
            cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);

            int x = (int)obj.rect.x;
            int y = (int)obj.rect.y - label_size.height - baseLine;

            if (y < 0) y = 0;
            if (y > bgr.rows) y = bgr.rows;
            if (x + label_size.width > bgr.cols) x = bgr.cols - label_size.width;

            cv::rectangle(bgr, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)), cv::Scalar(255, 255, 255), -1);

            cv::putText(bgr, text, cv::Point(x, y + label_size.height), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
        }
    }
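The coordinate bookkeeping shared by Letterbox and PostProcess in yolov8.cpp is easy to lose among the OpenCV calls. As a minimal sketch, assuming nothing beyond the arithmetic in those two functions, the same mapping can be written without OpenCV. The names LetterboxParam, Box, make_letterbox, and to_source are hypothetical and exist only for this illustration; they are not part of the original project.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical helper types for illustration only.
struct LetterboxParam {
    float ratio;  // 1 / r: maps network pixels back to source pixels
    float dw;     // horizontal padding on each side, in network pixels
    float dh;     // vertical padding on each side, in network pixels
};

struct Box {
    float x0, y0, x1, y1;  // corners in source-image pixels
};

// Same arithmetic as YOLOv8::Letterbox: scale by r so the image fits inside
// net_w x net_h, then split the leftover space evenly into two borders.
LetterboxParam make_letterbox(float src_w, float src_h, float net_w, float net_h)
{
    assert(src_w > 0 && src_h > 0);
    const float r = std::min(net_h / src_h, net_w / src_w);
    const float resized_w = std::round(src_w * r);
    const float resized_h = std::round(src_h * r);
    return {1.0f / r, (net_w - resized_w) / 2.0f, (net_h - resized_h) / 2.0f};
}

// Same arithmetic as YOLOv8::PostProcess: remove the padding from the box
// centre, convert centre/size to corners, rescale, and clamp to the image.
Box to_source(float cx, float cy, float w, float h,
              const LetterboxParam& p, float src_w, float src_h)
{
    auto clampf = [](float v, float lo, float hi) { return std::max(lo, std::min(v, hi)); };
    const float x = cx - p.dw;
    const float y = cy - p.dh;
    return {clampf((x - 0.5f * w) * p.ratio, 0.f, src_w),
            clampf((y - 0.5f * h) * p.ratio, 0.f, src_h),
            clampf((x + 0.5f * w) * p.ratio, 0.f, src_w),
            clampf((y + 0.5f * h) * p.ratio, 0.f, src_h)};
}
```

For a 1280x720 frame and a 640x640 network input, r is 0.5, so the frame is resized to 640x360 and padded by 140 rows at the top and bottom. A detection centred at (320, 320) with size 100x50 in network coordinates then maps back to the corners (540, 310) and (740, 410) in the source frame.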

- common.hpp

    //
    // Created by triple-Mu on 24-1-2023.
    // Modified by Q-engineering on 6-3-2024
    //

    #ifndef DETECT_NORMAL_COMMON_HPP
    #define DETECT_NORMAL_COMMON_HPP
    #include "NvInfer.h"
    #include "opencv2/opencv.hpp"

    #define CHECK(call)                                                       \
        do {                                                                  \
            const cudaError_t error_code = call;                              \
            if (error_code != cudaSuccess) {                                  \
                printf("CUDA Error:\n");                                      \
                printf(" File: %s\n", __FILE__);                              \
                printf(" Line: %d\n", __LINE__);                              \
                printf(" Error code: %d\n", error_code);                      \
                printf(" Error text: %s\n", cudaGetErrorString(error_code));  \
                exit(1);                                                      \
            }                                                                 \
        } while (0)

    class Logger: public nvinfer1::ILogger {
    public:
        nvinfer1::ILogger::Severity reportableSeverity;

        explicit Logger(nvinfer1::ILogger::Severity severity = nvinfer1::ILogger::Severity::kINFO):
            reportableSeverity(severity)
        {
        }

        void log(nvinfer1::ILogger::Severity severity, const char* msg) noexcept override
        {
            if (severity > reportableSeverity) {
                return;
            }
            switch (severity) {
                case nvinfer1::ILogger::Severity::kINTERNAL_ERROR:
                    std::cerr << "INTERNAL_ERROR: ";
                    break;
                case nvinfer1::ILogger::Severity::kERROR:
                    std::cerr << "ERROR: ";
                    break;
                case nvinfer1::ILogger::Severity::kWARNING:
                    std::cerr << "WARNING: ";
                    break;
                case nvinfer1::ILogger::Severity::kINFO:
                    std::cerr << "INFO: ";
                    break;
                default:
                    std::cerr << "VERBOSE: ";
                    break;
            }
            std::cerr << msg << std::endl;
        }
    };

    inline int get_size_by_dims(const nvinfer1::Dims& dims)
    {
        int size = 1;
        for (int i = 0; i < dims.nbDims; i++) {
            size *= dims.d[i];
        }
        return size;
    }

    inline int type_to_size(const nvinfer1::DataType& dataType)
    {
        switch (dataType) {
            case nvinfer1::DataType::kFLOAT:
                return 4;
            case nvinfer1::DataType::kHALF:
                return 2;
            case nvinfer1::DataType::kINT32:
                return 4;
            case nvinfer1::DataType::kINT8:
                return 1;
            case nvinfer1::DataType::kBOOL:
                return 1;
            default:
                return 4;
        }
    }

    inline static float clamp(float val, float min, float max)
    {
        return val > min ? (val < max ? val : max) : min;
    }

    namespace det {
    struct Binding {
        size_t size = 1;
        size_t dsize = 1;
        nvinfer1::Dims dims;
        std::string name;
    };

    struct Object {
        cv::Rect_<float> rect;
        int label = 0;
        float prob = 0.0;
    };

    struct PreParam {
        float ratio = 1.0f;
        float dw = 0.0f;
        float dh = 0.0f;
        float height = 0;
        float width = 0;
    };
    } // namespace det
    #endif // DETECT_NORMAL_COMMON_HPP

- yolov8.hpp

    //
    // Created by triple-Mu on 24-1-2023.
    // Modified by Q-engineering on 6-3-2024
    //
    #ifndef DETECT_NORMAL_YOLOV8_HPP
    #define DETECT_NORMAL_YOLOV8_HPP
    #include "NvInferPlugin.h"
    #include "common.hpp"
    #include "fstream"

    using namespace det;

    class YOLOv8 {
    private:
        nvinfer1::ICudaEngine* engine = nullptr;
        nvinfer1::IRuntime* runtime = nullptr;
        nvinfer1::IExecutionContext* context = nullptr;
        cudaStream_t stream = nullptr;
        Logger gLogger{nvinfer1::ILogger::Severity::kERROR};
    public:
        int num_bindings;
        int num_inputs = 0;
        int num_outputs = 0;
        std::vector<Binding> input_bindings;
        std::vector<Binding> output_bindings;
        std::vector<void*> host_ptrs;
        std::vector<void*> device_ptrs;

        PreParam pparam;
    public:
        explicit YOLOv8(const std::string& engine_file_path);
        ~YOLOv8();

        void MakePipe(bool warmup = true);
        void CopyFromMat(const cv::Mat& image);
        void CopyFromMat(const cv::Mat& image, cv::Size& size);
        void Letterbox(const cv::Mat& image, cv::Mat& out, cv::Size& size);
        void Infer();
        void PostProcess(std::vector<Object>& objs, float score_thres, float iou_thres, int topk, int num_labels = 80);
        void DrawObjects(cv::Mat& bgr, const std::vector<Object>& objs);
    };

    #endif // DETECT_NORMAL_YOLOV8_HPP

- CMakeLists.txt

    cmake_minimum_required(VERSION 3.1)

    set(CMAKE_CUDA_ARCHITECTURES 60 61 62 70 72 75 86 89 90)
    set(CMAKE_CUDA_COMPILER /usr/local/cuda/bin/nvcc)

    project(YoloV8rt LANGUAGES CXX CUDA)

    set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=c++14 -O3")
    set(CMAKE_CXX_STANDARD 14)
    set(CMAKE_BUILD_TYPE Release)
    option(CUDA_USE_STATIC_CUDA_RUNTIME OFF)

    # CUDA
    include_directories(/usr/local/cuda-10.2/targets/aarch64-linux/include)
    link_directories(/usr/local/cuda-10.2/targets/aarch64-linux/lib)
    # find_package(CUDA REQUIRED)
    # message(STATUS "CUDA Libs: \n${CUDA_LIBRARIES}\n")
    # get_filename_component(CUDA_LIB_DIR ${CUDA_LIBRARIES} DIRECTORY)
    # message(STATUS "CUDA Headers: \n${CUDA_INCLUDE_DIRS}\n")

    # OpenCV
    find_package(OpenCV REQUIRED)

    # TensorRT
    set(TensorRT_INCLUDE_DIRS /usr/include /usr/include/aarch64-linux-gnu)
    set(TensorRT_LIBRARIES /usr/lib/aarch64-linux-gnu)

    message(STATUS "TensorRT Libs: \n\n${TensorRT_LIBRARIES}\n")
    message(STATUS "TensorRT Headers: \n${TensorRT_INCLUDE_DIRS}\n")

    list(APPEND INCLUDE_DIRS
            ${CUDA_INCLUDE_DIRS}
            ${OpenCV_INCLUDE_DIRS}
            ${TensorRT_INCLUDE_DIRS}
            include
            )

    list(APPEND ALL_LIBS
            ${CUDA_LIBRARIES}
            ${CUDA_LIB_DIR}
            ${OpenCV_LIBRARIES}
            ${TensorRT_LIBRARIES}
            )

    include_directories(${INCLUDE_DIRS})

    add_executable(${PROJECT_NAME}
            src/main.cpp
            src/yolov8.cpp
            include/yolov8.hpp
            include/common.hpp
            )

    target_link_libraries(${PROJECT_NAME} PUBLIC ${ALL_LIBS})
    target_link_libraries(${PROJECT_NAME} PRIVATE nvinfer nvinfer_plugin cudart ${OpenCV_LIBS})

    #place exe in parent folder
    set(EXECUTABLE_OUTPUT_PATH "./")

    if (${OpenCV_VERSION} VERSION_GREATER_EQUAL 4.7.0)
        message(STATUS "Build with -DBATCHED_NMS")
        add_definitions(-DBATCHED_NMS)
    endif ()

- Original project

https://github.com/Qengineering/YoloV8-TensorRT-Jetson_Nano
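One detail of main.cpp is worth a note. The FPS readout sums a 16-slot buffer that starts out filled with zeros and always divides by 16, so until 16 frames have been recorded the displayed value is too low. Because this version writes out.jpg and returns after the first frame, the number drawn on the image is 1/16 of the measured rate. A minimal sketch of one alternative, which divides by the number of samples actually recorded, follows; the class name RollingFps and its methods are hypothetical and not part of the original project.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical helper, not part of the original project: a rolling average
// over the most recent N frame rates that ignores slots not yet filled.
template <std::size_t N>
class RollingFps {
    static_assert(N > 0, "RollingFps needs at least one slot");

public:
    // Record one inference time in milliseconds. Non-positive values are
    // skipped, mirroring the `if (f > 0.0)` guard in main.cpp.
    void add_ms(float ms)
    {
        if (ms <= 0.0f) return;
        samples_[next_] = 1000.0f / ms;
        next_ = (next_ + 1) % N;
        if (count_ < N) ++count_;
    }

    // Mean of the recorded samples, or 0 when nothing has been recorded yet.
    float average() const
    {
        assert(count_ <= N);
        if (count_ == 0) return 0.0f;
        float sum = 0.0f;
        // Slots fill from index 0 upward, so the first count_ slots are valid.
        for (std::size_t i = 0; i < count_; ++i) sum += samples_[i];
        return sum / static_cast<float>(count_);
    }

private:
    std::array<float, N> samples_{};
    std::size_t next_ = 0;
    std::size_t count_ = 0;
};
```

With a RollingFps<16> instance, a single 50 ms inference reports 20 FPS immediately, where the fixed division by 16 in main.cpp would show 1.25.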

相关文章:

在invidia jetpack4.5.1上运行c++版yolov8(tensorRT)

心路历程(可略过) 为了能在arm64上跑通yolov8,我试过很多很多代码,太多对库版本的要求太高了; 比如说有一个是需要依赖onnx库的,(https://github.com/UNeedCryDear/yolov8-opencv-onnxruntime-…...

Vue3 接入 i18n 实现国际化多语言

在 Vue.js 3 中实现网页的国际化多语言,最常用的包是 vue-i18n。 第一步,安装一个 Vite 下使用 <i18n> 标签的插件:unplugin-vue-i18n npm install unplugin-vue-i18n # 或 yarn add unplugin-vue-i18n 安装完成后,调整 v…...

深度学习环境坑。

前面装好了之后装pytorch之后老显示gpufalse。 https://www.jb51.net/article/247762.htm 原因就是清华源的坑。 安装的时候不要用conda, 用pip命令 我cuda12.6,4070s cudnn-windows-x86_64-8.9.7.29_cuda12-archive.zip cuda_12.5.1_555.85_windows.…...

LLM——10个大型语言模型(LLM)常见面试题以及答案解析

今天我们来总结以下大型语言模型面试中常问的问题 1、哪种技术有助于减轻基于提示的学习中的偏见? A.微调 Fine-tuning B.数据增强 Data augmentation C.提示校准 Prompt calibration D.梯度裁剪 Gradient clipping 答案:C 提示校准包括调整提示,尽量减少产生…...

MongoDB - 聚合阶段 $count、$skip、$project

文章目录 1. $count 聚合阶段2. $skip 聚合阶段3. $project 聚合阶段1. 包含指定字段2. 排除_id字段3. 排除指定字段4. 不能同时指定包含字段和排除字段5. 排除嵌入式文档中的指定字段6. 包含嵌入式文档中的指定字段7. 添加新字段8. 重命名字段 1. $count 聚合阶段 计算匹配到…...

)

如何获取文件缩略图(C#和C++实现)

在C中,可以有以下两种办法 使用COM接口IThumbnailCache 文档链接:IThumbnailCache (thumbcache.h) - Win32 apps | Microsoft Learn 示例代码如下: VOID GetFileThumbnail(PCWSTR path) {HRESULT hr CoInitialize(nullptr);IShellItem* i…...

create-vue项目的README中文版

使用方法 要使用 create-vue 创建一个新的 Vue 项目,只需在终端中运行以下命令: npm create vuelatest[!注意] (latest 或 legacy) 不能省略,否则 npm 可能会解析到缓存中过时版本的包。 或者,如果你需要支持 IE11,你…...

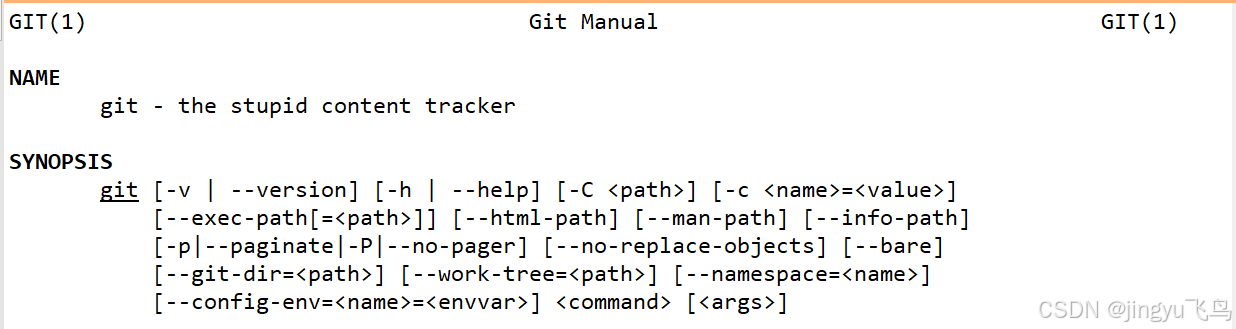

Centos 7系统(最小化安装)安装Git 、git-man帮助、补全git命令-详细文章

安装之前由于是最小化安装centos7安装一些开发环境和工具包 文章使用国内阿里源 cd /etc/yum.repos.d/ && mkdir myrepo && mv * myrepo&&lscurl -O https://mirrors.aliyun.com/repo/epel-7.repo;curl -O https://mirrors.aliyun.com/repo/Centos-7…...

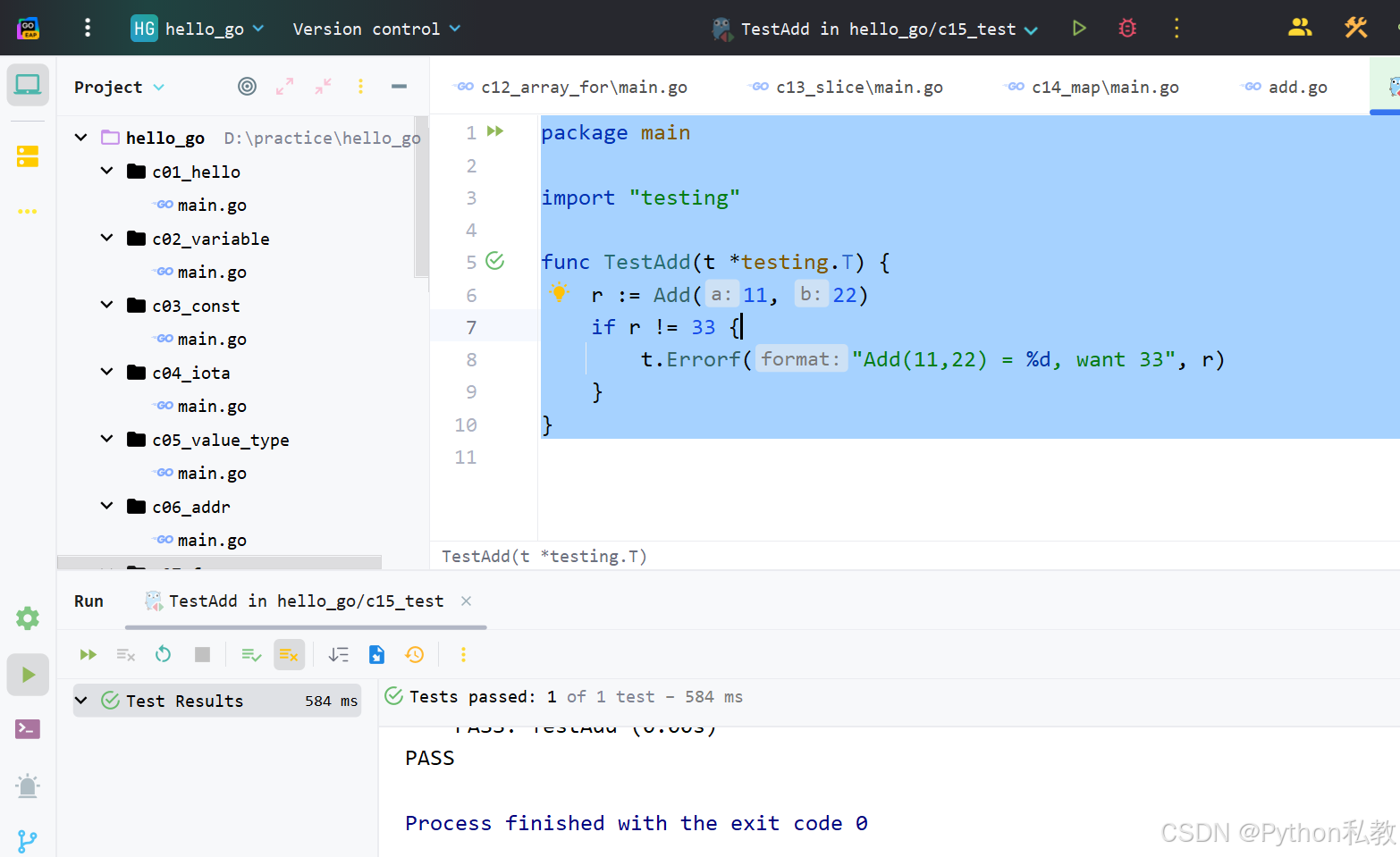

Golang零基础入门课_20240726 课程笔记

视频课程 最近发现越来越多的公司在用Golang了,所以精心整理了一套视频教程给大家,这个只是其中的第一部,后续还会有很多。 视频已经录制完成,完整目录截图如下: 课程目录 01 第一个Go程序.mp402 定义变量.mp403 …...

杂记-镜像

-i https://pypi.tuna.tsinghua.edu.cn/simple 清华 pip intall 出现 error: subprocess-exited-with-error 错误的解决办法———————————pip install --upgrade pip setuptools57.5.0 ————————————————————————————————————…...

如何将WordPress文章中的外链图片批量导入到本地

在使用采集软件进行内容创作时,很多文章中的图片都是远程链接,这不仅会导致前端加载速度慢,还会在微信小程序和抖音小程序中添加各种域名,造成管理上的麻烦。特别是遇到没有备案的外链,更是让人头疼。因此,…...

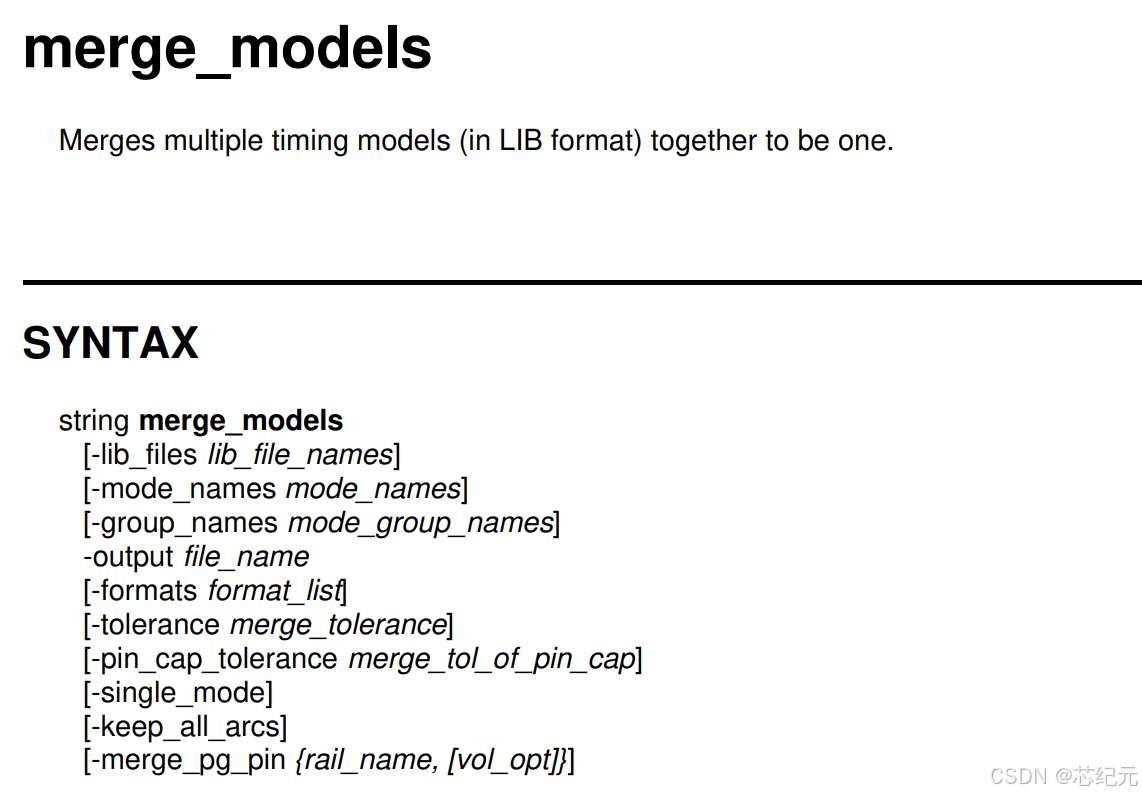

primetime如何合并不同modes的libs到一个lib文件

首先,用primetime 抽 timing model 的指令如下。 代码如下(示例): #抽lib时留一些margin, setup -max/hold -min set_extract_model_margin -port [get_ports -filter "!defined(clocks)"] -max 0.1 #抽lib extract_mod…...

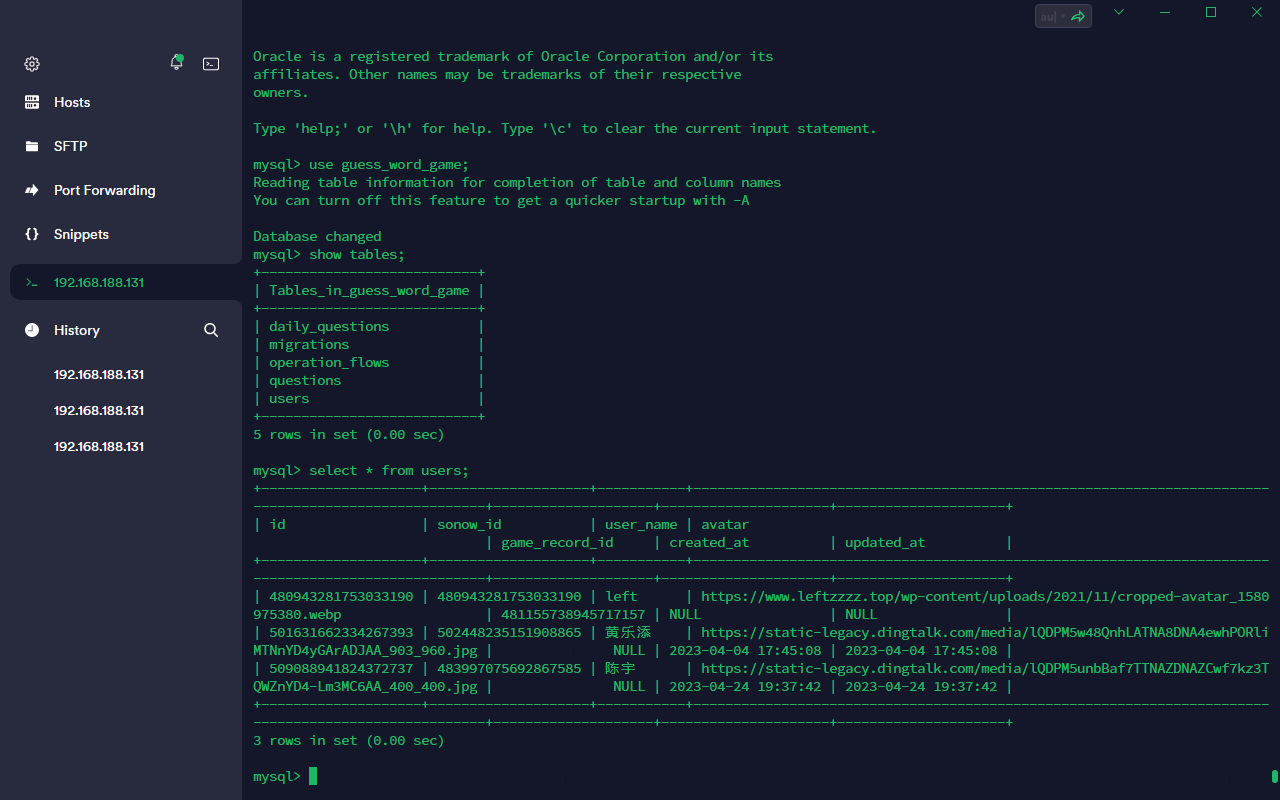

【运维笔记】数据库无法启动,数据库炸后备份恢复数据

事情起因 在做docker作业的时候,把卷映射到了宿主机原来的mysql数据库目录上,宿主机原来的mysql版本为8.0,docker容器版本为5.6,导致翻车。 具体操作 备份目录 将/var/lib/mysql备份到~/mysql_backup:cp /var/lib/…...

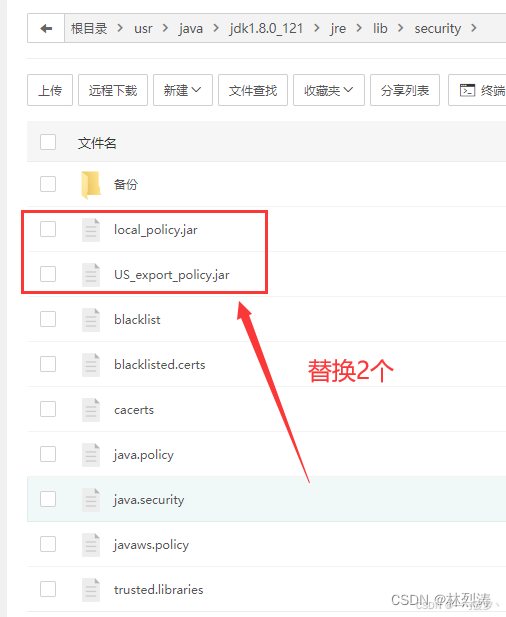

成功解决:java.security.InvalidKeyException: Illegal key size

在集成微信支付到Spring Boot项目时,可能会遇到启动报错 java.security.InvalidKeyException: Illegal key size 的问题。这是由于Java加密扩展(JCE)限制了密钥的长度。幸运的是,我们可以通过简单的替换文件来解决这个问题。 解决…...

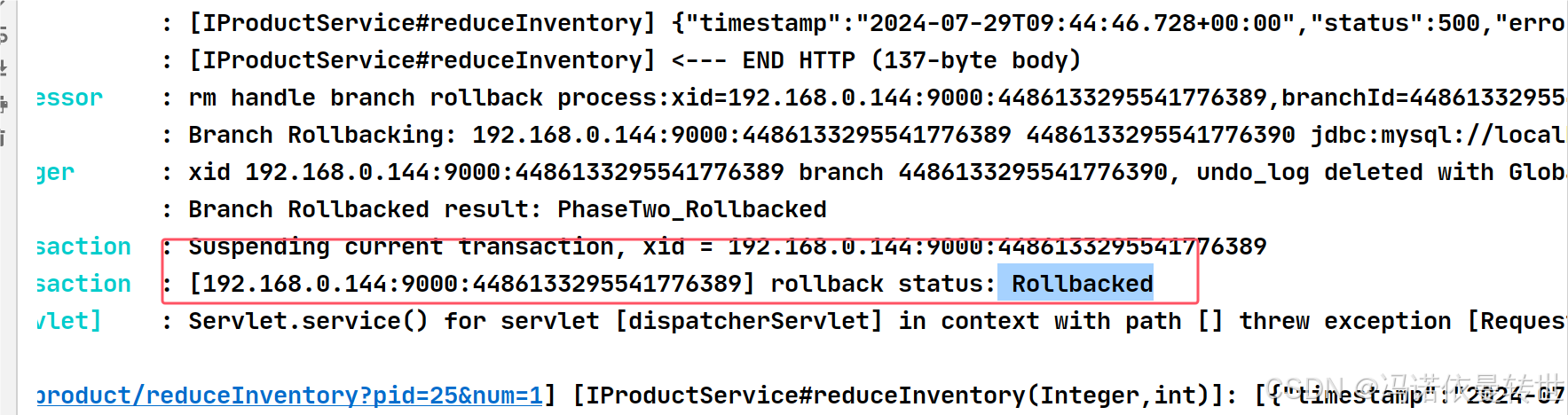

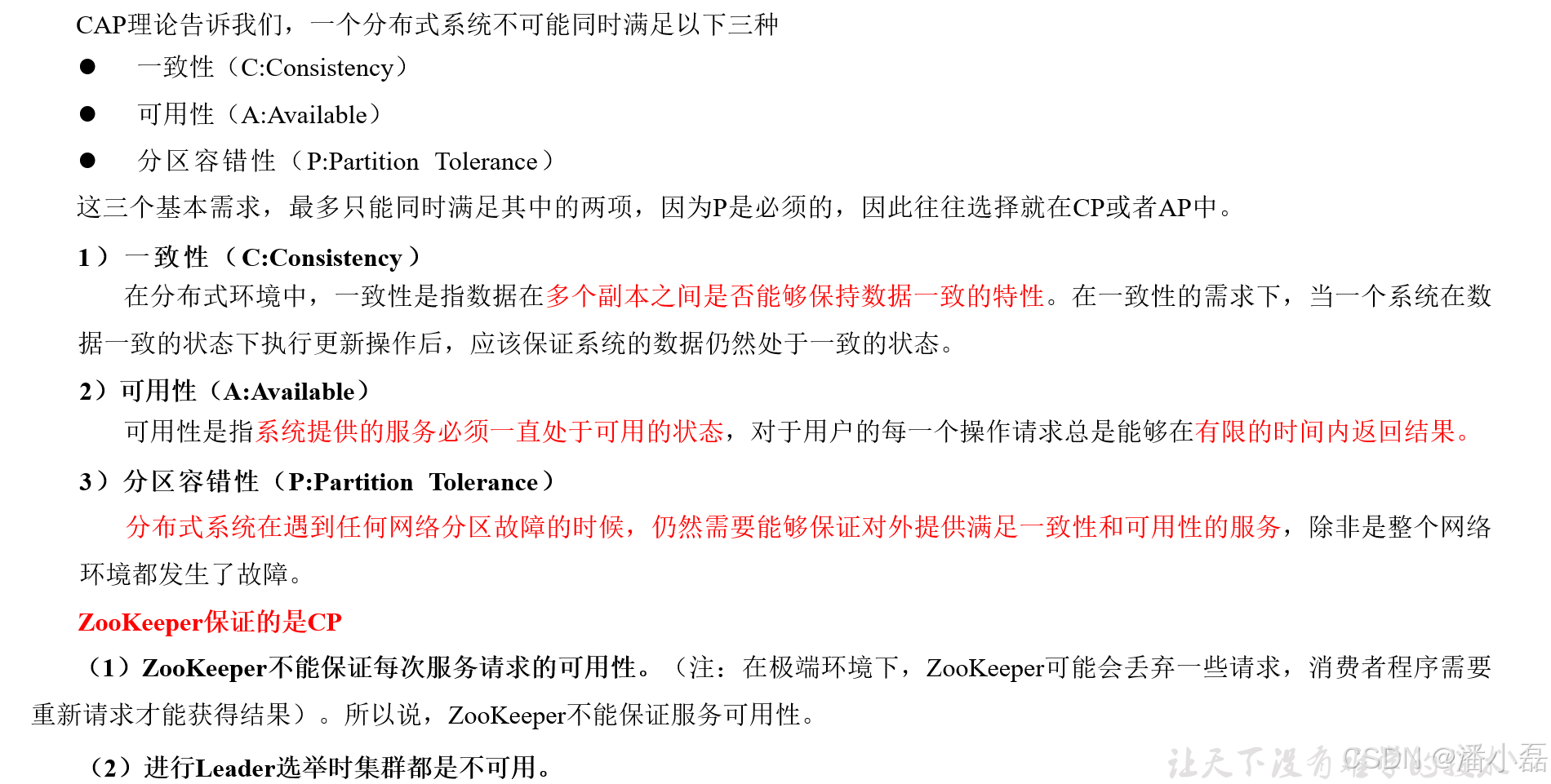

微服务事务管理(分布式事务问题 理论基础 初识Seata XA模式 AT模式 )

目录 一、分布式事务问题 1. 本地事务 2. 分布式事务 3. 演示分布式事务问题 二、理论基础 1. CAP定理 1.1 ⼀致性 1.2 可⽤性 1.3 分区容错 1.4 ⽭盾 2. BASE理论 3. 解决分布式事务的思路 三、初识Seata 1. Seata的架构 2. 部署TC服务 3. 微服务集成Se…...

—— fiddler的工作原理)

测试面试宝典(三十五)—— fiddler的工作原理

Fiddler 是一款强大的 Web 调试工具,其工作原理主要基于代理服务器的机制。 首先,当您在计算机上配置 Fiddler 为系统代理时,客户端(如浏览器)发出的所有 HTTP 和 HTTPS 请求都会被导向 Fiddler。 Fiddler 接收到这些…...

旷野之间32 - OpenAI 拉开了人工智能竞赛的序幕,而Meta 将会赢得胜利

他们通过故事做到了这一点(Snapchat 是第一个)他们用 Reels 实现了这个功能(TikTok 是第一个实现这个功能的)他们正在利用人工智能来实现这一点。 在人工智能竞赛开始时,Meta 的人工智能平台的表现并没有什么特别值得…...

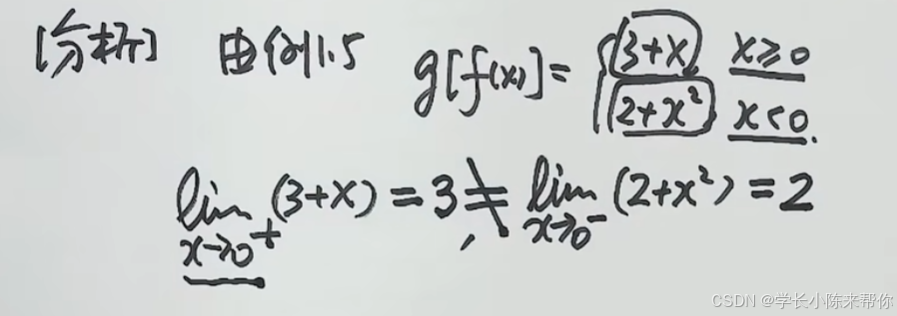

机械学习—零基础学习日志(高数15——函数极限性质)

零基础为了学人工智能,真的开始复习高数 这里我们将会学习函数极限的性质。 唯一性 来一个练习题: 再来一个练习: 这里我问了一下ChatGPT,如果一个值两侧分别趋近于正无穷,以及负无穷。理论上这个极限值应该说是不存…...

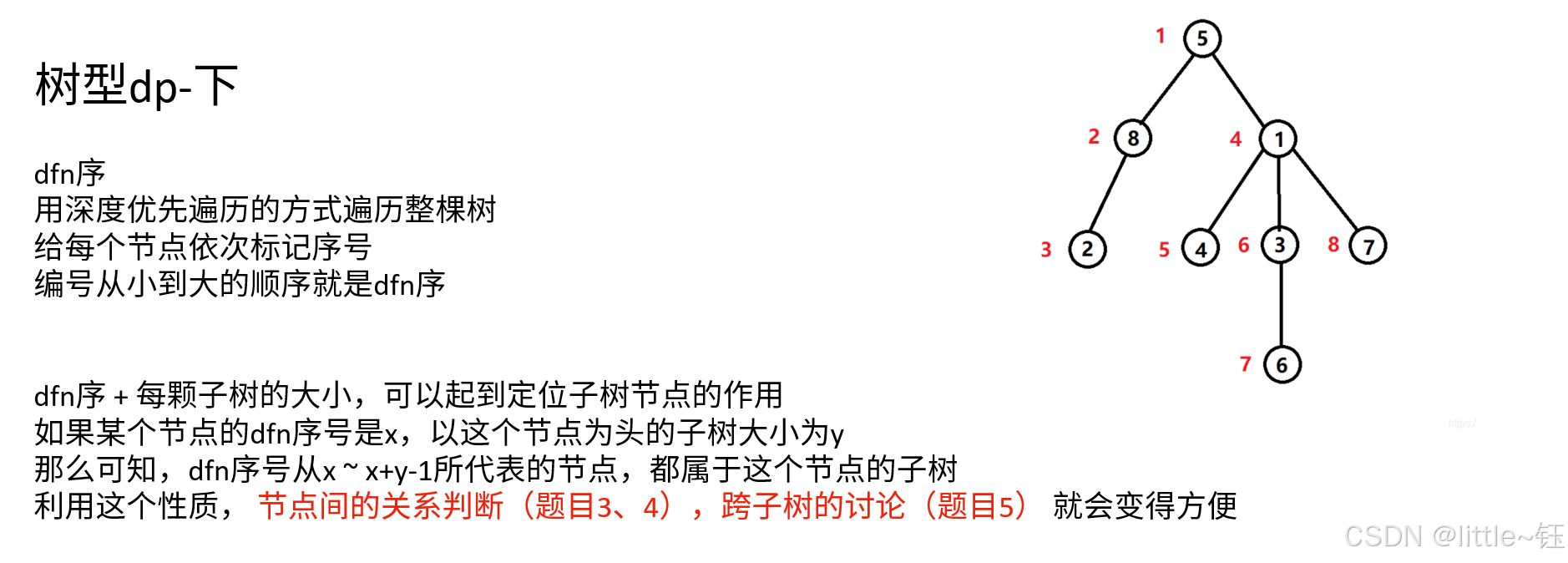

树 形 DP (dnf序)

二叉搜索子树的最大键值 /*** Definition for a binary tree node.* struct TreeNode {* int val;* TreeNode *left;* TreeNode *right;* TreeNode() : val(0), left(nullptr), right(nullptr) {}* TreeNode(int x) : val(x), left(nullptr), right(null…...

React的生命周期?

React的生命周期分为三个主要阶段:挂载(Mounting)、更新(Updating)和卸载(Unmounting)。 1、挂载(Mounting) 当组件实例被创建并插入 DOM 时调用的生命周期方法&#x…...

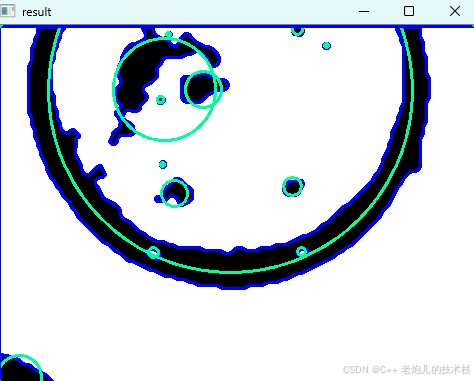

利用最小二乘法找圆心和半径

#include <iostream> #include <vector> #include <cmath> #include <Eigen/Dense> // 需安装Eigen库用于矩阵运算 // 定义点结构 struct Point { double x, y; Point(double x_, double y_) : x(x_), y(y_) {} }; // 最小二乘法求圆心和半径 …...

观成科技:隐蔽隧道工具Ligolo-ng加密流量分析

1.工具介绍 Ligolo-ng是一款由go编写的高效隧道工具,该工具基于TUN接口实现其功能,利用反向TCP/TLS连接建立一条隐蔽的通信信道,支持使用Let’s Encrypt自动生成证书。Ligolo-ng的通信隐蔽性体现在其支持多种连接方式,适应复杂网…...

python/java环境配置

环境变量放一起 python: 1.首先下载Python Python下载地址:Download Python | Python.org downloads ---windows -- 64 2.安装Python 下面两个,然后自定义,全选 可以把前4个选上 3.环境配置 1)搜高级系统设置 2…...

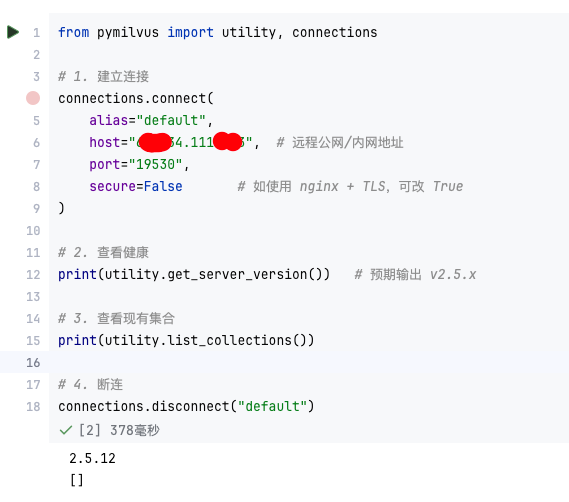

【大模型RAG】Docker 一键部署 Milvus 完整攻略

本文概要 Milvus 2.5 Stand-alone 版可通过 Docker 在几分钟内完成安装;只需暴露 19530(gRPC)与 9091(HTTP/WebUI)两个端口,即可让本地电脑通过 PyMilvus 或浏览器访问远程 Linux 服务器上的 Milvus。下面…...

高频面试之3Zookeeper

高频面试之3Zookeeper 文章目录 高频面试之3Zookeeper3.1 常用命令3.2 选举机制3.3 Zookeeper符合法则中哪两个?3.4 Zookeeper脑裂3.5 Zookeeper用来干嘛了 3.1 常用命令 ls、get、create、delete、deleteall3.2 选举机制 半数机制(过半机制࿰…...

【生成模型】视频生成论文调研

工作清单 上游应用方向:控制、速度、时长、高动态、多主体驱动 类型工作基础模型WAN / WAN-VACE / HunyuanVideo控制条件轨迹控制ATI~镜头控制ReCamMaster~多主体驱动Phantom~音频驱动Let Them Talk: Audio-Driven Multi-Person Conversational Video Generation速…...

QT3D学习笔记——圆台、圆锥

类名作用Qt3DWindow3D渲染窗口容器QEntity场景中的实体(对象或容器)QCamera控制观察视角QPointLight点光源QConeMesh圆锥几何网格QTransform控制实体的位置/旋转/缩放QPhongMaterialPhong光照材质(定义颜色、反光等)QFirstPersonC…...

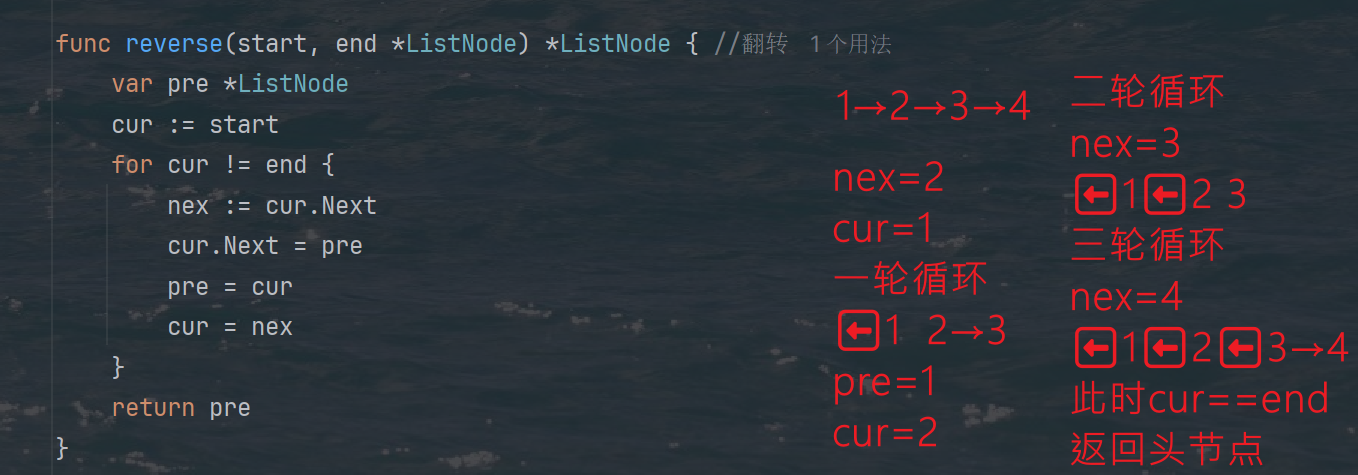

力扣热题100 k个一组反转链表题解

题目: 代码: func reverseKGroup(head *ListNode, k int) *ListNode {cur : headfor i : 0; i < k; i {if cur nil {return head}cur cur.Next}newHead : reverse(head, cur)head.Next reverseKGroup(cur, k)return newHead }func reverse(start, end *ListNode) *ListN…...

python爬虫——气象数据爬取

一、导入库与全局配置 python 运行 import json import datetime import time import requests from sqlalchemy import create_engine import csv import pandas as pd作用: 引入数据解析、网络请求、时间处理、数据库操作等所需库。requests:发送 …...

从面试角度回答Android中ContentProvider启动原理

Android中ContentProvider原理的面试角度解析,分为已启动和未启动两种场景: 一、ContentProvider已启动的情况 1. 核心流程 触发条件:当其他组件(如Activity、Service)通过ContentR…...