Resnet50网络——口腔癌病变识别

一 数据准备

1.导入数据

import matplotlib.pyplot as plt

import tensorflow as tf

import warnings as w

w.filterwarnings('ignore')

# 支持中文

plt.rcParams['font.sans-serif'] = ['SimHei'] # 用来正常显示中文标签

plt.rcParams['axes.unicode_minus'] = False # 用来正常显示负号import os,PIL,pathlib#隐藏警告

import warnings

warnings.filterwarnings('ignore')data_dir = "./data"

data_dir = pathlib.Path(data_dir)image_count = len(list(data_dir.glob('*/*')))print("图片总数为:",image_count)图片总数为: 5192

2.数据预处理

batch_size = 64

img_height = 224

img_width = 224train_ds = tf.keras.preprocessing.image_dataset_from_directory(data_dir,validation_split=0.3,subset="training",seed=12,image_size=(img_height, img_width),batch_size=batch_size)Found 5192 files belonging to 2 classes. Using 3635 files for training.

val_ds = tf.keras.preprocessing.image_dataset_from_directory(data_dir,validation_split=0.3,subset="validation",seed=12,image_size=(img_height, img_width),batch_size=batch_size)Found 5192 files belonging to 2 classes. Using 1557 files for validation.

class_names = train_ds.class_names

print(class_names)['Normal', 'OSCC']

for image_batch, labels_batch in train_ds:print(image_batch.shape)print(labels_batch.shape)break(64, 224, 224, 3) (64,)

AUTOTUNE = tf.data.AUTOTUNEdef preprocess_image(image,label):return (image/255.0,label)# 归一化处理

train_ds = train_ds.map(preprocess_image, num_parallel_calls=AUTOTUNE)

val_ds = val_ds.map(preprocess_image, num_parallel_calls=AUTOTUNE)train_ds = train_ds.cache().shuffle(1000).prefetch(buffer_size=AUTOTUNE)

val_ds = val_ds.cache().prefetch(buffer_size=AUTOTUNE)3.可视化数据

plt.figure(figsize=(15, 10)) # 图形的宽为15高为10for images, labels in train_ds.take(1):for i in range(15):ax = plt.subplot(3, 5, i + 1) plt.imshow(images[i])plt.title(class_names[labels[i]])plt.axis("off")

二 ResNet50模型的构建

from keras import layers

from keras.layers import Input,Activation,BatchNormalization,Flatten, Dropout

from keras.layers import Dense,Conv2D,MaxPooling2D,ZeroPadding2D,AveragePooling2D

from keras.models import Model

import tensorflow as tfdef identity_block(input_tensor,kernel_size,filters,stage,block):''':param input_tensor: 输入张量,通常是前一层的输出:param kernel_size: 卷积核大小,用于第二个卷积层:param filters: 一个包含三个整数的元组,分别表示三个卷积层的过滤器数量:param stage: 当前块的阶段,用于命名:param block: 当前块的名称,用于命名:return:'''# 提取过滤器数量filters1,filters2,filters3 = filters# 基础名称生成name_base = str(stage) + block +'_identity_block_'# 第一个卷积层,使用1x1卷积对输入进行处理,减少通道数。卷积层之后跟着批归一化和ReLU激活x = Conv2D(filters1,(1,1),name=name_base+'conv1')(input_tensor)x = BatchNormalization(name=name_base + 'bn1')(x)x = Activation('relu',name=name_base+'relu1')(x)# 第二个卷积层,使用给定的kernel_size进行卷积,保持输入和输出的空间尺寸相同(通过padding='same')。同样后续跟着批归一化和ReLU激活x = Conv2D(filters2,kernel_size,padding='same',name=name_base+'conv2')(x)x = BatchNormalization(name=name_base + 'bn2')(x)x = Activation('relu',name=name_base+'relu2')(x)# 第三个卷积层,再次使用1x1卷积来调整输出通道数,随后进行批归一化x = Conv2D(filters3, (1, 1), name=name_base + 'conv3')(x)x = BatchNormalization(name=name_base + 'bn3')(x)# 残差连接,将输入张量和经过卷积层处理后的输出张量相加。这种残差连接有助于缓解梯度消失问题,促进信息流动x = layers.add([x,input_tensor],name=name_base+'add')# 加和后的结果上应用ReLU激活函数x = Activation('relu',name=name_base+'relu4')(x)return x'''

在残差网络中,广泛的使用了BN层;但是没有使用MaxPooling以便减小特征图尺寸

作为替代,在每个模块的第一层,都使用了strides = (2,2)的方式进行特征图尺寸缩减

与使用MaxPooling相比,毫无疑问是减少了卷积的次数,输入图像分辨率较大时比较适合

在残差网络的最后一级,先利用layer.add()实现H(x) = x + F(x)

'''

def conv_block(input_tensor,kernel_size,filters,stage,block,strides=(2,2)):'''input_tensor: 输入张量,通常是前一层的输出。kernel_size: 卷积核的大小,用于第二个卷积层。filters: 一个包含三个整数的元组,分别表示三个卷积层的过滤器数量。stage: 当前块的阶段,通常用于命名。block: 当前块的名称,用于命名。strides: 卷积的步幅,默认值为(2, 2),用于下采样。'''# 提取过滤器数量filters1, filters2, filters3 = filters# 基础名称生成res_name_base = str(stage) + block +'_conv_block_res_'name_base = str(stage) + block +'_conv_block_'# 使用1x1卷积对输入进行处理,减少通道数。strides参数用于控制下采样,默认步幅为(2, 2),这将使输出特征图的尺寸减半。后续跟着批归一化和ReLU激活x = Conv2D(filters1, (1, 1), strides=strides,name=name_base + 'conv1')(input_tensor)x = BatchNormalization(name=name_base + 'bn1')(x)x = Activation('relu', name=name_base + 'relu1')(x)# 使用给定的kernel_size进行卷积,保持输入和输出的空间尺寸相同(通过padding='same')。后续同样进行批归一化和ReLU激活x = Conv2D(filters2, kernel_size, padding='same', name=name_base + 'conv2')(x)x = BatchNormalization(name=name_base + 'bn2')(x)x = Activation('relu', name=name_base + 'relu2')(x)# 使用1x1卷积来调整输出通道数,随后进行批归一化x = Conv2D(filters3, (1, 1), name=name_base + 'conv3')(x)x = BatchNormalization(name=name_base + 'bn3')(x)# 对输入张量进行卷积处理,以匹配输出张量的维度,确保在加法操作时两者具有相同的形状。此卷积层的步幅与主卷积块相同,确保特征图的尺寸一致。随后进行批归一化shortcut = Conv2D(filters3,(1,1),strides=strides,name=res_name_base+'conv')(input_tensor)shortcut = BatchNormalization(name=res_name_base+'bn')(shortcut)x = layers.add([x,shortcut],name=name_base+'add')x = Activation('relu',name=name_base+'relu4')(x)return x'''

定义一个ResNet50模型:输入层:接收形状为 224x224x3 的图像。零填充:对输入进行 3 像素的零填充,以保持特征图的边界。初始卷积:使用 64 个 7x7 的卷积核,步幅为 2,之后进行批归一化和 ReLU 激活。最大池化:进行 3x3 的最大池化,步幅为 2,减少特征图尺寸。残差块:通过堆叠卷积块(conv_block)和身份块(identity_block)实现特征提取,逐步增加通道数,从 64 到 2048。平均池化:在最后应用 7x7 的平均池化,降低特征维度。展平和全连接层:展平特征图,接入一个具有 softmax 激活的全连接层,用于多类分类(2 类)。加载预训练权重:从指定文件加载预训练的模型权重,便于迁移学习。

该架构旨在有效捕捉图像特征,适合深度学习任务

'''

def ResNet50(input_shape=[224,224,3],classes=2):img_input = Input(shape=input_shape)x = ZeroPadding2D((3,3))(img_input)x = Conv2D(64,(7,7),strides=(2,2),name='conv1')(x)x = BatchNormalization(name='bn_conv1')(x)x = Activation('relu')(x)x = MaxPooling2D((3,3),strides=(2,2))(x)x = conv_block(x,3,[64,64,256],stage=2,block='a',strides=(1,1))x = identity_block(x,3,[64,64,256],stage=2,block='b')x = identity_block(x,3,[64,64,256],stage=2,block='c')x = conv_block(x,3,[128,128,512],stage=3,block='a')x = identity_block(x,3,[128,128,512],stage=3,block='b')x = identity_block(x,3,[128,128,512],stage=3,block='c')x = identity_block(x,3,[128,128,512],stage=3,block='d')x = conv_block(x, 3, [256,256,1024], stage=4, block='a')x = identity_block(x, 3, [256,256,1024], stage=4, block='b')x = identity_block(x, 3, [256,256,1024], stage=4, block='c')x = identity_block(x, 3, [256,256,1024], stage=4, block='d')x = identity_block(x, 3, [256,256,1024], stage=4, block='e')x = identity_block(x, 3, [256,256,1024], stage=4, block='f')x = conv_block(x,3,[512,512,2048],stage=5,block='a')x = identity_block(x,3,[512,512,2048],stage=5,block='b')x = identity_block(x,3,[512,512,2048],stage=5,block='c')x = AveragePooling2D((7,7),name='avg_pool')(x)x = Flatten()(x)# 在全连接层之前添加 Dropout 层x = Dropout(0.5)(x) # 这里设置 Dropout 比率为 50%x = Dense(classes,activation='softmax',name='fc2')(x)model = Model(img_input,x,name='resnet50')# 加载预训练模型# model.load_weights("resnet50_weights_tf_dim_ordering_tf_kernels.h5")return modelmodel = ResNet50()

model.summary()Model: "resnet50" __________________________________________________________________________________________________Layer (type) Output Shape Param # Connected to ==================================================================================================input_2 (InputLayer) [(None, 224, 224, 3 0 [] )] zero_padding2d_1 (ZeroPadding2 (None, 230, 230, 3) 0 ['input_2[0][0]'] D) conv1 (Conv2D) (None, 112, 112, 64 9472 ['zero_padding2d_1[0][0]'] ) bn_conv1 (BatchNormalization) (None, 112, 112, 64 256 ['conv1[0][0]'] ) activation_1 (Activation) (None, 112, 112, 64 0 ['bn_conv1[0][0]'] ) max_pooling2d_1 (MaxPooling2D) (None, 55, 55, 64) 0 ['activation_1[0][0]'] 2a_conv_block_conv1 (Conv2D) (None, 55, 55, 64) 4160 ['max_pooling2d_1[0][0]'] 2a_conv_block_bn1 (BatchNormal (None, 55, 55, 64) 256 ['2a_conv_block_conv1[0][0]'] ization) 2a_conv_block_relu1 (Activatio (None, 55, 55, 64) 0 ['2a_conv_block_bn1[0][0]'] n) 2a_conv_block_conv2 (Conv2D) (None, 55, 55, 64) 36928 ['2a_conv_block_relu1[0][0]'] 2a_conv_block_bn2 (BatchNormal (None, 55, 55, 64) 256 ['2a_conv_block_conv2[0][0]'] ization) 2a_conv_block_relu2 (Activatio (None, 55, 55, 64) 0 ['2a_conv_block_bn2[0][0]'] n) 2a_conv_block_conv3 (Conv2D) (None, 55, 55, 256) 16640 ['2a_conv_block_relu2[0][0]'] 2a_conv_block_res_conv (Conv2D (None, 55, 55, 256) 16640 ['max_pooling2d_1[0][0]'] ) 2a_conv_block_bn3 (BatchNormal (None, 55, 55, 256) 1024 ['2a_conv_block_conv3[0][0]'] ization) 2a_conv_block_res_bn (BatchNor (None, 55, 55, 256) 1024 ['2a_conv_block_res_conv[0][0]'] malization) 2a_conv_block_add (Add) (None, 55, 55, 256) 0 ['2a_conv_block_bn3[0][0]', '2a_conv_block_res_bn[0][0]'] 2a_conv_block_relu4 (Activatio (None, 55, 55, 256) 0 ['2a_conv_block_add[0][0]'] n) 2b_identity_block_conv1 (Conv2 (None, 55, 55, 64) 16448 ['2a_conv_block_relu4[0][0]'] D) 2b_identity_block_bn1 (BatchNo (None, 55, 55, 64) 256 ['2b_identity_block_conv1[0][0]']rmalization) 2b_identity_block_relu1 (Activ (None, 55, 55, 64) 0 ['2b_identity_block_bn1[0][0]'] ation) 2b_identity_block_conv2 (Conv2 (None, 55, 55, 64) 36928 ['2b_identity_block_relu1[0][0]']D) 2b_identity_block_bn2 (BatchNo (None, 55, 55, 64) 256 ['2b_identity_block_conv2[0][0]']rmalization) 2b_identity_block_relu2 (Activ (None, 55, 55, 64) 0 ['2b_identity_block_bn2[0][0]'] ation) 2b_identity_block_conv3 (Conv2 (None, 55, 55, 256) 16640 ['2b_identity_block_relu2[0][0]']D) 2b_identity_block_bn3 (BatchNo (None, 55, 55, 256) 1024 ['2b_identity_block_conv3[0][0]']rmalization) 2b_identity_block_add (Add) (None, 55, 55, 256) 0 ['2b_identity_block_bn3[0][0]', '2a_conv_block_relu4[0][0]'] 2b_identity_block_relu4 (Activ (None, 55, 55, 256) 0 ['2b_identity_block_add[0][0]'] ation) 2c_identity_block_conv1 (Conv2 (None, 55, 55, 64) 16448 ['2b_identity_block_relu4[0][0]']D) 2c_identity_block_bn1 (BatchNo (None, 55, 55, 64) 256 ['2c_identity_block_conv1[0][0]']rmalization) 2c_identity_block_relu1 (Activ (None, 55, 55, 64) 0 ['2c_identity_block_bn1[0][0]'] ation) 2c_identity_block_conv2 (Conv2 (None, 55, 55, 64) 36928 ['2c_identity_block_relu1[0][0]']D) 2c_identity_block_bn2 (BatchNo (None, 55, 55, 64) 256 ['2c_identity_block_conv2[0][0]']rmalization) 2c_identity_block_relu2 (Activ (None, 55, 55, 64) 0 ['2c_identity_block_bn2[0][0]'] ation) 2c_identity_block_conv3 (Conv2 (None, 55, 55, 256) 16640 ['2c_identity_block_relu2[0][0]']D) 2c_identity_block_bn3 (BatchNo (None, 55, 55, 256) 1024 ['2c_identity_block_conv3[0][0]']rmalization) 2c_identity_block_add (Add) (None, 55, 55, 256) 0 ['2c_identity_block_bn3[0][0]', '2b_identity_block_relu4[0][0]']2c_identity_block_relu4 (Activ (None, 55, 55, 256) 0 ['2c_identity_block_add[0][0]'] ation) 3a_conv_block_conv1 (Conv2D) (None, 28, 28, 128) 32896 ['2c_identity_block_relu4[0][0]']3a_conv_block_bn1 (BatchNormal (None, 28, 28, 128) 512 ['3a_conv_block_conv1[0][0]'] ization) 3a_conv_block_relu1 (Activatio (None, 28, 28, 128) 0 ['3a_conv_block_bn1[0][0]'] n) 3a_conv_block_conv2 (Conv2D) (None, 28, 28, 128) 147584 ['3a_conv_block_relu1[0][0]'] 3a_conv_block_bn2 (BatchNormal (None, 28, 28, 128) 512 ['3a_conv_block_conv2[0][0]'] ization) 3a_conv_block_relu2 (Activatio (None, 28, 28, 128) 0 ['3a_conv_block_bn2[0][0]'] n) 3a_conv_block_conv3 (Conv2D) (None, 28, 28, 512) 66048 ['3a_conv_block_relu2[0][0]'] 3a_conv_block_res_conv (Conv2D (None, 28, 28, 512) 131584 ['2c_identity_block_relu4[0][0]']) 3a_conv_block_bn3 (BatchNormal (None, 28, 28, 512) 2048 ['3a_conv_block_conv3[0][0]'] ization) 3a_conv_block_res_bn (BatchNor (None, 28, 28, 512) 2048 ['3a_conv_block_res_conv[0][0]'] malization) 3a_conv_block_add (Add) (None, 28, 28, 512) 0 ['3a_conv_block_bn3[0][0]', '3a_conv_block_res_bn[0][0]'] 3a_conv_block_relu4 (Activatio (None, 28, 28, 512) 0 ['3a_conv_block_add[0][0]'] n) 3b_identity_block_conv1 (Conv2 (None, 28, 28, 128) 65664 ['3a_conv_block_relu4[0][0]'] D) 3b_identity_block_bn1 (BatchNo (None, 28, 28, 128) 512 ['3b_identity_block_conv1[0][0]']rmalization) 3b_identity_block_relu1 (Activ (None, 28, 28, 128) 0 ['3b_identity_block_bn1[0][0]'] ation) 3b_identity_block_conv2 (Conv2 (None, 28, 28, 128) 147584 ['3b_identity_block_relu1[0][0]']D) 3b_identity_block_bn2 (BatchNo (None, 28, 28, 128) 512 ['3b_identity_block_conv2[0][0]']rmalization) 3b_identity_block_relu2 (Activ (None, 28, 28, 128) 0 ['3b_identity_block_bn2[0][0]'] ation) 3b_identity_block_conv3 (Conv2 (None, 28, 28, 512) 66048 ['3b_identity_block_relu2[0][0]']D) 3b_identity_block_bn3 (BatchNo (None, 28, 28, 512) 2048 ['3b_identity_block_conv3[0][0]']rmalization) 3b_identity_block_add (Add) (None, 28, 28, 512) 0 ['3b_identity_block_bn3[0][0]', '3a_conv_block_relu4[0][0]'] 3b_identity_block_relu4 (Activ (None, 28, 28, 512) 0 ['3b_identity_block_add[0][0]'] ation) 3c_identity_block_conv1 (Conv2 (None, 28, 28, 128) 65664 ['3b_identity_block_relu4[0][0]']D) 3c_identity_block_bn1 (BatchNo (None, 28, 28, 128) 512 ['3c_identity_block_conv1[0][0]']rmalization) 3c_identity_block_relu1 (Activ (None, 28, 28, 128) 0 ['3c_identity_block_bn1[0][0]'] ation) 3c_identity_block_conv2 (Conv2 (None, 28, 28, 128) 147584 ['3c_identity_block_relu1[0][0]']D) 3c_identity_block_bn2 (BatchNo (None, 28, 28, 128) 512 ['3c_identity_block_conv2[0][0]']rmalization) 3c_identity_block_relu2 (Activ (None, 28, 28, 128) 0 ['3c_identity_block_bn2[0][0]'] ation) 3c_identity_block_conv3 (Conv2 (None, 28, 28, 512) 66048 ['3c_identity_block_relu2[0][0]']D) 3c_identity_block_bn3 (BatchNo (None, 28, 28, 512) 2048 ['3c_identity_block_conv3[0][0]']rmalization) 3c_identity_block_add (Add) (None, 28, 28, 512) 0 ['3c_identity_block_bn3[0][0]', '3b_identity_block_relu4[0][0]']3c_identity_block_relu4 (Activ (None, 28, 28, 512) 0 ['3c_identity_block_add[0][0]'] ation) 3d_identity_block_conv1 (Conv2 (None, 28, 28, 128) 65664 ['3c_identity_block_relu4[0][0]']D) 3d_identity_block_bn1 (BatchNo (None, 28, 28, 128) 512 ['3d_identity_block_conv1[0][0]']rmalization) 3d_identity_block_relu1 (Activ (None, 28, 28, 128) 0 ['3d_identity_block_bn1[0][0]'] ation) 3d_identity_block_conv2 (Conv2 (None, 28, 28, 128) 147584 ['3d_identity_block_relu1[0][0]']D) 3d_identity_block_bn2 (BatchNo (None, 28, 28, 128) 512 ['3d_identity_block_conv2[0][0]']rmalization) 3d_identity_block_relu2 (Activ (None, 28, 28, 128) 0 ['3d_identity_block_bn2[0][0]'] ation) 3d_identity_block_conv3 (Conv2 (None, 28, 28, 512) 66048 ['3d_identity_block_relu2[0][0]']D) 3d_identity_block_bn3 (BatchNo (None, 28, 28, 512) 2048 ['3d_identity_block_conv3[0][0]']rmalization) 3d_identity_block_add (Add) (None, 28, 28, 512) 0 ['3d_identity_block_bn3[0][0]', '3c_identity_block_relu4[0][0]']3d_identity_block_relu4 (Activ (None, 28, 28, 512) 0 ['3d_identity_block_add[0][0]'] ation) 4a_conv_block_conv1 (Conv2D) (None, 14, 14, 256) 131328 ['3d_identity_block_relu4[0][0]']4a_conv_block_bn1 (BatchNormal (None, 14, 14, 256) 1024 ['4a_conv_block_conv1[0][0]'] ization) 4a_conv_block_relu1 (Activatio (None, 14, 14, 256) 0 ['4a_conv_block_bn1[0][0]'] n) 4a_conv_block_conv2 (Conv2D) (None, 14, 14, 256) 590080 ['4a_conv_block_relu1[0][0]'] 4a_conv_block_bn2 (BatchNormal (None, 14, 14, 256) 1024 ['4a_conv_block_conv2[0][0]'] ization) 4a_conv_block_relu2 (Activatio (None, 14, 14, 256) 0 ['4a_conv_block_bn2[0][0]'] n) 4a_conv_block_conv3 (Conv2D) (None, 14, 14, 1024 263168 ['4a_conv_block_relu2[0][0]'] ) 4a_conv_block_res_conv (Conv2D (None, 14, 14, 1024 525312 ['3d_identity_block_relu4[0][0]']) ) 4a_conv_block_bn3 (BatchNormal (None, 14, 14, 1024 4096 ['4a_conv_block_conv3[0][0]'] ization) ) 4a_conv_block_res_bn (BatchNor (None, 14, 14, 1024 4096 ['4a_conv_block_res_conv[0][0]'] malization) ) 4a_conv_block_add (Add) (None, 14, 14, 1024 0 ['4a_conv_block_bn3[0][0]', ) '4a_conv_block_res_bn[0][0]'] 4a_conv_block_relu4 (Activatio (None, 14, 14, 1024 0 ['4a_conv_block_add[0][0]'] n) ) 4b_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4a_conv_block_relu4[0][0]'] D) 4b_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4b_identity_block_conv1[0][0]']rmalization) 4b_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4b_identity_block_bn1[0][0]'] ation) 4b_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4b_identity_block_relu1[0][0]']D) 4b_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4b_identity_block_conv2[0][0]']rmalization) 4b_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4b_identity_block_bn2[0][0]'] ation) 4b_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4b_identity_block_relu2[0][0]']D) ) 4b_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4b_identity_block_conv3[0][0]']rmalization) ) 4b_identity_block_add (Add) (None, 14, 14, 1024 0 ['4b_identity_block_bn3[0][0]', ) '4a_conv_block_relu4[0][0]'] 4b_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4b_identity_block_add[0][0]'] ation) ) 4c_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4b_identity_block_relu4[0][0]']D) 4c_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4c_identity_block_conv1[0][0]']rmalization) 4c_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4c_identity_block_bn1[0][0]'] ation) 4c_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4c_identity_block_relu1[0][0]']D) 4c_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4c_identity_block_conv2[0][0]']rmalization) 4c_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4c_identity_block_bn2[0][0]'] ation) 4c_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4c_identity_block_relu2[0][0]']D) ) 4c_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4c_identity_block_conv3[0][0]']rmalization) ) 4c_identity_block_add (Add) (None, 14, 14, 1024 0 ['4c_identity_block_bn3[0][0]', ) '4b_identity_block_relu4[0][0]']4c_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4c_identity_block_add[0][0]'] ation) ) 4d_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4c_identity_block_relu4[0][0]']D) 4d_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4d_identity_block_conv1[0][0]']rmalization) 4d_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4d_identity_block_bn1[0][0]'] ation) 4d_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4d_identity_block_relu1[0][0]']D) 4d_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4d_identity_block_conv2[0][0]']rmalization) 4d_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4d_identity_block_bn2[0][0]'] ation) 4d_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4d_identity_block_relu2[0][0]']D) ) 4d_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4d_identity_block_conv3[0][0]']rmalization) ) 4d_identity_block_add (Add) (None, 14, 14, 1024 0 ['4d_identity_block_bn3[0][0]', ) '4c_identity_block_relu4[0][0]']4d_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4d_identity_block_add[0][0]'] ation) ) 4e_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4d_identity_block_relu4[0][0]']D) 4e_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4e_identity_block_conv1[0][0]']rmalization) 4e_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4e_identity_block_bn1[0][0]'] ation) 4e_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4e_identity_block_relu1[0][0]']D) 4e_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4e_identity_block_conv2[0][0]']rmalization) 4e_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4e_identity_block_bn2[0][0]'] ation) 4e_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4e_identity_block_relu2[0][0]']D) ) 4e_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4e_identity_block_conv3[0][0]']rmalization) ) 4e_identity_block_add (Add) (None, 14, 14, 1024 0 ['4e_identity_block_bn3[0][0]', ) '4d_identity_block_relu4[0][0]']4e_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4e_identity_block_add[0][0]'] ation) ) 4f_identity_block_conv1 (Conv2 (None, 14, 14, 256) 262400 ['4e_identity_block_relu4[0][0]']D) 4f_identity_block_bn1 (BatchNo (None, 14, 14, 256) 1024 ['4f_identity_block_conv1[0][0]']rmalization) 4f_identity_block_relu1 (Activ (None, 14, 14, 256) 0 ['4f_identity_block_bn1[0][0]'] ation) 4f_identity_block_conv2 (Conv2 (None, 14, 14, 256) 590080 ['4f_identity_block_relu1[0][0]']D) 4f_identity_block_bn2 (BatchNo (None, 14, 14, 256) 1024 ['4f_identity_block_conv2[0][0]']rmalization) 4f_identity_block_relu2 (Activ (None, 14, 14, 256) 0 ['4f_identity_block_bn2[0][0]'] ation) 4f_identity_block_conv3 (Conv2 (None, 14, 14, 1024 263168 ['4f_identity_block_relu2[0][0]']D) ) 4f_identity_block_bn3 (BatchNo (None, 14, 14, 1024 4096 ['4f_identity_block_conv3[0][0]']rmalization) ) 4f_identity_block_add (Add) (None, 14, 14, 1024 0 ['4f_identity_block_bn3[0][0]', ) '4e_identity_block_relu4[0][0]']4f_identity_block_relu4 (Activ (None, 14, 14, 1024 0 ['4f_identity_block_add[0][0]'] ation) ) 5a_conv_block_conv1 (Conv2D) (None, 7, 7, 512) 524800 ['4f_identity_block_relu4[0][0]']5a_conv_block_bn1 (BatchNormal (None, 7, 7, 512) 2048 ['5a_conv_block_conv1[0][0]'] ization) 5a_conv_block_relu1 (Activatio (None, 7, 7, 512) 0 ['5a_conv_block_bn1[0][0]'] n) 5a_conv_block_conv2 (Conv2D) (None, 7, 7, 512) 2359808 ['5a_conv_block_relu1[0][0]'] 5a_conv_block_bn2 (BatchNormal (None, 7, 7, 512) 2048 ['5a_conv_block_conv2[0][0]'] ization) 5a_conv_block_relu2 (Activatio (None, 7, 7, 512) 0 ['5a_conv_block_bn2[0][0]'] n) 5a_conv_block_conv3 (Conv2D) (None, 7, 7, 2048) 1050624 ['5a_conv_block_relu2[0][0]'] 5a_conv_block_res_conv (Conv2D (None, 7, 7, 2048) 2099200 ['4f_identity_block_relu4[0][0]']) 5a_conv_block_bn3 (BatchNormal (None, 7, 7, 2048) 8192 ['5a_conv_block_conv3[0][0]'] ization) 5a_conv_block_res_bn (BatchNor (None, 7, 7, 2048) 8192 ['5a_conv_block_res_conv[0][0]'] malization) 5a_conv_block_add (Add) (None, 7, 7, 2048) 0 ['5a_conv_block_bn3[0][0]', '5a_conv_block_res_bn[0][0]'] 5a_conv_block_relu4 (Activatio (None, 7, 7, 2048) 0 ['5a_conv_block_add[0][0]'] n) 5b_identity_block_conv1 (Conv2 (None, 7, 7, 512) 1049088 ['5a_conv_block_relu4[0][0]'] D) 5b_identity_block_bn1 (BatchNo (None, 7, 7, 512) 2048 ['5b_identity_block_conv1[0][0]']rmalization) 5b_identity_block_relu1 (Activ (None, 7, 7, 512) 0 ['5b_identity_block_bn1[0][0]'] ation) 5b_identity_block_conv2 (Conv2 (None, 7, 7, 512) 2359808 ['5b_identity_block_relu1[0][0]']D) 5b_identity_block_bn2 (BatchNo (None, 7, 7, 512) 2048 ['5b_identity_block_conv2[0][0]']rmalization) 5b_identity_block_relu2 (Activ (None, 7, 7, 512) 0 ['5b_identity_block_bn2[0][0]'] ation) 5b_identity_block_conv3 (Conv2 (None, 7, 7, 2048) 1050624 ['5b_identity_block_relu2[0][0]']D) 5b_identity_block_bn3 (BatchNo (None, 7, 7, 2048) 8192 ['5b_identity_block_conv3[0][0]']rmalization) 5b_identity_block_add (Add) (None, 7, 7, 2048) 0 ['5b_identity_block_bn3[0][0]', '5a_conv_block_relu4[0][0]'] 5b_identity_block_relu4 (Activ (None, 7, 7, 2048) 0 ['5b_identity_block_add[0][0]'] ation) 5c_identity_block_conv1 (Conv2 (None, 7, 7, 512) 1049088 ['5b_identity_block_relu4[0][0]']D) 5c_identity_block_bn1 (BatchNo (None, 7, 7, 512) 2048 ['5c_identity_block_conv1[0][0]']rmalization) 5c_identity_block_relu1 (Activ (None, 7, 7, 512) 0 ['5c_identity_block_bn1[0][0]'] ation) 5c_identity_block_conv2 (Conv2 (None, 7, 7, 512) 2359808 ['5c_identity_block_relu1[0][0]']D) 5c_identity_block_bn2 (BatchNo (None, 7, 7, 512) 2048 ['5c_identity_block_conv2[0][0]']rmalization) 5c_identity_block_relu2 (Activ (None, 7, 7, 512) 0 ['5c_identity_block_bn2[0][0]'] ation) 5c_identity_block_conv3 (Conv2 (None, 7, 7, 2048) 1050624 ['5c_identity_block_relu2[0][0]']D) 5c_identity_block_bn3 (BatchNo (None, 7, 7, 2048) 8192 ['5c_identity_block_conv3[0][0]']rmalization) 5c_identity_block_add (Add) (None, 7, 7, 2048) 0 ['5c_identity_block_bn3[0][0]', '5b_identity_block_relu4[0][0]']5c_identity_block_relu4 (Activ (None, 7, 7, 2048) 0 ['5c_identity_block_add[0][0]'] ation) avg_pool (AveragePooling2D) (None, 1, 1, 2048) 0 ['5c_identity_block_relu4[0][0]']flatten_1 (Flatten) (None, 2048) 0 ['avg_pool[0][0]'] dropout (Dropout) (None, 2048) 0 ['flatten_1[0][0]'] fc2 (Dense) (None, 2) 4098 ['dropout[0][0]'] ================================================================================================== Total params: 23,591,810 Trainable params: 23,538,690 Non-trainable params: 53,120 __________________________________________________________________________________________________

三 编译

# 设置优化器

opt = tf.keras.optimizers.Adam(learning_rate=1e-7)model.compile(optimizer='adam',loss='sparse_categorical_crossentropy',metrics=['accuracy'])四 训练模型

from keras.callbacks import EarlyStopping

# 设置早停法

early_stopping = EarlyStopping(monitor='val_loss',patience=3,verbose=1,restore_best_weights=True

)

epochs = 10history = model.fit(train_ds,validation_data=val_ds,epochs=epochs,callbacks=[early_stopping]

)Epoch 1/10 57/57 [==============================] - 709s 12s/step - loss: 0.9055 - accuracy: 0.6853 - val_loss: 1.0100 - val_accuracy: 0.4913 Epoch 2/10 57/57 [==============================] - 681s 12s/step - loss: 0.5338 - accuracy: 0.7667 - val_loss: 0.7880 - val_accuracy: 0.4978 Epoch 3/10 57/57 [==============================] - 662s 12s/step - loss: 0.4756 - accuracy: 0.7829 - val_loss: 0.7841 - val_accuracy: 0.4290 Epoch 4/10 57/57 [==============================] - 660s 12s/step - loss: 0.4223 - accuracy: 0.8102 - val_loss: 0.7710 - val_accuracy: 0.5466 Epoch 5/10 57/57 [==============================] - 662s 12s/step - loss: 0.3743 - accuracy: 0.8347 - val_loss: 0.8795 - val_accuracy: 0.5748 Epoch 6/10 57/57 [==============================] - 665s 12s/step - loss: 0.3481 - accuracy: 0.8468 - val_loss: 1.2726 - val_accuracy: 0.4579 Epoch 7/10 57/57 [==============================] - ETA: 0s - loss: 0.3365 - accuracy: 0.8556 Restoring model weights from the end of the best epoch: 4. 57/57 [==============================] - 661s 12s/step - loss: 0.3365 - accuracy: 0.8556 - val_loss: 0.9570 - val_accuracy: 0.5812 Epoch 7: early stopping

五 模型评估

# 获取实际训练轮数

actual_epochs = len(history.history['accuracy'])acc = history.history['accuracy']

val_acc = history.history['val_accuracy']loss = history.history['loss']

val_loss = history.history['val_loss']epochs_range = range(actual_epochs)plt.figure(figsize=(12, 4))# 绘制准确率

plt.subplot(1, 2, 1)

plt.plot(epochs_range, acc, label='Training Accuracy')

plt.plot(epochs_range, val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.title('Training and Validation Accuracy')# 绘制损失

plt.subplot(1, 2, 2)

plt.plot(epochs_range, loss, label='Training Loss')

plt.plot(epochs_range, val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.title('Training and Validation Loss')plt.show()

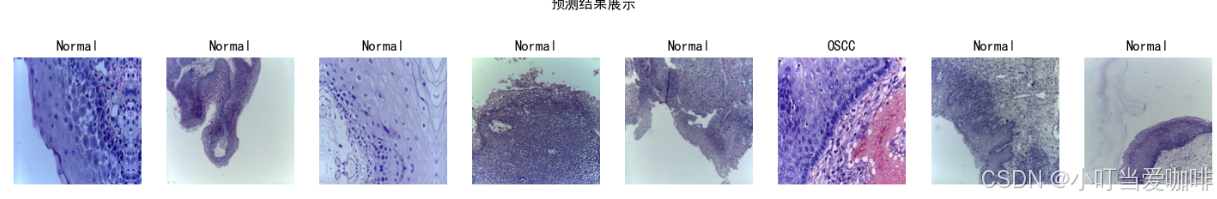

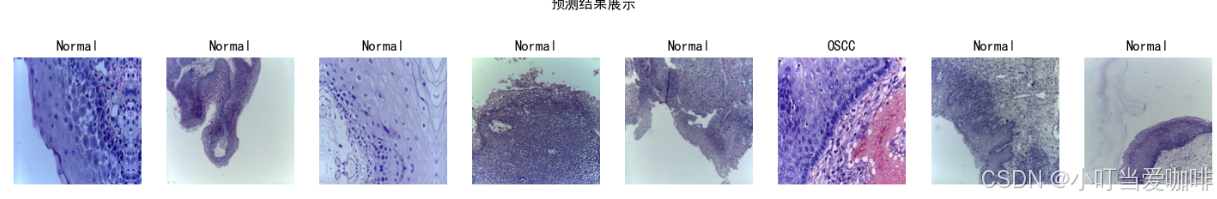

六 预测

import numpy as np# 采用加载的模型(new_model)来看预测结果

plt.figure(figsize=(18, 3)) # 图形的宽为18高为5

plt.suptitle("预测结果展示")for images, labels in val_ds.take(1):for i in range(8):ax = plt.subplot(1,8, i + 1) # 显示图片plt.imshow(images[i].numpy())# 需要给图片增加一个维度img_array = tf.expand_dims(images[i], 0) # 使用模型预测图片中的人物predictions = model.predict(img_array)plt.title(class_names[np.argmax(predictions)])plt.axis("off")1/1 [==============================] - 1s 699ms/step 1/1 [==============================] - 0s 68ms/step 1/1 [==============================] - 0s 67ms/step 1/1 [==============================] - 0s 68ms/step 1/1 [==============================] - 0s 66ms/step 1/1 [==============================] - 0s 76ms/step 1/1 [==============================] - 0s 78ms/step 1/1 [==============================] - 0s 63ms/step

相关文章:

Resnet50网络——口腔癌病变识别

一 数据准备 1.导入数据 import matplotlib.pyplot as plt import tensorflow as tf import warnings as w w.filterwarnings(ignore) # 支持中文 plt.rcParams[font.sans-serif] [SimHei] # 用来正常显示中文标签 plt.rcParams[axes.unicode_minus] False # 用来正常显示负…...

Python 中自动打开网页并点击[自动化脚本],Selenium

要在 Python 中自动打开网页并点击第一个 <a> 标签,你需要使用 Selenium,它可以控制浏览器并执行像点击这样的操作。requests 和 BeautifulSoup 只能获取并解析网页内容,但不能进行网页交互操作。 步骤: 安装 Selenium安装…...

Spring Boot-自动配置问题

**### Spring Boot自动配置问题探讨 Spring Boot 是当前 Java 后端开发中非常流行的框架,其核心特性之一便是“自动配置”(Auto-Configuration)。自动配置大大简化了应用开发过程,开发者不需要编写大量的 XML 配置或是繁琐的 Jav…...

CS61B学习 part1

本人选择了2018spring的课程,因为他免费提供了评分机器,后来得知2021也开放了,决定把其中的Lab尝试一番,听说gitlab就近好评,相当有实力,并借此学习Java的基本知识,请根据pku的cswiki做好评分机…...

我Github的问题解决了!

看的这篇,解决使用git时遇到Failed to connect to github.com port 443 after 21090 ms: Couldn‘t connect to server_git couldnt connect to server-CSDN博客 之前想推送的能推送了,拉取的也能取了。 一、如果是在挂着梯子的情况下拉取或者推送代码…...

Pytorch构建神经网络多元线性回归模型

1.模型线性方程y W ∗ X b from torch import nn import torch#手动设置的W参数(待模型学习),这里设置为12个,自己随意设置weight_settorch.tensor([[1.5,2.38,4.22,6.5,7.2,3.21,4.44,6.55,2.48,-1.75,-3.26,4.78]])#手动设置…...

如何基于Flink CDC与OceanBase构建实时数仓,实现简化链路,高效排查

本文作者:阿里云Flink SQL负责人,伍翀,Apache Flink PMC Member & Committer 众多数据领域的专业人士都很熟悉Apache Flink,它作为流式计算引擎,流批一体,其核心在于其强大的分布式流数据处理能力&…...

ActiveMQ、RabbitMQ 和 Kafka 在 Spring Boot 中的实战

在现代的微服务架构和分布式系统中,消息队列 是一种常见的异步通信工具。消息队列允许应用程序之间通过 生产者-消费者模型 进行松耦合、异步交互。在 Spring Boot 中,我们可以通过简单的配置来集成不同的消息队列系统,包括 ActiveMQ、Rabbit…...

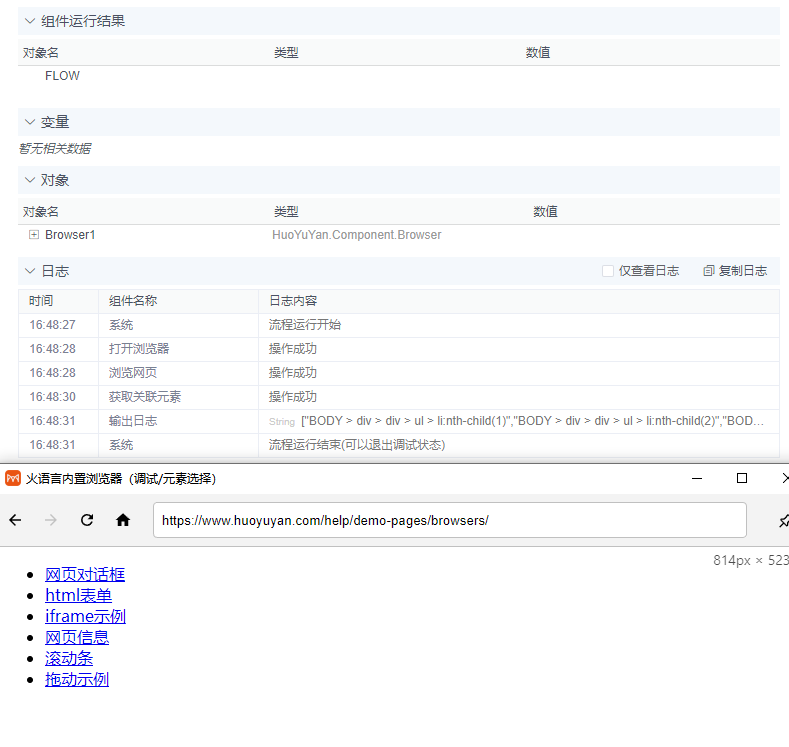

火语言RPA流程组件介绍--获取关联元素

🚩【组件功能】:获取指定元素的父元素、子元素、相邻元素等关联信息 配置预览 配置说明 目标元素 支持T或# 默认FLOW输入项 通过自动捕获工具捕获(选择元素工具使用方法)或手动填写网页元素的css,xpath,指定对应网页元素作为操作目标 关联…...

【2024研赛】【华为杯E题】2024 年研究生数学建模比赛思路、代码、论文助攻

思路将在名片下群聊分享 高速公路应急车道紧急启用模型 高速公路拥堵现象的原因众多,除了交通事故外,最典型的就是部分路段出现瓶颈现象,主要原因是车辆汇聚,而拥堵后又容易蔓延。高速公路一些特定的路段容易形成堵点࿰…...

Linux——K8s集群部署过程

1、环境准备 (1)配置好网络ip和主机名 control: node1: node2: 配置ip 主机名的过程省略 配置一个简单的基于hosts文件的名称解析 [rootnode1 ~]# vim /etc/hosts // 文件中新增以下三行 192.168.110.10 control 192.168.110.11 node1 1…...

二.Unity中使用虚拟摇杆来控制角色移动

上一篇中我们完成了不借助第三方插件实现手游的虚拟摇杆,现在借助这个虚拟摇杆来实现控制角色的移动。 虚拟摇杆实际上就给角色输出方向,类似于键盘的WSAD,也是一个二维坐标,也就是(-1,1)的范围,将摇杆的方向进行归一化…...

基于SpringBoot的旅游管理系统

系统展示 用户前台界面 管理员后台界面 系统背景 近年来,随着社会经济的快速发展和人民生活水平的显著提高,旅游已成为人们休闲娱乐、增长见识的重要方式。国家积极倡导“全民旅游”,鼓励民众利用节假日外出旅行,探索各地自然与人…...

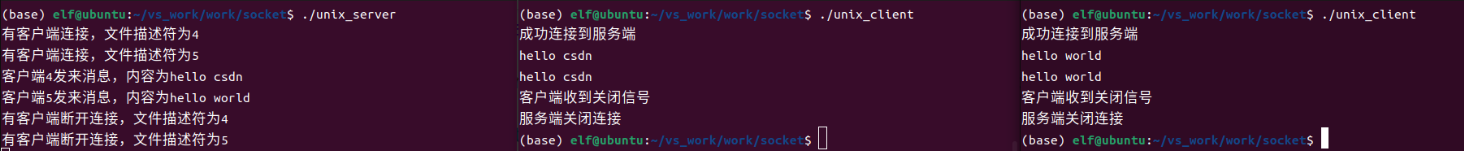

Linux套接字

目录标题 套接字套接字的基本概念套接字的功能与分类套接字的使用流程套接字的应用场景总结套接字在不同操作系统中的实现差异有哪些?如何优化套接字编程以提高网络通信的效率和安全性?原始套接字(SOCK_RAW)的具体应用场景和使用示…...

——二面(游戏测试))

软件测试面试题(5)——二面(游戏测试)

没想到测试题做完等了会儿就安排面试了,还以为自己会直接挂在测试题,这次面试很刺激。测试题总体来说不算太难,主要是实操写Bug那里真没经历过,所以写的很混乱。 我复盘一下这次面试的问题,这次面试是有两个面试官&…...

C#基于SkiaSharp实现印章管理(8)

上一章虽然增加了按路径绘制文本,支持按矩形、圆形、椭圆等路径,但测试时发现通过调整尺寸、偏移量等方式不是很好控制文本的位置。相对而言,使用弧线路径,通过弧线起始角度及弧线角度控制文本位置更简单。同时基于路径绘制文本时…...

信通院发布首个《大模型媒体生产与处理》标准,阿里云智能媒体服务作为业界首家“卓越级”通过

中国信通院近期正式发布《大模型驱动的媒体生产与处理》标准,阿里云智能媒体服务,以“首批首家”通过卓越级评估,并在9大模块50余项测评中表现为“满分”。 当下,AI大模型的快速发展带动了爆发式的海量AI运用,这其中&a…...

AI学习指南深度学习篇-Adam的Python实践

AI学习指南深度学习篇-Adam的Python实践 在深度学习领域,优化算法是影响模型性能的关键因素之一。Adam(Adaptive Moment Estimation)是一种广泛使用的优化算法,因其在多种问题上均表现优异而被广泛使用。本文将深入探讨Adam优化器…...

08_React redux

React redux 一、理解1、学习文档2、redux 是什么吗3、什么情况下需要使用 redux4、redux 工作流程5、react-redux 模型图 二、redux 的三个核心概念1、action2、reducer3、store 三、redux 的核心 API1、getState()2、dispatch() 四、使用 redux 编写应用1、求和案例\_redux 精…...

2024华为杯研究生数学建模竞赛(研赛)选题建议+初步分析

难度:DE<C<F,开放度:CDE>F。 华为专项的题目(A、B题)暂不进行选题分析,不太建议大多数同学选择,对自己专业技能有很大自信的可以选择华为专项的题目。后续会直接更新A、B题思路&#…...

Linux应用开发之网络套接字编程(实例篇)

服务端与客户端单连接 服务端代码 #include <sys/socket.h> #include <sys/types.h> #include <netinet/in.h> #include <stdio.h> #include <stdlib.h> #include <string.h> #include <arpa/inet.h> #include <pthread.h> …...

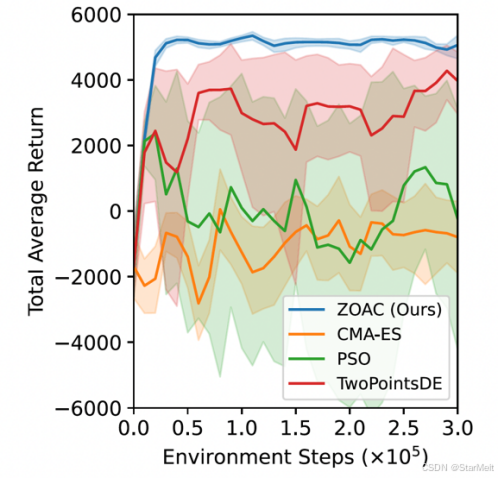

突破不可导策略的训练难题:零阶优化与强化学习的深度嵌合

强化学习(Reinforcement Learning, RL)是工业领域智能控制的重要方法。它的基本原理是将最优控制问题建模为马尔可夫决策过程,然后使用强化学习的Actor-Critic机制(中文译作“知行互动”机制),逐步迭代求解…...

【SpringBoot】100、SpringBoot中使用自定义注解+AOP实现参数自动解密

在实际项目中,用户注册、登录、修改密码等操作,都涉及到参数传输安全问题。所以我们需要在前端对账户、密码等敏感信息加密传输,在后端接收到数据后能自动解密。 1、引入依赖 <dependency><groupId>org.springframework.boot</groupId><artifactId...

【解密LSTM、GRU如何解决传统RNN梯度消失问题】

解密LSTM与GRU:如何让RNN变得更聪明? 在深度学习的世界里,循环神经网络(RNN)以其卓越的序列数据处理能力广泛应用于自然语言处理、时间序列预测等领域。然而,传统RNN存在的一个严重问题——梯度消失&#…...

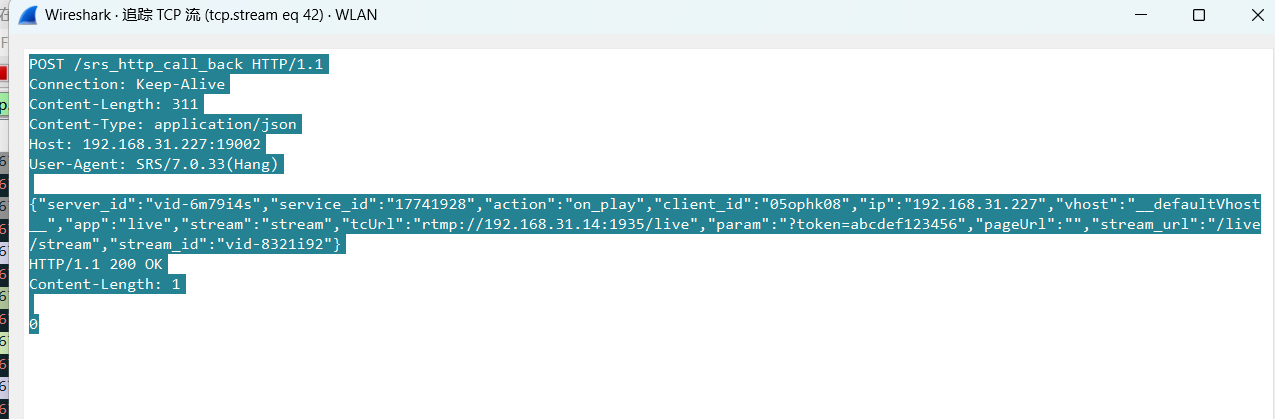

srs linux

下载编译运行 git clone https:///ossrs/srs.git ./configure --h265on make 编译完成后即可启动SRS # 启动 ./objs/srs -c conf/srs.conf # 查看日志 tail -n 30 -f ./objs/srs.log 开放端口 默认RTMP接收推流端口是1935,SRS管理页面端口是8080,可…...

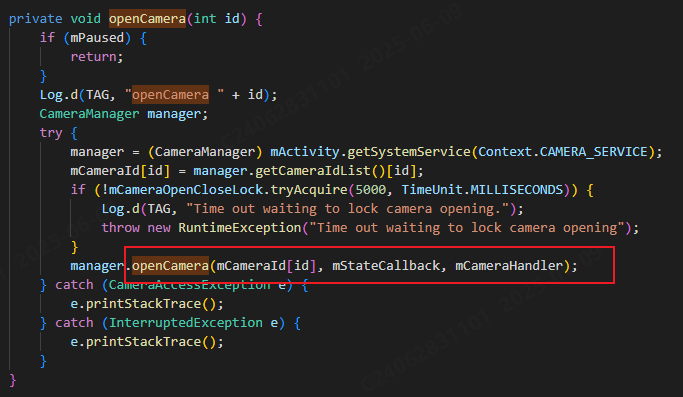

相机从app启动流程

一、流程框架图 二、具体流程分析 1、得到cameralist和对应的静态信息 目录如下: 重点代码分析: 启动相机前,先要通过getCameraIdList获取camera的个数以及id,然后可以通过getCameraCharacteristics获取对应id camera的capabilities(静态信息)进行一些openCamera前的…...

Java 加密常用的各种算法及其选择

在数字化时代,数据安全至关重要,Java 作为广泛应用的编程语言,提供了丰富的加密算法来保障数据的保密性、完整性和真实性。了解这些常用加密算法及其适用场景,有助于开发者在不同的业务需求中做出正确的选择。 一、对称加密算法…...

VTK如何让部分单位不可见

最近遇到一个需求,需要让一个vtkDataSet中的部分单元不可见,查阅了一些资料大概有以下几种方式 1.通过颜色映射表来进行,是最正规的做法 vtkNew<vtkLookupTable> lut; //值为0不显示,主要是最后一个参数,透明度…...

的原因分类及对应排查方案)

JVM暂停(Stop-The-World,STW)的原因分类及对应排查方案

JVM暂停(Stop-The-World,STW)的完整原因分类及对应排查方案,结合JVM运行机制和常见故障场景整理而成: 一、GC相关暂停 1. 安全点(Safepoint)阻塞 现象:JVM暂停但无GC日志,日志显示No GCs detected。原因:JVM等待所有线程进入安全点(如…...

代码随想录刷题day30

1、零钱兑换II 给你一个整数数组 coins 表示不同面额的硬币,另给一个整数 amount 表示总金额。 请你计算并返回可以凑成总金额的硬币组合数。如果任何硬币组合都无法凑出总金额,返回 0 。 假设每一种面额的硬币有无限个。 题目数据保证结果符合 32 位带…...