Kubernetes 使用 helm 部署 NFS Provisioner

文章目录

- 1. 介绍

- 2. 预备条件

- 3. 部署 nfs

- 4. 部署 NFS subdir external provisioner

- 4.1 集群配置 containerd 代理

- 4.2 配置代理堡垒机通过 kubeconfig 部署

- 部署 MinIO

- 添加仓库

- 修改可配置项

- 访问

- nodepot

- ingress

1. 介绍

NFS subdir external provisioner 使用现有且已配置的NFS 服务器来支持通过持久卷声明动态配置 Kubernetes 持久卷。持久卷配置为${namespace}-${pvcName}-${pvName}.

变量配置:

| Variable | Value |

|---|---|

| nfs_provisioner_namespace | nfsstorage |

| nfs_provisioner_role | nfs-provisioner-runner |

| nfs_provisioner_serviceaccount | nfs-provisioner |

| nfs_provisioner_name | hpe.com/nfs |

| nfs_provisioner_storage_class_name | nfs |

| nfs_provisioner_server_ip | hpe2-nfs.am2.cloudra.local |

| nfs_provisioner_server_share | /k8s |

注意:此存储库是从https://github.com/kubernetes-incubator/external-storage/tree/master/nfs-client迁移的。作为迁移的一部分:容器镜像名称和存储库已分别更改为

registry.k8s.io/sig-storage和nfs-subdir-external-provisioner。为了保持与早期部署文件的向后兼容性,NFS Client Provisioner 的命名保留为nfs-client-provisioner部署 YAML 中的名称

2. 预备条件

- CentOS Linux release 7.9.2009 (Core)

- kubernetes 集群

$ kubectl get node

NAME STATUS ROLES AGE VERSION

master1 Ready control-plane 275d v1.25.0

node1 Ready <none> 275d v1.25.0

node2 Ready <none> 275d v1.25.03. 部署 nfs

- linux 配置 NFS 共享服务

[root@master1 helm]# exportfs -s

/app/nfs/k8snfs 192.168.10.0/24(sync,wdelay,hide,no_subtree_check,sec=sys,rw,secure,no_root_squash,no_all_squash)4. 部署 NFS subdir external provisioner

helm repo add nfs-subdir-external-provisioner https://kubernetes-sigs.github.io/nfs-subdir-external-provisioner/

helm repo update

helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner --set nfs.server=192.168.10.61 --set nfs.path=/app/nfs/k8snfs -n nfs-provisioner --create-namespace

报错:Error: INSTALLATION FAILED: failed to download "nfs-subdir-external-provisioner/nfs-subdir-external-provisioner"

忘记配置代理无法拉取 helm charts 和 registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

有两种办法,但都需要找到一个专门配置代理的节点

4.1 集群配置 containerd 代理

$ vim /etc/systemd/system/containerd.service.d/http-proxy.conf

[Service]

Environment="HTTP_PROXY=http://192.168.10.105:7890"

Environment="HTTPS_PROXY=http://192.168.10.105:7890"

Environment="NO_PROXY=localhost"#重启

$ systemctl restart containerd.service

这样镜像的问题就解决了。下面解决拉取 helm charts的问题

再执行部署 debug ,发现拉取的 helm charts 的版本

$ helm --debug install nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner --set nfs.server=192.168.10.61 --set nfs.path=/app/nfs/k8snfs -n nfs-provisioner --create-namespace

Error: INSTALLATION FAILED: Get "https://objects.githubusercontent.com/github-production-release-asset-2e65be/250135810/33156d2f-3fef-4b00-bf34-1817d30653bc?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20230716%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20230716T153116Z&X-Amz-Expires=300&X-Amz-Signature=7219da0622fe22795d526f742064ee0da00a5821c37a5e1fe1bb0eb6b046e3c0&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=250135810&response-content-disposition=attachment%3B%20filename%3Dnfs-subdir-external-provisioner-4.0.18.tgz&response-content-type=application%2Foctet-stream": read tcp 192.168.10.28:46032->192.168.10.105:7890: read: connection reset by peer

helm.go:84: [debug] Get "https://objects.githubusercontent.com/github-production-release-asset-2e65be/250135810/33156d2f-3fef-4b00-bf34-1817d30653bc?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAIWNJYAX4CSVEH53A%2F20230716%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20230716T153116Z&X-Amz-Expires=300&X-Amz-Signature=7219da0622fe22795d526f742064ee0da00a5821c37a5e1fe1bb0eb6b046e3c0&X-Amz-SignedHeaders=host&actor_id=0&key_id=0&repo_id=250135810&response-content-disposition=attachment%3B%20filename%3Dnfs-subdir-external-provisioner-4.0.18.tgz&response-content-type=application%2Foctet-stream": read tcp 192.168.10.28:46032->192.168.10.105:7890: read: connection reset by peer

手动去下载 nfs-subdir-external-provisioner-4.0.18.tgz

再指定本地 helm charts 包执行部署

helm install nfs-subdir-external-provisioner nfs-subdir-external-provisioner-4.0.18.tgz --set nfs.server=192.168.10.61 --set nfs.path=/app/nfs/k8snfs -n nfs-provisioner --create-namespace

4.2 配置代理堡垒机通过 kubeconfig 部署

拉取 registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

$ podman pull registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

Trying to pull registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2...

Getting image source signatures

Copying blob 528677575c0b done

Copying blob 60775238382e done

Copying config 932b0bface done

Writing manifest to image destination

Storing signatures

932b0bface75b80e713245d7c2ce8c44b7e127c075bd2d27281a16677c8efef3

$ podman save -o nfs-subdir-external-provisioner-v4.0.2.tar registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

Getting image source signatures

Copying blob 1a5ede0c966b done

Copying blob ad321585b8f5 done

Copying config 932b0bface done

Writing manifest to image destination

Storing signatures

$ scp nfs-subdir-external-provisioner-v4.0.2.tar root@192.168.10.62:/root

$ scp nfs-subdir-external-provisioner-v4.0.2.tar root@192.168.10.63:/root

配置 kubeconfig

$ mkdir kubeconfig

$ vim kubeconfig/61cluster.yaml

apiVersion: v1

clusters:

- cluster:certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM2VENDQWRHZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQ0FYRFRJeU1UQXhOREE1TURFeE9Gb1lEekl4TWpJd09USXdNRGt3TVRFNFdqQVZNUk13RVFZRApWUVFERXdwcmRXSmxjbTVsZEdWek1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBTUlJQkNnS0NBUUVBCnR1WTAvblE1OTZXVm5pZFFOdmJpWFNRczJjSVh5WCthZVBMZ0ptUXVpb0pjeGlyQ2dxdStLT0hQTWcwamgra1MKT0RqWS80K3hvZlpjakhydFRDYlg0U1dpUUFqK0diSTJVdmd1ei91U29JVHhhZzNId2JCVnk0REZrUjdpSVUxOQpVVWd0Yy9VYlB6L2I0aGJnT3prYkcyVGo0eDF1b3U4aTErTUVyZnRZRmtyTjJ1bzNTU1RaMVhZejB5d08xbzZvCkxiYktudDB3TUthUmFqKzRKS3lPRkd2dHVMODhjTXRYSXN3KzZ5QndqNWVlYUFnZXVRbUZYcHZ3M1BNRWt3djIKWFN6RTVMRy9SUUhaWTNTeGpWdUNPVXU5SllvNFVWK2RwRUdncUdmRXJDOHNvWHAvcG9PSkhERVhKNFlwdnFDOApJSnErRldaUXE1VEhKYy8rMUFoenhRSURBUUFCbzBJd1FEQU9CZ05WSFE4QkFmOEVCQU1DQXFRd0R3WURWUjBUCkFRSC9CQVV3QXdFQi96QWRCZ05WSFE0RUZnUVVMb0ZPcDZ1cFBHVldUQ1N3WWlpRkpqZkowOWd3RFFZSktvWkkKaHZjTkFRRUxCUUFEZ2dFQkFFV2orRmcxTFNkSnRSM1FFdFBnKzdHbEJHNnJsZktCS3U2Q041TnJGeEN5Y3UwMwpNNG1JUEg3VXREYUMyRHNtQVNUSWwrYXMzMkUrZzBHWXZDK0VWK0F4dG40RktYaHhVSkJ2Smw3RFFsY2VWQTEyCjk0bDExYUk1VE5IOGN5WDVsQ3draXRRMks4ekxTdUgySFlKeG15cTVVK092UVBaS3J4ekN3NFBCdk5Rem1lSFMKR0VuKzdVUjFFamZQaGZ5UTZIdGh5VmZ2MWNtL283L2tCWkJ4OGJmQWt4T0drUnR4eHo4V1JVVTNOUkwwbUt4YwpIc2xPMm43a09BZnB4U3Jya2w3UFRXd0doSEN1VGtxRUdaOEsycW9wK285ajQyS3U5eldqUUlaMjJLcytLMXk2CjFmd3h0Zit2c2hFaFZURGZSU2ZoTDYyUEh3RnAxQklZTFZoVUhJcz0KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=server: https://192.168.10.61:6443name: kubernetes

contexts:

- context:cluster: kubernetesuser: kubernetes-adminname: kubernetes-admin@kubernetes

current-context: kubernetes-admin@kubernetes

kind: Config

preferences: {}

users:

- name: kubernetes-adminuser:client-certificate-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURGVENDQWYyZ0F3SUJBZ0lJWVNHaHV4c1poUWt3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWdGdzB5TWpFd01UUXdPVEF4TVRoYUdBOHlNVEl5TURreU1EQTVNREV5TmxvdwpOREVYTUJVR0ExVUVDaE1PYzNsemRHVnRPbTFoYzNSbGNuTXhHVEFYQmdOVkJBTVRFR3QxWW1WeWJtVjBaWE10CllXUnRhVzR3Z2dFaU1BMEdDU3FHU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLQW9JQkFRQ25KeHVSd0FERGw5RkMKMGRtSWVmV05hcE9DL2R1OXUwWWIwTXA5Nzh2eW5IcFJXMEI4QWlTTitkOHZCelNwMi9GdmVZeGlPSUpwbDlTVgpwcTdtSXM0T1A3cXN5Znc0TTBXKzM5c2dEditGYlJ1OUVMUlV6cXg1T1RwZVlDZVRnaFplQXRSU0dOamhKS2N0Cmd1SzA5OHJoNkpSWnZhUk1TYkYzK21GZ0RrbHNpL0Z4c2s1Uzl1Rk9Zb3lxTWdTUjdGTjFlOHVRSmxwU09Zem8KQlBWc3NsQ2FUTUNoQ2RrVnFteThiRVVtdzFvRzhhTGwrYXRuaW1QdEFXaWNzMGZjMGV0Zm9MRUpDcno4Wlo4UApBSnRackVHaDcxM0d0czdGblpXNnJ6RFppc3Z0Zml1WGFyanFQd2Z3a0ZBekJhYlRiYUF1NlJIdWloSWZSZWJxCjB2djR0c2tCQWdNQkFBR2pTREJHTUE0R0ExVWREd0VCL3dRRUF3SUZvREFUQmdOVkhTVUVEREFLQmdnckJnRUYKQlFjREFqQWZCZ05WSFNNRUdEQVdnQlF1Z1U2bnE2azhaVlpNSkxCaUtJVW1OOG5UMkRBTkJna3Foa2lHOXcwQgpBUXNGQUFPQ0FRRUF0akk4c2c3KzlORUNRaStwdDZ5bWVtWjZqOG5SQjFnbm5aU2dGN21GYk03NXdQSUQ0NDJYCkhENnIwOEF6bDZGei9sZEtxbkN0cDJ2QnJWQmxVaWl6Ry9naWVWQTVKa3NIVEtveFFpV1llWEwwYmxsVDA2RDcKV240V1BTKzUvcGZMWktmd25jL20xR0owVWtQQUJHQVdSVTFJSi9kK0dJUlFtNTJTck9VYktLUTIzbHhGa2xqMwpYaDYveEg0eVRUeGsxRjVEVUhwcnFSTVdDTXZRYkRkM0pUaEpvdWNpZWRtcCs1YWV0ZStQaGZLSUtCT1JoMC9OCnIyTWpCZjNNaENyMUMwK0dydGMyeC80eC9PejRwbGRGNmQ1a2c3NmZvOCtiTW1ISmVyaVV6MXZKSkU0bFYxUDEKK21wN1E5Y1BGUVBJdkpNakRBVXdBUkRGcHNNTEhYQ0FYZz09Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0Kclient-key-data: LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb3dJQkFBS0NBUUVBcHljYmtjQUF3NWZSUXRIWmlIbjFqV3FUZ3YzYnZidEdHOURLZmUvTDhweDZVVnRBCmZBSWtqZm5mTHdjMHFkdnhiM21NWWppQ2FaZlVsYWF1NWlMT0RqKzZyTW44T0RORnZ0L2JJQTcvaFcwYnZSQzAKVk02c2VUazZYbUFuazRJV1hnTFVVaGpZNFNTbkxZTGl0UGZLNGVpVVdiMmtURW14ZC9waFlBNUpiSXZ4Y2JKTwpVdmJoVG1LTXFqSUVrZXhUZFh2TGtDWmFVam1NNkFUMWJMSlFta3pBb1FuWkZhcHN2R3hGSnNOYUJ2R2k1Zm1yClo0cGo3UUZvbkxOSDNOSHJYNkN4Q1FxOC9HV2ZEd0NiV2F4Qm9lOWR4cmJPeFoyVnVxOHcyWXJMN1g0cmwycTQKNmo4SDhKQlFNd1dtMDIyZ0x1a1I3b29TSDBYbTZ0TDcrTGJKQVFJREFRQUJBb0lCQUNoY29DS2NtMUtmaVM4NgpYdTIralZXZGc0c2c0M3U0Q2VEVGxPRytFcUE5dXFlRWdsaXZaOFpFck9pOU03RkVZOU5JSldiZVFGZGhDenNyCnFaWDJsNDBIUkh0T3RyR1haK01FU1BRL3l1R2NEQk9tUWZVc2hxY3E4M1l3ZjczMXJwTDYyZXdOQmVtdm9SS3oKUlN6dm5MVGFKV0JhRTU4OE9EZEJaVnY5ZHl0WFoxSkVqWHZTVUowaWY4bWZvMUlxNUdBa1FLZWZuMlVLcTRROApYYzJTTkd5QTZxUThGNGd0ZWJ1WGI2QVFLdko4K05KRlI1b2ppNG9hWVlkcE5yR0MzUnJ5VHVSc29ZNFIxRko5ClA5WjcwZGtCcnExcDlNOVA2aDFxWVlaT1FISDdNRklaaFBra3dHNllVLzdzRVBZS2h1R29LVVNJR0FsUXU1czIKOGFtM0toVUNnWUVBM1AwaDRRd0xoeXFXVDBnRC9CcFRPR21iWjd2enA2Z3B3NTNhQXppWDE1UVRHcjdwaWF5RApFSlI1c01vUkF5ckthdUVVZjR1MzkyeGc0NlY4eVJNN3ZlVzZzZ2ZDSnUva3N6Nkxqa3FWRXBXUktycWVQRzhKClIwZXQ2TXRIaExxRHBDSytIdTJIYXhtbWdzMzB6Wk9EQ0Vma2dOSGY3cmM0ZnlxY2pETEpOZHNDZ1lFQXdhSisKRmhQSmpTdTVBYlJ1d2dVUDJnd0REM2ZiQ09peW5VSHpweGhUMWhRcUNPNm14dE1VaUE4bFJraTgxb1NLVEN2eAoxd1VpcnMwYzVNVFRiUS9kekpSVEtTSlRGZFhWNUdxUXppclc3SE5meGcvS1RkTVUyNDRvZG9WY2E4M0Q5WjJ6CmxybVNQQkEvaS9SOVVSNTRnODdFbHBuVi9Cc21wSDcrbUlkQzZWTUNnWUI3dGZsUlVyemhYaVhuSEJtZTk5MisKcHVBb29qODBqQjlWTXZqbzlMV01LWWpJWURlOHFxWjBrYW5PSGxDSHhWeXJtSFV4TWJZNi9LRUF6NU9idlBpawp4Z1pOdzZvY3dnNzFpUDMzR2lsNXplRUdXcEphb280L0tSRmlVT29vazRFK1VYUzlPNXVqaVNoOThXNHA1M3BqCkdGd0RBWHFxMkViNGFaSlpxZFNhSVFLQmdRQ3A2TTdneW40cVhQcGJUNXQ4cm5wcForN3JqTTFyZE56K2R0ZTUKZ1BSWHZwdmYrS0hwaDJEVnZ3eURMdUpkRGpKWWdwc1VoVklZdHEwcTVMZHRWT1hZVlRMZnZsblBxREttMndlegprUTNFcjd5VGpGbUZqcm9YcWhkQllPWm5Sa2cwWnl3bUR6SU5lR2g2ZzQvUE5ZQ2trRFFhdm1SeGN0V210RFR0ClhJdFBOd0tCZ0RkTnlRRU5pNmptd0tEaDBMeUNJTXBlWVA4TEYyKzVGSHZPWExBSFBuSzFEb2I1djMrMjFZTVoKTmtibGNJNzNBd2RiRnJpRjhqbVBxYXZmdUowNlA4UUJZVGVEbGhiSjZBZW1nWG1kVlRaL2IwTnV1ZktiNFdvVgo0eHA3TUJYa0NYNTUxWVB6djloc2M2RTZkYm5KRHJCajV4M3RsbWdyV2ZmL00weUtTOEF4Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

测试

$ kubectl --kubeconfig kubeconfig/61cluster.yaml get node

NAME STATUS ROLES AGE VERSION

master1 Ready control-plane 275d v1.25.0

node1 Ready <none> 275d v1.25.0

node2 Ready <none> 275d v1.25.0

部署

$ helm install --kubeconfig kubeconfig/61cluster.yaml nfs-subdir-external-provisioner nfs-subdir-external-provisioner/nfs-subdir-external-provisioner --set nfs.server=192.168.10.61 --set nfs.path=/app/nfs/k8snfs -n nfs-provisioner --create-namespace

NAME: nfs-subdir-external-provisioner

LAST DEPLOYED: Sun Jul 16 22:51:28 2023

NAMESPACE: nfs-provisioner

STATUS: deployed

REVISION: 1

TEST SUITE: None$ kubectl --kubeconfig kubeconfig/61cluster.yaml get all -n nfs-provisioner -owide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod/nfs-subdir-external-provisioner-688456c5d9-f5xkt 1/1 Running 0 39m 100.108.11.220 node2 <none> <none>NAME READY UP-TO-DATE AVAILABLE AGE CONTAINERS IMAGES SELECTOR

deployment.apps/nfs-subdir-external-provisioner 1/1 1 1 39m nfs-subdir-external-provisioner registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2 app=nfs-subdir-external-provisioner,release=nfs-subdir-external-provisionerNAME DESIRED CURRENT READY AGE CONTAINERS IMAGES SELECTOR

replicaset.apps/nfs-subdir-external-provisioner-688456c5d9 1 1 1 39m nfs-subdir-external-provisioner registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2 app=nfs-subdir-external-provisioner,pod-template-hash=688456c5d9,release=nfs-subdir-external-provisioner$ kubectl --kubeconfig kubeconfig/61cluster.yaml get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client cluster.local/nfs-subdir-external-provisioner Delete Immediate true 37m遇到这种镜像无法拉取的 helm charts ,我们可以定制属于自己的 helm charts,方便日常测试使用。

部署 MinIO

添加仓库

kubectl create ns minio

helm repo add minio https://helm.min.io/

helm repo update

helm search repo minio/minio

修改可配置项

helm show values minio/minio > values.yaml

修改内容:

accessKey: 'minio'

secretKey: 'minio123'

persistence:enabled: truestorageCalss: 'nfs-client'VolumeName: ''accessMode: ReadWriteOncesize: 5Giservice:type: ClusterIPclusterIP: ~port: 9000# nodePort: 32000resources:requests:memory: 128M

如果你想知道最终生成的模版,可以使用 helm template 命令。

helm template -f values.yaml --namespace minio minio/minio | tee -a minio.yaml

输出:

---

# Source: minio/templates/post-install-prometheus-metrics-serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: release-name-minio-update-prometheus-secretlabels:app: minio-update-prometheus-secretchart: minio-8.0.10release: release-nameheritage: Helm

---

# Source: minio/templates/serviceaccount.yaml

apiVersion: v1

kind: ServiceAccount

metadata:name: "release-name-minio"namespace: "minio"labels:app: miniochart: minio-8.0.10release: "release-name"

---

# Source: minio/templates/secrets.yaml

apiVersion: v1

kind: Secret

metadata:name: release-name-miniolabels:app: miniochart: minio-8.0.10release: release-nameheritage: Helm

type: Opaque

data:accesskey: "bWluaW8="secretkey: "bWluaW8xMjM="

---

# Source: minio/templates/configmap.yaml

apiVersion: v1

kind: ConfigMap

metadata:name: release-name-miniolabels:app: miniochart: minio-8.0.10release: release-nameheritage: Helm

data:initialize: |-#!/bin/shset -e ; # Have script exit in the event of a failed command.MC_CONFIG_DIR="/etc/minio/mc/"MC="/usr/bin/mc --insecure --config-dir ${MC_CONFIG_DIR}"# connectToMinio# Use a check-sleep-check loop to wait for Minio service to be availableconnectToMinio() {SCHEME=$1ATTEMPTS=0 ; LIMIT=29 ; # Allow 30 attemptsset -e ; # fail if we can't read the keys.ACCESS=$(cat /config/accesskey) ; SECRET=$(cat /config/secretkey) ;set +e ; # The connections to minio are allowed to fail.echo "Connecting to Minio server: $SCHEME://$MINIO_ENDPOINT:$MINIO_PORT" ;MC_COMMAND="${MC} config host add myminio $SCHEME://$MINIO_ENDPOINT:$MINIO_PORT $ACCESS $SECRET" ;$MC_COMMAND ;STATUS=$? ;until [ $STATUS = 0 ]doATTEMPTS=`expr $ATTEMPTS + 1` ;echo \"Failed attempts: $ATTEMPTS\" ;if [ $ATTEMPTS -gt $LIMIT ]; thenexit 1 ;fi ;sleep 2 ; # 1 second intervals between attempts$MC_COMMAND ;STATUS=$? ;done ;set -e ; # reset `e` as activereturn 0}# checkBucketExists ($bucket)# Check if the bucket exists, by using the exit code of `mc ls`checkBucketExists() {BUCKET=$1CMD=$(${MC} ls myminio/$BUCKET > /dev/null 2>&1)return $?}# createBucket ($bucket, $policy, $purge)# Ensure bucket exists, purging if asked tocreateBucket() {BUCKET=$1POLICY=$2PURGE=$3VERSIONING=$4# Purge the bucket, if set & exists# Since PURGE is user input, check explicitly for `true`if [ $PURGE = true ]; thenif checkBucketExists $BUCKET ; thenecho "Purging bucket '$BUCKET'."set +e ; # don't exit if this fails${MC} rm -r --force myminio/$BUCKETset -e ; # reset `e` as activeelseecho "Bucket '$BUCKET' does not exist, skipping purge."fifi# Create the bucket if it does not existif ! checkBucketExists $BUCKET ; thenecho "Creating bucket '$BUCKET'"${MC} mb myminio/$BUCKETelseecho "Bucket '$BUCKET' already exists."fi# set versioning for bucketif [ ! -z $VERSIONING ] ; thenif [ $VERSIONING = true ] ; thenecho "Enabling versioning for '$BUCKET'"${MC} version enable myminio/$BUCKETelif [ $VERSIONING = false ] ; thenecho "Suspending versioning for '$BUCKET'"${MC} version suspend myminio/$BUCKETfielseecho "Bucket '$BUCKET' versioning unchanged."fi# At this point, the bucket should exist, skip checking for existence# Set policy on the bucketecho "Setting policy of bucket '$BUCKET' to '$POLICY'."${MC} policy set $POLICY myminio/$BUCKET}# Try connecting to Minio instancescheme=httpconnectToMinio $scheme

---

# Source: minio/templates/pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:name: release-name-miniolabels:app: miniochart: minio-8.0.10release: release-nameheritage: Helm

spec:accessModes:- "ReadWriteOnce"resources:requests:storage: "1Gi"storageClassName: "nfs-client"

---

# Source: minio/templates/post-install-prometheus-metrics-role.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: Role

metadata:name: release-name-minio-update-prometheus-secretlabels:app: minio-update-prometheus-secretchart: minio-8.0.10release: release-nameheritage: Helm

rules:- apiGroups:- ""resources:- secretsverbs:- get- create- update- patchresourceNames:- release-name-minio-prometheus- apiGroups:- ""resources:- secretsverbs:- create- apiGroups:- monitoring.coreos.comresources:- servicemonitorsverbs:- getresourceNames:- release-name-minio

---

# Source: minio/templates/post-install-prometheus-metrics-rolebinding.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: RoleBinding

metadata:name: release-name-minio-update-prometheus-secretlabels:app: minio-update-prometheus-secretchart: minio-8.0.10release: release-nameheritage: Helm

roleRef:apiGroup: rbac.authorization.k8s.iokind: Rolename: release-name-minio-update-prometheus-secret

subjects:- kind: ServiceAccountname: release-name-minio-update-prometheus-secretnamespace: "minio"

---

# Source: minio/templates/service.yaml

apiVersion: v1

kind: Service

metadata:name: release-name-miniolabels:app: miniochart: minio-8.0.10release: release-nameheritage: Helm

spec:type: NodePortports:- name: httpport: 9000protocol: TCPnodePort: 32000selector:app: miniorelease: release-name

---

# Source: minio/templates/deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:name: release-name-miniolabels:app: miniochart: minio-8.0.10release: release-nameheritage: Helm

spec:strategy:type: RollingUpdaterollingUpdate:maxSurge: 100%maxUnavailable: 0selector:matchLabels:app: miniorelease: release-nametemplate:metadata:name: release-name-miniolabels:app: miniorelease: release-nameannotations:checksum/secrets: f48e042461f5cd95fe36906895a8518c7f1592bd568c0caa8ffeeb803c36d4a4checksum/config: 9ec705e3000d8e1f256b822bee35dc238f149dbb09229548a99c6409154a12b8spec:serviceAccountName: "release-name-minio"securityContext:runAsUser: 1000runAsGroup: 1000fsGroup: 1000containers:- name: minioimage: "minio/minio:RELEASE.2021-02-14T04-01-33Z"imagePullPolicy: IfNotPresentcommand:- "/bin/sh"- "-ce"- "/usr/bin/docker-entrypoint.sh minio -S /etc/minio/certs/ server /export"volumeMounts:- name: exportmountPath: /export ports:- name: httpcontainerPort: 9000env:- name: MINIO_ACCESS_KEYvalueFrom:secretKeyRef:name: release-name-miniokey: accesskey- name: MINIO_SECRET_KEYvalueFrom:secretKeyRef:name: release-name-miniokey: secretkeyresources:requests:memory: 1Gi volumes:- name: exportpersistentVolumeClaim:claimName: release-name-minio- name: minio-usersecret:secretName: release-name-minio创建 MinIO

helm install -f values.yaml minio minio/minio -n minio

输出:

NAME: minio

LAST DEPLOYED: Wed Jul 19 10:56:23 2023

NAMESPACE: minio

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Minio can be accessed via port 9000 on the following DNS name from within your cluster:

minio.minio.svc.cluster.localTo access Minio from localhost, run the below commands:1. export POD_NAME=$(kubectl get pods --namespace minio -l "release=minio" -o jsonpath="{.items[0].metadata.name}")2. kubectl port-forward $POD_NAME 9000 --namespace minioRead more about port forwarding here: http://kubernetes.io/docs/user-guide/kubectl/kubectl_port-forward/You can now access Minio server on http://localhost:9000. Follow the below steps to connect to Minio server with mc client:1. Download the Minio mc client - https://docs.minio.io/docs/minio-client-quickstart-guide2. Get the ACCESS_KEY=$(kubectl get secret minio -o jsonpath="{.data.accesskey}" | base64 --decode) and the SECRET_KEY=$(kubectl get secret minio -o jsonpath="{.data.secretkey}" | base64 --decode)3. mc alias set minio-local http://localhost:9000 "$ACCESS_KEY" "$SECRET_KEY" --api s3v44. mc ls minio-localAlternately, you can use your browser or the Minio SDK to access the server - https://docs.minio.io/categories/17查看 minio 状态

$ kubectl get pod -n minio

NAME READY STATUS RESTARTS AGE

minio-66f8b9444b-lml5f 1/1 Running 0 62s

[root@master1 helm]# kubectl get all -n minio

NAME READY STATUS RESTARTS AGE

pod/minio-66f8b9444b-lml5f 1/1 Running 0 73sNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/minio NodePort 10.96.0.232 <none> 9000:32000/TCP 73sNAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/minio 1/1 1 1 73sNAME DESIRED CURRENT READY AGE

replicaset.apps/minio-66f8b9444b 1 1 1 73s$ kubectl get pv,pvc,sc -n minio

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-667a9c76-7d14-484c-aeeb-6e07cffd2c10 1Gi RWO Delete Bound minio/minio nfs-client 2m20sNAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/minio Bound pvc-667a9c76-7d14-484c-aeeb-6e07cffd2c10 1Gi RWO nfs-client 2m20sNAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

storageclass.storage.k8s.io/nfs-client cluster.local/nfs-subdir-external-provisioner Delete Immediate true 2d12h访问

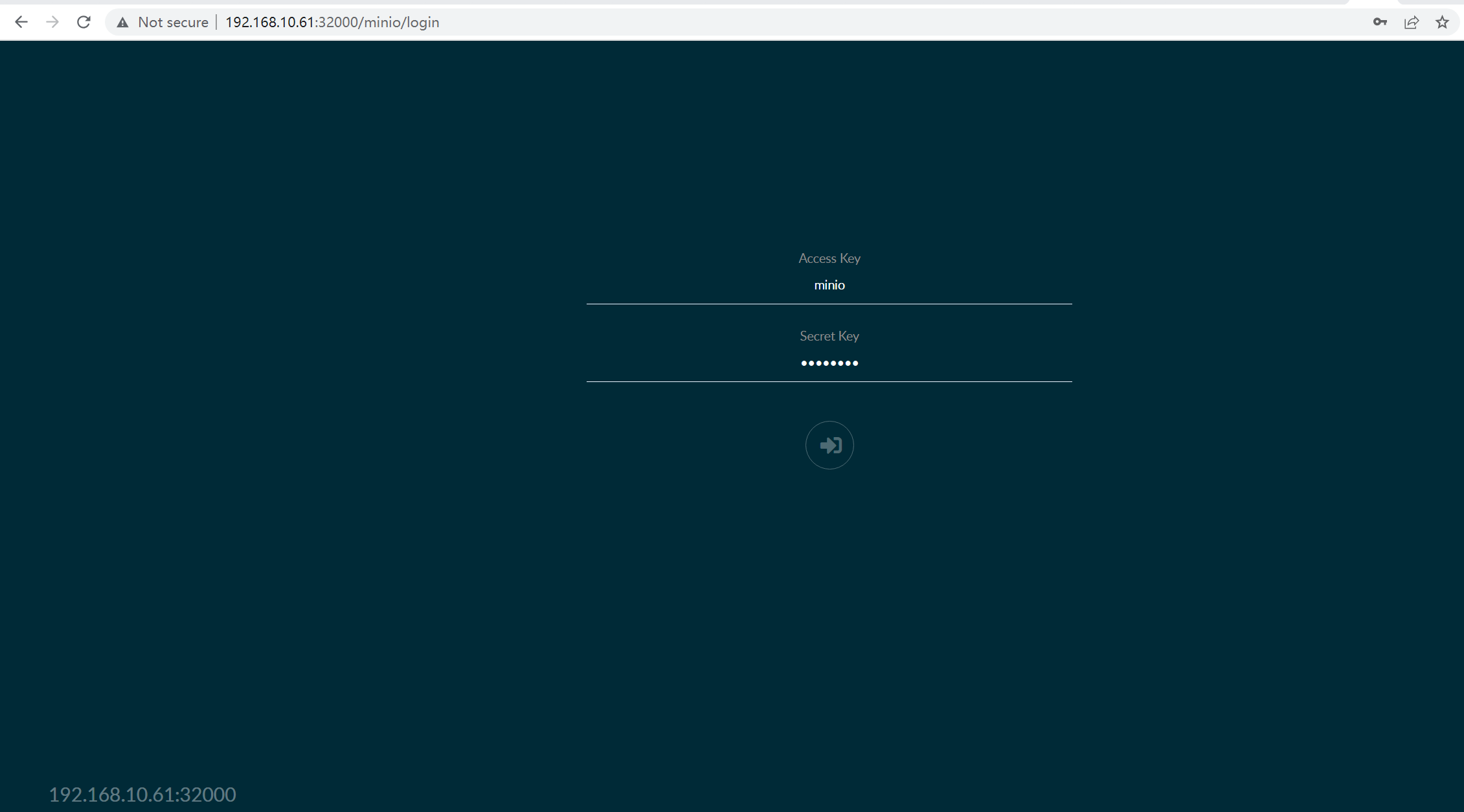

nodepot

界面访问:http://192.168.10.61:32000

ingress

修改 values.yaml 的service

service:type: ClusterIPclusterIP: ~port: 9000更新

$ helm upgrade -f values.yaml minio minio/minio -n minio

Release "minio" has been upgraded. Happy Helming!

NAME: minio

LAST DEPLOYED: Wed Jul 19 11:49:22 2023

NAMESPACE: minio

STATUS: deployed

REVISION: 2

TEST SUITE: None

NOTES:

Minio can be accessed via port 9000 on the following DNS name from within your cluster:

minio.minio.svc.cluster.local$ kubectl get all -n minio

NAME READY STATUS RESTARTS AGE

pod/minio-66f8b9444b-lml5f 1/1 Running 0 53mNAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/minio ClusterIP 10.96.0.232 <none> 9000/TCP 53mNAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/minio 1/1 1 1 53mNAME DESIRED CURRENT READY AGE

replicaset.apps/minio-66f8b9444b 1 1 1 53m

service 已经由 nodePort 类型改为 ClusterIP。

接下来,我们需要配置证书和域名,你需要在集群内 部署 cert-manager

查看 minio的 secret tls 证书

$ kubectl get secret -n minio

NAME TYPE DATA AGE

minio Opaque 2 58m

minio-letsencrypt-tls-fn4vt Opaque 1 2m47s

查看已经创建好的 cluster-issuer名称

$ kubectl get ClusterIssuer

NAME READY AGE

letsencrypt-prod True 33mapiVersion: networking.k8s.io/v1

kind: Ingress

metadata:name: minionamespace: minioannotations:cert-manager.io/cluster-issuer: letsencrypt-prod # 配置自动生成 https 证书kubernetes.io/ingress.class: nginxnginx.ingress.kubernetes.io/rewrite-target: /

spec:tls:- hosts:- 'minio.demo.com'secretName: minio-letsencrypt-tlsrules:- host: minio.demo.comhttp:paths:- path: /pathType: Prefixbackend:service:name: minioport:number: 9000

创建

kubectl apply -f ingress.yaml

域名解析:

- linux 在

/etc/hosts添加192.168.10.61 minio.demo.com - windows 在

C:\Windows\System32\drivers\etc\hosts添加192.168.10.61 minio.demo.com

参考:

- Deploying the NFS provisioner for Kubernetes

- https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner

- 部署 MinIO 以支持对象存储

相关文章:

Kubernetes 使用 helm 部署 NFS Provisioner

文章目录 1. 介绍2. 预备条件3. 部署 nfs4. 部署 NFS subdir external provisioner4.1 集群配置 containerd 代理4.2 配置代理堡垒机通过 kubeconfig 部署 部署 MinIO添加仓库修改可配置项 访问nodepotingress 1. 介绍 NFS subdir external provisioner 使用现有且已配置的NFS…...

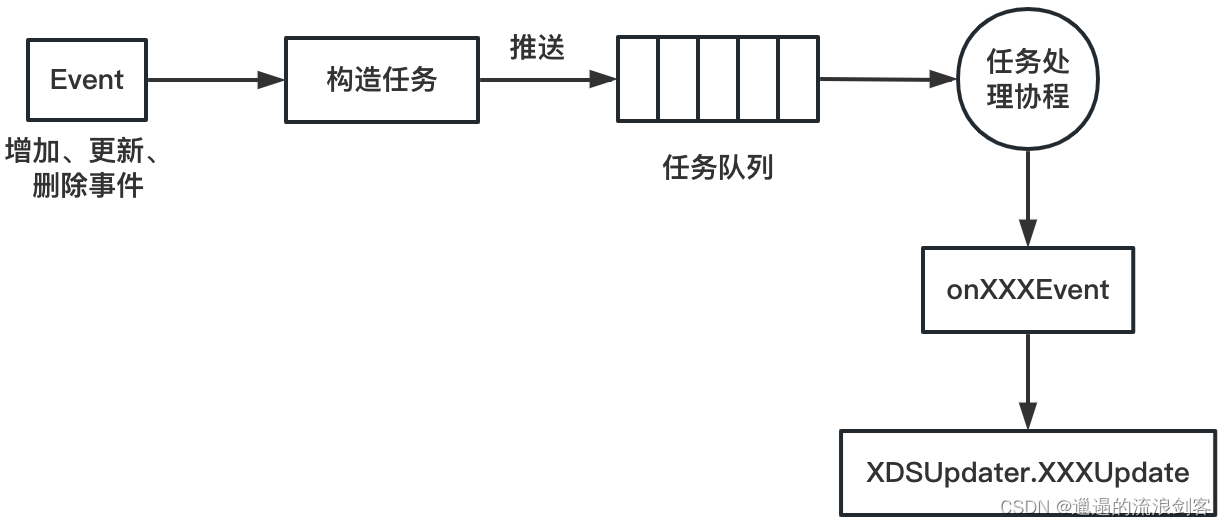

Istio Pilot源码学习(二):ServiceController服务发现

本文基于Istio 1.18.0版本进行源码学习 4、服务发现:ServiceController ServiceController是服务发现的核心模块,主要功能是监听底层平台的服务注册中心,将平台服务模型转换成Istio服务模型并缓存;同时根据服务的变化,…...

Spring框架中的ResourcePatternResolver只能指定jar包内文件,指定容器中文件路径报错:FileNotFoundException

原始代码: public static <T> T getFromFile(String specifiedFile, String defaultClasspathFile, Class<T> expectedClass) {try {ResourcePatternResolver resolver new PathMatchingResourcePatternResolver();Resource[] resources resolver.ge…...

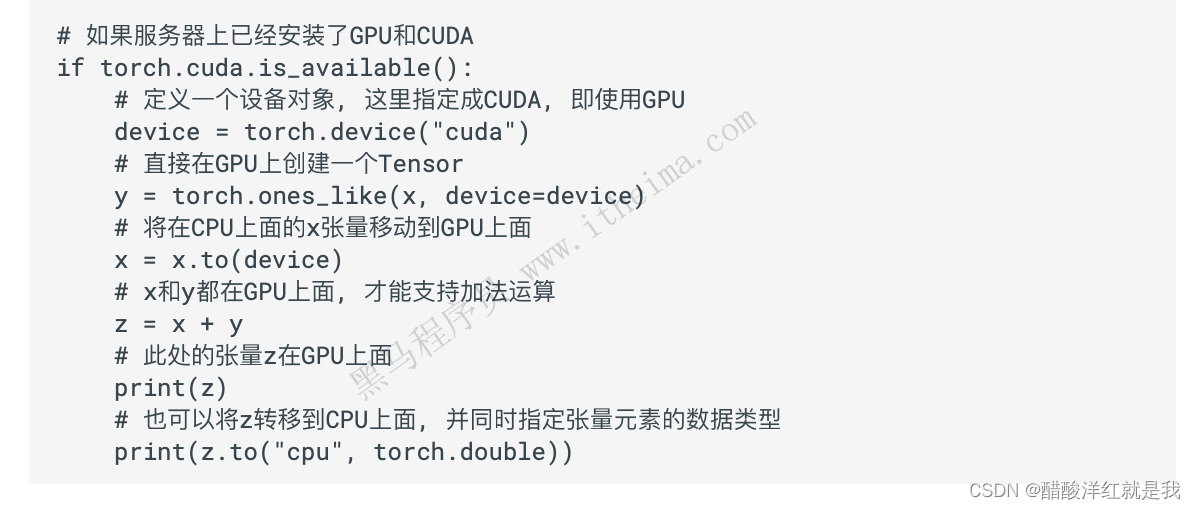

pytorch工具——认识pytorch

目录 pytorch的基本元素操作创建一个没有初始化的矩阵创建一个有初始化的矩阵创建一个全0矩阵并可指定数据元素类型为long直接通过数据创建张量通过已有的一个张量创建相同尺寸的新张量利用randn_like方法得到相同尺寸张量,并且采用随机初始化的方法为其赋值采用.si…...

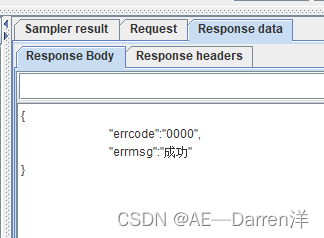

解决Jmeter响应内容显示乱码

一、问题描述 jmeter在执行接口请求后,返回的响应体里面出现乱码现象,尽管在调了对应请求的响应编码也无用,现找到解决办法。 二、解决办法 进入到jmeter的bin目录下,找到jmeter.properties,通过按ctrlF快速定位查找到…...

ChatGPT和搜索引擎哪个更好用

目录 ChatGPT和搜索引擎的概念 ChatGPT和搜索引擎的作用 ChatGPT的作用 搜索引擎的作用 ChatGPT和搜索引擎哪个更好用 总结 ChatGPT和搜索引擎的概念 ChatGPT是一种基于对话的人工智能技术,而搜索引擎则是一种用于在互联网上查找和检索信息的工具。它们各自具…...

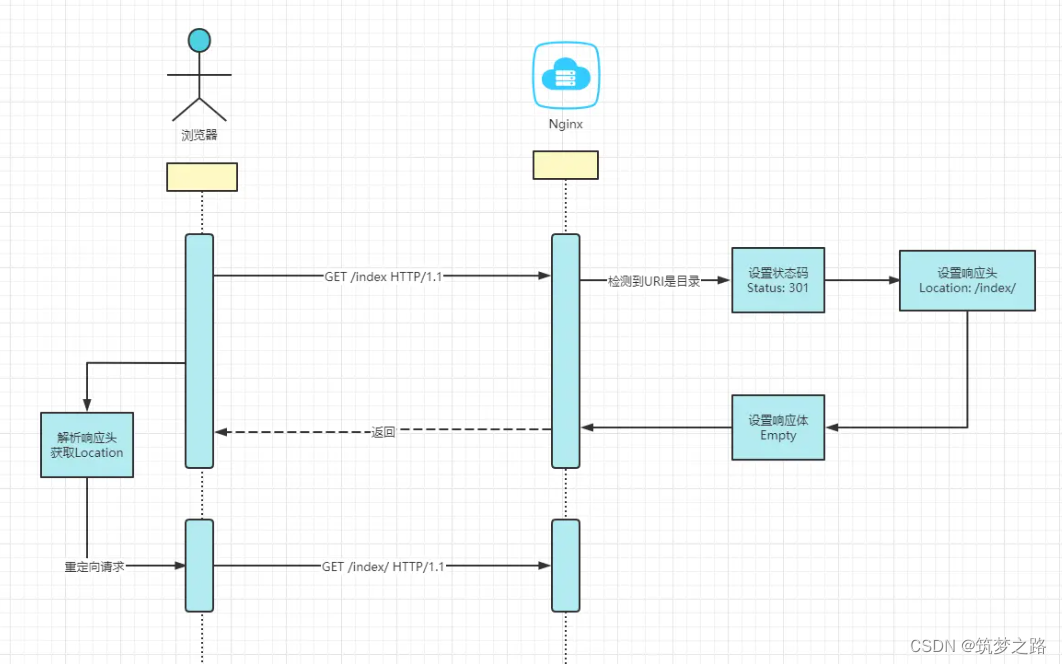

Nginx 301 https跳转后出现跨域和混合内容问题 —— 筑梦之路

问题 在浏览器地址栏敲入url访问静态资源目录时,发现默认跳转到了http协议的地址 如上图所示,客户端https请求先到达API网关,然后网关将请求通过http协议转发到静态资源服务器。 调出浏览器发现客户端发送的https请求收到了一个301状态码的响…...

记录--关于前端的音频可视化-Web Audio

这里给大家分享我在网上总结出来的一些知识,希望对大家有所帮助 背景 最近听音乐的时候,看到各种动效,突然好奇这些音频数据是如何获取并展示出来的,于是花了几天功夫去研究相关的内容,这里只是给大家一些代码实例&…...

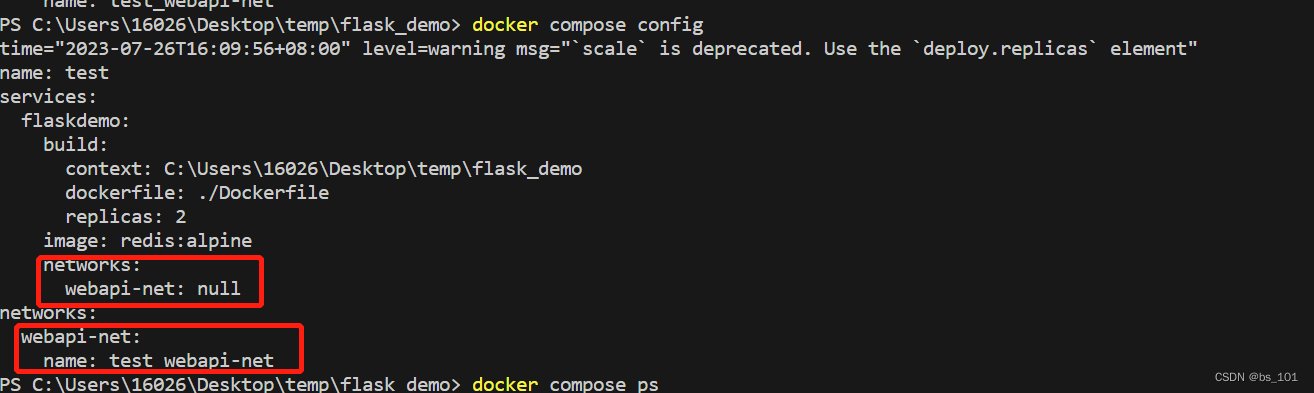

docker-compose yml配置、常用命令

下载完docker-compose后,如果想使用docker-compose命令开头,需要创建软连接 sudo ln -s /usr/local/lib/docker/cli-plugins/docker-compose /usr/bin/docker-compose 1.docker-compose.yml文件编排 一个 docker-compose.yml 文件的顶层元素有ÿ…...

—— React17+React Hook+TS4 最佳实践,仿 Jira 企业级项目(十三))

【实战】 七、Hook,路由,与 URL 状态管理(下) —— React17+React Hook+TS4 最佳实践,仿 Jira 企业级项目(十三)

文章目录 一、项目起航:项目初始化与配置二、React 与 Hook 应用:实现项目列表三、TS 应用:JS神助攻 - 强类型四、JWT、用户认证与异步请求五、CSS 其实很简单 - 用 CSS-in-JS 添加样式六、用户体验优化 - 加载中和错误状态处理七、Hook&…...

【MySQL】_5.MySQL的联合查询

目录 1. 笛卡尔积 2. 内连接 2.1 示例1:查询许仙同学的成绩 2.2 示例2: 查询所有同学的总成绩,及同学的个人信息 2.3 示例3:查询所有同学的科目及各科成绩,及同学的个人信息 3. 外连接 3.1 情况一:两…...

【后端面经】微服务构架 (1-3) | 熔断:熔断-恢复-熔断-恢复,抖来抖去怎么办?

文章目录 一、前置知识1、什么是熔断?2、什么是限流?3、什么是降级?4、怎么判断微服务出现了问题?A、指标有哪些?B、阈值如何选择?C、超过阈值之后,要不要持续一段时间才触发熔断?5、服务恢复正常二、面试环节1、面试准备2、面试基本思路三、总结 在微服务构架中…...

对UITextField输入内容的各种限制-总结

使用代理方法来限制输入框中的字数,输入的符号,输入的数字大小等各种限制 限制输入字数 已经有小数点了,就不能继续输入小数点 不能输入以0为开头的内容 不能输入以.为开头的内容 小数点后只允许输入一位数 只能输入100以下的数值 **不能包括…...

【图论】二分图

二分图,即可以将图中的所有顶点分层两个点集,每个点集内部没有边 判定图为二分图的充要条件:有向连通图不含奇数环 1、染色法 可以解决二分图判断的问题 步骤与基本思路 遍历图中每一个点,若该点未被染色,则遍历该…...

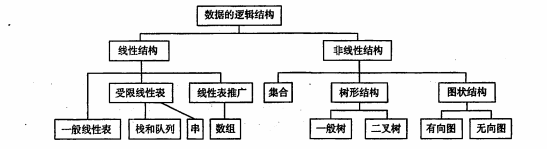

数据结构——(一)绪论

👉数据元素整体思维导图 欢迎补充 一、基本概念❤️ 1.1基本术语⭐️ (1)数据 客观事务属性的数字、字符。 (2)数据元素 数据元素是数据的基本单位,一个数据元素可由若干数据项组成,数据项是…...

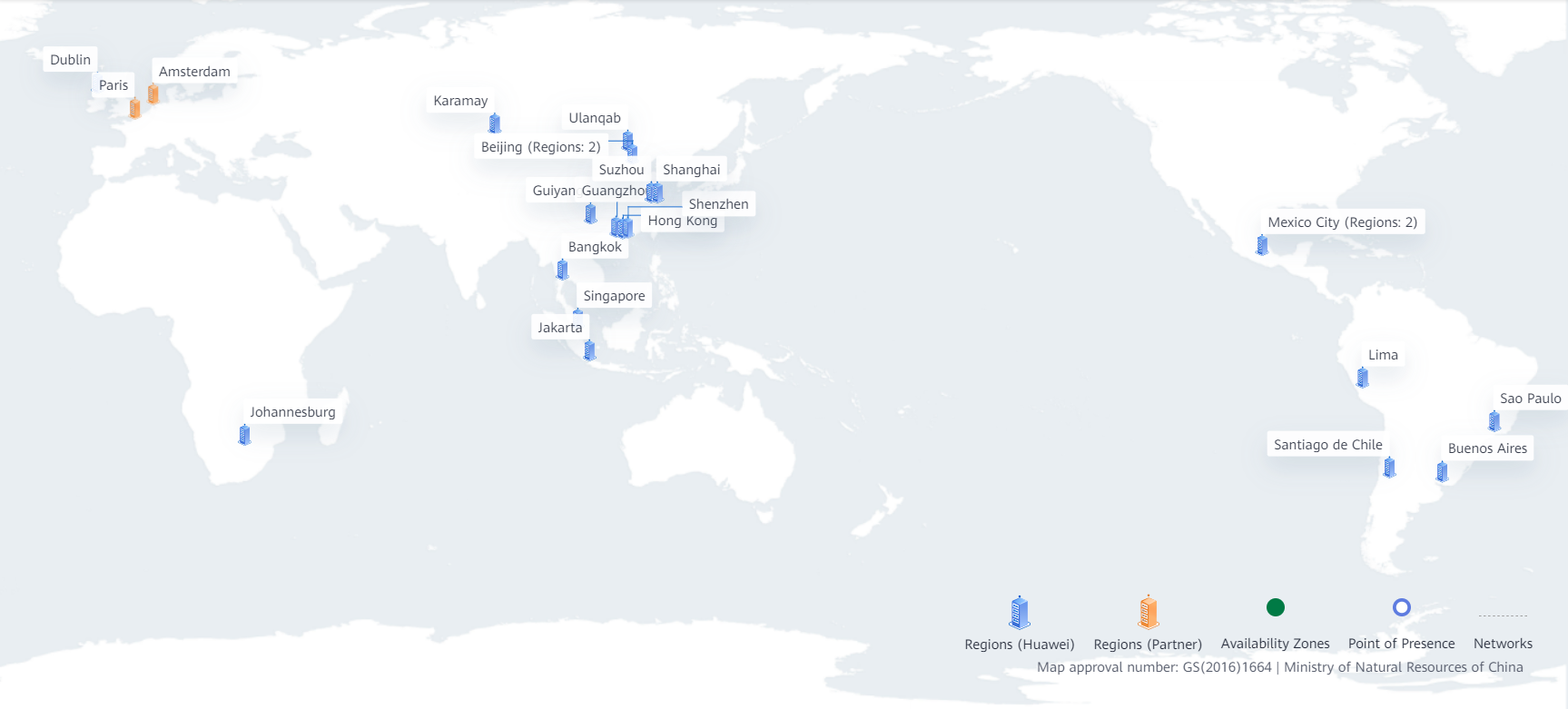

[ 华为云 ] 云计算中Region、VPC、AZ 是什么,他们又是什么关系,应该如何抉择

前几天看到一个问答帖,我回答完了才发现这个帖子居然是去年的也没人回复,其中他问了一些华为云的问题,对于其中的一些概念,这里来总结讲解一下,希望对学习华为云的小伙伴有所帮助。 文章目录 区域(Region&a…...

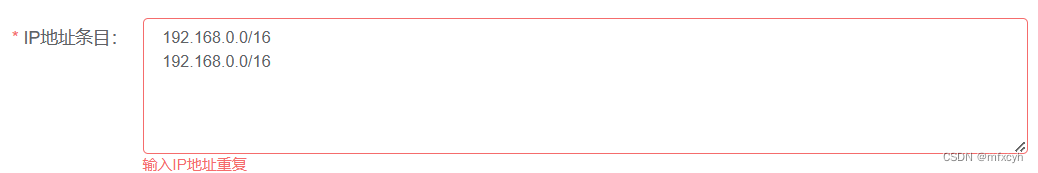

表单验证:输入的字符串以回车分隔并验证是否有

公司项目开发时,有一个需求,需要对输入的字符串按回车分隔并验证是否有重复项,效果如下: 表单代码: <el-form-item label"IP地址条目:" prop"ipAddressEntry"><el-inputtype&…...

智能财务分析-亿发财务报表管理系统,赋能中小企业财务数字化转型

对于许多中小企业来说,企业重要部门往往是财务和业务部门。业务负责创收,财务负责控制成本,降低税收风险。但因管理机制和公司运行制度的原因,中小企业往往面临着业务与财务割裂的问题,财务数据不清晰,无法…...

图为科技T501赋能工业机器人 革新传统工业流程

工业机器人已成为一个国家制造技术与科技水平的重要衡量标准,在2019年,中国工业机器人的组装量与产量均位居了全球首位。 当前,工业机器人被广泛用于电子、物流、化工等多个领域之中,是一种通过电子科技和机械关节制作出来的智能机…...

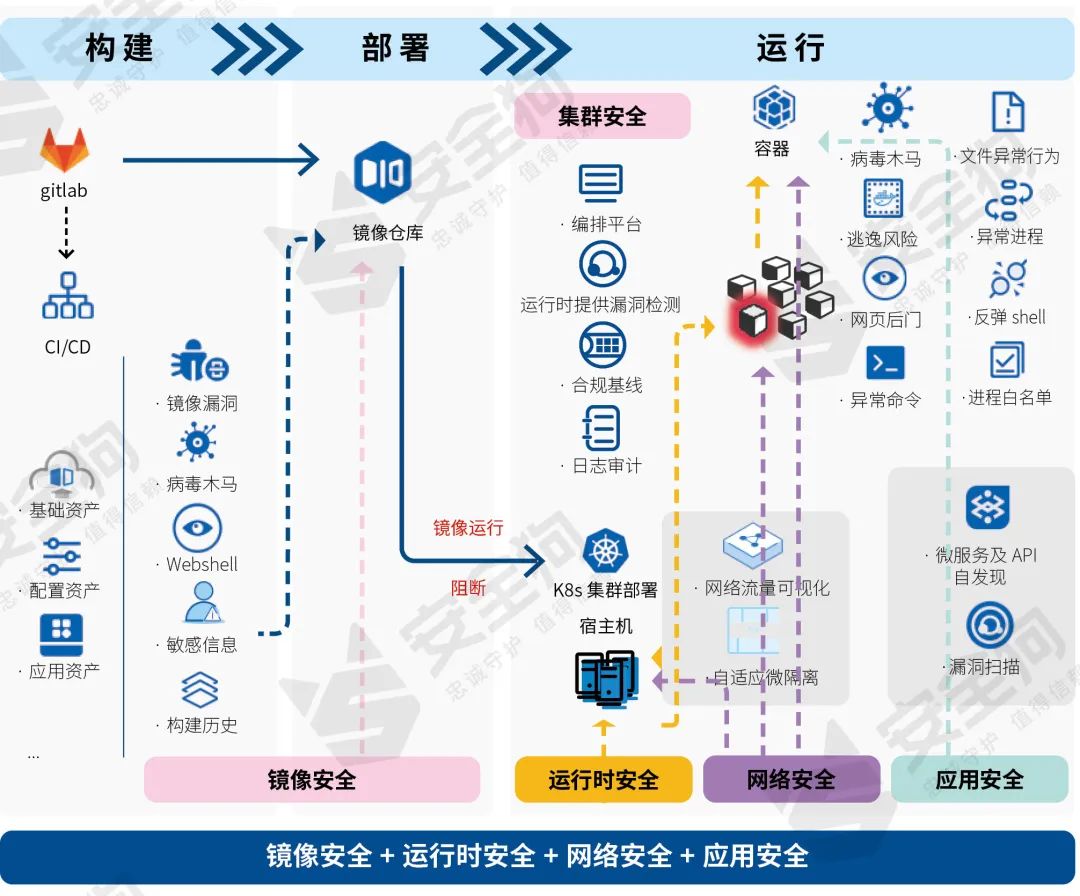

安全狗深度参与编写的《云原生安全配置基线规范》正式发布!

7月25日,由中国信息通信研究院、中国通信标准化协会主办的2023可信云大会在北京顺利开幕。 作为国内云原生安全领导厂商,安全狗受邀出席此次活动。 厦门服云信息科技有限公司(品牌名:安全狗)成立于2013年,…...

vscode里如何用git

打开vs终端执行如下: 1 初始化 Git 仓库(如果尚未初始化) git init 2 添加文件到 Git 仓库 git add . 3 使用 git commit 命令来提交你的更改。确保在提交时加上一个有用的消息。 git commit -m "备注信息" 4 …...

使用VSCode开发Django指南

使用VSCode开发Django指南 一、概述 Django 是一个高级 Python 框架,专为快速、安全和可扩展的 Web 开发而设计。Django 包含对 URL 路由、页面模板和数据处理的丰富支持。 本文将创建一个简单的 Django 应用,其中包含三个使用通用基本模板的页面。在此…...

学校时钟系统,标准考场时钟系统,AI亮相2025高考,赛思时钟系统为教育公平筑起“精准防线”

2025年#高考 将在近日拉开帷幕,#AI 监考一度冲上热搜。当AI深度融入高考,#时间同步 不再是辅助功能,而是决定AI监考系统成败的“生命线”。 AI亮相2025高考,40种异常行为0.5秒精准识别 2025年高考即将拉开帷幕,江西、…...

安装docker)

Linux离线(zip方式)安装docker

目录 基础信息操作系统信息docker信息 安装实例安装步骤示例 遇到的问题问题1:修改默认工作路径启动失败问题2 找不到对应组 基础信息 操作系统信息 OS版本:CentOS 7 64位 内核版本:3.10.0 相关命令: uname -rcat /etc/os-rele…...

深入浅出深度学习基础:从感知机到全连接神经网络的核心原理与应用

文章目录 前言一、感知机 (Perceptron)1.1 基础介绍1.1.1 感知机是什么?1.1.2 感知机的工作原理 1.2 感知机的简单应用:基本逻辑门1.2.1 逻辑与 (Logic AND)1.2.2 逻辑或 (Logic OR)1.2.3 逻辑与非 (Logic NAND) 1.3 感知机的实现1.3.1 简单实现 (基于阈…...

C#中的CLR属性、依赖属性与附加属性

CLR属性的主要特征 封装性: 隐藏字段的实现细节 提供对字段的受控访问 访问控制: 可单独设置get/set访问器的可见性 可创建只读或只写属性 计算属性: 可以在getter中执行计算逻辑 不需要直接对应一个字段 验证逻辑: 可以…...

RabbitMQ入门4.1.0版本(基于java、SpringBoot操作)

RabbitMQ 一、RabbitMQ概述 RabbitMQ RabbitMQ最初由LShift和CohesiveFT于2007年开发,后来由Pivotal Software Inc.(现为VMware子公司)接管。RabbitMQ 是一个开源的消息代理和队列服务器,用 Erlang 语言编写。广泛应用于各种分布…...

MySQL 8.0 事务全面讲解

以下是一个结合两次回答的 MySQL 8.0 事务全面讲解,涵盖了事务的核心概念、操作示例、失败回滚、隔离级别、事务性 DDL 和 XA 事务等内容,并修正了查看隔离级别的命令。 MySQL 8.0 事务全面讲解 一、事务的核心概念(ACID) 事务是…...

Qt 事件处理中 return 的深入解析

Qt 事件处理中 return 的深入解析 在 Qt 事件处理中,return 语句的使用是另一个关键概念,它与 event->accept()/event->ignore() 密切相关但作用不同。让我们详细分析一下它们之间的关系和工作原理。 核心区别:不同层级的事件处理 方…...

----- Python的类与对象)

Python学习(8) ----- Python的类与对象

Python 中的类(Class)与对象(Object)是面向对象编程(OOP)的核心。我们可以通过“类是模板,对象是实例”来理解它们的关系。 🧱 一句话理解: 类就像“图纸”,对…...