WebRTC Audio/Video Calls: Calling the ossrs Video Call Service from iOS


For setting up the ossrs service beforehand, see: https://blog.csdn.net/gloryFlow/article/details/132257196

Here the iOS client uses GoogleWebRTC together with ossrs to implement video calls.

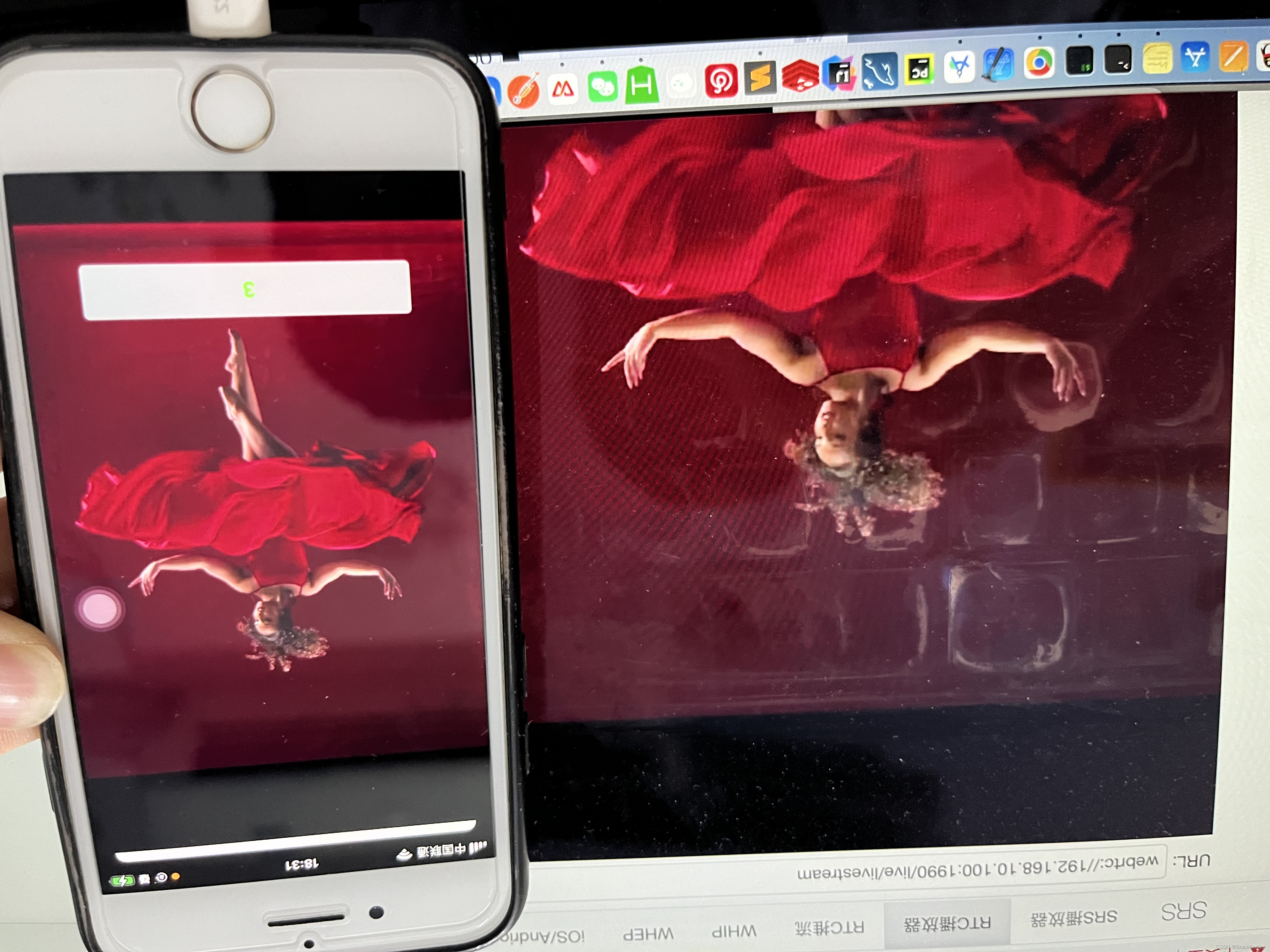

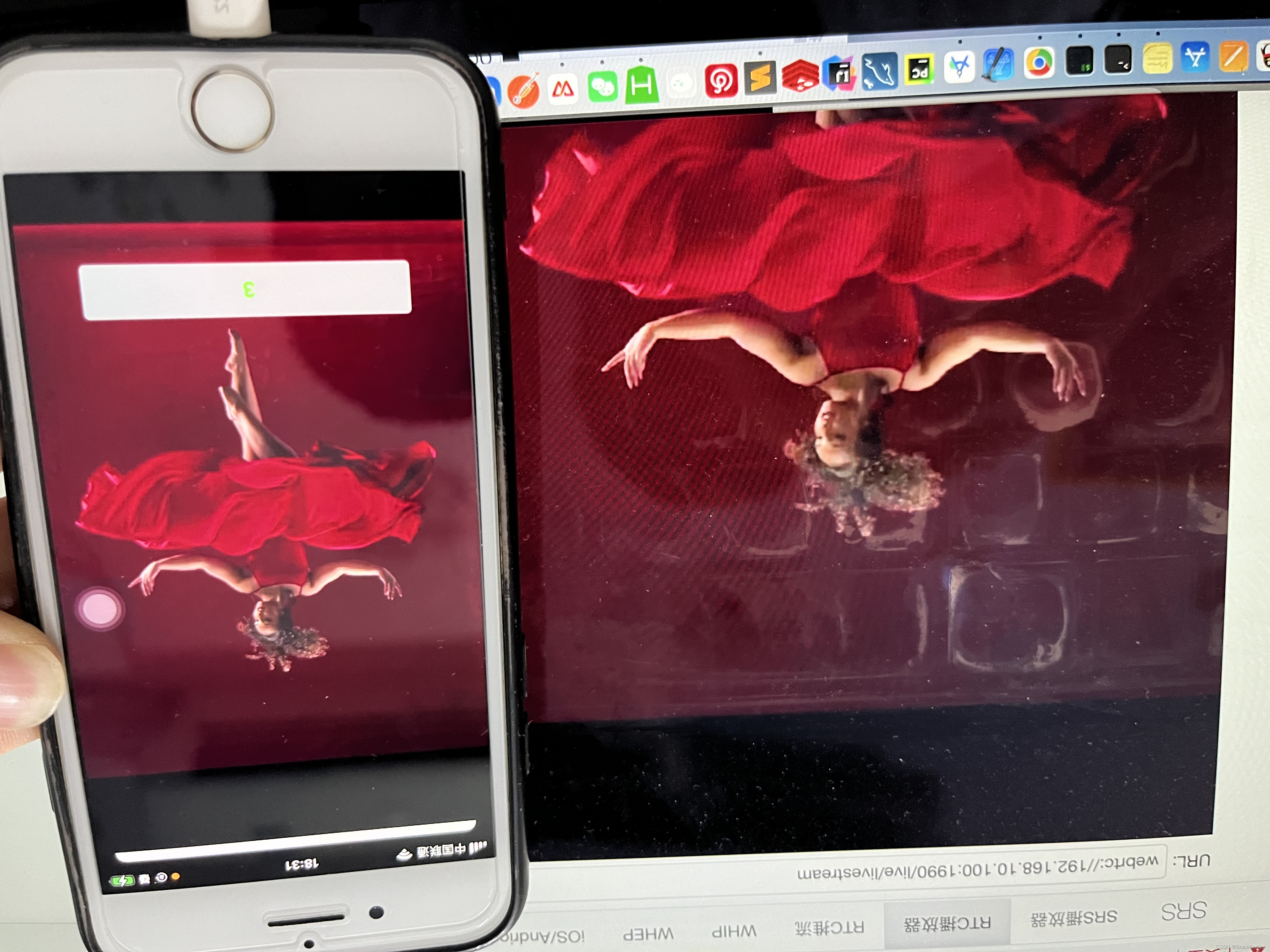

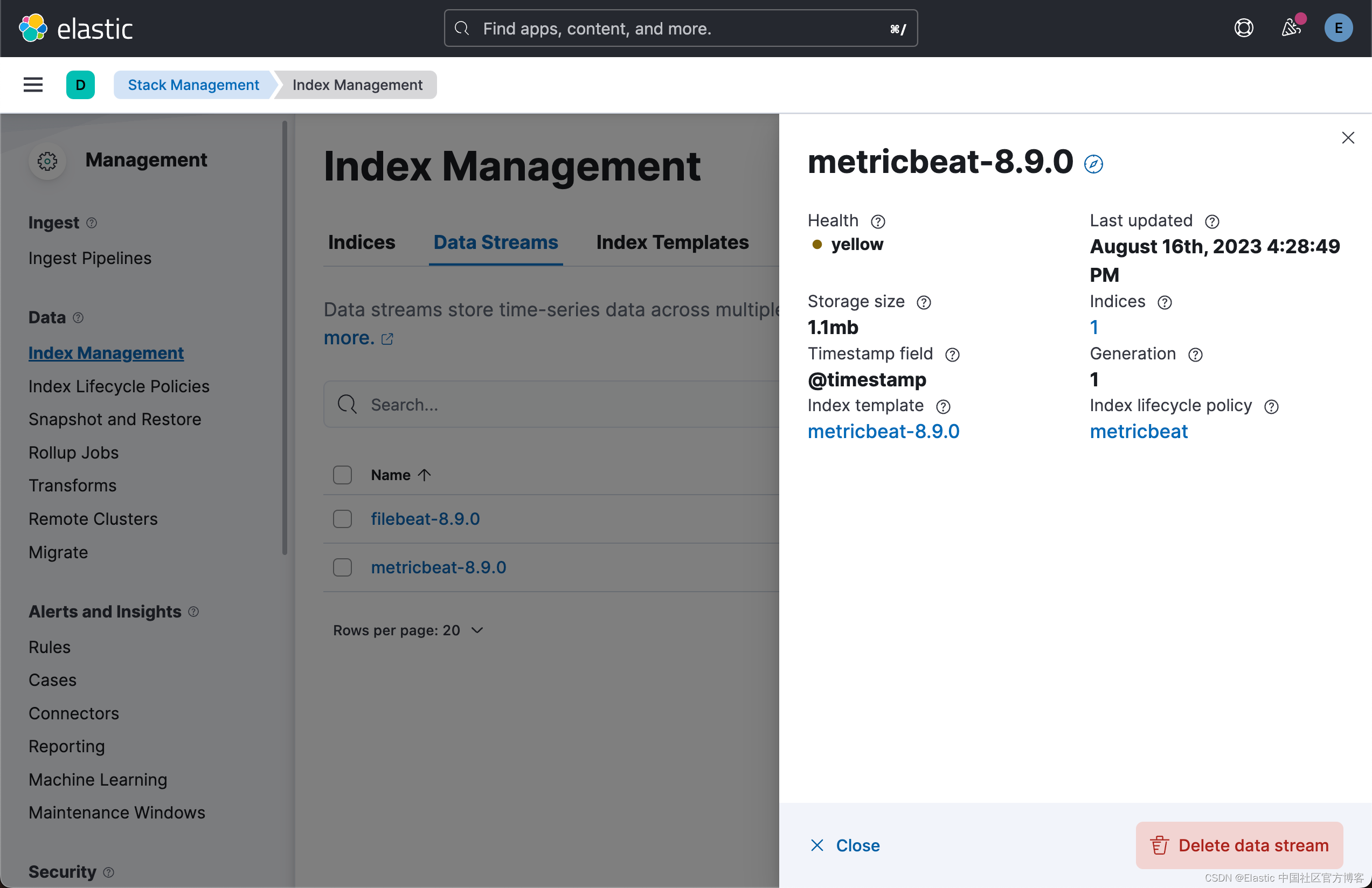

Screenshots of the iOS client calling the ossrs video call service

iOS client screenshots

ossrs screenshot

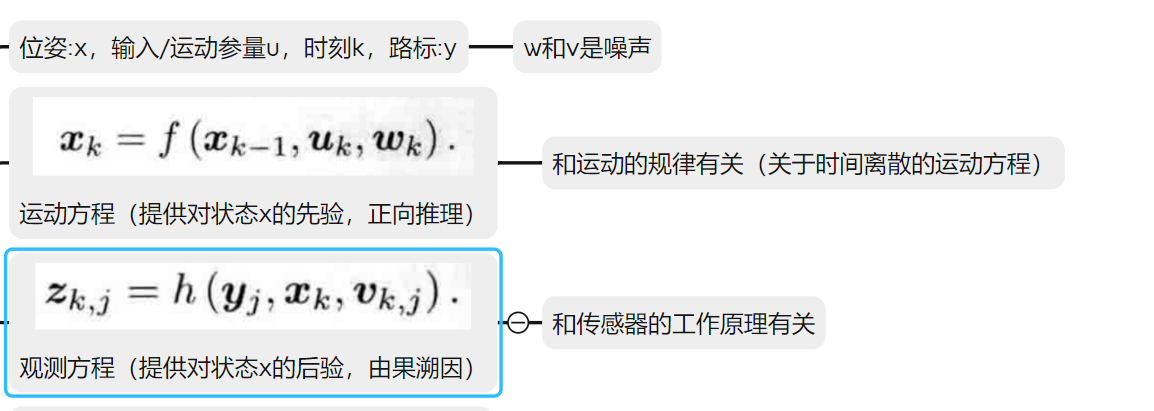

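1. What is WebRTC?

WebRTC (Web Real-Time Communications) is a real-time communication technology. It lets web applications or sites establish peer-to-peer connections between browsers without an intermediary, and transmit video streams, audio streams, or any other data over them.

See https://zhuanlan.zhihu.com/p/421503695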

Key concepts to understand:

- NAT: Network Address Translation
- STUN: Session Traversal Utilities for NAT
- TURN: Traversal Using Relays around NAT (NAT traversal through a relay)
- ICE: Interactive Connectivity Establishment
- SDP: Session Description Protocol
- WebRTC: Web Real-Time Communications

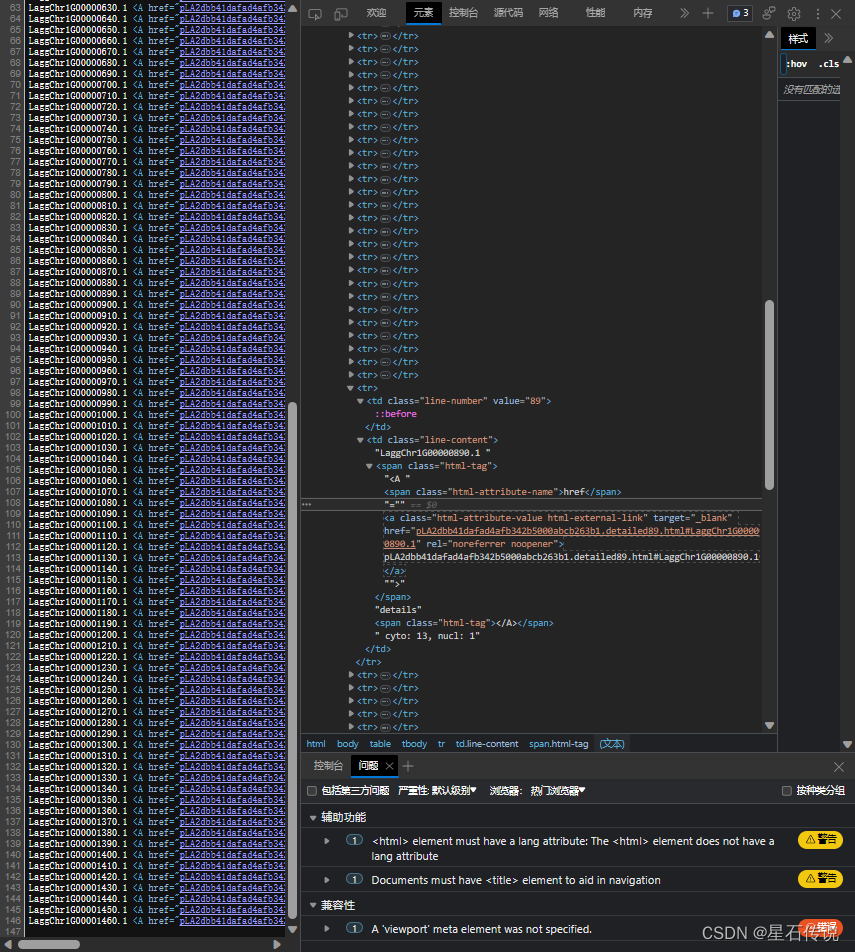

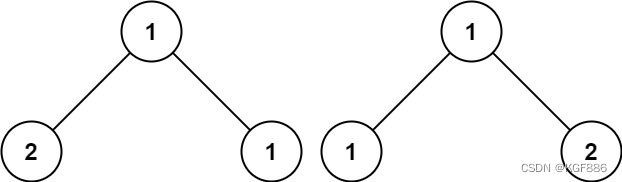

The WebRTC offer exchange flow is shown in the figure above.

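2. Implementing the iOS call to the ossrs video call service

First create the iOS project that will call the ossrs video call service. For a pure P2P audio/video call you would have to deploy the signaling server and the STUN/TURN traversal and relay servers yourself. ossrs already includes the STUN/TURN traversal and relay servers, so here the iOS client simply calls the ossrs service.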

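2.1 Permission settings

Video calls to ossrs from iOS require camera and microphone permissions.

Add the following to Info.plist:

    <key>NSCameraUsageDescription</key>
    <string>The app needs access to the camera</string>
    <key>NSMicrophoneUsageDescription</key>
    <string>The app needs access to the microphone</string>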
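The Info.plist entries only supply the prompt text; the app still has to ask for access at runtime. The following is a minimal sketch of one way to do that with AVFoundation before starting a call. `RequestCallPermissions` is a hypothetical helper name and is not part of the original project.

```objective-c
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper (not from the original project): asks for camera access,
// then microphone access, and reports on the main queue whether both were granted.
static void RequestCallPermissions(void (^completion)(BOOL granted)) {
    [AVCaptureDevice requestAccessForMediaType:AVMediaTypeVideo completionHandler:^(BOOL videoGranted) {
        [AVCaptureDevice requestAccessForMediaType:AVMediaTypeAudio completionHandler:^(BOOL audioGranted) {
            dispatch_async(dispatch_get_main_queue(), ^{
                if (completion) {
                    completion(videoGranted && audioGranted);
                }
            });
        }];
    }];
}
```

A caller would typically create the WebRTC client and start capturing only inside the completion block, once `granted` is YES.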

2.2 The project depends on GoogleWebRTC

The project needs the GoogleWebRTC library. Add it to the Podfile, and note that the code differs somewhat between GoogleWebRTC versions.

    target 'WebRTCApp' do
      pod 'GoogleWebRTC'
      pod 'ReactiveObjC'
      pod 'SocketRocket'
      pod 'HGAlertViewController', '~> 1.0.1'
    end

Then run pod install.

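2.3 Main GoogleWebRTC APIs

Before using GoogleWebRTC, take a look at the main classes.

- RTCPeerConnection

RTCPeerConnection is the WebRTC class used to build a peer-to-peer connection.

- RTCPeerConnectionFactory

RTCPeerConnectionFactory is the factory class for RTCPeerConnection.

- RTCVideoCapturer

RTCVideoCapturer is the camera capturer that supplies video frames. It can be replaced later with a custom capturer, which makes it easy to get hold of the CMSampleBufferRef and apply beauty filters, virtual avatars, and similar processing to the picture.

- RTCVideoTrack

RTCVideoTrack is the video track.

- RTCAudioTrack

RTCAudioTrack is the audio track.

- RTCDataChannel

RTCDataChannel establishes a high-throughput, low-latency channel for transmitting arbitrary data.

- RTCMediaStream

RTCMediaStream is a synchronized stream of media (camera video and microphone audio).

- SDP

SDP stands for Session Description Protocol.

An SDP consists of one or more lines of UTF-8 text. Each line starts with a one-character type, followed by an equals sign (=), followed by structured text holding a value or description whose format depends on the type. Here is a sample SDP: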

    v=0
    o=alice 2890844526 2890844526 IN IP4
    s=
    c=IN IP4
    t=0 0
    m=audio 49170 RTP/AVP 0
    a=rtpmap:0 PCMU/8000
    m=video 51372 RTP/AVP 31
    a=rtpmap:31 H261/90000
    m=video 53000 RTP/AVP 32
    a=rtpmap:32 MPV/90000
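To make the "one-character type, equals sign, value" structure concrete, here is a small sketch that splits an SDP string into type/value pairs using only Foundation. `ParseSDPLines` is a hypothetical helper written for illustration; it is not part of GoogleWebRTC, which parses SDP internally.

```objective-c
#import <Foundation/Foundation.h>

// Hypothetical helper (not part of GoogleWebRTC): splits an SDP string into
// @[type, value] pairs and skips blank or malformed lines.
static NSArray<NSArray<NSString *> *> *ParseSDPLines(NSString *sdp) {
    NSMutableArray<NSArray<NSString *> *> *result = [NSMutableArray array];
    NSArray<NSString *> *lines =
        [sdp componentsSeparatedByCharactersInSet:[NSCharacterSet newlineCharacterSet]];
    for (NSString *line in lines) {
        // A valid line looks like "<one character>=<value>"; the value may be empty.
        if (line.length < 2 || [line characterAtIndex:1] != '=') {
            continue;
        }
        NSString *type = [line substringToIndex:1];
        NSString *value = [line substringFromIndex:2];
        [result addObject:@[type, value]];
    }
    return result;
}
```

For the sample above, the line `m=audio 49170 RTP/AVP 0` would come back as the pair `m` and `audio 49170 RTP/AVP 0`.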

这是会用到的WebRTC主要的API类。

2.4、使用WebRTC代码实现

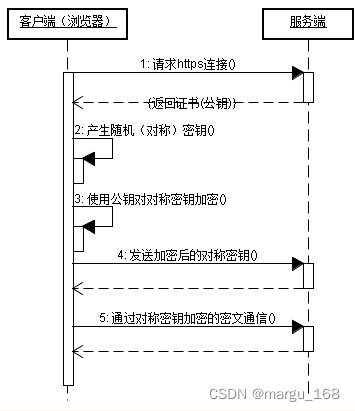

使用WebRTC实现P2P音视频流程如图

这里调用ossrs实现步骤如下

关键点设置

初始化RTCPeerConnectionFactory

    #pragma mark - Lazy

    - (RTCPeerConnectionFactory *)factory {
        if (!_factory) {
            RTCInitializeSSL();
            RTCDefaultVideoEncoderFactory *videoEncoderFactory = [[RTCDefaultVideoEncoderFactory alloc] init];
            RTCDefaultVideoDecoderFactory *videoDecoderFactory = [[RTCDefaultVideoDecoderFactory alloc] init];
            _factory = [[RTCPeerConnectionFactory alloc] initWithEncoderFactory:videoEncoderFactory decoderFactory:videoDecoderFactory];
        }
        return _factory;
    }

Create the RTCPeerConnection through the RTCPeerConnectionFactory

    self.peerConnection = [self.factory peerConnectionWithConfiguration:newConfig constraints:constraints delegate:nil];

Add the RTCAudioTrack and RTCVideoTrack to the peerConnection

    NSString *streamId = @"stream";

    // Audio
    RTCAudioTrack *audioTrack = [self createAudioTrack];
    self.localAudioTrack = audioTrack;

    RTCRtpTransceiverInit *audioTrackTransceiver = [[RTCRtpTransceiverInit alloc] init];
    audioTrackTransceiver.direction = RTCRtpTransceiverDirectionSendOnly;
    audioTrackTransceiver.streamIds = @[streamId];
    [self.peerConnection addTransceiverWithTrack:audioTrack init:audioTrackTransceiver];

    // Video
    RTCVideoTrack *videoTrack = [self createVideoTrack];
    self.localVideoTrack = videoTrack;

    RTCRtpTransceiverInit *videoTrackTransceiver = [[RTCRtpTransceiverInit alloc] init];
    videoTrackTransceiver.direction = RTCRtpTransceiverDirectionSendOnly;
    videoTrackTransceiver.streamIds = @[streamId];
    [self.peerConnection addTransceiverWithTrack:videoTrack init:videoTrackTransceiver];

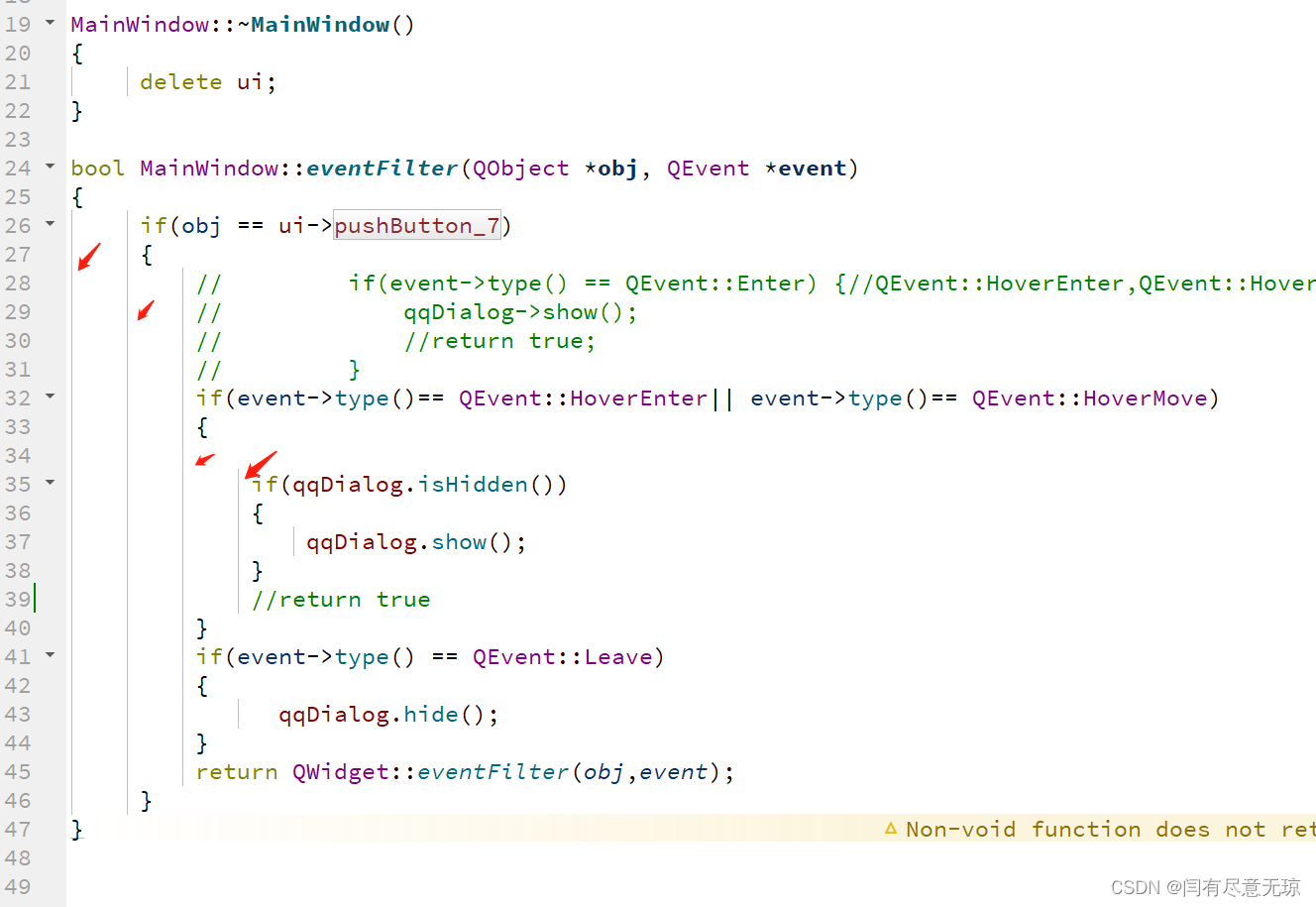

Set up the camera capturer (RTCCameraVideoCapturer) and the file video capturer

    - (RTCVideoTrack *)createVideoTrack {
        RTCVideoSource *videoSource = [self.factory videoSource];

        // In testing, sizes larger than 1920x1080 could not be played through SRS.
        [videoSource adaptOutputFormatToWidth:1920 height:1080 fps:20];

        // Use the file capturer on the simulator, the camera on a device.
        if (TARGET_IPHONE_SIMULATOR) {
            self.videoCapturer = [[RTCFileVideoCapturer alloc] initWithDelegate:videoSource];
        } else {
            self.videoCapturer = [[RTCCameraVideoCapturer alloc] initWithDelegate:videoSource];
        }

        RTCVideoTrack *videoTrack = [self.factory videoTrackWithSource:videoSource trackId:@"video0"];
        return videoTrack;
    }

Display the locally captured camera picture

The renderer passed to startCaptureLocalVideo is an RTCEAGLVideoView.

    - (void)startCaptureLocalVideo:(id<RTCVideoRenderer>)renderer {
        if (!self.isPublish) {
            return;
        }
        if (!renderer) {
            return;
        }
        if (!self.videoCapturer) {
            return;
        }

        RTCVideoCapturer *capturer = self.videoCapturer;
        if ([capturer isKindOfClass:[RTCCameraVideoCapturer class]]) {
            if (!([RTCCameraVideoCapturer captureDevices].count > 0)) {
                return;
            }
            AVCaptureDevice *frontCamera = RTCCameraVideoCapturer.captureDevices.firstObject;
            // if (frontCamera.position != AVCaptureDevicePositionFront) {
            //     return;
            // }

            RTCCameraVideoCapturer *cameraVideoCapturer = (RTCCameraVideoCapturer *)capturer;
            AVCaptureDeviceFormat *formatNilable;
            NSArray *supportDeviceFormats = [RTCCameraVideoCapturer supportedFormatsForDevice:frontCamera];
            NSLog(@"supportDeviceFormats:%@", supportDeviceFormats);
            // Guard the hard-coded index so a device with fewer than five formats does not crash.
            formatNilable = supportDeviceFormats.count > 4 ? supportDeviceFormats[4] : supportDeviceFormats.firstObject;

            // if (supportDeviceFormats && supportDeviceFormats.count > 0) {
            //     NSMutableArray *formats = [NSMutableArray arrayWithCapacity:0];
            //     for (AVCaptureDeviceFormat *format in supportDeviceFormats) {
            //         CMVideoDimensions videoVideoDimensions = CMVideoFormatDescriptionGetDimensions(format.formatDescription);
            //         float width = videoVideoDimensions.width;
            //         float height = videoVideoDimensions.height;
            //         // only use 16:9 format.
            //         if ((width / height) >= (16.0/9.0)) {
            //             [formats addObject:format];
            //         }
            //     }
            //
            //     if (formats.count > 0) {
            //         NSArray *sortedFormats = [formats sortedArrayUsingComparator:^NSComparisonResult(AVCaptureDeviceFormat *obj1, AVCaptureDeviceFormat *obj2) {
            //             CMVideoDimensions f1VD = CMVideoFormatDescriptionGetDimensions(obj1.formatDescription);
            //             CMVideoDimensions f2VD = CMVideoFormatDescriptionGetDimensions(obj2.formatDescription);
            //             float width1 = f1VD.width;
            //             float width2 = f2VD.width;
            //             float height2 = f2VD.height;
            //             // only use 16:9 format.
            //             if ((width2 / height2) >= (1.7)) {
            //                 return NSOrderedAscending;
            //             } else {
            //                 return NSOrderedDescending;
            //             }
            //         }];
            //
            //         if (sortedFormats && sortedFormats.count > 0) {
            //             formatNilable = sortedFormats.lastObject;
            //         }
            //     }
            // }

            if (!formatNilable) {
                return;
            }

            NSArray *formatArr = [RTCCameraVideoCapturer supportedFormatsForDevice:frontCamera];
            for (AVCaptureDeviceFormat *format in formatArr) {
                NSLog(@"AVCaptureDeviceFormat format:%@", format);
            }

            [cameraVideoCapturer startCaptureWithDevice:frontCamera format:formatNilable fps:20 completionHandler:^(NSError *error) {
                NSLog(@"startCaptureWithDevice error:%@", error);
            }];
        }

        if ([capturer isKindOfClass:[RTCFileVideoCapturer class]]) {
            RTCFileVideoCapturer *fileVideoCapturer = (RTCFileVideoCapturer *)capturer;
            [fileVideoCapturer startCapturingFromFileNamed:@"beautyPicture.mp4" onError:^(NSError * _Nonnull error) {
                NSLog(@"startCaptureLocalVideo startCapturingFromFileNamed error:%@", error);
            }];
        }

        [self.localVideoTrack addRenderer:renderer];
    }
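The capture code above picks a format by a fixed index into the supported-format list, which depends on the device. As a hedged alternative, the sketch below chooses the format whose dimensions are closest to a requested size. `ClosestFormat` is a hypothetical helper, not part of the original project or of GoogleWebRTC; it only relies on `CMVideoFormatDescriptionGetDimensions` from CoreMedia.

```objective-c
#import <AVFoundation/AVFoundation.h>
#import <CoreMedia/CoreMedia.h>
#include <limits.h>
#include <stdlib.h>

// Hypothetical helper: returns the format whose width and height are closest
// to the target size, or nil when the list is empty.
static AVCaptureDeviceFormat *ClosestFormat(NSArray<AVCaptureDeviceFormat *> *formats,
                                            int32_t targetWidth,
                                            int32_t targetHeight) {
    AVCaptureDeviceFormat *best = nil;
    int bestDiff = INT_MAX;
    for (AVCaptureDeviceFormat *format in formats) {
        CMVideoDimensions dim = CMVideoFormatDescriptionGetDimensions(format.formatDescription);
        // Sum of absolute differences as a simple distance measure.
        int diff = abs(targetWidth - dim.width) + abs(targetHeight - dim.height);
        if (diff < bestDiff) {
            best = format;
            bestDiff = diff;
        }
    }
    return best;
}
```

With this helper, the format could be obtained with something like `ClosestFormat([RTCCameraVideoCapturer supportedFormatsForDevice:frontCamera], 1280, 720)`; the 1280x720 target is only an example value.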

Create the offer (createOffer)

    - (void)offer:(void (^)(RTCSessionDescription *sdp))completion {
        if (self.isPublish) {
            self.mediaConstrains = self.publishMediaConstrains;
        } else {
            self.mediaConstrains = self.playMediaConstrains;
        }

        RTCMediaConstraints *constrains = [[RTCMediaConstraints alloc] initWithMandatoryConstraints:self.mediaConstrains optionalConstraints:self.optionalConstraints];
        NSLog(@"peerConnection:%@", self.peerConnection);

        __weak typeof(self) weakSelf = self;
        [weakSelf.peerConnection offerForConstraints:constrains completionHandler:^(RTCSessionDescription * _Nullable sdp, NSError * _Nullable error) {
            if (error) {
                NSLog(@"offer offerForConstraints error:%@", error);
            }
            if (sdp) {
                [weakSelf.peerConnection setLocalDescription:sdp completionHandler:^(NSError * _Nullable error) {
                    if (error) {
                        NSLog(@"offer setLocalDescription error:%@", error);
                    }
                    if (completion) {
                        completion(sdp);
                    }
                }];
            }
        }];
    }

Set the remote description (setRemoteDescription)

    - (void)setRemoteSdp:(RTCSessionDescription *)remoteSdp completion:(void (^)(NSError * _Nullable error))completion {
        [self.peerConnection setRemoteDescription:remoteSdp completionHandler:completion];
    }

The complete code is as follows.

WebRTCClient.h

    #import <Foundation/Foundation.h>
    #import <WebRTC/WebRTC.h>
    #import <UIKit/UIKit.h>

    @protocol WebRTCClientDelegate;

    @interface WebRTCClient : NSObject

    @property (nonatomic, weak) id<WebRTCClientDelegate> delegate;

    /** Peer connection factory */
    @property (nonatomic, strong) RTCPeerConnectionFactory *factory;

    /** Whether this client publishes (pushes) a stream */
    @property (nonatomic, assign) BOOL isPublish;

    /** Peer connection */
    @property (nonatomic, strong) RTCPeerConnection *peerConnection;

    /** RTCAudioSession */
    @property (nonatomic, strong) RTCAudioSession *rtcAudioSession;

    /** Dispatch queue for audio session changes */
    @property (nonatomic) dispatch_queue_t audioQueue;

    /** mediaConstrains */
    @property (nonatomic, strong) NSDictionary *mediaConstrains;

    /** publishMediaConstrains */
    @property (nonatomic, strong) NSDictionary *publishMediaConstrains;

    /** playMediaConstrains */
    @property (nonatomic, strong) NSDictionary *playMediaConstrains;

    /** optionalConstraints */
    @property (nonatomic, strong) NSDictionary *optionalConstraints;

    /** RTCVideoCapturer, the camera capturer */
    @property (nonatomic, strong) RTCVideoCapturer *videoCapturer;

    /** Local audio track */
    @property (nonatomic, strong) RTCAudioTrack *localAudioTrack;

    /** localVideoTrack */
    @property (nonatomic, strong) RTCVideoTrack *localVideoTrack;

    /** remoteVideoTrack */
    @property (nonatomic, strong) RTCVideoTrack *remoteVideoTrack;

    /** RTCVideoRenderer for the remote video */
    @property (nonatomic, weak) id<RTCVideoRenderer> remoteRenderView;

    /** localDataChannel */
    @property (nonatomic, strong) RTCDataChannel *localDataChannel;

    /** remoteDataChannel */
    @property (nonatomic, strong) RTCDataChannel *remoteDataChannel;

    - (instancetype)initWithPublish:(BOOL)isPublish;

    - (void)startCaptureLocalVideo:(id<RTCVideoRenderer>)renderer;

    - (void)answer:(void (^)(RTCSessionDescription *sdp))completionHandler;

    - (void)offer:(void (^)(RTCSessionDescription *sdp))completionHandler;

    #pragma mark - Hide or show video

    - (void)hidenVideo;

    - (void)showVideo;

    #pragma mark - Mute or unmute audio

    - (void)muteAudio;

    - (void)unmuteAudio;

    - (void)speakOff;

    - (void)speakOn;

    - (void)changeSDP2Server:(RTCSessionDescription *)sdp
                      urlStr:(NSString *)urlStr
                   streamUrl:(NSString *)streamUrl
                     closure:(void (^)(BOOL isServerRetSuc))closure;

    @end

    @protocol WebRTCClientDelegate <NSObject>

    - (void)webRTCClient:(WebRTCClient *)client didDiscoverLocalCandidate:(RTCIceCandidate *)candidate;
    - (void)webRTCClient:(WebRTCClient *)client didChangeConnectionState:(RTCIceConnectionState)state;
    - (void)webRTCClient:(WebRTCClient *)client didReceiveData:(NSData *)data;

    @end

WebRTCClient.m

    #import "WebRTCClient.h"
    #import "HttpClient.h"

    @interface WebRTCClient ()<RTCPeerConnectionDelegate, RTCDataChannelDelegate>

    @property (nonatomic, strong) HttpClient *httpClient;

    @end

    @implementation WebRTCClient

    - (instancetype)initWithPublish:(BOOL)isPublish {
        self = [super init];
        if (self) {
            self.isPublish = isPublish;
            self.httpClient = [[HttpClient alloc] init];

            RTCMediaConstraints *constraints = [[RTCMediaConstraints alloc] initWithMandatoryConstraints:nil optionalConstraints:self.optionalConstraints];
            RTCConfiguration *newConfig = [[RTCConfiguration alloc] init];
            newConfig.sdpSemantics = RTCSdpSemanticsUnifiedPlan;
            self.peerConnection = [self.factory peerConnectionWithConfiguration:newConfig constraints:constraints delegate:nil];

            [self createMediaSenders];
            [self createMediaReceivers];

            // srs not support data channel.
            // self.createDataChannel()

            [self configureAudioSession];
            self.peerConnection.delegate = self;
        }
        return self;
    }

    - (void)createMediaSenders {
        if (!self.isPublish) {
            return;
        }

        NSString *streamId = @"stream";

        // Audio
        RTCAudioTrack *audioTrack = [self createAudioTrack];
        self.localAudioTrack = audioTrack;

        RTCRtpTransceiverInit *audioTrackTransceiver = [[RTCRtpTransceiverInit alloc] init];
        audioTrackTransceiver.direction = RTCRtpTransceiverDirectionSendOnly;
        audioTrackTransceiver.streamIds = @[streamId];
        [self.peerConnection addTransceiverWithTrack:audioTrack init:audioTrackTransceiver];

        // Video
        RTCVideoTrack *videoTrack = [self createVideoTrack];
        self.localVideoTrack = videoTrack;

        RTCRtpTransceiverInit *videoTrackTransceiver = [[RTCRtpTransceiverInit alloc] init];
        videoTrackTransceiver.direction = RTCRtpTransceiverDirectionSendOnly;
        videoTrackTransceiver.streamIds = @[streamId];
        [self.peerConnection addTransceiverWithTrack:videoTrack init:videoTrackTransceiver];
    }

    - (void)createMediaReceivers {
        if (!self.isPublish) {
            return;
        }

        if (self.peerConnection.transceivers.count > 0) {
            RTCRtpTransceiver *transceiver = self.peerConnection.transceivers.firstObject;
            if (transceiver.mediaType == RTCRtpMediaTypeVideo) {
                RTCVideoTrack *track = (RTCVideoTrack *)transceiver.receiver.track;
                self.remoteVideoTrack = track;
            }
        }
    }

    - (void)configureAudioSession {
        [self.rtcAudioSession lockForConfiguration];
        @try {
            NSError *error;
            [self.rtcAudioSession setCategory:AVAudioSessionCategoryPlayAndRecord withOptions:AVAudioSessionCategoryOptionDefaultToSpeaker error:&error];

            NSError *modeError;
            [self.rtcAudioSession setMode:AVAudioSessionModeVoiceChat error:&modeError];
            NSLog(@"configureAudioSession error:%@, modeError:%@", error, modeError);
        } @catch (NSException *exception) {
            NSLog(@"configureAudioSession exception:%@", exception);
        }
        [self.rtcAudioSession unlockForConfiguration];
    }

    - (RTCAudioTrack *)createAudioTrack {
        /// enable google 3A algorithm.
        NSDictionary *mandatory = @{
            @"googEchoCancellation": kRTCMediaConstraintsValueTrue,
            @"googAutoGainControl": kRTCMediaConstraintsValueTrue,
            @"googNoiseSuppression": kRTCMediaConstraintsValueTrue,
        };
        RTCMediaConstraints *audioConstrains = [[RTCMediaConstraints alloc] initWithMandatoryConstraints:mandatory optionalConstraints:self.optionalConstraints];
        RTCAudioSource *audioSource = [self.factory audioSourceWithConstraints:audioConstrains];
        RTCAudioTrack *audioTrack = [self.factory audioTrackWithSource:audioSource trackId:@"audio0"];
        return audioTrack;

    }

    - (RTCVideoTrack *)createVideoTrack {
        RTCVideoSource *videoSource = [self.factory videoSource];

        // In testing, sizes larger than 1920x1080 could not be played through SRS.
        [videoSource adaptOutputFormatToWidth:1920 height:1080 fps:20];

        // Use the file capturer on the simulator, the camera on a device.
        if (TARGET_IPHONE_SIMULATOR) {
            self.videoCapturer = [[RTCFileVideoCapturer alloc] initWithDelegate:videoSource];
        } else {
            self.videoCapturer = [[RTCCameraVideoCapturer alloc] initWithDelegate:videoSource];
        }

        RTCVideoTrack *videoTrack = [self.factory videoTrackWithSource:videoSource trackId:@"video0"];
        return videoTrack;
    }

    - (void)offer:(void (^)(RTCSessionDescription *sdp))completion {
        if (self.isPublish) {
            self.mediaConstrains = self.publishMediaConstrains;
        } else {
            self.mediaConstrains = self.playMediaConstrains;
        }

        RTCMediaConstraints *constrains = [[RTCMediaConstraints alloc] initWithMandatoryConstraints:self.mediaConstrains optionalConstraints:self.optionalConstraints];
        NSLog(@"peerConnection:%@", self.peerConnection);

        __weak typeof(self) weakSelf = self;
        [weakSelf.peerConnection offerForConstraints:constrains completionHandler:^(RTCSessionDescription * _Nullable sdp, NSError * _Nullable error) {
            if (error) {
                NSLog(@"offer offerForConstraints error:%@", error);
            }
            if (sdp) {
                [weakSelf.peerConnection setLocalDescription:sdp completionHandler:^(NSError * _Nullable error) {
                    if (error) {
                        NSLog(@"offer setLocalDescription error:%@", error);
                    }
                    if (completion) {
                        completion(sdp);
                    }
                }];
            }
        }];
    }

    - (void)answer:(void (^)(RTCSessionDescription *sdp))completion {
        RTCMediaConstraints *constrains = [[RTCMediaConstraints alloc] initWithMandatoryConstraints:self.mediaConstrains optionalConstraints:self.optionalConstraints];

        __weak typeof(self) weakSelf = self;
        [weakSelf.peerConnection answerForConstraints:constrains completionHandler:^(RTCSessionDescription * _Nullable sdp, NSError * _Nullable error) {
            if (error) {
                NSLog(@"answer answerForConstraints error:%@", error);
            }
            if (sdp) {
                [weakSelf.peerConnection setLocalDescription:sdp completionHandler:^(NSError * _Nullable error) {
                    if (error) {
                        NSLog(@"answer setLocalDescription error:%@", error);
                    }
                    if (completion) {
                        completion(sdp);
                    }
                }];
            }
        }];
    }

    - (void)setRemoteSdp:(RTCSessionDescription *)remoteSdp completion:(void (^)(NSError * _Nullable error))completion {
        [self.peerConnection setRemoteDescription:remoteSdp completionHandler:completion];
    }

    - (void)setRemoteCandidate:(RTCIceCandidate *)remoteCandidate {
        [self.peerConnection addIceCandidate:remoteCandidate];
    }

    - (void)setMaxBitrate:(int)maxBitrate {
        NSMutableArray *videoSenders = [NSMutableArray arrayWithCapacity:0];
        for (RTCRtpSender *sender in self.peerConnection.senders) {
            if (sender.track && [kRTCMediaStreamTrackKindVideo isEqualToString:sender.track.kind]) {
                [videoSenders addObject:sender];
            }
        }

        if (videoSenders.count > 0) {
            RTCRtpSender *firstSender = [videoSenders firstObject];
            RTCRtpParameters *parameters = firstSender.parameters;
            NSNumber *maxBitrateBps = [NSNumber numberWithInt:maxBitrate];
            parameters.encodings.firstObject.maxBitrateBps = maxBitrateBps;
            // Write the modified parameters back so the sender applies them.
            firstSender.parameters = parameters;
        }
    }

    - (void)setMaxFramerate:(int)maxFramerate {
        NSMutableArray *videoSenders = [NSMutableArray arrayWithCapacity:0];
        for (RTCRtpSender *sender in self.peerConnection.senders) {
            if (sender.track && [kRTCMediaStreamTrackKindVideo isEqualToString:sender.track.kind]) {
                [videoSenders addObject:sender];
            }
        }

        if (videoSenders.count > 0) {
            RTCRtpSender *firstSender = [videoSenders firstObject];
            RTCRtpParameters *parameters = firstSender.parameters;
            NSNumber *maxFramerateNum = [NSNumber numberWithInt:maxFramerate];
            // Note from the author: this version does not yet have maxFramerate; update to the latest version.
            parameters.encodings.firstObject.maxFramerate = maxFramerateNum;
            // Write the modified parameters back so the sender applies them.
            firstSender.parameters = parameters;
        }

    }

    - (void)startCaptureLocalVideo:(id<RTCVideoRenderer>)renderer {
        if (!self.isPublish) {
            return;
        }
        if (!renderer) {
            return;
        }
        if (!self.videoCapturer) {
            return;
        }

        RTCVideoCapturer *capturer = self.videoCapturer;
        if ([capturer isKindOfClass:[RTCCameraVideoCapturer class]]) {
            if (!([RTCCameraVideoCapturer captureDevices].count > 0)) {
                return;
            }
            AVCaptureDevice *frontCamera = RTCCameraVideoCapturer.captureDevices.firstObject;
            // if (frontCamera.position != AVCaptureDevicePositionFront) {
            //     return;
            // }

            RTCCameraVideoCapturer *cameraVideoCapturer = (RTCCameraVideoCapturer *)capturer;
            AVCaptureDeviceFormat *formatNilable;
            NSArray *supportDeviceFormats = [RTCCameraVideoCapturer supportedFormatsForDevice:frontCamera];
            NSLog(@"supportDeviceFormats:%@", supportDeviceFormats);
            // Guard the hard-coded index so a device with fewer than five formats does not crash.
            formatNilable = supportDeviceFormats.count > 4 ? supportDeviceFormats[4] : supportDeviceFormats.firstObject;

            // if (supportDeviceFormats && supportDeviceFormats.count > 0) {
            //     NSMutableArray *formats = [NSMutableArray arrayWithCapacity:0];
            //     for (AVCaptureDeviceFormat *format in supportDeviceFormats) {
            //         CMVideoDimensions videoVideoDimensions = CMVideoFormatDescriptionGetDimensions(format.formatDescription);
            //         float width = videoVideoDimensions.width;
            //         float height = videoVideoDimensions.height;
            //         // only use 16:9 format.
            //         if ((width / height) >= (16.0/9.0)) {
            //             [formats addObject:format];
            //         }
            //     }
            //
            //     if (formats.count > 0) {
            //         NSArray *sortedFormats = [formats sortedArrayUsingComparator:^NSComparisonResult(AVCaptureDeviceFormat *obj1, AVCaptureDeviceFormat *obj2) {
            //             CMVideoDimensions f1VD = CMVideoFormatDescriptionGetDimensions(obj1.formatDescription);
            //             CMVideoDimensions f2VD = CMVideoFormatDescriptionGetDimensions(obj2.formatDescription);
            //             float width1 = f1VD.width;
            //             float width2 = f2VD.width;
            //             float height2 = f2VD.height;
            //             // only use 16:9 format.
            //             if ((width2 / height2) >= (1.7)) {
            //                 return NSOrderedAscending;
            //             } else {
            //                 return NSOrderedDescending;
            //             }
            //         }];
            //
            //         if (sortedFormats && sortedFormats.count > 0) {
            //             formatNilable = sortedFormats.lastObject;
            //         }
            //     }
            // }

            if (!formatNilable) {
                return;
            }

            NSArray *formatArr = [RTCCameraVideoCapturer supportedFormatsForDevice:frontCamera];
            for (AVCaptureDeviceFormat *format in formatArr) {
                NSLog(@"AVCaptureDeviceFormat format:%@", format);
            }

            [cameraVideoCapturer startCaptureWithDevice:frontCamera format:formatNilable fps:20 completionHandler:^(NSError *error) {
                NSLog(@"startCaptureWithDevice error:%@", error);
            }];
        }

        if ([capturer isKindOfClass:[RTCFileVideoCapturer class]]) {
            RTCFileVideoCapturer *fileVideoCapturer = (RTCFileVideoCapturer *)capturer;
            [fileVideoCapturer startCapturingFromFileNamed:@"beautyPicture.mp4" onError:^(NSError * _Nonnull error) {
                NSLog(@"startCaptureLocalVideo startCapturingFromFileNamed error:%@", error);
            }];
        }

        [self.localVideoTrack addRenderer:renderer];
    }

    - (void)renderRemoteVideo:(id<RTCVideoRenderer>)renderer {
        if (!self.isPublish) {
            return;
        }
        self.remoteRenderView = renderer;
    }

    - (RTCDataChannel *)createDataChannel {
        RTCDataChannelConfiguration *config = [[RTCDataChannelConfiguration alloc] init];
        RTCDataChannel *dataChannel = [self.peerConnection dataChannelForLabel:@"WebRTCData" configuration:config];
        if (!dataChannel) {
            return nil;
        }
        dataChannel.delegate = self;
        self.localDataChannel = dataChannel;
        return dataChannel;
    }

    - (void)sendData:(NSData *)data {
        RTCDataBuffer *buffer = [[RTCDataBuffer alloc] initWithData:data isBinary:YES];
        [self.remoteDataChannel sendData:buffer];
    }

    - (void)changeSDP2Server:(RTCSessionDescription *)sdp
                      urlStr:(NSString *)urlStr
                   streamUrl:(NSString *)streamUrl
                     closure:(void (^)(BOOL isServerRetSuc))closure {
        __weak typeof(self) weakSelf = self;
        [self.httpClient changeSDP2Server:sdp urlStr:urlStr streamUrl:streamUrl closure:^(NSDictionary *result) {
            if (result && [result isKindOfClass:[NSDictionary class]]) {
                // Renamed from sdp to avoid shadowing the method parameter.
                NSString *answerSdp = [result objectForKey:@"sdp"];
                if (answerSdp && [answerSdp isKindOfClass:[NSString class]] && answerSdp.length > 0) {
                    RTCSessionDescription *remoteSDP = [[RTCSessionDescription alloc] initWithType:RTCSdpTypeAnswer sdp:answerSdp];
                    [weakSelf setRemoteSdp:remoteSDP completion:^(NSError * _Nullable error) {
                        NSLog(@"changeSDP2Server setRemoteDescription error:%@", error);
                        // Report the outcome to the caller; the original never invoked the closure.
                        if (closure) {
                            closure(error == nil);
                        }
                    }];
                }
            }
        }];

    }

    #pragma mark - Hide or show video

    - (void)hidenVideo {
        [self setVideoEnabled:NO];
    }

    - (void)showVideo {
        [self setVideoEnabled:YES];
    }

    - (void)setVideoEnabled:(BOOL)isEnabled {
        [self setTrackEnabled:[RTCVideoTrack class] isEnabled:isEnabled];
    }

    - (void)setTrackEnabled:(Class)track isEnabled:(BOOL)isEnabled {
        for (RTCRtpTransceiver *transceiver in self.peerConnection.transceivers) {
            // Compare the sender's track, not the transceiver itself, against the track class.
            if ([transceiver.sender.track isKindOfClass:track]) {
                transceiver.sender.track.isEnabled = isEnabled;
            }
        }
    }

    #pragma mark - Mute or unmute audio

    - (void)muteAudio {
        [self setAudioEnabled:NO];
    }

    - (void)unmuteAudio {
        [self setAudioEnabled:YES];
    }

    - (void)speakOff {
        __weak typeof(self) weakSelf = self;
        dispatch_async(self.audioQueue, ^{
            [weakSelf.rtcAudioSession lockForConfiguration];
            @try {
                NSError *error;
                [weakSelf.rtcAudioSession setCategory:AVAudioSessionCategoryPlayAndRecord withOptions:AVAudioSessionCategoryOptionDefaultToSpeaker error:&error];

                NSError *ooapError;
                [weakSelf.rtcAudioSession overrideOutputAudioPort:AVAudioSessionPortOverrideNone error:&ooapError];
                NSLog(@"speakOff error:%@, ooapError:%@", error, ooapError);
            } @catch (NSException *exception) {
                NSLog(@"speakOff exception:%@", exception);
            }
            [weakSelf.rtcAudioSession unlockForConfiguration];
        });
    }

    - (void)speakOn {
        __weak typeof(self) weakSelf = self;
        dispatch_async(self.audioQueue, ^{
            [weakSelf.rtcAudioSession lockForConfiguration];
            @try {
                NSError *error;
                [weakSelf.rtcAudioSession setCategory:AVAudioSessionCategoryPlayAndRecord withOptions:AVAudioSessionCategoryOptionDefaultToSpeaker error:&error];

                NSError *ooapError;
                [weakSelf.rtcAudioSession overrideOutputAudioPort:AVAudioSessionPortOverrideSpeaker error:&ooapError];

                NSError *activeError;
                [weakSelf.rtcAudioSession setActive:YES error:&activeError];
                NSLog(@"speakOn error:%@, ooapError:%@, activeError:%@", error, ooapError, activeError);
            } @catch (NSException *exception) {
                NSLog(@"speakOn exception:%@", exception);
            }
            [weakSelf.rtcAudioSession unlockForConfiguration];
        });
    }

    - (void)setAudioEnabled:(BOOL)isEnabled {
        [self setTrackEnabled:[RTCAudioTrack class] isEnabled:isEnabled];

    }

    #pragma mark - RTCPeerConnectionDelegate

    /** Called when the SignalingState changed. */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didChangeSignalingState:(RTCSignalingState)stateChanged {
        NSLog(@"peerConnection didChangeSignalingState:%ld", (long)stateChanged);
    }

    /** Called when media is received on a new stream from remote peer. */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didAddStream:(RTCMediaStream *)stream {
        NSLog(@"peerConnection didAddStream");
        if (self.isPublish) {
            return;
        }

        NSArray *videoTracks = stream.videoTracks;
        if (videoTracks && videoTracks.count > 0) {
            RTCVideoTrack *track = videoTracks.firstObject;
            self.remoteVideoTrack = track;
        }

        if (self.remoteVideoTrack && self.remoteRenderView) {
            id<RTCVideoRenderer> remoteRenderView = self.remoteRenderView;
            RTCVideoTrack *remoteVideoTrack = self.remoteVideoTrack;
            [remoteVideoTrack addRenderer:remoteRenderView];
        }

        /**
         if let audioTrack = stream.audioTracks.first{
             print("audio track faund")
             audioTrack.source.volume = 8
         }
         */
    }

    /** Called when a remote peer closes a stream.
     *  This is not called when RTCSdpSemanticsUnifiedPlan is specified.
     */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didRemoveStream:(RTCMediaStream *)stream {
        NSLog(@"peerConnection didRemoveStream");
    }

    /** Called when negotiation is needed, for example ICE has restarted. */
    - (void)peerConnectionShouldNegotiate:(RTCPeerConnection *)peerConnection {
        NSLog(@"peerConnection peerConnectionShouldNegotiate");
    }

    /** Called any time the IceConnectionState changes. */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didChangeIceConnectionState:(RTCIceConnectionState)newState {
        NSLog(@"peerConnection didChangeIceConnectionState:%ld", (long)newState);
        if (self.delegate && [self.delegate respondsToSelector:@selector(webRTCClient:didChangeConnectionState:)]) {
            [self.delegate webRTCClient:self didChangeConnectionState:newState];
        }
    }

    /** Called any time the IceGatheringState changes. */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didChangeIceGatheringState:(RTCIceGatheringState)newState {
        NSLog(@"peerConnection didChangeIceGatheringState:%ld", (long)newState);
    }

    /** New ice candidate has been found. */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didGenerateIceCandidate:(RTCIceCandidate *)candidate {
        NSLog(@"peerConnection didGenerateIceCandidate:%@", candidate);
        if (self.delegate && [self.delegate respondsToSelector:@selector(webRTCClient:didDiscoverLocalCandidate:)]) {
            [self.delegate webRTCClient:self didDiscoverLocalCandidate:candidate];
        }
    }

    /** Called when a group of local Ice candidates have been removed. */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didRemoveIceCandidates:(NSArray<RTCIceCandidate *> *)candidates {
        NSLog(@"peerConnection didRemoveIceCandidates:%@", candidates);
    }

    /** New data channel has been opened. */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didOpenDataChannel:(RTCDataChannel *)dataChannel {
        NSLog(@"peerConnection didOpenDataChannel:%@", dataChannel);
        self.remoteDataChannel = dataChannel;
    }

    /** Called when signaling indicates a transceiver will be receiving media from
     *  the remote endpoint.
     *  This is only called with RTCSdpSemanticsUnifiedPlan specified.
     */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didStartReceivingOnTransceiver:(RTCRtpTransceiver *)transceiver {
        NSLog(@"peerConnection didStartReceivingOnTransceiver:%@", transceiver);
    }

    /** Called when a receiver and its track are created. */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didAddReceiver:(RTCRtpReceiver *)rtpReceiver streams:(NSArray<RTCMediaStream *> *)mediaStreams {
        NSLog(@"peerConnection didAddReceiver");
    }

    /** Called when the receiver and its track are removed. */
    - (void)peerConnection:(RTCPeerConnection *)peerConnection didRemoveReceiver:(RTCRtpReceiver *)rtpReceiver {
        NSLog(@"peerConnection didRemoveReceiver");
    }

    #pragma mark - RTCDataChannelDelegate

    /** The data channel state changed. */
    - (void)dataChannelDidChangeState:(RTCDataChannel *)dataChannel {
        NSLog(@"dataChannelDidChangeState:%@", dataChannel);
    }

    /** The data channel successfully received a data buffer. */
    - (void)dataChannel:(RTCDataChannel *)dataChannel didReceiveMessageWithBuffer:(RTCDataBuffer *)buffer {
        if (self.delegate && [self.delegate respondsToSelector:@selector(webRTCClient:didReceiveData:)]) {
            [self.delegate webRTCClient:self didReceiveData:buffer.data];
        }

    }

    #pragma mark - Lazy

    - (RTCPeerConnectionFactory *)factory {
        if (!_factory) {
            RTCInitializeSSL();
            RTCDefaultVideoEncoderFactory *videoEncoderFactory = [[RTCDefaultVideoEncoderFactory alloc] init];
            RTCDefaultVideoDecoderFactory *videoDecoderFactory = [[RTCDefaultVideoDecoderFactory alloc] init];

            for (RTCVideoCodecInfo *codec in videoEncoderFactory.supportedCodecs) {
                if (codec.parameters) {
                    NSString *profile_level_id = codec.parameters[@"profile-level-id"];
                    if (profile_level_id && [profile_level_id isEqualToString:@"42e01f"]) {
                        videoEncoderFactory.preferredCodec = codec;
                        break;
                    }
                }
            }
            _factory = [[RTCPeerConnectionFactory alloc] initWithEncoderFactory:videoEncoderFactory decoderFactory:videoDecoderFactory];
        }
        return _factory;
    }

    - (dispatch_queue_t)audioQueue {
        if (!_audioQueue) {
            _audioQueue = dispatch_queue_create("cn.ifour.webrtc", NULL);
        }
        return _audioQueue;
    }

    - (RTCAudioSession *)rtcAudioSession {
        if (!_rtcAudioSession) {
            _rtcAudioSession = [RTCAudioSession sharedInstance];
        }
        return _rtcAudioSession;
    }

    - (NSDictionary *)mediaConstrains {
        if (!_mediaConstrains) {
            _mediaConstrains = [[NSDictionary alloc] initWithObjectsAndKeys:
                                kRTCMediaConstraintsValueFalse, kRTCMediaConstraintsOfferToReceiveAudio,
                                kRTCMediaConstraintsValueFalse, kRTCMediaConstraintsOfferToReceiveVideo,
                                kRTCMediaConstraintsValueTrue, @"IceRestart",
                                nil];
        }
        return _mediaConstrains;
    }

    - (NSDictionary *)publishMediaConstrains {
        if (!_publishMediaConstrains) {
            _publishMediaConstrains = [[NSDictionary alloc] initWithObjectsAndKeys:
                                       kRTCMediaConstraintsValueFalse, kRTCMediaConstraintsOfferToReceiveAudio,
                                       kRTCMediaConstraintsValueFalse, kRTCMediaConstraintsOfferToReceiveVideo,
                                       kRTCMediaConstraintsValueTrue, @"IceRestart",
                                       nil];
        }
        return _publishMediaConstrains;
    }

    - (NSDictionary *)playMediaConstrains {
        if (!_playMediaConstrains) {
            _playMediaConstrains = [[NSDictionary alloc] initWithObjectsAndKeys:
                                    kRTCMediaConstraintsValueTrue, kRTCMediaConstraintsOfferToReceiveAudio,
                                    kRTCMediaConstraintsValueTrue, kRTCMediaConstraintsOfferToReceiveVideo,
                                    kRTCMediaConstraintsValueTrue, @"IceRestart",
                                    nil];
        }
        return _playMediaConstrains;
    }

    - (NSDictionary *)optionalConstraints {
        if (!_optionalConstraints) {
            _optionalConstraints = [[NSDictionary alloc] initWithObjectsAndKeys:
                                    kRTCMediaConstraintsValueTrue, @"DtlsSrtpKeyAgreement",
                                    nil];
        }
        return _optionalConstraints;
    }

    @end
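As a rough illustration of how the methods in this class fit together when publishing, the sketch below creates the offer and then hands it to changeSDP2Server so the answer returned by ossrs can be set as the remote description. `StartPublishing` and `kStreamUrl` are hypothetical names, and the stream URL value is an assumption that must match your own SRS deployment; only the publish API address is taken from this article.

```objective-c
#import "WebRTCClient.h"

// The publish API address used in this article.
static NSString *const kPublishApi = @"https://192.168.10.100:1990/rtc/v1/publish/";
// Assumption: an example stream URL; replace it with the one your SRS server expects.
static NSString *const kStreamUrl = @"webrtc://192.168.10.100:1990/live/livestream";

// Hypothetical helper: runs the offer/answer exchange for a publishing client.
static void StartPublishing(WebRTCClient *client) {
    [client offer:^(RTCSessionDescription *sdp) {
        // The local description is already set inside offer:, so only the
        // exchange with the server remains.
        [client changeSDP2Server:sdp
                          urlStr:kPublishApi
                       streamUrl:kStreamUrl
                         closure:^(BOOL isServerRetSuc) {
            NSLog(@"publish exchange finished, success:%d", isServerRetSuc);
        }];
    }];
}
```

A typical call site would be `StartPublishing([[WebRTCClient alloc] initWithPublish:YES])`, keeping a strong reference to the client for the lifetime of the call. Whether the closure fires depends on the changeSDP2Server implementation invoking it.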

3. Displaying the local video

Use RTCEAGLVideoView to display the local camera video:

    self.localRenderer = [[RTCEAGLVideoView alloc] initWithFrame:CGRectZero];
    // self.localRenderer.videoContentMode = UIViewContentModeScaleAspectFill;
    [self addSubview:self.localRenderer];
    [self.webRTCClient startCaptureLocalVideo:self.localRenderer];

The code is as follows.

PublishView.h

    #import <UIKit/UIKit.h>
    #import "WebRTCClient.h"

    @interface PublishView : UIView

    - (instancetype)initWithFrame:(CGRect)frame webRTCClient:(WebRTCClient *)webRTCClient;

    @end

PublishView.m

    #import "PublishView.h"

    @interface PublishView ()

    @property (nonatomic, strong) WebRTCClient *webRTCClient;
    @property (nonatomic, strong) RTCEAGLVideoView *localRenderer;

    @end

    @implementation PublishView

    - (instancetype)initWithFrame:(CGRect)frame webRTCClient:(WebRTCClient *)webRTCClient {
        self = [super initWithFrame:frame];
        if (self) {
            self.webRTCClient = webRTCClient;

            self.localRenderer = [[RTCEAGLVideoView alloc] initWithFrame:CGRectZero];
            // self.localRenderer.videoContentMode = UIViewContentModeScaleAspectFill;
            [self addSubview:self.localRenderer];

            [self.webRTCClient startCaptureLocalVideo:self.localRenderer];
        }
        return self;
    }

    - (void)layoutSubviews {
        [super layoutSubviews];
        self.localRenderer.frame = self.bounds;
        NSLog(@"self.localRenderer frame:%@", NSStringFromCGRect(self.localRenderer.frame));
    }

    @end

4. The ossrs RTC publish service

Here I call rtc/v1/publish/ to obtain the remote SDP from ossrs. The request URL is: https://192.168.10.100:1990/rtc/v1/publish/

使用NSURLSessionDataTask实现http请求,请求代码如下

HttpClient.h

#import <Foundation/Foundation.h>
#import <WebRTC/WebRTC.h>

@interface HttpClient : NSObject <NSURLSessionDelegate>

- (void)changeSDP2Server:(RTCSessionDescription *)sdp
                  urlStr:(NSString *)urlStr
               streamUrl:(NSString *)streamUrl
                 closure:(void (^)(NSDictionary *result))closure;

@end

HttpClient.m

#import "HttpClient.h"
#import "IPUtil.h"

@interface HttpClient ()

@property (nonatomic, strong) NSURLSession *session;

@end

@implementation HttpClient

- (instancetype)init {
    self = [super init];
    if (self) {
        self.session = [NSURLSession sessionWithConfiguration:[NSURLSessionConfiguration defaultSessionConfiguration]
                                                     delegate:self
                                                delegateQueue:[NSOperationQueue mainQueue]];
    }
    return self;
}

- (void)changeSDP2Server:(RTCSessionDescription *)sdp
                  urlStr:(NSString *)urlStr
               streamUrl:(NSString *)streamUrl
                 closure:(void (^)(NSDictionary *result))closure {
    // Build the URL
    NSURL *url = [NSURL URLWithString:urlStr];
    // Create the mutable request
    NSMutableURLRequest *mutableRequest = [[NSMutableURLRequest alloc] initWithURL:url];
    // Set the request method
    [mutableRequest setHTTPMethod:@"POST"];
    // Collect the fields to upload in a dictionary
    NSMutableDictionary *dict = [[NSMutableDictionary alloc] init];
    [dict setValue:urlStr forKey:@"api"];
    [dict setValue:[self createTid] forKey:@"tid"];
    [dict setValue:streamUrl forKey:@"streamurl"];
    [dict setValue:sdp.sdp forKey:@"sdp"];
    [dict setValue:[IPUtil localWiFiIPAddress] forKey:@"clientip"];
    // Serialize the dictionary to JSON data
    NSData *bodyData = [NSJSONSerialization dataWithJSONObject:dict options:0 error:nil];
    // Set the request body
    [mutableRequest setHTTPBody:bodyData];
    // Set the request headers
    [mutableRequest addValue:@"application/json" forHTTPHeaderField:@"Content-Type"];
    [mutableRequest addValue:@"application/json" forHTTPHeaderField:@"Accept"];
    // Create the task
    NSURLSessionDataTask *dataTask = [self.session dataTaskWithRequest:mutableRequest completionHandler:^(NSData * _Nullable data, NSURLResponse * _Nullable response, NSError * _Nullable error) {
        if (error == nil) {
            NSLog(@"Request succeeded: %@", data);
            // NSUTF8StringEncoding is the NSStringEncoding constant;
            // kCFStringEncodingUTF8 is a CFStringEncoding and must not be passed here.
            NSString *dataString = [[NSString alloc] initWithData:data encoding:NSUTF8StringEncoding];
            NSLog(@"Request succeeded, dataString: %@", dataString);
            NSDictionary *result = [NSJSONSerialization JSONObjectWithData:data options:NSJSONReadingAllowFragments error:nil];
            NSLog(@"NSURLSessionDataTask result:%@", result);
            if (closure) {
                closure(result);
            }
        } else {
            NSLog(@"Network request failed: %@", error);
        }
    }];
    // Start the task
    [dataTask resume];
}

- (NSString *)createTid {
    NSDate *date = [[NSDate alloc] init];
    int timeInterval = (int)([date timeIntervalSince1970]);
    int random = (int)(arc4random());
    NSString *str = [NSString stringWithFormat:@"%d*%d", timeInterval, random];
    if (str.length > 7) {
        NSString *tid = [str substringToIndex:7];
        return tid;
    }
    return @"";
}

#pragma mark - session delegate

// Accepts whatever certificate the server presents. This lets the client talk to
// a LAN test server with a self-signed certificate; it performs no validation.
- (void)URLSession:(NSURLSession *)session
didReceiveChallenge:(NSURLAuthenticationChallenge *)challenge
 completionHandler:(void (^)(NSURLSessionAuthChallengeDisposition, NSURLCredential * _Nullable))completionHandler {
    NSURLSessionAuthChallengeDisposition disposition = NSURLSessionAuthChallengePerformDefaultHandling;
    NSURLCredential *credential = nil;
    if ([challenge.protectionSpace.authenticationMethod isEqualToString:NSURLAuthenticationMethodServerTrust]) {
        credential = [NSURLCredential credentialForTrust:challenge.protectionSpace.serverTrust];
        if (credential) {
            disposition = NSURLSessionAuthChallengeUseCredential;
        }
    }
    if (completionHandler) {
        completionHandler(disposition, credential);
    }
}

@end
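The closure above receives the decoded JSON dictionary returned by rtc/v1/publish/. Below is a minimal sketch of extracting the remote SDP from that response body. It assumes the response carries a numeric `code` field (0 on success) and an `sdp` string; check this against the response your ossrs version actually returns. The helper names and the error domain are hypothetical and not part of the original project.

```objc
#import <Foundation/Foundation.h>

// Hypothetical error domain used only by this sketch.
static NSString *const kPublishResponseErrorDomain = @"PublishResponseErrorDomain";

// Builds an NSError in the sketch's own domain.
static NSError *PublishResponseError(NSInteger code, NSString *message) {
    return [NSError errorWithDomain:kPublishResponseErrorDomain
                               code:code
                           userInfo:@{NSLocalizedDescriptionKey: message}];
}

// Returns the "sdp" string from a publish response, or nil and sets *error.
// Assumption: a successful response looks like {"code": 0, "sdp": "..."}.
static NSString *RemoteSDPFromPublishResponse(NSData *data, NSError **error) {
    if (data.length == 0) {
        if (error) { *error = PublishResponseError(-1, @"Empty response body"); }
        return nil;
    }
    id json = [NSJSONSerialization JSONObjectWithData:data options:0 error:error];
    if (json == nil) {
        return nil; // NSJSONSerialization has already filled in *error
    }
    if (![json isKindOfClass:[NSDictionary class]]) {
        if (error) { *error = PublishResponseError(-2, @"Response is not a JSON object"); }
        return nil;
    }
    NSDictionary *dict = (NSDictionary *)json;
    id code = dict[@"code"];
    id sdp = dict[@"sdp"];
    if (![code isKindOfClass:[NSNumber class]] || [code integerValue] != 0) {
        if (error) { *error = PublishResponseError(-3, @"Server reported a non-zero code"); }
        return nil;
    }
    if (![sdp isKindOfClass:[NSString class]] || [sdp length] == 0) {
        if (error) { *error = PublishResponseError(-4, @"Response has no sdp field"); }
        return nil;
    }
    return (NSString *)sdp;
}
```

The returned string would then be wrapped in an answer-type RTCSessionDescription and passed to setRemoteDescription on the peer connection.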

The request uses a small class for obtaining the device IP address. The code is as follows.

IPUtil.h

#import <Foundation/Foundation.h>

@interface IPUtil : NSObject

+ (NSString *)localWiFiIPAddress;

@end

IPUtil.m

#import "IPUtil.h"
#include <arpa/inet.h>
#include <netdb.h>
#include <net/if.h>
#include <ifaddrs.h>
#import <dlfcn.h>
#import <SystemConfiguration/SystemConfiguration.h>

@implementation IPUtil

+ (NSString *)localWiFiIPAddress {
    NSString *address = nil;
    struct ifaddrs *addrs = NULL;
    if (getifaddrs(&addrs) == 0) {
        for (const struct ifaddrs *cursor = addrs; cursor != NULL; cursor = cursor->ifa_next) {
            // Skip entries without an address, non-IPv4 entries, and the loopback interface.
            if (cursor->ifa_addr == NULL ||
                cursor->ifa_addr->sa_family != AF_INET ||
                (cursor->ifa_flags & IFF_LOOPBACK) != 0) {
                continue;
            }
            NSString *name = [NSString stringWithUTF8String:cursor->ifa_name];
            if ([name isEqualToString:@"en0"]) { // Wi-Fi adapter
                address = [NSString stringWithUTF8String:inet_ntoa(((struct sockaddr_in *)cursor->ifa_addr)->sin_addr)];
                break;
            }
        }
        // Always release the list, including when an address was found.
        freeifaddrs(addrs);
    }
    return address;
}

@end
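The lookup above only considers the en0 interface. When it returns nil (for example when Wi-Fi is off), it can help to see which interfaces do have an IPv4 address. The following is a small debugging sketch, not part of the original project; the function name `IPv4AddressesByInterface` is hypothetical. It uses inet_ntop, which writes into a caller-supplied buffer instead of the shared static buffer used by inet_ntoa.

```objc
#import <Foundation/Foundation.h>
#include <arpa/inet.h>
#include <ifaddrs.h>
#include <net/if.h>
#include <sys/socket.h>
#include <netinet/in.h>

// Hypothetical helper: maps interface name -> IPv4 address for every
// non-loopback interface that currently has an IPv4 address.
// If an interface has several IPv4 addresses, the last one listed wins.
static NSDictionary<NSString *, NSString *> *IPv4AddressesByInterface(void) {
    NSMutableDictionary<NSString *, NSString *> *result = [NSMutableDictionary dictionary];
    struct ifaddrs *addrs = NULL;
    if (getifaddrs(&addrs) != 0) {
        return [result copy]; // empty dictionary on failure
    }
    for (const struct ifaddrs *cursor = addrs; cursor != NULL; cursor = cursor->ifa_next) {
        if (cursor->ifa_addr == NULL ||
            cursor->ifa_addr->sa_family != AF_INET ||
            (cursor->ifa_flags & IFF_LOOPBACK) != 0) {
            continue;
        }
        char buffer[INET_ADDRSTRLEN] = {0};
        const struct sockaddr_in *sin = (const struct sockaddr_in *)cursor->ifa_addr;
        if (inet_ntop(AF_INET, &sin->sin_addr, buffer, sizeof(buffer)) == NULL) {
            continue;
        }
        NSString *name = [NSString stringWithUTF8String:cursor->ifa_name];
        NSString *address = [NSString stringWithUTF8String:buffer];
        if (name != nil && address != nil) {
            result[name] = address;
        }
    }
    freeifaddrs(addrs);
    return [result copy];
}
```

Logging the returned dictionary once at startup shows whether en0 is present at all on the device under test.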

5. Calling the ossrs RTC publish service

To publish through the ossrs RTC service, the client first calls createOffer locally, then applies the offer with setLocalDescription, and finally calls rtc/v1/publish/.

The code is as follows.

- (void)publishBtnClick {
    __weak typeof(self) weakSelf = self;
    [self.webRTCClient offer:^(RTCSessionDescription *sdp) {
        [weakSelf.webRTCClient changeSDP2Server:sdp
                                         urlStr:@"https://192.168.10.100:1990/rtc/v1/publish/"
                                      streamUrl:@"webrtc://192.168.10.100:1990/live/livestream"
                                        closure:^(BOOL isServerRetSuc) {
            NSLog(@"isServerRetSuc:%@", (isServerRetSuc ? @"YES" : @"NO"));
        }];
    }];
}
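The API address and the stream address passed to changeSDP2Server share the same host and port, and both are hard-coded in the handler. As a minimal sketch, they can be assembled from their parts with NSURLComponents so that only the host and port need to change when the server moves. The function names `PublishAPIURL` and `StreamURL` are hypothetical; the paths and schemes are the ones used in this article.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: builds the publish API address,
// e.g. https://192.168.10.100:1990/rtc/v1/publish/
static NSString *PublishAPIURL(NSString *host, NSInteger port) {
    NSURLComponents *components = [[NSURLComponents alloc] init];
    components.scheme = @"https";
    components.host = host;
    components.port = @(port);
    components.path = @"/rtc/v1/publish/";
    return components.string;
}

// Hypothetical helper: builds the stream address,
// e.g. webrtc://192.168.10.100:1990/live/livestream
static NSString *StreamURL(NSString *host, NSInteger port, NSString *app, NSString *stream) {
    NSURLComponents *components = [[NSURLComponents alloc] init];
    components.scheme = @"webrtc";
    components.host = host;
    components.port = @(port);
    components.path = [NSString stringWithFormat:@"/%@/%@", app, stream];
    return components.string;
}
```

With these, the handler would pass PublishAPIURL(@"192.168.10.100", 1990) as urlStr and StreamURL(@"192.168.10.100", 1990, @"live", @"livestream") as streamUrl.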

The view controller's UI and the publish action are shown below.

PublishViewController.h

#import <UIKit/UIKit.h>
#import "PublishView.h"

@interface PublishViewController : UIViewController

@end

PublishViewController.m

#import "PublishViewController.h"

@interface PublishViewController () <WebRTCClientDelegate>

@property (nonatomic, strong) WebRTCClient *webRTCClient;
@property (nonatomic, strong) PublishView *publishView;
@property (nonatomic, strong) UIButton *publishBtn;

@end

@implementation PublishViewController

- (void)viewDidLoad {
    [super viewDidLoad];
    // Do any additional setup after loading the view.
    self.view.backgroundColor = [UIColor whiteColor];

    self.publishView = [[PublishView alloc] initWithFrame:CGRectZero webRTCClient:self.webRTCClient];
    [self.view addSubview:self.publishView];
    self.publishView.backgroundColor = [UIColor lightGrayColor];
    self.publishView.frame = self.view.bounds;

    CGFloat screenWidth = CGRectGetWidth(self.view.bounds);
    CGFloat screenHeight = CGRectGetHeight(self.view.bounds);
    self.publishBtn = [UIButton buttonWithType:UIButtonTypeCustom];
    self.publishBtn.frame = CGRectMake(50, screenHeight - 160, screenWidth - 2*50, 46);
    self.publishBtn.layer.cornerRadius = 4;
    self.publishBtn.backgroundColor = [UIColor grayColor];
    [self.publishBtn setTitle:@"publish" forState:UIControlStateNormal];
    [self.publishBtn addTarget:self action:@selector(publishBtnClick) forControlEvents:UIControlEventTouchUpInside];
    [self.view addSubview:self.publishBtn];

    self.webRTCClient.delegate = self;
}

- (void)publishBtnClick {
    __weak typeof(self) weakSelf = self;
    [self.webRTCClient offer:^(RTCSessionDescription *sdp) {
        [weakSelf.webRTCClient changeSDP2Server:sdp
                                         urlStr:@"https://192.168.10.100:1990/rtc/v1/publish/"
                                      streamUrl:@"webrtc://192.168.10.100:1990/live/livestream"
                                        closure:^(BOOL isServerRetSuc) {
            NSLog(@"isServerRetSuc:%@", (isServerRetSuc ? @"YES" : @"NO"));
        }];
    }];
}

#pragma mark - WebRTCClientDelegate

- (void)webRTCClient:(WebRTCClient *)client didDiscoverLocalCandidate:(RTCIceCandidate *)candidate {
    NSLog(@"webRTCClient didDiscoverLocalCandidate");
}

- (void)webRTCClient:(WebRTCClient *)client didChangeConnectionState:(RTCIceConnectionState)state {
    NSLog(@"webRTCClient didChangeConnectionState");
    /**
     RTCIceConnectionStateNew,
     RTCIceConnectionStateChecking,
     RTCIceConnectionStateConnected,
     RTCIceConnectionStateCompleted,
     RTCIceConnectionStateFailed,
     RTCIceConnectionStateDisconnected,
     RTCIceConnectionStateClosed,
     RTCIceConnectionStateCount,
     */
    UIColor *textColor = [UIColor blackColor];
    BOOL openSpeak = NO;
    switch (state) {
        case RTCIceConnectionStateCompleted:
        case RTCIceConnectionStateConnected:
            textColor = [UIColor greenColor];
            openSpeak = YES;
            break;
        case RTCIceConnectionStateDisconnected:
            textColor = [UIColor orangeColor];
            break;
        case RTCIceConnectionStateFailed:
        case RTCIceConnectionStateClosed:
            textColor = [UIColor redColor];
            break;
        case RTCIceConnectionStateNew:
        case RTCIceConnectionStateChecking:
        case RTCIceConnectionStateCount:
            textColor = [UIColor blackColor];
            break;
        default:
            break;
    }

    // UI updates must happen on the main queue.
    dispatch_async(dispatch_get_main_queue(), ^{
        // Cast to long so the %ld format specifier matches on every architecture.
        NSString *text = [NSString stringWithFormat:@"%ld", (long)state];
        [self.publishBtn setTitle:text forState:UIControlStateNormal];
        [self.publishBtn setTitleColor:textColor forState:UIControlStateNormal];
        if (openSpeak) {
            [self.webRTCClient speakOn];
        }
    });
}

- (void)webRTCClient:(WebRTCClient *)client didReceiveData:(NSData *)data {
    NSLog(@"webRTCClient didReceiveData");
}

#pragma mark - Lazy

- (WebRTCClient *)webRTCClient {
    if (!_webRTCClient) {
        _webRTCClient = [[WebRTCClient alloc] initWithPublish:YES];
    }
    return _webRTCClient;
}

@end
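In the delegate callback above, the button title is set to the raw numeric connection state, which is hard to read while debugging. The sketch below maps that number to a name. It assumes the integer values follow the order listed in the comment inside didChangeConnectionState, starting at 0 for RTCIceConnectionStateNew; in a real project, switching on the RTCIceConnectionState constants directly is safer than relying on their numeric values. The function name is hypothetical.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: turns the numeric ICE connection state into a label.
// Assumption: values are 0-based and ordered as in the comment in
// didChangeConnectionState (New, Checking, Connected, Completed, Failed,
// Disconnected, Closed).
static NSString *NameForIceConnectionStateValue(NSInteger value) {
    NSArray<NSString *> *names = @[
        @"New", @"Checking", @"Connected", @"Completed",
        @"Failed", @"Disconnected", @"Closed"
    ];
    if (value < 0 || value >= (NSInteger)names.count) {
        return [NSString stringWithFormat:@"Unknown(%ld)", (long)value];
    }
    return names[(NSUInteger)value];
}
```

The callback could then set the title with NameForIceConnectionStateValue(state) in place of the formatted number.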

Tapping the button starts RTC publishing. The result is shown below.

6. Publishing a video file with WebRTC

WebRTC also provides RTCFileVideoCapturer for publishing a video file:

if ([capturer isKindOfClass:[RTCFileVideoCapturer class]]) {
    RTCFileVideoCapturer *fileVideoCapturer = (RTCFileVideoCapturer *)capturer;
    [fileVideoCapturer startCapturingFromFileNamed:@"beautyPicture.mp4" onError:^(NSError * _Nonnull error) {
        NSLog(@"startCaptureLocalVideo startCapturingFromFileNamed error:%@", error);
    }];
}
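Because startCapturingFromFileNamed: takes only a file name, a missing resource shows up late, in the onError block. A minimal sketch of checking for the file up front follows. It assumes the capturer looks the file up in the app's main bundle and that the name has the plain "name.extension" form; the helper name `BundlePathForVideoFile` is hypothetical.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper: returns the path of a "name.extension" resource in the
// given bundle, or nil when the name is malformed or the file is not bundled.
static NSString *BundlePathForVideoFile(NSString *fileName, NSBundle *bundle) {
    NSString *extension = fileName.pathExtension;
    NSString *baseName = fileName.stringByDeletingPathExtension;
    if (extension.length == 0 || baseName.length == 0) {
        return nil; // no extension, or nothing before the dot
    }
    return [bundle pathForResource:baseName ofType:extension];
}
```

Calling BundlePathForVideoFile(@"beautyPicture.mp4", [NSBundle mainBundle]) before starting the capturer makes it possible to log a clear message when the video was not added to the target's resources.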

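The result of publishing the local video file is shown below.

This completes the iOS client for WebRTC audio and video calls using the ossrs video call service.

7. Summary

This article covered WebRTC audio and video calls on iOS using the ossrs video call service. There is a lot of material here and some descriptions may be imprecise; please bear with me. Original article: https://blog.csdn.net/gloryFlow/article/details/132262724

Study notes: a little progress every day.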

相关文章:

WebRTC音视频通话-实现iOS端调用ossrs视频通话服务

WebRTC音视频通话-实现iOS端调用ossrs视频通话服务 之前搭建ossrs服务,可以查看:https://blog.csdn.net/gloryFlow/article/details/132257196 这里iOS端使用GoogleWebRTC联调ossrs实现视频通话功能。 一、iOS端调用ossrs视频通话效果图 iOS端端效果图…...

uniapp的UI框架组件库——uView

在写uniapp项目时候,官方所推荐的样式库并不能满足日常的需求,也不可能自己去写相应的样式,费时又费力,所以我们一般会去使用第三方的组件库UI,就像vue里我们所熟悉的elementUI组件库一样的道理,在uniapp中…...

由于找不到msvcp140.dll无法继续执行代码是什么原因

使用计算机过程中,有时会遇到一些错误提示,其中之一就是关于msvcp140.dll文件丢失或损坏的错误。msvcp140.dll是Windows系统中非常重要的文件,是Microsoft Visual C Redistributable中动态链接库的文件,如果缺失或损坏,…...

kafka生产者幂等与事务

目录 前言: 幂等 事务 总结: 参考资料 前言: Kafka 消息交付可靠性保障以及精确处理一次语义的实现。 所谓的消息交付可靠性保障,是指 Kafka 对 Producer 和 Consumer 要处理的消息提供什么样的承诺。常见的承诺有以下三…...

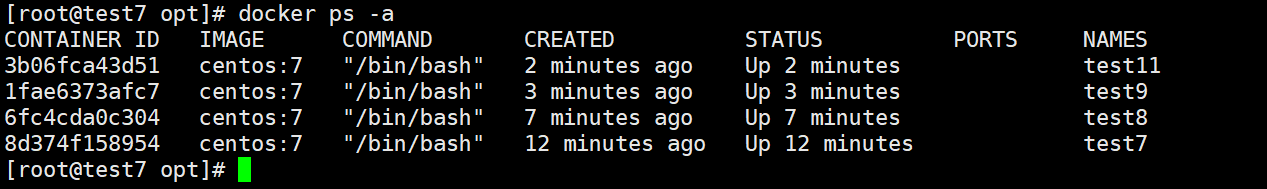

Docker容器:docker基础概述、安装、网络及资源控制

文章目录 一.docker容器概述1.什么是容器2. docker与虚拟机的区别2.1 docker虚拟化产品有哪些及其对比2.2 Docker与虚拟机的区别 3.Docker容器的使用场景4.Docker容器的优点5.Docker 的底层运行原理6.namespace的六项隔离7.Docker核心概念 二.Docker安装 及管理1.安装 Docker1.…...

实验篇——亚细胞定位

实验篇——亚细胞定位 文章目录 前言一、亚细胞定位的在线网站1. UniProt2. WoLFPSORT3. BUSCA4. TargetP-2.0 二、代码实现1. 基于UniProt(不会)2. 基于WoLFPSORT后续(已完善,有关代码放置于[python爬虫学习(一&#…...

【日常积累】HTTP和HTTPS的区别

背景 在运维面试中,经常会遇到面试官提问http和https的区别,今天咱们先来简单了解一下。 超文本传输协议HTTP被用于在Web浏览器和网站服务器之间传递信息,HTTP协议以明文方式发送内容,不提供任何方式的数据加密,如果…...

Qt creator之对齐参考线——新增可视化缩进功能

Qt creator随着官方越来越重视,更新频率也在不断加快,今天无意中发现qt creator新版有了对齐参考线,也称可视化缩进Visualize Indent,默认为启用状态。 下图为旧版Qt Creator显示设置栏: 下图为新版本Qt Creator显示设…...

Go语言之依赖管理

go module go module是Go1.11版本之后官方推出的版本管理工具,并且从Go1.13版本开始,go module将是Go语言默认的依赖管理工具。 GO111MODULE 要启用go module支持首先要设置环境变量GO111MODULE 通过它可以开启或关闭模块支持,它有三个可选…...

【定时任务处理中的分页问题】

最近要做一个定时任务处理的需求,在分页处理上。发现了大家容易遇到的一些"坑",特此分析记录一下。 场景 现在想象一下这个场景,你有一个定时处理任务,需要查询数据库任务表中的所有待处理任务,然后进行处理…...

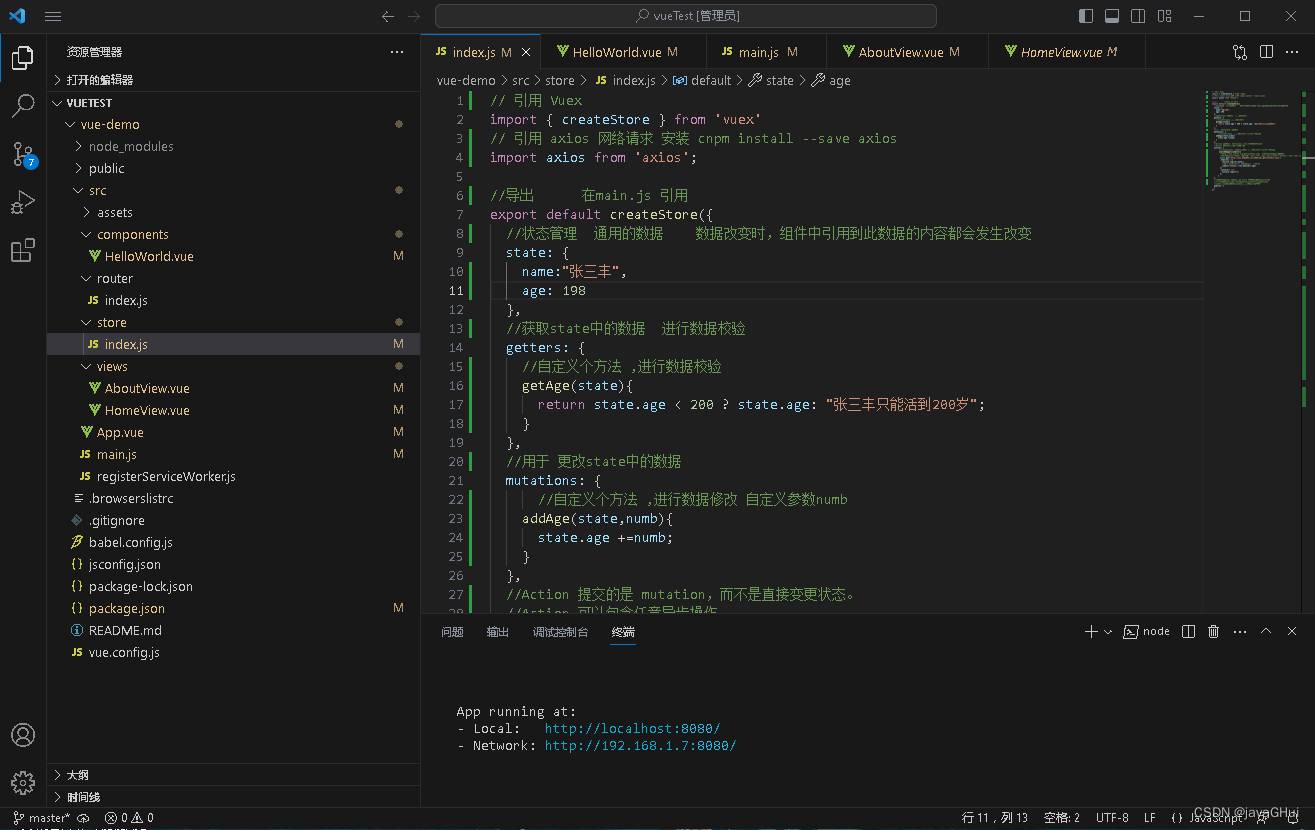

Vue3 Vuex状态管理多组件传递数据简单应用

去官网学习→安装 | Vuex cd 项目 安装 Vuex: npm install --save vuex 或着 创建项目时勾选Vuex vue create vue-demo ? Please pick a preset: Manually select features ? Check the features needed for your project: (Press <space> to se…...

Beats:安装及配置 Metricbeat (一)- 8.x

在我之前的文章: Beats:Beats 入门教程 (一)Beats:Beats 入门教程 (二) 我详细描述了如何在 Elastic Stack 7.x 安装及配置 Beats。在那里的安装,它通常不带有安全及 Elasticsearc…...

openCV使用c#操作摄像头

效果如下: 1.创建一个winform的窗体项目(框架.NET Framework 4.7.2) 2.Nuget引入opencv的c#程序包(版本最好和我一致) 3.后台代码 using System; using System.Collections.Generic; using System.ComponentModel;…...

Centos 防火墙命令

查看防火墙状态 systemctl status firewalld.service 或者 firewall-cmd --state 开启防火墙 单次开启防火墙 systemctl start firewalld.service 开机自启动防火墙 systemctl enable firewalld.service 重启防火墙 systemctl restart firewalld.service 防火墙设置开…...

【第二讲---初识SLAM】

SLAM简介 视觉SLAM,主要指的是利用相机完成建图和定位问题。如果传感器是激光,那么就称为激光SLAM。 定位(明白自身状态(即位置))建图(了解外在环境)。 视觉SLAM中使用的相机与常见…...

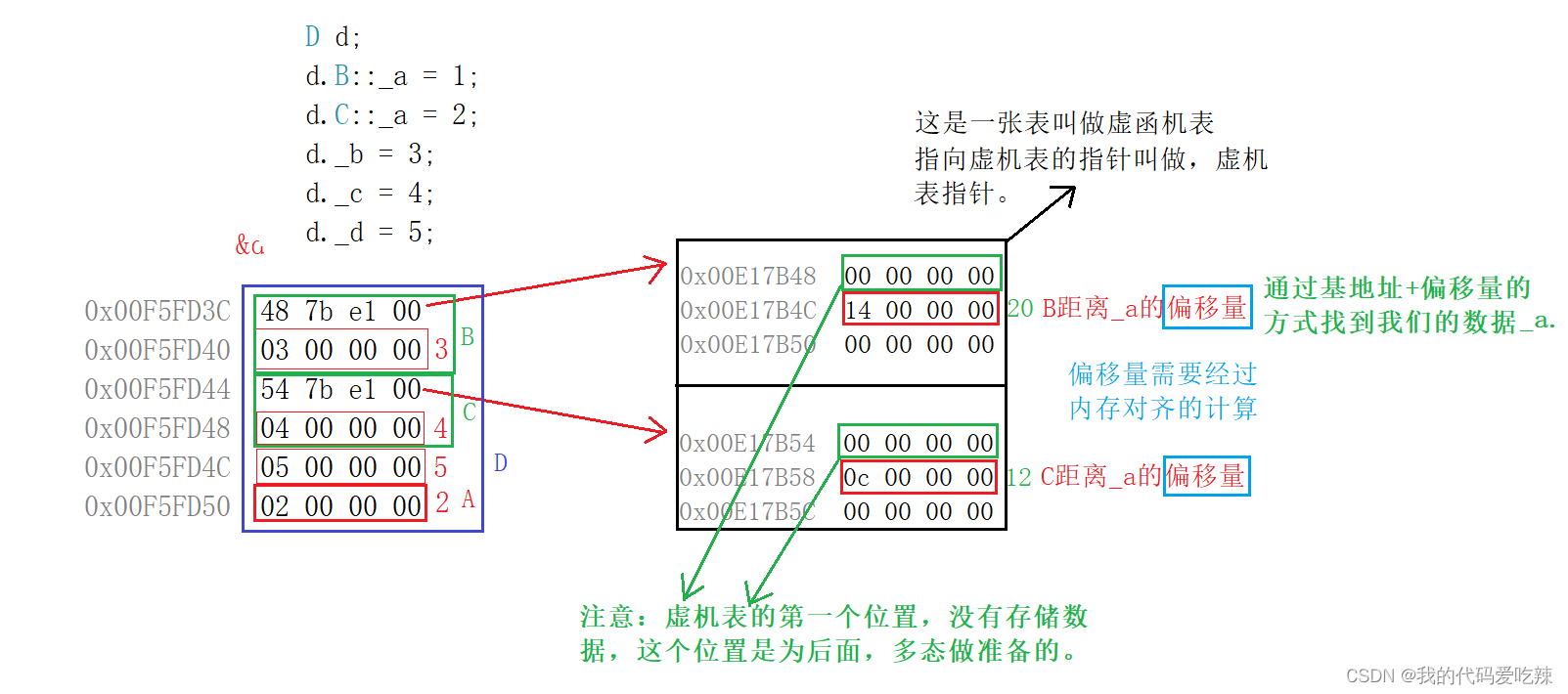

C++ 面向对象三大特性——继承

✅<1>主页:我的代码爱吃辣 📃<2>知识讲解:C 继承 ☂️<3>开发环境:Visual Studio 2022 💬<4>前言:面向对象三大特性的,封装,继承,多态ÿ…...

LC-相同的树

LC-相同的树 链接:https://leetcode.cn/problems/same-tree/solutions/363636/xiang-tong-de-shu-by-leetcode-solution/ 描述:给你两棵二叉树的根节点 p 和 q ,编写一个函数来检验这两棵树是否相同。 如果两个树在结构上相同,并…...

RocketMQ部署 Linux方式和Docker方式

一、Linux部署 准备一台Linux机器,部署单master rocketmq节点 系统ip角色模式CENTOS10.4.7.126Nameserver,brokerMaster 1. 配置JDK rocketmq运行需要依赖jdk,安装步骤略。 2. 下载和配置 从官网下载安装包 https://rocketmq.apache.org/zh/downlo…...

css内容达到最底部但滚动条没有滚动到底部

也是犯了一个傻狗一样的错误 ,滚动条样式是直接复制的蓝湖的代码,有个高度,然后就出现了这样的bug 看了好久一直以为是布局或者overflow的问题,最后发现是因为我给这个滚动条加了个高度,我也是傻狗一样的,…...

机器学习深度学习——transformer(机器翻译的再实现)

👨🎓作者简介:一位即将上大四,正专攻机器学习的保研er 🌌上期文章:机器学习&&深度学习——自注意力和位置编码(数学推导代码实现) 📚订阅专栏:机器…...

智慧医疗能源事业线深度画像分析(上)

引言 医疗行业作为现代社会的关键基础设施,其能源消耗与环境影响正日益受到关注。随着全球"双碳"目标的推进和可持续发展理念的深入,智慧医疗能源事业线应运而生,致力于通过创新技术与管理方案,重构医疗领域的能源使用模式。这一事业线融合了能源管理、可持续发…...

简易版抽奖活动的设计技术方案

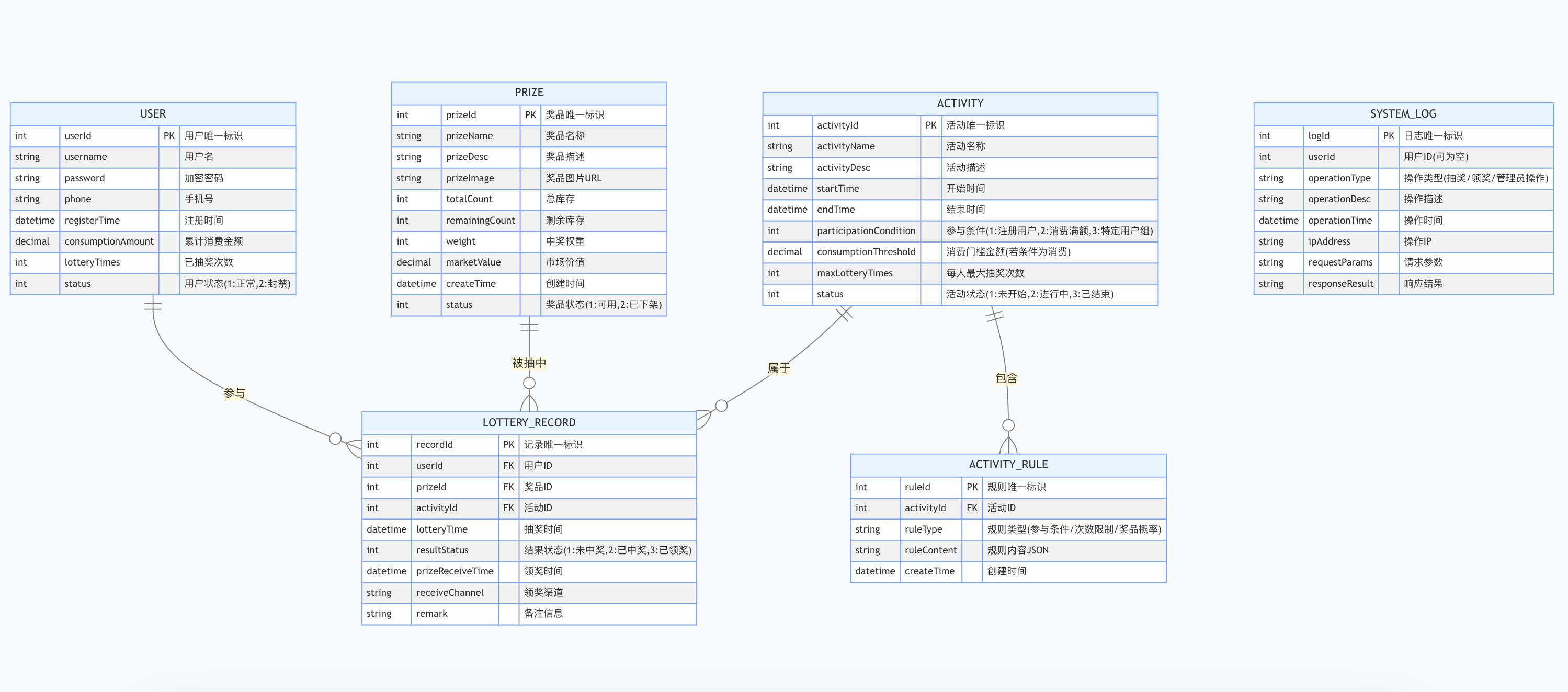

1.前言 本技术方案旨在设计一套完整且可靠的抽奖活动逻辑,确保抽奖活动能够公平、公正、公开地进行,同时满足高并发访问、数据安全存储与高效处理等需求,为用户提供流畅的抽奖体验,助力业务顺利开展。本方案将涵盖抽奖活动的整体架构设计、核心流程逻辑、关键功能实现以及…...

智慧工地云平台源码,基于微服务架构+Java+Spring Cloud +UniApp +MySql

智慧工地管理云平台系统,智慧工地全套源码,java版智慧工地源码,支持PC端、大屏端、移动端。 智慧工地聚焦建筑行业的市场需求,提供“平台网络终端”的整体解决方案,提供劳务管理、视频管理、智能监测、绿色施工、安全管…...

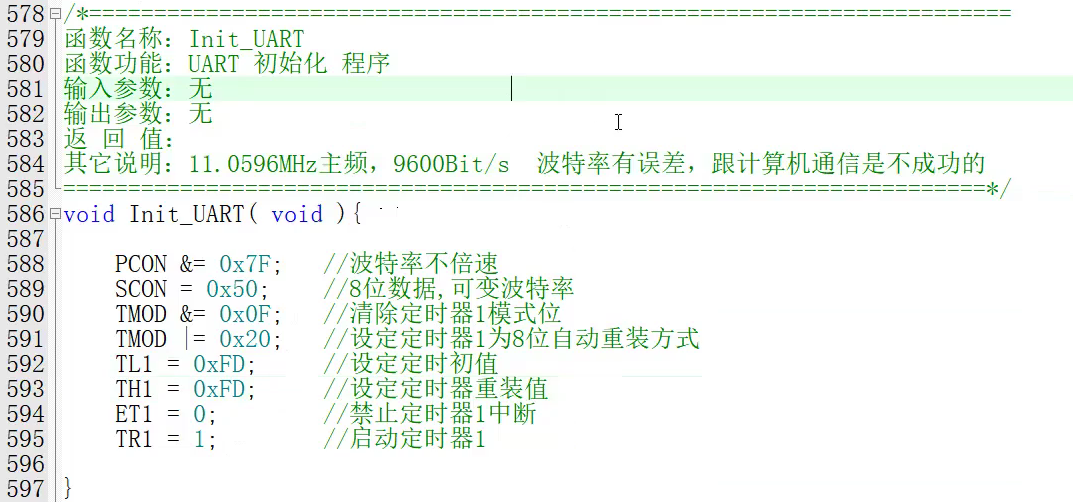

【单片机期末】单片机系统设计

主要内容:系统状态机,系统时基,系统需求分析,系统构建,系统状态流图 一、题目要求 二、绘制系统状态流图 题目:根据上述描述绘制系统状态流图,注明状态转移条件及方向。 三、利用定时器产生时…...

中关于正整数输入的校验规则)

Element Plus 表单(el-form)中关于正整数输入的校验规则

目录 1 单个正整数输入1.1 模板1.2 校验规则 2 两个正整数输入(联动)2.1 模板2.2 校验规则2.3 CSS 1 单个正整数输入 1.1 模板 <el-formref"formRef":model"formData":rules"formRules"label-width"150px"…...

深入浅出Diffusion模型:从原理到实践的全方位教程

I. 引言:生成式AI的黎明 – Diffusion模型是什么? 近年来,生成式人工智能(Generative AI)领域取得了爆炸性的进展,模型能够根据简单的文本提示创作出逼真的图像、连贯的文本,乃至更多令人惊叹的…...

6️⃣Go 语言中的哈希、加密与序列化:通往区块链世界的钥匙

Go 语言中的哈希、加密与序列化:通往区块链世界的钥匙 一、前言:离区块链还有多远? 区块链听起来可能遥不可及,似乎是只有密码学专家和资深工程师才能涉足的领域。但事实上,构建一个区块链的核心并不复杂,尤其当你已经掌握了一门系统编程语言,比如 Go。 要真正理解区…...

【QT控件】显示类控件

目录 一、Label 二、LCD Number 三、ProgressBar 四、Calendar Widget QT专栏:QT_uyeonashi的博客-CSDN博客 一、Label QLabel 可以用来显示文本和图片. 核心属性如下 代码示例: 显示不同格式的文本 1) 在界面上创建三个 QLabel 尺寸放大一些. objectName 分别…...

——Oracle for Linux物理DG环境搭建(2))

Oracle实用参考(13)——Oracle for Linux物理DG环境搭建(2)

13.2. Oracle for Linux物理DG环境搭建 Oracle 数据库的DataGuard技术方案,业界也称为DG,其在数据库高可用、容灾及负载分离等方面,都有着非常广泛的应用,对此,前面相关章节已做过较为详尽的讲解,此处不再赘述。 需要说明的是, DG方案又分为物理DG和逻辑DG,两者的搭建…...

轻量安全的密码管理工具Vaultwarden

一、Vaultwarden概述 Vaultwarden主要作用是提供一个自托管的密码管理器服务。它是Bitwarden密码管理器的第三方轻量版,由国外开发者在Bitwarden的基础上,采用Rust语言重写而成。 (一)Vaultwarden镜像的作用及特点 轻量级与高性…...