YOLO v5、v7、v8 模型优化

YOLO v5、v7、v8 模型优化

- 魔改YOLO

- yaml 文件解读

- 模型选择

- 在线做数据标注

- YOLO算法改进

- YOLOv5

- yolo.py

- yolov5.yaml

- 更换骨干网络之 SwinTransformer

- 更换骨干网络之 EfficientNet

- 优化上采样方式:轻量化算子CARAFE 替换 传统(最近邻 / 双线性 / 双立方 / 三线性 / 转置卷积)

- 优化空间金字塔池化:SPPF / SimSPPF / ASPP / RFB / SPPCSPC 替换 SPP

- 优化训练策略:SIoU / EIoU / WIoU / Focal_xIoU / MPDIoU 替换 IoU

- 优化激活函数:SiLU、Hardswish、MemoryEfficientMish、FReLU、AconC、MetaAconC 替换 Mish函数

- 优化损失函数:多种类Loss, PolyLoss / VarifocalLoss / GFL / QualityFLoss / FL

- 优化损失函数:改进用于微小目标检测的 Normalized Gaussian Wasserstein Distance

- 引入 GSConv

- 引入特征融合网络 BiFPN:提高检测精度和效率

- 引入 YOLOv6 颈部 BiFusion Neck

- 引入注意力机制:在 C3 模块添加添加注意力机制

- 添加【SE】【CBAM】【 ECA 】【CA】

- 添加【SimAM】【CoTAttention】【SKAttention】【DoubleAttention】

- 添加【EffectiveSE】【GlobalContext】【GatherExcite】【MHSA】

- 添加【Triplet】【SpatialGroupEnhance】【NAM】【S2】

- 引入小目标检测:用于低分辨率图像和小物体的新 CNN 模块SPD-Conv

- 引入轻量化卷积 替换 传统卷积

- 引入独立注意力 FPN + PAN 结构 替换 传统卷积

- 引入 YOLOX 解耦头

- 引入 YOLOv8 的 C2f 模块

- 引入 RepVGG 重参数化模块

- 引入密集连接模块

- 模型剪枝

- 知识蒸馏

- 热力图可视化

- YOLOv7

- 更换骨干网络之 SwinTransformer

- 更换骨干网络之 EfficientNet

- 优化上采样方式:轻量化算子CARAFE 替换 传统(最近邻 / 双线性 / 双立方 / 三线性 / 转置卷积)

- 优化空间金字塔池化:SPPF / SimSPPF / ASPP / RFB / SPPCSPC / SPPFCSPC 替换 SPP

- 优化训练策略:SIoU / EIoU / WIoU / Focal_xIoU / MPDIoU 替换 IoU

- 优化激活函数:SiLU、Hardswish、MemoryEfficientMish、FReLU、AconC、MetaAconC 替换 Mish函数

- 优化标签分配策略:基于TOOD标签分配策略改进

- 优化损失函数:SIoU等结合FocalLoss应用:组成Focal-EIoU|Focal-SIoU|Focal-CIoU|Focal-GIoU、DIoU

- 优化损失函数:Wise-IoU

- 优化特征集成方法:目标检测的渐近特征金字塔网络AsymptoticFPN

- 引入 BiFPN 特征融合网络:提高检测精度和效率

- 引入 YOLOv6 颈部 BiFusion Neck

- 引入小目标检测:用于低分辨率图像和小物体的新 CNN 模块SPD-Conv

- 引入轻量化卷积 替换 传统卷积

- 引入 YOLOX 解耦头

- 引入 YOLOv8 的 C2f 模块

- 引入 RepVGG 重参数化模块

- 引入密集连接模块

- 引入用于小目标检测的归一化高斯 Wasserstein Distance Loss

- 引入Generalized Focal Loss

- 模型剪枝

- 知识蒸馏

- 热力图可视化

- YOLOv8

- 更换主干网络之 VanillaNet

- 更换主干网络之 FasterNet

- 更换骨干网络之 SwinTransformer

- 更换骨干网络之 CReToNeXt

- 更换主干之 MAE

- 更换主干之 QARepNeXt

- 更换主干之 RepLKNet

- 引入注意力机制:在 C2F 模块添加添加注意力机制

- 添加【SE】 【CBAM】【 ECA 】【CA 】

- 添加【SimAM】 【CoTAttention】【SKAttention】【DoubleAttention】

- 添加【EffectiveSE】【GlobalContext】【GatherExcite】【MHSA】

- 添加【Triplet】【SpatialGroupEnhance】【NAM】【S2】

- 添加【ParNet】【CrissCross】【GAM】【ParallelPolarized】【Sequential】

- 引入 RepVGG 重参数化模块

- 引入 SPD-Conv 替换 Conv

- 引入跨空间学习的高效多尺度注意力 Efficient Multi-Scale Attention

- 引入选择性注意力 LSK 模块

- 引入空间通道重组卷积

- 引入轻量级通用上采样算子CARAFE

- 引入全维动态卷积

- 引入 BiFPN 结构

- 引入 Slim-neck

- 引入中心化特征金字塔 EVC 模块

- 引入渐进特征金字塔网络 AFPN 结构

- 引入大目标检测头、小目标检测头

- 引入谷歌 Lion 优化器

- 引入小目标检测结构:CBiF、BiFB

- 优化FPN结构

- 优化损失函数:FocalLoss结合变种IoU套装

- 优化损失函数:Wise-IoU

- 优化损失函数:遮挡损失函数 Repulsion Loss

- 优化卷积:PWConv

- 优化 EfficientRep 结构:结合硬件感知神经网络设计的 Repvgg 的 ConvNet 网络结构

- 优化特征集成方法:目标检测的渐近特征金字塔网络AsymptoticFPN

- 优化检测方法:EfficiCLNMS

魔改YOLO

yaml 文件解读

比如我们用 V8 做一个分类任务,那我们需要看 V8分类模型 的 .yaml 文件。

yolov8-cls.yaml(ultralytics/models/v8/yolov8-cls.yaml)

# Parameters

nc: 1000 # 数据集有多少类别

scales: # 调整YOLO模型的深度、宽度和通道数,通过选择不同的规模,可以根据具体的需求来调整模型的大小和性能,从而实现更好的检测结果。# 网络规模:[深度、宽度和通道数]n: [0.33, 0.25, 1024] # n: 较小的规模s: [0.33, 0.50, 1024] # s: 小规模m: [0.67, 0.75, 1024] # m: 中等规模l: [1.00, 1.00, 1024] # l: 大规模x: [1.00, 1.25, 1024] # x: 最大规模# YOLOv8.0n backbone

backbone:# [ 从哪层获取输入(-1是上一层,[-1,6]是从上一层和第6层), 模块重复次数, 模块名字(Conv是卷积层), args]- [-1, 1, Conv, [64, 3, 2]] # 0-P1/2 第 0 层:P1是第1特征层,缩小为输入图像的1/2,64代表输出通道数,3代表卷积核大小k,2代表stride步长- [-1, 1, Conv, [128, 3, 2]] # 1-P2/4 第 1 层:P2是第2特征层,缩小为输入图像的1/4,128代表输出通道数,3代表卷积核大小k,2代表stride步长- [-1, 3, C2f, [128, True]] 第 2 层:本层是C2f模块,3代表本层重复3次。128代表输出通道数,True表示Bottleneck有shortcut。- [-1, 1, Conv, [256, 3, 2]] # 3-P3/8 P3是第3特征层,缩小为输入图像的1/8- [-1, 6, C2f, [256, True]]- [-1, 1, Conv, [512, 3, 2]] # 5-P4/16 P4是第4特征层,缩小为输入图像的1/16- [-1, 6, C2f, [512, True]]- [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32 P5是第5特征层,缩小为输入图像的1/32- [-1, 3, C2f, [1024, True]]# YOLOv8.0n head

head:- [-1, 1, Classify, [nc]] # Classifyyaml 文件分为:

- 参数部分:nc(数据集类别数量)、

- 网络结构部分:from(从哪层获取输入), repeats(模块重复次数), module(模块名字), args

- 头部部分

模型选择

在线做数据标注

由于 Labelimg 和 Labelme 安装起来比较麻烦,我们使用在线标注工具make sense:https://www.makesense.ai

准备类别数据集,每添加一个类别文件夹:

在添加标签:

把图中类别标注出来:

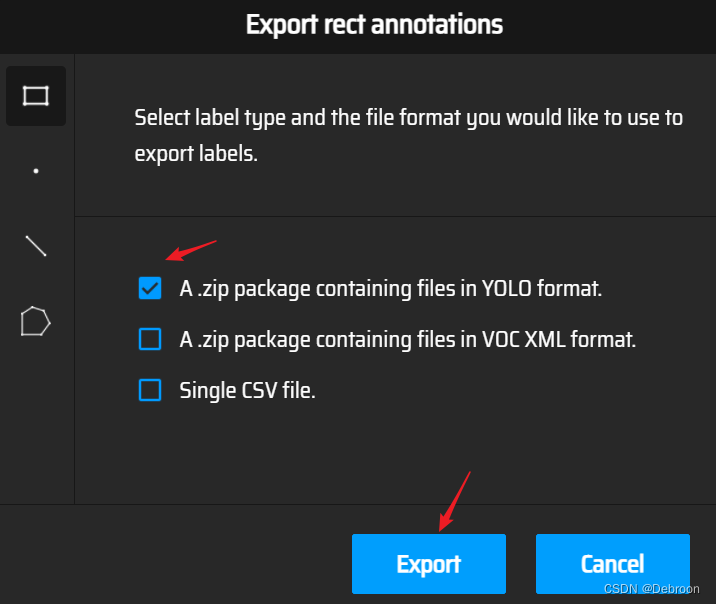

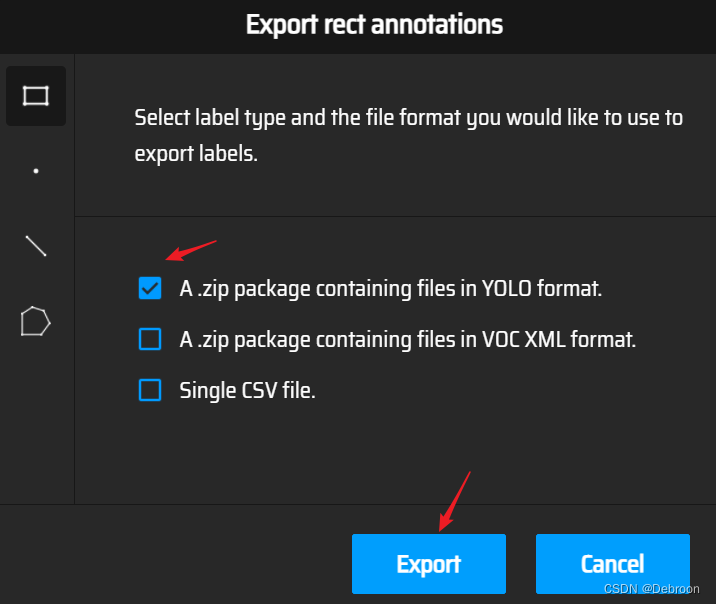

点选 Actions -> Export Annotations 导出标注:

数据标注文件:

0 0.329611 0.329853 0.339493 0.259214

0 0.698680 0.656634 0.339493 0.266585

0 0.770694 0.244472 0.318917 0.194103

0 0.813131 0.104423 0.073300 0.063882

类别标签 目标中心点坐标x 目标中心点坐标y 宽度 高度

类别标签:此处的序号是按照Make Sense中的标签"顺序"排列的,0 是第一个类别

YOLO算法改进

YOLO算法改进,无非六方面:

- 更快更强的网络架构

- 更有效的特征集成方法

- 更准确的检测方法

- 更精确的损失函数

- 更有效的标签分配方法

- 更有效的训练方法

YOLOv5

yolo.py

ultralytics/yolo5/models/yolo.py:

import argparse

import contextlib

import os

import platform

import sys

from copy import deepcopy

from pathlib import PathFILE = Path(__file__).resolve()

ROOT = FILE.parents[1] # YOLOv5 root directory

if str(ROOT) not in sys.path:sys.path.append(str(ROOT)) # add ROOT to PATH

if platform.system() != 'Windows':ROOT = Path(os.path.relpath(ROOT, Path.cwd())) # relativefrom models.common import * # noqa

from models.experimental import * # noqa

from utils.autoanchor import check_anchor_order

from utils.general import LOGGER, check_version, check_yaml, make_divisible, print_args

from utils.plots import feature_visualization

from utils.torch_utils import (fuse_conv_and_bn, initialize_weights, model_info, profile, scale_img, select_device,time_sync)try:import thop # for FLOPs computation

except ImportError:thop = Noneclass Detect(nn.Module):# YOLOv5 Detect head for detection modelsstride = None # strides computed during builddynamic = False # force grid reconstructionexport = False # export modedef __init__(self, nc=80, anchors=(), ch=(), inplace=True): # detection layersuper().__init__()self.nc = nc # number of classesself.no = nc + 5 # number of outputs per anchorself.nl = len(anchors) # number of detection layersself.na = len(anchors[0]) // 2 # number of anchorsself.grid = [torch.empty(0) for _ in range(self.nl)] # init gridself.anchor_grid = [torch.empty(0) for _ in range(self.nl)] # init anchor gridself.register_buffer('anchors', torch.tensor(anchors).float().view(self.nl, -1, 2)) # shape(nl,na,2)self.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch) # output convself.inplace = inplace # use inplace ops (e.g. slice assignment)def forward(self, x):z = [] # inference outputfor i in range(self.nl):x[i] = self.m[i](x[i]) # convbs, _, ny, nx = x[i].shape # x(bs,255,20,20) to x(bs,3,20,20,85)x[i] = x[i].view(bs, self.na, self.no, ny, nx).permute(0, 1, 3, 4, 2).contiguous()if not self.training: # inferenceif self.dynamic or self.grid[i].shape[2:4] != x[i].shape[2:4]:self.grid[i], self.anchor_grid[i] = self._make_grid(nx, ny, i)if isinstance(self, Segment): # (boxes + masks)xy, wh, conf, mask = x[i].split((2, 2, self.nc + 1, self.no - self.nc - 5), 4)xy = (xy.sigmoid() * 2 + self.grid[i]) * self.stride[i] # xywh = (wh.sigmoid() * 2) ** 2 * self.anchor_grid[i] # why = torch.cat((xy, wh, conf.sigmoid(), mask), 4)else: # Detect (boxes only)xy, wh, conf = x[i].sigmoid().split((2, 2, self.nc + 1), 4)xy = (xy * 2 + self.grid[i]) * self.stride[i] # xywh = (wh * 2) ** 2 * self.anchor_grid[i] # why = torch.cat((xy, wh, conf), 4)z.append(y.view(bs, self.na * nx * ny, self.no))return x if self.training else (torch.cat(z, 1), ) if self.export else (torch.cat(z, 1), x)def _make_grid(self, nx=20, ny=20, i=0, torch_1_10=check_version(torch.__version__, '1.10.0')):d = self.anchors[i].devicet = self.anchors[i].dtypeshape = 1, self.na, ny, nx, 2 # grid shapey, x = torch.arange(ny, device=d, dtype=t), torch.arange(nx, device=d, dtype=t)yv, xv = torch.meshgrid(y, x, indexing='ij') if torch_1_10 else torch.meshgrid(y, x) # torch>=0.7 compatibilitygrid = torch.stack((xv, yv), 2).expand(shape) - 0.5 # add grid offset, i.e. y = 2.0 * x - 0.5anchor_grid = (self.anchors[i] * self.stride[i]).view((1, self.na, 1, 1, 2)).expand(shape)return grid, anchor_gridclass Segment(Detect):# YOLOv5 Segment head for segmentation modelsdef __init__(self, nc=80, anchors=(), nm=32, npr=256, ch=(), inplace=True):super().__init__(nc, anchors, ch, inplace)self.nm = nm # number of masksself.npr = npr # number of protosself.no = 5 + nc + self.nm # number of outputs per anchorself.m = nn.ModuleList(nn.Conv2d(x, self.no * self.na, 1) for x in ch) # output convself.proto = Proto(ch[0], self.npr, self.nm) # protosself.detect = Detect.forwarddef forward(self, x):p = self.proto(x[0])x = self.detect(self, x)return (x, p) if self.training else (x[0], p) if self.export else (x[0], p, x[1])class BaseModel(nn.Module):# YOLOv5 base modeldef forward(self, x, profile=False, visualize=False):return self._forward_once(x, profile, visualize) # single-scale inference, traindef _forward_once(self, x, profile=False, visualize=False):y, dt = [], [] # outputsfor m in self.model:if m.f != -1: # if not from previous layerx = y[m.f] if isinstance(m.f, int) else [x if j == -1 else y[j] for j in m.f] # from earlier layersif profile:self._profile_one_layer(m, x, dt)x = m(x) # runy.append(x if m.i in self.save else None) # save outputif visualize:feature_visualization(x, m.type, m.i, save_dir=visualize)return xdef _profile_one_layer(self, m, x, dt):c = m == self.model[-1] # is final layer, copy input as inplace fixo = thop.profile(m, inputs=(x.copy() if c else x, ), verbose=False)[0] / 1E9 * 2 if thop else 0 # FLOPst = time_sync()for _ in range(10):m(x.copy() if c else x)dt.append((time_sync() - t) * 100)if m == self.model[0]:LOGGER.info(f"{'time (ms)':>10s} {'GFLOPs':>10s} {'params':>10s} module")LOGGER.info(f'{dt[-1]:10.2f} {o:10.2f} {m.np:10.0f} {m.type}')if c:LOGGER.info(f"{sum(dt):10.2f} {'-':>10s} {'-':>10s} Total")def fuse(self): # fuse model Conv2d() + BatchNorm2d() layersLOGGER.info('Fusing layers... ')for m in self.model.modules():if isinstance(m, (Conv, DWConv)) and hasattr(m, 'bn'):m.conv = fuse_conv_and_bn(m.conv, m.bn) # update convdelattr(m, 'bn') # remove batchnormm.forward = m.forward_fuse # update forwardself.info()return selfdef info(self, verbose=False, img_size=640): # print model informationmodel_info(self, verbose, img_size)def _apply(self, fn):# Apply to(), cpu(), cuda(), half() to model tensors that are not parameters or registered buffersself = super()._apply(fn)m = self.model[-1] # Detect()if isinstance(m, (Detect, Segment)):m.stride = fn(m.stride)m.grid = list(map(fn, m.grid))if isinstance(m.anchor_grid, list):m.anchor_grid = list(map(fn, m.anchor_grid))return selfclass DetectionModel(BaseModel):# YOLOv5 detection modeldef __init__(self, cfg='yolov5s.yaml', ch=3, nc=None, anchors=None): # model, input channels, number of classessuper().__init__()if isinstance(cfg, dict):self.yaml = cfg # model dictelse: # is *.yamlimport yaml # for torch hubself.yaml_file = Path(cfg).namewith open(cfg, encoding='ascii', errors='ignore') as f:self.yaml = yaml.safe_load(f) # model dict# Define modelch = self.yaml['ch'] = self.yaml.get('ch', ch) # input channelsif nc and nc != self.yaml['nc']:LOGGER.info(f"Overriding model.yaml nc={self.yaml['nc']} with nc={nc}")self.yaml['nc'] = nc # override yaml valueif anchors:LOGGER.info(f'Overriding model.yaml anchors with anchors={anchors}')self.yaml['anchors'] = round(anchors) # override yaml valueself.model, self.save = parse_model(deepcopy(self.yaml), ch=[ch]) # model, savelistself.names = [str(i) for i in range(self.yaml['nc'])] # default namesself.inplace = self.yaml.get('inplace', True)# Build strides, anchorsm = self.model[-1] # Detect()if isinstance(m, (Detect, Segment)):s = 256 # 2x min stridem.inplace = self.inplaceforward = lambda x: self.forward(x)[0] if isinstance(m, Segment) else self.forward(x)m.stride = torch.tensor([s / x.shape[-2] for x in forward(torch.zeros(1, ch, s, s))]) # forwardcheck_anchor_order(m)m.anchors /= m.stride.view(-1, 1, 1)self.stride = m.strideself._initialize_biases() # only run once# Init weights, biasesinitialize_weights(self)self.info()LOGGER.info('')def forward(self, x, augment=False, profile=False, visualize=False):if augment:return self._forward_augment(x) # augmented inference, Nonereturn self._forward_once(x, profile, visualize) # single-scale inference, traindef _forward_augment(self, x):img_size = x.shape[-2:] # height, widths = [1, 0.83, 0.67] # scalesf = [None, 3, None] # flips (2-ud, 3-lr)y = [] # outputsfor si, fi in zip(s, f):xi = scale_img(x.flip(fi) if fi else x, si, gs=int(self.stride.max()))yi = self._forward_once(xi)[0] # forward# cv2.imwrite(f'img_{si}.jpg', 255 * xi[0].cpu().numpy().transpose((1, 2, 0))[:, :, ::-1]) # saveyi = self._descale_pred(yi, fi, si, img_size)y.append(yi)y = self._clip_augmented(y) # clip augmented tailsreturn torch.cat(y, 1), None # augmented inference, traindef _descale_pred(self, p, flips, scale, img_size):# de-scale predictions following augmented inference (inverse operation)if self.inplace:p[..., :4] /= scale # de-scaleif flips == 2:p[..., 1] = img_size[0] - p[..., 1] # de-flip udelif flips == 3:p[..., 0] = img_size[1] - p[..., 0] # de-flip lrelse:x, y, wh = p[..., 0:1] / scale, p[..., 1:2] / scale, p[..., 2:4] / scale # de-scaleif flips == 2:y = img_size[0] - y # de-flip udelif flips == 3:x = img_size[1] - x # de-flip lrp = torch.cat((x, y, wh, p[..., 4:]), -1)return pdef _clip_augmented(self, y):# Clip YOLOv5 augmented inference tailsnl = self.model[-1].nl # number of detection layers (P3-P5)g = sum(4 ** x for x in range(nl)) # grid pointse = 1 # exclude layer counti = (y[0].shape[1] // g) * sum(4 ** x for x in range(e)) # indicesy[0] = y[0][:, :-i] # largei = (y[-1].shape[1] // g) * sum(4 ** (nl - 1 - x) for x in range(e)) # indicesy[-1] = y[-1][:, i:] # smallreturn ydef _initialize_biases(self, cf=None): # initialize biases into Detect(), cf is class frequency# https://arxiv.org/abs/1708.02002 section 3.3# cf = torch.bincount(torch.tensor(np.concatenate(dataset.labels, 0)[:, 0]).long(), minlength=nc) + 1.m = self.model[-1] # Detect() modulefor mi, s in zip(m.m, m.stride): # fromb = mi.bias.view(m.na, -1) # conv.bias(255) to (3,85)b.data[:, 4] += math.log(8 / (640 / s) ** 2) # obj (8 objects per 640 image)b.data[:, 5:5 + m.nc] += math.log(0.6 / (m.nc - 0.99999)) if cf is None else torch.log(cf / cf.sum()) # clsmi.bias = torch.nn.Parameter(b.view(-1), requires_grad=True)Model = DetectionModel # retain YOLOv5 'Model' class for backwards compatibilityclass SegmentationModel(DetectionModel):# YOLOv5 segmentation modeldef __init__(self, cfg='yolov5s-seg.yaml', ch=3, nc=None, anchors=None):super().__init__(cfg, ch, nc, anchors)class ClassificationModel(BaseModel):# YOLOv5 classification modeldef __init__(self, cfg=None, model=None, nc=1000, cutoff=10): # yaml, model, number of classes, cutoff indexsuper().__init__()self._from_detection_model(model, nc, cutoff) if model is not None else self._from_yaml(cfg)def _from_detection_model(self, model, nc=1000, cutoff=10):# Create a YOLOv5 classification model from a YOLOv5 detection modelif isinstance(model, DetectMultiBackend):model = model.model # unwrap DetectMultiBackendmodel.model = model.model[:cutoff] # backbonem = model.model[-1] # last layerch = m.conv.in_channels if hasattr(m, 'conv') else m.cv1.conv.in_channels # ch into modulec = Classify(ch, nc) # Classify()c.i, c.f, c.type = m.i, m.f, 'models.common.Classify' # index, from, typemodel.model[-1] = c # replaceself.model = model.modelself.stride = model.strideself.save = []self.nc = ncdef _from_yaml(self, cfg):# Create a YOLOv5 classification model from a *.yaml fileself.model = Nonedef parse_model(d, ch): # model_dict, input_channels(3)# Parse a YOLOv5 model.yaml dictionaryLOGGER.info(f"\n{'':>3}{'from':>18}{'n':>3}{'params':>10} {'module':<40}{'arguments':<30}")anchors, nc, gd, gw, act = d['anchors'], d['nc'], d['depth_multiple'], d['width_multiple'], d.get('activation')if act:Conv.default_act = eval(act) # redefine default activation, i.e. Conv.default_act = nn.SiLU()LOGGER.info(f"{colorstr('activation:')} {act}") # printna = (len(anchors[0]) // 2) if isinstance(anchors, list) else anchors # number of anchorsno = na * (nc + 5) # number of outputs = anchors * (classes + 5)layers, save, c2 = [], [], ch[-1] # layers, savelist, ch outfor i, (f, n, m, args) in enumerate(d['backbone'] + d['head']): # from, number, module, argsm = eval(m) if isinstance(m, str) else m # eval stringsfor j, a in enumerate(args):with contextlib.suppress(NameError):args[j] = eval(a) if isinstance(a, str) else a # eval stringsn = n_ = max(round(n * gd), 1) if n > 1 else n # depth gainif m in {Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x}:c1, c2 = ch[f], args[0]if c2 != no: # if not outputc2 = make_divisible(c2 * gw, 8)args = [c1, c2, *args[1:]]if m in {BottleneckCSP, C3, C3TR, C3Ghost, C3x}:args.insert(2, n) # number of repeatsn = 1elif m is nn.BatchNorm2d:args = [ch[f]]elif m is Concat:c2 = sum(ch[x] for x in f)# TODO: channel, gw, gdelif m in {Detect, Segment}:args.append([ch[x] for x in f])if isinstance(args[1], int): # number of anchorsargs[1] = [list(range(args[1] * 2))] * len(f)if m is Segment:args[3] = make_divisible(args[3] * gw, 8)elif m is Contract:c2 = ch[f] * args[0] ** 2elif m is Expand:c2 = ch[f] // args[0] ** 2else:c2 = ch[f]m_ = nn.Sequential(*(m(*args) for _ in range(n))) if n > 1 else m(*args) # modulet = str(m)[8:-2].replace('__main__.', '') # module typenp = sum(x.numel() for x in m_.parameters()) # number paramsm_.i, m_.f, m_.type, m_.np = i, f, t, np # attach index, 'from' index, type, number paramsLOGGER.info(f'{i:>3}{str(f):>18}{n_:>3}{np:10.0f} {t:<40}{str(args):<30}') # printsave.extend(x % i for x in ([f] if isinstance(f, int) else f) if x != -1) # append to savelistlayers.append(m_)if i == 0:ch = []ch.append(c2)return nn.Sequential(*layers), sorted(save)if __name__ == '__main__':parser = argparse.ArgumentParser()parser.add_argument('--cfg', type=str, default='yolov5s.yaml', help='model.yaml')parser.add_argument('--batch-size', type=int, default=1, help='total batch size for all GPUs')parser.add_argument('--device', default='', help='cuda device, i.e. 0 or 0,1,2,3 or cpu')parser.add_argument('--profile', action='store_true', help='profile model speed')parser.add_argument('--line-profile', action='store_true', help='profile model speed layer by layer')parser.add_argument('--test', action='store_true', help='test all yolo*.yaml')opt = parser.parse_args()opt.cfg = check_yaml(opt.cfg) # check YAMLprint_args(vars(opt))device = select_device(opt.device)# Create modelim = torch.rand(opt.batch_size, 3, 640, 640).to(device)model = Model(opt.cfg).to(device)# Optionsif opt.line_profile: # profile layer by layermodel(im, profile=True)elif opt.profile: # profile forward-backwardresults = profile(input=im, ops=[model], n=3)elif opt.test: # test all modelsfor cfg in Path(ROOT / 'models').rglob('yolo*.yaml'):try:_ = Model(cfg)except Exception as e:print(f'Error in {cfg}: {e}')else: # report fused model summarymodel.fuse()

yolov5.yaml

ultralytics/models/v5/yolov5.yaml:

# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov5n.yaml' will call yolov5.yaml with scale 'n'# [depth, width, max_channels]n: [0.33, 0.25, 1024]s: [0.33, 0.50, 1024]m: [0.67, 0.75, 1024]l: [1.00, 1.00, 1024]x: [1.33, 1.25, 1024]# YOLOv5 v6.0 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C3, [128]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C3, [256]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 9, C3, [512]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C3, [1024]],[-1, 1, SPPF, [1024, 5]], # 9]# YOLOv5 v6.0 head

head:[[-1, 1, Conv, [512, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 6], 1, Concat, [1]], # cat backbone P4[-1, 3, C3, [512, False]], # 13[-1, 1, Conv, [256, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 4], 1, Concat, [1]], # cat backbone P3[-1, 3, C3, [256, False]], # 17 (P3/8-small)[-1, 1, Conv, [256, 3, 2]],[[-1, 14], 1, Concat, [1]], # cat head P4[-1, 3, C3, [512, False]], # 20 (P4/16-medium)[-1, 1, Conv, [512, 3, 2]],[[-1, 10], 1, Concat, [1]], # cat head P5[-1, 3, C3, [1024, False]], # 23 (P5/32-large)[[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)]

更换骨干网络之 SwinTransformer

更换骨干网络之 EfficientNet

优化上采样方式:轻量化算子CARAFE 替换 传统(最近邻 / 双线性 / 双立方 / 三线性 / 转置卷积)

上采样(Upsampling)是一种图像处理中的操作,用于将低分辨率的图像或特征图增加到高分辨率。

在计算机视觉中,上采样常常用于将低分辨率的特征图恢复到原始图像的尺寸,或者在图像生成任务中生成更高分辨率的图像。

常见的上采样方式包括:

-

最近邻插值(Nearest Neighbor Interpolation):最简单的上采样方法,将低分辨率图像的每个像素复制到对应的多个像素位置,以增加图像的尺寸和分辨率。这种方法不考虑像素之间的差异,可能会导致图像的锯齿状边缘。

-

双线性插值(Bilinear Interpolation):通过在四个最近的像素之间进行插值来计算新像素的值。这种方法考虑了像素之间的差异,可以得到更平滑的上采样结果。

-

双三次插值(Bicubic Interpolation):在双线性插值的基础上进行进一步的插值计算,使用更多的像素进行插值计算,得到更平滑的上采样结果。然而,双三次插值计算量较大。

-

转置卷积(Transposed Convolution):也称为反卷积(Deconvolution),通过使用卷积操作的反向计算过程来实现上采样。转置卷积通过填充和卷积核的反向操作,将低分辨率特征图恢复到原始尺寸。

这些上采样方法在不同的任务和场景中具有不同的适用性和效果。选择合适的上采样方法取决于任务的需求、计算资源和对图像质量的要求。

- 图像超分辨率:当需要将低分辨率图像恢复到高分辨率时,双线性插值和双三次插值是常用的方法。它们能够增加图像的细节,并且计算效率较高。

- 目标检测和语义分割:在目标检测和语义分割等任务中,需要将低分辨率的特征图上采样到原始图像的尺寸。转置卷积是常用的上采样方法,因为它能够学习到更复杂的特征重建,并且能够保持较好的位置信息。

- 图像生成:在图像生成任务中,需要生成高分辨率的图像。转置卷积通常被用于将低分辨率的噪声向量映射到高分辨率图像空间。

- 图像风格转换:在图像风格转换任务中,需要将输入图像的风格转换为目标风格。双线性插值在这种情况下也是常用的上采样方法,因为它能够保持较好的图像纹理和细节。

在 YOLOv5 中,上采样模块是使用 nn.Upsample 实现的。

具体来说,YOLOv5 中使用的上采样模块包括两种类型:

-

nn.Upsample(scale_factor=2, mode=‘nearest’),这种上采样模块是通过最近邻插值实现的。

它用于将特征图的大小增加一倍,例如在 yolov5s 中将 16x16 特征图上采样到 32x32。

-

nn.ConvTranspose2d(in_channels, out_channels, kernel_size=2, stride=2),这种上采样模块是通过转置卷积实现的。

它用于将特征图的大小增加一倍,并且可以学习更加复杂的上采样方式。在 YOLOv5 中,这种上采样模块在 yolov5m、yolov5l 和 yolov5x 中使用。

把 CARAFE 添加到 common.py:

import torch.nn.functional as Fclass CARAFE(nn.Module): # CARAFE: Content-Aware ReAssembly of FEatures https://arxiv.org/pdf/1905.02188.pdfdef __init__(self, c1, c2, kernel_size=3, up_factor=2):super(CARAFE, self).__init__()self.kernel_size = kernel_sizeself.up_factor = up_factorself.down = nn.Conv2d(c1, c1 // 4, 1)self.encoder = nn.Conv2d(c1 // 4, self.up_factor ** 2 * self.kernel_size ** 2,self.kernel_size, 1, self.kernel_size // 2)self.out = nn.Conv2d(c1, c2, 1)def forward(self, x):N, C, H, W = x.size()# N,C,H,W -> N,C,delta*H,delta*W# kernel prediction modulekernel_tensor = self.down(x) # (N, Cm, H, W)kernel_tensor = self.encoder(kernel_tensor) # (N, S^2 * Kup^2, H, W)kernel_tensor = F.pixel_shuffle(kernel_tensor, self.up_factor) # (N, S^2 * Kup^2, H, W)->(N, Kup^2, S*H, S*W)kernel_tensor = F.softmax(kernel_tensor, dim=1) # (N, Kup^2, S*H, S*W)kernel_tensor = kernel_tensor.unfold(2, self.up_factor, step=self.up_factor) # (N, Kup^2, H, W*S, S)kernel_tensor = kernel_tensor.unfold(3, self.up_factor, step=self.up_factor) # (N, Kup^2, H, W, S, S)kernel_tensor = kernel_tensor.reshape(N, self.kernel_size ** 2, H, W, self.up_factor ** 2) # (N, Kup^2, H, W, S^2)kernel_tensor = kernel_tensor.permute(0, 2, 3, 1, 4) # (N, H, W, Kup^2, S^2)# content-aware reassembly module# tensor.unfold: dim, size, stepx = F.pad(x, pad=(self.kernel_size // 2, self.kernel_size // 2,self.kernel_size // 2, self.kernel_size // 2),mode='constant', value=0) # (N, C, H+Kup//2+Kup//2, W+Kup//2+Kup//2)x = x.unfold(2, self.kernel_size, step=1) # (N, C, H, W+Kup//2+Kup//2, Kup)x = x.unfold(3, self.kernel_size, step=1) # (N, C, H, W, Kup, Kup)x = x.reshape(N, C, H, W, -1) # (N, C, H, W, Kup^2)x = x.permute(0, 2, 3, 1, 4) # (N, H, W, C, Kup^2)out_tensor = torch.matmul(x, kernel_tensor) # (N, H, W, C, S^2)out_tensor = out_tensor.reshape(N, H, W, -1)out_tensor = out_tensor.permute(0, 3, 1, 2)out_tensor = F.pixel_shuffle(out_tensor, self.up_factor)out_tensor = self.out(out_tensor)return out_tensor

model/yolo.py 添加这层:

修改前:if m in {Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x}: 修改后:if m in {Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x, CARAFE}:

修改一下 ultralytics/models/v5/yolov5.yaml:

# Parameters

nc: 80 # number of classes

scales: # model compound scaling constants, i.e. 'model=yolov5n.yaml' will call yolov5.yaml with scale 'n'# [depth, width, max_channels]n: [0.33, 0.25, 1024]s: [0.33, 0.50, 1024]m: [0.67, 0.75, 1024]l: [1.00, 1.00, 1024]x: [1.33, 1.25, 1024]# YOLOv5 v6.0 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C3, [128]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C3, [256]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 9, C3, [512]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C3, [1024]],[-1, 1, SPPF, [1024, 5]], # 9]# YOLOv5 v6.0 head

head:[[-1, 1, Conv, [512, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 6], 1, Concat, [1]], # cat backbone P4[-1, 3, C3, [512, False]], # 13[-1, 1, Conv, [256, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 4], 1, Concat, [1]], # cat backbone P3[-1, 3, C3, [256, False]], # 17 (P3/8-small)[-1, 1, Conv, [256, 3, 2]],[[-1, 14], 1, Concat, [1]], # cat head P4[-1, 3, C3, [512, False]], # 20 (P4/16-medium)[-1, 1, Conv, [512, 3, 2]],[[-1, 10], 1, Concat, [1]], # cat head P5[-1, 3, C3, [1024, False]], # 23 (P5/32-large)[[17, 20, 23], 1, Detect, [nc]], # Detect(P3, P4, P5)]

优化空间金字塔池化:SPPF / SimSPPF / ASPP / RFB / SPPCSPC 替换 SPP

修改三步:

- 把 XXX模块 放入

common.py yolo.py放入类名- 修改

yolov5.yaml

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C3, [128]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C3, [256]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 9, C3, [512]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C3, [1024]],[-1, 1, SPPF, [1024, 5]], # 9# [-1, 1, ASPP, [512]], # 9# [-1, 1, SPP, [1024]],# [-1, 1, SimSPPF, [1024, 5]],# [-1, 1, BasicRFB, [1024]],# [-1, 1, SPPCSPC, [1024]],# [-1, 1, SPPFCSPC, [1024, 5]], # 🍀]

SPP:

class SPP(nn.Module):# Spatial Pyramid Pooling (SPP) layer https://arxiv.org/abs/1406.4729def __init__(self, c1, c2, k=(5, 9, 13)):super().__init__()c_ = c1 // 2 # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c_ * (len(k) + 1), c2, 1, 1)self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])def forward(self, x):x = self.cv1(x)with warnings.catch_warnings():warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warningreturn self.cv2(torch.cat([x] + [m(x) for m in self.m], 1))

SPPF:

class SPPF(nn.Module):# Spatial Pyramid Pooling - Fast (SPPF) layer for YOLOv5 by Glenn Jocherdef __init__(self, c1, c2, k=5): # equivalent to SPP(k=(5, 9, 13))super().__init__()c_ = c1 // 2 # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c_ * 4, c2, 1, 1)self.m = nn.MaxPool2d(kernel_size=k, stride=1, padding=k // 2)def forward(self, x):x = self.cv1(x)with warnings.catch_warnings():warnings.simplefilter('ignore') # suppress torch 1.9.0 max_pool2d() warningy1 = self.m(x)y2 = self.m(y1)return self.cv2(torch.cat((x, y1, y2, self.m(y2)), 1))

SimSPPF:

class SimConv(nn.Module):'''Normal Conv with ReLU activation'''def __init__(self, in_channels, out_channels, kernel_size, stride, groups=1, bias=False):super().__init__()padding = kernel_size // 2self.conv = nn.Conv2d(in_channels,out_channels,kernel_size=kernel_size,stride=stride,padding=padding,groups=groups,bias=bias,)self.bn = nn.BatchNorm2d(out_channels)self.act = nn.ReLU()def forward(self, x):return self.act(self.bn(self.conv(x)))def forward_fuse(self, x):return self.act(self.conv(x))class SimSPPF(nn.Module):'''Simplified SPPF with ReLU activation'''def __init__(self, in_channels, out_channels, kernel_size=5):super().__init__()c_ = in_channels // 2 # hidden channelsself.cv1 = SimConv(in_channels, c_, 1, 1)self.cv2 = SimConv(c_ * 4, out_channels, 1, 1)self.m = nn.MaxPool2d(kernel_size=kernel_size, stride=1, padding=kernel_size // 2)def forward(self, x):x = self.cv1(x)with warnings.catch_warnings():warnings.simplefilter('ignore')y1 = self.m(x)y2 = self.m(y1)return self.cv2(torch.cat([x, y1, y2, self.m(y2)], 1))

ASPP:

# without BN version

class ASPP(nn.Module):def __init__(self, in_channel=512, out_channel=256):super(ASPP, self).__init__()self.mean = nn.AdaptiveAvgPool2d((1, 1)) # (1,1)means ouput_dimself.conv = nn.Conv2d(in_channel,out_channel, 1, 1)self.atrous_block1 = nn.Conv2d(in_channel, out_channel, 1, 1)self.atrous_block6 = nn.Conv2d(in_channel, out_channel, 3, 1, padding=6, dilation=6)self.atrous_block12 = nn.Conv2d(in_channel, out_channel, 3, 1, padding=12, dilation=12)self.atrous_block18 = nn.Conv2d(in_channel, out_channel, 3, 1, padding=18, dilation=18)self.conv_1x1_output = nn.Conv2d(out_channel * 5, out_channel, 1, 1)def forward(self, x):size = x.shape[2:]image_features = self.mean(x)image_features = self.conv(image_features)image_features = F.upsample(image_features, size=size, mode='bilinear')atrous_block1 = self.atrous_block1(x)atrous_block6 = self.atrous_block6(x)atrous_block12 = self.atrous_block12(x)atrous_block18 = self.atrous_block18(x)net = self.conv_1x1_output(torch.cat([image_features, atrous_block1, atrous_block6,atrous_block12, atrous_block18], dim=1))return net

RFB:

class BasicConv(nn.Module):def __init__(self, in_planes, out_planes, kernel_size, stride=1, padding=0, dilation=1, groups=1, relu=True, bn=True):super(BasicConv, self).__init__()self.out_channels = out_planesif bn:self.conv = nn.Conv2d(in_planes, out_planes, kernel_size=kernel_size, stride=stride, padding=padding, dilation=dilation, groups=groups, bias=False)self.bn = nn.BatchNorm2d(out_planes, eps=1e-5, momentum=0.01, affine=True)self.relu = nn.ReLU(inplace=True) if relu else Noneelse:self.conv = nn.Conv2d(in_planes, out_planes, kernel_size=kernel_size, stride=stride, padding=padding, dilation=dilation, groups=groups, bias=True)self.bn = Noneself.relu = nn.ReLU(inplace=True) if relu else Nonedef forward(self, x):x = self.conv(x)if self.bn is not None:x = self.bn(x)if self.relu is not None:x = self.relu(x)return xclass BasicRFB(nn.Module):def __init__(self, in_planes, out_planes, stride=1, scale=0.1, map_reduce=8, vision=1, groups=1):super(BasicRFB, self).__init__()self.scale = scaleself.out_channels = out_planesinter_planes = in_planes // map_reduceself.branch0 = nn.Sequential(BasicConv(in_planes, inter_planes, kernel_size=1, stride=1, groups=groups, relu=False),BasicConv(inter_planes, 2 * inter_planes, kernel_size=(3, 3), stride=stride, padding=(1, 1), groups=groups),BasicConv(2 * inter_planes, 2 * inter_planes, kernel_size=3, stride=1, padding=vision, dilation=vision, relu=False, groups=groups))self.branch1 = nn.Sequential(BasicConv(in_planes, inter_planes, kernel_size=1, stride=1, groups=groups, relu=False),BasicConv(inter_planes, 2 * inter_planes, kernel_size=(3, 3), stride=stride, padding=(1, 1), groups=groups),BasicConv(2 * inter_planes, 2 * inter_planes, kernel_size=3, stride=1, padding=vision + 2, dilation=vision + 2, relu=False, groups=groups))self.branch2 = nn.Sequential(BasicConv(in_planes, inter_planes, kernel_size=1, stride=1, groups=groups, relu=False),BasicConv(inter_planes, (inter_planes // 2) * 3, kernel_size=3, stride=1, padding=1, groups=groups),BasicConv((inter_planes // 2) * 3, 2 * inter_planes, kernel_size=3, stride=stride, padding=1, groups=groups),BasicConv(2 * inter_planes, 2 * inter_planes, kernel_size=3, stride=1, padding=vision + 4, dilation=vision + 4, relu=False, groups=groups))self.ConvLinear = BasicConv(6 * inter_planes, out_planes, kernel_size=1, stride=1, relu=False)self.shortcut = BasicConv(in_planes, out_planes, kernel_size=1, stride=stride, relu=False)self.relu = nn.ReLU(inplace=False)def forward(self, x):x0 = self.branch0(x)x1 = self.branch1(x)x2 = self.branch2(x)out = torch.cat((x0, x1, x2), 1)out = self.ConvLinear(out)short = self.shortcut(x)out = out * self.scale + shortout = self.relu(out)return out

SPPCSPC:

class SPPCSPC(nn.Module):# CSP https://github.com/WongKinYiu/CrossStagePartialNetworksdef __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5, k=(5, 9, 13)):super(SPPCSPC, self).__init__()c_ = int(2 * c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = Conv(c1, c_, 1, 1)self.cv3 = Conv(c_, c_, 3, 1)self.cv4 = Conv(c_, c_, 1, 1)self.m = nn.ModuleList([nn.MaxPool2d(kernel_size=x, stride=1, padding=x // 2) for x in k])self.cv5 = Conv(4 * c_, c_, 1, 1)self.cv6 = Conv(c_, c_, 3, 1)self.cv7 = Conv(2 * c_, c2, 1, 1)def forward(self, x):x1 = self.cv4(self.cv3(self.cv1(x)))y1 = self.cv6(self.cv5(torch.cat([x1] + [m(x1) for m in self.m], 1)))y2 = self.cv2(x)return self.cv7(torch.cat((y1, y2), dim=1))

优化训练策略:SIoU / EIoU / WIoU / Focal_xIoU / MPDIoU 替换 IoU

优化激活函数:SiLU、Hardswish、MemoryEfficientMish、FReLU、AconC、MetaAconC 替换 Mish函数

V5 自带的激活函数代码在:yolo5/utils/activations.py,需要换直接调用即可,不用自己写了:

import torch

import torch.nn as nn

import torch.nn.functional as Fclass SiLU(nn.Module):@staticmethoddef forward(x):return x * torch.sigmoid(x)class Hardswish(nn.Module):@staticmethoddef forward(x):return x * F.hardtanh(x + 3, 0.0, 6.0) / 6.0 # for TorchScript, CoreML and ONNXclass Mish(nn.Module):@staticmethoddef forward(x):return x * F.softplus(x).tanh()class MemoryEfficientMish(nn.Module):class F(torch.autograd.Function):@staticmethoddef forward(ctx, x):ctx.save_for_backward(x)return x.mul(torch.tanh(F.softplus(x))) # x * tanh(ln(1 + exp(x)))@staticmethoddef backward(ctx, grad_output):x = ctx.saved_tensors[0]sx = torch.sigmoid(x)fx = F.softplus(x).tanh()return grad_output * (fx + x * sx * (1 - fx * fx))def forward(self, x):return self.F.apply(x)class FReLU(nn.Module):def __init__(self, c1, k=3): # ch_in, kernelsuper().__init__()self.conv = nn.Conv2d(c1, c1, k, 1, 1, groups=c1, bias=False)self.bn = nn.BatchNorm2d(c1)def forward(self, x):return torch.max(x, self.bn(self.conv(x)))class AconC(nn.Module):def __init__(self, c1):super().__init__()self.p1 = nn.Parameter(torch.randn(1, c1, 1, 1))self.p2 = nn.Parameter(torch.randn(1, c1, 1, 1))self.beta = nn.Parameter(torch.ones(1, c1, 1, 1))def forward(self, x):dpx = (self.p1 - self.p2) * xreturn dpx * torch.sigmoid(self.beta * dpx) + self.p2 * xclass MetaAconC(nn.Module):def __init__(self, c1, k=1, s=1, r=16): # ch_in, kernel, stride, rsuper().__init__()c2 = max(r, c1 // r)self.p1 = nn.Parameter(torch.randn(1, c1, 1, 1))self.p2 = nn.Parameter(torch.randn(1, c1, 1, 1))self.fc1 = nn.Conv2d(c1, c2, k, s, bias=True)self.fc2 = nn.Conv2d(c2, c1, k, s, bias=True)def forward(self, x):y = x.mean(dim=2, keepdims=True).mean(dim=3, keepdims=True)beta = torch.sigmoid(self.fc2(self.fc1(y))) # bug patch BN layers removeddpx = (self.p1 - self.p2) * xreturn dpx * torch.sigmoid(beta * dpx) + self.p2 * x

修改网络中的激活函数:yolo5/models/common.py

class Conv(nn.Module):default_act = nn.SiLU() # 默认激活函数,选择你要更换的激活函数def __init__(self, c1, c2, k=1, s=1, p=None, g=1, d=1, act=True):super().__init__()self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p, d), groups=g, dilation=d, bias=False)self.bn = nn.BatchNorm2d(c2)self.act = self.default_act if act is True else act if isinstance(act, nn.Module) else nn.Identity()

更换自己想要的激活函数:

default_act = nn.SiLU() # 默认激活函数

default_act = nn.Identity()

default_act = nn.Tanh()

default_act = nn.Sigmoid()

default_act = nn.ReLU()

default_act = nn.LeakyReLU(0.1)

default_act = nn.Hardswish()

default_act = nn.SiLU()

default_act = Mish()

default_act = FReLU(c2)

default_act = AconC(c2)

default_act = MetaAconC(c2)

default_act = SiLU_beta(c2)

default_act = FReLU_noBN_biasFalse(c2)

default_act = FReLU_noBN_biasTrue(c2)

class BottleneckCSP(nn.Module):def __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansionsuper().__init__()c_ = int(c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, 1, 1)self.cv2 = nn.Conv2d(c1, c_, 1, 1, bias=False)self.cv3 = nn.Conv2d(c_, c_, 1, 1, bias=False)self.cv4 = Conv(2 * c_, c2, 1, 1)self.bn = nn.BatchNorm2d(2 * c_) # applied to cat(cv2, cv3)self.act = nn.SiLU() # 选择你要更换的激活函数

优化损失函数:多种类Loss, PolyLoss / VarifocalLoss / GFL / QualityFLoss / FL

优化损失函数:改进用于微小目标检测的 Normalized Gaussian Wasserstein Distance

引入 GSConv

修改三步:

- 把 XXX模块 放入

common.py yolo.py放入类名- 修改

yolov5.yaml

把 XXX模块 放入 common.py:

# ---------------------------GSConv Begin---------------------------

class Conv_Mish(nn.Module):# Standard convolutiondef __init__(self, c1, c2, k=1, s=1, p=None, g=1, act=True): # ch_in, ch_out, kernel, stride, padding, groupssuper().__init__()self.conv = nn.Conv2d(c1, c2, k, s, autopad(k, p), groups=g, bias=False)self.bn = nn.BatchNorm2d(c2)self.act = nn.Mish() if act else nn.Identity()def forward(self, x):return self.act(self.bn(self.conv(x)))def forward_fuse(self, x):return self.act(self.conv(x))class GSConv(nn.Module):# GSConv https://github.com/AlanLi1997/slim-neck-by-gsconvdef __init__(self, c1, c2, k=1, s=1, g=1, act=True):super().__init__()c_ = c2 // 2self.cv1 = Conv_Mish(c1, c_, k, s, None, g, act)self.cv2 = Conv_Mish(c_, c_, 5, 1, None, c_, act)def forward(self, x):x1 = self.cv1(x)x2 = torch.cat((x1, self.cv2(x1)), 1)# shuffle# y = x2.reshape(x2.shape[0], 2, x2.shape[1] // 2, x2.shape[2], x2.shape[3])# y = y.permute(0, 2, 1, 3, 4)# return y.reshape(y.shape[0], -1, y.shape[3], y.shape[4])b, n, h, w = x2.data.size()b_n = b * n // 2y = x2.reshape(b_n, 2, h * w)y = y.permute(1, 0, 2)y = y.reshape(2, -1, n // 2, h, w)return torch.cat((y[0], y[1]), 1)class GSConvns(GSConv):# GSConv with a normative-shuffle https://github.com/AlanLi1997/slim-neck-by-gsconvdef __init__(self, c1, c2, k=1, s=1, g=1, act=True):super().__init__(c1, c2, k=1, s=1, g=1, act=True)c_ = c2 // 2self.shuf = nn.Conv2d(c_ * 2, c2, 1, 1, 0, bias=False)def forward(self, x):x1 = self.cv1(x)x2 = torch.cat((x1, self.cv2(x1)), 1)# normative-shuffle, TRT supportedreturn nn.ReLU(self.shuf(x2))class GSBottleneck(nn.Module):# GS Bottleneck https://github.com/AlanLi1997/slim-neck-by-gsconvdef __init__(self, c1, c2, k=3, s=1, e=0.5):super().__init__()c_ = int(c2 * e)# for lightingself.conv_lighting = nn.Sequential(GSConv(c1, c_, 1, 1),GSConv(c_, c2, 3, 1, act=False))self.shortcut = Conv_Mish(c1, c2, 1, 1, act=False)def forward(self, x):return self.conv_lighting(x) + self.shortcut(x)class VoVGSCSP(nn.Module):# VoVGSCSP module with GSBottleneckdef __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):super().__init__()c_ = int(c2 * e) # hidden channelsself.cv1 = Conv_Mish(c1, c_, 1, 1)self.cv2 = Conv_Mish(c1, c_, 1, 1)# self.gc1 = GSConv(c_, c_, 1, 1)# self.gc2 = GSConv(c_, c_, 1, 1)# self.gsb = GSBottleneck(c_, c_, 1, 1)self.gsb = nn.Sequential(*(GSBottleneck(c_, c_, e=1.0) for _ in range(n)))self.res = Conv_Mish(c_, c_, 3, 1, act=False)self.cv3 = Conv_Mish(2 * c_, c2, 1) #def forward(self, x):x1 = self.gsb(self.cv1(x))y = self.cv2(x)return self.cv3(torch.cat((y, x1), dim=1))class GSBottleneckC(GSBottleneck):# cheap GS Bottleneck https://github.com/AlanLi1997/slim-neck-by-gsconvdef __init__(self, c1, c2, k=3, s=1):super().__init__(c1, c2, k, s)self.shortcut = DWConv(c1, c2, k, s, act=False)class VoVGSCSPC(VoVGSCSP):# cheap VoVGSCSP module with GSBottleneckdef __init__(self, c1, c2, n=1, shortcut=True, g=1, e=0.5):super().__init__(c1, c2)c_ = int(c2 * 0.5) # hidden channelsself.gsb = GSBottleneckC(c_, c_, 1, 1)

# ---------------------------GSConv End---------------------------

yolo.py 放入类名:

if m in {Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x, GSConvns, VoVGSCSP, VoVGSCSPC}: # 添加 GSConvns, VoVGSCSP, VoVGSCSPCc1, c2 = ch[f], args[0]if c2 != no: # if not outputc2 = make_divisible(c2 * gw, 8)args = [c1, c2, *args[1:]]if m in {BottleneckCSP, C3, C3TR, C3Ghost, C3x, VoVGSCSP, VoVGSCSPC}: # 添加 VoVGSCSP, VoVGSCSPCargs.insert(2, n) # number of repeatsdef fuse(self): # fuse model Conv2d() + BatchNorm2d() layersLOGGER.info('Fusing layers... ')for m in self.model.modules():if isinstance(m, (Conv, DWConv)) and hasattr(m, 'bn'):m.conv = fuse_conv_and_bn(m.conv, m.bn) # update convdelattr(m, 'bn') # remove batchnormm.forward = m.forward_fuse # update forwarddef fuse(self): # fuse model Conv2d() + BatchNorm2d() layersLOGGER.info('Fusing layers... ')for m in self.model.modules():if isinstance(m, (Conv, DWConv, Conv_Mish)) and hasattr(m, 'bn'): # 添加 Conv_Mishm.conv = fuse_conv_and_bn(m.conv, m.bn) # update convdelattr(m, 'bn') # remove batchnormm.forward = m.forward_fuse # update forward

修改 yolov5.yaml:

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:- [10,13, 16,30, 33,23] # P3/8- [30,61, 62,45, 59,119] # P4/16- [116,90, 156,198, 373,326] # P5/32# YOLOv5 v6.0 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C3, [128]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C3, [256]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 9, C3, [512]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C3, [1024]],[-1, 1, SPPF, [1024, 5]], # 9]# YOLOv5 v6.0 head

head:[[-1, 1, GSConv, [512, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 6], 1, Concat, [1]], # cat backbone P4[-1, 3, C3, [512, False]], # 13[-1, 1, GSConv, [256, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 4], 1, Concat, [1]], # cat backbone P3[-1, 3, C3, [256, False]], # 17 (P3/8-small)[-1, 1, GSConv, [256, 3, 2]],[[-1, 14], 1, Concat, [1]], # cat head P4[-1, 3, C3, [512, False]], # 20 (P4/16-medium)[-1, 1, GSConv, [512, 3, 2]],[[-1, 10], 1, Concat, [1]], # cat head P5[-1, 3, C3, [1024, False]], # 23 (P5/32-large)[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)]引入特征融合网络 BiFPN:提高检测精度和效率

修改三步:

- 把 XXX模块 放入

common.py yolo.py放入类名- 修改

yolov5.yaml

添加到 common.py 文件:

# BiFPN 两个特征图add操作

class BiFPN_Add2(nn.Module):def __init__(self, c1, c2):super(BiFPN_Add2, self).__init__()# 设置可学习参数 nn.Parameter的作用是:将一个不可训练的类型Tensor转换成可以训练的类型parameter# 并且会向宿主模型注册该参数 成为其一部分 即model.parameters()会包含这个parameter# 从而在参数优化的时候可以自动一起优化self.w = nn.Parameter(torch.ones(2, dtype=torch.float32), requires_grad=True)self.epsilon = 0.0001self.conv = nn.Conv2d(c1, c2, kernel_size=1, stride=1, padding=0)self.silu = nn.SiLU()def forward(self, x):w = self.wweight = w / (torch.sum(w, dim=0) + self.epsilon)return self.conv(self.silu(weight[0] * x[0] + weight[1] * x[1]))# 三个特征图add操作

class BiFPN_Add3(nn.Module):def __init__(self, c1, c2):super(BiFPN_Add3, self).__init__()self.w = nn.Parameter(torch.ones(3, dtype=torch.float32), requires_grad=True)self.epsilon = 0.0001self.conv = nn.Conv2d(c1, c2, kernel_size=1, stride=1, padding=0)self.silu = nn.SiLU()def forward(self, x):w = self.wweight = w / (torch.sum(w, dim=0) + self.epsilon) # Fast normalized fusionreturn self.conv(self.silu(weight[0] * x[0] + weight[1] * x[1] + weight[2] * x[2]))

修改 yolo.py 的 parse_model:

def parse_model(d, ch): elif m is Concat:c2 = sum(ch[x] for x in f)elif m in [BiFPN_Add2, BiFPN_Add3]: # 在 Concat 后,添加 BiFPN 结构c2 = max([ch[x] for x in f)

添加 ultralytics/yolo5/train.py ,导入 BiFPN_Add3, BiFPN_Add2:

from models.common import BiFPN_Add3, BiFPN_Add2

将 BiFPN_Add2, BiFPN_Add3 的参数 w 加入 g1:

g0, g1, g2 = [], [], [] # optimizer parameter groupsfor v in model.modules():······# BiFPN_Concatelif isinstance(v, BiFPN_Add2) and hasattr(v, 'w') and isinstance(v.w, nn.Parameter):g1.append(v.w)elif isinstance(v, BiFPN_Add3) and hasattr(v, 'w') and isinstance(v.w, nn.Parameter):g1.append(v.w)

修改 yolov5.yaml 的 Concat 换成 BiFPN_Add:

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:- [10,13, 16,30, 33,23] # P3/8- [30,61, 62,45, 59,119] # P4/16- [116,90, 156,198, 373,326] # P5/32# YOLOv5 v6.0 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C3, [128]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C3, [256]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 9, C3, [512]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C3, [1024]],[-1, 1, SPPF, [1024, 5]], # 9]# YOLOv5 v6.1 BiFPN head

head:[[-1, 1, Conv, [512, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 6], 1, BiFPN_Add2, [256, 256]], # cat backbone P4[-1, 3, C3, [512, False]], # 13[-1, 1, Conv, [256, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 4], 1, BiFPN_Add2, [128, 128]], # cat backbone P3[-1, 3, C3, [256, False]], # 17 [-1, 1, Conv, [512, 3, 2]], [[-1, 13, 6], 1, BiFPN_Add3, [256, 256]], #v5s通道数是默认参数的一半[-1, 3, C3, [512, False]], # 20 [-1, 1, Conv, [512, 3, 2]],[[-1, 10], 1, BiFPN_Add2, [256, 256]], # cat head P5[-1, 3, C3, [1024, False]], # 23 [[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)]引入 YOLOv6 颈部 BiFusion Neck

引入注意力机制:在 C3 模块添加添加注意力机制

添加【SE】【CBAM】【 ECA 】【CA】

添加【SimAM】【CoTAttention】【SKAttention】【DoubleAttention】

添加【EffectiveSE】【GlobalContext】【GatherExcite】【MHSA】

添加【Triplet】【SpatialGroupEnhance】【NAM】【S2】

引入小目标检测:用于低分辨率图像和小物体的新 CNN 模块SPD-Conv

修改三步:

- 把 XXX模块 放入

common.py yolo.py放入类名- 修改

yolov5.yaml

把 XXX模块 放入 common.py:

class space_to_depth(nn.Module):# Changing the dimension of the Tensordef __init__(self, dimension=1):super().__init__()self.d = dimensiondef forward(self, x):return torch.cat([x[..., ::2, ::2], x[..., 1::2, ::2], x[..., ::2, 1::2], x[..., 1::2, 1::2]], 1)

yolo.py 放入类名:

elif m is Contract: c2 = ch[f] * args[0] ** 2

elif m is space_to_depth: # 在 Contract 后面加入 space_to_depthc2 = 4 * ch[f]

修改 yolov5.yaml:

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:- [10,13, 16,30, 33,23] # P3/8- [30,61, 62,45, 59,119] # P4/16- [116,90, 156,198, 373,326] # P5/32# YOLOv5 v6.0 backbone

backbone:# [from, number, module, args][[-1, 1, Focus, [64, 3]], # 0-P1/2[-1, 1, Conv, [128, 3, 1]], # 1[-1,1,space_to_depth,[1]], # 2 -P2/4[-1, 3, C3, [128]], # 3[-1, 1, Conv, [256, 3, 1]], # 4[-1,1,space_to_depth,[1]], # 5 -P3/8[-1, 6, C3, [256]], # 6[-1, 1, Conv, [512, 3, 1]], # 7-P4/16[-1,1,space_to_depth,[1]], # 8 -P4/16[-1, 9, C3, [512]], # 9[-1, 1, Conv, [1024, 3, 1]], # 10-P5/32[-1,1,space_to_depth,[1]], # 11 -P5/32[-1, 3, C3, [1024]], # 12[-1, 1, SPPF, [1024, 5]], # 13]# YOLOv5 v6.0 head

head:[[-1, 1, Conv, [512, 1, 1]], # 14[-1, 1, nn.Upsample, [None, 2, 'nearest']], # 15[[-1, 9], 1, Concat, [1]], # 16 cat backbone P4[-1, 3, C3, [512, False]], # 17[-1, 1, Conv, [256, 1, 1]], # 18[-1, 1, nn.Upsample, [None, 2, 'nearest']], # 19[[-1, 6], 1, Concat, [1]], # 20 cat backbone P3[-1, 3, C3, [256, False]], # 21 (P3/8-small)[-1, 1, Conv, [256, 3, 1]], # 22[-1,1,space_to_depth,[1]], # 23 -P2/4[[-1, 18], 1, Concat, [1]], # 24 cat head P4[-1, 3, C3, [512, False]], # 25 (P4/16-medium)[-1, 1, Conv, [512, 3, 1]], # 26[-1,1,space_to_depth,[1]], # 27 -P2/4[[-1, 14], 1, Concat, [1]], # 28 cat head P5[-1, 3, C3, [1024, False]], # 29 (P5/32-large)[[21, 25, 29], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)]

引入轻量化卷积 替换 传统卷积

引入独立注意力 FPN + PAN 结构 替换 传统卷积

修改三步:

- 把 XXX模块 放入

common.py yolo.py放入类名- 修改

yolov5.yaml

把 XXX模块 放入 common.py:

class AttentionConv(nn.Module):def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=1, groups=1, bias=False):super(AttentionConv, self).__init__()self.out_channels = out_channelsself.kernel_size = kernel_sizeself.stride = strideself.padding = paddingself.groups = groupsassert self.out_channels % self.groups == 0, "out_channels should be divided by groups. (example: out_channels: 40, groups: 4)"self.rel_h = nn.Parameter(torch.randn(out_channels // 2, 1, 1, kernel_size, 1), requires_grad=True)self.rel_w = nn.Parameter(torch.randn(out_channels // 2, 1, 1, 1, kernel_size), requires_grad=True)self.key_conv = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=bias)self.query_conv = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=bias)self.value_conv = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=bias)self.reset_parameters()def forward(self, x):batch, channels, height, width = x.size()padded_x = F.pad(x, [self.padding, self.padding, self.padding, self.padding])q_out = self.query_conv(x)k_out = self.key_conv(padded_x)v_out = self.value_conv(padded_x)k_out = k_out.unfold(2, self.kernel_size, self.stride).unfold(3, self.kernel_size, self.stride)v_out = v_out.unfold(2, self.kernel_size, self.stride).unfold(3, self.kernel_size, self.stride)k_out_h, k_out_w = k_out.split(self.out_channels // 2, dim=1)k_out = torch.cat((k_out_h + self.rel_h, k_out_w + self.rel_w), dim=1)k_out = k_out.contiguous().view(batch, self.groups, self.out_channels // self.groups, height, width, -1)v_out = v_out.contiguous().view(batch, self.groups, self.out_channels // self.groups, height, width, -1)q_out = q_out.view(batch, self.groups, self.out_channels // self.groups, height, width, 1)out = q_out * k_outout = F.softmax(out, dim=-1)out = torch.einsum('bnchwk,bnchwk -> bnchw', out, v_out).view(batch, -1, height, width)return outdef reset_parameters(self):init.kaiming_normal_(self.key_conv.weight, mode='fan_out', nonlinearity='relu')init.kaiming_normal_(self.value_conv.weight, mode='fan_out', nonlinearity='relu')init.kaiming_normal_(self.query_conv.weight, mode='fan_out', nonlinearity='relu')init.normal_(self.rel_h, 0, 1)init.normal_(self.rel_w, 0, 1)class AttentionStem(nn.Module):def __init__(self, in_channels, out_channels, kernel_size, stride=1, padding=0, groups=1, m=4, bias=False):super(AttentionStem, self).__init__()self.out_channels = out_channelsself.kernel_size = kernel_sizeself.stride = strideself.padding = paddingself.groups = groupsself.m = massert self.out_channels % self.groups == 0, "out_channels should be divided by groups. (example: out_channels: 40, groups: 4)"self.emb_a = nn.Parameter(torch.randn(out_channels // groups, kernel_size), requires_grad=True)self.emb_b = nn.Parameter(torch.randn(out_channels // groups, kernel_size), requires_grad=True)self.emb_mix = nn.Parameter(torch.randn(m, out_channels // groups), requires_grad=True)self.key_conv = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=bias)self.query_conv = nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=bias)self.value_conv = nn.ModuleList([nn.Conv2d(in_channels, out_channels, kernel_size=1, bias=bias) for _ in range(m)])self.reset_parameters()def forward(self, x):batch, channels, height, width = x.size()padded_x = F.pad(x, [self.padding, self.padding, self.padding, self.padding])q_out = self.query_conv(x)k_out = self.key_conv(padded_x)v_out = torch.stack([self.value_conv[_](padded_x) for _ in range(self.m)], dim=0)k_out = k_out.unfold(2, self.kernel_size, self.stride).unfold(3, self.kernel_size, self.stride)v_out = v_out.unfold(3, self.kernel_size, self.stride).unfold(4, self.kernel_size, self.stride)k_out = k_out[:, :, :height, :width, :, :]v_out = v_out[:, :, :, :height, :width, :, :]emb_logit_a = torch.einsum('mc,ca->ma', self.emb_mix, self.emb_a)emb_logit_b = torch.einsum('mc,cb->mb', self.emb_mix, self.emb_b)emb = emb_logit_a.unsqueeze(2) + emb_logit_b.unsqueeze(1)emb = F.softmax(emb.view(self.m, -1), dim=0).view(self.m, 1, 1, 1, 1, self.kernel_size, self.kernel_size)v_out = emb * v_outk_out = k_out.contiguous().view(batch, self.groups, self.out_channels // self.groups, height, width, -1)v_out = v_out.contiguous().view(self.m, batch, self.groups, self.out_channels // self.groups, height, width, -1)v_out = torch.sum(v_out, dim=0).view(batch, self.groups, self.out_channels // self.groups, height, width, -1)q_out = q_out.view(batch, self.groups, self.out_channels // self.groups, height, width, 1)out = q_out * k_outout = F.softmax(out, dim=-1)out = torch.einsum('bnchwk,bnchwk->bnchw', out, v_out).view(batch, -1, height, width)return outdef reset_parameters(self):init.kaiming_normal_(self.key_conv.weight, mode='fan_out', nonlinearity='relu')init.kaiming_normal_(self.query_conv.weight, mode='fan_out', nonlinearity='relu')for _ in self.value_conv:init.kaiming_normal_(_.weight, mode='fan_out', nonlinearity='relu')init.normal_(self.emb_a, 0, 1)init.normal_(self.emb_b, 0, 1)init.normal_(self.emb_mix, 0, 1)

yolo.py 放入类名:

if m in {Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x, AttentionConv, AttentionStem}: # AttentionConv, AttentionStem

修改 yolov5.yaml:

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:- [10,13, 16,30, 33,23] # P3/8- [30,61, 62,45, 59,119] # P4/16- [116,90, 156,198, 373,326] # P5/32# YOLOv5 v6.0 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C3, [128]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C3, [256]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 9, C3, [512]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C3, [1024]],[-1, 1, SPPF, [1024, 5]], # 9]# YOLOv5 v6.0 head

head:[ [ -1, 1, AttentionConv, [ 512, 3, 1, 1 ] ],[ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],[ [ -1, 6 ], 1, Concat, [ 1 ] ], # cat backbone P4[ -1, 1, AttentionConv, [ 512, 3, 1, 1 ] ] , # 13[ -1, 1, AttentionConv, [ 256, 3, 1, 1 ] ],[ -1, 1, nn.Upsample, [ None, 2, 'nearest' ] ],[ [ -1, 4 ], 1, Concat, [ 1 ] ], # cat backbone P3[ -1, 1, AttentionConv, [ 256, 3, 1, 1 ] ], # 17 (P3/8-small)[-1, 1, Conv, [256, 3, 2]],[[-1, 14], 1, Concat, [1]], # cat head P4[-1, 3, AttentionConv, [512, 3, 1, 1]], # 20 (P4/16-medium)[-1, 1, Conv, [512, 3, 2]],[[-1, 10], 1, Concat, [1]], # cat head P5[-1, 3, AttentionConv, [1024, 3, 1, 1]], # 23 (P5/32-large)[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)]

引入 YOLOX 解耦头

yolo.py 添加:

class DecoupledHead(nn.Module):def __init__(self, ch=256, nc=80, width=1.0, anchors=()):super().__init__()self.nc = nc # number of classesself.nl = len(anchors) # number of detection layers 3self.na = len(anchors[0]) // 2 # number of anchors 3self.merge = Conv(ch, 256 * width, 1, 1)self.cls_convs1 = Conv(256 * width, 256 * width, 3, 1, 1)self.cls_convs2 = Conv(256 * width, 256 * width, 3, 1, 1)self.reg_convs1 = Conv(256 * width, 256 * width, 3, 1, 1)self.reg_convs2 = Conv(256 * width, 256 * width, 3, 1, 1)self.cls_preds = nn.Conv2d(256 * width, self.nc * self.na, 1)self.reg_preds = nn.Conv2d(256 * width, 4 * self.na, 1)self.obj_preds = nn.Conv2d(256 * width, 1 * self.na, 1)def forward(self, x):x = self.merge(x)# 分类=3x3conv + 3x3conv + 1x1convpredx1 = self.cls_convs1(x)x1 = self.cls_convs2(x1)x1 = self.cls_preds(x1)# 回归=3x3conv(共享) + 3x3conv(共享) + 1x1predx2 = self.reg_convs1(x)x2 = self.reg_convs2(x2)x21 = self.reg_preds(x2)# 置信度=3x3conv(共享)+ 3x3conv(共享) + 1x1predx22 = self.obj_preds(x2)out = torch.cat([x21, x22, x1], 1)return out

class Detect(nn.Module):def __init__(self, nc=80, anchors=(), ch=(), inplace=True): # detection layerself.m = nn.ModuleList(DecoupledHead(x, self.no * self.na, 1) for x in ch) # DecoupledHead 换成 nn.Conv2d

添加俩行:

class Detect(nn.Module):def __init__(self, nc=80, anchors=(), ch=(), inplace=True, Decoupled=False): # 添加:Decoupled=False ······self.decoupled = Decoupled # 添加:self.decoupled = Decoupled

修改yaml文件,最后一行加 true:

# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:- [10,13, 16,30, 33,23] # P3/8- [30,61, 62,45, 59,119] # P4/16- [116,90, 156,198, 373,326] # P5/32# YOLOv5 v6.1 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 6, 2, 2]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C3, [128]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C3, [256]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 9, C3, [512]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C3, [1024]],[-1, 1, SPPF, [1024, 5]], # 9]# YOLOv5 v6.0 head

head:[[-1, 1, Conv, [512, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 6], 1, Concat, [1]], # cat backbone P4[-1, 3, C3, [512, False]], # 13[-1, 1, Conv, [256, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 4], 1, Concat, [1]], # cat backbone P3[-1, 3, C3, [256, False]], # 17 (P3/8-small)[-1, 1, Conv, [256, 3, 2]],[[-1, 14], 1, Concat, [1]], # cat head P4[-1, 3, C3, [512, False]], # 20 (P4/16-medium)[-1, 1, Conv, [512, 3, 2]],[[-1, 10], 1, Concat, [1]], # cat head P5[-1, 3, C3, [1024, False]], # 23 (P5/32-large)[[17, 20, 23], 1, Detect, [nc, anchors,True]], # Detect(P3, P4, P5)]

引入 YOLOv8 的 C2f 模块

修改三步:

- 把 XXX模块 放入

common.py yolo.py放入类名- 修改

yolov5.yaml

把 XXX模块 放入 common.py:

class v8_Bottleneck(nn.Module):# Standard bottleneckdef __init__(self, c1, c2, shortcut=True, g=1, k=(3, 3), e=0.5): # ch_in, ch_out, shortcut, groups, kernels, expandsuper().__init__()c_ = int(c2 * e) # hidden channelsself.cv1 = Conv(c1, c_, k[0], 1)self.cv2 = Conv(c_, c2, k[1], 1, g=g)self.add = shortcut and c1 == c2def forward(self, x):return x + self.cv2(self.cv1(x)) if self.add else self.cv2(self.cv1(x))class C2f(nn.Module):# CSP Bottleneck with 2 convolutionsdef __init__(self, c1, c2, n=1, shortcut=False, g=1, e=0.5): # ch_in, ch_out, number, shortcut, groups, expansionsuper().__init__()self.c = int(c2 * e) # hidden channelsself.cv1 = Conv(c1, 2 * self.c, 1, 1)self.cv2 = Conv((2 + n) * self.c, c2, 1) # optional act=FReLU(c2)self.m = nn.ModuleList(v8_Bottleneck(self.c, self.c, shortcut, g, k=((3, 3), (3, 3)), e=1.0) for _ in range(n))def forward(self, x):y = list(self.cv1(x).split((self.c, self.c), 1))y.extend(m(y[-1]) for m in self.m)return self.cv2(torch.cat(y, 1))

yolo.py 放入 C2F:

if m in {Conv, GhostConv, Bottleneck, GhostBottleneck, SPP, SPPF, DWConv, MixConv2d, Focus, CrossConv,BottleneckCSP, C3, C3TR, C3SPP, C3Ghost, nn.ConvTranspose2d, DWConvTranspose2d, C3x, CARAFE, C2F}: if m in {BottleneckCSP, C3, C3TR, C3Ghost, C3x, C2F}:args.insert(2, n) # number of repeats

修改配置文件:

# YOLOv5 🚀 by Ultralytics, GPL-3.0 license# Parameters

nc: 80 # number of classes

depth_multiple: 0.33 # model depth multiple

width_multiple: 0.50 # layer channel multiple

anchors:- [10,13, 16,30, 33,23] # P3/8- [30,61, 62,45, 59,119] # P4/16- [116,90, 156,198, 373,326] # P5/32# YOLOv5 v6.0 backbone

backbone:# [from, number, module, args][[-1, 1, Conv, [64, 3, 2 ]], # 0-P1/2[-1, 1, Conv, [128, 3, 2]], # 1-P2/4[-1, 3, C2f, [128, True]],[-1, 1, Conv, [256, 3, 2]], # 3-P3/8[-1, 6, C2f, [256, True]],[-1, 1, Conv, [512, 3, 2]], # 5-P4/16[-1, 6, C2f, [512, True]],[-1, 1, Conv, [1024, 3, 2]], # 7-P5/32[-1, 3, C2f, [1024, True]],[-1, 1, SPPF, [1024]]]# YOLOv5 v6.0 head

head:[[-1, 1, Conv, [512, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 6], 1, Concat, [1]], # cat backbone P4[-1, 3, C3, [512, False]], # 13[-1, 1, Conv, [256, 1, 1]],[-1, 1, nn.Upsample, [None, 2, 'nearest']],[[-1, 4], 1, Concat, [1]], # cat backbone P3[-1, 3, C3, [256, False]], # 17 (P3/8-small)[-1, 1, Conv, [256, 3, 2]],[[-1, 14], 1, Concat, [1]], # cat head P4[-1, 3, C3, [512, False]], # 20 (P4/16-medium)[-1, 1, Conv, [512, 3, 2]],[[-1, 10], 1, Concat, [1]], # cat head P5[-1, 3, C3, [1024, False]], # 23 (P5/32-large)[[17, 20, 23], 1, Detect, [nc, anchors]], # Detect(P3, P4, P5)]

引入 RepVGG 重参数化模块

引入密集连接模块

模型剪枝

知识蒸馏

热力图可视化

YOLOv7

更换骨干网络之 SwinTransformer

更换骨干网络之 EfficientNet

优化上采样方式:轻量化算子CARAFE 替换 传统(最近邻 / 双线性 / 双立方 / 三线性 / 转置卷积)

优化空间金字塔池化:SPPF / SimSPPF / ASPP / RFB / SPPCSPC / SPPFCSPC 替换 SPP

优化训练策略:SIoU / EIoU / WIoU / Focal_xIoU / MPDIoU 替换 IoU

优化激活函数:SiLU、Hardswish、MemoryEfficientMish、FReLU、AconC、MetaAconC 替换 Mish函数

优化标签分配策略:基于TOOD标签分配策略改进

优化损失函数:SIoU等结合FocalLoss应用:组成Focal-EIoU|Focal-SIoU|Focal-CIoU|Focal-GIoU、DIoU

优化损失函数:Wise-IoU

优化特征集成方法:目标检测的渐近特征金字塔网络AsymptoticFPN

引入 BiFPN 特征融合网络:提高检测精度和效率

引入 YOLOv6 颈部 BiFusion Neck

引入小目标检测:用于低分辨率图像和小物体的新 CNN 模块SPD-Conv

引入轻量化卷积 替换 传统卷积

引入 YOLOX 解耦头

引入 YOLOv8 的 C2f 模块

引入 RepVGG 重参数化模块

引入密集连接模块

引入用于小目标检测的归一化高斯 Wasserstein Distance Loss

引入Generalized Focal Loss

模型剪枝

知识蒸馏

热力图可视化

YOLOv8

更换主干网络之 VanillaNet

更换主干网络之 FasterNet

更换骨干网络之 SwinTransformer

更换骨干网络之 CReToNeXt

更换主干之 MAE

更换主干之 QARepNeXt

更换主干之 RepLKNet

引入注意力机制:在 C2F 模块添加添加注意力机制

添加【SE】 【CBAM】【 ECA 】【CA 】

添加【SimAM】 【CoTAttention】【SKAttention】【DoubleAttention】

添加【EffectiveSE】【GlobalContext】【GatherExcite】【MHSA】

添加【Triplet】【SpatialGroupEnhance】【NAM】【S2】

添加【ParNet】【CrissCross】【GAM】【ParallelPolarized】【Sequential】

引入 RepVGG 重参数化模块

引入 SPD-Conv 替换 Conv

引入跨空间学习的高效多尺度注意力 Efficient Multi-Scale Attention

引入选择性注意力 LSK 模块

引入空间通道重组卷积

引入轻量级通用上采样算子CARAFE

引入全维动态卷积

引入 BiFPN 结构

引入 Slim-neck

引入中心化特征金字塔 EVC 模块

引入渐进特征金字塔网络 AFPN 结构

引入大目标检测头、小目标检测头

引入谷歌 Lion 优化器

引入小目标检测结构:CBiF、BiFB

优化FPN结构

优化损失函数:FocalLoss结合变种IoU套装

优化损失函数:Wise-IoU

优化损失函数:遮挡损失函数 Repulsion Loss

优化卷积:PWConv

优化 EfficientRep 结构:结合硬件感知神经网络设计的 Repvgg 的 ConvNet 网络结构

优化特征集成方法:目标检测的渐近特征金字塔网络AsymptoticFPN

优化检测方法:EfficiCLNMS

相关文章:

YOLO v5、v7、v8 模型优化

YOLO v5、v7、v8 模型优化 魔改YOLOyaml 文件解读模型选择在线做数据标注 YOLO算法改进YOLOv5yolo.pyyolov5.yaml更换骨干网络之 SwinTransformer更换骨干网络之 EfficientNet优化上采样方式:轻量化算子CARAFE 替换 传统(最近邻 / 双线性 / 双立方 / 三线…...

回归预测 | MATLAB实现SSA-BP麻雀搜索算法优化BP神经网络多输入单输出回归预测(多指标,多图)

回归预测 | MATLAB实现SSA-BP麻雀搜索算法优化BP神经网络多输入单输出回归预测(多指标,多图) 目录 回归预测 | MATLAB实现SSA-BP麻雀搜索算法优化BP神经网络多输入单输出回归预测(多指标,多图)效果一览基本…...

QT的mysql(数据库)最佳实践和常见问题解答

涉及到数据库,首先安利一个软件Navicat Premium,用来查询数据库很方便 QMysql驱动是Qt SQL模块使用的插件,用于与MySQL数据库进行通信。要编译QMysql驱动,您需要满足以下条件: 您需要安装MySQL的客户端库和开发头文件…...

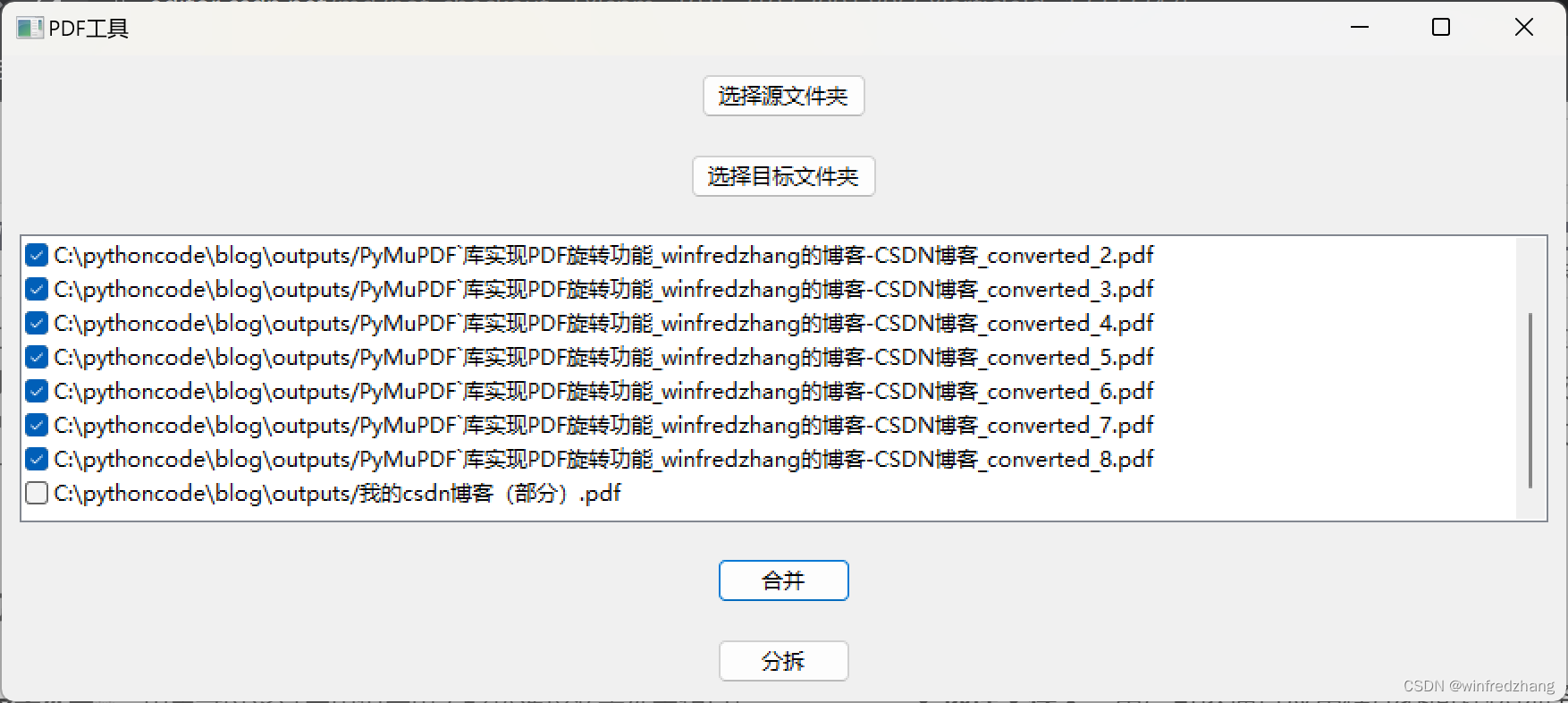

使用PyMuPDF库的PDF合并和分拆程序

PDF工具应用程序是一个使用wxPython和PyMuPDF库编写的简单工具,用于合并和分拆PDF文件。它提供了一个用户友好的图形界面,允许用户选择源文件夹和目标文件夹,并对PDF文件进行操作。 C:\pythoncode\blog\pdfmergandsplit.py 功能特点 选择文…...

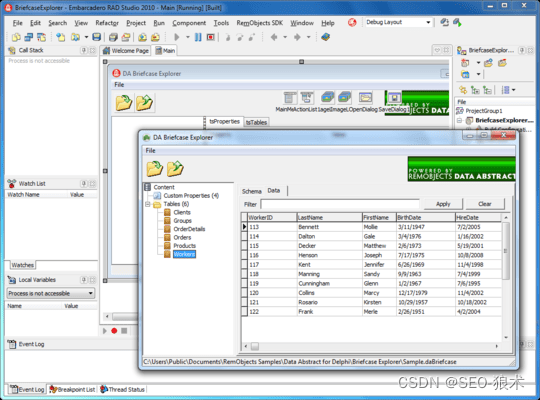

Data Abstract for .NET and Delphi Crack

Data Abstract for .NET and Delphi Crack .NET和Delphi的数据摘要是一套或RAD工具,用于在.NET、Delphi和Mono中编写多层解决方案。NET和Delphi的数据摘要是一个套件,包括RemObjects.NET和Delphi版本的数据摘要。RemObjects Data Abstract允许您创建访问…...

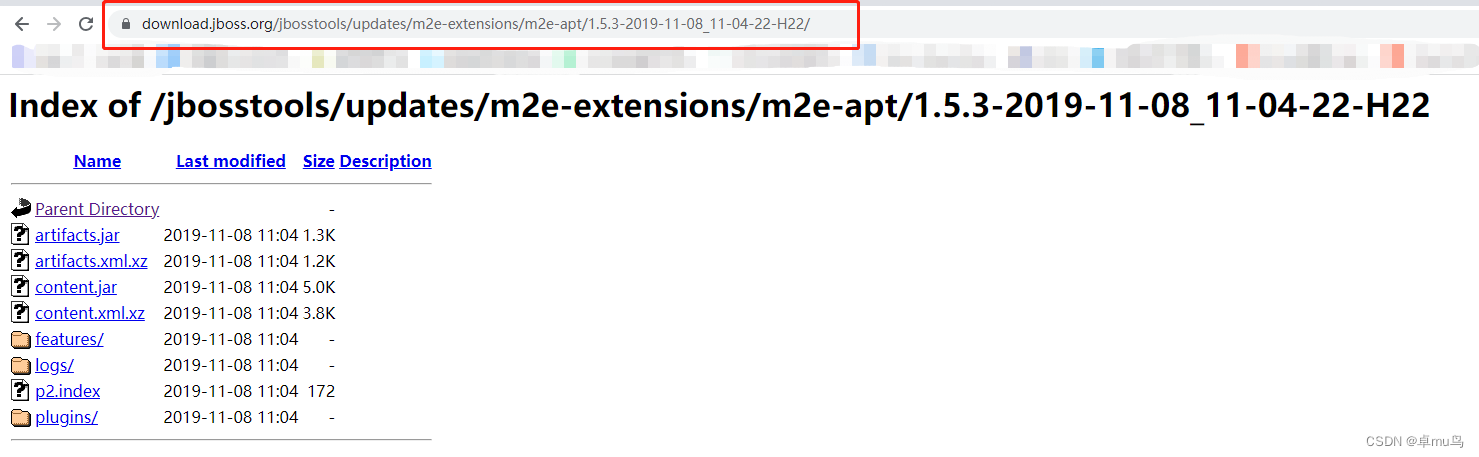

Eclipse集成MapStruct

Eclipse集成MapStruct 在Eclipse中添加MapStruct依赖配置Eclipse支持MapStruct①安装 m2e-aptEclipse Marketplace的方式安装Install new software的方式安装(JDK8用到) ②添加到pom.xml 今天拿到同事其他项目的源码,导入并运行的时候抛出了异…...

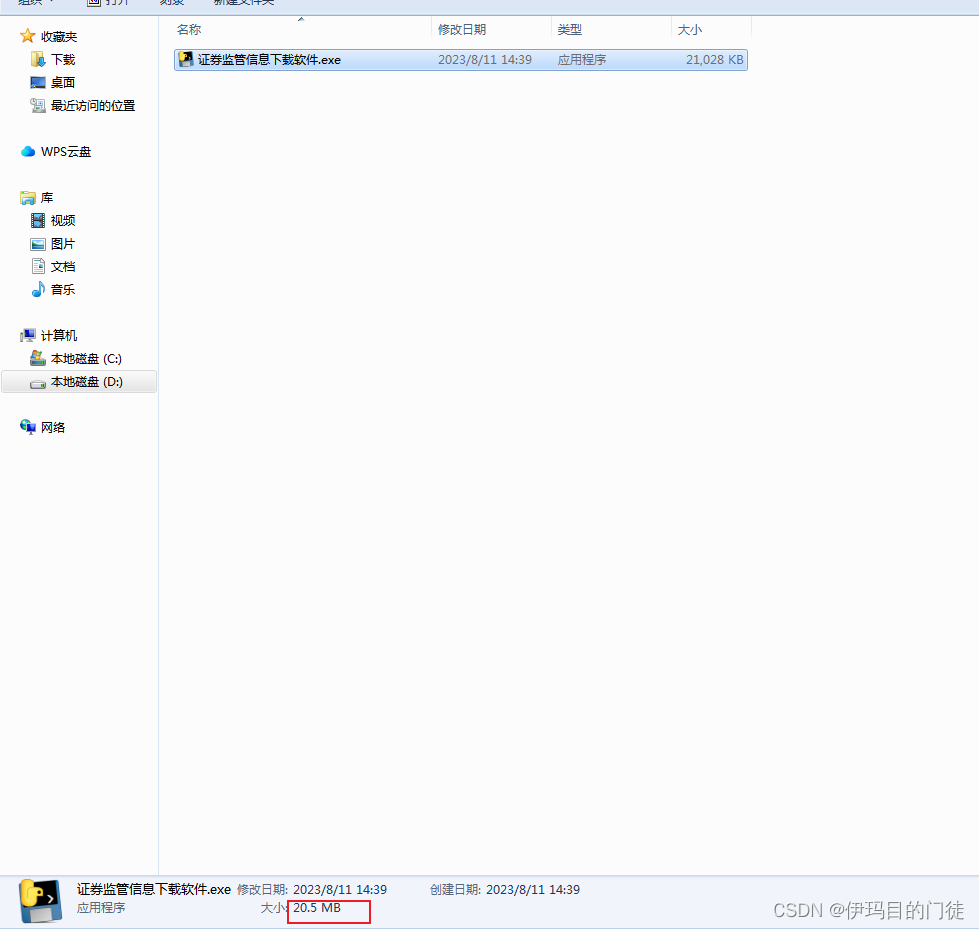

采用pycharm在虚拟环境使用pyinstaller打包python程序

一年多以前,我写过一篇博客描述了如何虚拟环境打包,这一次有所不同,直接用IDE pycharm构成虚拟环境并运行pyinstaller打包 之前的博文: 虚拟环境venu使用pyinstaller打包python程序_伊玛目的门徒的博客-CSDN博客 第一步…...

Rx.NET in Action 中文介绍 前言及序言

Rx 处理器目录 (Catalog of Rx operators) 目标可选方式Rx 处理器(Operator)创建 Observable Creating Observables直接创建 By explicit logicCreate Defer根据范围创建 By specificationRangeRepeatGenerateTimerInterval Return使用预设 Predefined primitivesThrow …...

Azure Blob存储使用

创建存储账户,性能选择标准即可,冗余选择本地冗余存储即可 容器选择类别选择专用即可 可以上传文件到blob中 打开文件可以看到文件的访问路径 4.编辑中可以修改文件 复制链接,尝试访问,可以看到没有办法访问,因为创建容器的时候选…...

mysql、redis面试题

mysql 相关 1、数据库优化查询方法 外键、索引、联合查询、选择特定字段等等2、简述mysql和redis区别 redis: 内存型非关系数据库,数据保存在内存中,速度快mysql:关系型数据库,数据保存在磁盘中,检索的话&…...

22、touchGFX学习Model-View-Presenter设计模式

touchGFX采用MVP架构,如下所示: 本文界面如下所示: 本文将实现两个操作: 1、触摸屏点击开关按键实现打印开关显示信息,模拟开关灯效果 2、板载案按键控制触摸屏LED灯的显示和隐藏 一、触摸屏点击开关按键实现打印开…...

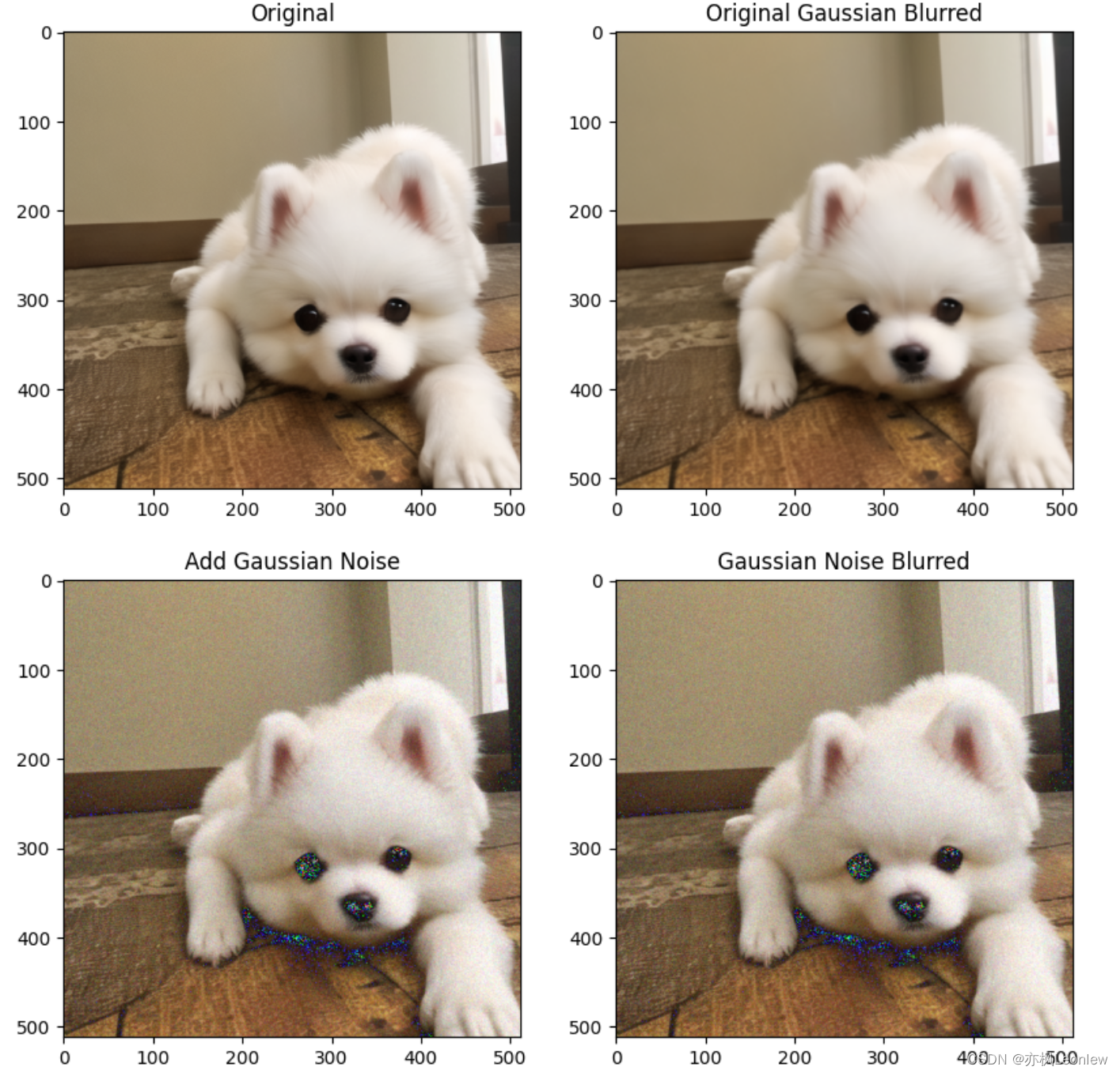

Python Opencv实践 - 图像高斯滤波(高斯模糊)

import cv2 as cv import numpy as np import matplotlib.pyplot as pltimg cv.imread("../SampleImages/pomeranian.png", cv.IMREAD_COLOR) rows,cols,channels img.shape print(rows,cols,channels)#为图像添加高斯噪声 #使用np.random.normal(loc0.0, scale1.0…...

使用 Qt 生成 Word 和 PDF 文档的详细教程

系列文章目录 文章目录 系列文章目录前言一、安装 Qt二、生成 Word 文档三、生成 PDF 文档四、运行代码并查看结果五、自定义文档内容总结 前言 Qt 是一个跨平台的应用程序开发框架,除了用于创建图形界面应用程序外,还可以用来生成 Word 和 PDF 文档。本…...

ssm+vue校园美食交流系统源码

ssmvue校园美食交流系统源码和论文026 开发工具:idea 数据库mysql5.7 数据库链接工具:navcat,小海豚等 技术:ssm 摘 要 随着现在网络的快速发展,网上管理系统也逐渐快速发展起来,网上管理模式很快融入到了许多商…...

电力系统基础知识(一)—电力系统概述

1、电压 也称作电势差或电位差,是衡量单位电荷在静电场中由于电势不同所产生的能量差的物理量。其大小等于单位正电荷因受电场力作用从A点移动到B点所做的功,电压的方向规定为从高电位指向低电位。其单位为伏特(V,简称伏),常用单位还有千伏(kV)、毫伏(mV)、微伏(uV…...

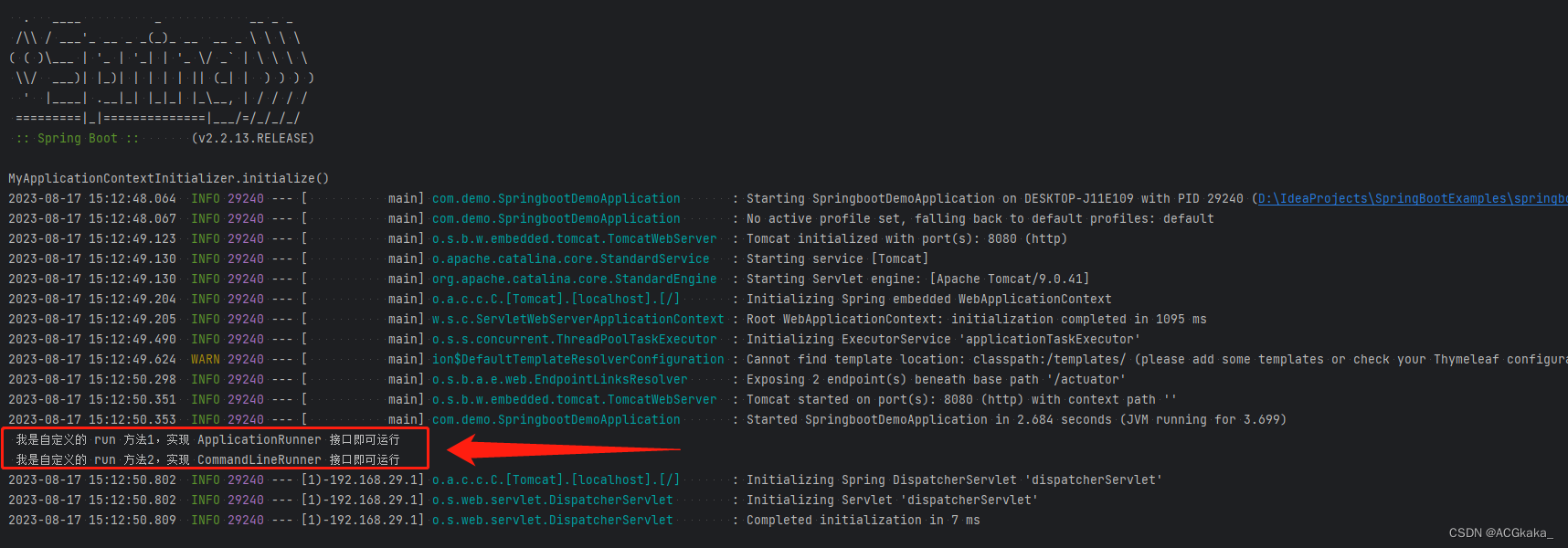

spring(15) SpringBoot启动过程

目录 一、过程简介二、过程流程图三、源码分析1、运行 SpringApplication.run() 方法2、确定应用程序类型3、加载所有的初始化器4、加载所有的监听器5、设置程序运行的主类6、开启计时器7、将 java.awt.headless 设置为 true8、获取并启用监听器9、设置应用程序参数10、准备环境…...

耕地单目标语义分割实践——Pytorch网络过程实现理解

一、卷积操作 (一)普通卷积(Convolution) (二)空洞卷积(Atrous Convolution) 根据空洞卷积的定义,显然可以意识到空洞卷积可以提取到同一输入的不同尺度下的特征图&…...

画质提升+带宽优化,小红书音视频团队端云结合超分落地实践

随着视频业务和短视频播放规模不断增长,小红书一直致力于研究:如何在保证提升用户体验质量的同时降低视频带宽成本? 在近日结束的音视频技术大会「LiveVideoStackCon 2023」上海站中,小红书音视频架构视频图像处理算法负责人剑寒向…...

【傅里叶级数与傅里叶变换】数学推导——3、[Part4:傅里叶级数的复数形式] + [Part5:从傅里叶级数推导傅里叶变换] + 总结

文章内容来自DR_CAN关于傅里叶变换的视频,本篇文章提供了一些基础知识点,比如三角函数常用的导数、三角函数换算公式等。 文章全部链接: 基础知识点 Part1:三角函数系的正交性 Part2:T2π的周期函数的傅里叶级数展开 P…...

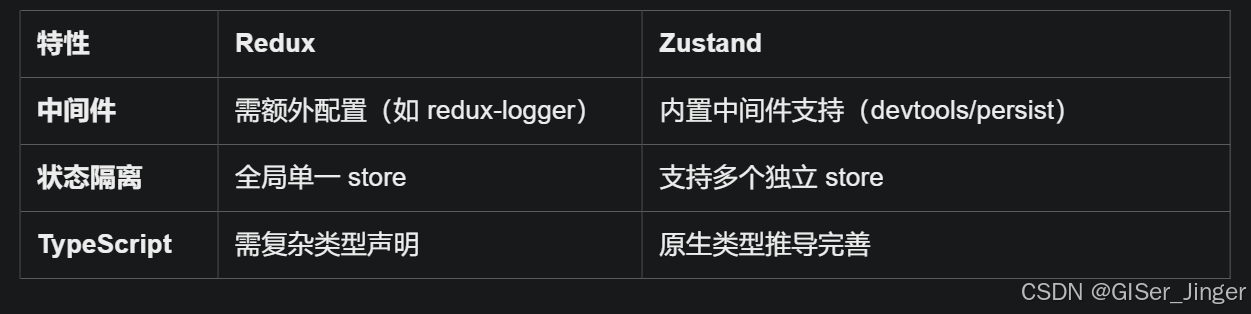

Zustand 状态管理库:极简而强大的解决方案

Zustand 是一个轻量级、快速和可扩展的状态管理库,特别适合 React 应用。它以简洁的 API 和高效的性能解决了 Redux 等状态管理方案中的繁琐问题。 核心优势对比 基本使用指南 1. 创建 Store // store.js import create from zustandconst useStore create((set)…...

TRS收益互换:跨境资本流动的金融创新工具与系统化解决方案

一、TRS收益互换的本质与业务逻辑 (一)概念解析 TRS(Total Return Swap)收益互换是一种金融衍生工具,指交易双方约定在未来一定期限内,基于特定资产或指数的表现进行现金流交换的协议。其核心特征包括&am…...

拉力测试cuda pytorch 把 4070显卡拉满

import torch import timedef stress_test_gpu(matrix_size16384, duration300):"""对GPU进行压力测试,通过持续的矩阵乘法来最大化GPU利用率参数:matrix_size: 矩阵维度大小,增大可提高计算复杂度duration: 测试持续时间(秒&…...

Python ROS2【机器人中间件框架】 简介

销量过万TEEIS德国护膝夏天用薄款 优惠券冠生园 百花蜂蜜428g 挤压瓶纯蜂蜜巨奇严选 鞋子除臭剂360ml 多芬身体磨砂膏280g健70%-75%酒精消毒棉片湿巾1418cm 80片/袋3袋大包清洁食品用消毒 优惠券AIMORNY52朵红玫瑰永生香皂花同城配送非鲜花七夕情人节生日礼物送女友 热卖妙洁棉…...

2025年渗透测试面试题总结-腾讯[实习]科恩实验室-安全工程师(题目+回答)

安全领域各种资源,学习文档,以及工具分享、前沿信息分享、POC、EXP分享。不定期分享各种好玩的项目及好用的工具,欢迎关注。 目录 腾讯[实习]科恩实验室-安全工程师 一、网络与协议 1. TCP三次握手 2. SYN扫描原理 3. HTTPS证书机制 二…...

Razor编程中@Html的方法使用大全

文章目录 1. 基础HTML辅助方法1.1 Html.ActionLink()1.2 Html.RouteLink()1.3 Html.Display() / Html.DisplayFor()1.4 Html.Editor() / Html.EditorFor()1.5 Html.Label() / Html.LabelFor()1.6 Html.TextBox() / Html.TextBoxFor() 2. 表单相关辅助方法2.1 Html.BeginForm() …...

)

【LeetCode】3309. 连接二进制表示可形成的最大数值(递归|回溯|位运算)

LeetCode 3309. 连接二进制表示可形成的最大数值(中等) 题目描述解题思路Java代码 题目描述 题目链接:LeetCode 3309. 连接二进制表示可形成的最大数值(中等) 给你一个长度为 3 的整数数组 nums。 现以某种顺序 连接…...

LCTF液晶可调谐滤波器在多光谱相机捕捉无人机目标检测中的作用

中达瑞和自2005年成立以来,一直在光谱成像领域深度钻研和发展,始终致力于研发高性能、高可靠性的光谱成像相机,为科研院校提供更优的产品和服务。在《低空背景下无人机目标的光谱特征研究及目标检测应用》这篇论文中提到中达瑞和 LCTF 作为多…...

macOS 终端智能代理检测

🧠 终端智能代理检测:自动判断是否需要设置代理访问 GitHub 在开发中,使用 GitHub 是非常常见的需求。但有时候我们会发现某些命令失败、插件无法更新,例如: fatal: unable to access https://github.com/ohmyzsh/oh…...

【iOS】 Block再学习

iOS Block再学习 文章目录 iOS Block再学习前言Block的三种类型__ NSGlobalBlock____ NSMallocBlock____ NSStackBlock__小结 Block底层分析Block的结构捕获自由变量捕获全局(静态)变量捕获静态变量__block修饰符forwarding指针 Block的copy时机block作为函数返回值将block赋给…...