Training RandLA-Net on a custom dataset

https://arxiv.org/abs/1911.11236

Setting up the training environment

- Clone the repository: git clone https://github.com/QingyongHu/RandLA-Net.git
- Set up the Python environment. I used Python 3.9:

    conda create -n randlanet python=3.9
    source activate randlanet
    pip install tensorflow==2.15.0 -i https://pypi.tuna.tsinghua.edu.cn/simple --timeout=120
    pip install -r helper_requirements.txt -i https://pypi.tuna.tsinghua.edu.cn/simple
    pip install Cython -i https://pypi.tuna.tsinghua.edu.cn/simple
    conda install -c conda-forge scikit-learn

- cd utils/cpp_wrappers/cpp_subsampling/ and run python setup.py build_ext --inplace. This produces grid_subsampling.cpython-39-x86_64-linux-gnu.so.
- cd utils/nearest_neighbors and run python setup.py build_ext --inplace. This produces nearest_neighbors.cpython-39-x86_64-linux-gnu.so.
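DP.grid_sub_sampling, which make_train_dataset.py calls further down, is a thin wrapper around the grid_subsampling extension compiled above. To show what that step does, here is a rough pure-NumPy approximation. It is not the library's code, and `grid_subsample` is a hypothetical name. Every occupied voxel of edge `grid_size` becomes one point at the voxel's barycenter, labeled with the voxel's most frequent class. As far as I know, this matches the behavior of the KPConv-derived C++ code, which is much faster and also averages the colors.

```python
import numpy as np


def grid_subsample(points, labels, grid_size):
    """Voxel-grid subsampling sketch: one output point per occupied voxel.

    Output point = mean of the input points in the voxel;
    output label = most frequent input label in the voxel.
    """
    # Integer voxel coordinates of every point
    voxels = np.floor(points / grid_size).astype(np.int64)
    # inverse[i] is the index of the voxel that point i falls into
    _, inverse, counts = np.unique(voxels, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)  # some NumPy 2.x releases return a 2-D inverse for axis=0
    n_vox = counts.shape[0]

    # Barycenter of each voxel
    sums = np.zeros((n_vox, points.shape[1]), dtype=np.float64)
    np.add.at(sums, inverse, points)
    sub_points = (sums / counts[:, None]).astype(np.float32)

    # Majority label per voxel from a (voxel, label) histogram
    n_labels = int(labels.max()) + 1
    hist = np.zeros((n_vox, n_labels), dtype=np.int64)
    np.add.at(hist, (inverse, labels.astype(np.int64)), 1)
    sub_labels = hist.argmax(axis=1).astype(np.int32)
    return sub_points, sub_labels
```

If the cloud is in meters, `grid_subsample(xyz, labels, 0.01)` keeps at most one point per 1 cm cube. This is why grid_size / sub_grid_size directly controls how dense the training input is.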

Preparing the dataset

- I used a dataset annotated in CloudCompare. Tutorials on the annotation workflow are easy to find online. The script below reads each exported .txt file as one point per line: x y z r g b label.
- Create make_train_dataset.py to generate the training data. The two counts it prints at the end are the per-class point counts that go into get_class_weights in the next step:

    # When I wrote this code, only God and I knew what it did.
    # Now only God knows.
    # @File   : make_train_dataset.py
    # @Author : J.
    # @desc   : Generate the RandLA-Net training dataset
    from sklearn.neighbors import KDTree
    from os.path import join, exists, dirname, abspath
    import numpy as np
    import os, glob, pickle
    import sys

    BASE_DIR = dirname(abspath(__file__))
    ROOT_DIR = dirname(BASE_DIR)
    sys.path.append(BASE_DIR)
    sys.path.append(ROOT_DIR)
    from helper_ply import write_ply
    from helper_tool import DataProcessing as DP

    grid_size = 0.01
    dataset_path = './data/sample/original_data'
    original_pc_folder = join(dirname(dataset_path), 'original_ply')
    sub_pc_folder = join(dirname(dataset_path), 'input_{:.3f}'.format(grid_size))
    os.makedirs(original_pc_folder, exist_ok=True)
    os.makedirs(sub_pc_folder, exist_ok=True)

    railway_cnt = 0
    background_cnt = 0
    for pc_path in glob.glob(join(dataset_path, '*.txt')):
        file_name = os.path.basename(pc_path)[:-4]
        if exists(join(sub_pc_folder, file_name + '_KDTree.pkl')):
            continue  # already processed
        pc = np.loadtxt(pc_path)  # columns: x y z r g b label
        labels = pc[:, -1].astype(np.uint8)

        # I labeled 2 classes (including background): count the points of each class
        values, counts = np.unique(labels, return_counts=True)
        for value, count in zip(values, counts):
            if value == 0:
                background_cnt += count
            elif value == 1:
                railway_cnt += count

        full_ply_path = join(original_pc_folder, file_name + '.ply')
        # Subsample to save space (this copy is what gets stored in original_ply)
        sub_points, sub_colors, sub_labels = DP.grid_sub_sampling(pc[:, :3].astype(np.float32),
                                                                  pc[:, 3:6].astype(np.uint8), labels, 0.01)
        sub_labels = np.squeeze(sub_labels)
        write_ply(full_ply_path, (sub_points, sub_colors, sub_labels),
                  ['x', 'y', 'z', 'red', 'green', 'blue', 'class'])

        # Save the sub-sampled cloud and its KDTree
        sub_xyz, sub_colors, sub_labels = DP.grid_sub_sampling(sub_points, sub_colors, sub_labels, grid_size)
        sub_colors = sub_colors / 255.0
        sub_labels = np.squeeze(sub_labels)
        sub_ply_file = join(sub_pc_folder, file_name + '.ply')
        write_ply(sub_ply_file, [sub_xyz, sub_colors, sub_labels],
                  ['x', 'y', 'z', 'red', 'green', 'blue', 'class'])

        search_tree = KDTree(sub_xyz, leaf_size=50)
        kd_tree_file = join(sub_pc_folder, file_name + '_KDTree.pkl')
        with open(kd_tree_file, 'wb') as f:
            pickle.dump(search_tree, f)

        # Reprojection indices: the nearest sub-sampled point for every ORIGINAL point,
        # so proj_idx has the same length as the `labels` array saved with it
        proj_idx = np.squeeze(search_tree.query(pc[:, :3], return_distance=False))
        proj_idx = proj_idx.astype(np.int32)
        proj_save = join(sub_pc_folder, file_name + '_proj.pkl')
        with open(proj_save, 'wb') as f:
            pickle.dump([proj_idx, labels], f)

    # Print the number of points in each class
    print("----> background_cnt: " + str(background_cnt))
    print("----> railway_cnt: " + str(railway_cnt))

- Modify helper_tool.py:

    # Original imports:
    # import cpp_wrappers.cpp_subsampling.grid_subsampling as cpp_subsampling
    # import nearest_neighbors.lib.python.nearest_neighbors as nearest_neighbors
    # Change them to:
    import utils.cpp_wrappers.cpp_subsampling.grid_subsampling as cpp_subsampling
    import utils.nearest_neighbors.nearest_neighbors as nearest_neighbors

    ...

    # Copy one of the existing config classes and give it your own name
    class ConfigSample:
        k_n = 16  # KNN
        num_layers = 5  # Number of layers
        num_points = 16000  # Number of input points
        # Includes the background class; to exclude background, change ignored_labels
        num_classes = 2  # Number of valid classes
        sub_grid_size = 0.01  # preprocess_parameter (TODO: must match grid_size in make_train_dataset.py)

        batch_size = 4  # batch_size during training
        val_batch_size = 2  # batch_size during validation and test
        train_steps = 500  # Number of steps per epoch
        val_steps = 3  # Number of validation steps per epoch

        sub_sampling_ratio = [4, 4, 4, 4, 2]  # sampling ratio of random sampling at each layer
        d_out = [16, 64, 128, 256, 512]  # feature dimension

        noise_init = 3.5  # noise initial parameter
        max_epoch = 100  # maximum epoch during training
        learning_rate = 1e-2  # initial learning rate
        lr_decays = {i: 0.95 for i in range(0, 500)}  # decay rate of learning rate

        train_sum_dir = 'train_log'
        saving = True
        saving_path = None

        augment_scale_anisotropic = True
        augment_symmetries = [True, False, False]
        augment_rotation = 'vertical'
        augment_scale_min = 0.8
        augment_scale_max = 1.2
        augment_noise = 0.001
        augment_occlusion = 'none'
        augment_color = 0.8

    ...

    class DataProcessing:
        ...

        @staticmethod
        def get_class_weights(dataset_name):
            # pre-calculate the number of points in each category
            num_per_class = []
            if dataset_name == 'S3DIS':
                num_per_class = np.array([3370714, 2856755, 4919229, 318158, 375640, 478001, 974733,
                                          650464, 791496, 88727, 1284130, 229758, 2272837], dtype=np.int32)
            elif dataset_name == 'Semantic3D':
                num_per_class = np.array([5181602, 5012952, 6830086, 1311528, 10476365, 946982, 334860, 269353],
                                         dtype=np.int32)
            elif dataset_name == 'SemanticKITTI':
                num_per_class = np.array([55437630, 320797, 541736, 2578735, 3274484, 552662, 184064, 78858,
                                          240942562, 17294618, 170599734, 6369672, 230413074, 101130274, 476491114,
                                          9833174, 129609852, 4506626, 1168181])
            # TODO: add a branch for your own dataset
            elif dataset_name == 'Sample':
                # number of points in each class (printed by make_train_dataset.py)
                num_per_class = np.array([4401119, 148313])
            weight = num_per_class / float(sum(num_per_class))
            ce_label_weight = 1 / (weight + 0.02)
            return np.expand_dims(ce_label_weight, axis=0)

        ...
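Two numbers in this configuration are easy to check by hand. First, get_class_weights turns per-class point counts into inverse-frequency weights, 1 / (n_c / Σn + 0.02). To my understanding of RandLANet.py, these weights scale the cross-entropy loss so that the rare class is not drowned out. Second, each encoder layer keeps `n // sub_sampling_ratio[i]` of the points it receives, as in tf_map in main_Sample.py. The helper names below are hypothetical. The formulas are copied from the code in this post.

```python
import numpy as np


def class_weights(num_per_class, eps=0.02):
    """Same formula as DataProcessing.get_class_weights: 1 / (class frequency + eps)."""
    num_per_class = np.asarray(num_per_class, dtype=np.float64)
    weight = num_per_class / num_per_class.sum()
    return 1.0 / (weight + eps)


def points_per_layer(num_points, ratios):
    """Point counts through the encoder, mirroring `n // sub_sampling_ratio[i]` in tf_map."""
    sizes = [num_points]
    for r in ratios:
        sizes.append(sizes[-1] // r)
    return sizes


if __name__ == "__main__":
    print(class_weights([4401119, 148313]))          # approx. [1.013, 19.011]
    print(points_per_layer(16000, [4, 4, 4, 4, 2]))  # [16000, 4000, 1000, 250, 62, 31]
```

With the counts from make_train_dataset.py, each railway point weighs about 18.8 times as much as a background point in the loss. The last encoder layer works on only 31 points per patch. If you lower num_points substantially, check that the deeper layers still get a sensible number of points.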

Training

- Create main_Sample.py (copied from main_S3DIS.py):

    from os.path import join, exists
    from RandLANet import Network
    from tester_Railway import ModelTester  # the author's tester module (not shown in this post)
    from helper_ply import read_ply
    from helper_tool import Plot
    from helper_tool import DataProcessing as DP
    from helper_tool import ConfigSample as cfg  # the config class added to helper_tool.py above
    import tensorflow.compat.v1 as tf

    tf.disable_v2_behavior()

    import numpy as np
    import pickle, argparse, os


    class Railway:
        def __init__(self):
            self.name = 'Sample'  # must match the branch added to get_class_weights
            # An absolute path is the safest choice
            self.path = '/home/ab/workspace/train/randla-net-tf2-main/data/sample'
            self.label_to_names = {0: 'background', 1: 'sample'}
            self.num_classes = len(self.label_to_names)
            self.label_values = np.sort([k for k, v in self.label_to_names.items()])
            self.label_to_idx = {l: i for i, l in enumerate(self.label_values)}
            # To ignore the background class, use np.sort([0])
            # self.ignored_labels = np.sort([0])  # TODO
            self.ignored_labels = np.sort([])  # TODO

            self.original_folder = join(self.path, 'original_data')
            self.full_pc_folder = join(self.path, 'original_ply')
            self.sub_pc_folder = join(self.path, 'input_{:.3f}'.format(cfg.sub_grid_size))

            # Training, validation and test clouds all live in original_data, so split them by file name
            self.val_split = ["20240430205457370", "20240430205527591"]
            self.test_split = ["20240430205530638"]

            # Initial training-validation-testing files
            self.train_files = []
            self.val_files = []
            self.test_files = []
            cloud_names = [file_name[:-4] for file_name in os.listdir(self.original_folder) if file_name[-4:] == '.txt']
            # Assign each cloud to the training, validation or test set by file name
            for pc_name in cloud_names:
                pc_file = join(self.sub_pc_folder, pc_name + '.ply')
                if pc_name in self.val_split:
                    self.val_files.append(pc_file)
                elif pc_name in self.test_split:
                    self.test_files.append(pc_file)
                else:
                    self.train_files.append(pc_file)

            # Initiate containers
            self.val_proj = []
            self.val_labels = []
            self.test_proj = []
            self.test_labels = []

            self.possibility = {}
            self.min_possibility = {}
            self.class_weight = {}
            self.input_trees = {'training': [], 'validation': [], 'test': []}
            self.input_colors = {'training': [], 'validation': [], 'test': []}
            self.input_labels = {'training': [], 'validation': []}

            # Ascii files dict for testing
            self.ascii_files = {'20240430205530638.ply': '20240430205530638-reduced.labels'}
            self.load_sub_sampled_clouds(cfg.sub_grid_size)

        def load_sub_sampled_clouds(self, sub_grid_size):
            tree_path = join(self.path, 'input_{:.3f}'.format(sub_grid_size))
            files = np.hstack((self.train_files, self.val_files, self.test_files))

            for i, file_path in enumerate(files):
                cloud_name = file_path.split('/')[-1][:-4]
                print('Load_pc_' + str(i) + ': ' + cloud_name)
                if file_path in self.val_files:
                    cloud_split = 'validation'
                elif file_path in self.train_files:
                    cloud_split = 'training'
                else:
                    cloud_split = 'test'

                # Name of the input files
                kd_tree_file = join(tree_path, '{:s}_KDTree.pkl'.format(cloud_name))
                sub_ply_file = join(tree_path, '{:s}.ply'.format(cloud_name))

                # read ply with data
                data = read_ply(sub_ply_file)
                sub_colors = np.vstack((data['red'], data['green'], data['blue'])).T
                if cloud_split == 'test':
                    sub_labels = None
                else:
                    sub_labels = data['class']

                # Read pkl with search tree
                with open(kd_tree_file, 'rb') as f:
                    search_tree = pickle.load(f)

                self.input_trees[cloud_split] += [search_tree]
                self.input_colors[cloud_split] += [sub_colors]
                if cloud_split in ['training', 'validation']:
                    self.input_labels[cloud_split] += [sub_labels]

            # Get validation and test re_projection indices
            print('\nPreparing reprojection indices for validation and test')

            for i, file_path in enumerate(files):
                # get cloud name and split
                cloud_name = file_path.split('/')[-1][:-4]

                # Validation projection and labels
                if file_path in self.val_files:
                    proj_file = join(tree_path, '{:s}_proj.pkl'.format(cloud_name))
                    with open(proj_file, 'rb') as f:
                        proj_idx, labels = pickle.load(f)
                    self.val_proj += [proj_idx]
                    self.val_labels += [labels]

                # Test projection
                if file_path in self.test_files:
                    proj_file = join(tree_path, '{:s}_proj.pkl'.format(cloud_name))
                    with open(proj_file, 'rb') as f:
                        proj_idx, labels = pickle.load(f)
                    self.test_proj += [proj_idx]
                    self.test_labels += [labels]
            print('finished')
            return

        # Generate the input data flow
        def get_batch_gen(self, split):
            if split == 'training':
                num_per_epoch = cfg.train_steps * cfg.batch_size
            elif split == 'validation':
                num_per_epoch = cfg.val_steps * cfg.val_batch_size
            elif split == 'test':
                num_per_epoch = cfg.val_steps * cfg.val_batch_size

            # Reset possibility
            self.possibility[split] = []
            self.min_possibility[split] = []
            self.class_weight[split] = []

            # Random initialize
            for i, tree in enumerate(self.input_trees[split]):
                self.possibility[split] += [np.random.rand(tree.data.shape[0]) * 1e-3]
                self.min_possibility[split] += [float(np.min(self.possibility[split][-1]))]

            if split != 'test':
                _, num_class_total = np.unique(np.hstack(self.input_labels[split]), return_counts=True)
                self.class_weight[split] += [np.squeeze([num_class_total / np.sum(num_class_total)], axis=0)]

            def spatially_regular_gen():
                # Generator loop
                for i in range(num_per_epoch):
                    # Choose the cloud with the lowest probability
                    cloud_idx = int(np.argmin(self.min_possibility[split]))
                    # choose the point with the minimum of possibility in the cloud as query point
                    point_ind = np.argmin(self.possibility[split][cloud_idx])
                    # Get all points within the cloud from tree structure
                    points = np.array(self.input_trees[split][cloud_idx].data, copy=False)
                    # Center point of input region
                    center_point = points[point_ind, :].reshape(1, -1)
                    # Add noise to the center point
                    noise = np.random.normal(scale=cfg.noise_init / 10, size=center_point.shape)
                    pick_point = center_point + noise.astype(center_point.dtype)
                    query_idx = self.input_trees[split][cloud_idx].query(pick_point, k=cfg.num_points)[1][0]
                    # Shuffle index
                    query_idx = DP.shuffle_idx(query_idx)
                    # Get corresponding points and colors based on the index
                    queried_pc_xyz = points[query_idx]
                    queried_pc_xyz[:, 0:2] = queried_pc_xyz[:, 0:2] - pick_point[:, 0:2]
                    queried_pc_colors = self.input_colors[split][cloud_idx][query_idx]
                    if split == 'test':
                        queried_pc_labels = np.zeros(queried_pc_xyz.shape[0])
                        queried_pt_weight = 1
                    else:
                        queried_pc_labels = self.input_labels[split][cloud_idx][query_idx]
                        queried_pc_labels = np.array([self.label_to_idx[l] for l in queried_pc_labels])
                        queried_pt_weight = np.array([self.class_weight[split][0][n] for n in queried_pc_labels])

                    # Update the possibility of the selected points
                    dists = np.sum(np.square((points[query_idx] - pick_point).astype(np.float32)), axis=1)
                    delta = np.square(1 - dists / np.max(dists)) * queried_pt_weight
                    self.possibility[split][cloud_idx][query_idx] += delta
                    self.min_possibility[split][cloud_idx] = float(np.min(self.possibility[split][cloud_idx]))

                    yield (queried_pc_xyz,
                           queried_pc_colors.astype(np.float32),
                           queried_pc_labels,
                           query_idx.astype(np.int32),
                           np.array([cloud_idx], dtype=np.int32))

            gen_func = spatially_regular_gen
            gen_types = (tf.float32, tf.float32, tf.int32, tf.int32, tf.int32)
            gen_shapes = ([None, 3], [None, 3], [None], [None], [None])
            return gen_func, gen_types, gen_shapes

        def get_tf_mapping(self):
            # Collect flat inputs
            def tf_map(batch_xyz, batch_features, batch_labels, batch_pc_idx, batch_cloud_idx):
                batch_features = tf.map_fn(self.tf_augment_input, [batch_xyz, batch_features], dtype=tf.float32)
                input_points = []
                input_neighbors = []
                input_pools = []
                input_up_samples = []

                for i in range(cfg.num_layers):
                    neigh_idx = tf.py_func(DP.knn_search, [batch_xyz, batch_xyz, cfg.k_n], tf.int32)
                    sub_points = batch_xyz[:, :tf.shape(batch_xyz)[1] // cfg.sub_sampling_ratio[i], :]
                    pool_i = neigh_idx[:, :tf.shape(batch_xyz)[1] // cfg.sub_sampling_ratio[i], :]
                    up_i = tf.py_func(DP.knn_search, [sub_points, batch_xyz, 1], tf.int32)
                    input_points.append(batch_xyz)
                    input_neighbors.append(neigh_idx)
                    input_pools.append(pool_i)
                    input_up_samples.append(up_i)
                    batch_xyz = sub_points

                input_list = input_points + input_neighbors + input_pools + input_up_samples
                input_list += [batch_features, batch_labels, batch_pc_idx, batch_cloud_idx]

                return input_list

            return tf_map

        # data augmentation
        @staticmethod
        def tf_augment_input(inputs):
            xyz = inputs[0]
            features = inputs[1]
            theta = tf.random_uniform((1,), minval=0, maxval=2 * np.pi)
            # Rotation matrices (rotation about the vertical z axis)
            c, s = tf.cos(theta), tf.sin(theta)
            cs0 = tf.zeros_like(c)
            cs1 = tf.ones_like(c)
            R = tf.stack([c, -s, cs0, s, c, cs0, cs0, cs0, cs1], axis=1)
            stacked_rots = tf.reshape(R, (3, 3))

            # Apply rotations
            transformed_xyz = tf.reshape(tf.matmul(xyz, stacked_rots), [-1, 3])
            # Choose random scales for each example
            min_s = cfg.augment_scale_min
            max_s = cfg.augment_scale_max
            if cfg.augment_scale_anisotropic:
                s = tf.random_uniform((1, 3), minval=min_s, maxval=max_s)
            else:
                s = tf.random_uniform((1, 1), minval=min_s, maxval=max_s)

            symmetries = []
            for i in range(3):
                if cfg.augment_symmetries[i]:
                    symmetries.append(tf.round(tf.random_uniform((1, 1))) * 2 - 1)
                else:
                    symmetries.append(tf.ones([1, 1], dtype=tf.float32))
            s *= tf.concat(symmetries, 1)

            # Create N x 3 vector of scales to multiply with stacked_points
            stacked_scales = tf.tile(s, [tf.shape(transformed_xyz)[0], 1])

            # Apply scales
            transformed_xyz = transformed_xyz * stacked_scales

            noise = tf.random_normal(tf.shape(transformed_xyz), stddev=cfg.augment_noise)
            transformed_xyz = transformed_xyz + noise
            # Colors are not used as features here: only the augmented xyz is returned
            # rgb = features[:, :3]
            # stacked_features = tf.concat([transformed_xyz, rgb], axis=-1)
            return transformed_xyz

        def init_input_pipeline(self):
            print('Initiating input pipelines')
            cfg.ignored_label_inds = [self.label_to_idx[ign_label] for ign_label in self.ignored_labels]
            gen_function, gen_types, gen_shapes = self.get_batch_gen('training')
            gen_function_val, _, _ = self.get_batch_gen('validation')
            gen_function_test, _, _ = self.get_batch_gen('test')
            self.train_data = tf.data.Dataset.from_generator(gen_function, gen_types, gen_shapes)
            self.val_data = tf.data.Dataset.from_generator(gen_function_val, gen_types, gen_shapes)
            self.test_data = tf.data.Dataset.from_generator(gen_function_test, gen_types, gen_shapes)

            self.batch_train_data = self.train_data.batch(cfg.batch_size)
            self.batch_val_data = self.val_data.batch(cfg.val_batch_size)
            self.batch_test_data = self.test_data.batch(cfg.val_batch_size)
            map_func = self.get_tf_mapping()

            self.batch_train_data = self.batch_train_data.map(map_func=map_func)
            self.batch_val_data = self.batch_val_data.map(map_func=map_func)
            self.batch_test_data = self.batch_test_data.map(map_func=map_func)

            self.batch_train_data = self.batch_train_data.prefetch(cfg.batch_size)
            self.batch_val_data = self.batch_val_data.prefetch(cfg.val_batch_size)
            self.batch_test_data = self.batch_test_data.prefetch(cfg.val_batch_size)

            iterator = tf.data.Iterator.from_structure(self.batch_train_data.output_types,
                                                       self.batch_train_data.output_shapes)
            self.flat_inputs = iterator.get_next()
            self.train_init_op = iterator.make_initializer(self.batch_train_data)
            self.val_init_op = iterator.make_initializer(self.batch_val_data)
            self.test_init_op = iterator.make_initializer(self.batch_test_data)


    if __name__ == '__main__':
        parser = argparse.ArgumentParser()
        parser.add_argument('--gpu', type=int, default=0, help='the GPU id to use [default: 0]')
        parser.add_argument('--mode', type=str, default='train', help='options: train, test, vis')
        parser.add_argument('--model_path', type=str, default='None', help='pretrained model path')
        FLAGS = parser.parse_args()

        GPU_ID = FLAGS.gpu
        os.environ["CUDA_DEVICE_ORDER"] = "PCI_BUS_ID"
        os.environ['CUDA_VISIBLE_DEVICES'] = str(GPU_ID)
        os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'
        Mode = FLAGS.mode

        dataset = Railway()
        dataset.init_input_pipeline()

        if Mode == 'train':
            model = Network(dataset, cfg)
            model.train(dataset)
        elif Mode == 'test':
            cfg.saving = False
            model = Network(dataset, cfg)
            if FLAGS.model_path != 'None':
                chosen_snap = FLAGS.model_path
            else:
                # Use the latest snapshot of the latest training log
                logs = np.sort([os.path.join('results', f) for f in os.listdir('results') if f.startswith('Log')])
                chosen_folder = logs[-1]
                snap_path = join(chosen_folder, 'snapshots')
                snap_steps = [int(f[:-5].split('-')[-1]) for f in os.listdir(snap_path) if f[-5:] == '.meta']
                chosen_step = np.sort(snap_steps)[-1]
                chosen_snap = os.path.join(snap_path, 'snap-{:d}'.format(chosen_step))
            print(".............. chosen_snap:" + chosen_snap)
            tester = ModelTester(model, dataset, restore_snap=chosen_snap)
            tester.test(model, dataset)
        else:
            ##################
            # Visualize data #
            ##################
            with tf.Session() as sess:
                sess.run(tf.global_variables_initializer())
                sess.run(dataset.train_init_op)
                while True:
                    flat_inputs = sess.run(dataset.flat_inputs)
                    pc_xyz = flat_inputs[0]
                    sub_pc_xyz = flat_inputs[1]
                    labels = flat_inputs[21]  # 4 * num_layers tensors, then features, then labels
                    Plot.draw_pc_sem_ins(pc_xyz[0, :, :], labels[0, :])
                    Plot.draw_pc_sem_ins(sub_pc_xyz[0, :, :], labels[0, 0:np.shape(sub_pc_xyz)[1]])

- Start training:

python main_Sample.py --mode train --gpu 0
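Training does not iterate over whole clouds. spatially_regular_gen in main_Sample.py keeps a "possibility" score for every sub-sampled point and always starts the next patch at the point with the lowest score. It then raises the scores of the cfg.num_points neighbors it just used, most strongly near the patch center. The sketch below reproduces that loop for a single cloud with NumPy and scikit-learn's KDTree. It simplifies the original: no noise on the center point, no class-weight factor, and only one cloud. `possibility_sampling` is a hypothetical name.

```python
import numpy as np
from sklearn.neighbors import KDTree


def possibility_sampling(points, num_points, steps, seed=0):
    """Simplified version of the patch selection in spatially_regular_gen.

    Each step takes the least-visited point as the patch center, gathers its
    `num_points` nearest neighbours, and increases their possibility by
    (1 - d / d_max)^2, so points near the center are "used up" fastest.
    """
    rng = np.random.default_rng(seed)
    tree = KDTree(points)
    # Small random initial scores, as in get_batch_gen
    possibility = rng.random(points.shape[0]) * 1e-3
    patches = []
    for _ in range(steps):
        center = points[np.argmin(possibility)].reshape(1, -1)
        query_idx = tree.query(center, k=num_points)[1][0]
        # Squared distances from the center to every point in the patch
        dists = np.sum(np.square(points[query_idx] - center), axis=1)
        delta = np.square(1 - dists / np.max(dists))
        possibility[query_idx] += delta
        patches.append(query_idx)
    return possibility, patches
```

Each new center moves to the least-visited region, so one epoch of train_steps × batch_size patches spreads over the whole cloud instead of repeatedly resampling the same dense area.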

References

- https://github.com/QingyongHu/RandLA-Net

- https://blog.csdn.net/weixin_40653140/article/details/130285289

相关文章:

RandLA-Net 训练自定义数据集

https://arxiv.org/abs/1911.11236 搭建训练环境 git clone https://github.com/QingyongHu/RandLA-Net.git搭建 python 环境 , 这里我用的 3.9conda create -n randlanet python3.9 source activate randlanet pip install tensorflow2.15.0 -i https://pypi.tuna.tsinghua.e…...

洛谷 B3642:二叉树的遍历 ← 结构体方法 链式前向星方法

【题目来源】https://www.luogu.com.cn/problem/B3642【题目描述】 有一个 n(n≤10^6) 个结点的二叉树。给出每个结点的两个子结点编号(均不超过 n),建立一棵二叉树(根结点的编号为 1),如果是叶子结点&…...

飞腾+FPGA多U多串全国产工控主机

飞腾多U多串工控主机基于国产化飞腾高性能8核D2000处理器平台的国产自主可控解决方案,搭载国产化固件,支持UOS、银河麒麟等国产操作系统,满足金融系统安全运算需求,实现从硬件、操作系统到应用的完全国产、自主、可控,是国产金融信…...

uni-app实现页面通信EventChannel

uni-app实现页面通信EventChannel 之前使用了EventBus的方法实现不同页面组件之间的一个通信,在uni-app中,我们也可以使用uni-app API —— uni.navigateTo来实现页面间的通信。注:2.8.9 支持页面间事件通信通道。 1. 向被打开页面传送数据…...

等保系列之——网络安全等级保护测评工作流程及工作内容

#等保测评##网络安全# 一、网络安全等级保护测评过程概述 网络安全等级保护测评工作过程包括四个基本测评活动:测评准备活动、方案编制活动、现场测评活动、报告编制活动。而测评相关方之间的沟通与洽谈应贯穿整个测评过程。每一项活动有一定的工作任务。如下表。…...

自然语言处理中的BERT模型深度剖析

自然语言处理(NLP)是人工智能领域的一个重要分支,它致力于让计算机理解和生成人类语言。近年来,BERT(Bidirectional Encoder Representations from Transformers)模型的出现,极大地推动了NLP领域…...

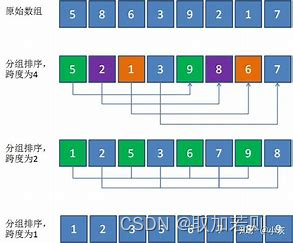

数据结构:希尔排序

文章目录 前言一、排序的概念及其运用二、常见排序算法的实现 1.插入排序2.希尔排序总结 前言 排序在生活中有许多实际的运用。以下是一些例子: 购物清单:当我们去超市购物时,通常会列出一份购物清单。将购物清单按照需要购买的顺序排序&…...

unicloud 云对象

背景和优势 20年前,restful接口开发开始流行,服务器编写接口,客户端调用接口,传输json。 现在,替代restful的新模式来了。 云对象,服务器编写API,客户端调用API,不再开发传输json…...

【车载开发系列】常用专业术语汇总

【车载开发系列】常用专业词汇汇总 英语全称说明详细HILSHardware In the Loop Simulation车硬件仿真模拟器精密仪器,价格昂贵,机能测试时一定要小心使用。使用简易HILS不能模拟电气故障。要模拟电气故障需要外接故障BoxLSBLeast Significant Bit单位精…...

如何实现Docker容器的自动化升级:不再为手动更新烦恼!

要升级 Docker 容器,你可以按照以下步骤操作,这些步骤涵盖了从拉取最新镜像到重启容器的整个过程。 步骤一:拉取最新的镜像 首先,确保你有最新版本的镜像。例如,如果你要升级一个 Spring Boot 应用的镜像,…...

SwiftUI 5.0(iOS 17)进一步定制 TipKit 外观让撸码如虎添翼

概览 在之前 SwiftUI 5.0(iOS 17)TipKit 让用户更懂你的 App 这篇博文里,我们已经初步介绍过了 TipKit 的基本知识。 现在,让我们来看看如何进一步利用 SwiftUI 对 TipKit 提供的细粒度外观定制技巧,让 Tip 更加“明眸…...

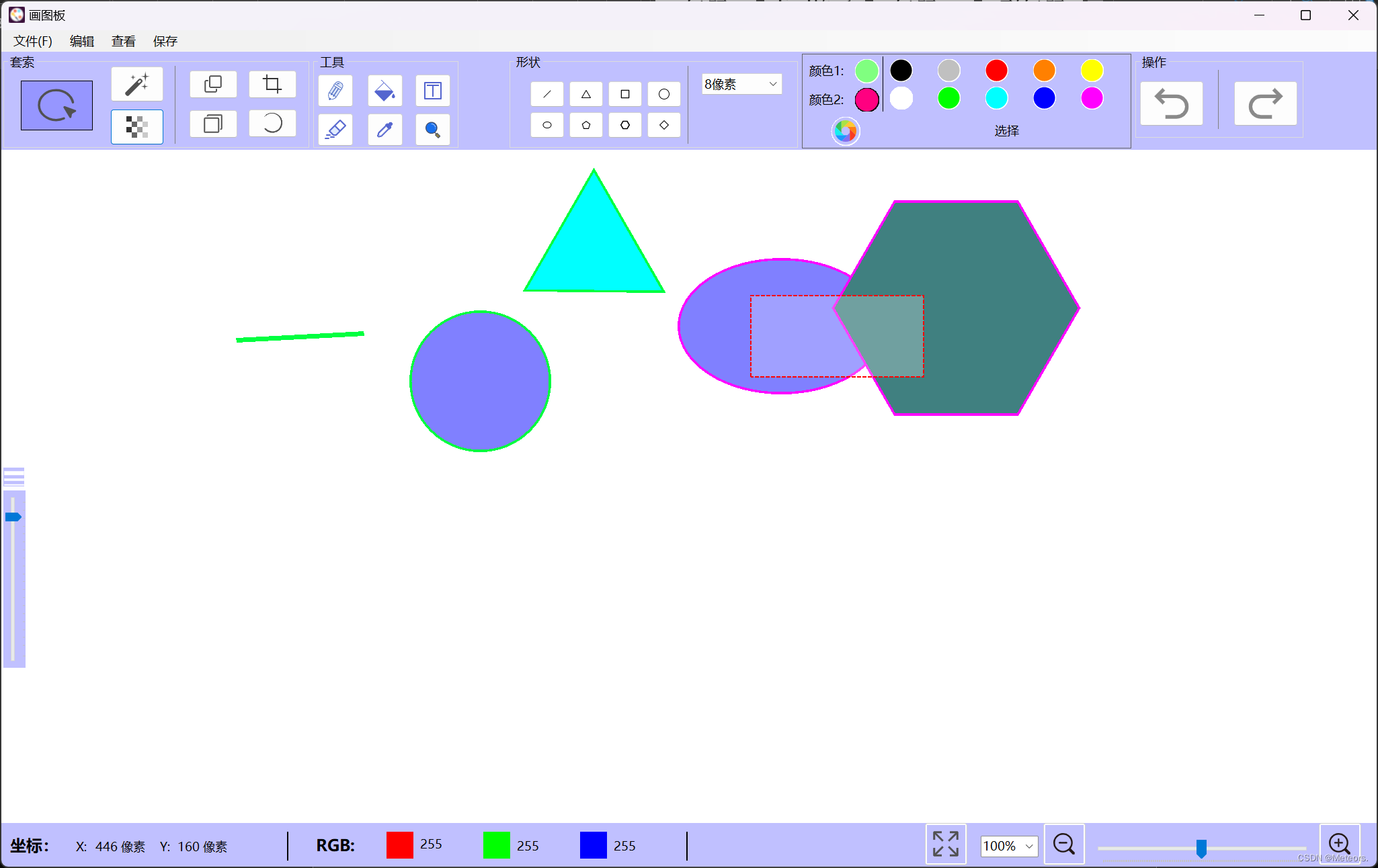

使用C#实现VS窗体应用——画图板

✅作者简介:大家好,我是 Meteors., 向往着更加简洁高效的代码写法与编程方式,持续分享Java技术内容。🍎个人主页:Meteors.的博客💞当前专栏:小项目✨特色专栏: 知识分享🥭…...

)

flutter 自定义本地化-GlobalMaterialLocalizations(重写本地化日期转换)

1. 创建自定义 GlobalMaterialLocalizations import package:flutter_localizations/flutter_localizations.dart; import package:kittlenapp/utils/base/date_time_util.dart;///[auth] kittlen ///[createTime] 2024-05-31 11:40 ///[description]class MyMaterialLocaliza…...

HTTPS 原理技术

HTTPS原理技术 背景简介原理总结 背景 随着年龄的增长,很多曾经烂熟于心的技术原理已被岁月摩擦得愈发模糊起来,技术出身的人总是很难放下一些执念,遂将这些知识整理成文,以纪念曾经努力学习奋斗的日子。本文内容并非完全原创&am…...

Linux基础指令及其作用之压缩与解压

压缩与解压targzip示例输出解释 gunzipzipunzip 压缩与解压 tar tar xzf 是一个常用的命令组合,用于解压缩由 gzip 压缩的 tarball 文件。下面是对这个命令的详细说明: tar:这是一个用于在 Linux 和类 Unix 系统上创建、查看或提取归档文件…...

ORA-08189: 因为未启用行移动功能, 不能闪回表问题

在执行闪回恢复误删数据出现“ORA-08189: 因为未启用行移动功能, 不能闪回表”的错误提示。 ORA-08189 错误表示你尝试对一个表执行闪回操作,但该表没有启用行移动(ROW MOVEMENT)功能。行移动是Oracle中的一个特性,它允许表中的行…...

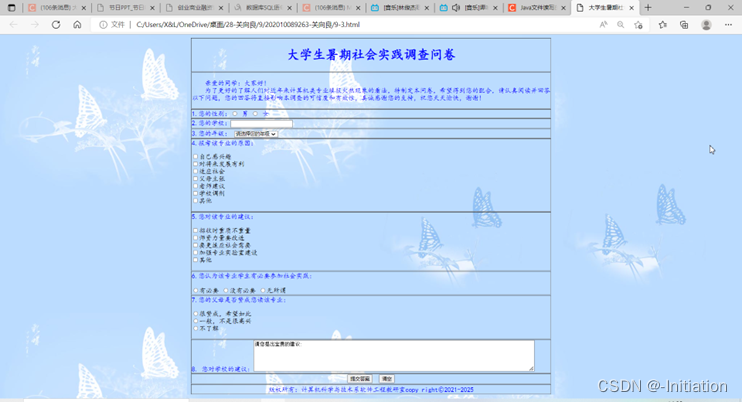

html+CSS部分基础运用9

项目1 参会注册表 1.设计参会注册表页面,效果如图9-1所示。 图9-1 参会注册表页面 项目2 设计《大学生暑期社会实践调查问卷》 1.设计“大学生暑期社会实践调查问卷”页面,如图9-2所示。 图9-2 大学生暑期社会调查表页面 2.调查表前导语的…...

五大元素之一,累不累——Java内部类

目录 简略版: 详解版: 使用场景: 内部类的优点: 内部类的分类: 一. 成员内部类 1.创建对象 2.访问方法 3. 外部类名.this. 二. 静态内部类 1. 创建对象 2. 访问特点 三. 局部内部类 四. 匿名内部类 …...

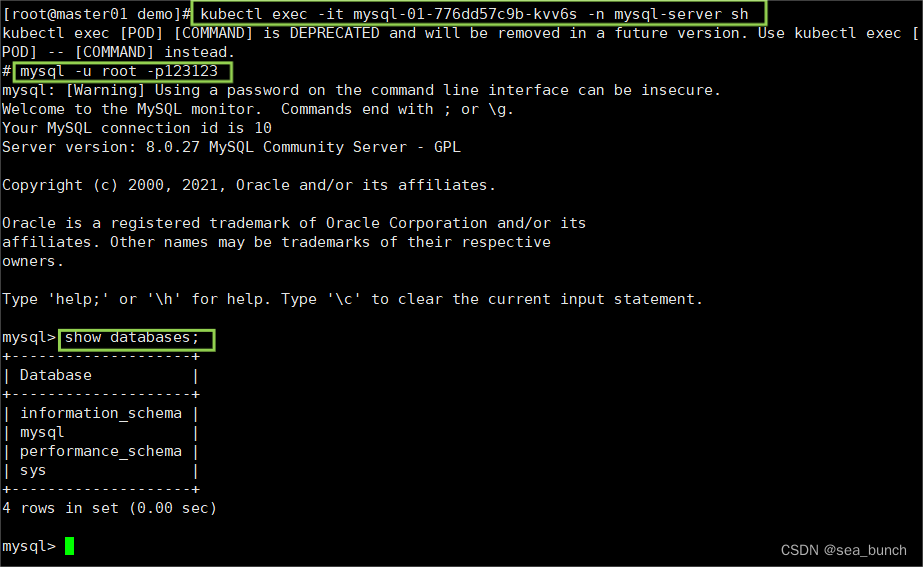

YAML快速编写示例

一、案例 1.1 自主式创建service关联上方的pod 资源名称my-nginx-kkk命名空间my-kkk容器镜像nginx:1.21容器端口80标签njzb:my-kkk 1.1.1 创建一个demo文件夹 1.1.2 创建并获取模版文件 1.1.3 查看服务并编写yaml文件 1.1.4 编写yaml文件并部署,查看服务是否运行成…...

)

2024 江苏省大学生程序设计大赛 2024 Jiangsu Collegiate Programming Contest(FGKI)

题目来源:https://codeforces.com/gym/105161 文章目录 F - Download Speed Monitor题意思路编程 G - Download Time Monitor题意思路编程 K - Number Deletion Game题意思路编程 I - Integer Reaction题意思路编程 写在前面:今天打的训练赛打的很水&…...

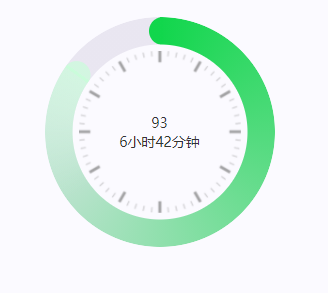

css实现圆环展示百分比,根据值动态展示所占比例

代码如下 <view class""><view class"circle-chart"><view v-if"!!num" class"pie-item" :style"{background: conic-gradient(var(--one-color) 0%,#E9E6F1 ${num}%),}"></view><view v-else …...

Qt Widget类解析与代码注释

#include "widget.h" #include "ui_widget.h"Widget::Widget(QWidget *parent): QWidget(parent), ui(new Ui::Widget) {ui->setupUi(this); }Widget::~Widget() {delete ui; }//解释这串代码,写上注释 当然可以!这段代码是 Qt …...

Auto-Coder使用GPT-4o完成:在用TabPFN这个模型构建一个预测未来3天涨跌的分类任务

通过akshare库,获取股票数据,并生成TabPFN这个模型 可以识别、处理的格式,写一个完整的预处理示例,并构建一个预测未来 3 天股价涨跌的分类任务 用TabPFN这个模型构建一个预测未来 3 天股价涨跌的分类任务,进行预测并输…...

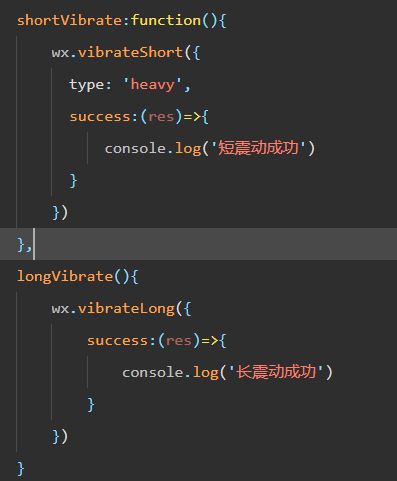

微信小程序 - 手机震动

一、界面 <button type"primary" bindtap"shortVibrate">短震动</button> <button type"primary" bindtap"longVibrate">长震动</button> 二、js逻辑代码 注:文档 https://developers.weixin.qq…...

:爬虫完整流程)

Python爬虫(二):爬虫完整流程

爬虫完整流程详解(7大核心步骤实战技巧) 一、爬虫完整工作流程 以下是爬虫开发的完整流程,我将结合具体技术点和实战经验展开说明: 1. 目标分析与前期准备 网站技术分析: 使用浏览器开发者工具(F12&…...

JDK 17 新特性

#JDK 17 新特性 /**************** 文本块 *****************/ python/scala中早就支持,不稀奇 String json “”" { “name”: “Java”, “version”: 17 } “”"; /**************** Switch 语句 -> 表达式 *****************/ 挺好的ÿ…...

JUC笔记(上)-复习 涉及死锁 volatile synchronized CAS 原子操作

一、上下文切换 即使单核CPU也可以进行多线程执行代码,CPU会给每个线程分配CPU时间片来实现这个机制。时间片非常短,所以CPU会不断地切换线程执行,从而让我们感觉多个线程是同时执行的。时间片一般是十几毫秒(ms)。通过时间片分配算法执行。…...

LINUX 69 FTP 客服管理系统 man 5 /etc/vsftpd/vsftpd.conf

FTP 客服管理系统 实现kefu123登录,不允许匿名访问,kefu只能访问/data/kefu目录,不能查看其他目录 创建账号密码 useradd kefu echo 123|passwd -stdin kefu [rootcode caozx26420]# echo 123|passwd --stdin kefu 更改用户 kefu 的密码…...

A2A JS SDK 完整教程:快速入门指南

目录 什么是 A2A JS SDK?A2A JS 安装与设置A2A JS 核心概念创建你的第一个 A2A JS 代理A2A JS 服务端开发A2A JS 客户端使用A2A JS 高级特性A2A JS 最佳实践A2A JS 故障排除 什么是 A2A JS SDK? A2A JS SDK 是一个专为 JavaScript/TypeScript 开发者设计的强大库ÿ…...

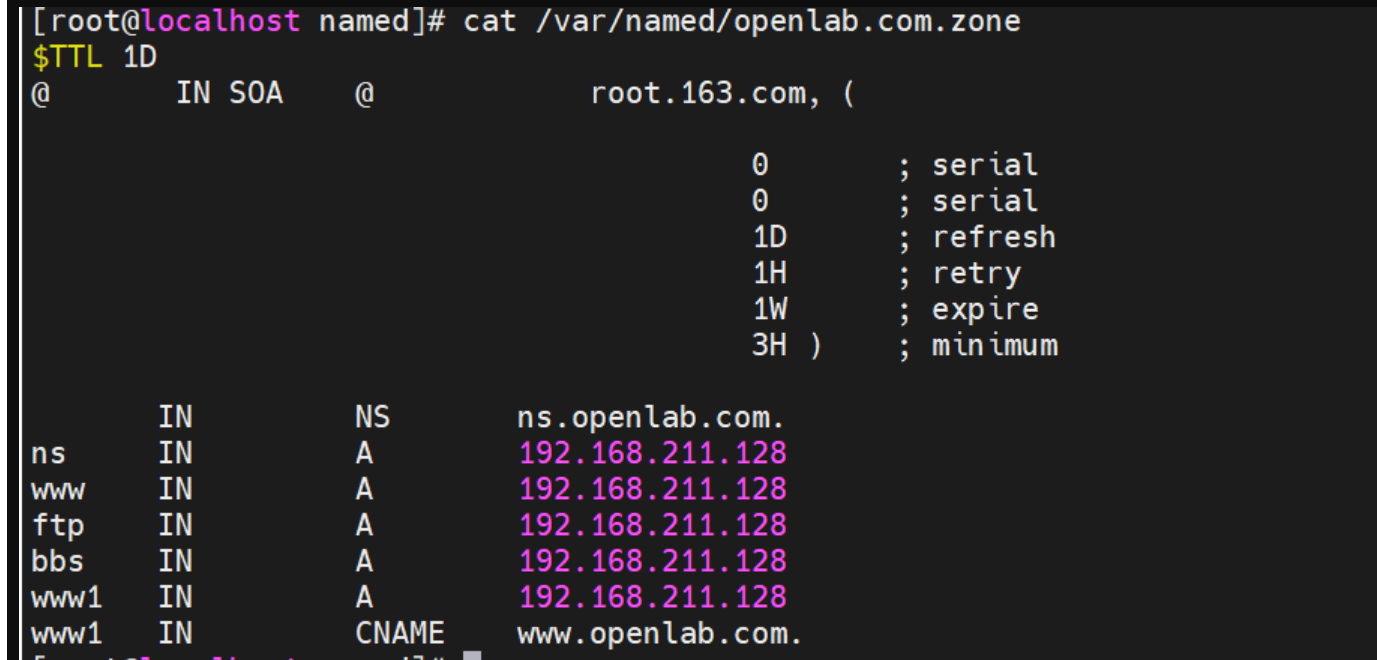

搭建DNS域名解析服务器(正向解析资源文件)

正向解析资源文件 1)准备工作 服务端及客户端都关闭安全软件 [rootlocalhost ~]# systemctl stop firewalld [rootlocalhost ~]# setenforce 0 2)服务端安装软件:bind 1.配置yum源 [rootlocalhost ~]# cat /etc/yum.repos.d/base.repo [Base…...