llama.cpp LLM_ARCH_DEEPSEEK and LLM_ARCH_DEEPSEEK2


- 1. `LLM_ARCH_DEEPSEEK` and `LLM_ARCH_DEEPSEEK2`

- 2. `LLM_ARCH_DEEPSEEK` and `LLM_ARCH_DEEPSEEK2`

- 3. `struct ggml_cgraph * build_deepseek()` and `struct ggml_cgraph * build_deepseek2()`

- References

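It is unwise to hype Chinese large language models while disparaging American ones.

Water is the main chemical component of the human body, making up about 50% to 70% of body weight. Large language models are not short on "water" (filler) either.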

llama.cpp

https://github.com/ggerganov/llama.cpp

1. LLM_ARCH_DEEPSEEK and LLM_ARCH_DEEPSEEK2

/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama-arch.h

/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama-arch.cpp

`LLM_ARCH_DEEPSEEK` and `LLM_ARCH_DEEPSEEK2`

//
// gguf constants (sync with gguf.py)
//

enum llm_arch {
    LLM_ARCH_LLAMA,
    LLM_ARCH_DECI,
    LLM_ARCH_FALCON,
    LLM_ARCH_BAICHUAN,
    LLM_ARCH_GROK,
    LLM_ARCH_GPT2,
    LLM_ARCH_GPTJ,
    LLM_ARCH_GPTNEOX,
    LLM_ARCH_MPT,
    LLM_ARCH_STARCODER,
    LLM_ARCH_REFACT,
    LLM_ARCH_BERT,
    LLM_ARCH_NOMIC_BERT,
    LLM_ARCH_JINA_BERT_V2,
    LLM_ARCH_BLOOM,
    LLM_ARCH_STABLELM,
    LLM_ARCH_QWEN,
    LLM_ARCH_QWEN2,
    LLM_ARCH_QWEN2MOE,
    LLM_ARCH_QWEN2VL,
    LLM_ARCH_PHI2,
    LLM_ARCH_PHI3,
    LLM_ARCH_PHIMOE,
    LLM_ARCH_PLAMO,
    LLM_ARCH_CODESHELL,
    LLM_ARCH_ORION,
    LLM_ARCH_INTERNLM2,
    LLM_ARCH_MINICPM,
    LLM_ARCH_MINICPM3,
    LLM_ARCH_GEMMA,
    LLM_ARCH_GEMMA2,
    LLM_ARCH_STARCODER2,
    LLM_ARCH_MAMBA,
    LLM_ARCH_XVERSE,
    LLM_ARCH_COMMAND_R,
    LLM_ARCH_COHERE2,
    LLM_ARCH_DBRX,
    LLM_ARCH_OLMO,
    LLM_ARCH_OLMO2,
    LLM_ARCH_OLMOE,
    LLM_ARCH_OPENELM,
    LLM_ARCH_ARCTIC,
    LLM_ARCH_DEEPSEEK,
    LLM_ARCH_DEEPSEEK2,
    LLM_ARCH_CHATGLM,
    LLM_ARCH_BITNET,
    LLM_ARCH_T5,
    LLM_ARCH_T5ENCODER,
    LLM_ARCH_JAIS,
    LLM_ARCH_NEMOTRON,
    LLM_ARCH_EXAONE,
    LLM_ARCH_RWKV6,
    LLM_ARCH_RWKV6QWEN2,
    LLM_ARCH_GRANITE,
    LLM_ARCH_GRANITE_MOE,
    LLM_ARCH_CHAMELEON,
    LLM_ARCH_WAVTOKENIZER_DEC,
    LLM_ARCH_UNKNOWN,
};

`{ LLM_ARCH_DEEPSEEK, "deepseek" }` and `{ LLM_ARCH_DEEPSEEK2, "deepseek2" }`

static const std::map<llm_arch, const char *> LLM_ARCH_NAMES = {
    { LLM_ARCH_LLAMA,            "llama"            },
    { LLM_ARCH_DECI,             "deci"             },
    { LLM_ARCH_FALCON,           "falcon"           },
    { LLM_ARCH_GROK,             "grok"             },
    { LLM_ARCH_GPT2,             "gpt2"             },
    { LLM_ARCH_GPTJ,             "gptj"             },
    { LLM_ARCH_GPTNEOX,          "gptneox"          },
    { LLM_ARCH_MPT,              "mpt"              },
    { LLM_ARCH_BAICHUAN,         "baichuan"         },
    { LLM_ARCH_STARCODER,        "starcoder"        },
    { LLM_ARCH_REFACT,           "refact"           },
    { LLM_ARCH_BERT,             "bert"             },
    { LLM_ARCH_NOMIC_BERT,       "nomic-bert"       },
    { LLM_ARCH_JINA_BERT_V2,     "jina-bert-v2"     },
    { LLM_ARCH_BLOOM,            "bloom"            },
    { LLM_ARCH_STABLELM,         "stablelm"         },
    { LLM_ARCH_QWEN,             "qwen"             },
    { LLM_ARCH_QWEN2,            "qwen2"            },
    { LLM_ARCH_QWEN2MOE,         "qwen2moe"         },
    { LLM_ARCH_QWEN2VL,          "qwen2vl"          },
    { LLM_ARCH_PHI2,             "phi2"             },
    { LLM_ARCH_PHI3,             "phi3"             },
    { LLM_ARCH_PHIMOE,           "phimoe"           },
    { LLM_ARCH_PLAMO,            "plamo"            },
    { LLM_ARCH_CODESHELL,        "codeshell"        },
    { LLM_ARCH_ORION,            "orion"            },
    { LLM_ARCH_INTERNLM2,        "internlm2"        },
    { LLM_ARCH_MINICPM,          "minicpm"          },
    { LLM_ARCH_MINICPM3,         "minicpm3"         },
    { LLM_ARCH_GEMMA,            "gemma"            },
    { LLM_ARCH_GEMMA2,           "gemma2"           },
    { LLM_ARCH_STARCODER2,       "starcoder2"       },
    { LLM_ARCH_MAMBA,            "mamba"            },
    { LLM_ARCH_XVERSE,           "xverse"           },
    { LLM_ARCH_COMMAND_R,        "command-r"        },
    { LLM_ARCH_COHERE2,          "cohere2"          },
    { LLM_ARCH_DBRX,             "dbrx"             },
    { LLM_ARCH_OLMO,             "olmo"             },
    { LLM_ARCH_OLMO2,            "olmo2"            },
    { LLM_ARCH_OLMOE,            "olmoe"            },
    { LLM_ARCH_OPENELM,          "openelm"          },
    { LLM_ARCH_ARCTIC,           "arctic"           },
    { LLM_ARCH_DEEPSEEK,         "deepseek"         },
    { LLM_ARCH_DEEPSEEK2,        "deepseek2"        },
    { LLM_ARCH_CHATGLM,          "chatglm"          },
    { LLM_ARCH_BITNET,           "bitnet"           },
    { LLM_ARCH_T5,               "t5"               },
    { LLM_ARCH_T5ENCODER,        "t5encoder"        },
    { LLM_ARCH_JAIS,             "jais"             },
    { LLM_ARCH_NEMOTRON,         "nemotron"         },
    { LLM_ARCH_EXAONE,           "exaone"           },
    { LLM_ARCH_RWKV6,            "rwkv6"            },
    { LLM_ARCH_RWKV6QWEN2,       "rwkv6qwen2"       },
    { LLM_ARCH_GRANITE,          "granite"          },
    { LLM_ARCH_GRANITE_MOE,      "granitemoe"       },
    { LLM_ARCH_CHAMELEON,        "chameleon"        },
    { LLM_ARCH_WAVTOKENIZER_DEC, "wavtokenizer-dec" },
    { LLM_ARCH_UNKNOWN,          "(unknown)"        },
};
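To make the role of `LLM_ARCH_NAMES` concrete, here is a minimal sketch of a two-way lookup between the enum and the architecture string stored in a GGUF file. It assumes a cut-down copy of the enum and map (three architectures plus `LLM_ARCH_UNKNOWN`). The helpers `arch_name` and `arch_from_string` are hypothetical names chosen for illustration, not copied from llama.cpp, although the library ships helpers that serve a similar purpose.

```cpp
#include <cassert>
#include <map>
#include <string>

// Reduced stand-in for llama.cpp's llm_arch enum (illustration only).
enum llm_arch {
    LLM_ARCH_LLAMA,
    LLM_ARCH_DEEPSEEK,
    LLM_ARCH_DEEPSEEK2,
    LLM_ARCH_UNKNOWN,
};

// Reduced stand-in for LLM_ARCH_NAMES: enum value -> architecture string.
static const std::map<llm_arch, const char *> LLM_ARCH_NAMES = {
    { LLM_ARCH_LLAMA,     "llama"     },
    { LLM_ARCH_DEEPSEEK,  "deepseek"  },
    { LLM_ARCH_DEEPSEEK2, "deepseek2" },
    { LLM_ARCH_UNKNOWN,   "(unknown)" },
};

// Hypothetical helper: enum -> name, falling back to "(unknown)".
static const char * arch_name(llm_arch arch) {
    const auto it = LLM_ARCH_NAMES.find(arch);
    return it == LLM_ARCH_NAMES.end() ? "(unknown)" : it->second;
}

// Hypothetical helper: name -> enum via a linear scan of the map.
// Any string not present in the map yields LLM_ARCH_UNKNOWN.
static llm_arch arch_from_string(const std::string & name) {
    for (const auto & kv : LLM_ARCH_NAMES) {
        if (name == kv.second) {
            return kv.first;
        }
    }
    return LLM_ARCH_UNKNOWN;
}

// Small self-check: every entry in the map survives a round trip.
static void check_round_trip() {
    for (const auto & kv : LLM_ARCH_NAMES) {
        assert(arch_from_string(arch_name(kv.first)) == kv.first);
    }
}
```

With these definitions, `arch_from_string("deepseek2")` yields `LLM_ARCH_DEEPSEEK2`, and an unrecognized string such as `"not-a-model"` yields `LLM_ARCH_UNKNOWN`. The linear scan is adequate here because the table has only a few dozen entries and the lookup runs once per model load.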

2. LLM_ARCH_DEEPSEEK and LLM_ARCH_DEEPSEEK2

/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama-arch.cpp

`LLM_ARCH_DEEPSEEK` and `LLM_ARCH_DEEPSEEK2`
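Each entry in the `LLM_TENSOR_NAMES` table that follows pairs a tensor enum with a printf-style pattern, where `%d` stands for the block (layer) index. The sketch below shows how such a pattern could be expanded into a concrete tensor name like `blk.3.attn_kv_a_mqa.weight`. The helper `format_tensor_name` and its parameters are hypothetical, written only to illustrate the pattern. llama.cpp's own name-building code may differ in detail.

```cpp
#include <cassert>
#include <cstdio>
#include <string>

// Hypothetical helper (not llama.cpp's API): expand a pattern such as
// "blk.%d.attn_q" with a layer index, then append a suffix such as "weight".
// Patterns without "%d" (for example "token_embd") are returned unchanged
// apart from the suffix, because printf-style functions ignore surplus arguments.
static std::string format_tensor_name(const char * pattern, int layer, const char * suffix) {
    char buf[256];
    std::snprintf(buf, sizeof(buf), pattern, layer);
    return std::string(buf) + "." + suffix;
}

// Small self-check using patterns that appear in the DEEPSEEK2 entry.
static void check_tensor_names() {
    assert(format_tensor_name("blk.%d.attn_kv_a_mqa", 3, "weight") == "blk.3.attn_kv_a_mqa.weight");
    assert(format_tensor_name("token_embd", 0, "weight") == "token_embd.weight");
}
```

In the table, the `LLM_ARCH_DEEPSEEK2` entry has patterns such as `blk.%d.attn_q_a`, `blk.%d.attn_kv_a_mqa` and `blk.%d.exp_probs_b`, while the `LLM_ARCH_DEEPSEEK` entry uses the plain `attn_q`, `attn_k` and `attn_v` patterns instead.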

static const std::map<llm_arch, std::map<llm_tensor, const char *>> LLM_TENSOR_NAMES = {{LLM_ARCH_LLAMA,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_GATE_INP, "blk.%d.ffn_gate_inp" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_GATE_EXP, "blk.%d.ffn_gate.%d" },{ LLM_TENSOR_FFN_DOWN_EXP, "blk.%d.ffn_down.%d" },{ LLM_TENSOR_FFN_UP_EXP, "blk.%d.ffn_up.%d" },{ LLM_TENSOR_FFN_GATE_EXPS, "blk.%d.ffn_gate_exps" },{ LLM_TENSOR_FFN_DOWN_EXPS, "blk.%d.ffn_down_exps" },{ LLM_TENSOR_FFN_UP_EXPS, "blk.%d.ffn_up_exps" },},},{LLM_ARCH_DECI,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_GATE_INP, "blk.%d.ffn_gate_inp" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_GATE_EXP, "blk.%d.ffn_gate.%d" },{ LLM_TENSOR_FFN_DOWN_EXP, "blk.%d.ffn_down.%d" },{ LLM_TENSOR_FFN_UP_EXP, "blk.%d.ffn_up.%d" },{ LLM_TENSOR_FFN_GATE_EXPS, "blk.%d.ffn_gate_exps" },{ LLM_TENSOR_FFN_DOWN_EXPS, "blk.%d.ffn_down_exps" },{ LLM_TENSOR_FFN_UP_EXPS, "blk.%d.ffn_up_exps" 
},},},{LLM_ARCH_BAICHUAN,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_FALCON,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_NORM_2, "blk.%d.attn_norm_2" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_GROK,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_GATE_INP, "blk.%d.ffn_gate_inp" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE_EXP, "blk.%d.ffn_gate.%d" },{ LLM_TENSOR_FFN_DOWN_EXP, "blk.%d.ffn_down.%d" },{ LLM_TENSOR_FFN_UP_EXP, "blk.%d.ffn_up.%d" },{ LLM_TENSOR_FFN_GATE_EXPS, "blk.%d.ffn_gate_exps" },{ LLM_TENSOR_FFN_DOWN_EXPS, "blk.%d.ffn_down_exps" },{ LLM_TENSOR_FFN_UP_EXPS, "blk.%d.ffn_up_exps" },{ LLM_TENSOR_LAYER_OUT_NORM, "blk.%d.layer_output_norm" },{ LLM_TENSOR_ATTN_OUT_NORM, "blk.%d.attn_output_norm" },},},{LLM_ARCH_GPT2,{{ LLM_TENSOR_TOKEN_EMBD, 
"token_embd" },{ LLM_TENSOR_POS_EMBD, "position_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },},},{LLM_ARCH_GPTJ,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },},},{LLM_ARCH_GPTNEOX,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_MPT,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output"},{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_ACT, "blk.%d.ffn.act" },{ LLM_TENSOR_POS_EMBD, "position_embd" },{ LLM_TENSOR_ATTN_Q_NORM, "blk.%d.attn_q_norm"},{ LLM_TENSOR_ATTN_K_NORM, "blk.%d.attn_k_norm"},},},{LLM_ARCH_STARCODER,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_POS_EMBD, "position_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },},},{LLM_ARCH_REFACT,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ 
LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_BERT,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_TOKEN_EMBD_NORM, "token_embd_norm" },{ LLM_TENSOR_TOKEN_TYPES, "token_types" },{ LLM_TENSOR_POS_EMBD, "position_embd" },{ LLM_TENSOR_ATTN_OUT_NORM, "blk.%d.attn_output_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_LAYER_OUT_NORM, "blk.%d.layer_output_norm" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_CLS, "cls" },{ LLM_TENSOR_CLS_OUT, "cls.output" },},},{LLM_ARCH_NOMIC_BERT,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_TOKEN_EMBD_NORM, "token_embd_norm" },{ LLM_TENSOR_TOKEN_TYPES, "token_types" },{ LLM_TENSOR_ATTN_OUT_NORM, "blk.%d.attn_output_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_LAYER_OUT_NORM, "blk.%d.layer_output_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_JINA_BERT_V2,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_TOKEN_EMBD_NORM, "token_embd_norm" },{ LLM_TENSOR_TOKEN_TYPES, "token_types" },{ LLM_TENSOR_ATTN_NORM_2, "blk.%d.attn_norm_2" },{ LLM_TENSOR_ATTN_OUT_NORM, "blk.%d.attn_output_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_Q_NORM, "blk.%d.attn_q_norm" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_K_NORM, "blk.%d.attn_k_norm" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" 
},{ LLM_TENSOR_LAYER_OUT_NORM, "blk.%d.layer_output_norm" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_CLS, "cls" },},},{LLM_ARCH_BLOOM,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_TOKEN_EMBD_NORM, "token_embd_norm" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },},},{LLM_ARCH_STABLELM,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_ATTN_Q_NORM, "blk.%d.attn_q_norm" },{ LLM_TENSOR_ATTN_K_NORM, "blk.%d.attn_k_norm" },},},{LLM_ARCH_QWEN,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_QWEN2,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" 
},{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_QWEN2VL,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_QWEN2MOE,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE_INP, "blk.%d.ffn_gate_inp" },{ LLM_TENSOR_FFN_GATE_EXPS, "blk.%d.ffn_gate_exps" },{ LLM_TENSOR_FFN_DOWN_EXPS, "blk.%d.ffn_down_exps" },{ LLM_TENSOR_FFN_UP_EXPS, "blk.%d.ffn_up_exps" },{ LLM_TENSOR_FFN_GATE_INP_SHEXP, "blk.%d.ffn_gate_inp_shexp" },{ LLM_TENSOR_FFN_GATE_SHEXP, "blk.%d.ffn_gate_shexp" },{ LLM_TENSOR_FFN_DOWN_SHEXP, "blk.%d.ffn_down_shexp" },{ LLM_TENSOR_FFN_UP_SHEXP, "blk.%d.ffn_up_shexp" },},},{LLM_ARCH_PHI2,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, 
"blk.%d.attn_output" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_PHI3,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FACTORS_LONG, "rope_factors_long" },{ LLM_TENSOR_ROPE_FACTORS_SHORT, "rope_factors_short" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_PHIMOE,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FACTORS_LONG, "rope_factors_long" },{ LLM_TENSOR_ROPE_FACTORS_SHORT, "rope_factors_short" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE_INP, "blk.%d.ffn_gate_inp" },{ LLM_TENSOR_FFN_GATE_EXPS, "blk.%d.ffn_gate_exps" },{ LLM_TENSOR_FFN_DOWN_EXPS, "blk.%d.ffn_down_exps" },{ LLM_TENSOR_FFN_UP_EXPS, "blk.%d.ffn_up_exps" },},},{LLM_ARCH_PLAMO,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ 
LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_CODESHELL,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_ORION,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_INTERNLM2,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_MINICPM,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ 
LLM_TENSOR_ROPE_FACTORS_LONG, "rope_factors_long" },{ LLM_TENSOR_ROPE_FACTORS_SHORT, "rope_factors_short" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_GATE_INP, "blk.%d.ffn_gate_inp" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_GATE_EXP, "blk.%d.ffn_gate.%d" },{ LLM_TENSOR_FFN_DOWN_EXP, "blk.%d.ffn_down.%d" },{ LLM_TENSOR_FFN_UP_EXP, "blk.%d.ffn_up.%d" },},},{LLM_ARCH_MINICPM3,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FACTORS_LONG, "rope_factors_long" },{ LLM_TENSOR_ROPE_FACTORS_SHORT, "rope_factors_short" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q_A_NORM, "blk.%d.attn_q_a_norm" },{ LLM_TENSOR_ATTN_KV_A_NORM, "blk.%d.attn_kv_a_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_Q_A, "blk.%d.attn_q_a" },{ LLM_TENSOR_ATTN_Q_B, "blk.%d.attn_q_b" },{ LLM_TENSOR_ATTN_KV_A_MQA, "blk.%d.attn_kv_a_mqa" },{ LLM_TENSOR_ATTN_KV_B, "blk.%d.attn_kv_b" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },},},{LLM_ARCH_GEMMA,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, 
"blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_GEMMA2,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_POST_NORM, "blk.%d.post_attention_norm" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_POST_NORM, "blk.%d.post_ffw_norm" },},},{LLM_ARCH_STARCODER2,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_MAMBA,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_SSM_IN, "blk.%d.ssm_in" },{ LLM_TENSOR_SSM_CONV1D, "blk.%d.ssm_conv1d" },{ LLM_TENSOR_SSM_X, "blk.%d.ssm_x" },{ LLM_TENSOR_SSM_DT, "blk.%d.ssm_dt" },{ LLM_TENSOR_SSM_A, "blk.%d.ssm_a" },{ LLM_TENSOR_SSM_D, "blk.%d.ssm_d" },{ LLM_TENSOR_SSM_OUT, "blk.%d.ssm_out" },},},{LLM_ARCH_XVERSE,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, 
"blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_COMMAND_R,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_ATTN_Q_NORM, "blk.%d.attn_q_norm" },{ LLM_TENSOR_ATTN_K_NORM, "blk.%d.attn_k_norm" },},},{LLM_ARCH_COHERE2,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_DBRX,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_OUT_NORM, "blk.%d.attn_output_norm" },{ LLM_TENSOR_FFN_GATE_INP, "blk.%d.ffn_gate_inp" },{ LLM_TENSOR_FFN_GATE_EXPS, "blk.%d.ffn_gate_exps" },{ LLM_TENSOR_FFN_DOWN_EXPS, "blk.%d.ffn_down_exps" },{ LLM_TENSOR_FFN_UP_EXPS, "blk.%d.ffn_up_exps" },},},{LLM_ARCH_OLMO,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" 
},{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_OLMO2,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_POST_NORM, "blk.%d.post_attention_norm" },{ LLM_TENSOR_ATTN_Q_NORM, "blk.%d.attn_q_norm" },{ LLM_TENSOR_ATTN_K_NORM, "blk.%d.attn_k_norm" },{ LLM_TENSOR_FFN_POST_NORM, "blk.%d.post_ffw_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_OLMOE,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_Q_NORM, "blk.%d.attn_q_norm" },{ LLM_TENSOR_ATTN_K_NORM, "blk.%d.attn_k_norm" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE_INP, "blk.%d.ffn_gate_inp" },{ LLM_TENSOR_FFN_GATE_EXPS, "blk.%d.ffn_gate_exps" },{ LLM_TENSOR_FFN_DOWN_EXPS, "blk.%d.ffn_down_exps" },{ LLM_TENSOR_FFN_UP_EXPS, "blk.%d.ffn_up_exps" },},},{LLM_ARCH_OPENELM,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_Q_NORM, "blk.%d.attn_q_norm" },{ LLM_TENSOR_ATTN_K_NORM, "blk.%d.attn_k_norm" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" 
},
        },
    },
    {
        LLM_ARCH_ARCTIC,
        {
            { LLM_TENSOR_TOKEN_EMBD,         "token_embd" },
            { LLM_TENSOR_OUTPUT_NORM,        "output_norm" },
            { LLM_TENSOR_OUTPUT,             "output" },
            { LLM_TENSOR_ATTN_NORM,          "blk.%d.attn_norm" },
            { LLM_TENSOR_ATTN_Q,             "blk.%d.attn_q" },
            { LLM_TENSOR_ATTN_K,             "blk.%d.attn_k" },
            { LLM_TENSOR_ATTN_V,             "blk.%d.attn_v" },
            { LLM_TENSOR_ATTN_OUT,           "blk.%d.attn_output" },
            { LLM_TENSOR_FFN_GATE_INP,       "blk.%d.ffn_gate_inp" },
            { LLM_TENSOR_FFN_NORM,           "blk.%d.ffn_norm" },
            { LLM_TENSOR_FFN_GATE,           "blk.%d.ffn_gate" },
            { LLM_TENSOR_FFN_DOWN,           "blk.%d.ffn_down" },
            { LLM_TENSOR_FFN_UP,             "blk.%d.ffn_up" },
            { LLM_TENSOR_FFN_NORM_EXPS,      "blk.%d.ffn_norm_exps" },
            { LLM_TENSOR_FFN_GATE_EXPS,      "blk.%d.ffn_gate_exps" },
            { LLM_TENSOR_FFN_DOWN_EXPS,      "blk.%d.ffn_down_exps" },
            { LLM_TENSOR_FFN_UP_EXPS,        "blk.%d.ffn_up_exps" },
        },
    },
    {
        LLM_ARCH_DEEPSEEK,
        {
            { LLM_TENSOR_TOKEN_EMBD,         "token_embd" },
            { LLM_TENSOR_OUTPUT_NORM,        "output_norm" },
            { LLM_TENSOR_OUTPUT,             "output" },
            { LLM_TENSOR_ROPE_FREQS,         "rope_freqs" },
            { LLM_TENSOR_ATTN_NORM,          "blk.%d.attn_norm" },
            { LLM_TENSOR_ATTN_Q,             "blk.%d.attn_q" },
            { LLM_TENSOR_ATTN_K,             "blk.%d.attn_k" },
            { LLM_TENSOR_ATTN_V,             "blk.%d.attn_v" },
            { LLM_TENSOR_ATTN_OUT,           "blk.%d.attn_output" },
            { LLM_TENSOR_ATTN_ROT_EMBD,      "blk.%d.attn_rot_embd" },
            { LLM_TENSOR_FFN_GATE_INP,       "blk.%d.ffn_gate_inp" },
            { LLM_TENSOR_FFN_NORM,           "blk.%d.ffn_norm" },
            { LLM_TENSOR_FFN_GATE,           "blk.%d.ffn_gate" },
            { LLM_TENSOR_FFN_DOWN,           "blk.%d.ffn_down" },
            { LLM_TENSOR_FFN_UP,             "blk.%d.ffn_up" },
            { LLM_TENSOR_FFN_GATE_EXPS,      "blk.%d.ffn_gate_exps" },
            { LLM_TENSOR_FFN_DOWN_EXPS,      "blk.%d.ffn_down_exps" },
            { LLM_TENSOR_FFN_UP_EXPS,        "blk.%d.ffn_up_exps" },
            { LLM_TENSOR_FFN_GATE_INP_SHEXP, "blk.%d.ffn_gate_inp_shexp" },
            { LLM_TENSOR_FFN_GATE_SHEXP,     "blk.%d.ffn_gate_shexp" },
            { LLM_TENSOR_FFN_DOWN_SHEXP,     "blk.%d.ffn_down_shexp" },
            { LLM_TENSOR_FFN_UP_SHEXP,       "blk.%d.ffn_up_shexp" },
        },
    },
    {
        LLM_ARCH_DEEPSEEK2,
        {
            { LLM_TENSOR_TOKEN_EMBD,         "token_embd" },
            { LLM_TENSOR_OUTPUT_NORM,        "output_norm" },
            { LLM_TENSOR_OUTPUT,             "output" },
            { LLM_TENSOR_ATTN_NORM,          "blk.%d.attn_norm" },
            { LLM_TENSOR_ATTN_Q_A_NORM,      "blk.%d.attn_q_a_norm" },
            { LLM_TENSOR_ATTN_KV_A_NORM,     "blk.%d.attn_kv_a_norm" },
            { LLM_TENSOR_ATTN_Q,             "blk.%d.attn_q" },
            { LLM_TENSOR_ATTN_Q_A,           "blk.%d.attn_q_a" },
            { LLM_TENSOR_ATTN_Q_B,           "blk.%d.attn_q_b" },
            { LLM_TENSOR_ATTN_KV_A_MQA,      "blk.%d.attn_kv_a_mqa" },
            { LLM_TENSOR_ATTN_KV_B,          "blk.%d.attn_kv_b" },
            { LLM_TENSOR_ATTN_OUT,           "blk.%d.attn_output" },
            { LLM_TENSOR_FFN_NORM,           "blk.%d.ffn_norm" },
            { LLM_TENSOR_FFN_GATE,           "blk.%d.ffn_gate" },
            { LLM_TENSOR_FFN_UP,             "blk.%d.ffn_up" },
            { LLM_TENSOR_FFN_DOWN,           "blk.%d.ffn_down" },
            { LLM_TENSOR_FFN_GATE_INP,       "blk.%d.ffn_gate_inp" },
            { LLM_TENSOR_FFN_GATE_EXPS,      "blk.%d.ffn_gate_exps" },
            { LLM_TENSOR_FFN_DOWN_EXPS,      "blk.%d.ffn_down_exps" },
            { LLM_TENSOR_FFN_UP_EXPS,        "blk.%d.ffn_up_exps" },
            { LLM_TENSOR_FFN_GATE_INP_SHEXP, "blk.%d.ffn_gate_inp_shexp" },
            { LLM_TENSOR_FFN_GATE_SHEXP,     "blk.%d.ffn_gate_shexp" },
            { LLM_TENSOR_FFN_DOWN_SHEXP,     "blk.%d.ffn_down_shexp" },
            { LLM_TENSOR_FFN_UP_SHEXP,       "blk.%d.ffn_up_shexp" },
            { LLM_TENSOR_FFN_EXP_PROBS_B,    "blk.%d.exp_probs_b" },
        },
    },
    {
        LLM_ARCH_CHATGLM,
        {
            { LLM_TENSOR_TOKEN_EMBD,         "token_embd" },
            { LLM_TENSOR_ROPE_FREQS,         "rope_freqs" },
            { LLM_TENSOR_OUTPUT_NORM,        "output_norm" },
            { LLM_TENSOR_OUTPUT,             "output" },
            { LLM_TENSOR_ATTN_NORM,          "blk.%d.attn_norm" },
            { LLM_TENSOR_ATTN_QKV,           "blk.%d.attn_qkv" },
            { LLM_TENSOR_ATTN_OUT,           "blk.%d.attn_output" },
            { LLM_TENSOR_FFN_NORM,           "blk.%d.ffn_norm" },
            { LLM_TENSOR_FFN_UP,             "blk.%d.ffn_up" },
            { LLM_TENSOR_FFN_DOWN,           "blk.%d.ffn_down" },
        },
    },
    {
        LLM_ARCH_BITNET,
        {
            { LLM_TENSOR_TOKEN_EMBD,         "token_embd" },
            { LLM_TENSOR_OUTPUT_NORM,        "output_norm" },
            { LLM_TENSOR_ATTN_Q,             "blk.%d.attn_q" },
            { LLM_TENSOR_ATTN_K,             "blk.%d.attn_k" },
            { LLM_TENSOR_ATTN_V,             "blk.%d.attn_v" },
            { LLM_TENSOR_ATTN_OUT,           "blk.%d.attn_output" },
            { LLM_TENSOR_ATTN_NORM,          "blk.%d.attn_norm" },
            { LLM_TENSOR_ATTN_SUB_NORM,      "blk.%d.attn_sub_norm" },
            { LLM_TENSOR_FFN_GATE,           "blk.%d.ffn_gate" },
            { LLM_TENSOR_FFN_DOWN,           "blk.%d.ffn_down" },
            { LLM_TENSOR_FFN_UP,             "blk.%d.ffn_up" },
            { LLM_TENSOR_FFN_NORM,           "blk.%d.ffn_norm" },
            {
LLM_TENSOR_FFN_SUB_NORM, "blk.%d.ffn_sub_norm" },},},{LLM_ARCH_T5,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_DEC_OUTPUT_NORM, "dec.output_norm" },{ LLM_TENSOR_DEC_ATTN_NORM, "dec.blk.%d.attn_norm" },{ LLM_TENSOR_DEC_ATTN_Q, "dec.blk.%d.attn_q" },{ LLM_TENSOR_DEC_ATTN_K, "dec.blk.%d.attn_k" },{ LLM_TENSOR_DEC_ATTN_V, "dec.blk.%d.attn_v" },{ LLM_TENSOR_DEC_ATTN_OUT, "dec.blk.%d.attn_o" },{ LLM_TENSOR_DEC_ATTN_REL_B, "dec.blk.%d.attn_rel_b" },{ LLM_TENSOR_DEC_CROSS_ATTN_NORM, "dec.blk.%d.cross_attn_norm" },{ LLM_TENSOR_DEC_CROSS_ATTN_Q, "dec.blk.%d.cross_attn_q" },{ LLM_TENSOR_DEC_CROSS_ATTN_K, "dec.blk.%d.cross_attn_k" },{ LLM_TENSOR_DEC_CROSS_ATTN_V, "dec.blk.%d.cross_attn_v" },{ LLM_TENSOR_DEC_CROSS_ATTN_OUT, "dec.blk.%d.cross_attn_o" },{ LLM_TENSOR_DEC_CROSS_ATTN_REL_B, "dec.blk.%d.cross_attn_rel_b" },{ LLM_TENSOR_DEC_FFN_NORM, "dec.blk.%d.ffn_norm" },{ LLM_TENSOR_DEC_FFN_GATE, "dec.blk.%d.ffn_gate" },{ LLM_TENSOR_DEC_FFN_DOWN, "dec.blk.%d.ffn_down" },{ LLM_TENSOR_DEC_FFN_UP, "dec.blk.%d.ffn_up" },{ LLM_TENSOR_ENC_OUTPUT_NORM, "enc.output_norm" },{ LLM_TENSOR_ENC_ATTN_NORM, "enc.blk.%d.attn_norm" },{ LLM_TENSOR_ENC_ATTN_Q, "enc.blk.%d.attn_q" },{ LLM_TENSOR_ENC_ATTN_K, "enc.blk.%d.attn_k" },{ LLM_TENSOR_ENC_ATTN_V, "enc.blk.%d.attn_v" },{ LLM_TENSOR_ENC_ATTN_OUT, "enc.blk.%d.attn_o" },{ LLM_TENSOR_ENC_ATTN_REL_B, "enc.blk.%d.attn_rel_b" },{ LLM_TENSOR_ENC_FFN_NORM, "enc.blk.%d.ffn_norm" },{ LLM_TENSOR_ENC_FFN_GATE, "enc.blk.%d.ffn_gate" },{ LLM_TENSOR_ENC_FFN_DOWN, "enc.blk.%d.ffn_down" },{ LLM_TENSOR_ENC_FFN_UP, "enc.blk.%d.ffn_up" },},},{LLM_ARCH_T5ENCODER,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ENC_OUTPUT_NORM, "enc.output_norm" },{ LLM_TENSOR_ENC_ATTN_NORM, "enc.blk.%d.attn_norm" },{ LLM_TENSOR_ENC_ATTN_Q, "enc.blk.%d.attn_q" },{ LLM_TENSOR_ENC_ATTN_K, "enc.blk.%d.attn_k" },{ LLM_TENSOR_ENC_ATTN_V, "enc.blk.%d.attn_v" },{ LLM_TENSOR_ENC_ATTN_OUT, "enc.blk.%d.attn_o" },{ 
LLM_TENSOR_ENC_ATTN_REL_B, "enc.blk.%d.attn_rel_b" },{ LLM_TENSOR_ENC_FFN_NORM, "enc.blk.%d.ffn_norm" },{ LLM_TENSOR_ENC_FFN_GATE, "enc.blk.%d.ffn_gate" },{ LLM_TENSOR_ENC_FFN_DOWN, "enc.blk.%d.ffn_down" },{ LLM_TENSOR_ENC_FFN_UP, "enc.blk.%d.ffn_up" },},},{LLM_ARCH_JAIS,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_QKV, "blk.%d.attn_qkv" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },},},{LLM_ARCH_NEMOTRON,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_EXAONE,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ROPE_FREQS, "rope_freqs" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_ATTN_ROT_EMBD, "blk.%d.attn_rot_embd" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_RWKV6,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_TOKEN_EMBD_NORM, "token_embd_norm" },{ LLM_TENSOR_OUTPUT_NORM, 
"output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_NORM_2, "blk.%d.attn_norm_2" },{ LLM_TENSOR_TIME_MIX_W1, "blk.%d.time_mix_w1" },{ LLM_TENSOR_TIME_MIX_W2, "blk.%d.time_mix_w2" },{ LLM_TENSOR_TIME_MIX_LERP_X, "blk.%d.time_mix_lerp_x" },{ LLM_TENSOR_TIME_MIX_LERP_W, "blk.%d.time_mix_lerp_w" },{ LLM_TENSOR_TIME_MIX_LERP_K, "blk.%d.time_mix_lerp_k" },{ LLM_TENSOR_TIME_MIX_LERP_V, "blk.%d.time_mix_lerp_v" },{ LLM_TENSOR_TIME_MIX_LERP_R, "blk.%d.time_mix_lerp_r" },{ LLM_TENSOR_TIME_MIX_LERP_G, "blk.%d.time_mix_lerp_g" },{ LLM_TENSOR_TIME_MIX_LERP_FUSED, "blk.%d.time_mix_lerp_fused" },{ LLM_TENSOR_TIME_MIX_FIRST, "blk.%d.time_mix_first" },{ LLM_TENSOR_TIME_MIX_DECAY, "blk.%d.time_mix_decay" },{ LLM_TENSOR_TIME_MIX_DECAY_W1, "blk.%d.time_mix_decay_w1" },{ LLM_TENSOR_TIME_MIX_DECAY_W2, "blk.%d.time_mix_decay_w2" },{ LLM_TENSOR_TIME_MIX_KEY, "blk.%d.time_mix_key" },{ LLM_TENSOR_TIME_MIX_VALUE, "blk.%d.time_mix_value" },{ LLM_TENSOR_TIME_MIX_RECEPTANCE, "blk.%d.time_mix_receptance" },{ LLM_TENSOR_TIME_MIX_GATE, "blk.%d.time_mix_gate" },{ LLM_TENSOR_TIME_MIX_LN, "blk.%d.time_mix_ln" },{ LLM_TENSOR_TIME_MIX_OUTPUT, "blk.%d.time_mix_output" },{ LLM_TENSOR_CHANNEL_MIX_LERP_K, "blk.%d.channel_mix_lerp_k" },{ LLM_TENSOR_CHANNEL_MIX_LERP_R, "blk.%d.channel_mix_lerp_r" },{ LLM_TENSOR_CHANNEL_MIX_KEY, "blk.%d.channel_mix_key" },{ LLM_TENSOR_CHANNEL_MIX_VALUE, "blk.%d.channel_mix_value" },{ LLM_TENSOR_CHANNEL_MIX_RECEPTANCE, "blk.%d.channel_mix_receptance" },},},{LLM_ARCH_RWKV6QWEN2,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_TIME_MIX_W1, "blk.%d.time_mix_w1" },{ LLM_TENSOR_TIME_MIX_W2, "blk.%d.time_mix_w2" },{ LLM_TENSOR_TIME_MIX_LERP_X, "blk.%d.time_mix_lerp_x" },{ LLM_TENSOR_TIME_MIX_LERP_FUSED, "blk.%d.time_mix_lerp_fused" },{ LLM_TENSOR_TIME_MIX_FIRST, "blk.%d.time_mix_first" },{ 
LLM_TENSOR_TIME_MIX_DECAY, "blk.%d.time_mix_decay" },{ LLM_TENSOR_TIME_MIX_DECAY_W1, "blk.%d.time_mix_decay_w1" },{ LLM_TENSOR_TIME_MIX_DECAY_W2, "blk.%d.time_mix_decay_w2" },{ LLM_TENSOR_TIME_MIX_KEY, "blk.%d.time_mix_key" },{ LLM_TENSOR_TIME_MIX_VALUE, "blk.%d.time_mix_value" },{ LLM_TENSOR_TIME_MIX_RECEPTANCE, "blk.%d.time_mix_receptance" },{ LLM_TENSOR_TIME_MIX_GATE, "blk.%d.time_mix_gate" },{ LLM_TENSOR_TIME_MIX_OUTPUT, "blk.%d.time_mix_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_GRANITE,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },},},{LLM_ARCH_GRANITE_MOE,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE_INP, "blk.%d.ffn_gate_inp" },{ LLM_TENSOR_FFN_GATE_EXPS, "blk.%d.ffn_gate_exps" },{ LLM_TENSOR_FFN_DOWN_EXPS, "blk.%d.ffn_down_exps" },{ LLM_TENSOR_FFN_UP_EXPS, "blk.%d.ffn_up_exps" },},},{LLM_ARCH_CHAMELEON,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_ATTN_NORM, "blk.%d.attn_norm" },{ LLM_TENSOR_ATTN_Q, "blk.%d.attn_q" },{ LLM_TENSOR_ATTN_K, "blk.%d.attn_k" },{ 
LLM_TENSOR_ATTN_V, "blk.%d.attn_v" },{ LLM_TENSOR_ATTN_OUT, "blk.%d.attn_output" },{ LLM_TENSOR_FFN_NORM, "blk.%d.ffn_norm" },{ LLM_TENSOR_FFN_GATE, "blk.%d.ffn_gate" },{ LLM_TENSOR_FFN_DOWN, "blk.%d.ffn_down" },{ LLM_TENSOR_FFN_UP, "blk.%d.ffn_up" },{ LLM_TENSOR_ATTN_Q_NORM, "blk.%d.attn_q_norm" },{ LLM_TENSOR_ATTN_K_NORM, "blk.%d.attn_k_norm" },},},{LLM_ARCH_WAVTOKENIZER_DEC,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },{ LLM_TENSOR_TOKEN_EMBD_NORM, "token_embd_norm" },{ LLM_TENSOR_CONV1D, "conv1d" },{ LLM_TENSOR_CONVNEXT_DW, "convnext.%d.dw" },{ LLM_TENSOR_CONVNEXT_NORM, "convnext.%d.norm" },{ LLM_TENSOR_CONVNEXT_PW1, "convnext.%d.pw1" },{ LLM_TENSOR_CONVNEXT_PW2, "convnext.%d.pw2" },{ LLM_TENSOR_CONVNEXT_GAMMA, "convnext.%d.gamma" },{ LLM_TENSOR_OUTPUT_NORM, "output_norm" },{ LLM_TENSOR_OUTPUT, "output" },{ LLM_TENSOR_POS_NET_CONV1, "posnet.%d.conv1" },{ LLM_TENSOR_POS_NET_CONV2, "posnet.%d.conv2" },{ LLM_TENSOR_POS_NET_NORM, "posnet.%d.norm" },{ LLM_TENSOR_POS_NET_NORM1, "posnet.%d.norm1" },{ LLM_TENSOR_POS_NET_NORM2, "posnet.%d.norm2" },{ LLM_TENSOR_POS_NET_ATTN_NORM, "posnet.%d.attn_norm" },{ LLM_TENSOR_POS_NET_ATTN_Q, "posnet.%d.attn_q" },{ LLM_TENSOR_POS_NET_ATTN_K, "posnet.%d.attn_k" },{ LLM_TENSOR_POS_NET_ATTN_V, "posnet.%d.attn_v" },{ LLM_TENSOR_POS_NET_ATTN_OUT, "posnet.%d.attn_output" },},},{LLM_ARCH_UNKNOWN,{{ LLM_TENSOR_TOKEN_EMBD, "token_embd" },},},

};
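The per-layer entries in the table above are printf-style patterns: `%d` stands for the block (layer) index. A minimal sketch of how such a pattern could be expanded into a concrete tensor name follows. `format_tensor_name` is a hypothetical helper written for this note, not a llama.cpp function, and the `weight` suffix in the usage note is an assumption about how names appear in a GGUF file.

```cpp
#include <cstdio>
#include <string>

// Hypothetical helper (not part of llama.cpp): expand a tensor name pattern
// such as "blk.%d.attn_kv_a_mqa" for one layer, then append an optional suffix.
static std::string format_tensor_name(const char * pattern, int layer, const char * suffix) {
    char buf[256];
    // Patterns without "%d" (e.g. "token_embd") simply ignore the layer argument.
    std::snprintf(buf, sizeof(buf), pattern, layer);

    std::string name(buf);
    if (suffix != nullptr && suffix[0] != '\0') {
        name += ".";
        name += suffix;
    }
    return name;
}
```

Under these assumptions, `format_tensor_name("blk.%d.attn_kv_a_mqa", 3, "weight")` yields `blk.3.attn_kv_a_mqa.weight`, while a global pattern such as `token_embd` with an empty suffix is returned unchanged.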

3. struct ggml_cgraph * build_deepseek() and struct ggml_cgraph * build_deepseek2()

/home/yongqiang/llm_work/llama_cpp_25_01_05/llama.cpp/src/llama.cpp

struct ggml_cgraph * build_deepseek()

struct ggml_cgraph * build_deepseek() {
    struct ggml_cgraph * gf = ggml_new_graph_custom(ctx0, model.max_nodes(), false);

    // mutable variable, needed during the last layer of the computation to skip unused tokens
    int32_t n_tokens = this->n_tokens;

    const int64_t n_embd_head = hparams.n_embd_head_v;
    GGML_ASSERT(n_embd_head == hparams.n_embd_head_k);
    GGML_ASSERT(n_embd_head == hparams.n_rot);

    struct ggml_tensor * cur;
    struct ggml_tensor * inpL;

    inpL = llm_build_inp_embd(ctx0, lctx, hparams, ubatch, model.tok_embd, cb);

    // inp_pos - contains the positions
    struct ggml_tensor * inp_pos = build_inp_pos();

    // KQ_mask (mask for 1 head, it will be broadcasted to all heads)
    struct ggml_tensor * KQ_mask = build_inp_KQ_mask();
    const float kq_scale = hparams.f_attention_scale == 0.0f ? 1.0f/sqrtf(float(n_embd_head)) : hparams.f_attention_scale;
    for (int il = 0; il < n_layer; ++il) {
        struct ggml_tensor * inpSA = inpL;

        // norm
        cur = llm_build_norm(ctx0, inpL, hparams,
                model.layers[il].attn_norm, NULL,
                LLM_NORM_RMS, cb, il);
        cb(cur, "attn_norm", il);

        // self-attention
        {
            // rope freq factors for llama3; may return nullptr for llama2 and other models
            struct ggml_tensor * rope_factors = build_rope_factors(il);

            // compute Q and K and RoPE them
            struct ggml_tensor * Qcur = llm_build_lora_mm(lctx, ctx0, model.layers[il].wq, cur);
            cb(Qcur, "Qcur", il);
            if (model.layers[il].bq) {
                Qcur = ggml_add(ctx0, Qcur, model.layers[il].bq);
                cb(Qcur, "Qcur", il);
            }

            struct ggml_tensor * Kcur = llm_build_lora_mm(lctx, ctx0, model.layers[il].wk, cur);
            cb(Kcur, "Kcur", il);
            if (model.layers[il].bk) {
                Kcur = ggml_add(ctx0, Kcur, model.layers[il].bk);
                cb(Kcur, "Kcur", il);
            }

            struct ggml_tensor * Vcur = llm_build_lora_mm(lctx, ctx0, model.layers[il].wv, cur);
            cb(Vcur, "Vcur", il);
            if (model.layers[il].bv) {
                Vcur = ggml_add(ctx0, Vcur, model.layers[il].bv);
                cb(Vcur, "Vcur", il);
            }

            Qcur = ggml_rope_ext(
                ctx0, ggml_reshape_3d(ctx0, Qcur, n_embd_head, n_head, n_tokens), inp_pos, rope_factors,
                n_rot, rope_type, n_ctx_orig, freq_base, freq_scale,
                ext_factor, attn_factor, beta_fast, beta_slow
            );
            cb(Qcur, "Qcur", il);

            Kcur = ggml_rope_ext(
                ctx0, ggml_reshape_3d(ctx0, Kcur, n_embd_head, n_head_kv, n_tokens), inp_pos, rope_factors,
                n_rot, rope_type, n_ctx_orig, freq_base, freq_scale,
                ext_factor, attn_factor, beta_fast, beta_slow
            );
            cb(Kcur, "Kcur", il);

            cur = llm_build_kv(ctx0, lctx, kv_self, gf,
                    model.layers[il].wo, model.layers[il].bo,
                    Kcur, Vcur, Qcur, KQ_mask, n_tokens, kv_head, n_kv, kq_scale, cb, il);
        }

        if (il == n_layer - 1) {
            // skip computing output for unused tokens
            struct ggml_tensor * inp_out_ids = build_inp_out_ids();
            n_tokens = n_outputs;
            cur   = ggml_get_rows(ctx0, cur, inp_out_ids);
            inpSA = ggml_get_rows(ctx0, inpSA, inp_out_ids);
        }

        struct ggml_tensor * ffn_inp = ggml_add(ctx0, cur, inpSA);
        cb(ffn_inp, "ffn_inp", il);

        cur = llm_build_norm(ctx0, ffn_inp, hparams,
                model.layers[il].ffn_norm, NULL,
                LLM_NORM_RMS, cb, il);
        cb(cur, "ffn_norm", il);

        if ((uint32_t) il < hparams.n_layer_dense_lead) {
            cur = llm_build_ffn(ctx0, lctx, cur,
                    model.layers[il].ffn_up,   NULL, NULL,
                    model.layers[il].ffn_gate, NULL, NULL,
                    model.layers[il].ffn_down, NULL, NULL,
                    NULL,
                    LLM_FFN_SILU, LLM_FFN_PAR, cb, il);
            cb(cur, "ffn_out", il);
        } else {
            // MoE branch
            ggml_tensor * moe_out =
                llm_build_moe_ffn(ctx0, lctx, cur,
                        model.layers[il].ffn_gate_inp,
                        model.layers[il].ffn_up_exps,
                        model.layers[il].ffn_gate_exps,
                        model.layers[il].ffn_down_exps,
                        nullptr,
                        n_expert, n_expert_used,
                        LLM_FFN_SILU, false,
                        false, hparams.expert_weights_scale,
                        LLAMA_EXPERT_GATING_FUNC_TYPE_SOFTMAX,
                        cb, il);
            cb(moe_out, "ffn_moe_out", il);

            // FFN shared expert
            {
                ggml_tensor * ffn_shexp = llm_build_ffn(ctx0, lctx, cur,
                        model.layers[il].ffn_up_shexp,   NULL, NULL,
                        model.layers[il].ffn_gate_shexp, NULL, NULL,
                        model.layers[il].ffn_down_shexp, NULL, NULL,
                        NULL,
                        LLM_FFN_SILU, LLM_FFN_PAR, cb, il);
                cb(ffn_shexp, "ffn_shexp", il);

                cur = ggml_add(ctx0, moe_out, ffn_shexp);
                cb(cur, "ffn_out", il);
            }
        }

        cur = ggml_add(ctx0, cur, ffn_inp);
        cur = lctx.cvec.apply_to(ctx0, cur, il);
        cb(cur, "l_out", il);

        // input for next layer
        inpL = cur;
    }

    cur = inpL;

    cur = llm_build_norm(ctx0, cur, hparams,
            model.output_norm, NULL,
            LLM_NORM_RMS, cb, -1);
    cb(cur, "result_norm", -1);

    // lm_head
    cur = llm_build_lora_mm(lctx, ctx0, model.output, cur);
    cb(cur, "result_output", -1);

    ggml_build_forward_expand(gf, cur);

    return gf;
}
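Two small decisions inside `build_deepseek()` are easy to miss in the long listing: the default attention scale, and the switch from dense FFN layers to MoE layers. The sketch below isolates both. `deepseek_hparams_sketch`, `attention_scale`, and `layer_uses_moe` are hypothetical names introduced here for illustration; only the field names and the two expressions are taken from the listing.

```cpp
#include <cmath>
#include <cstdint>

// Hypothetical stand-in for the few hparams fields used by the two decisions below.
struct deepseek_hparams_sketch {
    uint32_t n_embd_head;        // per-head dimension
    uint32_t n_layer_dense_lead; // number of leading layers that use a dense FFN
    float    f_attention_scale;  // 0.0f means "fall back to 1/sqrt(n_embd_head)"
};

// Mirrors the kq_scale expression: use the explicit scale if set, otherwise 1/sqrt(d).
static float attention_scale(const deepseek_hparams_sketch & hp) {
    return hp.f_attention_scale == 0.0f
        ? 1.0f / std::sqrt(float(hp.n_embd_head))
        : hp.f_attention_scale;
}

// Mirrors the FFN branch: layers with il < n_layer_dense_lead are dense, the rest are MoE.
static bool layer_uses_moe(const deepseek_hparams_sketch & hp, int il) {
    return (uint32_t) il >= hp.n_layer_dense_lead;
}
```

With `n_embd_head = 64`, `n_layer_dense_lead = 1`, and `f_attention_scale = 0.0f`, the scale falls back to `1/sqrt(64)`, layer 0 takes the dense branch, and every later layer takes the MoE branch, where the routed-expert output and the shared-expert output are added together.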

struct ggml_cgraph * build_deepseek2()

struct ggml_cgraph * build_deepseek2() {
    struct ggml_cgraph * gf = ggml_new_graph_custom(ctx0, model.max_nodes(), false);

    // mutable variable, needed during the last layer of the computation to skip unused tokens
    int32_t n_tokens = this->n_tokens;

    bool is_lite = (hparams.n_layer == 27);

    // We have to pre-scale kq_scale and attn_factor to make the YaRN RoPE work correctly.
    // See https://github.com/ggerganov/llama.cpp/discussions/7416 for detailed explanation.
    const float mscale = attn_factor * (1.0f + hparams.rope_yarn_log_mul * logf(1.0f / freq_scale));
    const float kq_scale = 1.0f*mscale*mscale/sqrtf(float(hparams.n_embd_head_k));
    const float attn_factor_scaled = 1.0f / (1.0f + 0.1f * logf(1.0f / freq_scale));

    const uint32_t n_embd_head_qk_rope = hparams.n_rot;
    const uint32_t n_embd_head_qk_nope = hparams.n_embd_head_k - hparams.n_rot;
    const uint32_t kv_lora_rank = hparams.n_lora_kv;

    struct ggml_tensor * cur;
    struct ggml_tensor * inpL;

    // {n_embd, n_tokens}
    inpL = llm_build_inp_embd(ctx0, lctx, hparams, ubatch, model.tok_embd, cb);

    // inp_pos - contains the positions
    struct ggml_tensor * inp_pos = build_inp_pos();

    // KQ_mask (mask for 1 head, it will be broadcasted to all heads)
    struct ggml_tensor * KQ_mask = build_inp_KQ_mask();

    for (int il = 0; il < n_layer; ++il) {
        struct ggml_tensor * inpSA = inpL;

        // norm
        cur = llm_build_norm(ctx0, inpL, hparams,
                model.layers[il].attn_norm, NULL,
                LLM_NORM_RMS, cb, il);
        cb(cur, "attn_norm", il);

        // self_attention
        {
            struct ggml_tensor * q = NULL;
            if (!is_lite) {
                // {n_embd, q_lora_rank} * {n_embd, n_tokens} -> {q_lora_rank, n_tokens}
                q = ggml_mul_mat(ctx0, model.layers[il].wq_a, cur);
                cb(q, "q", il);

                q = llm_build_norm(ctx0, q, hparams,
                        model.layers[il].attn_q_a_norm, NULL,
                        LLM_NORM_RMS, cb, il);
                cb(q, "q", il);

                // {q_lora_rank, n_head * hparams.n_embd_head_k} * {q_lora_rank, n_tokens} -> {n_head * hparams.n_embd_head_k, n_tokens}
                q = ggml_mul_mat(ctx0, model.layers[il].wq_b, q);
                cb(q, "q", il);
            } else {
                q = ggml_mul_mat(ctx0, model.layers[il].wq, cur);
                cb(q, "q", il);
            }

            // split into {n_head * n_embd_head_qk_nope, n_tokens}
            struct ggml_tensor * q_nope = ggml_view_3d(ctx0, q, n_embd_head_qk_nope, n_head, n_tokens,
                    ggml_row_size(q->type, hparams.n_embd_head_k),
                    ggml_row_size(q->type, hparams.n_embd_head_k * n_head),
                    0);
            cb(q_nope, "q_nope", il);

            // and {n_head * n_embd_head_qk_rope, n_tokens}
            struct ggml_tensor * q_pe = ggml_view_3d(ctx0, q, n_embd_head_qk_rope, n_head, n_tokens,
                    ggml_row_size(q->type, hparams.n_embd_head_k),
                    ggml_row_size(q->type, hparams.n_embd_head_k * n_head),
                    ggml_row_size(q->type, n_embd_head_qk_nope));
            cb(q_pe, "q_pe", il);

            // {n_embd, kv_lora_rank + n_embd_head_qk_rope} * {n_embd, n_tokens} -> {kv_lora_rank + n_embd_head_qk_rope, n_tokens}
            struct ggml_tensor * kv_pe_compresseed = ggml_mul_mat(ctx0, model.layers[il].wkv_a_mqa, cur);
            cb(kv_pe_compresseed, "kv_pe_compresseed", il);

            // split into {kv_lora_rank, n_tokens}
            struct ggml_tensor * kv_compressed = ggml_view_2d(ctx0, kv_pe_compresseed, kv_lora_rank, n_tokens,
                    kv_pe_compresseed->nb[1],
                    0);
            cb(kv_compressed, "kv_compressed", il);

            // and {n_embd_head_qk_rope, n_tokens}
            struct ggml_tensor * k_pe = ggml_view_3d(ctx0, kv_pe_compresseed, n_embd_head_qk_rope, 1, n_tokens,
                    kv_pe_compresseed->nb[1],
                    kv_pe_compresseed->nb[1],
                    ggml_row_size(kv_pe_compresseed->type, kv_lora_rank));
            cb(k_pe, "k_pe", il);

            kv_compressed = ggml_cont(ctx0, kv_compressed); // TODO: the CUDA backend does not support non-contiguous norm
            kv_compressed = llm_build_norm(ctx0, kv_compressed, hparams,
                    model.layers[il].attn_kv_a_norm, NULL,
                    LLM_NORM_RMS, cb, il);
            cb(kv_compressed, "kv_compressed", il);

            // {kv_lora_rank, n_head * (n_embd_head_qk_nope + n_embd_head_v)} * {kv_lora_rank, n_tokens} -> {n_head * (n_embd_head_qk_nope + n_embd_head_v), n_tokens}
            struct ggml_tensor * kv = ggml_mul_mat(ctx0, model.layers[il].wkv_b, kv_compressed);
            cb(kv, "kv", il);

            // split into {n_head * n_embd_head_qk_nope, n_tokens}
            struct ggml_tensor * k_nope = ggml_view_3d(ctx0, kv, n_embd_head_qk_nope, n_head, n_tokens,
                    ggml_row_size(kv->type, n_embd_head_qk_nope + hparams.n_embd_head_v),
                    ggml_row_size(kv->type, n_head * (n_embd_head_qk_nope + hparams.n_embd_head_v)),
                    0);
            cb(k_nope, "k_nope", il);

            // and {n_head * n_embd_head_v, n_tokens}
            struct ggml_tensor * v_states = ggml_view_3d(ctx0, kv, hparams.n_embd_head_v, n_head, n_tokens,
                    ggml_row_size(kv->type, (n_embd_head_qk_nope + hparams.n_embd_head_v)),
                    ggml_row_size(kv->type, (n_embd_head_qk_nope + hparams.n_embd_head_v)*n_head),
                    ggml_row_size(kv->type, (n_embd_head_qk_nope)));
            cb(v_states, "v_states", il);

            v_states = ggml_cont(ctx0, v_states);
            cb(v_states, "v_states", il);

            v_states = ggml_view_2d(ctx0, v_states, hparams.n_embd_head_v * n_head, n_tokens,
                    ggml_row_size(kv->type, hparams.n_embd_head_v * n_head),
                    0);
            cb(v_states, "v_states", il);

            q_pe = ggml_cont(ctx0, q_pe); // TODO: the CUDA backend used to not support non-cont. RoPE, investigate removing this
            q_pe = ggml_rope_ext(
                ctx0, q_pe, inp_pos, nullptr,
                n_rot, rope_type, n_ctx_orig, freq_base, freq_scale,
                ext_factor, attn_factor_scaled, beta_fast, beta_slow
            );
            cb(q_pe, "q_pe", il);

            // shared RoPE key
            k_pe = ggml_cont(ctx0, k_pe); // TODO: the CUDA backend used to not support non-cont. RoPE, investigate removing this
            k_pe = ggml_rope_ext(
                ctx0, k_pe, inp_pos, nullptr,
                n_rot, rope_type, n_ctx_orig, freq_base, freq_scale,
                ext_factor, attn_factor_scaled, beta_fast, beta_slow
            );
            cb(k_pe, "k_pe", il);

            struct ggml_tensor * q_states = ggml_concat(ctx0, q_nope, q_pe, 0);
            cb(q_states, "q_states", il);

            struct ggml_tensor * k_states = ggml_concat(ctx0, k_nope, ggml_repeat(ctx0, k_pe, q_pe), 0);
            cb(k_states, "k_states", il);

            cur = llm_build_kv(ctx0, lctx, kv_self, gf,
                    model.layers[il].wo, NULL,
                    k_states, v_states, q_states, KQ_mask, n_tokens, kv_head, n_kv, kq_scale, cb, il);
        }

        if (il == n_layer - 1) {
            // skip computing output for unused tokens
            struct ggml_tensor * inp_out_ids = build_inp_out_ids();
            n_tokens = n_outputs;
            cur   = ggml_get_rows(ctx0, cur, inp_out_ids);
            inpSA = ggml_get_rows(ctx0, inpSA, inp_out_ids);
        }

        struct ggml_tensor * ffn_inp = ggml_add(ctx0, cur, inpSA);
        cb(ffn_inp, "ffn_inp", il);

        cur = llm_build_norm(ctx0, ffn_inp, hparams,
                model.layers[il].ffn_norm, NULL,
                LLM_NORM_RMS, cb, il);
        cb(cur, "ffn_norm", il);

        if ((uint32_t) il < hparams.n_layer_dense_lead) {
            cur = llm_build_ffn(ctx0, lctx, cur,
                    model.layers[il].ffn_up,   NULL, NULL,
                    model.layers[il].ffn_gate, NULL, NULL,
                    model.layers[il].ffn_down, NULL, NULL,
                    NULL,
                    LLM_FFN_SILU, LLM_FFN_PAR, cb, il);
            cb(cur, "ffn_out", il);
        } else {
            // MoE branch
            ggml_tensor * moe_out =
                llm_build_moe_ffn(ctx0, lctx, cur,
                        model.layers[il].ffn_gate_inp,
                        model.layers[il].ffn_up_exps,
                        model.layers[il].ffn_gate_exps,
                        model.layers[il].ffn_down_exps,
                        model.layers[il].ffn_exp_probs_b,
                        n_expert, n_expert_used,
                        LLM_FFN_SILU, hparams.expert_weights_norm,
                        true, hparams.expert_weights_scale,
                        (enum llama_expert_gating_func_type) hparams.expert_gating_func,
                        cb, il);
            cb(moe_out, "ffn_moe_out", il);

            // FFN shared expert
            {
                ggml_tensor * ffn_shexp = llm_build_ffn(ctx0, lctx, cur,
                        model.layers[il].ffn_up_shexp,   NULL, NULL,
                        model.layers[il].ffn_gate_shexp, NULL, NULL,
                        model.layers[il].ffn_down_shexp, NULL, NULL,
                        NULL,
                        LLM_FFN_SILU, LLM_FFN_PAR, cb, il);
                cb(ffn_shexp, "ffn_shexp", il);

                cur = ggml_add(ctx0, moe_out, ffn_shexp);
                cb(cur, "ffn_out", il);
            }
        }

        cur = ggml_add(ctx0, cur, ffn_inp);
        cur = lctx.cvec.apply_to(ctx0, cur, il);
        cb(cur, "l_out", il);

        // input for next layer
        inpL = cur;
    }

    cur = inpL;

    cur = llm_build_norm(ctx0, cur, hparams,
            model.output_norm, NULL,
            LLM_NORM_RMS, cb, -1);
    cb(cur, "result_norm", -1);

    // lm_head
    cur = ggml_mul_mat(ctx0, model.output, cur);
    cb(cur, "result_output", -1);

    ggml_build_forward_expand(gf, cur);

    return gf;
}
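The three scaling constants at the top of `build_deepseek2()` can be checked in isolation. The sketch below copies those expressions into a standalone function. `yarn_scales_sketch` and `deepseek2_yarn_scales` are hypothetical names used only in this note, and the parameter values in the usage note are illustrative rather than taken from any model file.

```cpp
#include <cmath>

// Hypothetical container for the three values computed at the top of build_deepseek2().
struct yarn_scales_sketch {
    float mscale;             // attn_factor * (1 + rope_yarn_log_mul * ln(1/freq_scale))
    float kq_scale;           // mscale^2 / sqrt(n_embd_head_k), passed to the attention
    float attn_factor_scaled; // 1 / (1 + 0.1 * ln(1/freq_scale)), passed to ggml_rope_ext
};

// Same arithmetic as the listing, with the hparams passed in explicitly.
static yarn_scales_sketch deepseek2_yarn_scales(float attn_factor, float rope_yarn_log_mul,
                                                float freq_scale, int n_embd_head_k) {
    const float log_inv = std::log(1.0f / freq_scale);

    yarn_scales_sketch s;
    s.mscale             = attn_factor * (1.0f + rope_yarn_log_mul * log_inv);
    s.kq_scale           = 1.0f * s.mscale * s.mscale / std::sqrt(float(n_embd_head_k));
    s.attn_factor_scaled = 1.0f / (1.0f + 0.1f * log_inv);
    return s;
}
```

With `freq_scale = 1.0f` (no context extension) the logarithm is zero, so `mscale` equals `attn_factor`, `attn_factor_scaled` is 1, and `kq_scale` reduces to the usual `1/sqrt(n_embd_head_k)`. For `freq_scale < 1`, `mscale` grows above `attn_factor` while `attn_factor_scaled` drops below 1, which is the pre-scaling the source comment refers to.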

case LLM_ARCH_DEEPSEEK: and case LLM_ARCH_DEEPSEEK2:

switch (model.arch) {
    case LLM_ARCH_LLAMA:
    case LLM_ARCH_MINICPM:
    case LLM_ARCH_GRANITE:
    case LLM_ARCH_GRANITE_MOE:
        {
            result = llm.build_llama();
        } break;
    case LLM_ARCH_DECI:
        {
            result = llm.build_deci();
        } break;
    case LLM_ARCH_BAICHUAN:
        {
            result = llm.build_baichuan();
        } break;
    case LLM_ARCH_FALCON:
        {
            result = llm.build_falcon();
        } break;
    case LLM_ARCH_GROK:
        {
            result = llm.build_grok();
        } break;
    case LLM_ARCH_STARCODER:
        {
            result = llm.build_starcoder();
        } break;
    case LLM_ARCH_REFACT:
        {
            result = llm.build_refact();
        } break;
    case LLM_ARCH_BERT:
    case LLM_ARCH_JINA_BERT_V2:
    case LLM_ARCH_NOMIC_BERT:
        {
            result = llm.build_bert();
        } break;
    case LLM_ARCH_BLOOM:
        {
            result = llm.build_bloom();
        } break;
    case LLM_ARCH_MPT:
        {
            result = llm.build_mpt();
        } break;
    case LLM_ARCH_STABLELM:
        {
            result = llm.build_stablelm();
        } break;
    case LLM_ARCH_QWEN:
        {
            result = llm.build_qwen();
        } break;
    case LLM_ARCH_QWEN2:
        {
            result = llm.build_qwen2();
        } break;
    case LLM_ARCH_QWEN2VL:
        {
            lctx.n_pos_per_token = 4;
            result = llm.build_qwen2vl();
        } break;
    case LLM_ARCH_QWEN2MOE:
        {
            result = llm.build_qwen2moe();
        } break;
    case LLM_ARCH_PHI2:
        {
            result = llm.build_phi2();
        } break;
    case LLM_ARCH_PHI3:
    case LLM_ARCH_PHIMOE:
        {
            result = llm.build_phi3();
        } break;
    case LLM_ARCH_PLAMO:
        {
            result = llm.build_plamo();
        } break;
    case LLM_ARCH_GPT2:
        {
            result = llm.build_gpt2();
        } break;
    case LLM_ARCH_CODESHELL:
        {
            result = llm.build_codeshell();
        } break;
    case LLM_ARCH_ORION:
        {
            result = llm.build_orion();
        } break;
    case LLM_ARCH_INTERNLM2:
        {
            result = llm.build_internlm2();
        } break;
    case LLM_ARCH_MINICPM3:
        {
            result = llm.build_minicpm3();
        } break;
    case LLM_ARCH_GEMMA:
        {
            result = llm.build_gemma();
        } break;
    case LLM_ARCH_GEMMA2:
        {
            result = llm.build_gemma2();
        } break;
    case LLM_ARCH_STARCODER2:
        {
            result = llm.build_starcoder2();
        } break;
    case LLM_ARCH_MAMBA:
        {
            result = llm.build_mamba();
        } break;
    case LLM_ARCH_XVERSE:
        {
            result = llm.build_xverse();
        } break;
    case LLM_ARCH_COMMAND_R:
        {
            result = llm.build_command_r();
        } break;
    case LLM_ARCH_COHERE2:
        {
            result = llm.build_cohere2();
        } break;
    case LLM_ARCH_DBRX:
        {
            result = llm.build_dbrx();
        } break;
    case LLM_ARCH_OLMO:
        {
            result = llm.build_olmo();
        } break;
    case LLM_ARCH_OLMO2:
        {
            result = llm.build_olmo2();
        } break;
    case LLM_ARCH_OLMOE:
        {
            result = llm.build_olmoe();
        } break;
    case LLM_ARCH_OPENELM:
        {
            result = llm.build_openelm();
        } break;
    case LLM_ARCH_GPTNEOX:
        {
            result = llm.build_gptneox();
        } break;
    case LLM_ARCH_ARCTIC:
        {
            result = llm.build_arctic();
        } break;
    case LLM_ARCH_DEEPSEEK:
        {
            result = llm.build_deepseek();
        } break;
    case LLM_ARCH_DEEPSEEK2:
        {
            result = llm.build_deepseek2();
        } break;
    case LLM_ARCH_CHATGLM:
        {
            result = llm.build_chatglm();
        } break;
    case LLM_ARCH_BITNET:
        {
            result = llm.build_bitnet();
        } break;
    case LLM_ARCH_T5:
        {
            if (lctx.is_encoding) {
                result = llm.build_t5_enc();
            } else {
                result = llm.build_t5_dec();
            }
        } break;
    case LLM_ARCH_T5ENCODER:
        {
            result = llm.build_t5_enc();
        } break;
    case LLM_ARCH_JAIS:
        {
            result = llm.build_jais();
        } break;
    case LLM_ARCH_NEMOTRON:
        {
            result = llm.build_nemotron();
        } break;
    case LLM_ARCH_EXAONE:
        {
            result = llm.build_exaone();
        } break;
    case LLM_ARCH_RWKV6:
        {
            result = llm.build_rwkv6();
        } break;
    case LLM_ARCH_RWKV6QWEN2:
        {
            result = llm.build_rwkv6qwen2();
        } break;
    case LLM_ARCH_CHAMELEON:
        {
            result = llm.build_chameleon();
        } break;
    case LLM_ARCH_WAVTOKENIZER_DEC:
        {
            result = llm.build_wavtokenizer_dec();
        } break;
    default:
        GGML_ABORT("fatal error");
}
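Putting sections 1 and 3 together: the architecture string stored in the model file ("deepseek" or "deepseek2") maps to an enum value, and the `switch` above maps that value to a graph builder. The sketch below reproduces this chain for two architectures only. Every `sketch_`/`SKETCH_` name is hypothetical, the reverse lookup over the name table is an assumption about how the string is resolved, and the builder is represented by its name rather than by a real graph.

```cpp
#include <map>
#include <string>

// Hypothetical two-entry subset of the llm_arch enum.
enum sketch_arch {
    SKETCH_ARCH_DEEPSEEK,
    SKETCH_ARCH_DEEPSEEK2,
    SKETCH_ARCH_UNKNOWN,
};

// Same shape as LLM_ARCH_NAMES: enum value -> architecture string.
static const std::map<sketch_arch, const char *> SKETCH_ARCH_NAMES = {
    { SKETCH_ARCH_DEEPSEEK,  "deepseek"  },
    { SKETCH_ARCH_DEEPSEEK2, "deepseek2" },
    { SKETCH_ARCH_UNKNOWN,   "(unknown)" },
};

// Assumed reverse lookup: scan the table for a matching string.
static sketch_arch arch_from_string(const std::string & name) {
    for (const auto & kv : SKETCH_ARCH_NAMES) {
        if (name == kv.second) {
            return kv.first;
        }
    }
    return SKETCH_ARCH_UNKNOWN;
}

// Stand-in for the dispatch switch: returns the builder's name, or nullptr
// where the real code would hit GGML_ABORT("fatal error").
static const char * builder_for(sketch_arch arch) {
    switch (arch) {
        case SKETCH_ARCH_DEEPSEEK:  return "build_deepseek";
        case SKETCH_ARCH_DEEPSEEK2: return "build_deepseek2";
        default:                    return nullptr;
    }
}
```

In this sketch, `builder_for(arch_from_string("deepseek2"))` returns `"build_deepseek2"`, and an unrecognized string falls through to the `default` branch.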

References

[1] Yongqiang Cheng, https://yongqiang.blog.csdn.net/

[2] huggingface/gguf, https://github.com/huggingface/huggingface.js/tree/main/packages/gguf

相关文章:

llama.cpp LLM_ARCH_DEEPSEEK and LLM_ARCH_DEEPSEEK2

llama.cpp LLM_ARCH_DEEPSEEK and LLM_ARCH_DEEPSEEK2 1. LLM_ARCH_DEEPSEEK and LLM_ARCH_DEEPSEEK22. LLM_ARCH_DEEPSEEK and LLM_ARCH_DEEPSEEK23. struct ggml_cgraph * build_deepseek() and struct ggml_cgraph * build_deepseek2()References 不宜吹捧中国大语言模型的同…...

C语言自定义数据类型详解(二)——结构体类型(下)

书接上回,前面我们已经给大家介绍了如何去声明和创建一个结构体,如何初始化结构体变量等这些关于结构体的基础知识。下面我们将继续给大家介绍和结构体有关的知识: 今天的主题是:结构体大小的计算并简单了解一下位段的相关知识。…...

DeepSeek学术写作测评第二弹:数据分析、图表解读,效果怎么样?

我是娜姐 迪娜学姐 ,一个SCI医学期刊编辑,探索用AI工具提效论文写作和发表。 针对最近全球热议的DeepSeek开源大模型,娜姐昨天分析了关于论文润色、中译英的详细效果测评: DeepSeek学术写作测评第一弹:论文润色&#…...

深入理解 Python 中的 `__all__`:控制模块的公共接口

在 Python 编程中,模块化设计是构建可维护和可扩展代码的关键。模块不仅帮助我们组织代码,还能通过隐藏实现细节来提高代码的可读性和安全性。Python 提供了多种机制来控制模块的可见性,其中 __all__ 是一个非常重要但常被忽视的特性。本文将…...

虚幻基础07:蓝图接口

能帮到你的话,就给个赞吧 😘 文章目录 作用原理事件函数 作用 实现对象间的通知。 A 通知 B 做什么。 原理 将接口抽象为蓝图,使得任意蓝图都能直接访问。 只需要再传入对象地址,就能执行对象的功能。 事件 黄色:…...

数据结构---哈希表

基本概念 哈希函数(Hash Function)是一种将输入的数据(通常是任意大小的)映射到固定大小的输出(通常是一个固定长度的值)的函数。这个输出值通常称为“哈希值”(Hash Value)或“哈希…...

DataWhale组队学习 leetCode task4

1. 滑动窗口算法介绍 想象你正在用一台望远镜观察一片星空。望远镜的镜头大小是固定的,你可以通过滑动镜头来观察不同的星区。滑动窗口算法就像这台望远镜,它通过一个固定或可变大小的“窗口”来观察数组或字符串中的连续区间。 滑动操作:就像…...

【ESP32】ESP-IDF开发 | WiFi开发 | UDP用户数据报协议 + UDP客户端和服务器例程

1. 简介 UDP协议(User Datagram Protocol),全称用户数据报协议,它是一种面向非连接的协议,面向非连接指的是在正式通信前不必与对方先建立连接, 不管对方状态就直接发送。至于对方是否可以接收到这些数据内…...

【PyQt5】数据库连接失败: Driver not loaded Driver not loaded

报错内容如下: 可以看到目前所支持的数据库驱动仅有[‘QSQLITE’, ‘QMARIADB’, ‘QODBC’, ‘QODBC3’, ‘QPSQL’, ‘QPSQL7’] 我在网上查找半天解决方法未果,其中有一篇看评论反馈是可以使用的,但是PyQt5的版本有点低,5.12…...

Unity游戏(Assault空对地打击)开发(1) 创建项目和选择插件

目录 前言 创建项目 插件导入 地形插件 前言 这是游戏开发第一篇,进行开发准备。 创作不易,欢迎支持。 我的编辑器布局是【Tall】,建议调整为该布局,如下。 创建项目 首先创建一个项目,过程略,名字请勿…...

Rust:如何动态调用字符串定义的 Rhai 函数?

在 Rust 中使用 Rhai 脚本引擎时,你可以动态地调用传入的字符串表示的 Rhai 函数。Rhai 是一个嵌入式脚本语言,专为嵌入到 Rust 应用中而设计。以下是一个基本示例,展示了如何在 Rust 中调用用字符串传入的 Rhai 函数。 首先,确保…...

A星算法两元障碍物矩阵转化为rrt算法四元障碍物矩阵

对于a星算法obstacle所表示的障碍物障碍物信息,每行表示一个障碍物的坐标,例如2 , 3; % 第一个障碍物在第二行第三列,也就是边长为1的正方形障碍物右上角横坐标是2,纵坐标为3,障碍物的宽度和高度始终为1.在rrt路径规划…...

【C++】设计模式详解:单例模式

文章目录 Ⅰ. 设计一个类,不允许被拷贝Ⅱ. 请设计一个类,只能在堆上创建对象Ⅲ. 请设计一个类,只能在栈上创建对象Ⅳ. 请设计一个类,不能被继承Ⅴ. 请设计一个类,只能创建一个对象(单例模式)&am…...

单细胞分析基础-第一节 数据质控、降维聚类

scRNA_pipeline\1.Seurat 生物技能树 可进官网查询 添加链接描述 分析流程 准备:R包安装 options("repos"="https://mirrors.ustc.edu.cn/CRAN/") if(!require("BiocManager")) install.packages("BiocManager",update = F,ask =…...

多项日常使用测试,带你了解如何选择AI工具 Deepseek VS ChatGpt VS Claude

多项日常使用测试,带你了解如何选择AI工具 Deepseek VS ChatGpt VS Claude 注:因为考虑到绝大部分人的使用,我这里所用的模型均为免费模型。官方可访问的。ChatGPT这里用的是4o Ai对话,编程一直以来都是人们所讨论的话题。Ai的出现…...

每日一题-判断是否是平衡二叉树

判断是否是平衡二叉树 题目描述数据范围题解解题思路递归算法代码实现代码解析时间和空间复杂度分析示例示例 1示例 2 总结 ) 题目描述 输入一棵节点数为 n 的二叉树,判断该二叉树是否是平衡二叉树。平衡二叉树定义为: 它是一棵空树。或者它的左右子树…...

FLTK - FLTK1.4.1 - 搭建模板,将FLTK自带的实现搬过来做实验

文章目录 FLTK - FLTK1.4.1 - 搭建模板,将FLTK自带的实现搬过来做实验概述笔记my_fltk_test.cppfltk_test.hfltk_test.cxx用adjuster工程试了一下,好使。END FLTK - FLTK1.4.1 - 搭建模板,将FLTK自带的实现搬过来做实验 概述 用fluid搭建UI…...

《多阶段渐进式图像修复》学习笔记

paper:2102.02808 GitHub:swz30/MPRNet: [CVPR 2021] Multi-Stage Progressive Image Restoration. SOTA results for Image deblurring, deraining, and denoising. 目录 摘要 1、介绍 2、相关工作 2.1 单阶段方法 2.2 多阶段方法 2.3 注意力机…...

AWScurl笔记

摘要 AWScurl是一款专为与AWS服务交互设计的命令行工具,它模拟了curl的功能并添加了AWS签名版本4的支持。这一特性使得用户能够安全有效地执行带有AWS签名的请求,极大地提升了与AWS服务交互时的安全性和有效性。 GitHub - okigan/awscurl: curl-like acc…...

QT使用eigen

QT使用eigen 1. 下载eigen https://eigen.tuxfamily.org/index.php?titleMain_Page#Download 下载后解压 2. QT引入eigen eigen源码好像只有头文件,因此只需要引入头文件就好了 qt新建项目后。修改pro文件. INCLUDEPATH E:\222078\qt\eigen-3.4.0\eigen-3.…...

日语AI面试高效通关秘籍:专业解读与青柚面试智能助攻

在如今就业市场竞争日益激烈的背景下,越来越多的求职者将目光投向了日本及中日双语岗位。但是,一场日语面试往往让许多人感到步履维艰。你是否也曾因为面试官抛出的“刁钻问题”而心生畏惧?面对生疏的日语交流环境,即便提前恶补了…...

)

【RockeMQ】第2节|RocketMQ快速实战以及核⼼概念详解(二)

升级Dledger高可用集群 一、主从架构的不足与Dledger的定位 主从架构缺陷 数据备份依赖Slave节点,但无自动故障转移能力,Master宕机后需人工切换,期间消息可能无法读取。Slave仅存储数据,无法主动升级为Master响应请求ÿ…...

如何在最短时间内提升打ctf(web)的水平?

刚刚刷完2遍 bugku 的 web 题,前来答题。 每个人对刷题理解是不同,有的人是看了writeup就等于刷了,有的人是收藏了writeup就等于刷了,有的人是跟着writeup做了一遍就等于刷了,还有的人是独立思考做了一遍就等于刷了。…...

用机器学习破解新能源领域的“弃风”难题

音乐发烧友深有体会,玩音乐的本质就是玩电网。火电声音偏暖,水电偏冷,风电偏空旷。至于太阳能发的电,则略显朦胧和单薄。 不知你是否有感觉,近两年家里的音响声音越来越冷,听起来越来越单薄? —…...

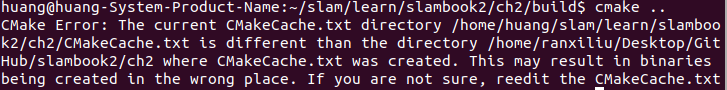

视觉slam十四讲实践部分记录——ch2、ch3

ch2 一、使用g++编译.cpp为可执行文件并运行(P30) g++ helloSLAM.cpp ./a.out运行 二、使用cmake编译 mkdir build cd build cmake .. makeCMakeCache.txt 文件仍然指向旧的目录。这表明在源代码目录中可能还存在旧的 CMakeCache.txt 文件,或者在构建过程中仍然引用了旧的路…...

基于Springboot+Vue的办公管理系统

角色: 管理员、员工 技术: 后端: SpringBoot, Vue2, MySQL, Mybatis-Plus 前端: Vue2, Element-UI, Axios, Echarts, Vue-Router 核心功能: 该办公管理系统是一个综合性的企业内部管理平台,旨在提升企业运营效率和员工管理水…...

Git 3天2K星标:Datawhale 的 Happy-LLM 项目介绍(附教程)

引言 在人工智能飞速发展的今天,大语言模型(Large Language Models, LLMs)已成为技术领域的焦点。从智能写作到代码生成,LLM 的应用场景不断扩展,深刻改变了我们的工作和生活方式。然而,理解这些模型的内部…...

为什么要创建 Vue 实例

核心原因:Vue 需要一个「控制中心」来驱动整个应用 你可以把 Vue 实例想象成你应用的**「大脑」或「引擎」。它负责协调模板、数据、逻辑和行为,将它们变成一个活的、可交互的应用**。没有这个实例,你的代码只是一堆静态的 HTML、JavaScript 变量和函数,无法「活」起来。 …...

AI语音助手的Python实现

引言 语音助手(如小爱同学、Siri)通过语音识别、自然语言处理(NLP)和语音合成技术,为用户提供直观、高效的交互体验。随着人工智能的普及,Python开发者可以利用开源库和AI模型,快速构建自定义语音助手。本文由浅入深,详细介绍如何使用Python开发AI语音助手,涵盖基础功…...

comfyui 工作流中 图生视频 如何增加视频的长度到5秒

comfyUI 工作流怎么可以生成更长的视频。除了硬件显存要求之外还有别的方法吗? 在ComfyUI中实现图生视频并延长到5秒,需要结合多个扩展和技巧。以下是完整解决方案: 核心工作流配置(24fps下5秒120帧) #mermaid-svg-yP…...